VS2017 CUDA Programming learning 1: CUDA Programming two variable addition operation

VS2017 CUDA Programming learning 2: executing threads on GPU

VS2017 CUDA Programming learning 3: CUDA obtains attribute information on the device

VS2017 CUDA Programming learning 4: preliminary exploration of CUDA parallel processing - Implementation of vector addition

VS2017 CUDA Programming learning 5: CUDA parallel execution - thread

VS2017 CUDA Programming learning 6: GPU memory architecture

VS2017 CUDA Programming learning 7: thread synchronization - shared memory

VS2017 CUDA Programming learning 8: thread synchronization - atomic operation

VS2017 CUDA Programming learning 9: constant memory

preface

Continuing the CUDA programming series, this post covers how to use texture memory on the device. I'm sharing my notes here!

1. Understanding texture memory

Texture memory is read-only memory on the device. It resides in device memory but is read through a dedicated texture cache, which speeds up reads. Texture memory is very efficient when a program's accesses have spatial locality, that is, when the location each thread reads is close to the locations read by neighboring threads. This is very useful in image processing, where a pixel often has to be compared with its neighbors, for example its 4-neighborhood or 8-neighborhood.
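To make "spatial proximity" concrete: in a row-major image of width w, the 8-neighborhood of pixel (x, y) lies at linear offsets of ±1, ±w and ±w±1 from the pixel itself. Row neighbors are adjacent in memory, while the rows above and below are a whole row away; the CUDA Programming Guide describes the texture cache as optimized for 2D spatial locality, which covers both cases. The helper names below are hypothetical, and the code is plain host C++ (also valid CUDA source) that only computes indices.

```cuda
#include <vector>

// Linear index of pixel (x, y) in a row-major image of width w.
inline int linear_index(int x, int y, int w) { return y * w + x; }

// Indices of the 8-neighborhood of (x, y), in the order:
// left, right, up, down, up-left, up-right, down-left, down-right.
// The first four entries form the 4-neighborhood.
// Assumes (x, y) is an interior pixel, so every neighbor exists.
std::vector<int> neighborhood8(int x, int y, int w)
{
    return {
        linear_index(x - 1, y, w),     linear_index(x + 1, y, w),
        linear_index(x, y - 1, w),     linear_index(x, y + 1, w),
        linear_index(x - 1, y - 1, w), linear_index(x + 1, y - 1, w),
        linear_index(x - 1, y + 1, w), linear_index(x + 1, y + 1, w),
    };
}
```

For pixel (10, 10) of a 640-pixel-wide image (index 6410), the left and right neighbors are 6409 and 6411, while the upper and lower neighbors are 5770 and 7050, one full row away.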

Global memory can of course also hold data that is accessed with spatial locality, but reading it directly from global memory is slower and requires many reads from video memory. The book states that texture memory only needs to be read from video memory once; my understanding is that data shared by neighboring threads is fetched from video memory once and later requests for it are served from the texture cache. Texture memory supports 1D, 2D and 3D texture fetches.
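As a sketch of the 2D case, the kernel below averages each pixel's 4-neighborhood with tex2D. It uses the texture object API (cudaTextureObject_t, available since CUDA 5.0 on devices of compute capability 3.0 and above) rather than the texture reference used in the example further down, and it relies on cudaAddressModeClamp so that border pixels need no explicit bounds checks. mean4_kernel and mean4_with_texture are hypothetical names, and error handling is kept minimal.

```cuda
#include <cuda_runtime.h>

// Averages the 4-neighborhood (left, right, up, down) of each pixel via tex2D.
// Clamp addressing makes out-of-range fetches repeat the nearest edge pixel.
__global__ void mean4_kernel(cudaTextureObject_t tex, float* out, int w, int h)
{
    int x = blockIdx.x * blockDim.x + threadIdx.x;
    int y = blockIdx.y * blockDim.y + threadIdx.y;
    if (x >= w || y >= h) return;
    // +0.5f addresses the texel center with unnormalized coordinates.
    float fx = x + 0.5f, fy = y + 0.5f;
    float sum = tex2D<float>(tex, fx - 1.0f, fy) + tex2D<float>(tex, fx + 1.0f, fy)
              + tex2D<float>(tex, fx, fy - 1.0f) + tex2D<float>(tex, fx, fy + 1.0f);
    out[y * w + x] = 0.25f * sum;
}

// Hypothetical helper: uploads a w x h row-major float image to a 2D CUDA
// array, reads it through a texture object in mean4_kernel, copies back.
bool mean4_with_texture(const float* h_img, float* h_out, int w, int h)
{
    cudaChannelFormatDesc desc = cudaCreateChannelDesc<float>();
    cudaArray_t arr = nullptr;
    if (cudaMallocArray(&arr, &desc, w, h) != cudaSuccess) return false;
    size_t row_bytes = w * sizeof(float);
    cudaMemcpy2DToArray(arr, 0, 0, h_img, row_bytes, row_bytes, h,
                        cudaMemcpyHostToDevice);

    // What the texture reads (the array) and how (point sampling, clamp).
    cudaResourceDesc res = {};
    res.resType = cudaResourceTypeArray;
    res.res.array.array = arr;
    cudaTextureDesc td = {};
    td.addressMode[0] = cudaAddressModeClamp;  // x direction
    td.addressMode[1] = cudaAddressModeClamp;  // y direction
    td.filterMode = cudaFilterModePoint;
    td.readMode = cudaReadModeElementType;
    td.normalizedCoords = 0;
    cudaTextureObject_t tex = 0;
    cudaCreateTextureObject(&tex, &res, &td, nullptr);

    float* d_out = nullptr;
    cudaMalloc(&d_out, row_bytes * h);
    dim3 block(16, 16);
    dim3 grid((w + 15) / 16, (h + 15) / 16);
    mean4_kernel<<<grid, block>>>(tex, d_out, w, h);
    bool ok = cudaGetLastError() == cudaSuccess;
    ok = (cudaMemcpy(h_out, d_out, row_bytes * h, cudaMemcpyDeviceToHost) == cudaSuccess) && ok;

    cudaDestroyTextureObject(tex);
    cudaFree(d_out);
    cudaFreeArray(arr);
    return ok;
}
```

For a 3 x 3 image holding 0 to 8 in row-major order, the center output is (3 + 5 + 1 + 7) / 4 = 4, and the corner (0, 0) becomes (0 + 1 + 0 + 3) / 4 = 1, because the two clamped fetches repeat pixel 0.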

2. Using texture memory in C++ CUDA

Here is a simple example: read data from texture memory and write it to device memory.

In this example, texture memory is accessed through a "texture reference" bound to a CUDA array.
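The texture reference and the CUDA array must agree on the element format, which CUDA describes with a cudaChannelFormatDesc; in the code below, textureRef.channelDesc supplies it to cudaMallocArray and cudaBindTextureToArray. For a float texture, that descriptor is a single 32-bit float channel, the same value that cudaCreateChannelDesc<float>() returns. A small sketch (the helper names are hypothetical):

```cuda
#include <cuda_runtime.h>  // declares cudaCreateChannelDesc and its templates

// Template form: derives the format from the element type.
cudaChannelFormatDesc float_channel_desc()
{
    return cudaCreateChannelDesc<float>();
}

// Explicit form: bit widths of the x, y, z, w channels plus the channel kind.
cudaChannelFormatDesc float_channel_desc_explicit()
{
    return cudaCreateChannelDesc(32, 0, 0, 0, cudaChannelFormatKindFloat);
}
```

Both calls only build a descriptor on the host; no GPU work is involved.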

A texture reference is declared as a variable of type texture<param1, param2, param3>. param1 is the type of the texture elements; param2 is the dimensionality of the texture reference (1 for 1D, 2 for 2D, 3 for 3D); param3 is the read mode, which determines whether fetched values are converted automatically: cudaReadModeElementType returns elements unchanged, while cudaReadModeNormalizedFloat returns 8-bit and 16-bit integer elements as normalized floats. param2 and param3 are optional and default to 1 and cudaReadModeElementType.
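To illustrate param3: with cudaReadModeNormalizedFloat, an unsigned 8-bit texel is returned as a float in [0.0, 1.0], so 0xff reads as 1.0 (signed integer types map to [-1.0, 1.0] instead). The function below is a host-side emulation of that mapping for unsigned 8-bit data only; normalized_from_u8 is a hypothetical helper, not a CUDA API, and the hardware conversion may differ in the last bits.

```cuda
#include <cstdint>

// Legacy declaration this corresponds to (texture reference API, CUDA <= 11.x):
//   texture<unsigned char, 2, cudaReadModeNormalizedFloat> texImage;
// tex2D(texImage, x, y) would then return a float instead of an unsigned char.

// Host-side emulation of the normalized-float mapping for unsigned
// 8-bit texels: 0..255 becomes 0.0..1.0.
inline float normalized_from_u8(std::uint8_t texel)
{
    return static_cast<float>(texel) / 255.0f;
}
```

With cudaReadModeElementType, by contrast, the same fetch would return the stored unsigned char unchanged.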

Note that the texture reference must be declared as a global (file-scope) variable, which is also what makes it visible inside kernel functions, and it cannot be passed to any other function as a parameter.

In the following example, each thread uses its global index idx as the texture coordinate, reads the element at that position through the texture reference, and writes it to global memory through the d_out pointer.
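Why does tex1D(textureRef, float(idx)) return exactly h_in[idx]? A texture<> reference defaults to unnormalized coordinates, point filtering (cudaFilterModePoint) and clamp addressing (cudaAddressModeClamp). Under those settings, a 1D fetch at coordinate x returns the texel whose cell [i, i + 1) contains x, and out-of-range coordinates are pinned to the first or last texel. The host function below emulates that rule for illustration only; tex1d_point_clamp is a hypothetical name, not part of CUDA.

```cuda
#include <algorithm>
#include <cmath>
#include <vector>

// Host-side emulation of a 1D texture fetch with unnormalized
// coordinates, point filtering and clamp addressing.
float tex1d_point_clamp(const std::vector<float>& texels, float x)
{
    // Point filtering: take the texel whose cell [i, i + 1) contains x.
    int i = static_cast<int>(std::floor(x));
    // Clamp addressing: indices below 0 or past the end hit the edge texels.
    int last = static_cast<int>(texels.size()) - 1;
    i = std::max(0, std::min(i, last));
    return texels[i];
}
```

So in the kernel, passing float(idx) for idx = 0..9 simply returns h_in[idx], and a coordinate such as 3.7 would still return element 3.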

In main, a CUDA array (cudaArray) is allocated with cudaMallocArray and filled with cudaMemcpyToArray(param1, param2, param3, param4, param5, param6). param1 is the destination CUDA array; param2 and param3 are the horizontal offset (in bytes) and vertical offset (in rows) at which the host data is written, so 0, 0 means the upper-left corner of the CUDA array; param4 is the host source pointer, param5 is the number of bytes to copy, and param6 is the copy direction. cudaMemcpyToArray has been deprecated since CUDA 10.1, but it still works in the toolkit versions this example targets.
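One detail that is easy to get wrong is that the horizontal offset of these copy functions is measured in bytes, not elements, while the vertical offset counts rows. The sketch below writes into the middle of a 1D CUDA array with cudaMemcpy2DToArray, a non-deprecated alternative to cudaMemcpyToArray, and reads the array back with cudaMemcpy2DFromArray. The helper names are hypothetical.

```cuda
#include <cuda_runtime.h>

// Hypothetical helper: writes `count` floats into a 1D CUDA array starting at
// element `first`. wOffset is given in BYTES, hOffset (0 here) in rows.
cudaError_t write_floats_to_array(cudaArray_t arr, size_t first,
                                  const float* src, size_t count)
{
    size_t bytes = count * sizeof(float);
    return cudaMemcpy2DToArray(arr, first * sizeof(float), 0, src,
                               bytes, bytes, 1, cudaMemcpyHostToDevice);
}

// Hypothetical helper: reads the first n floats of the array back to the host.
cudaError_t read_floats_from_array(float* dst, cudaArray_const_t arr, size_t n)
{
    size_t bytes = n * sizeof(float);
    return cudaMemcpy2DFromArray(dst, bytes, arr, 0, 0, bytes, 1,
                                 cudaMemcpyDeviceToHost);
}
```

With an 8-element array that starts zeroed and {1, 2, 3} written at element 2 (a byte offset of 8), reading it back gives 0, 0, 1, 2, 3, 0, 0, 0.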

cudaBindTextureToArray() binds the texture reference to the CUDA array, so the data stored in the CUDA array can be read by accessing the texture reference; the kernel does this with the texture fetch function tex1D(). When the texture is no longer needed, cudaUnbindTexture() unbinds the texture reference from the CUDA array. Note that the texture reference API (texture<>, cudaBindTextureToArray(), cudaUnbindTexture()) was deprecated in CUDA 11 and removed in CUDA 12.0, so this example only builds with CUDA 11.x or earlier; newer toolkits use texture objects (cudaTextureObject_t) instead.

The detailed code is as follows:

    #include <stdio.h>
    #include <stdlib.h>   // malloc, free, system
    #include <iostream>
    #include <cuda.h>
    #include <cuda_runtime.h>
    #include <cuda_runtime_api.h>
    #include <device_launch_parameters.h>
    // Lets Visual Studio IntelliSense see texture<> and tex1D(); nvcc already defines __CUDACC__.
    #ifndef __CUDACC__
    #define __CUDACC__
    #endif
    #include <cuda_texture_types.h>      // texture<> template
    #include <texture_fetch_functions.h> // tex1D()

    #define NUM_THREADS 10
    #define N 10

    // A 1D texture reference of floats, read without type conversion.
    // Texture reference API: requires CUDA 11.x or earlier.
    texture<float, 1, cudaReadModeElementType> textureRef;

    // Kernel: fetch element idx through the texture and store it in device memory.
    __global__ void gpu_texture_memory(int n, float* d_out)
    {
        int idx = blockIdx.x * blockDim.x + threadIdx.x;
        if (idx < n)
        {
            float temp = tex1D(textureRef, float(idx));
            d_out[idx] = temp;
        }
    }

    int main()
    {
        // Round up so that every element gets a thread.
        int num_blocks = N / NUM_THREADS + ((N % NUM_THREADS) ? 1 : 0);
        float* d_out;
        cudaMalloc(&d_out, sizeof(float) * N);
        float* h_out = (float*)malloc(sizeof(float) * N);
        float h_in[N];
        for (int i = 0; i < N; i++)
        {
            h_in[i] = float(i);
        }

        // Allocate a CUDA array with the texture's channel format and copy the input into it.
        cudaArray* cu_Array;
        cudaMallocArray(&cu_Array, &textureRef.channelDesc, N, 1);
        cudaMemcpyToArray(cu_Array, 0, 0, h_in, sizeof(float) * N, cudaMemcpyHostToDevice);

        // Bind the CUDA array to the texture reference.
        cudaBindTextureToArray(&textureRef, cu_Array, &textureRef.channelDesc);

        // Launch the kernel and check that the launch succeeded.
        gpu_texture_memory<<<num_blocks, NUM_THREADS>>>(N, d_out);
        cudaError_t err = cudaGetLastError();
        if (err != cudaSuccess)
        {
            printf("Kernel launch failed: %s\n", cudaGetErrorString(err));
        }

        // Copy results to the host (cudaMemcpy waits for the kernel to finish).
        cudaMemcpy(h_out, d_out, sizeof(float) * N, cudaMemcpyDeviceToHost);
        printf("Using texture memory on the GPU:\n");
        for (int i = 0; i < N; i++)
        {
            printf("%f\n", h_out[i]);
        }

        // Release resources: unbind the texture before freeing the array behind it.
        cudaUnbindTexture(&textureRef);
        free(h_out);
        cudaFree(d_out);
        cudaFreeArray(cu_Array);
        system("pause");  // keeps the Windows console window open
        return 0;
    }
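Because texture references were removed in CUDA 12.0, the same example has to use a texture object there. Below is a sketch of that rewrite under a few assumptions: point filtering and clamp addressing to mirror the texture<> defaults, a true 1D CUDA array (height 0), and the hypothetical names gpu_texture_object and copy_through_texture_object. Unlike the texture reference, the cudaTextureObject_t is an ordinary value that is passed to the kernel as an argument.

```cuda
#include <cuda_runtime.h>

// Same job as gpu_texture_memory, but the texture arrives as a kernel argument.
__global__ void gpu_texture_object(cudaTextureObject_t tex, int n, float* d_out)
{
    int idx = blockIdx.x * blockDim.x + threadIdx.x;
    if (idx < n)
    {
        d_out[idx] = tex1D<float>(tex, float(idx));
    }
}

// Hypothetical helper: copies h_in[0..n) into a 1D CUDA array, reads it back
// through a texture object on the GPU, and stores the result in h_out.
bool copy_through_texture_object(const float* h_in, float* h_out, int n)
{
    cudaChannelFormatDesc desc = cudaCreateChannelDesc<float>();
    cudaArray_t arr = nullptr;
    // Height 0 requests a 1D CUDA array.
    if (cudaMallocArray(&arr, &desc, n, 0) != cudaSuccess) return false;
    size_t bytes = n * sizeof(float);
    cudaMemcpy2DToArray(arr, 0, 0, h_in, bytes, bytes, 1, cudaMemcpyHostToDevice);

    // Describe what the texture reads (the array) and how (point, clamp).
    cudaResourceDesc res = {};
    res.resType = cudaResourceTypeArray;
    res.res.array.array = arr;
    cudaTextureDesc td = {};
    td.addressMode[0] = cudaAddressModeClamp;
    td.filterMode = cudaFilterModePoint;
    td.readMode = cudaReadModeElementType;
    td.normalizedCoords = 0;
    cudaTextureObject_t tex = 0;
    cudaCreateTextureObject(&tex, &res, &td, nullptr);

    float* d_out = nullptr;
    cudaMalloc(&d_out, bytes);
    int threads = 256;
    gpu_texture_object<<<(n + threads - 1) / threads, threads>>>(tex, n, d_out);
    bool ok = cudaGetLastError() == cudaSuccess;
    ok = (cudaMemcpy(h_out, d_out, bytes, cudaMemcpyDeviceToHost) == cudaSuccess) && ok;

    // Tear down: destroy the texture object before freeing the array it reads.
    cudaDestroyTextureObject(tex);
    cudaFree(d_out);
    cudaFreeArray(arr);
    return ok;
}
```

Called from main with the same input as above (0 to 9), it should produce the same ten values as the original program.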

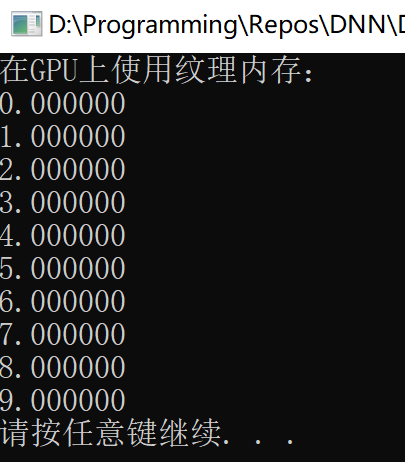

3. Implementation results

summary

To be honest, I could not feel any speedup from texture memory in this example; it only introduces the basic usage of texture memory. Since these are personal study notes, there may be mistakes, and corrections are very welcome. Thank you!
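As for not seeing any speedup: with N = 10 the kernel does almost no work, so launch overhead dominates any memory effect, and on recent GPUs ordinary global memory reads also go through the cache hierarchy. One way to check for yourself is to time a larger neighborhood-style kernel with CUDA events, once reading global memory and once through a texture object over the same linear buffer (tex1Dfetch). The sketch below does that; the names are hypothetical, the input is all zeros because only timing matters, and no particular result is implied, since which version is faster depends on the GPU and the access pattern.

```cuda
#include <cuda_runtime.h>

// 3-point average reading plain global memory.
__global__ void avg3_global(const float* in, float* out, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i > 0 && i < n - 1) out[i] = (in[i - 1] + in[i] + in[i + 1]) / 3.0f;
}

// Same stencil, reading through a texture object over linear memory.
__global__ void avg3_texture(cudaTextureObject_t tex, float* out, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i > 0 && i < n - 1)
        out[i] = (tex1Dfetch<float>(tex, i - 1) + tex1Dfetch<float>(tex, i) +
                  tex1Dfetch<float>(tex, i + 1)) / 3.0f;
}

// Hypothetical helper: times one launch of each kernel (after a warm-up
// launch of each) with CUDA events and reports milliseconds.
bool time_avg3(int n, float* ms_global, float* ms_texture)
{
    size_t bytes = n * sizeof(float);
    float *d_in = nullptr, *d_out = nullptr;
    cudaMalloc(&d_in, bytes);
    cudaMalloc(&d_out, bytes);
    cudaMemset(d_in, 0, bytes);

    // Texture object over the same linear buffer that avg3_global reads.
    cudaResourceDesc res = {};
    res.resType = cudaResourceTypeLinear;
    res.res.linear.devPtr = d_in;
    res.res.linear.desc = cudaCreateChannelDesc<float>();
    res.res.linear.sizeInBytes = bytes;
    cudaTextureDesc td = {};
    td.readMode = cudaReadModeElementType;
    cudaTextureObject_t tex = 0;
    cudaCreateTextureObject(&tex, &res, &td, nullptr);

    cudaEvent_t start, stop;
    cudaEventCreate(&start);
    cudaEventCreate(&stop);
    int threads = 256, blocks = (n + threads - 1) / threads;

    avg3_global<<<blocks, threads>>>(d_in, d_out, n);   // warm-up
    avg3_texture<<<blocks, threads>>>(tex, d_out, n);   // warm-up

    cudaEventRecord(start);
    avg3_global<<<blocks, threads>>>(d_in, d_out, n);
    cudaEventRecord(stop);
    cudaEventSynchronize(stop);
    cudaEventElapsedTime(ms_global, start, stop);

    cudaEventRecord(start);
    avg3_texture<<<blocks, threads>>>(tex, d_out, n);
    cudaEventRecord(stop);
    cudaEventSynchronize(stop);
    cudaEventElapsedTime(ms_texture, start, stop);

    bool ok = cudaGetLastError() == cudaSuccess;
    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    cudaDestroyTextureObject(tex);
    cudaFree(d_in);
    cudaFree(d_out);
    return ok;
}
```

A single timed launch is only a rough indication; repeating each measurement several times and trying different sizes of n gives a more reliable comparison.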

Learning materials

Computer vision programming based on GPU acceleration