1. Tool preparation

Computer: Mac; IDE: Android Studio 3.4; native build system: CMake

2. Development steps

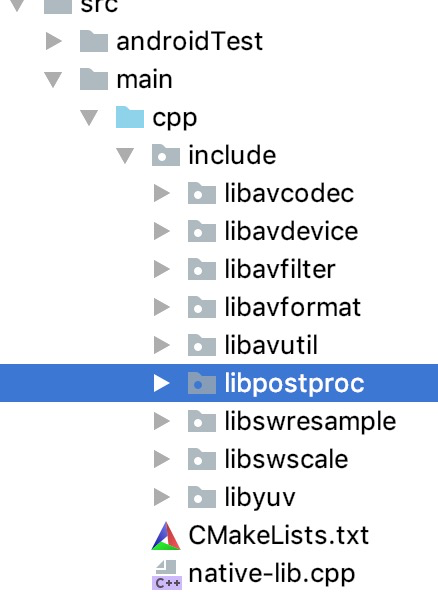

- Create a new module project

- Under src/main, create a cpp folder and add a CMakeLists.txt file to it:

```cmake
cmake_minimum_required(VERSION 3.4.1)

# FFmpeg headers, copied into src/main/cpp/include
include_directories(${CMAKE_SOURCE_DIR}/include)

# Compile every .cpp and .c file under src/main/cpp into the ffmpeg library
file(GLOB_RECURSE SOURCES ${CMAKE_SOURCE_DIR}/*.cpp ${CMAKE_SOURCE_DIR}/*.c)

# Import the system log library
find_library(log-lib log)

#add_library(yuv SHARED IMPORTED)
#set_target_properties(yuv PROPERTIES IMPORTED_LOCATION
#        ${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}/libyuv.so)

# Import the prebuilt FFmpeg shared libraries from src/main/jniLibs/<ABI>/
add_library(avcodec-56 SHARED IMPORTED)
set_target_properties(avcodec-56 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}/libavcodec-56.so)

add_library(avdevice-56 SHARED IMPORTED)
set_target_properties(avdevice-56 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}/libavdevice-56.so)

add_library(avfilter-5 SHARED IMPORTED)
set_target_properties(avfilter-5 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}/libavfilter-5.so)

add_library(avformat-56 SHARED IMPORTED)
set_target_properties(avformat-56 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}/libavformat-56.so)

add_library(avutil-54 SHARED IMPORTED)
set_target_properties(avutil-54 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}/libavutil-54.so)

add_library(postproc-53 SHARED IMPORTED)
set_target_properties(postproc-53 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}/libpostproc-53.so)

add_library(swresample-1 SHARED IMPORTED)
set_target_properties(swresample-1 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}/libswresample-1.so)

add_library(swscale-3 SHARED IMPORTED)
set_target_properties(swscale-3 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}/libswscale-3.so)

#set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=gnu++11")

add_library(ffmpeg        # Name of the library
        SHARED            # Build it as a shared library
        ${SOURCES})       # Source files collected by file(GLOB_RECURSE ...) above

target_link_libraries(ffmpeg
        # yuv
        avutil-54
        swresample-1
        avcodec-56
        avformat-56
        swscale-3
        postproc-53
        avfilter-5
        avdevice-56
        ${log-lib})
```

- Add the native-lib.cpp file under the cpp directory:

```cpp
#include <jni.h>
#include <string>
#include <cstdio>
#include <cstdlib>
#include <android/log.h>

using namespace std;

// FFmpeg is a C library, so its headers must be wrapped in extern "C"
// when they are included from C++.
extern "C" {
// Encoding and decoding
#include "libavcodec/avcodec.h"
// Container (format) handling
#include "libavformat/avformat.h"
// Pixel format conversion and scaling
#include "libswscale/swscale.h"
#include "libavutil/avutil.h"
#include "libavutil/frame.h"
}

#define LOGI(FORMAT, ...) __android_log_print(ANDROID_LOG_INFO, "jason", FORMAT, ##__VA_ARGS__)
#define LOGE(FORMAT, ...) __android_log_print(ANDROID_LOG_ERROR, "jason", FORMAT, ##__VA_ARGS__)

extern "C" JNIEXPORT jstring JNICALL
Java_com_lee_jniffmpeg_MainActivity_stringFromJNI(JNIEnv *env, jobject /* this */) {
    string hello = "Hello from C++";
    return env->NewStringUTF(hello.c_str());
}

// Decodes the first video stream of input_path and writes every frame to
// output_path as raw YUV420P. Every exit path releases what it allocated.
static void decode_to_yuv(const char *input_path, const char *output_path) {
    // 1. Register all muxers, demuxers and codecs (needed in FFmpeg versions
    //    before 4.0, such as the avcodec-56 build used here)
    av_register_all();

    // Format context: the top-level struct that holds the container-format
    // information of the video file
    AVFormatContext *pFormatCtx = avformat_alloc_context();

    // 2. Open the input video file (on failure, pFormatCtx is freed for us)
    if (avformat_open_input(&pFormatCtx, input_path, NULL, NULL) != 0) {
        LOGE("%s", "Unable to open input video file");
        return;
    }

    // 3. Read the stream information of the file
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        LOGE("%s", "Unable to get video file information");
        avformat_close_input(&pFormatCtx);
        return;
    }

    // Walk all streams (audio, video, subtitles, ...) to find the video stream index
    int v_stream_idx = -1;
    for (unsigned int i = 0; i < pFormatCtx->nb_streams; i++) {
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            v_stream_idx = static_cast<int>(i);
            break;
        }
    }
    if (v_stream_idx == -1) {
        LOGE("%s", "Video stream not found");
        avformat_close_input(&pFormatCtx);
        return;
    }

    // The decoder is chosen by the stream's codec ID, so get its codec context first
    AVCodecContext *pCodecCtx = pFormatCtx->streams[v_stream_idx]->codec;

    // 4. Find a decoder for that codec ID
    AVCodec *pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL) {
        // This FFmpeg build does not include a decoder for the codec
        LOGE("%s", "Decoder not found");
        avformat_close_input(&pFormatCtx);
        return;
    }

    // 5. Open the decoder
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        LOGE("%s", "Decoder cannot be opened");
        avformat_close_input(&pFormatCtx);
        return;
    }

    FILE *fp_yuv = fopen(output_path, "wb");
    if (fp_yuv == NULL) {
        LOGE("Unable to open output file %s", output_path);
        avcodec_close(pCodecCtx);
        avformat_close_input(&pFormatCtx);
        return;
    }

    // Print some information about the video
    LOGI("Container format: %s", pFormatCtx->iformat->name);
    // duration is in AV_TIME_BASE units (microseconds); divide before narrowing to int
    int time = static_cast<int>(pFormatCtx->duration / AV_TIME_BASE);
    LOGI("Duration: %d s", time);
    LOGI("Width and height: %d x %d", pCodecCtx->width, pCodecCtx->height);
    LOGI("Decoder: %s", pCodec->name);

    // AVPacket holds one packet of compressed data (e.g. H.264)
    AVPacket *packet = (AVPacket *) av_malloc(sizeof(AVPacket));
    av_init_packet(packet);
    // AVFrame holds decoded pixel data (YUV)
    AVFrame *pFrame = av_frame_alloc();
    // Destination frame in YUV420P
    AVFrame *pFrameYUV = av_frame_alloc();

    // av_frame_alloc() allocates only the struct; the pixel buffer must be sized from
    // the pixel format and picture size, then attached to the frame
    uint8_t *out_buffer = (uint8_t *) av_malloc(
            avpicture_get_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height));
    avpicture_fill((AVPicture *) pFrameYUV, out_buffer, AV_PIX_FMT_YUV420P,
                   pCodecCtx->width, pCodecCtx->height);

    // Conversion (scaling) parameters: source size and format, destination size and format
    struct SwsContext *sws_ctx = sws_getContext(
            pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
            pCodecCtx->width, pCodecCtx->height, AV_PIX_FMT_YUV420P,
            SWS_BICUBIC, NULL, NULL, NULL);

    int got_picture, ret;
    int frame_count = 0;

    // 6. Read the compressed data packet by packet
    while (av_read_frame(pFormatCtx, packet) >= 0) {
        // Only handle packets that belong to the video stream
        if (packet->stream_index == v_stream_idx) {
            // 7. Decode one packet of compressed video into pixel data
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
            if (ret < 0) {
                LOGE("%s", "Decoding error");
                av_free_packet(packet);
                break;
            }
            // got_picture is non-zero once a complete frame has been decoded
            if (got_picture) {
                // Convert to YUV420P. sws_scale works row by row; its arguments are the
                // source planes and line sizes, the first source row (0), the number of
                // source rows (the height), and the destination planes and line sizes.
                sws_scale(sws_ctx, pFrame->data, pFrame->linesize, 0, pCodecCtx->height,
                          pFrameYUV->data, pFrameYUV->linesize);

                // Write the frame. Y is luma (brightness), U and V are chroma. The eye is
                // more sensitive to brightness, so U and V each hold 1/4 as many samples
                // as Y (assuming even width and height). out_buffer has no row padding,
                // so each plane can be written with a single fwrite.
                int y_size = pCodecCtx->width * pCodecCtx->height;
                fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv);
                fwrite(pFrameYUV->data[1], 1, y_size / 4, fp_yuv);
                fwrite(pFrameYUV->data[2], 1, y_size / 4, fp_yuv);

                frame_count++;
                LOGI("Decoded frame %d", frame_count);
            }
        }
        // Release the packet's data before reading the next one
        av_free_packet(packet);
    }

    // Release resources
    fclose(fp_yuv);
    av_free(packet);
    sws_freeContext(sws_ctx);
    av_free(out_buffer);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    avcodec_close(pCodecCtx);
    avformat_close_input(&pFormatCtx);
}

extern "C" JNIEXPORT void JNICALL
Java_com_lee_jniffmpeg_VideoUtils_decode(JNIEnv *env, jclass clazz, jstring input_jstr,
                                         jstring output_jstr) {
    // Input video file to decode, and the path of the raw YUV output file
    const char *input_cstr = env->GetStringUTFChars(input_jstr, nullptr);
    const char *output_cstr = env->GetStringUTFChars(output_jstr, nullptr);
    decode_to_yuv(input_cstr, output_cstr);
    env->ReleaseStringUTFChars(input_jstr, input_cstr);
    env->ReleaseStringUTFChars(output_jstr, output_cstr);
}
```

- Modify the build.gradle file:

```groovy
apply plugin: 'com.android.application'
apply plugin: 'kotlin-android'
apply plugin: 'kotlin-android-extensions'

android {
    compileSdkVersion 28
    defaultConfig {
        applicationId "com.lee.jniffmpeg"
        minSdkVersion 15
        targetSdkVersion 28
        versionCode 1
        versionName "1.0"
        testInstrumentationRunner "android.support.test.runner.AndroidJUnitRunner"
        externalNativeBuild {
            cmake {
                cppFlags "-frtti -fexceptions"
            }
        }
        ndk {
            // Name of the generated .so (with CMake, the name actually comes from
            // add_library(ffmpeg ...) in CMakeLists.txt)
            moduleName "ffmpeg"
            // Build the .so for these four ABIs; jniLibs/<ABI>/ must contain the
            // prebuilt FFmpeg libraries for each of them
            abiFilters 'x86', 'x86_64', 'armeabi-v7a', 'arm64-v8a'
        }
    }
    buildTypes {
        release {
            minifyEnabled false
            proguardFiles getDefaultProguardFile('proguard-android-optimize.txt'), 'proguard-rules.pro'
        }
    }
    externalNativeBuild {
        cmake {
            path file('src/main/cpp/CMakeLists.txt')
        }
    }
}

dependencies {
    implementation fileTree(dir: 'libs', include: ['*.jar'])
    implementation "org.jetbrains.kotlin:kotlin-stdlib-jdk7:$kotlin_version"
    implementation 'com.android.support:appcompat-v7:28.0.0'
    implementation 'com.android.support.constraint:constraint-layout:1.1.3'
    testImplementation 'junit:junit:4.12'
    androidTestImplementation 'com.android.support.test:runner:1.0.2'
    androidTestImplementation 'com.android.support.test.espresso:espresso-core:3.0.2'
}
```
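Because abiFilters lists four ABIs and CMakeLists.txt imports each FFmpeg library from jniLibs/${ANDROID_ABI}/, every ABI folder needs all eight .so files, and a missing one typically surfaces only as a build error. The following is a minimal sketch of a host-side check, written in C++17 with std::filesystem. The names missing_libs, kAbis and kLibs are hypothetical, and the two lists are simply copied from the build.gradle and CMakeLists.txt above.

```cpp
#include <filesystem>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// ABIs listed in abiFilters in build.gradle
const std::vector<std::string> kAbis = {"x86", "x86_64", "armeabi-v7a", "arm64-v8a"};

// Prebuilt libraries imported in CMakeLists.txt
const std::vector<std::string> kLibs = {
    "libavcodec-56.so", "libavdevice-56.so", "libavfilter-5.so",   "libavformat-56.so",
    "libavutil-54.so",  "libpostproc-53.so", "libswresample-1.so", "libswscale-3.so"};

// Returns "<abi>/<lib>" for every expected library that is not a regular file
// under jni_libs_dir (for example app/src/main/jniLibs).
std::vector<std::string> missing_libs(const fs::path &jni_libs_dir) {
    std::vector<std::string> missing;
    for (const std::string &abi : kAbis) {
        for (const std::string &lib : kLibs) {
            if (!fs::is_regular_file(jni_libs_dir / abi / lib)) {
                missing.push_back(abi + "/" + lib);
            }
        }
    }
    return missing;
}
```

For example, calling missing_libs("app/src/main/jniLibs") from a small main() (the path assumes the module is named app) and printing each entry lists anything still to be copied, such as x86/libavcodec-56.so.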

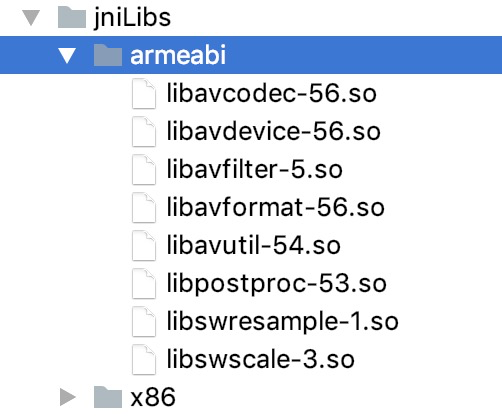

- Copy the compiled FFmpeg files into place: the headers into the cpp folder (cpp/include) and the .so files into jniLibs/<ABI>/. Then choose Build > Refresh Linked C++ Projects and build the project.

- Write the native method declaration:

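```java
package com.lee.jniffmpeg;

public class VideoUtils {

    // Implemented in native-lib.cpp as Java_com_lee_jniffmpeg_VideoUtils_decode
    public static native void decode(String input, String output);

    static {
        // Loads libffmpeg.so, the library defined by add_library(ffmpeg ...) in CMakeLists.txt
        System.loadLibrary("ffmpeg");
    }
}
```

- Done.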
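After a run, the output file holds raw YUV420P frames with no header, so its size is a quick sanity check: each frame is a full-size Y plane followed by U and V planes at half width and half height, rounded up for odd sizes. The decoder above writes y_size / 4 bytes per chroma plane, which matches only for even dimensions, and it writes each plane in one fwrite because avpicture_fill packs out_buffer without row padding. The sketch below shows the general arithmetic and a row-by-row writer for planes whose linesize is larger than the visible width. yuv420p_plane_sizes, yuv420p_frame_size and write_plane are hypothetical helpers, not FFmpeg functions.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdio>

// Byte sizes of the three planes of one YUV420P image.
struct PlaneSizes {
    std::size_t y, u, v;
};

// Chroma planes are subsampled by 2 horizontally and vertically, rounding up,
// which is how FFmpeg sizes them for odd widths and heights.
PlaneSizes yuv420p_plane_sizes(int width, int height) {
    std::size_t chroma_w = static_cast<std::size_t>(width + 1) / 2;
    std::size_t chroma_h = static_cast<std::size_t>(height + 1) / 2;
    std::size_t luma = static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    return {luma, chroma_w * chroma_h, chroma_w * chroma_h};
}

// Total bytes of one packed (unpadded) YUV420P frame.
std::size_t yuv420p_frame_size(int width, int height) {
    PlaneSizes p = yuv420p_plane_sizes(width, height);
    return p.y + p.u + p.v;
}

// Writes `rows` rows of `row_width` bytes from a plane whose rows start
// `linesize` bytes apart, skipping any padding at the end of each row.
// Returns the number of bytes written.
std::size_t write_plane(const std::uint8_t *data, int linesize, int row_width, int rows,
                        std::FILE *fp) {
    std::size_t written = 0;
    for (int r = 0; r < rows; ++r) {
        const std::uint8_t *row = data + static_cast<std::ptrdiff_t>(r) * linesize;
        written += std::fwrite(row, 1, static_cast<std::size_t>(row_width), fp);
    }
    return written;
}
```

For a 1920 x 1080 video, yuv420p_frame_size(1920, 1080) is 3110400 bytes, so the output file should be frame_count times that. write_plane(frame->data[0], frame->linesize[0], width, height, fp) would write a Y plane correctly even for a frame taken straight from the decoder with padded rows; for U and V, pass the rounded-up chroma width and height.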