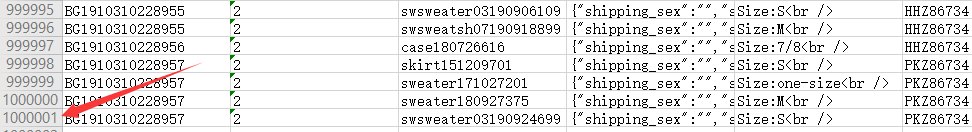

Yesterday, the product put forward an urgent demand to export the commodity data shipped in November, write SQL and send it to DBA for execution, and then get more than 30 Excel files of 100W data. There is a property that exists in a field in the table in JSON format. You need to process the excel file to extract it separately (as shown in the fourth column of the figure).

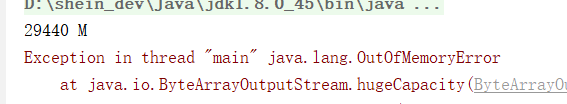

The handler is also written in the process of data export. The general idea is to read in Excel and build a Workbook object, then convert the value of the specified column, and finally write it back to the original file. Nice idea, sad result, OOM. Even if Xmx and Xms are maximized, it doesn't help.

The system usually does not encounter this problem when importing or exporting. The size of the uploaded file is limited when importing, and the maximum number of exports is set in the application when exporting. The export directly from the database is written with SQL and handed over to the DBA for processing. They have their own tools.

Based on the excellent quality of solving difficulties, this paper summarizes the SXSSF in POI, which deals with large amount of data.

1, HSSF, XSSF and SXSSF

The first is HSSF, which supports versions of 2003 and below, that is, Excel files at the end of. xls. Only 65536 pieces of data can be stored.

Then there is XSSF, which supports the version 2007 and above, that is, the Excel file at the end of. xlsx. Although a single Sheet supports 1048576 pieces of data, its performance is not good, and so much data is easy to OOM in memory.

The last is SXSSF, which uses different storage methods, not to mention specifically, as long as you know how to use it in large quantities. Well, it only supports. xlsx files. Just look at its name: "S" + "XSSF". S means SAX event driven mode.

2, SXSSF export data

This is relatively simple. Sxsssf also implements the Workbook interface, so it's similar to HSSF and XSSF, except that the way to build instances is different.

Its basic idea is: when the data in memory is enough for a certain number of lines (the constructor can be set), it will first brush to the hard disk. However, you don't really need to read 100W data into memory at one time. You should load it into memory in batches according to the total amount (for example, reading from the database requires paging).

Go straight to the code

/**

* POI export

*

* @author Zhou Huanghua

* @date 2020/1/11 14:03

*/

public class PoiExport {

private static final Logger LOGGER = Logger.getLogger(MethodHandles.lookup().lookupClass().getName());

public static void main(String[] args) throws Exception {

long begin = System.currentTimeMillis();

// keep 100 rows in memory, exceeding rows will be flushed to disk

try (SXSSFWorkbook wb = new SXSSFWorkbook(100);

OutputStream os = new FileOutputStream("C:/Users/dell/Desktop/tmp/demo.xlsx")) {

Sheet sh = wb.createSheet();

String val = "The first%s Xing di%s column";

for (int rowNum = 0; rowNum < 100_0000; rowNum++) {

Row row = sh.createRow(rowNum);

int realRowNum = rowNum + 1;

Cell cell1 = row.createCell(0);

cell1.setCellValue(format(val, realRowNum, 1));

Cell cell2 = row.createCell(1);

cell2.setCellValue(format(val, realRowNum, 2));

Cell cell3 = row.createCell(2);

cell3.setCellValue(format(val, realRowNum, 3));

Cell cell4 = row.createCell(3);

cell4.setCellValue(format(val, realRowNum, 4));

}

wb.write(os);

}

LOGGER.info("Export 100 W Row data time (seconds):" + (System.currentTimeMillis() - begin)/1000);

}

}The test results in a 17M file, which takes 17 seconds.

3, SXSSF import data

This is more complex, but the steps are also unified. The difference is that you need to implement your own SheetContentsHandler, which I call content processor.

Direct code

/**

* POI Import

*

* @author Zhou Huanghua

* @date 2020/1/11 14:02

*/

public class PoiImport {

private static final Logger LOGGER = Logger.getLogger(MethodHandles.lookup().lookupClass().getName());

public static void main(String[] args) throws Exception {

String filePath = "C:/Users/dell/Desktop/tmp/demo.xlsx";

// OPCPackage.open(...) has multiple overloaded methods, such as String path, File file, InputStream in, etc

try (OPCPackage opcPackage = OPCPackage.open(filePath);) {

// Create XSSFReader to read stylestab and readonlysharedstringstab

XSSFReader xssfReader = new XSSFReader(opcPackage);

StylesTable stylesTable = xssfReader.getStylesTable();

ReadOnlySharedStringsTable sharedStringsTable = new ReadOnlySharedStringsTable(opcPackage);

// Create XMLReader and set ContentHandler

XMLReader xmlReader = SAXHelper.newXMLReader();

xmlReader.setContentHandler(new XSSFSheetXMLHandler(stylesTable, sharedStringsTable, new SimpleSheetContentsHandler(), false));

// Parse each Sheet data

Iterator<InputStream> sheetsData = xssfReader.getSheetsData();

while (sheetsData.hasNext()) {

try (InputStream inputStream = sheetsData.next();) {

xmlReader.parse(new InputSource(inputStream));

}

}

}

}

/**

* Content processor

*/

public static class SimpleSheetContentsHandler implements XSSFSheetXMLHandler.SheetContentsHandler {

protected List<String> row;

/**

* A row with the (zero based) row number has started

*

* @param rowNum

*/

@Override

public void startRow(int rowNum) {

row = new ArrayList<>();

}

/**

* A row with the (zero based) row number has ended

*

* @param rowNum

*/

@Override

public void endRow(int rowNum) {

if (row.isEmpty()) {

return;

}

// Processing data

LOGGER.info(row.stream().collect(Collectors.joining(" ")));

}

/**

* A cell, with the given formatted value (may be null),

* and possibly a comment (may be null), was encountered

*

* @param cellReference

* @param formattedValue

* @param comment

*/

@Override

public void cell(String cellReference, String formattedValue, XSSFComment comment) {

row.add(formattedValue);

}

/**

* A header or footer has been encountered

*

* @param text

* @param isHeader

* @param tagName

*/

@Override

public void headerFooter(String text, boolean isHeader, String tagName) {

}

}

}At present, it's just a line of data to be parsed, which depends on whether you can accept the usage scenario. If it's asynchronous, it's OK.

If you think the data volume and the heap memory of your JVM are OK, you can pass a collection to collect each row of data in the constructor of SimpleSheetContentsHandler, and then process the data uniformly after all the data are parsed. BUT, since you use SXSSF, there is an OOM risk in doing so.

It's better to collect each row of data one by one. However, it needs to reach a certain number of rows before batch processing, and then clear the collection for re collection. Before you can figure out how to write the code gracefully, you should not make a fool of yourself.

By the way, the maven dependency I use is

<dependency>

<groupId>org.apache.poi</groupId>

<artifactId>poi</artifactId>

<version>3.17</version>

</dependency>

<dependency>

<groupId>org.apache.poi</groupId>

<artifactId>poi-ooxml</artifactId>

<version>3.17</version>

</dependency>demo code address: https://github.com/zhouhuanghua/poi-demo.