Introduction to Data Lake Analytics (DLA)

For more reading about the concept of Data Lake, you can refer to:

https://en.wikipedia.org/wiki/Data_lake

And AWS and Azure's interpretation of Data Lake:

https://amazonaws-china.com/big-data/datalakes-and-analytics/what-is-a-data-lake/

https://azure.microsoft.com/en-us/solutions/data-lake/

Finally, Aliyun now has its own data Lake analysis products: https://www.aliyun.com/product/datalakeanalytics

You can click on the application (the current public testing stage is still an invitation mode, we will approve the application as soon as possible), and experience the data analysis journey of TPC-H CSV data format in this tutorial.

Product documentation: https://help.aliyun.com/product/70174.html

1. Opening Data Lake Analytics and OSS Services

If you have already started, you can skip this step. If not, you can refer to: https://help.aliyun.com/document_detail/70386.html

Apply for product opening service.

2. Download TPC-H Test Data Set

You can download the TPC-H 100MB dataset from here:

https://public-datasets-cn-hangzhou.oss-cn-hangzhou.aliyuncs.com/tpch_100m_data.zip

3. Upload data files to OSS

OSS console on Aliyun official website: https://oss.console.aliyun.com/overview

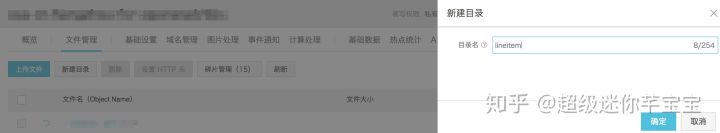

Plan the OSS bucket you want to use. After creating or selecting it, click on File Management, because there are eight data files, create a corresponding file directory for each data file:

Create 8 directories as follows:

Click to enter the directory and upload the corresponding data files, such as the customer directory, then upload the customer.tbl file.

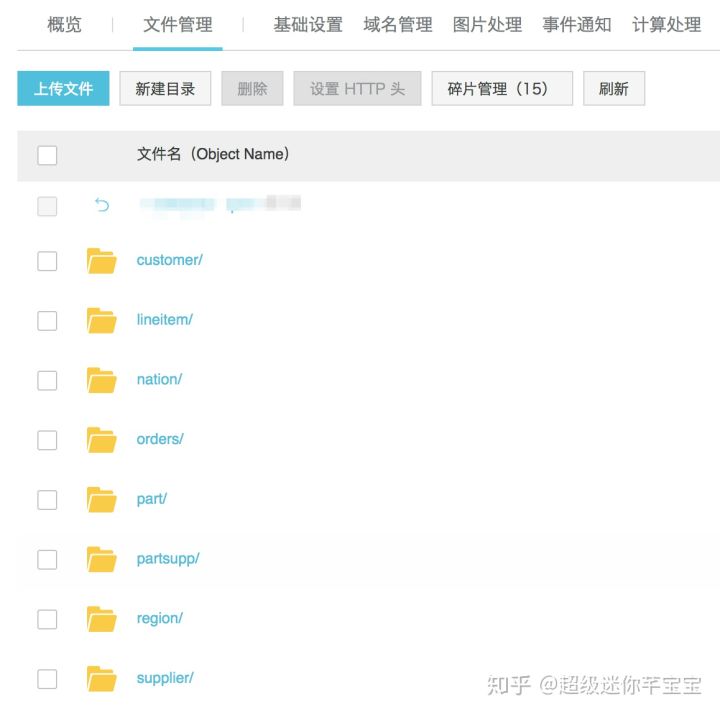

After uploading, as shown below. Then, the other seven data files are uploaded to the corresponding directory in turn.

So far, eight data files have been uploaded to your OSS bucket:

oss://xxx/tpch_100m/customer/customer.tbl oss://xxx/tpch_100m/lineitem/lineitem.tbl oss://xxx/tpch_100m/nation/nation.tbl oss://xxx/tpch_100m/orders/orders.tbl oss://xxx/tpch_100m/part/part.tbl oss://xxx/tpch_100m/partsupp/partsupp.tbl oss://xxx/tpch_100m/region/region.tbl oss://xxx/tpch_100m/supplier/supplier.tbl

4. Log on to the Data Lake Analytics console

https://openanalytics.console.aliyun.com/

Click "Log in to the database", enter the username and password assigned when opening the service, and log in to the Data Lake Analysis console.

5. Create Schema and Table

Enter the statement to create SCHEMA and click Synchronize Execution.

CREATE SCHEMA tpch_100m with DBPROPERTIES( LOCATION = 'oss://test-bucket-julian-1/tpch_100m/', catalog='oss' );

(Note: At present, the schema name of Data Lake Analytics is globally unique in the same Aliyun region. It is suggested that the schema name should be based on the business definition as far as possible. There is already a renamed schema. When creating the schema, an error will be prompted. Please change the schema name.)

When Schema is created, in the drop-down box of the Database, select the schema you just created. Then enter the table-building statement in the SQL text box and click Synchronize Execution.

Grammatical Reference for Table-building Statements: https://help.aliyun.com/document_detail/72006.html

The table-building statements of eight tables corresponding to TPC-H are as follows, which are pasted into the document box and executed separately. (The location of data files in the LOCATION clause should be modified according to your actual OSS bucket directory.) (Note: At present, there is no support for multiple SQL statements in the console. Please execute a single statement.)

CREATE EXTERNAL TABLE nation ( N_NATIONKEY INT, N_NAME STRING, N_ID STRING, N_REGIONKEY INT, N_COMMENT STRING ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' STORED AS TEXTFILE LOCATION 'oss://test-bucket-julian-1/tpch_100m/nation'; CREATE EXTERNAL TABLE lineitem ( L_ORDERKEY INT, L_PARTKEY INT, L_SUPPKEY INT, L_LINENUMBER INT, L_QUANTITY DOUBLE, L_EXTENDEDPRICE DOUBLE, L_DISCOUNT DOUBLE, L_TAX DOUBLE, L_RETURNFLAG STRING, L_LINESTATUS STRING, L_SHIPDATE DATE, L_COMMITDATE DATE, L_RECEIPTDATE DATE, L_SHIPINSTRUCT STRING, L_SHIPMODE STRING, L_COMMENT STRING ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' STORED AS TEXTFILE LOCATION 'oss://test-bucket-julian-1/tpch_100m/lineitem'; CREATE EXTERNAL TABLE orders ( O_ORDERKEY INT, O_CUSTKEY INT, O_ORDERSTATUS STRING, O_TOTALPRICE DOUBLE, O_ORDERDATE DATE, O_ORDERPRIORITY STRING, O_CLERK STRING, O_SHIPPRIORITY INT, O_COMMENT STRING ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' STORED AS TEXTFILE LOCATION 'oss://test-bucket-julian-1/tpch_100m/orders'; CREATE EXTERNAL TABLE supplier ( S_SUPPKEY INT, S_NAME STRING, S_ADDRESS STRING, S_NATIONKEY INT, S_PHONE STRING, S_ACCTBAL DOUBLE, S_COMMENT STRING ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' STORED AS TEXTFILE LOCATION 'oss://test-bucket-julian-1/tpch_100m/supplier'; CREATE EXTERNAL TABLE partsupp ( PS_PARTKEY INT, PS_SUPPKEY INT, PS_AVAILQTY INT, PS_SUPPLYCOST DOUBLE, PS_COMMENT STRING ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' STORED AS TEXTFILE LOCATION 'oss://test-bucket-julian-1/tpch_100m/partsupp'; CREATE EXTERNAL TABLE customer ( C_CUSTKEY INT, C_NAME STRING, C_ADDRESS STRING, C_NATIONKEY INT, C_PHONE STRING, C_ACCTBAL DOUBLE, C_MKTSEGMENT STRING, C_COMMENT STRING ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' STORED AS TEXTFILE LOCATION 'oss://test-bucket-julian-1/tpch_100m/customer'; CREATE EXTERNAL TABLE part ( P_PARTKEY INT, P_NAME STRING, P_MFGR STRING, P_BRAND STRING, P_TYPE STRING, P_SIZE INT, P_CONTAINER STRING, P_RETAILPRICE DOUBLE, P_COMMENT STRING ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' STORED AS TEXTFILE LOCATION 'oss://test-bucket-julian-1/tpch_100m/part'; CREATE EXTERNAL TABLE region ( R_REGIONKEY INT, R_NAME STRING, R_COMMENT STRING ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' STORED AS TEXTFILE LOCATION 'oss://test-bucket-julian-1/tpch_100m/region';

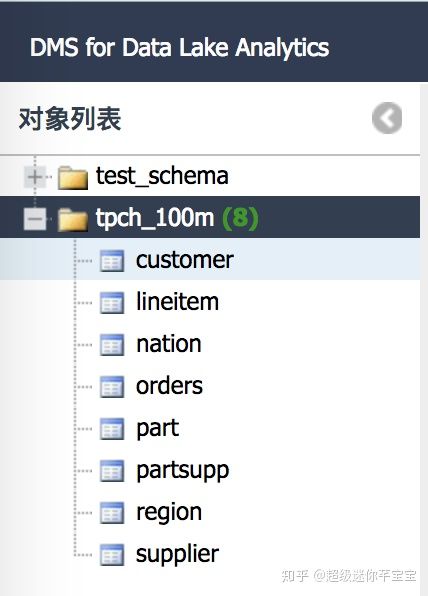

After the table is built, refresh the page and you can see eight tables under schema in the left navigation bar.

6. Perform TPC-H queries

TPC-H has 22 queries as follows:

Q1:

SELECT l_returnflag, l_linestatus, Sum(l_quantity) AS sum_qty, Sum(l_extendedprice) AS sum_base_price, Sum(l_extendedprice * (1 - l_discount)) AS sum_disc_price, Sum(l_extendedprice * (1 - l_discount) * (1 + l_tax)) AS sum_charge, Avg(l_quantity) AS avg_qty, Avg(l_extendedprice) AS avg_price, Avg(l_discount) AS avg_disc, Count(*) AS count_order FROM lineitem WHERE l_shipdate <= date '1998-12-01' - INTERVAL '93' day GROUP BY l_returnflag, l_linestatus ORDER BY l_returnflag, l_linestatus LIMIT 1;

Q2:

SELECT s_acctbal, s_name, n_name, p_partkey, p_mfgr, s_address, s_phone, s_comment FROM part, supplier, partsupp, nation, region WHERE p_partkey = ps_partkey AND s_suppkey = ps_suppkey AND p_size = 35 AND p_type LIKE '%NICKEL' AND s_nationkey = n_nationkey AND n_regionkey = r_regionkey AND r_name = 'MIDDLE EAST'

Q3:

SELECT l_orderkey, Sum(l_extendedprice * (1 - l_discount)) AS revenue, o_orderdate, o_shippriority FROM customer, orders, lineitem WHERE c_mktsegment = 'AUTOMOBILE' AND c_custkey = o_custkey AND l_orderkey = o_orderkey AND o_orderdate < date '1995-03-31' AND l_shipdate > date '1995-03-31' GROUP BY l_orderkey, o_orderdate, o_shippriority ORDER BY revenue DESC, o_orderdate LIMIT 10;

Q4:

SELECT o_orderpriority, Count(*) AS order_count FROM orders, lineitem WHERE o_orderdate >= date '1997-10-01' AND o_orderdate < date '1997-10-01' + INTERVAL '3' month AND l_orderkey = o_orderkey AND l_commitdate < l_receiptdate GROUP BY o_orderpriority ORDER BY o_orderpriority LIMIT 1;

Q5:

SELECT n_name, Sum(l_extendedprice * (1 - l_discount)) AS revenue FROM customer, orders, lineitem, supplier, nation, region WHERE c_custkey = o_custkey AND l_orderkey = o_orderkey AND l_suppkey = s_suppkey AND c_nationkey = s_nationkey AND s_nationkey = n_nationkey AND n_regionkey = r_regionkey AND r_name = 'ASIA' AND o_orderdate >= date '1995-01-01' AND o_orderdate < date '1995-01-01' + INTERVAL '1' year GROUP BY n_name ORDER BY revenue DESC LIMIT 1;

Q6:

SELECT sum(l_extendedprice * l_discount) AS revenue FROM lineitem WHERE l_shipdate >= date '1995-01-01' AND l_shipdate < date '1995-01-01' + interval '1' year AND l_discount between 0.04 - 0.01 AND 0.04 + 0.01 AND l_quantity < 24 LIMIT 1;

Q7:

SELECT supp_nation, cust_nation, l_year, Sum(volume) AS revenue FROM ( SELECT n1.n_name AS supp_nation, n2.n_name AS cust_nation, Extract(year FROM l_shipdate) AS l_year, l_extendedprice * (1 - l_discount) AS volume FROM supplier, lineitem, orders, customer, nation n1, nation n2 WHERE s_suppkey = l_suppkey AND o_orderkey = l_orderkey AND c_custkey = o_custkey AND s_nationkey = n1.n_nationkey AND c_nationkey = n2.n_nationkey AND ( ( n1.n_name = 'GERMANY' AND n2.n_name = 'INDIA') OR ( n1.n_name = 'INDIA' AND n2.n_name = 'GERMANY') ) AND l_shipdate BETWEEN date '1995-01-01' AND date '1996-12-31' ) AS shipping GROUP BY supp_nation, cust_nation, l_year ORDER BY supp_nation, cust_nation, l_year LIMIT 1;

Q8:

SELECT o_year, Sum( CASE WHEN nation = 'INDIA' THEN volume ELSE 0 end) / Sum(volume) AS mkt_share FROM ( SELECT Extract(year FROM o_orderdate) AS o_year, l_extendedprice * (1 - l_discount) AS volume, n2.n_name AS nation FROM part, supplier, lineitem, orders, customer, nation n1, nation n2, region WHERE p_partkey = l_partkey AND s_suppkey = l_suppkey AND l_orderkey = o_orderkey AND o_custkey = c_custkey AND c_nationkey = n1.n_nationkey AND n1.n_regionkey = r_regionkey AND r_name = 'ASIA' AND s_nationkey = n2.n_nationkey AND o_orderdate BETWEEN date '1995-01-01' AND date '1996-12-31' AND p_type = 'STANDARD ANODIZED STEEL' ) AS all_nations GROUP BY o_year ORDER BY o_year LIMIT 1;

Q9:

SELECT nation, o_year, Sum(amount) AS sum_profit FROM ( SELECT n_name AS nation, Extract(year FROM o_orderdate) AS o_year, l_extendedprice * (1 - l_discount) - ps_supplycost * l_quantity AS amount FROM part, supplier, lineitem, partsupp, orders, nation WHERE s_suppkey = l_suppkey AND ps_suppkey = l_suppkey AND ps_partkey = l_partkey AND p_partkey = l_partkey AND o_orderkey = l_orderkey AND s_nationkey = n_nationkey AND p_name LIKE '%aquamarine%' ) AS profit GROUP BY nation, o_year ORDER BY nation, o_year DESC LIMIT 1;

Q10:

SELECT c_custkey, c_name, Sum(l_extendedprice * (1 - l_discount)) AS revenue, c_acctbal, n_name, c_address, c_phone, c_comment FROM customer, orders, lineitem, nation WHERE c_custkey = o_custkey AND l_orderkey = o_orderkey AND o_orderdate >= date '1994-08-01' AND o_orderdate < date '1994-08-01' + INTERVAL '3' month AND l_returnflag = 'R' AND c_nationkey = n_nationkey GROUP BY c_custkey, c_name, c_acctbal, c_phone, n_name, c_address, c_comment ORDER BY revenue DESC LIMIT 20;

Q11:

SELECT ps_partkey, Sum(ps_supplycost * ps_availqty) AS value FROM partsupp, supplier, nation WHERE ps_suppkey = s_suppkey AND s_nationkey = n_nationkey AND n_name = 'PERU' GROUP BY ps_partkey HAVING Sum(ps_supplycost * ps_availqty) > ( SELECT Sum(ps_supplycost * ps_availqty) * 0.0001000000 as sum_value FROM partsupp, supplier, nation WHERE ps_suppkey = s_suppkey AND s_nationkey = n_nationkey AND n_name = 'PERU' ) ORDER BY value DESC LIMIT 1;

Q12:

SELECT l_shipmode, sum(case when o_orderpriority = '1-URGENT' or o_orderpriority = '2-HIGH' then 1 else 0 end) AS high_line_count, sum(case when o_orderpriority <> '1-URGENT' and o_orderpriority <> '2-HIGH' then 1 else 0 end) AS low_line_count FROM orders, lineitem WHERE o_orderkey = l_orderkey AND l_shipmode in ('MAIL', 'TRUCK') AND l_commitdate < l_receiptdate AND l_shipdate < l_commitdate AND l_receiptdate >= date '1996-01-01' AND l_receiptdate < date '1996-01-01' + interval '1' year GROUP BY l_shipmode ORDER BY l_shipmode LIMIT 1;

Q13:

SELECT c_count, count(*) AS custdist FROM ( SELECT c_custkey, count(o_orderkey) AS c_count FROM customer, orders WHERE c_custkey = o_custkey AND o_comment NOT LIKE '%pending%accounts%' GROUP BY c_custkey ) AS c_orders GROUP BY c_count ORDER BY custdist DESC, c_count DESC LIMIT 1;

Q14:

SELECT 100.00 * sum(case when p_type like 'PROMO%' then l_extendedprice * (1 - l_discount) else 0 end) / sum(l_extendedprice * (1 - l_discount)) AS promo_revenue FROM lineitem, part WHERE l_partkey = p_partkey AND l_shipdate >= date '1996-01-01' AND l_shipdate < date '1996-01-01' + interval '1' month LIMIT 1;

Q15:

WITH revenue0 AS ( SELECT l_suppkey AS supplier_no, sum(l_extendedprice * (1 - l_discount)) AS total_revenue FROM lineitem WHERE l_shipdate >= date '1993-01-01' AND l_shipdate < date '1993-01-01' + interval '3' month GROUP BY l_suppkey ) SELECT s_suppkey, s_name, s_address, s_phone, total_revenue FROM supplier, revenue0 WHERE s_suppkey = supplier_no AND total_revenue IN ( SELECT max(total_revenue) FROM revenue0 ) ORDER BY s_suppkey;

Q16:

SELECT p_brand, p_type, p_size, count(distinct ps_suppkey) AS supplier_cnt FROM partsupp, part WHERE p_partkey = ps_partkey AND p_brand <> 'Brand#23' AND p_type NOT LIKE 'PROMO BURNISHED%' AND p_size IN (1, 13, 10, 28, 21, 35, 31, 11) AND ps_suppkey NOT IN ( SELECT s_suppkey FROM supplier WHERE s_comment LIKE '%Customer%Complaints%' ) GROUP BY p_brand, p_type, p_size ORDER BY supplier_cnt DESC, p_brand, p_type, p_size LIMIT 1;

Q17:

SELECT sum(l_extendedprice) / 7.0 AS avg_yearly FROM lineitem, part WHERE p_partkey = l_partkey AND p_brand = 'Brand#44' AND p_container = 'WRAP PKG' AND l_quantity < ( SELECT 0.2 * avg(l_quantity) FROM lineitem, part WHERE l_partkey = p_partkey );

Q18:

SELECT c_name, c_custkey, o_orderkey, o_orderdate, o_totalprice, sum(l_quantity) FROM customer, orders, lineitem WHERE o_orderkey IN ( SELECT l_orderkey FROM lineitem GROUP BY l_orderkey HAVING sum(l_quantity) > 315 ) AND c_custkey = o_custkey AND o_orderkey = l_orderkey GROUP BY c_name, c_custkey, o_orderkey, o_orderdate, o_totalprice ORDER BY o_totalprice DESC, o_orderdate LIMIT 100;

Q19:

SELECT sum(l_extendedprice* (1 - l_discount)) AS revenue FROM lineitem, part WHERE ( p_partkey = l_partkey and p_brand = 'Brand#12' and p_container in ('SM CASE', 'SM BOX', 'SM PACK', 'SM PKG') and l_quantity >= 6 and l_quantity <= 6 + 10 and p_size between 1 and 5 and l_shipmode in ('AIR', 'AIR REG') and l_shipinstruct = 'DELIVER IN PERSON' ) or ( p_partkey = l_partkey and p_brand = 'Brand#13' and p_container in ('MED BAG', 'MED BOX', 'MED PKG', 'MED PACK') and l_quantity >= 10 and l_quantity <= 10 + 10 and p_size between 1 and 10 and l_shipmode in ('AIR', 'AIR REG') and l_shipinstruct = 'DELIVER IN PERSON' ) or ( p_partkey = l_partkey and p_brand = 'Brand#24' and p_container in ('LG CASE', 'LG BOX', 'LG PACK', 'LG PKG') and l_quantity >= 21 and l_quantity <= 21 + 10 and p_size between 1 and 15 and l_shipmode in ('AIR', 'AIR REG') and l_shipinstruct = 'DELIVER IN PERSON' ) LIMIT 1;

Q20:

with temp_table as ( select 0.5 * sum(l_quantity) as col1 from lineitem, partsupp where l_partkey = ps_partkey and l_suppkey = ps_suppkey and l_shipdate >= date '1993-01-01' and l_shipdate < date '1993-01-01' + interval '1' year ) select s_name, s_address from supplier, nation where s_suppkey in ( select ps_suppkey from partsupp, temp_table where ps_partkey in ( select p_partkey from part where p_name like 'dark%' ) and ps_availqty > temp_table.col1 ) and s_nationkey = n_nationkey and n_name = 'JORDAN' order by s_name limit 1;

Q21:

select s_name, count(*) as numwait from supplier, lineitem l1, orders, nation where s_suppkey = l1.l_suppkey and o_orderkey = l1.l_orderkey and o_orderstatus = 'F' and l1.l_receiptdate > l1.l_commitdate and exists ( select * from lineitem l2 where l2.l_orderkey = l1.l_orderkey and l2.l_suppkey <> l1.l_suppkey ) and not exists ( select * from lineitem l3 where l3.l_orderkey = l1.l_orderkey and l3.l_suppkey <> l1.l_suppkey and l3.l_receiptdate > l3.l_commitdate ) and s_nationkey = n_nationkey and n_name = 'SAUDI ARABIA' group by s_name order by numwait desc, s_name limit 100;

Q22:

with temp_table_1 as ( select avg(c_acctbal) as avg_value from customer where c_acctbal > 0.00 and substring(c_phone from 1 for 2) in ('33', '29', '37', '35', '25', '27', '43') ), temp_table_2 as ( select count(*) as count1 from orders, customer where o_custkey = c_custkey ) select cntrycode, count(*) as numcust, sum(c_acctbal) as totacctbal from ( select substring(c_phone from 1 for 2) as cntrycode, c_acctbal from customer, temp_table_1, temp_table_2 where substring(c_phone from 1 for 2) in ('33', '29', '37', '35', '25', '27', '43') and c_acctbal > temp_table_1.avg_value and temp_table_2.count1 = 0) as custsale group by cntrycode order by cntrycode limit 1;

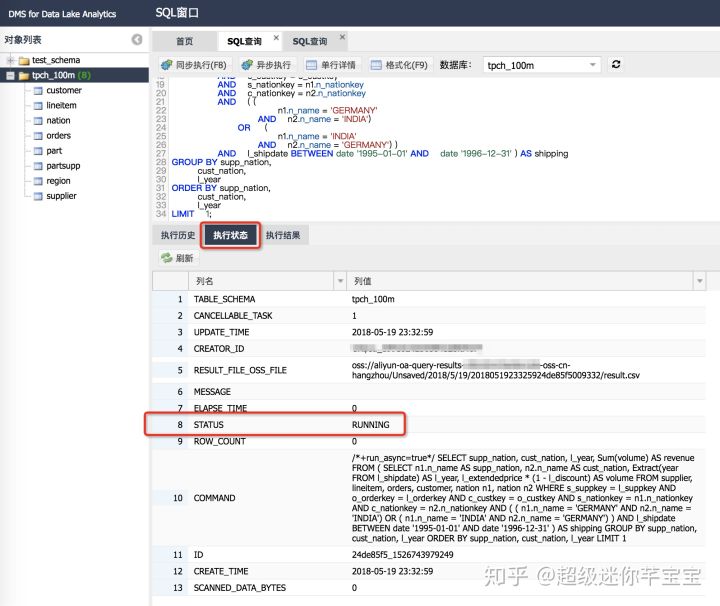

7. Asynchronous execution of queries

Data Lake Analytics supports both synchronous and asynchronous execution modes. In "synchronous execution" mode, the console interface waits for the execution result to return; in "asynchronous execution" mode, the ID of the query task is returned immediately.

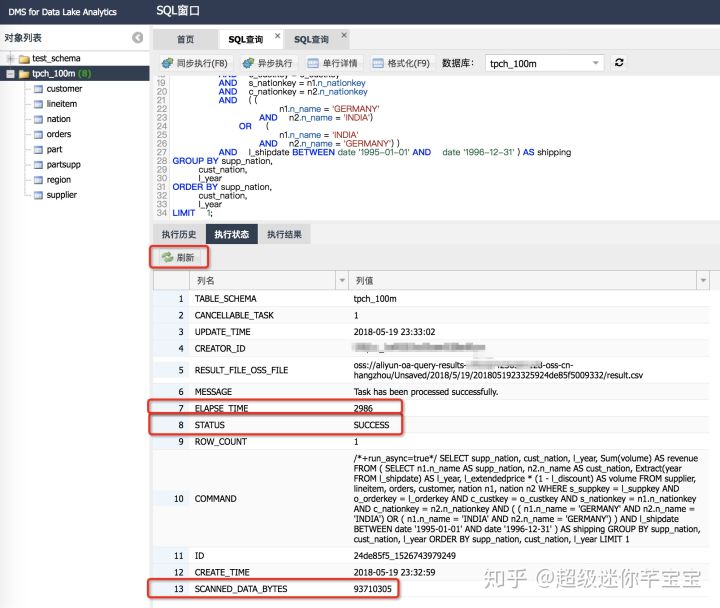

Click on "Execution Status" to see the execution status of the asynchronous query task, mainly divided into: "RUNNING", "SUCCESS", "FAILURE".

Click "Refresh" when STATUS changes to "SUCCESS" to indicate that the query is successful, while viewing the query time-consuming "ELAPSE_TIME" and the number of data bytes scanned by the query "SCANNED_DATA_BYTES".

8. View query history

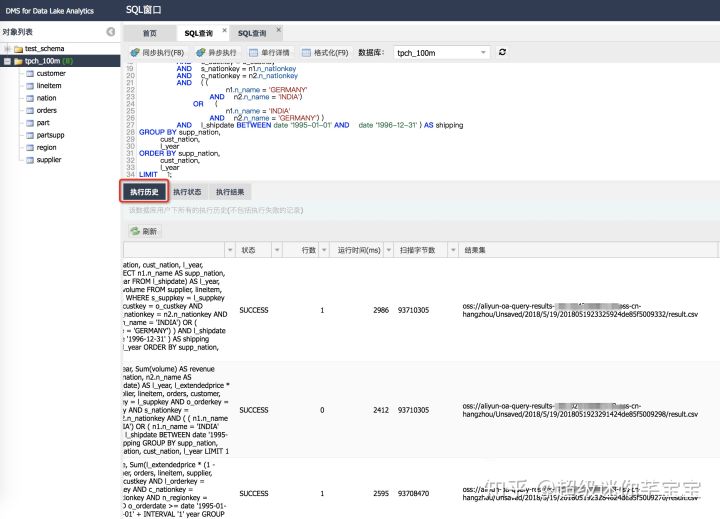

Click "Execution History" to see the historical details of the queries you executed, including:

1) Query statements;

2) Query time-consuming and execution time;

3) The number of rows returned from the query results;

4) Query status;

5) The number of bytes scanned by query;

6) The result set is written back to the target OSS file (Data Lake Analytics saves the query result set in the user's bucket).

The query result file is automatically uploaded to the OSS bucket of the user and region, including the result data file and the metadata description file of the result set.

{QueryLocation}/{query_name}|Unsaved}/{yyyy}/{mm}/{dd}/{query_id}/xxx.csv {QueryLocation}/{query_name}|Unsaved}/{yyyy}/{mm}/{dd}/{query_id}/xxx.csv.metadata

Query Location is:

aliyun-oa-query-results-<your_account_id>-<oss_region>

9. follow up

So far, this tutorial teaches you step by step how to use Data Lake Analytics cloud products to analyze CSV-formatted data files on your OSS. In addition to CSV files, Data Lake Analytics also supports data analysis capabilities of Parquet, ORC, json, RCFile, AVRO and other format files. Parquet and ORC, in particular, have great performance and cost advantages over CSV files (data sets with the same content have smaller storage space and faster query performance, which also means lower analysis costs).

There will be more tutorials and articles to teach you how to easily use Data Lake Analytics for data analysis and Exploration on the data lake, opening up your low-cost, off-the-shelf data analysis and exploration journey on the cloud.

This article is the original content of Yunqi Community, which can not be reproduced without permission.