1, Background

Stream is a data type introduced in Redis 5.0. This article uses Spring Data Redis (via Spring Boot) to consume data from a Redis Stream, covering both independent consumption and consumer group consumption.

2, Integration steps

1. Add the dependencies

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-pool2</artifactId>

<version>2.11.1</version>

</dependency>

</dependencies>

These are the main dependencies. Other, unrelated dependencies are omitted here.

2. Configure RedisTemplate dependencies

@Configuration

public class RedisConfig {

@Bean

public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory connectionFactory) {

RedisTemplate<String, Object> redisTemplate = new RedisTemplate<>();

redisTemplate.setConnectionFactory(connectionFactory);

redisTemplate.setKeySerializer(new StringRedisSerializer());

redisTemplate.setValueSerializer(new StringRedisSerializer());

redisTemplate.setHashKeySerializer(new StringRedisSerializer());

// Do not use JSON serialization here. When objects are transferred as ObjectRecord, it can cause java.lang.IllegalArgumentException: Value must not be null!

redisTemplate.setHashValueSerializer(RedisSerializer.string());

return redisTemplate;

}

}

Note:

Pay attention to the serializer passed to setHashValueSerializer. The details are discussed in the Precautions section.

3. Prepare an entity object

This entity object is the object that is sent to the Stream.

@Getter

@Setter

@ToString

public class Book {

private String title;

private String author;

public static Book create() {

com.github.javafaker.Book fakerBook = Faker.instance().book();

Book book = new Book();

book.setTitle(fakerBook.title());

book.setAuthor(fakerBook.author());

return book;

}

}

Each call to the create method returns a new Book object whose title and author are generated with Java Faker.

4. Write a constant class to configure the name of Stream

/**

* constant

*

*/

public class Cosntants {

public static final String STREAM_KEY_001 = "stream-001";

}

5. Write a producer to send data to the Stream

1. Write a producer that sends ObjectRecord data to the Stream

/**

* Message producer

*/

@Component

@RequiredArgsConstructor

@Slf4j

public class StreamProducer {

private final RedisTemplate<String, Object> redisTemplate;

public void sendRecord(String streamKey) {

Book book = Book.create();

log.info("Generate information for a Book:[{}]", book);

ObjectRecord<String, Book> record = StreamRecords.newRecord()

.in(streamKey)

.ofObject(book)

.withId(RecordId.autoGenerate());

RecordId recordId = redisTemplate.opsForStream()

.add(record);

log.info("Returned record-id:[{}]", recordId);

}

}

2. Send a record to the Stream every 5s

/**

* Periodically send messages to the Stream

*/

@Component

@AllArgsConstructor

public class CycleGeneratorStreamMessageRunner implements ApplicationRunner {

private final StreamProducer streamProducer;

@Override

public void run(ApplicationArguments args) {

Executors.newSingleThreadScheduledExecutor()

.scheduleAtFixedRate(() -> streamProducer.sendRecord(STREAM_KEY_001),

0, 5, TimeUnit.SECONDS);

}

}

3, Independent consumption

Independent consumption means consuming messages directly from the Stream, without a consumer group. It uses the xread command to read the Stream. Messages are not deleted after they are read; they remain in the Stream. If several programs read with xread at the same time, each of them can read all the messages.

1. Consume from the beginning - xread implementation

This implementation starts consuming from the first message in the Stream.

package com.huan.study.redis.stream.consumer.xread;

import com.huan.study.redis.constan.Cosntants;

import com.huan.study.redis.entity.Book;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.DisposableBean;

import org.springframework.beans.factory.InitializingBean;

import org.springframework.data.redis.connection.stream.ObjectRecord;

import org.springframework.data.redis.connection.stream.ReadOffset;

import org.springframework.data.redis.connection.stream.StreamOffset;

import org.springframework.data.redis.connection.stream.StreamReadOptions;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.stereotype.Component;

import org.springframework.util.CollectionUtils;

import javax.annotation.Resource;

import java.time.Duration;

import java.util.List;

import java.util.concurrent.LinkedBlockingDeque;

import java.util.concurrent.ThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

/**

* Without a consumer group: consume the data in the Stream directly; every message in the Stream can be read

*/

@Component

@Slf4j

public class XreadNonBlockConsumer01 implements InitializingBean, DisposableBean {

private ThreadPoolExecutor threadPoolExecutor;

@Resource

private RedisTemplate<String, Object> redisTemplate;

private volatile boolean stop = false;

@Override

public void afterPropertiesSet() {

// Initialize thread pool

threadPoolExecutor = new ThreadPoolExecutor(1, 1, 0, TimeUnit.SECONDS,

new LinkedBlockingDeque<>(), r -> {

Thread thread = new Thread(r);

thread.setDaemon(true);

thread.setName("xread-nonblock-01");

return thread;

});

StreamReadOptions streamReadOptions = StreamReadOptions.empty()

// If there is no data, block for 1s. The blocking time must be shorter than the timeout configured by 'spring.redis.timeout'

.block(Duration.ofMillis(1000))

// Block until data arrives; a timeout exception may be thrown

// .block(Duration.ofMillis(0))

// Read up to 10 records at a time

.count(10);

StringBuilder readOffset = new StringBuilder("0-0");

threadPoolExecutor.execute(() -> {

while (!stop) {

// With xread, record the ID of the last record read and use it as the offset of the next read; otherwise records will be missed

List<ObjectRecord<String, Book>> objectRecords = redisTemplate.opsForStream()

.read(Book.class, streamReadOptions, StreamOffset.create(Cosntants.STREAM_KEY_001, ReadOffset.from(readOffset.toString())));

if (CollectionUtils.isEmpty(objectRecords)) {

log.warn("No data was obtained");

continue;

}

for (ObjectRecord<String, Book> objectRecord : objectRecords) {

log.info("Acquired data information id:[{}] book:[{}]", objectRecord.getId(), objectRecord.getValue());

readOffset.setLength(0);

readOffset.append(objectRecord.getId());

}

}

});

}

@Override

public void destroy() throws Exception {

stop = true;

threadPoolExecutor.shutdown();

threadPoolExecutor.awaitTermination(3, TimeUnit.SECONDS);

}

}

Note:

On each subsequent read, the offset must be the ID of the last record returned by the previous read; otherwise messages may be missed.
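The offset rule can be tried out without a Redis server. The following is a minimal sketch, not the article's consumer: it models the Stream as a sorted in-memory map, and the class and method names (OffsetTrackingDemo, readAfter, drain) are made up for illustration. It also shows that IDs of the form millis-seq have to be compared numerically, so that 10-0 sorts after 9-5.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.TreeMap;

/** Redis-free model of an xread polling loop. All names are illustrative. */
class OffsetTrackingDemo {

    /** Orders IDs of the form "<millis>-<seq>" numerically, not as plain strings. */
    static final Comparator<String> ID_ORDER = (a, b) -> {
        long[] x = parse(a);
        long[] y = parse(b);
        return x[0] != y[0] ? Long.compare(x[0], y[0]) : Long.compare(x[1], y[1]);
    };

    static long[] parse(String id) {
        String[] parts = id.split("-");
        return new long[] {Long.parseLong(parts[0]), Long.parseLong(parts[1])};
    }

    /** Returns up to count IDs strictly greater than offset, like one xread call. */
    static List<String> readAfter(TreeMap<String, String> stream, String offset, int count) {
        List<String> ids = new ArrayList<>();
        for (String id : stream.tailMap(offset, false).keySet()) {
            if (ids.size() == count) {
                break;
            }
            ids.add(id);
        }
        return ids;
    }

    /** Polls until nothing is left, carrying the last seen ID into the next read. */
    static List<String> drain(TreeMap<String, String> stream, int count) {
        List<String> values = new ArrayList<>();
        String offset = "0-0";
        List<String> batch;
        while (!(batch = readAfter(stream, offset, count)).isEmpty()) {
            for (String id : batch) {
                values.add(stream.get(id));
                offset = id; // the last ID of this batch becomes the next offset
            }
        }
        return values;
    }

    public static void main(String[] args) {
        TreeMap<String, String> stream = new TreeMap<>(ID_ORDER);
        for (String id : Arrays.asList("1-0", "1-1", "9-5", "10-0", "10-1")) {
            stream.put(id, "book@" + id);
        }
        System.out.println(drain(stream, 2));
    }
}
```

Because every read starts strictly after the last ID seen, each entry is returned exactly once, even when the batch size is smaller than the number of entries.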

2. Independent consumption with StreamMessageListenerContainer

See the consumer group code below.

4, Consumer group consumption

1. Implement the StreamListener interface

Implementing this interface lets us consume the data in the Stream. Note which method is used to register the listener: streamMessageListenerContainer.receiveAutoAck() or streamMessageListenerContainer.receive(). With the second method, messages must be acknowledged manually. The code for a manual ack is redisTemplate.opsForStream().acknowledge("key","group","recordId");

/**

* Asynchronous consumption via listener

*

* @author huan.fu 2021/11/10 - 5:51 PM

*/

@Slf4j

@Getter

@Setter

public class AsyncConsumeStreamListener implements StreamListener<String, ObjectRecord<String, Book>> {

/**

* Consumer type: independent consumption, consumption group consumption

*/

private String consumerType;

/**

* Consumer group

*/

private String group;

/**

* A consumer in the consumer group

*/

private String consumerName;

public AsyncConsumeStreamListener(String consumerType, String group, String consumerName) {

this.consumerType = consumerType;

this.group = group;

this.consumerName = consumerName;

}

private RedisTemplate<String, Object> redisTemplate;

@Override

public void onMessage(ObjectRecord<String, Book> message) {

String stream = message.getStream();

RecordId id = message.getId();

Book value = message.getValue();

if (StringUtils.isBlank(group)) {

log.info("[{}]: A message was received stream:[{}],id:[{}],value:[{}]", consumerType, stream, id, value);

} else {

log.info("[{}] group:[{}] consumerName:[{}] A message was received stream:[{}],id:[{}],value:[{}]", consumerType,

group, consumerName, stream, id, value);

}

// When consuming through a consumer group without automatic ack, acknowledge the message manually here

// redisTemplate.opsForStream()

// .acknowledge("key","group","recordId");

}

}

2. Handling errors raised while fetching or consuming messages

/**

* Handles exceptions raised when StreamPollTask fetches messages or when the listener consumes them

*

* @author huan.fu 2021/11/11 - 3:44 PM

*/

@Slf4j

public class CustomErrorHandler implements ErrorHandler {

@Override

public void handleError(Throwable t) {

log.error("An exception occurred", t);

}

}

3. Consumer group configuration

/**

* redis stream Consumer group configuration

*

* @author huan.fu 2021/11/11 - 12:22 PM

*/

@Configuration

public class RedisStreamConfiguration {

@Resource

private RedisConnectionFactory redisConnectionFactory;

/**

* It can support independent consumption and consumer group consumption at the same time

* <p>

* It can support dynamic addition and deletion of consumers

* <p>

* The consumption group needs to be created in advance

*

* @return StreamMessageListenerContainer

*/

@Bean(initMethod = "start", destroyMethod = "stop")

public StreamMessageListenerContainer<String, ObjectRecord<String, Book>> streamMessageListenerContainer() {

AtomicInteger index = new AtomicInteger(1);

int processors = Runtime.getRuntime().availableProcessors();

ThreadPoolExecutor executor = new ThreadPoolExecutor(processors, processors, 0, TimeUnit.SECONDS,

new LinkedBlockingDeque<>(), r -> {

Thread thread = new Thread(r);

thread.setName("async-stream-consumer-" + index.getAndIncrement());

thread.setDaemon(true);

return thread;

});

StreamMessageListenerContainer.StreamMessageListenerContainerOptions<String, ObjectRecord<String, Book>> options =

StreamMessageListenerContainer.StreamMessageListenerContainerOptions

.builder()

// Maximum number of messages fetched per poll

.batchSize(10)

// Executor that runs the Stream poll tasks

.executor(executor)

// Serializer for the Stream key

.keySerializer(RedisSerializer.string())

// Serializer for the field names (hash keys) of each Stream entry

.hashKeySerializer(RedisSerializer.string())

// Serializer for the field values (hash values) of each Stream entry

.hashValueSerializer(RedisSerializer.string())

// How long to block when the Stream has no messages; must be shorter than 'spring.redis.timeout'

.pollTimeout(Duration.ofSeconds(1))

// For ObjectRecord: converts the object's fields and values into a map, e.g. a Book object into a map

.objectMapper(new ObjectHashMapper())

// Handles exceptions raised while fetching messages or while the listener processes them

.errorHandler(new CustomErrorHandler())

// Converts each Record read from the Stream into an ObjectRecord of the type specified here

.targetType(Book.class)

.build();

StreamMessageListenerContainer<String, ObjectRecord<String, Book>> streamMessageListenerContainer =

StreamMessageListenerContainer.create(redisConnectionFactory, options);

// Independent consumption

String streamKey = Cosntants.STREAM_KEY_001;

streamMessageListenerContainer.receive(StreamOffset.fromStart(streamKey),

new AsyncConsumeStreamListener("Independent consumption", null, null));

// Consumer group A, not automatically ack

// Start consumption from messages not assigned to consumers in the consumption group

streamMessageListenerContainer.receive(Consumer.from("group-a", "consumer-a"),

StreamOffset.create(streamKey, ReadOffset.lastConsumed()), new AsyncConsumeStreamListener("Consumption group consumption", "group-a", "consumer-a"));

// Start consumption from messages not assigned to consumers in the consumption group

streamMessageListenerContainer.receive(Consumer.from("group-a", "consumer-b"),

StreamOffset.create(streamKey, ReadOffset.lastConsumed()), new AsyncConsumeStreamListener("Consumption group consumption A", "group-a", "consumer-b"));

// Consumer group B, auto ack

streamMessageListenerContainer.receiveAutoAck(Consumer.from("group-b", "consumer-a"),

StreamOffset.create(streamKey, ReadOffset.lastConsumed()), new AsyncConsumeStreamListener("Consumption group consumption B", "group-b", "consumer-a"));

// To customize an individual consumer, pass a 'StreamReadRequest' object when calling the register method

return streamMessageListenerContainer;

}

}

Note:

Create the consumer groups in advance

127.0.0.1:6379> xgroup create stream-001 group-a $

OK

127.0.0.1:6379> xgroup create stream-001 group-b $

OK

1. Independent consumption configuration

streamMessageListenerContainer.receive(StreamOffset.fromStart(streamKey), new AsyncConsumeStreamListener("Independent consumption", null, null));

Do not pass a Consumer.

2. Configure consumer group - do not automatically ack messages

streamMessageListenerContainer.receive(Consumer.from("group-a", "consumer-b"),

StreamOffset.create(streamKey, ReadOffset.lastConsumed()), new AsyncConsumeStreamListener("Consumption group consumption A", "group-a", "consumer-b"));

1. Note the value of ReadOffset.

2. Note that the group needs to be created in advance.
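What a missing ack means for a group can be illustrated without Redis. The sketch below is a simplified model under stated assumptions, not the Spring Data Redis implementation: a group keeps a delivery cursor (what > resolves to) and a list of pending entries, a delivered entry stays pending until it is acknowledged, and with automatic ack it never stays pending. The class name ConsumerGroupModel and its methods are hypothetical.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Redis-free model of one consumer group. All names are illustrative. */
class ConsumerGroupModel {

    private final List<String> stream;   // entry IDs in insertion order
    private int nextUndelivered = 0;     // what ">" resolves to for this group
    private final Map<String, String> pending = new LinkedHashMap<>(); // entry ID -> consumer

    ConsumerGroupModel(List<String> stream) {
        this.stream = stream;
    }

    /** Hands the next never-delivered entry to exactly one consumer, or null if none is left. */
    String read(String consumer, boolean autoAck) {
        if (nextUndelivered >= stream.size()) {
            return null;
        }
        String id = stream.get(nextUndelivered++);
        if (!autoAck) {
            pending.put(id, consumer); // stays here until ack(id) is called
        }
        return id;
    }

    /** Models a manual ack; returns true if the entry was pending. */
    boolean ack(String id) {
        return pending.remove(id) != null;
    }

    Map<String, String> pending() {
        return pending;
    }

    public static void main(String[] args) {
        ConsumerGroupModel group = new ConsumerGroupModel(Arrays.asList("1-0", "2-0", "3-0"));
        group.read("consumer-a", false);
        group.read("consumer-b", false);
        group.ack("1-0");
        System.out.println("still pending: " + group.pending());
    }
}
```

In this model, two consumers of the same group never receive the same entry, and anything read without an ack remains visible in the pending list, which is why the manual ack in the listener matters when receive() is used.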

3. Configuring consumer groups - Automatic ack messages

streamMessageListenerContainer.receiveAutoAck()

5, Serialization strategy

| Stream Property | Serializer | Description |
|---|---|---|
| key | keySerializer | used for Record#getStream() |
| field | hashKeySerializer | used for each map key in the payload |
| value | hashValueSerializer | used for each map value in the payload |

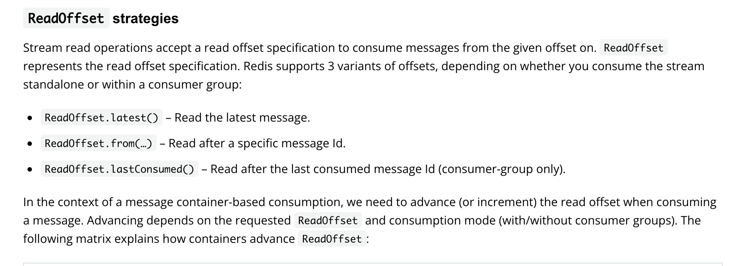

6, ReadOffset policy

Read Offset policy when consuming messages

| Read offset | Standalone | Consumer Group |
|---|---|---|
| Latest | Read latest message | Read latest message |
| Specific Message Id | Use last seen message as the next message id | Use last seen message as the next message id |
| Last Consumed | Use last seen message as the next message id | Last consumed message as per consumer group |
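The table can be related to the raw Redis commands. The sketch below only builds command strings and never talks to Redis. It assumes that ReadOffset.latest() corresponds to the special ID $ and ReadOffset.lastConsumed() to the special ID >, which is how Spring Data Redis appears to define them. The class and method names are made up for illustration.

```java
/** Builds the raw commands behind the read offsets. All names are illustrative. */
class ReadOffsetCommands {

    /** Standalone read: XREAD [COUNT n] [BLOCK ms] STREAMS key id */
    static String xread(String key, String offset, int count, long blockMillis) {
        return "XREAD COUNT " + count + " BLOCK " + blockMillis + " STREAMS " + key + " " + offset;
    }

    /** Group read: XREADGROUP GROUP group consumer [COUNT n] [BLOCK ms] STREAMS key id */
    static String xreadGroup(String group, String consumer, String key, String offset,
                             int count, long blockMillis) {
        return "XREADGROUP GROUP " + group + " " + consumer
                + " COUNT " + count + " BLOCK " + blockMillis + " STREAMS " + key + " " + offset;
    }

    public static void main(String[] args) {
        // Latest: only entries added after the call is issued
        System.out.println(xread("stream-001", "$", 10, 1000));
        // Specific message ID: entries after the last one seen
        System.out.println(xread("stream-001", "0-0", 10, 1000));
        // Last consumed: entries never delivered to any consumer of the group
        System.out.println(xreadGroup("group-a", "consumer-a", "stream-001", ">", 10, 1000));
    }
}
```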

7, Precautions

1. Timeout for reading messages

When we use StreamReadOptions.empty().block(Duration.ofMillis(1000)) to configure the blocking time, the blocking time must be shorter than the timeout configured by spring.redis.timeout; otherwise a timeout exception may be thrown.
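One way to avoid tripping over this rule is to validate the blocking time at startup. The following is a minimal sketch; BlockTimeoutCheck and requireShorterThan are hypothetical names, and the 2s command timeout is an assumed value standing in for whatever spring.redis.timeout is set to.

```java
import java.time.Duration;

/** Guard for the blocking-time rule. The class and method names are illustrative. */
class BlockTimeoutCheck {

    /**
     * Returns blockTime if it is positive and strictly shorter than commandTimeout.
     * A zero block time means "block until data arrives", so it is rejected as well.
     */
    static Duration requireShorterThan(Duration blockTime, Duration commandTimeout) {
        if (blockTime.isZero() || blockTime.isNegative()) {
            throw new IllegalArgumentException("block time must be positive: " + blockTime);
        }
        if (blockTime.compareTo(commandTimeout) >= 0) {
            throw new IllegalArgumentException(
                    "block time " + blockTime + " must be shorter than command timeout " + commandTimeout);
        }
        return blockTime;
    }

    public static void main(String[] args) {
        Duration commandTimeout = Duration.ofSeconds(2); // assumed value of spring.redis.timeout
        System.out.println(requireShorterThan(Duration.ofMillis(1000), commandTimeout));
    }
}
```

The returned Duration could then be passed to block(...) or pollTimeout(...), so a misconfiguration fails fast instead of surfacing later as a timeout exception.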

2. ObjectRecord deserialization error

If the following exception occurs when reading messages, troubleshoot as follows:

java.lang.IllegalArgumentException: Value must not be null!

at org.springframework.util.Assert.notNull(Assert.java:201)

at org.springframework.data.redis.connection.stream.Record.of(Record.java:81)

at org.springframework.data.redis.connection.stream.MapRecord.toObjectRecord(MapRecord.java:147)

at org.springframework.data.redis.core.StreamObjectMapper.toObjectRecord(StreamObjectMapper.java:138)

at org.springframework.data.redis.core.StreamObjectMapper.toObjectRecords(StreamObjectMapper.java:164)

at org.springframework.data.redis.core.StreamOperations.map(StreamOperations.java:594)

at org.springframework.data.redis.core.StreamOperations.read(StreamOperations.java:413)

at com.huan.study.redis.stream.consumer.xread.XreadNonBlockConsumer02.lambda$afterPropertiesSet$1(XreadNonBlockConsumer02.java:61)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

1. Check the HashValueSerializer of the RedisTemplate. It is better not to use JSON; RedisSerializer.string() works.

2. Check the HashMapper configured in redisTemplate.opsForStream(). The default is ObjectHashMapper, which serializes object fields and values into byte[] format.

A working configuration:

// Use the string serializer for the hash values of the RedisTemplate

redisTemplate.setHashValueSerializer(RedisSerializer.string());

// Use the default ObjectHashMapper in opsForStream()

redisTemplate.opsForStream()

3. Reading data in order with xread

If we read with xread and find that some written data is missing, we need to check whether the StreamOffset passed on the second and later reads is valid. It must be the ID of the last record returned by the previous read.

For example:

1. The StreamOffset is given $ to read the latest data.

2. The consumer processes the data read in the previous step. Meanwhile, another producer inserts several records into the Stream, and the consumer has not yet finished processing.

3. The consumer reads the Stream again and still passes $, which means it again asks only for the latest data. The records that flowed into the Stream during the previous step can never be read by this consumer, because it only reads data newer than the current end of the Stream.
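The three steps can be replayed with a plain list standing in for the Stream. This is a simplified model under stated assumptions (positions in a list instead of real entry IDs, no blocking), and the names DollarOffsetDemo, readFrom and consume are invented for the example.

```java
import java.util.ArrayList;
import java.util.List;

/** Redis-free replay of the three steps. All names are illustrative. */
class DollarOffsetDemo {

    /** Returns every entry appended at or after position from. */
    static List<String> readFrom(List<String> stream, int from) {
        return new ArrayList<>(stream.subList(from, stream.size()));
    }

    /** Replays the scenario and returns what the consumer received. */
    static List<String> consume(boolean passDollarAgain) {
        List<String> stream = new ArrayList<>();
        List<String> received = new ArrayList<>();

        // Step 1: the first read passes "$", i.e. "entries appended from now on".
        int offset = stream.size();
        stream.add("m1"); // arrives while the first read is waiting
        List<String> batch = readFrom(stream, offset);
        received.addAll(batch);
        offset += batch.size(); // position right after the last entry seen

        // Step 2: while m1 is being processed, a producer appends two entries.
        stream.add("m2");
        stream.add("m3");

        // Step 3: "$" is resolved again to the current end of the stream,
        // whereas the remembered offset still points right after m1.
        if (passDollarAgain) {
            offset = stream.size();
        }
        received.addAll(readFrom(stream, offset));
        return received;
    }

    public static void main(String[] args) {
        System.out.println("always $     : " + consume(true));
        System.out.println("last seen ID : " + consume(false));
    }
}
```

With $ on every read the consumer ends up with only m1, while the remembered offset yields m1, m2 and m3.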

4. Use of StreamMessageListenerContainer

1. Consumers can be dynamically added and deleted

2. Consumption group consumption is allowed

3. It can be consumed directly and independently

4. When transferring ObjectRecord, you need to pay attention to the serialization method. Refer to the code above.

8, Complete code

https://gitee.com/huan1993/spring-cloud-parent/tree/master/redis/redis-stream

9, Reference documents

1. https://docs.spring.io/spring-data/redis/docs/2.5.5/reference/html/#redis.streams