Background

All of our company's services run on Alibaba Cloud k8s, and by default a dubbo provider reports its Pod IP to the registry, so dubbo services cannot be called from a network outside the k8s cluster. If local development needs to access a dubbo provider in k8s, the service has to be exposed to the external network manually. Our approach was to expose an SLB IP + custom port for each provider, and to use the DUBBO_IP_TO_REGISTRY and DUBBO_PORT_TO_REGISTRY environment variables provided by dubbo to register that SLB IP + custom port in the registry, which connects the local network to the dubbo services in k8s. However, this is very troublesome to manage: every service needs its own custom port, the ports must not conflict between services, and the more services there are, the harder it gets.

So I wondered whether I could implement a layer-7 proxy with virtual host names, like nginx ingress, so that a single port is reused and requests are forwarded according to the application.name of the target dubbo provider. Then every service only needs to register the same SLB IP + port, which is far more convenient. After some investigation it turned out to be feasible, so I got started!

The project is open source: https://github.com/monkeyWie/dubbo-ingress-controller

Technical pre-research

Approach

- First, dubbo RPC calls use the dubbo protocol by default, so I need to check whether the protocol carries any information that can be used for forwarding, that is, something like the Host request header in HTTP. If it does, requests can be reverse-proxied and routed by virtual host name based on that information, which gives us a dubbo gateway similar to nginx.

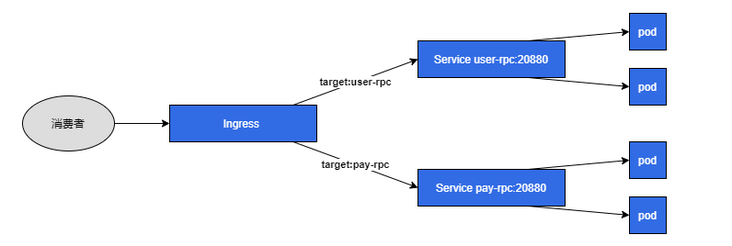

- The second step is to implement the dubbo ingress controller: it dynamically updates the gateway's virtual host forwarding configuration through the k8s ingress watch mechanism. All provider services are then reached through this one gateway service, and the address reported to the registry is unified as the address of that service.

Architecture diagram

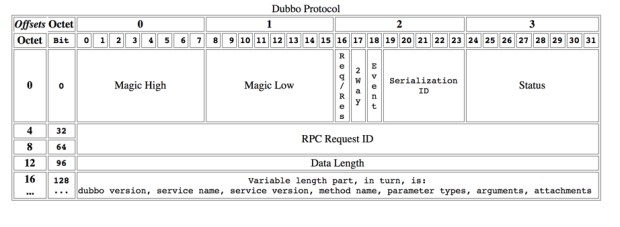

dubbo protocol

First, here is the official protocol diagram:

As you can see, the dubbo protocol header is fixed at 16 bytes. It has no extensible fields like HTTP headers, and it does not carry the application.name of the target provider, so I opened an issue with the official project. The official reply was that the consumer should put the application.name of the target provider into the attachments through a custom Filter. I have to complain about the dubbo protocol here: the extension fields are placed in the body, so to implement forwarding the whole request message has to be parsed before the needed field can be read. That is not a big problem, though, because this is mainly meant for the development environment, so this step is barely achievable.

k8s ingress

k8s ingress is designed for HTTP, but it has enough fields for our purpose. Let's take a look at the ingress configuration:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: user-rpc-dubbo
      annotations:
        kubernetes.io/ingress.class: "dubbo"
    spec:
      rules:
        - host: user-rpc
          http:
            paths:
              - backend:
                  serviceName: user-rpc
                  servicePort: 20880
                path: /

The configuration is the same as for HTTP: forwarding rules are matched by host. Here, however, the host is set to the application.name of the target provider, and the backend service is the k8s Service of that provider. One special point is the kubernetes.io/ingress.class annotation, which specifies which ingress controller the ingress applies to. Our dubbo ingress controller will only parse ingress resources whose annotation value is dubbo.

Development

The technical pre-research went well, so it was time to move on to development.

Custom consumer Filter

As mentioned earlier, for the request to carry the application.name of the target provider, the consumer needs a custom Filter. The code is as follows:

    import static org.apache.dubbo.common.constants.CommonConstants.CONSUMER;

    import org.apache.dubbo.common.extension.Activate;
    import org.apache.dubbo.common.utils.StringUtils; // assumed; commons-lang3 StringUtils also provides isBlank
    import org.apache.dubbo.rpc.*;

    @Activate(group = CONSUMER)
    public class AddTargetFilter implements Filter {

        @Override
        public Result invoke(Invoker<?> invoker, Invocation invocation) throws RpcException {
            // Fall back to the group when the remote application name is not set
            String targetApplication = StringUtils.isBlank(invoker.getUrl().getRemoteApplication()) ?
                    invoker.getUrl().getGroup() : invoker.getUrl().getRemoteApplication();
            // Put the application.name of the target provider into the attachment
            invocation.setAttachment("target-application", targetApplication);
            return invoker.invoke(invocation);
        }
    }

One more complaint here: on first access, the dubbo consumer sends a request to fetch metadata. For that request invoker.getUrl().getRemoteApplication() returns no value, but the application name can be obtained through invoker.getUrl().getGroup() instead.

dubbo gateway

Next we need to develop a dubbo gateway similar to nginx that implements the layer-7 proxy and virtual host forwarding. I chose Go as the programming language. First, Go carries a low mental burden for network development. Second, there is the dubbo-go project, whose decoder can be used directly. Finally, Go has native k8s SDK support. Perfect!

The idea is to start a TCP server, parse the dubbo request message, read the target-application attribute from the attachments, and then reverse-proxy the connection to the real dubbo provider service. The core code is as follows:

    routingTable := map[string]string{
        "user-rpc": "user-rpc:20880",
        "pay-rpc":  "pay-rpc:20880",
    }

    listener, err := net.Listen("tcp", ":20880")
    if err != nil {
        return err
    }
    for {
        clientConn, err := listener.Accept()
        if err != nil {
            logger.Errorf("accept error:%v", err)
            continue
        }
        go func() {
            defer clientConn.Close()
            var proxyConn net.Conn
            defer func() {
                if proxyConn != nil {
                    proxyConn.Close()
                }
            }()
            scanner := bufio.NewScanner(clientConn)
            scanner.Split(split)
            // Each successful Scan yields one complete dubbo request
            for scanner.Scan() {
                data := scanner.Bytes()
                // Deserialize the []byte into the dubbo request structure using the dubbo-go library
                buf := bytes.NewBuffer(data)
                pkg := impl.NewDubboPackage(buf)
                if err := pkg.Unmarshal(); err != nil {
                    logger.Errorf("unmarshal error:%v", err)
                    return
                }
                body := pkg.Body.(map[string]interface{})
                attachments := body["attachments"].(map[string]interface{})
                // Get the application.name of the target provider from the attachments
                target, ok := attachments["target-application"].(string)
                if !ok {
                    logger.Errorf("missing target-application attachment")
                    return
                }
                if proxyConn == nil {
                    // Reverse proxy to the real back-end service
                    host, ok := routingTable[target]
                    if !ok {
                        logger.Errorf("no route for target:%s", target)
                        return
                    }
                    conn, err := net.Dial("tcp", host)
                    if err != nil {
                        logger.Errorf("dial error:%v", err)
                        return
                    }
                    proxyConn = conn
                    go func() {
                        // Forward responses back to the client unchanged
                        io.Copy(clientConn, proxyConn)
                    }()
                }
                // Write the original request to the real back-end service unchanged
                if _, err := proxyConn.Write(data); err != nil {
                    logger.Errorf("write error:%v", err)
                    return
                }
            }
        }()
    }

    // split is a bufio.SplitFunc that returns one complete dubbo request per token
    func split(data []byte, atEOF bool) (advance int, token []byte, err error) {
        if atEOF && len(data) == 0 {
            return 0, nil, nil
        }
        buf := bytes.NewBuffer(data)
        pkg := impl.NewDubboPackage(buf)
        err = pkg.ReadHeader()
        if err != nil {
            // Not enough data yet: ask the scanner to read more
            if errors.Is(err, hessian.ErrHeaderNotEnough) || errors.Is(err, hessian.ErrBodyNotEnough) {
                return 0, nil, nil
            }
            return 0, nil, err
        }
        if !pkg.IsRequest() {
            return 0, nil, errors.New("not request")
        }
        requestLen := impl.HEADER_LENGTH + pkg.Header.BodyLen
        if len(data) < requestLen {
            return 0, nil, nil
        }
        return requestLen, data[0:requestLen], nil
    }

Implementing the dubbo ingress controller

A dubbo gateway has been implemented above, but its routing table is still hard-coded. What we need now is to update the configuration dynamically whenever a change to a k8s ingress is detected.
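One possible shape for a routing table that can be updated at runtime is sketched below. It assumes the gateway looks up a route once per new connection while a watcher goroutine applies ingress changes, so a read-write mutex protects the map. The names RoutingTable, Set, Delete and Lookup are hypothetical and are not taken from the project's code.

```go
package main

import (
	"fmt"
	"sync"
)

// RoutingTable maps a provider's application.name to its backend address.
// It is safe for concurrent use by the gateway (readers) and the
// ingress watcher (writer).
type RoutingTable struct {
	mu     sync.RWMutex
	routes map[string]string
}

// NewRoutingTable returns an empty table.
func NewRoutingTable() *RoutingTable {
	return &RoutingTable{routes: make(map[string]string)}
}

// Set adds or replaces the backend for host (ingress add or update event).
func (t *RoutingTable) Set(host, backend string) {
	t.mu.Lock()
	defer t.mu.Unlock()
	t.routes[host] = backend
}

// Delete removes the route for host (ingress delete event).
func (t *RoutingTable) Delete(host string) {
	t.mu.Lock()
	defer t.mu.Unlock()
	delete(t.routes, host)
}

// Lookup returns the backend for host and whether a route is registered.
func (t *RoutingTable) Lookup(host string) (string, bool) {
	t.mu.RLock()
	defer t.mu.RUnlock()
	backend, ok := t.routes[host]
	return backend, ok
}

func main() {
	table := NewRoutingTable()
	table.Set("user-rpc", "user-rpc.default:20880")
	if backend, ok := table.Lookup("user-rpc"); ok {
		fmt.Println("forward to", backend)
	}
	table.Delete("user-rpc")
	_, ok := table.Lookup("user-rpc")
	fmt.Println("still routed:", ok)
}
```

A read-write mutex keeps lookups cheap because routes change rarely compared with how often connections arrive. Connections that are already proxied are unaffected by an update, since the route is only consulted when a connection is first established.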

First, a brief explanation of how an ingress controller works, taking the commonly used nginx ingress controller as an example. It watches k8s ingress resources for changes and dynamically generates the nginx.conf file. When the configuration has changed, it triggers nginx -s reload to reload the configuration file.

The core technology used is informers, which listen for k8s resource changes. Example code:

    // Get the k8s access configuration from inside the cluster
    cfg, err := rest.InClusterConfig()
    if err != nil {
        logger.Fatal(err)
    }
    // Create the k8s SDK client instance
    client, err := kubernetes.NewForConfig(cfg)
    if err != nil {
        logger.Fatal(err)
    }
    // Create an informer factory
    factory := informers.NewSharedInformerFactory(client, time.Minute)
    handler := cache.ResourceEventHandlerFuncs{
        AddFunc: func(obj interface{}) {
            // Add event
        },
        UpdateFunc: func(oldObj, newObj interface{}) {
            // Update event
        },
        DeleteFunc: func(obj interface{}) {
            // Delete event
        },
    }
    // Listen for ingress changes
    informer := factory.Extensions().V1beta1().Ingresses().Informer()
    informer.AddEventHandler(handler)
    informer.Run(ctx.Done())

The forwarding configuration is updated dynamically by implementing the three event handlers above. Each event carries the corresponding Ingress object, which is then processed as follows:

    ingress, ok := obj.(*v1beta12.Ingress)
    if ok {
        // Filter out dubbo ingresses through the annotation
        ingressClass := ingress.Annotations["kubernetes.io/ingress.class"]
        if ingressClass == "dubbo" && len(ingress.Spec.Rules) > 0 {
            rule := ingress.Spec.Rules[0]
            // HTTP is a pointer and may be nil when the rule has no paths
            if rule.HTTP != nil && len(rule.HTTP.Paths) > 0 {
                backend := rule.HTTP.Paths[0].Backend
                host := rule.Host
                service := fmt.Sprintf("%s:%d", backend.ServiceName+"."+ingress.Namespace, backend.ServicePort.IntVal)
                // Take the service that corresponds to the host in the ingress configuration and notify the dubbo gateway to update its routing
                notify(host, service)
            }
        }
    }

Providing a docker image

All services on k8s run in containers, and this one is no exception: the dubbo ingress controller needs to be built into a docker image. A multi-stage build is used here to reduce the image size:

    FROM golang:1.17.3 AS builder
    WORKDIR /src
    COPY . .
    ENV GOPROXY https://goproxy.cn
    ENV CGO_ENABLED=0
    RUN go build -ldflags "-w -s" -o main cmd/main.go

    FROM debian AS runner
    ENV TZ=Asia/Shanghai
    WORKDIR /app
    COPY --from=builder /src/main .
    RUN chmod +x ./main
    ENTRYPOINT ["./main"]

Providing a yaml template

To access the k8s API from inside the cluster, the Pod must be authorized through k8s RBAC, and the controller is deployed as a Deployment. The final template is as follows:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: dubbo-ingress-controller
      namespace: default
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: dubbo-ingress-controller
    rules:
      - apiGroups:
          - extensions
        resources:
          - ingresses
        verbs:
          - get
          - list
          - watch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: dubbo-ingress-controller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: dubbo-ingress-controller
    subjects:
      - kind: ServiceAccount
        name: dubbo-ingress-controller
        namespace: default
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: default
      name: dubbo-ingress-controller
      labels:
        app: dubbo-ingress-controller
    spec:
      selector:
        matchLabels:
          app: dubbo-ingress-controller
      template:
        metadata:
          labels:
            app: dubbo-ingress-controller
        spec:
          serviceAccountName: dubbo-ingress-controller
          containers:
            - name: dubbo-ingress-controller
              image: liwei2633/dubbo-ingress-controller:0.0.1
              ports:
                - containerPort: 20880

If necessary, this can later be packaged as a Helm chart for easier management.

Postscript

That completes the implementation of the dubbo ingress controller. As the saying goes, the sparrow may be small, but it has all the vital organs: the project touches the dubbo protocol, TCP, layer-7 proxying, k8s ingress, docker and more. Much of this knowledge is worth mastering now that cloud native is becoming more and more popular, and I learned a lot from developing it.

For the complete tutorial, see the project on GitHub.


I'm MonkeyWie. I share practical knowledge about Java, Golang, front-end, docker and k8s on my official account.