Get started with Python programming quickly (continuously updated...)

Python crawlers: from beginner to mastery

Scrapy crawler framework

1. Classification and role of Scrapy middleware

1.1 Classification of Scrapy middleware

Depending on where it sits in Scrapy's processing flow, middleware is divided into:

1. Downloader middleware

2. Spider middleware

1.2 The role of Scrapy middleware: preprocessing request and response objects

1. Replace and process headers and cookies

2. Use proxy IPs, etc.

3. Customize requests

By default, both kinds of middleware live in the same file, middlewares.py. Spider middleware is used the same way as downloader middleware and their functions overlap, so downloader middleware is the one usually used.

2. How to use downloader middleware

Next we learn how to write middleware by writing a downloader middleware. Just as with a pipeline, define a class and then enable it in settings.py.

The default methods of downloader middleware:

process_request(self, request, spider):

This method is called for each request that passes through the downloader middleware.

1. Return None: a method with no return statement also returns None. The request object is passed through the engine to the downloader, or to the process_request method of other, lower-priority middleware.

2. Return a Response object: the request is not sent; the response is returned to the engine.

3. Return a Request object: the request object is passed through the engine to the scheduler. The process_request methods of other, lower-priority middleware are not called.

Explanation:

None: if every downloader middleware returns None, the request is finally handed to the downloader for processing

Request: if a request is returned, it is handed to the scheduler

Response: if a response is returned, the response object is handed to the spider for parsing
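The three return values above can be sketched as a toy dispatcher. This is not Scrapy's implementation: `Request`, `Response`, `run_process_request` and the three example middleware functions are hypothetical stand-ins, written only to make the control flow concrete.

```python
class Request:
    """Stand-in for scrapy.Request (hypothetical, for illustration only)."""
    def __init__(self, url):
        self.url = url


class Response:
    """Stand-in for a Scrapy response (hypothetical, for illustration only)."""
    def __init__(self, url, body=""):
        self.url = url
        self.body = body


def run_process_request(middlewares, request):
    """Call each middleware in order and act on what it returns."""
    for middleware in middlewares:
        result = middleware(request)
        if result is None:
            continue  # carry on to the next middleware
        if isinstance(result, Response):
            return ("to_engine", result)  # the download is skipped
        if isinstance(result, Request):
            return ("to_scheduler", result)  # re-queued; later middleware skipped
    return ("to_downloader", request)  # every middleware returned None


def do_nothing(request):
    return None


def serve_from_cache(request):
    return Response(request.url, body="cached")


def reschedule(request):
    return Request(request.url + "?retry=1")


req = Request("https://example.com")
print(run_process_request([do_nothing, do_nothing], req)[0])
print(run_process_request([do_nothing, serve_from_cache], req)[0])
print(run_process_request([reschedule, serve_from_cache], req)[0])
```

The first call falls through to the downloader, the second short-circuits with a response, and the third sends a new request back to the scheduler before the later middleware is consulted.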

process_response(self, request, response, spider):

Called when the downloader completes the HTTP request and passes the response to the engine

1. Return a Response: it is handed through the engine to the spider, or to the process_response method of other, lower-priority downloader middleware.

2. Return a Request object: it is handed through the engine to the scheduler to be requested again. The process_request methods of other, lower-priority middleware are not called.

Configure and enable the middleware in settings.py. The smaller the weight value, the earlier the middleware's process_request is called (process_response methods are called in the reverse order).

Explanation:

Request: if the return is a request, the request is handed over to the scheduler

Response: submit the response object to the spider for parsing
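As a rough illustration of how the weight values order the calls, the sketch below sorts a settings-style dictionary. The weights 544 and 600 and the helper names `request_order` and `response_order` are assumptions made up for this example; Scrapy does its own ordering internally and also merges in its built-in middleware.

```python
# Same shape as DOWNLOADER_MIDDLEWARES in settings.py; weights are illustrative.
DOWNLOADER_MIDDLEWARES = {
    "Douban.middlewares.RandomProxy": 544,
    "Douban.middlewares.RandomUserAgent": 543,
    "Douban.middlewares.ProxyMiddleware": 600,
}


def request_order(middlewares):
    """process_request runs from the smallest weight to the largest."""
    return [path for path, _ in sorted(middlewares.items(), key=lambda kv: kv[1])]


def response_order(middlewares):
    """process_response runs in the opposite direction."""
    return list(reversed(request_order(middlewares)))


for path in request_order(DOWNLOADER_MIDDLEWARES):
    print("process_request :", path)
for path in response_order(DOWNLOADER_MIDDLEWARES):
    print("process_response:", path)
```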

3. Define and implement a random User-Agent downloader middleware

3.1 Crawling Douban

1. Create project

scrapy startproject Douban

2. Modeling (items.py)

import scrapy


class DoubanItem(scrapy.Item):
    # define the fields for your item here like:
    name = scrapy.Field()

3. Create crawler

cd Douban

scrapy genspider movie douban.com

4. Replace start_urls (movie.py)

start_urls = ['https://movie.douban.com/top250']

5. Get the movie list

movie_list = response.xpath('//*[@id="content"]/div/div[1]/ol/li/div/div[2]')
print(len(movie_list))

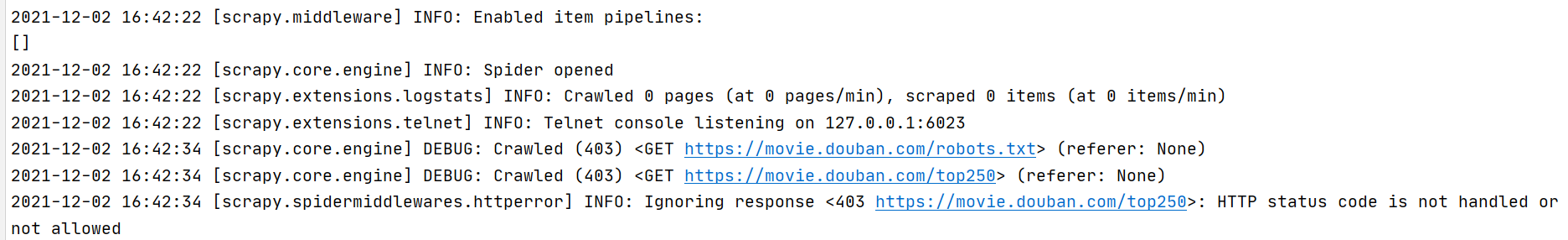

6. Test run

scrapy crawl movie

robots.txt cannot be fetched: the request has been recognized as a crawler

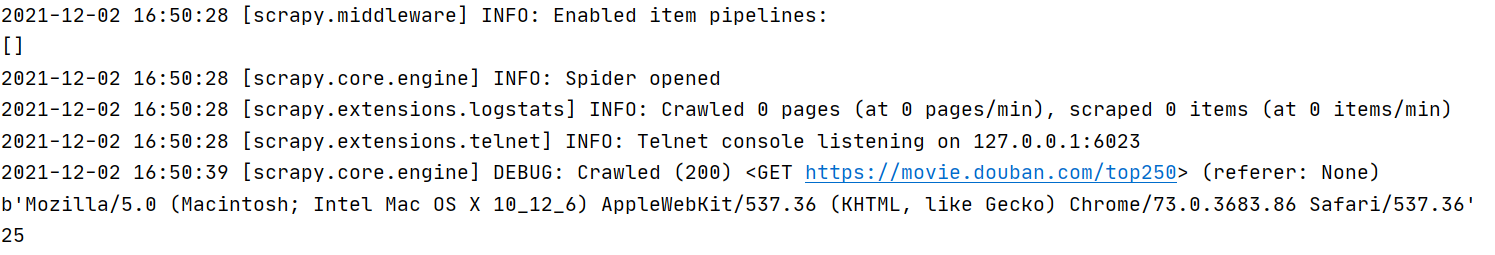

7. Configure USER_AGENT (settings.py)

USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'

8. Code implementation

import scrapy

from Douban.items import DoubanItem


class MovieSpider(scrapy.Spider):
    name = 'movie'
    allowed_domains = ['douban.com']
    start_urls = ['https://movie.douban.com/top250']

    def parse(self, response):
        # Show which User-Agent was actually sent with the request
        print(response.request.headers['User-Agent'])

        movie_list = response.xpath('//*[@id="content"]/div/div[1]/ol/li/div/div[2]')
        # print(len(movie_list))  # 25

        for movie in movie_list:
            item = DoubanItem()
            item['name'] = movie.xpath('./div[1]/a/span[1]/text()').extract_first()
            yield item

        # Follow the "next page" link until there is none left
        next_url = response.xpath('//*[@id="content"]/div/div[1]/div[2]/span[3]/a/@href').extract_first()
        if next_url is not None:
            next_url = response.urljoin(next_url)
            yield scrapy.Request(url=next_url)
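The spider turns the relative "next page" link into an absolute URL before requesting it. The sketch below shows that kind of resolution with the standard library's `urljoin`; the value of `next_href` is an assumed example of what the link might look like, not something taken from the page.

```python
from urllib.parse import urljoin

page_url = "https://movie.douban.com/top250"
next_href = "?start=25&filter="  # assumed shape of the relative link

# A query-only link keeps the base path and replaces the query string.
next_url = urljoin(page_url, next_href)
print(next_url)  # https://movie.douban.com/top250?start=25&filter=
```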

3.2 Complete the code in middlewares.py

1. Delete the existing contents of middlewares.py

2. Add a User-Agent list to settings.py

USER_AGENT_LIST = [
    "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/532.5 (KHTML, like Gecko) Chrome/4.0.249.0 Safari/532.5",
    "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.14 (KHTML, like Gecko) Chrome/10.0.601.0 Safari/534.14",
    "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.20 (KHTML, like Gecko) Chrome/11.0.672.2 Safari/534.20",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.27 (KHTML, like Gecko) Chrome/12.0.712.0 Safari/534.27",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/13.0.782.24 Safari/535.1",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.7 (KHTML, like Gecko) Chrome/16.0.912.36 Safari/535.7",
    "Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
    "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
    "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0a2) Gecko/20110622 Firefox/6.0a2",
    "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:7.0.1) Gecko/20100101 Firefox/7.0.1",
    "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:2.0b4pre) Gecko/20100815 Minefield/4.0b4pre",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.8 (KHTML, like Gecko) Beamrise/17.2.0.9 Chrome/17.0.939.0 Safari/535.8",
    "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/535.2 (KHTML, like Gecko) Chrome/18.6.872.0 Safari/535.2 UNTRUSTED/1.0 3gpp-gba",
]

2. Enable

Enable the custom downloader middleware in settings.py. It is configured the same way as a pipeline.

DOWNLOADER_MIDDLEWARES = {
    'Douban.middlewares.RandomUserAgent': 543,
}

3. Random request header

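import random

# The list defined in settings.py above
from Douban.settings import USER_AGENT_LIST


# Define a middleware class
class RandomUserAgent(object):

    def process_request(self, request, spider):
        # print(request.headers['User-Agent'])
        # Pick a different User-Agent at random for every request
        ua = random.choice(USER_AGENT_LIST)
        request.headers['User-Agent'] = ua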
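An alternative to importing the settings module directly is to let Scrapy hand the settings to the middleware through the `from_crawler` class method. The following is a minimal sketch under that assumption, not the approach used in this article. The `fake_*` objects are hypothetical stand-ins that let the example run without Scrapy installed; in a real project Scrapy supplies the crawler and the request.

```python
import random
from types import SimpleNamespace


class RandomUserAgent:
    """Pick a random User-Agent per request from a list given at construction."""

    def __init__(self, user_agents):
        self.user_agents = list(user_agents)

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy calls this hook, when it exists, to build the middleware.
        return cls(crawler.settings.getlist("USER_AGENT_LIST"))

    def process_request(self, request, spider):
        if self.user_agents:
            request.headers["User-Agent"] = random.choice(self.user_agents)
        return None  # let the request continue through the chain


# Stand-ins so the sketch runs on its own (hypothetical objects).
fake_settings = SimpleNamespace(getlist=lambda name: ["UA-one", "UA-two"])
fake_crawler = SimpleNamespace(settings=fake_settings)
fake_request = SimpleNamespace(headers={})

middleware = RandomUserAgent.from_crawler(fake_crawler)
middleware.process_request(fake_request, spider=None)
print(fake_request.headers["User-Agent"])
```

Reading the list from the crawler settings keeps the middleware independent of the project name, so the same class could be reused in another project.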

4. Using proxy IPs

Free proxy IPs and paid proxy IPs:

1. Add a PROXY_LIST to settings.py

PROXY_LIST = [
    {"ip_port": "123.207.53.84:16816", "user_passwd": "morganna_mode_g:ggc22qxp"},
    {"ip_port": "27.191.60.100:3256"},
]

2. Enable the proxy middleware

DOWNLOADER_MIDDLEWARES = {
    'Douban.middlewares.RandomProxy': 543,
}

3. Proxy IP middleware

import base64
import random

# The list defined in settings.py above
from Douban.settings import PROXY_LIST


class RandomProxy(object):

    def process_request(self, request, spider):
        proxy = random.choice(PROXY_LIST)
        print(proxy)

        if 'user_passwd' in proxy:
            # Encode the account and password. base64 in Python 3 works on bytes, so encode() is required
            b64_up = base64.b64encode(proxy['user_passwd'].encode())
            # Set authentication
            request.headers['Proxy-Authorization'] = 'Basic ' + b64_up.decode()

        # Set the proxy (needed with or without authentication)
        request.meta['proxy'] = proxy['ip_port']
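The authentication step can be isolated into a small helper to see exactly what ends up in the header. This is a sketch: `basic_proxy_auth` is a hypothetical name and `user:pass` is a placeholder credential.

```python
import base64


def basic_proxy_auth(user_passwd):
    """Build a Proxy-Authorization value from a 'user:password' string."""
    # b64encode needs bytes and returns bytes, hence encode() and decode().
    token = base64.b64encode(user_passwd.encode("utf-8")).decode("ascii")
    return "Basic " + token


header = basic_proxy_auth("user:pass")  # placeholder credentials
print(header)  # Basic dXNlcjpwYXNz
```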

4. Check whether the proxy IP is available

When proxy IPs are used, the downloader middleware's process_response() method can check how the proxy behaved. If the proxy IP cannot be used, the request can be resent through another proxy IP.

class ProxyMiddleware(object):

    def process_response(self, request, response, spider):
        # response.status is an int, so compare with 200 rather than '200'
        if response.status != 200:
            # Let the resent request pass the duplicate filter and enter the queue again
            request.dont_filter = True
            return request
        # process_response must return a Response or a Request
        return response
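Resending on every non-200 status can loop forever if a page is genuinely unavailable. One possible refinement, sketched below under assumptions, is to count retries in `request.meta` and give up after a limit. `ProxyRetryMiddleware`, `MAX_PROXY_RETRIES` and the `proxy_retries` key are hypothetical names, and the `SimpleNamespace` objects stand in for Scrapy's request and response so the example runs on its own.

```python
from types import SimpleNamespace

MAX_PROXY_RETRIES = 3  # hypothetical limit, tune for your crawl


class ProxyRetryMiddleware:
    """Resend a failed request a limited number of times."""

    def process_response(self, request, response, spider):
        if response.status == 200:
            return response
        retries = request.meta.get("proxy_retries", 0)
        if retries >= MAX_PROXY_RETRIES:
            return response  # give up and let the spider see the failure
        request.meta["proxy_retries"] = retries + 1
        # The resent request passes through process_request again,
        # where a proxy middleware can pick a different proxy.
        request.dont_filter = True
        return request


# Stand-ins for a Scrapy request and response (hypothetical objects).
demo_request = SimpleNamespace(meta={}, dont_filter=False)
demo_failure = SimpleNamespace(status=503)

demo_middleware = ProxyRetryMiddleware()
outcome = demo_middleware.process_response(demo_request, demo_failure, spider=None)
print(outcome is demo_request, demo_request.meta)
```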

5. Using Selenium in middleware

Take the site "PM2.5 Historical Data: Historical Air Quality Data Query" (aqistudy.cn) as an example. The default request headers need to be changed, because otherwise the crawler is blocked after a few crawls.

USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'

5.1 complete crawler code

# -*- coding: utf-8 -*-
import time

import scrapy

from AQI.items import AqiItem


class AqiSpider(scrapy.Spider):
    name = 'aqi'
    allowed_domains = ['aqistudy.cn']
    host = 'https://www.aqistudy.cn/historydata/'
    start_urls = [host]

    # Parse the response corresponding to the starting url
    def parse(self, response):
        # Get city url list
        url_list = response.xpath('//div[@class="bottom"]/ul/div[2]/li/a/@href').extract()

        # Traverse the list
        for url in url_list[45:48]:
            city_url = response.urljoin(url)
            # Initiate a request for the city details page
            yield scrapy.Request(city_url, callback=self.parse_month)

    # Parse the response corresponding to the detail page request
    def parse_month(self, response):
        # Get the url list of monthly details
        url_list = response.xpath('//ul[@class="unstyled1"]/li/a/@href').extract()

        # Traverse part of the url list
        for url in url_list[30:31]:
            month_url = response.urljoin(url)
            # Initiate detail page request
            yield scrapy.Request(month_url, callback=self.parse_day)

    # Parse the data on the details page
    def parse_day(self, response):
        print(response.url, '######')

        # Get all data nodes
        node_list = response.xpath('//tr')
        city = response.xpath('//div[@class="panel-heading"]/h3/text()').extract_first().split('2')[0]

        # Traverse the list of data nodes
        for node in node_list:
            # Create an item container to store data
            item = AqiItem()

            # Fill in some fixed parameters first
            item['city'] = city
            item['url'] = response.url
            item['timestamp'] = time.time()

            # data
            item['date'] = node.xpath('./td[1]/text()').extract_first()
            item['AQI'] = node.xpath('./td[2]/text()').extract_first()
            item['LEVEL'] = node.xpath('./td[3]/span/text()').extract_first()
            item['PM2_5'] = node.xpath('./td[4]/text()').extract_first()
            item['PM10'] = node.xpath('./td[5]/text()').extract_first()
            item['SO2'] = node.xpath('./td[6]/text()').extract_first()
            item['CO'] = node.xpath('./td[7]/text()').extract_first()
            item['NO2'] = node.xpath('./td[8]/text()').extract_first()
            item['O3'] = node.xpath('./td[9]/text()').extract_first()

            # for k,v in item.items():
            #     print k,v
            # print '##########################'

            # Return data to the engine
            yield item

5.2 Using Selenium in middlewares.py

import time

from scrapy.http import HtmlResponse
from selenium import webdriver


class SeleniumMiddleware(object):

    def process_request(self, request, spider):
        url = request.url

        # Only the daily-data pages need a real browser to render
        if 'daydata' in url:
            driver = webdriver.Chrome()
            driver.get(url)
            # Give the page's JavaScript time to load the data
            time.sleep(3)
            data = driver.page_source
            # quit() ends the whole browser session, not just the window
            driver.quit()

            # Create response object; returning it skips the normal download
            res = HtmlResponse(url=url, body=data, encoding='utf-8', request=request)
            return res
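Starting a new Chrome instance for every request is slow. One possible refinement, shown here only as a sketch, is to create the browser once, reuse it, and close it when the crawl ends. `ReusableBrowser` is a hypothetical helper, and `FakeDriver` is a stand-in that mimics the three driver members used here (`get`, `page_source`, `quit`) so the example runs without a browser; in a real middleware the factory would be `webdriver.Chrome`.

```python
class ReusableBrowser:
    """Create the browser lazily, reuse it across requests, close it once."""

    def __init__(self, driver_factory):
        self._factory = driver_factory  # for example webdriver.Chrome
        self._driver = None

    def fetch(self, url):
        if self._driver is None:
            self._driver = self._factory()  # started on first use only
        self._driver.get(url)
        return self._driver.page_source

    def close(self):
        if self._driver is not None:
            self._driver.quit()
            self._driver = None


class FakeDriver:
    """Stand-in for a Selenium driver (hypothetical, for illustration)."""
    created = 0

    def __init__(self):
        FakeDriver.created += 1
        self.page_source = ""
        self.closed = False

    def get(self, url):
        self.page_source = "<html>" + url + "</html>"

    def quit(self):
        self.closed = True


browser = ReusableBrowser(FakeDriver)
first = browser.fetch("https://example.com/a")
second = browser.fetch("https://example.com/b")
browser.close()
print(FakeDriver.created)  # 1
```

In a middleware, `fetch` would supply the body for the HtmlResponse, and `close` would be called when the spider finishes.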