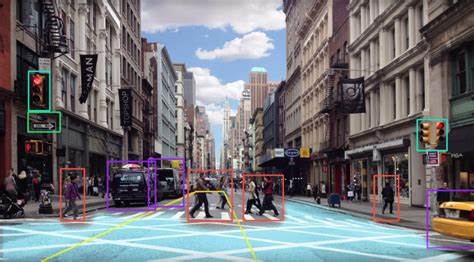

Everything starts simple. A driverless car needs artificial intelligence to know its surroundings like the back of its hand, which means recognizing as many of the surrounding objects as possible. Today's systems sense the environment with radar or by scanning it with lasers to gather as much information as possible.

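```python
import cv2 as cv

# Open the test video (a sample clip from OpenCV)
cap = cv.VideoCapture('vtest.avi')

while cap.isOpened():
    ret, frame = cap.read()
    if not ret:  # end of the video or a read error
        break
    cv.imshow('inter', frame)
    # Wait about 40 ms per frame; quit when Esc (key code 27) is pressed
    if cv.waitKey(40) == 27:
        break

cv.destroyAllWindows()
cap.release()
```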

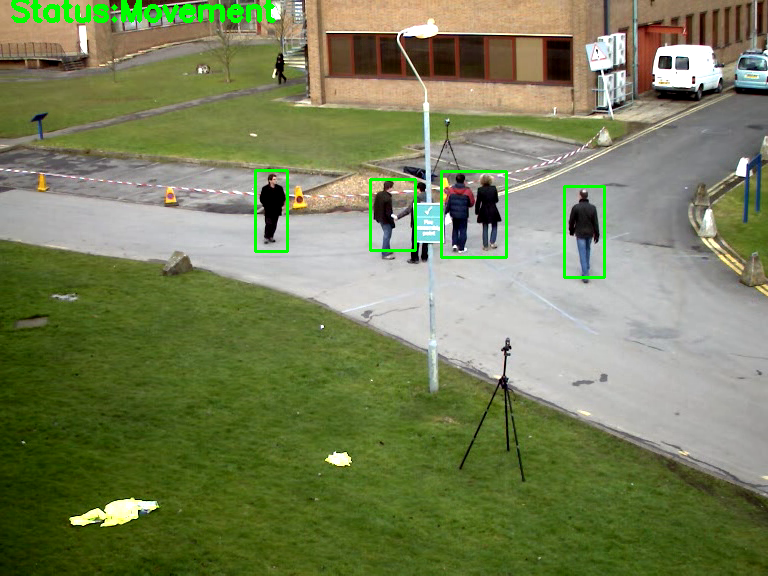

The test video was obtained from the OpenCV official website and shows people moving through the scene. Our task is to identify the people who are moving and mark them with green boxes. Note that we do not use deep learning to recognize people here; this approach only detects movement.

To detect a moving object, we look for differences between two frames. Here, cv.absdiff computes the per-pixel absolute difference between frame1 and frame2; the pixels that changed between the two frames indicate motion.

```python
diff = cv.absdiff(frame1, frame2)
gray = cv.cvtColor(diff, cv.COLOR_BGR2GRAY)
```

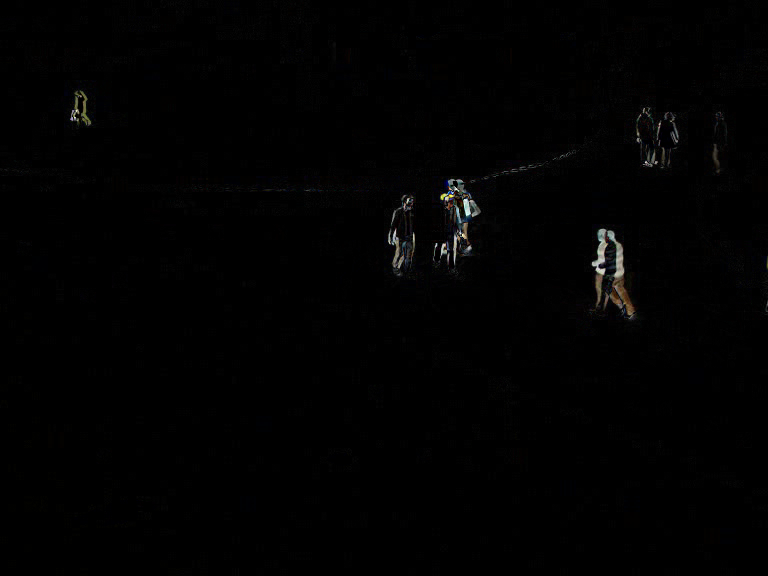

```python
diff = cv.absdiff(frame1, frame2)
cv.imshow('diff', diff)
```

The figure shows the absdiff output computed from frame1 and frame2.
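As a rough illustration, here is what cv.absdiff computes for each pixel, sketched with NumPy (the helper name `abs_diff` is hypothetical, not part of OpenCV). It also shows why plain subtraction of two uint8 frames is not a substitute: negative results wrap around instead of becoming large differences.

```python
import numpy as np

def abs_diff(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Per-pixel |a - b| for uint8 images, computed without wrap-around (hypothetical helper)."""
    # Widen to int16 first: plain uint8 subtraction wraps (10 - 200 -> 66)
    return np.abs(a.astype(np.int16) - b.astype(np.int16)).astype(np.uint8)

# Two tiny 1x5 grayscale "frames": a bright object moves one pixel to the right
frame1 = np.array([[10, 200, 10, 10, 10]], dtype=np.uint8)
frame2 = np.array([[10, 10, 200, 10, 10]], dtype=np.uint8)

print(abs_diff(frame1, frame2))  # non-zero where the object left and where it arrived
print(frame1 - frame2)           # naive uint8 subtraction wraps around
```

Both the pixel the object left and the pixel it moved into light up, which is why the difference image outlines a moving person rather than filling them in.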

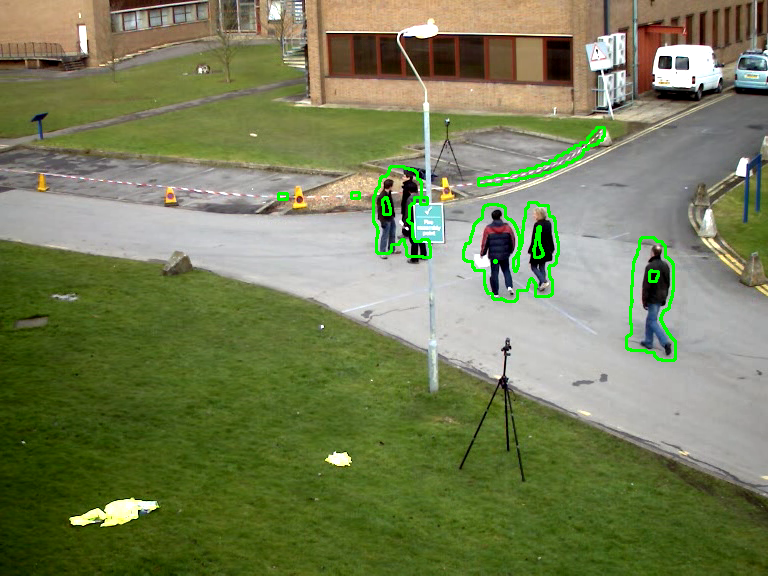

```python
while cap.isOpened():
    diff = cv.absdiff(frame1, frame2)
    gray = cv.cvtColor(diff, cv.COLOR_BGR2GRAY)
    blur = cv.GaussianBlur(gray, (5, 5), 0)
    _, thresh = cv.threshold(blur, 20, 255, cv.THRESH_BINARY)
    dilated = cv.dilate(thresh, None, iterations=3)
    contours, _ = cv.findContours(dilated, cv.RETR_TREE, cv.CHAIN_APPROX_SIMPLE)
    cv.drawContours(frame1, contours, -1, (0, 255, 0), 2)
```

Color values do not help us detect motion, so we convert the difference image to grayscale before processing it further. We will analyze the theory behind Gaussian blur later; for now it is enough to know that it smooths the image and reduces noise, suppressing isolated noisy pixels that would otherwise be mistaken for motion.
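The grayscale step collapses the three color channels of the difference image into a single intensity, so that one threshold can be applied later. A minimal NumPy sketch, assuming the luma weights OpenCV documents for BGR-to-gray conversion (Y = 0.299 R + 0.587 G + 0.114 B); `bgr_to_gray` is a hypothetical helper, and OpenCV's fixed-point implementation may differ from it by one gray level.

```python
import numpy as np

def bgr_to_gray(img: np.ndarray) -> np.ndarray:
    """Luma of a BGR uint8 image (OpenCV channel order), rounded back to uint8."""
    b, g, r = img[..., 0], img[..., 1], img[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return np.clip(np.rint(y), 0, 255).astype(np.uint8)

# A 2x2 difference image; each entry is (B, G, R)
diff = np.zeros((2, 2, 3), dtype=np.uint8)
diff[0, 0] = (0, 0, 255)    # change only in the red channel
diff[0, 1] = (255, 0, 0)    # change only in the blue channel
diff[1, 0] = (60, 60, 60)   # equal change in all channels
print(bgr_to_gray(diff))
```

A side effect worth knowing: changes in the blue channel carry the least weight, so a faint blue-only change produces a smaller gray value than an equally strong red or green one.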

```python
blur = cv.GaussianBlur(gray, (5, 5), 0)
cv.imshow('blur', blur)
```
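To see why blurring helps before thresholding, here is a NumPy sketch of a separable 5x5 Gaussian blur followed by a binary threshold at 20, mirroring the `GaussianBlur(gray, (5, 5), 0)` and `threshold(blur, 20, 255, THRESH_BINARY)` calls. The kernel weights come straight from the Gaussian formula, using the rule OpenCV documents for a non-positive sigma (sigma = 0.3*((ksize-1)*0.5 - 1) + 0.8); OpenCV's own kernel and its uint8 rounding may differ slightly. The helper names are hypothetical.

```python
import numpy as np

def gaussian_kernel_1d(ksize: int, sigma: float = 0.0) -> np.ndarray:
    """Normalized 1-D Gaussian weights; sigma <= 0 uses the rule of thumb from the OpenCV docs."""
    if sigma <= 0:
        sigma = 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8
    x = np.arange(ksize) - (ksize - 1) / 2
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()

def gaussian_blur(img: np.ndarray, ksize: int = 5, sigma: float = 0.0) -> np.ndarray:
    """Separable Gaussian blur; numpy's 'reflect' padding matches OpenCV's default BORDER_REFLECT_101."""
    k = gaussian_kernel_1d(ksize, sigma)
    r = ksize // 2
    padded = np.pad(img.astype(np.float64), r, mode="reflect")
    # A 2-D Gaussian is the outer product of two 1-D ones: blur rows, then columns
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)

def threshold_binary(img: np.ndarray, thresh: float = 20, maxval: int = 255) -> np.ndarray:
    """Like THRESH_BINARY: maxval where img > thresh, otherwise 0."""
    return np.where(img > thresh, maxval, 0).astype(np.uint8)

img = np.zeros((20, 20), dtype=np.uint8)
img[3, 3] = 100           # an isolated noisy pixel
img[10:16, 10:16] = 100   # a 6x6 region that really changed
blurred = gaussian_blur(img)
mask = threshold_binary(blurred)
print(blurred[3, 3], mask[3, 3], mask[12, 12])
```

The lone noisy pixel is spread over its neighborhood and drops to roughly 14, below the threshold of 20, while the interior of the larger region stays near 100 and survives as white in the mask.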

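After blurring, cv.threshold sets every pixel brighter than 20 to 255 and every other pixel to 0, giving a binary mask of the regions that changed. The dilate() function then dilates this mask using a structuring element that defines the shape of the neighborhood: each pixel is replaced by the maximum value in its neighborhood, so white regions grow and small gaps between them close up. Passing None as the kernel, as here, uses a 3x3 rectangular element, and iterations=3 repeats the operation three times. Finally, findContours extracts the outlines of the white regions and drawContours draws them on the frame.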
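A minimal NumPy sketch of the dilation step, assuming the 3x3 rectangular element that `cv.dilate(thresh, None, ...)` uses; the `dilate` helper is hypothetical and written for clarity, not speed. It shows how repeated iterations merge two nearby fragments of one moving person into a single region, which then yields one contour instead of two.

```python
import numpy as np

def dilate(img: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Dilation with a 3x3 rectangle: each pixel becomes the max of its 3x3 neighborhood."""
    out = img.copy()
    h, w = out.shape
    for _ in range(iterations):
        # Zero padding is harmless here: uint8 values are >= 0, so it never wins the max
        padded = np.pad(out, 1, mode="constant", constant_values=0)
        # The nine shifted views cover every position of the 3x3 neighborhood
        shifts = [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
        out = np.max(shifts, axis=0)
    return out

mask = np.zeros((11, 11), dtype=np.uint8)
mask[5, 2] = 255   # two fragments of the same moving person,
mask[5, 6] = 255   # separated by a gap of three pixels
once = dilate(mask, iterations=1)
twice = dilate(mask, iterations=2)
print(once[5, 4], twice[5, 4])   # the gap pixel is filled only after the second pass
```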

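```python
import cv2 as cv

cap = cv.VideoCapture('vtest.avi')
ret, frame1 = cap.read()
ret, frame2 = cap.read()

while cap.isOpened() and ret:
    # Pixels that changed between the two frames light up in the difference image
    diff = cv.absdiff(frame1, frame2)
    gray = cv.cvtColor(diff, cv.COLOR_BGR2GRAY)
    # Smooth away noise before thresholding
    blur = cv.GaussianBlur(gray, (5, 5), 0)
    # Pixels brighter than 20 become 255 (motion), all others 0
    _, thresh = cv.threshold(blur, 20, 255, cv.THRESH_BINARY)
    # Grow the white regions to close gaps inside each moving person
    dilated = cv.dilate(thresh, None, iterations=3)
    # OpenCV 4.x returns (contours, hierarchy); OpenCV 3.x returns three values
    contours, _ = cv.findContours(dilated, cv.RETR_TREE, cv.CHAIN_APPROX_SIMPLE)
    cv.drawContours(frame1, contours, -1, (0, 255, 0), 2)

    cv.imshow('feed', frame1)
    # The current frame2 becomes the next iteration's frame1
    frame1 = frame2
    ret, frame2 = cap.read()  # ret is False at the end of the video, ending the loop
    if cv.waitKey(40) == 27:  # Esc quits
        break

cv.destroyAllWindows()
cap.release()
```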

```python
for contour in contours:
    # Skip small contours: they are usually noise rather than a person
    if cv.contourArea(contour) < 700:
        continue
    (x, y, w, h) = cv.boundingRect(contour)
    cv.rectangle(frame1, (x, y), (x + w, y + h), (0, 255, 0), 2)
    cv.putText(frame1, "Status:{}".format("Movement"), (10, 20),
               cv.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 3)
```

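cv.boundingRect computes the smallest upright rectangle (the bounding box) that encloses each contour, returned as its top-left corner (x, y) plus width w and height h, and cv.rectangle draws that box in green around each moving person. Contours whose area is below 700 pixels are skipped as noise. Finally, cv.putText writes a status label in red onto the frame.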
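To make the area filter and the bounding box concrete, here is a NumPy sketch of what the two calls compute for a contour given as a list of (x, y) points. The helpers `bounding_rect` and `polygon_area` are hypothetical; the area uses the shoelace (Green's) formula, which the OpenCV documentation says `contourArea` is based on. The contours below are plain rectangles, which is what `CHAIN_APPROX_SIMPLE` reduces a rectangular blob to: its four corner points.

```python
import numpy as np

def bounding_rect(points) -> tuple:
    """Upright box (x, y, w, h) around integer (x, y) points, counting both end pixels."""
    pts = np.asarray(points)
    x0, y0 = pts.min(axis=0)
    x1, y1 = pts.max(axis=0)
    return int(x0), int(y0), int(x1 - x0 + 1), int(y1 - y0 + 1)

def polygon_area(points) -> float:
    """Shoelace formula: area of the polygon through the points, in order."""
    pts = np.asarray(points, dtype=np.float64)
    x, y = pts[:, 0], pts[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))

MIN_AREA = 700  # the same cut-off as the loop above

person = [(10, 20), (39, 20), (39, 59), (10, 59)]  # corners of a 30x40-pixel blob
speck = [(5, 5), (24, 5), (24, 24), (5, 24)]       # corners of a 20x20-pixel blob

for name, contour in [("person", person), ("speck", speck)]:
    area = polygon_area(contour)
    if area < MIN_AREA:
        print(name, "skipped, area", area)
        continue
    print(name, "box", bounding_rect(contour), "area", area)
```

Because the polygon runs through pixel centers, the area of the 30x40 blob comes out as 29 x 39 = 1131 rather than 1200; the 700 cut-off is therefore slightly stricter than a raw pixel count.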

```python
# (inside the while loop)
frame1 = frame2
ret, frame2 = cap.read()
if cv.waitKey(40) == 27:
    break
```

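Assigning frame2 to frame1 keeps the current frame as the "previous" frame for the next iteration, and cap.read() then loads the next frame into frame2. Each pass through the loop therefore compares two consecutive frames.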
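The same sliding pair of frames can be packaged as a generator, which also handles the end of the video in one place. A minimal sketch: `consecutive_pairs` is a hypothetical helper, and `read` stands for any callable with the `(ok, frame)` return shape of `cap.read()`; a fake reader over a list of labels stands in for the video here.

```python
def consecutive_pairs(read):
    """Yield (previous, current) frame pairs from a cap.read()-style callable until it fails."""
    ok, prev = read()
    if not ok:
        return
    while True:
        ok, cur = read()
        if not ok:  # end of the stream
            return
        yield prev, cur
        prev = cur  # the current frame becomes the previous one for the next step

def make_reader(frames):
    """Fake stand-in for cap.read over a list (hypothetical, for illustration only)."""
    it = iter(frames)
    def read():
        try:
            return True, next(it)
        except StopIteration:
            return False, None
    return read

pairs = list(consecutive_pairs(make_reader(["f0", "f1", "f2", "f3"])))
print(pairs)
```

With a real capture, the loop body would become `for frame1, frame2 in consecutive_pairs(cap.read): ...`, leaving only the motion-detection steps inside it.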