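This post introduces two ways to save Scrapy items to MongoDB. The first follows the official Scrapy documentation: it has more code, but you only change a few lines of it. The second has less code, but more of it is written and adjusted by hand.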

1. The first method: the official documentation approach. The `settings.py` file that Scrapy generates links to the item pipeline documentation in a comment. Open that page and copy its MongoDB example into `pipelines.py`. Only three changes are needed in the pipeline.

https://doc.scrapy.org/en/latest/topics/item-pipeline.html

import pymongo

class MongoPipeline(object):

collection_name = 'scrapy_items' # Here is the name of the database table to which the connection is made

def __init__(self, mongo_uri, mongo_db):

self.mongo_uri = mongo_uri

self.mongo_db = mongo_db

@classmethod

def from_crawler(cls, crawler):

return cls(

mongo_uri=crawler.settings.get('MONGO_URI'), # There are two parameters in the get, one is the configured MONGO_URL and the other is the localhost

mongo_db=crawler.settings.get('MONGO_DATABASE', 'items') # The first parameter here is the database configuration. The second parameter is the database name of its table

)

def open_spider(self, spider):

self.client = pymongo.MongoClient(self.mongo_uri)

self.db = self.client[self.mongo_db]

def close_spider(self, spider):

self.client.close()

def process_item(self, item, spider):

self.db[self.collection_name].insert_one(dict(item))

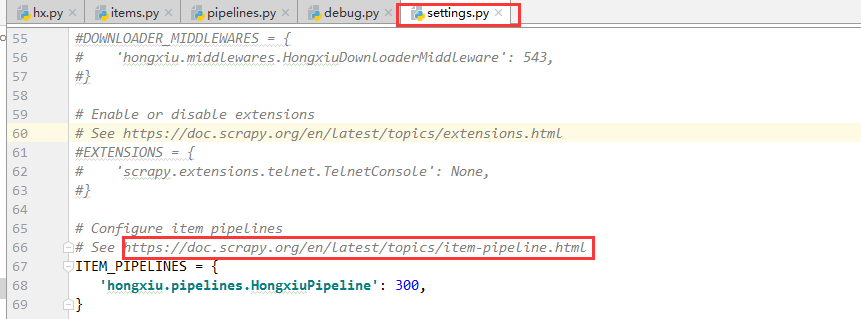

return item2. Next is the configuration of settings.

```python
MONGO_URI = 'localhost'   # must match the key read in from_crawler (MONGO_URI, not MONGO_URL)
MONGO_DATABASE = 'item'   # name of the database
```
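The pipeline only runs if Scrapy is told to use it through `ITEM_PIPELINES`, and the setting names must be exactly the ones `from_crawler` reads (`MONGO_URI`, not `MONGO_URL`). The sketch below lists the relevant `settings.py` values, assuming a hypothetical project package named `myproject`, and uses a plain dict in place of Scrapy's `Settings` object (both offer `.get(key, default)`) to show how the values are resolved. It assumes `from_crawler` passes `'localhost'` as the default for `MONGO_URI`; the official example passes no default, in which case a missing key yields `None`.

```python
# Sketch: how the MongoDB settings reach the pipeline.
# A plain dict stands in for Scrapy's Settings object; both support .get(key, default).
# 'myproject' is a hypothetical package name; use your own project's name.

SETTINGS = {
    # Enable the pipeline; lower numbers run earlier (customarily 0-1000)
    'ITEM_PIPELINES': {'myproject.pipelines.MongoPipeline': 300},
    # MongoClient accepts either a host name or a full mongodb:// URI
    'MONGO_URI': 'mongodb://localhost:27017',
    'MONGO_DATABASE': 'item',
}


def resolve_mongo_settings(settings):
    """Return (mongo_uri, mongo_db) the same way the pipeline's from_crawler reads them."""
    mongo_uri = settings.get('MONGO_URI', 'localhost')
    mongo_db = settings.get('MONGO_DATABASE', 'items')
    return mongo_uri, mongo_db


print(resolve_mongo_settings(SETTINGS))
# -> ('mongodb://localhost:27017', 'item')

# A misspelled key is not an error: the defaults are silently used instead
print(resolve_mongo_settings({'MONGO_URL': 'mongodb://db.example:27017'}))
# -> ('localhost', 'items')
```

The second call is the failure mode to watch for: a typo in `settings.py` does not raise anything, it just makes the pipeline connect to the default host and database.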

2. The second method: define the MongoDB connection directly in the pipeline.

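1. Only the pipeline needs to be written. Because the host, database, and collection names are hard-coded in it, no MongoDB settings are needed in `settings.py` (the pipeline itself still has to be enabled in `ITEM_PIPELINES`, like any Scrapy pipeline). The code is as follows: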

```python
import pymongo


class JobsPipeline(object):

    def process_item(self, item, spider):
        # Argument 1 {'zmmc': item['zmmc']}: filter that looks for an existing document with the same zmmc
        # Argument 2 {'$set': dict(item)}: the fields to save or update
        # Argument 3 upsert=True: update the matching document, or insert a new one if none matches
        #   (with upsert=False, nothing is written when no document matches)
        self.db['job'].update_one({'zmmc': item['zmmc']}, {'$set': dict(item)}, upsert=True)  # job is the collection name
        return item

    def open_spider(self, spider):
        self.client = pymongo.MongoClient('localhost')
        self.db = self.client['jobs']  # jobs is the database name

    def close_spider(self, spider):
        # Close the connection when the spider finishes
        self.client.close()
```
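The upsert argument is what keeps repeated crawls from piling up duplicate records, which `insert_one` in the first method does not prevent. To make the difference concrete without a running MongoDB server, here is a toy in-memory stand-in for a collection. It is not pymongo; it only handles an equality filter plus `$set`, and the job data is made up for illustration.

```python
# Toy in-memory stand-in for a MongoDB collection (NOT pymongo), used only to
# illustrate insert_one versus update_one(filter, {'$set': doc}, upsert=...).


class FakeCollection:
    def __init__(self):
        self.docs = []

    def _find(self, filt):
        # Return the first document whose fields equal every value in the filter
        for doc in self.docs:
            if all(doc.get(k) == v for k, v in filt.items()):
                return doc
        return None

    def insert_one(self, doc):
        # Always adds a new document, even if an identical one already exists
        self.docs.append(dict(doc))

    def update_one(self, filt, update, upsert=False):
        doc = self._find(filt)
        if doc is not None:
            doc.update(update['$set'])      # match: overwrite the given fields
        elif upsert:
            new_doc = dict(filt)            # no match + upsert: build from the filter...
            new_doc.update(update['$set'])  # ...apply $set, and insert it
            self.docs.append(new_doc)
        # no match and upsert=False: nothing is written


# The same (made-up) job crawled twice, the second time with a changed field
crawl = [
    {'zmmc': 'Python Developer', 'salary': '10k'},
    {'zmmc': 'Python Developer', 'salary': '12k'},
]

inserted = FakeCollection()
upserted = FakeCollection()
for item in crawl:
    inserted.insert_one(item)
    upserted.update_one({'zmmc': item['zmmc']}, {'$set': dict(item)}, upsert=True)

print(len(inserted.docs))  # -> 2 (duplicate records)
print(upserted.docs)       # -> [{'zmmc': 'Python Developer', 'salary': '12k'}]

# Without upsert, an update that matches nothing writes nothing
updated_only = FakeCollection()
updated_only.update_one({'zmmc': 'Python Developer'}, {'$set': {'salary': '10k'}})
print(len(updated_only.docs))  # -> 0
```

In pymongo the third positional parameter of `Collection.update_one` is `upsert`, so passing `True` in that position is the same as `upsert=True`. A real MongoDB upsert also adds an `_id` field to the newly inserted document, which the toy omits.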