What is LVS?

LVS stands for Linux Virtual Server. Virtual hosting itself is not explained again here, since most readers are already familiar with it.

LVS is a virtual server cluster system that presents a group of machines as a single high-performance, highly available server. Its core, IPVS, is now part of the mainline Linux kernel and ships as a kernel module.

Composition of LVS

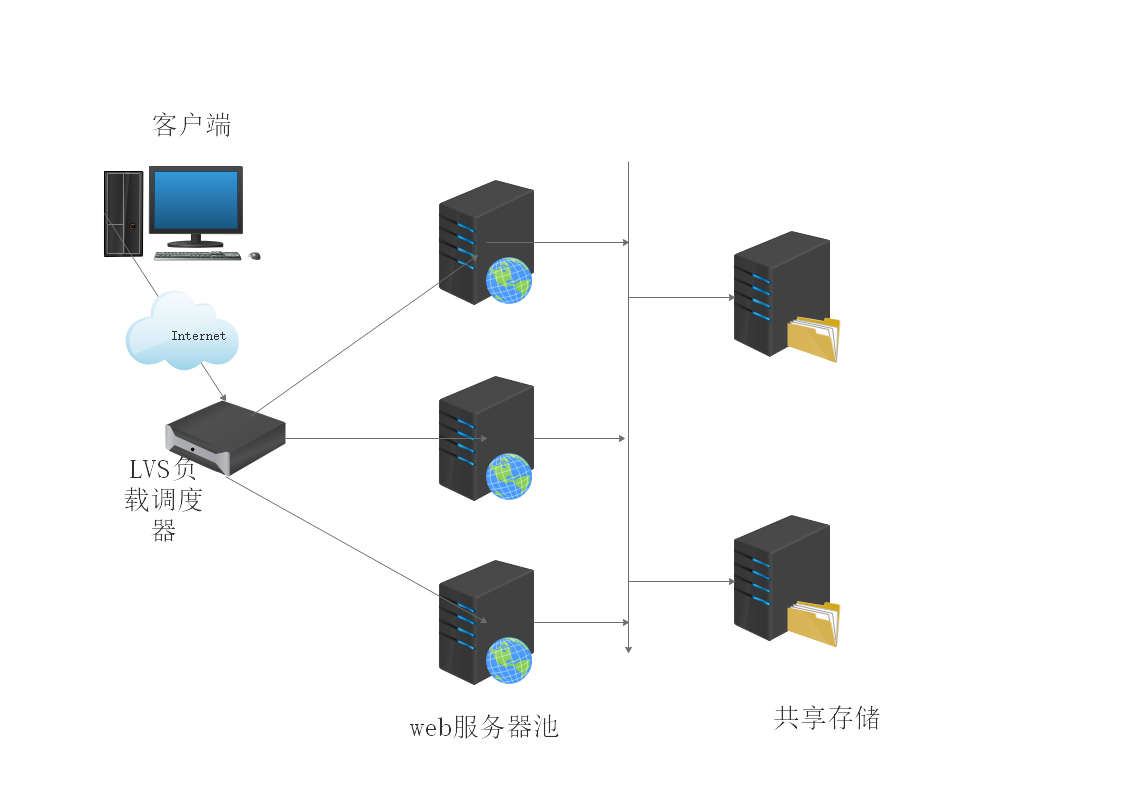

① Physically, LVS consists of three main components:

The load balancer (director) is the front-end machine of the whole cluster. It distributes client requests across a group of servers, while the client sees the service as coming from a single IP address, the virtual IP (VIP).

The server pool (real servers) is the group of servers that actually handle client requests. Typical services include web, mail, FTP, and DNS.

Shared storage provides a common storage area for the server pool, which makes it easy for all real servers to hold the same content and provide the same service.

These components are shown in the figure above.

Supplement: to achieve high availability, two or more directors are usually deployed, with the extra ones acting as backups so that the director is not a single point of failure. (A later experimental deployment of DR mode + keepalived uses two directors.)

② At the software level, LVS consists of two programs, ipvs and ipvsadm.

1. ipvs (IP Virtual Server): code that runs in kernel space and performs the actual scheduling.

2. ipvsadm: a tool that runs in user space and writes rules for the ipvs kernel framework. The rules define which address is the cluster service and which hosts are the back-end real servers; the kernel code then applies the scheduling algorithm to them.
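To see the two halves on a live system, one can first check that the kernel side is present and then query it with the user-space tool. The following is a minimal sketch: the function name `ipvs_loaded` and its optional path argument are illustrative and not part of LVS. It relies on `/proc/net/ip_vs`, which the kernel exposes once the `ip_vs` module is loaded.

```shell
#!/usr/bin/env bash
# ipvs_loaded [PROC_FILE]
# Reports whether the kernel IPVS table is visible. The path argument exists
# only so the check can be tried against another file; it defaults to the
# /proc entry created by the ip_vs module.
ipvs_loaded() {
    local proc_file="${1:-/proc/net/ip_vs}"
    if [ -r "$proc_file" ]; then
        echo "ipvs: loaded"
    else
        echo "ipvs: not loaded (try: modprobe ip_vs)"
        return 1
    fi
}

# '|| true' keeps the sketch from aborting when IPVS is not loaded.
ipvs_loaded || true
```

Once the module is loaded, `ipvsadm -Ln` run as root prints the same rule table from user space.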

What is a cluster?

A cluster is a group of hosts that presents itself to the outside as a single system providing one service. The client cannot tell how many servers there are or which real server it is talking to.

Depending on their goal, clusters are divided into load balancing clusters, high availability (HA) clusters, and high-performance computing clusters.

Type of cluster

Load Balance Cluster

A load balancing cluster improves the responsiveness of an application system, handles more requests, and reduces latency, so that the system as a whole can sustain high concurrency and high load.

Load balancing is not a simple even split. How requests are distributed depends on the scheduling algorithm, whose choice reflects both the developers' design and the actual conditions of the production environment.

High availability cluster

A high availability cluster improves the reliability of an application system, reduces downtime, and keeps the service running, which gives it fault tolerance.

HA clusters work in either duplex or master-slave mode, which touches on the ideas of "decentralization" and "centralization". The MHA setup introduced in the previous article is a typical master high availability architecture, in that case built on a MySQL database.

High performance computing cluster

A high-performance computing (HPC) cluster increases the CPU speed available to an application, expands its hardware resources and analysis capacity, and delivers computing power comparable to that of mainframes and supercomputers.

This performance depends on distributed computing and parallel computing: dedicated hardware and software pool the CPU, memory, and other resources of multiple servers to reach computing power that otherwise only large computers have.

The load balancing cluster is currently the most widely used cluster type in enterprises. Its load scheduling technology has three working modes: network address translation (NAT), IP tunneling (TUN), and direct routing (DR).

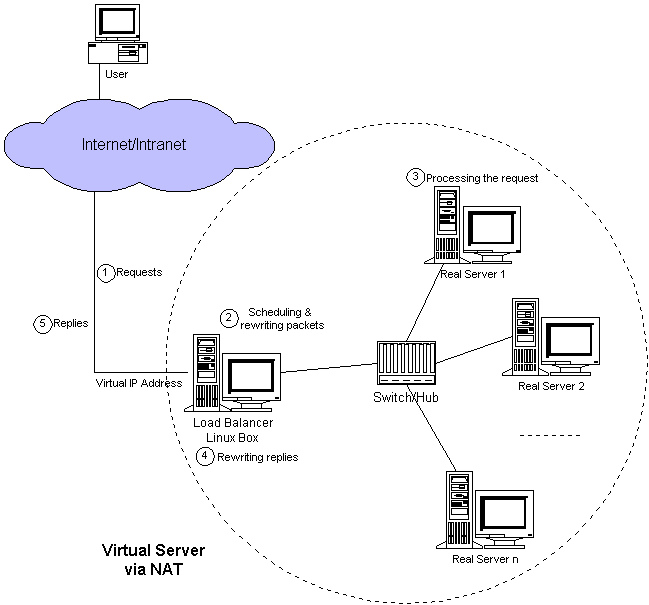
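In `ipvsadm`, each of the three working modes corresponds to one packet-forwarding option given when a real server is added. The sketch below only prints the commands; `forward_flag` is a hypothetical helper, and the addresses are borrowed from the deployment environment later in this article.

```shell
#!/usr/bin/env bash
# forward_flag MODE
# Maps an LVS working mode to the ipvsadm forwarding option used with -a.
# The options are real ipvsadm flags; the function itself is illustrative.
forward_flag() {
    case "$1" in
        nat) echo "-m" ;;   # --masquerading: address translation
        tun) echo "-i" ;;   # --ipip: IP tunneling
        dr)  echo "-g" ;;   # --gatewaying: direct routing (ipvsadm default)
        *)   echo "unknown mode: $1" >&2; return 1 ;;
    esac
}

# Print (not run) the command that would add a real server in each mode.
for mode in nat tun dr; do
    echo "ipvsadm -a -t 192.168.75.145:80 -r 192.168.75.144:80 $(forward_flag "$mode")"
done
```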

NAT mode

NAT stands for Network Address Translation, the mechanism that lets hosts on an internal network reach external hosts through a router that rewrites addresses.

LVS-NAT works the same way as DNAT. When a client requests the cluster service, LVS rewrites the destination address of the request packet to the RIP and forwards it to the back-end real server (RS). On the way back, it rewrites the source address of the response packet to the VIP before replying to the client. (RIP is the real server's IP, DIP is the director's IP, and VIP is the virtual IP.)

Features of LVS-NAT:

- RS and DIP should use private network addresses, and the default gateway of each RS must point to the DIP

- Both request and response packets pass through the director, so IP forwarding must be enabled on the director. Under extremely high load, the director can become the performance bottleneck

- Port mapping is supported

- RS can run any OS

- The RIP of each RS and the DIP of the director must be on the same IP network

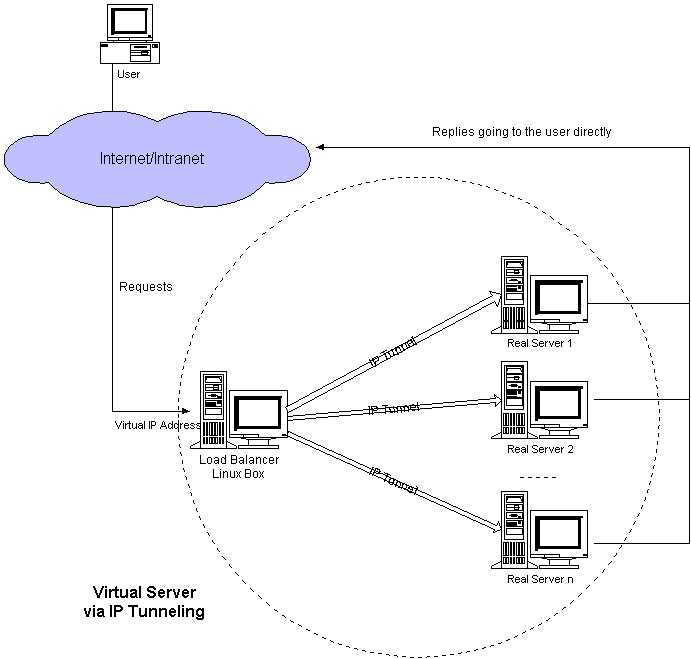

TUN mode

LVS-TUN features:

- RIP, DIP and VIP must be public network addresses

- RS gateway cannot point to DIP

- The request message must be dispatched through the director, but the response message must not be dispatched through the director

- Port mapping is not supported

- The OS of RS must support tunnel function

FULLNAT mode

- The director forwards the request packet by rewriting both its destination address and its source address

LVS-FULLNAT features:

- VIP is the public network address, RIP and DIP are private network addresses, and RIP and DIP do not need to be in the same network

- The source address of the request message received by RS is DIP, so it should respond to DIP

- Both request message and response message must be sent through Director

- Support port mapping mechanism

- RS can use any OS

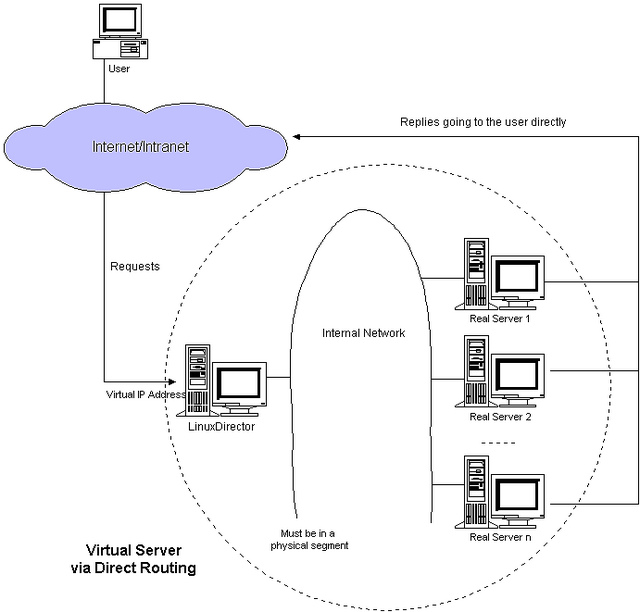

LVS DR mode

- The client requests a resource and the request packet is routed to the network where the director sits. The destination IP is the VIP, so the frame is delivered to the director's MAC address. IPVS leaves the IP header untouched and rewrites only the frame: the source MAC becomes the MAC address of the DIP interface and the destination MAC becomes the MAC address of the RIP interface. The frame then leaves through the POSTROUTING chain and reaches the RS through the switch. In DR mode the VIP is also configured on the lo interface of each RS, so when the RS unpacks the frame and finds that the destination MAC is its own and the destination IP is the VIP on its lo interface, it processes the request.

- The RS responds to the request. Because the destination IP of the request was the VIP, the response carries the VIP as its source address; it is handed from the lo interface to the eth0 network card and sent straight to the client without passing through the director.

Features of LVS-DR:

- The front-end router must deliver every packet whose destination address is the VIP to the director, not to an RS

- The RIP of an RS can be a private address or a public address

- The RS and the director must be on the same physical network

- Request packets are scheduled by the director, but response packets must not go through the director

- Port mapping is not supported

- The RS gateway must not point to the DIP
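The DR feature list above translates into a handful of commands on each side. What follows is a dry-run sketch under stated assumptions, not a tested deployment: the helpers `run`, `dr_realserver`, and `dr_director` are hypothetical, the NIC name `ens33` on the director is assumed, and the addresses come from the environment used later in this article. The `arp_ignore` and `arp_announce` settings stop the real servers from answering ARP for the VIP, so that only the director receives packets addressed to it.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints the commands instead of running them.
# Set APPLY=1 (as root) to execute them for real.
VIP=192.168.75.145
RS_LIST="192.168.75.144 192.168.75.142"

run() {
    if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "+ $*"; fi
}

# On each real server: hold the VIP on lo and keep it out of ARP.
dr_realserver() {
    run ip addr add "$VIP/32" dev lo
    run sysctl -w net.ipv4.conf.all.arp_ignore=1
    run sysctl -w net.ipv4.conf.all.arp_announce=2
    run sysctl -w net.ipv4.conf.lo.arp_ignore=1
    run sysctl -w net.ipv4.conf.lo.arp_announce=2
}

# On the director: hold the VIP on the NIC and forward with -g (direct routing).
dr_director() {
    local nic="${1:-ens33}" rs
    run ip addr add "$VIP/32" dev "$nic"
    run ipvsadm -A -t "$VIP:80" -s rr
    for rs in $RS_LIST; do
        run ipvsadm -a -t "$VIP:80" -r "$rs:80" -g
    done
}

dr_realserver
dr_director ens33
```

In this mode the default gateway of each RS would point at the network's ordinary router rather than at the DIP, since responses leave the RS directly.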

Scheduling algorithms:

- rr (round robin): the scheduler hands requests to each server in turn, so every server receives an equal share.

- wrr (weighted round robin): each RS is assigned a weight, and servers with a higher weight receive proportionally more requests.

- lc (least connections): a new request goes to the server with the fewest current connections. When the real servers in the cluster have similar performance, this balances the load well.

- wlc (weighted least connections): lc with a weight assigned to each server, so the connection count is judged relative to the server's weight.
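The selection logic of these schedulers can be illustrated with two toy functions. They are simplified models written for this article, not the kernel implementation: `rr_pick` and `wlc_pick` are hypothetical names, and `wlc_pick` treats a single connection count per server, whereas IPVS distinguishes active and inactive connections.

```shell
#!/usr/bin/env bash
# Toy models of two IPVS schedulers; the real ones live in the kernel.

# rr_pick N SERVER...
# Returns the server for the N-th request (N counts from 0) by cycling
# through the list in order.
rr_pick() {
    local n=$1
    shift
    shift $(( n % $# ))
    echo "$1"
}

# wlc_pick NAME:CONNS:WEIGHT...
# Picks the server with the smallest CONNS/WEIGHT ratio. Ratios are compared
# by cross-multiplication so only integer arithmetic is needed. Ties go to
# the server listed first. Weights are assumed to be positive integers.
wlc_pick() {
    local entry name rest conns weight
    local best_name="" best_conns=0 best_weight=0
    for entry in "$@"; do
        name=${entry%%:*}
        rest=${entry#*:}
        conns=${rest%%:*}
        weight=${rest#*:}
        if [ -z "$best_name" ] ||
           [ $(( conns * best_weight )) -lt $(( best_conns * weight )) ]; then
            best_name=$name
            best_conns=$conns
            best_weight=$weight
        fi
    done
    echo "$best_name"
}

# Four requests alternate between two servers under rr.
for i in 0 1 2 3; do rr_pick "$i" RS1 RS2; done

# RS2 has more connections but three times the weight, so wlc picks it.
wlc_pick RS1:10:1 RS2:15:3
```

With every weight set to 1, `wlc_pick` behaves like lc. A rough approximation of wrr is to list a server once per unit of weight, for example `rr_pick "$i" RS1 RS1 RS2`, although the kernel interleaves servers more evenly than that.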

Configure http load balancing in DR mode of lvs

Environment:

Director:

DIP: 192.168.75.143

VIP: 192.168.75.145

RS1: 192.168.75.144

RS2: 192.168.75.142

Deployment:

Configure httpd on RS1 so that its web page is accessible

    //Turn off the firewall and SELinux
    [root@RS1 ~]# systemctl stop firewalld
    [root@RS1 ~]# systemctl disable firewalld
    [root@RS1 ~]# setenforce 0
    [root@RS1 ~]# cat /etc/selinux/config
    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #     enforcing - SELinux security policy is enforced.
    #     permissive - SELinux prints warnings instead of enforcing.
    #     disabled - No SELinux policy is loaded.
    SELINUX=disabled
    # SELINUXTYPE= can take one of three two values:
    #     targeted - Targeted processes are protected,
    #     minimum - Modification of targeted policy. Only selected processes are protected.
    #     mls - Multi Level Security protection.
    SELINUXTYPE=targeted
    //Install httpd, create a test page, and start the service
    [root@RS1 ~]# yum install -y httpd
    [root@RS1 ~]# vim /var/www/html/index.html
    [root@RS1 ~]# cat /var/www/html/index.html
    This is RS1 ip: 192.168.75.144
    [root@RS1 ~]# systemctl enable --now httpd
    //Install net-tools
    [root@RS1 ~]# yum install -y net-tools

Configure httpd on RS2 so that its web page is accessible

    //Turn off the firewall and SELinux
    [root@RS2 ~]# systemctl stop firewalld
    [root@RS2 ~]# systemctl disable firewalld
    Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.
    Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
    [root@RS2 ~]# setenforce 0
    [root@RS2 ~]# cat /etc/selinux/config
    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #     enforcing - SELinux security policy is enforced.
    #     permissive - SELinux prints warnings instead of enforcing.
    #     disabled - No SELinux policy is loaded.
    SELINUX=disabled
    # SELINUXTYPE= can take one of these three values:
    #     targeted - Targeted processes are protected,
    #     minimum - Modification of targeted policy. Only selected processes are protected.
    #     mls - Multi Level Security protection.
    SELINUXTYPE=targeted
    //Install httpd, check the test page, and start the service
    [root@RS2 ~]# yum install -y httpd
    [root@RS2 ~]# cat /var/www/html/index.html
    This is RS2 ip: 192.168.75.142
    [root@RS2 ~]# systemctl enable --now httpd
    //Install net-tools
    [root@RS2 ~]# yum install -y net-tools

Install the ipvsadm tool on the LVS host

[root@lvs ~]# yum install -y ipvsadm

Network card configuration

    //Set the default gateway of each RS to the DIP of the LVS host
    [root@RS1 ~]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         192.168.75.143  0.0.0.0         UG    100    0        0 ens33
    192.168.75.0    0.0.0.0         255.255.255.0   U     100    0        0 ens33
    [root@RS1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33
    TYPE="Ethernet"
    BOOTPROTO="static"
    NAME="ens33"
    DEVICE="ens33"
    ONBOOT="yes"
    IPADDR=192.168.75.144
    NETMASK=255.255.255.0
    GATEWAY=192.168.75.143
    DNS1=223.5.5.5
    //RS2 is configured the same way
    [root@RS2 ~]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         192.168.75.143  0.0.0.0         UG    100    0        0 ens33
    192.168.75.0    0.0.0.0         255.255.255.0   U     100    0        0 ens33
    [root@RS2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33
    TYPE="Ethernet"
    BOOTPROTO="static"
    NAME="ens33"
    DEVICE="ens33"
    ONBOOT="yes"
    IPADDR=192.168.75.142
    NETMASK=255.255.255.0
    GATEWAY=192.168.75.143
    DNS1=223.5.5.5

Deploy

Enable IP forwarding on the LVS host

    [root@lvs ~]# cat /etc/sysctl.conf
    # sysctl settings are defined through files in
    # /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.
    #
    # Vendors settings live in /usr/lib/sysctl.d/.
    # To override a whole file, create a new file with the same in
    # /etc/sysctl.d/ and put new settings there. To override
    # only specific settings, add a file with a lexically later
    # name in /etc/sysctl.d/ and put new settings there.
    #
    # For more information, see sysctl.conf(5) and sysctl.d(5).
    net.ipv4.ip_forward=1
    //Apply the setting
    [root@lvs ~]# sysctl -p
    net.ipv4.ip_forward = 1

Define the cluster on LVS

//Add a TCP virtual service on the VIP and select the round-robin (rr) scheduler

[root@lvs ~]# ipvsadm -A -t 192.168.75.145:80 -s rr

//Add the back-end RSs to the cluster. -m selects masquerading (NAT) forwarding, which matches the gateway and ip_forward settings above; direct routing would use -g instead

[root@lvs ~]# ipvsadm -a -t 192.168.75.145:80 -r 192.168.75.142:80 -m

[root@lvs ~]# ipvsadm -a -t 192.168.75.145:80 -r 192.168.75.144:80 -m

//View the rules

[root@lvs ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.75.145:80 rr

-> 192.168.75.144:80 Masq 1 0 0

-> 192.168.75.142:80 Masq 1 0 0

//verification

[root@localhost ~]# for i in $(seq 10);do curl 192.168.75.145:80;done

This is RS1 ip: 192.168.75.144

This is RS2 ip: 192.168.75.142

This is RS1 ip: 192.168.75.144

This is RS2 ip: 192.168.75.142

This is RS1 ip: 192.168.75.144

This is RS2 ip: 192.168.75.142

This is RS1 ip: 192.168.75.144
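The alternating responses above are what round robin should produce. For a longer run it is easier to count the responses per back end than to read them one by one. A minimal sketch follows; `tally_backends` is a hypothetical helper, and the sample lines fed to it are copied from the output above rather than fetched over the network.

```shell
#!/usr/bin/env bash
# tally_backends
# Reads response bodies on stdin (one per line) and prints how many times
# each distinct line occurred, as "COUNT LINE".
tally_backends() {
    sort | uniq -c | awk '{ count = $1; $1 = ""; sub(/^ /, ""); print count, $0 }'
}

# Sample input taken from the verification output in this article.
printf '%s\n' \
    'This is RS1 ip: 192.168.75.144' \
    'This is RS2 ip: 192.168.75.142' \
    'This is RS1 ip: 192.168.75.144' | tally_backends
```

Against the live cluster, the same function can be fed from the loop used above, for example `for i in $(seq 10); do curl -s 192.168.75.145:80; done | tally_backends`. With two equally weighted servers under rr, the two counts should be equal or differ by one.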