Custom thread pool

package com.sunhui.thread.CompletableFuture.util;

import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/**
 * Utility class that wraps thread pool management.
 *
 * @ClassName ThreadPoolUtil
 * @Author SunHui
 * @Date 2021/9/24 9:47 AM
 */
public class ThreadPoolUtil {

    // Lazily created singleton; volatile is required for double-checked locking
    private static volatile ThreadPollProxy mThreadPollProxy;

    // Returns the singleton proxy instance (double-checked locking)
    public static ThreadPollProxy getThreadPollProxy() {
        if (mThreadPollProxy == null) {
            synchronized (ThreadPollProxy.class) {
                if (mThreadPollProxy == null) {
                    mThreadPollProxy = new ThreadPollProxy(
                            Runtime.getRuntime().availableProcessors() * 2,
                            Runtime.getRuntime().availableProcessors() * 4,
                            30);
                }
            }
        }
        return mThreadPollProxy;
    }

    // Proxy class that manages the thread pool through ThreadPoolExecutor
    public static class ThreadPollProxy {
        // The underlying executor; Java implements thread pool management through this API
        public ThreadPoolExecutor poolExecutor;
        private int corePoolSize;
        private int maximumPoolSize;
        private long keepAliveTime;

        public ThreadPollProxy(int corePoolSize, int maximumPoolSize, long keepAliveTime) {
            this.corePoolSize = corePoolSize;
            this.maximumPoolSize = maximumPoolSize;
            this.keepAliveTime = keepAliveTime;
            poolExecutor = new ThreadPoolExecutor(
                    // Number of core threads
                    corePoolSize,
                    // Maximum number of threads
                    maximumPoolSize,
                    // How long an idle non-core thread is kept alive
                    keepAliveTime,
                    // Time unit of keepAliveTime: seconds
                    TimeUnit.SECONDS,
                    // Task queue, bounded to 20000 waiting tasks
                    new LinkedBlockingQueue<>(20000),
                    // Factory that creates the worker threads
                    Executors.defaultThreadFactory(),
                    // Rejection policy: AbortPolicy throws RejectedExecutionException
                    new ThreadPoolExecutor.AbortPolicy());
        }
    }
}

Source code:

public ThreadPoolExecutor(int corePoolSize,
                          int maximumPoolSize,
                          long keepAliveTime,
                          TimeUnit unit,
                          BlockingQueue<Runnable> workQueue,
                          ThreadFactory threadFactory,
                          RejectedExecutionHandler handler) {
    if (corePoolSize < 0 ||
        maximumPoolSize <= 0 ||
        maximumPoolSize < corePoolSize ||
        keepAliveTime < 0)
        throw new IllegalArgumentException();
    if (workQueue == null || threadFactory == null || handler == null)
        throw new NullPointerException();
    this.acc = System.getSecurityManager() == null ?
            null :
            AccessController.getContext();
    this.corePoolSize = corePoolSize;
    this.maximumPoolSize = maximumPoolSize;
    this.workQueue = workQueue;
    this.keepAliveTime = unit.toNanos(keepAliveTime);
    this.threadFactory = threadFactory;
    this.handler = handler;
}
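The argument checks at the top of this constructor can be observed from calling code. The following is a minimal sketch; the class name ConstructorChecksDemo and the helper tryCreate are made up for illustration and are not part of the original project.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, not part of the original ThreadPoolUtil code
class ConstructorChecksDemo {

    // Returns the simple name of the exception thrown by the constructor, or "none"
    static String tryCreate(int core, int max, long keepAlive, boolean nullQueue) {
        try {
            ThreadPoolExecutor pool = new ThreadPoolExecutor(
                    core, max, keepAlive, TimeUnit.SECONDS,
                    nullQueue ? null : new LinkedBlockingQueue<Runnable>(10),
                    Executors.defaultThreadFactory(),
                    new ThreadPoolExecutor.AbortPolicy());
            pool.shutdown(); // no tasks were submitted, so this terminates immediately
            return "none";
        } catch (IllegalArgumentException | NullPointerException e) {
            return e.getClass().getSimpleName();
        }
    }

    public static void main(String[] args) {
        System.out.println(tryCreate(3, 5, 30, false)); // valid arguments
        System.out.println(tryCreate(5, 3, 30, false)); // maximumPoolSize < corePoolSize
        System.out.println(tryCreate(0, 0, 30, false)); // maximumPoolSize <= 0
        System.out.println(tryCreate(3, 5, -1, false)); // negative keepAliveTime
        System.out.println(tryCreate(3, 5, 30, true));  // null workQueue
    }
}
```

Only the first call succeeds. The next three fail the range checks with IllegalArgumentException, and the last one fails the null check with NullPointerException.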

Thread pool role

- Reduce resource consumption: reusing threads improves thread utilization and avoids the cost of repeatedly creating and destroying them.

- Improve response speed: when a task arrives, an existing thread can execute it directly, without waiting for a new thread to be created.

- Improve the manageability of threads: threads are a scarce resource, and a thread pool allows unified allocation, tuning and monitoring.

Seven parameters of thread pool

- corePoolSize: the number of core threads, that is, the number of worker threads kept under normal load. By default these threads stay resident once created and are not destroyed. Once the pool already has corePoolSize threads, a new task does not create another core thread;

- maximumPoolSize: the maximum number of threads the pool may create; it must be greater than or equal to corePoolSize. When all core threads are busy and the work queue is full, the pool keeps creating new threads, but the total number of threads never exceeds maximumPoolSize;

- keepAliveTime: the idle time allowed for threads beyond the core count. Core threads are not removed, but a thread beyond the core count is destroyed once it has been idle for keepAliveTime. If there are many tasks and each one is short, you can increase this time to improve thread utilization. Otherwise a non-core thread may be terminated before the next task arrives, a new thread has to be created for that task, and it is terminated again soon after finishing; this repeated creation and destruction wastes resources. (Calling allowCoreThreadTimeOut(true) applies the same timeout to core threads as well);

- unit: the time unit of keepAliveTime. The available units are DAYS, HOURS, MINUTES, SECONDS, MILLISECONDS, MICROSECONDS and NANOSECONDS;

- workQueue: the blocking queue that holds tasks waiting to be executed. When all core threads are busy, newly submitted tasks are put into this queue. If tasks keep arriving until the queue is full, the pool creates a new thread, as long as the total stays within maximumPoolSize;

- threadFactory: the factory that creates the threads that run the tasks. We can use the default factory (Executors.defaultThreadFactory()); the threads it produces are in the same thread group, have the same priority, and are not daemon threads. We can also supply a custom thread factory according to business needs;

- handler: the task rejection policy. When the queue is full and the pool has reached maximumPoolSize, the pool is saturated, so a policy must decide what happens to a newly submitted task. The default policy is AbortPolicy, which throws an exception when a new task cannot be accepted. Since JDK 1.5, the Java thread pool framework provides four built-in policies, which are explained in detail below.
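As a small illustration of the threadFactory, keepAliveTime and unit parameters, here is a sketch with a custom thread factory. The class PoolParametersDemo, the helper namedFactory and the "worker" name prefix are assumptions made for this example, not part of the original code.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Callable;
import java.util.concurrent.Future;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class, not part of the original ThreadPoolUtil code
class PoolParametersDemo {

    // Custom factory: names threads "<prefix>-1", "<prefix>-2", ... and keeps them non-daemon
    static ThreadFactory namedFactory(String prefix) {
        AtomicInteger counter = new AtomicInteger(1);
        return runnable -> {
            Thread thread = new Thread(runnable, prefix + "-" + counter.getAndIncrement());
            thread.setDaemon(false);
            return thread;
        };
    }

    static ThreadPoolExecutor newPool() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 4,                           // corePoolSize, maximumPoolSize
                30, TimeUnit.SECONDS,           // keepAliveTime and its unit
                new ArrayBlockingQueue<>(10),   // bounded workQueue
                namedFactory("worker"),         // threadFactory
                new ThreadPoolExecutor.AbortPolicy());
        // Optional: let idle core threads time out too (requires keepAliveTime > 0)
        pool.allowCoreThreadTimeOut(true);
        return pool;
    }

    public static void main(String[] args) throws Exception {
        ThreadPoolExecutor pool = newPool();
        Callable<String> task = () -> Thread.currentThread().getName();
        Future<String> name = pool.submit(task);
        System.out.println(name.get());                                  // worker-1
        System.out.println(pool.getKeepAliveTime(TimeUnit.MILLISECONDS)); // 30000
        System.out.println(pool.allowsCoreThreadTimeOut());              // true
        pool.shutdown();
    }
}
```

Naming threads in the factory makes log output and thread dumps easier to attribute to a specific pool than the default pool-N-thread-M names.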

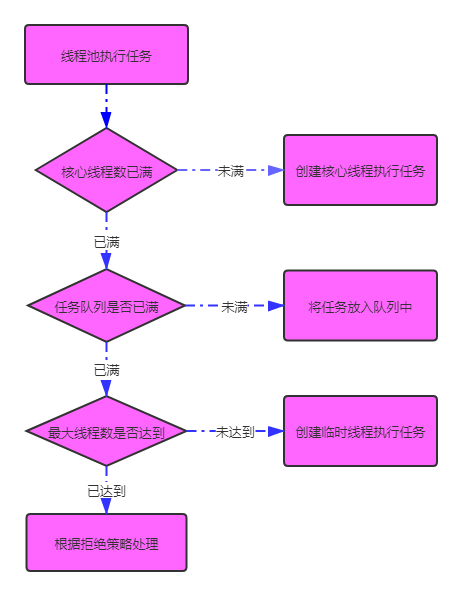

Thread pool processing flow

Four rejection strategies of thread pool

Test code:

// Create 200 tasks, each with an execution time of 1 second
List<MyTask> tasks = IntStream.range(0, 200)
        .mapToObj(i -> new MyTask(1))
        .collect(toList());

// Create a custom thread pool
ThreadPoolUtil.ThreadPollProxy threadPollProxy = ThreadPoolUtil.getThreadPollProxy();

- AbortPolicy (default policy)

public static ThreadPollProxy getThreadPollProxy() {
    if (mThreadPollProxy == null) {
        synchronized (ThreadPollProxy.class) {
            if (mThreadPollProxy == null) {
                // Small pool for the test: 3 core threads, 5 max threads, 3 s keep-alive
                mThreadPollProxy = new ThreadPollProxy(
                        3, 5, 3);
            }
        }
    }
    return mThreadPollProxy;
}

// Proxy class that manages the thread pool through ThreadPoolExecutor
public static class ThreadPollProxy {
    // The underlying executor; Java implements thread pool management through this API
    public ThreadPoolExecutor poolExecutor;
    private int corePoolSize;
    private int maximumPoolSize;
    private long keepAliveTime;

    public ThreadPollProxy(int corePoolSize, int maximumPoolSize, long keepAliveTime) {
        this.corePoolSize = corePoolSize;
        this.maximumPoolSize = maximumPoolSize;
        this.keepAliveTime = keepAliveTime;
        poolExecutor = new ThreadPoolExecutor(
                // Number of core threads
                corePoolSize,
                // Maximum number of threads
                maximumPoolSize,
                // How long an idle non-core thread is kept alive
                keepAliveTime,
                // Time unit of keepAliveTime: seconds
                TimeUnit.SECONDS,
                // Task queue, bounded to 20 waiting tasks for the test
                new LinkedBlockingQueue<>(20),
                // Factory that creates the worker threads
                Executors.defaultThreadFactory(),
                // Rejection policy: AbortPolicy throws RejectedExecutionException
                new ThreadPoolExecutor.AbortPolicy());
    }
}

With the default AbortPolicy, the run produces the following output, and the exception is thrown directly:

pool-1-thread-2 pool-1-thread-1 pool-1-thread-4 pool-1-thread-2 pool-1-thread-5 pool-1-thread-3
Exception in thread "main" java.util.concurrent.RejectedExecutionException: Task java.util.concurrent.CompletableFuture$AsyncSupply@3b0143d3 rejected from java.util.concurrent.ThreadPoolExecutor@731a74c[Running, pool size = 5, active threads = 5, queued tasks = 14, completed tasks = 13]
    at java.util.concurrent.ThreadPoolExecutor$AbortPolicy.rejectedExecution(ThreadPoolExecutor.java:2063)
    at java.util.concurrent.ThreadPoolExecutor.reject(ThreadPoolExecutor.java:830)
    at java.util.concurrent.ThreadPoolExecutor.execute(ThreadPoolExecutor.java:1379)
    at java.util.concurrent.CompletableFuture.asyncSupplyStage(CompletableFuture.java:1604)
    at java.util.concurrent.CompletableFuture.supplyAsync(CompletableFuture.java:1830)
    at com.sunhui.thread.CompletableFuture.CompletableFutureTest1.lambda$main$1(CompletableFutureTest1.java:50)
    at java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:193)
    at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1382)
    at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482)
    at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472)
    at java.util.stream.ReduceOps$ReduceOp.evaluateSequential(ReduceOps.java:708)
    at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234)
    at java.util.stream.ReferencePipeline.collect(ReferencePipeline.java:499)
    at com.sunhui.thread.CompletableFuture.CompletableFutureTest1.main(CompletableFutureTest1.java:51)
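The same rejection can be reproduced deterministically with a much smaller pool. This is a sketch under assumed settings (1 thread, 1 queue slot, tasks held back by a latch), not the configuration used in the test above; the class AbortPolicyDemo and its methods are hypothetical.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, not part of the original test code
class AbortPolicyDemo {

    // Submits blocking tasks to a tiny pool and counts how many submissions are rejected
    static int countRejected(int submissions) throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        // 1 thread + 1 queue slot: the pool can hold at most 2 unfinished tasks
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 3, TimeUnit.SECONDS,
                new LinkedBlockingQueue<>(1),
                new ThreadPoolExecutor.AbortPolicy());
        int rejected = 0;
        try {
            for (int i = 0; i < submissions; i++) {
                try {
                    pool.execute(() -> awaitQuietly(release));
                } catch (RejectedExecutionException e) {
                    rejected++; // AbortPolicy reports saturation to the submitting thread
                }
            }
        } finally {
            release.countDown(); // let the accepted tasks finish
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        }
        return rejected;
    }

    private static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("rejected = " + countRejected(5)); // prints "rejected = 3"
    }
}
```

Because the exception is thrown in the submitting thread, the caller can catch it and decide what to do with the task (retry later, log it, or fail fast).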

- CallerRunsPolicy

The output shows that one of the tasks was executed by the main thread. CallerRunsPolicy hands a task that the pool cannot accept back to the thread that submitted it, and that thread runs the task itself. Here the task was submitted by the main thread, so it was returned to the main thread for execution.

pool-1-thread-5 pool-1-thread-4 main pool-1-thread-3 pool-1-thread-4 pool-1-thread-4
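A minimal sketch of this behavior, again assuming a deliberately tiny pool (1 thread, 1 queue slot) rather than the test configuration above; the class CallerRunsDemo is hypothetical.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical demo class, not part of the original test code
class CallerRunsDemo {

    // Returns the name of the thread that ran a task submitted to a saturated pool
    static String threadOfOverflowTask() throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 3, TimeUnit.SECONDS,
                new LinkedBlockingQueue<>(1),
                new ThreadPoolExecutor.CallerRunsPolicy());
        AtomicReference<String> ranOn = new AtomicReference<>();
        try {
            pool.execute(() -> awaitQuietly(release)); // occupies the only worker thread
            pool.execute(() -> awaitQuietly(release)); // fills the only queue slot
            // The pool is saturated, so this task runs inside execute() on the calling thread
            pool.execute(() -> ranOn.set(Thread.currentThread().getName()));
        } finally {
            release.countDown();
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        }
        return ranOn.get();
    }

    private static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(threadOfOverflowTask()); // prints "main" when run from main
    }
}
```

Note that the submitting thread is busy while it runs the task, which slows down further submissions; this acts as a simple form of back-pressure.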

- DiscardPolicy

The rejected task is simply discarded: no exception is thrown and nothing else happens.

pool-1-thread-5 pool-1-thread-1 pool-1-thread-2 pool-1-thread-3 pool-1-thread-4
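The silent loss is easier to see when the executed tasks are counted. The sketch below assumes a tiny pool (1 thread, 1 queue slot) and a hypothetical class DiscardPolicyDemo; it is not the configuration used in the test above.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class, not part of the original test code
class DiscardPolicyDemo {

    // Submits `submissions` tasks and returns how many of them actually ran
    static int countExecuted(int submissions) throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        AtomicInteger executed = new AtomicInteger();
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 3, TimeUnit.SECONDS,
                new LinkedBlockingQueue<>(1),
                new ThreadPoolExecutor.DiscardPolicy());
        try {
            for (int i = 0; i < submissions; i++) {
                // No exception here, even when the task is dropped
                pool.execute(() -> {
                    awaitQuietly(release);
                    executed.incrementAndGet();
                });
            }
        } finally {
            release.countDown();
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        }
        return executed.get();
    }

    private static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("executed = " + countExecuted(5)); // prints "executed = 2"
    }
}
```

Five tasks are submitted, two run, and the submitter receives no signal about the other three.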

- DiscardOldestPolicy

The pool discards the oldest task waiting at the head of the queue and then retries submitting the new task. No exception is thrown, so the dropped task disappears silently. The difference from DiscardPolicy lies only in which task is dropped, so it is hard to see in the output; I tried for a long time and could not observe a visible effect with this configuration.

pool-1-thread-4 pool-1-thread-5 pool-1-thread-2 pool-1-thread-3 pool-1-thread-1
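Labeling the tasks makes the effect visible. The following sketch assumes a tiny pool (1 thread, 1 queue slot) and three tasks named A, B and C; the class DiscardOldestDemo is hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, not part of the original test code
class DiscardOldestDemo {

    // Returns the labels of the tasks that actually ran, in execution order
    static List<String> executedLabels() throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        List<String> ran = Collections.synchronizedList(new ArrayList<>());
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 3, TimeUnit.SECONDS,
                new LinkedBlockingQueue<>(1),
                new ThreadPoolExecutor.DiscardOldestPolicy());
        try {
            // A starts running immediately and blocks the only worker thread
            pool.execute(() -> { awaitQuietly(release); ran.add("A"); });
            // B waits in the only queue slot
            pool.execute(() -> ran.add("B"));
            // The pool is saturated: B (the oldest queued task) is dropped, C takes its slot
            pool.execute(() -> ran.add("C"));
        } finally {
            release.countDown();
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        }
        return new ArrayList<>(ran);
    }

    private static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(executedLabels()); // prints "[A, C]"
    }
}
```

Task B never runs and nothing reports its loss, which is why this policy only suits work where newer tasks supersede older ones.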

Summary: when the configured rejection policy silently drops tasks (DiscardPolicy or DiscardOldestPolicy), problems are hard to detect. It is best to avoid these policies and use the default AbortPolicy, which reports the rejection as an exception.

Role of blocking queue in thread pool

- An ordinary queue can only act as a buffer of limited length: once the buffer is full, a new task cannot be kept. A blocking queue can make the producer wait, so the task that is trying to join the queue is retained.

- A blocking queue ensures that when the task queue is empty, the thread trying to fetch a task is blocked; the thread enters the waiting state and releases CPU resources.

- The blocking and wake-up behavior is built into the blocking queue and needs no additional handling. When there is no task, the thread pool suspends the thread with the queue's take method, which keeps the core threads alive without occupying the CPU.
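The blocking behavior of take can be observed with a plain thread and a LinkedBlockingQueue. This is a minimal sketch outside any thread pool; the class BlockingTakeDemo is hypothetical.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, not part of the original code
class BlockingTakeDemo {

    // Returns the state of a consumer thread that called take() on an empty queue
    static Thread.State stateWhileQueueEmpty() throws InterruptedException {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        Thread consumer = new Thread(() -> {
            try {
                queue.take(); // blocks until an element is available
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        // Poll (for at most 2 s) until the consumer has parked inside take()
        long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(2);
        Thread.State state = consumer.getState();
        while (state != Thread.State.WAITING && System.nanoTime() < deadline) {
            Thread.sleep(10);
            state = consumer.getState();
        }

        queue.put("task");   // wakes the consumer up
        consumer.join(2000); // the consumer returns from take() and exits
        return state;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(stateWhileQueueEmpty()); // prints "WAITING"
    }
}
```

A thread in the WAITING state is parked and is not scheduled on a CPU, which is how idle core threads stay alive at no CPU cost.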

Why are tasks queued instead of creating temporary threads when the core threads are all busy

- Creating a new thread requires acquiring the pool's global lock. While it is held, other threads have to block, which lowers overall execution efficiency. Buffering tasks in the queue avoids the overhead of creating and destroying temporary threads.

- For example, a factory has 10 regular workers (core threads) and can recruit up to 10 temporary workers. If the tasks exceed what the regular workers can handle at once (tasks > core), the factory leader (the thread pool) does not recruit more workers right away. The same 10 regular workers carry on, and a slight backlog of tasks is allowed (put in the queue); working steadily, they will finish it sooner or later. If the tasks keep increasing and exceed the regular workers' tolerance for overtime (the queue is full), temporary workers have to be recruited. If the tasks still cannot be completed in time after adding temporary workers, the leader rejects the new ones (applies the rejection policy).

Summary

- When a new task arrives, first check whether the current number of threads is below the number of core threads. If so, create a new thread to execute the task. If not, check whether the queue is full. If the queue is not full, put the task into the queue. If it is full, create a new thread to execute the task, unless the pool has already reached the maximum number of threads, in which case the task is rejected according to the configured policy.

- When the tasks in the queue have been completed, if the number of threads in the pool is greater than the number of core threads, the excess threads are destroyed once they have been idle for keepAliveTime, until the number of threads equals the number of core threads. The remaining core threads are not destroyed; they stay blocked, waiting for new tasks.
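The two points above can be traced step by step. The sketch below assumes a pool with 1 core thread, at most 2 threads, 1 queue slot and a 100 ms keepAliveTime, and records the pool state after each submission; the class PoolFlowDemo and these settings are chosen for illustration only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, not part of the original code
class PoolFlowDemo {

    // Records "poolSize/queueSize" (or "rejected") after each of four submissions,
    // then the pool size once the extra thread has been idle longer than keepAliveTime
    static List<String> trace() throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 2, 100, TimeUnit.MILLISECONDS, // core 1, max 2, short keepAliveTime
                new LinkedBlockingQueue<>(1),     // room for one waiting task
                new ThreadPoolExecutor.AbortPolicy());
        List<String> steps = new ArrayList<>();
        try {
            for (int i = 0; i < 4; i++) {
                try {
                    pool.execute(() -> awaitQuietly(release));
                    steps.add(pool.getPoolSize() + "/" + pool.getQueue().size());
                } catch (RejectedExecutionException e) {
                    steps.add("rejected");
                }
            }
            release.countDown(); // let the three accepted tasks finish
            // Wait (for at most 5 s) until the idle non-core thread has been destroyed
            long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
            while (pool.getPoolSize() > 1 && System.nanoTime() < deadline) {
                Thread.sleep(20);
            }
            steps.add("idle:" + pool.getPoolSize());
        } finally {
            release.countDown();
            pool.shutdown();
        }
        return steps;
    }

    private static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(trace()); // prints "[1/0, 1/1, 2/1, rejected, idle:1]"
    }
}
```

Reading the trace: the first task starts a core thread, the second is queued, the third finds the queue full and starts a non-core thread, the fourth is rejected, and after the work is done the pool shrinks back to its single core thread.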