Functions of the Three Synchronizers

This article introduces three thread synchronizers from java.util.concurrent (JUC). Their functions are as follows:

- CountDownLatch: lets one thread wait until a group of sub-threads has finished, so that it can then summarize their results. It keeps an internal counter; each sub-thread calls countDown() when it finishes, which decrements the counter and returns immediately.

- CyclicBarrier: makes a group of threads wait for one another until all of them have reached a common barrier point, then lets them all continue together. Once the barrier has tripped, its state is reset and it can be reused.

- Semaphore: the familiar semaphore. It also has an internal counter, but one that can be incremented. The number of threads to synchronize does not have to be known when the semaphore is created; it is specified later, as the argument to acquire().

CountDownLatch

CountDownLatch lets the main thread wait until all sub-threads have finished before it summarizes their work. It keeps an internal counter; each sub-thread calls countDown() when it finishes, which decrements the counter and returns. Let's start with a simple usage example:

    import java.util.concurrent.CountDownLatch;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    // Create a CountDownLatch instance with a count of 2
    private static CountDownLatch countDownLatch = new CountDownLatch(2);

    public static void main(String[] args) throws InterruptedException {
        ExecutorService executorService = Executors.newFixedThreadPool(2);

        // Submit task A to the thread pool
        executorService.submit(new Runnable() {
            @Override
            public void run() {
                try {
                    Thread.sleep(1000);
                    System.out.println("child threadOne over!");
                } catch (InterruptedException e) {
                    e.printStackTrace();
                } finally {
                    countDownLatch.countDown();
                }
            }
        });

        // Submit task B to the thread pool
        executorService.submit(new Runnable() {
            @Override
            public void run() {
                try {
                    Thread.sleep(1000);
                    System.out.println("child threadTwo over!");
                } catch (InterruptedException e) {
                    e.printStackTrace();
                } finally {
                    countDownLatch.countDown();
                }
            }
        });

        System.out.println("wait all child thread over!");
        // Block until both sub-threads have finished, then return
        countDownLatch.await();
        System.out.println("all child thread over!");
        executorService.shutdown();
    }

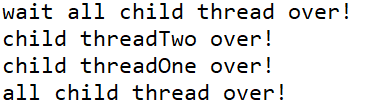

A thread pool is used here, and two tasks are submitted to it. The main thread then calls the await() method of CountDownLatch and waits for both sub-threads to finish.

In the output, "wait all child thread over!" comes first and "all child thread over!" comes last. The "child threadOne over!" and "child threadTwo over!" lines appear between them in either order, because that order depends on how the thread pool schedules the two tasks. The final line can only be printed after await() returns, so the code after await() acts as a final summary step.

Let's take a look at how CountDownLatch is built. It has a private inner class, Sync, which extends the AbstractQueuedSynchronizer (AQS) abstract class. The constructor stores the initial count in the AQS state variable, so state serves as the counter.

    public CountDownLatch(int count) {
        if (count < 0) throw new IllegalArgumentException("count < 0");
        this.sync = new Sync(count);
    }

    // Constructor of the inner class Sync
    Sync(int count) {
        setState(count);
    }

Its await() method also delegates to sync, which calls the acquireSharedInterruptibly method of AQS:

    public void await() throws InterruptedException {
        sync.acquireSharedInterruptibly(1);
    }

The code of this method is as follows:

    public final void acquireSharedInterruptibly(int arg)
            throws InterruptedException {
        // Throw an exception if the thread has been interrupted
        if (Thread.interrupted())
            throw new InterruptedException();
        // Check whether the counter is 0. If it is, return;
        // otherwise enter the AQS queue and wait
        if (tryAcquireShared(arg) < 0)
            doAcquireSharedInterruptibly(arg);
    }

The method is simple: it checks the counter. If the counter is 0, await() returns immediately; otherwise the calling thread is placed in the AQS wait queue. Later, when the counter reaches 0, CountDownLatch calls the AQS release method, which wakes up the threads blocked in await() so that they return.
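The two branches described above can be observed from the outside. The following is a minimal sketch, not part of the original example: the class name LatchAwaitSketch and the helper awaitBriefly are made up for illustration, and the timed overload await(long, TimeUnit) is used instead of the untimed await() so that the blocking case ends on its own.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

class LatchAwaitSketch {
    // Hypothetical helper: returns true if the latch opened before the
    // 100 ms timeout elapsed, false if the caller was still blocked.
    static boolean awaitBriefly(CountDownLatch latch) {
        try {
            return latch.await(100, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        CountDownLatch open = new CountDownLatch(0);   // counter already 0
        CountDownLatch closed = new CountDownLatch(1); // counter still 1

        System.out.println(awaitBriefly(open));   // true: returns at once
        System.out.println(awaitBriefly(closed)); // false: queued until timeout

        closed.countDown();                       // counter 1 -> 0
        System.out.println(awaitBriefly(closed)); // true: nothing left to wait for
    }
}
```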

The implementation of the above logic is in the countDown() method:

    public void countDown() {
        sync.releaseShared(1);
    }

There is not much to it: it simply calls the releaseShared method of AQS through sync.

    public final boolean releaseShared(int arg) {
        if (tryReleaseShared(arg)) {  // Decrement the counter by 1
            doReleaseShared();        // AQS release method: wakes up the threads blocked in await()
            return true;
        }
        return false;
    }

Let's take a look at tryReleaseShared(), the key method that decrements the counter:

    protected boolean tryReleaseShared(int releases) {
        // Retry the CAS in a loop until it succeeds in decrementing state by 1
        for (;;) {
            // Get the current state value
            int c = getState();
            // If state is already 0, return false: the counter cannot go below 0
            if (c == 0)
                return false;
            int nextc = c - 1;
            // If the CAS succeeds, return true when the new value is 0, false otherwise
            if (compareAndSetState(c, nextc))
                return nextc == 0;
        }
    }

The check for a state of 0 is there so that the counter does not become negative if other threads call countDown() after it has already reached 0.
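The effect of that zero check can be seen through the public API. This is a small sketch under assumed names (LatchFloorSketch and countAfter are not from the original article): it calls countDown() more often than the initial count and reads the counter back with getCount().

```java
import java.util.concurrent.CountDownLatch;

class LatchFloorSketch {
    // Hypothetical helper: calls countDown() `calls` times on a latch that
    // starts at `initial` and returns the remaining count.
    static long countAfter(int initial, int calls) {
        CountDownLatch latch = new CountDownLatch(initial);
        for (int i = 0; i < calls; i++) {
            latch.countDown();
        }
        return latch.getCount();
    }

    public static void main(String[] args) {
        System.out.println(countAfter(2, 1)); // 1
        System.out.println(countAfter(2, 5)); // 0: the extra calls are no-ops
    }
}
```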

CountDownLatch is more flexible and convenient than join() for synchronizing threads. join() needs a reference to the Thread object and waits for that thread to terminate, which does not fit threads managed by a pool. With CountDownLatch, the counter value is set at construction, each thread calls countDown() to decrement it, and when it reaches 0 the threads blocked in await() are released.
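To illustrate the "summary after all sub-threads finish" pattern with pooled threads, here is a hedged sketch. The class LatchSummarySketch, the method parallelSum and the way the range is split are all assumptions made for this example; only CountDownLatch, ExecutorService and AtomicLong are real JUC classes.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicLong;

class LatchSummarySketch {
    // Hypothetical helper: sums 1..n using `workers` pool tasks and returns
    // the total only after every task has counted down.
    static long parallelSum(int n, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        CountDownLatch done = new CountDownLatch(workers);
        AtomicLong total = new AtomicLong();
        try {
            for (int w = 0; w < workers; w++) {
                final int start = w + 1;
                pool.execute(() -> {
                    try {
                        long part = 0;
                        // Worker w handles start, start + workers, start + 2 * workers, ...
                        for (int i = start; i <= n; i += workers) {
                            part += i;
                        }
                        total.addAndGet(part);
                    } finally {
                        done.countDown(); // always count down, even on failure
                    }
                });
            }
            done.await();       // blocks until the counter reaches 0
            return total.get(); // the "summary" step
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(parallelSum(100, 4)); // 5050
    }
}
```

No Thread reference is needed here, which is the practical difference from join(): the pool owns the threads, and the latch only tracks completed tasks.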

CyclicBarrier

Function: a group of threads waits until all of them have reached a common point (the barrier), and then they all continue together. After the barrier trips, its state is reset, so it can be reused. Again, let's start with a usage example:

    import java.util.concurrent.CyclicBarrier;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    // Create a CyclicBarrier for 2 parties, with a task that runs
    // once all sub-threads have reached the barrier
    private static CyclicBarrier cyclicBarrier = new CyclicBarrier(2, new Runnable() {
        @Override
        public void run() {
            System.out.println(Thread.currentThread() + "task1 merge result");
        }
    });

    public static void main(String[] args) throws InterruptedException {
        ExecutorService executorService = Executors.newFixedThreadPool(2);

        // Submit task A to the thread pool
        executorService.submit(new Runnable() {
            @Override
            public void run() {
                try {
                    System.out.println(Thread.currentThread() + "task1-1");
                    System.out.println(Thread.currentThread() + "enter in barrier");
                    cyclicBarrier.await();
                    System.out.println(Thread.currentThread() + "enter out barrier");
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        });

        // Submit task B to the thread pool
        executorService.submit(new Runnable() {
            @Override
            public void run() {
                try {
                    System.out.println(Thread.currentThread() + "task1-2");
                    System.out.println(Thread.currentThread() + "enter in barrier");
                    cyclicBarrier.await();
                    System.out.println(Thread.currentThread() + "enter out barrier");
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        });

        executorService.shutdown();
    }

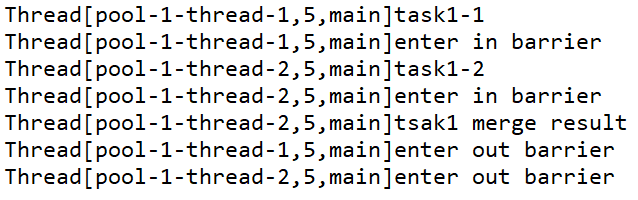

A thread pool with a capacity of 2 is created here as well, and two tasks are submitted to it. The result after execution is as follows:

It can be seen that thread 1 runs first. When it reaches await(), it stops and waits for thread 2 to reach await() as well. The barrier then runs the task that was registered in the CyclicBarrier constructor (printing the merge result), and after that both threads leave the barrier.

So what about its reusability? Let's take another example:

    // Create a CyclicBarrier for 2 parties, with a task that runs
    // once all sub-threads have reached the barrier
    private static CyclicBarrier cyclicBarrier = new CyclicBarrier(2, new Runnable() {
        @Override
        public void run() {
            System.out.println(Thread.currentThread() + "task2 merge result");
        }
    });

    public static void main(String[] args) throws InterruptedException {
        ExecutorService executorService = Executors.newFixedThreadPool(2);

        // Submit task A to the thread pool
        executorService.submit(new Runnable() {
            @Override
            public void run() {
                try {
                    System.out.println(Thread.currentThread() + "step1");
                    cyclicBarrier.await();
                    System.out.println(Thread.currentThread() + "step2");
                    cyclicBarrier.await();
                    System.out.println(Thread.currentThread() + "step3");
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        });

        // Submit task B to the thread pool
        executorService.submit(new Runnable() {
            @Override
            public void run() {
                try {
                    System.out.println(Thread.currentThread() + "step1");
                    cyclicBarrier.await();
                    System.out.println(Thread.currentThread() + "step2");
                    cyclicBarrier.await();
                    System.out.println(Thread.currentThread() + "step3");
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        });

        executorService.shutdown();
    }

Compared with the first example, the only change is that the work in each thread's try block has been split into three steps. We require that step 2 start only after both threads have completed step 1, and that step 3 start only after both have completed step 2. CyclicBarrier makes this possible, as shown above. The output results are as follows:

You can see that the steps are executed in order, and that the CyclicBarrier prints the merge result twice. This shows that the run method of the Runnable passed to CyclicBarrier is triggered every time the barrier point is reached. Note also that the code above never resets the CyclicBarrier explicitly, so the reset must happen automatically when the barrier point is reached.
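The claim that the barrier action fires once per barrier point, with no explicit reset, can be checked by counting. The sketch below is an illustration only: BarrierReuseSketch and countTrips are made-up names, and plain threads with join() are used instead of a pool just to keep the example short.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

class BarrierReuseSketch {
    // Hypothetical helper: sends `parties` threads through `rounds` barrier
    // points and returns how many times the barrier action ran.
    static int countTrips(int parties, int rounds) {
        AtomicInteger trips = new AtomicInteger();
        CyclicBarrier barrier = new CyclicBarrier(parties, () -> trips.incrementAndGet());

        Thread[] threads = new Thread[parties];
        for (int t = 0; t < parties; t++) {
            threads[t] = new Thread(() -> {
                try {
                    for (int r = 0; r < rounds; r++) {
                        barrier.await(); // same barrier object in every round
                    }
                } catch (InterruptedException | BrokenBarrierException e) {
                    Thread.currentThread().interrupt();
                }
            });
            threads[t].start();
        }
        try {
            for (Thread thread : threads) {
                thread.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return trips.get();
    }

    public static void main(String[] args) {
        System.out.println(countTrips(3, 4)); // 4: one action run per round
    }
}
```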

With that intuition in place, let's look at its source code.

The CyclicBarrier class does not extend any class or implement any interface. It has a private nested class called Generation, which likewise extends no class and implements no interface. CyclicBarrier has the following fields:

    // The barrier is built on an exclusive lock, so underneath it is still based on AQS
    private final ReentrantLock lock = new ReentrantLock();
    // Condition variable on which threads wait until the barrier trips
    private final Condition trip = lock.newCondition();
    // Number of threads that must call await() before the barrier trips
    // and the threads can continue
    private final int parties;
    // The Runnable passed to the constructor; its run method is triggered
    // when the threads break through the barrier
    private final Runnable barrierCommand;
    // The current generation
    private Generation generation = new Generation();
    // Number of parties still to arrive in the current generation. It starts at
    // parties and counts down to 0; parties itself is never decremented, so that
    // the barrier can be reused
    private int count;

There is nothing unusual about the constructors, so I won't discuss them here. Look at the await() method:

    public int await() throws InterruptedException, BrokenBarrierException {
        try {
            return dowait(false, 0L);
        } catch (TimeoutException toe) {
            throw new Error(toe); // cannot happen
        }
    }

It simply calls dowait(), so let's look at that method:

    private int dowait(boolean timed, long nanos)
            throws InterruptedException, BrokenBarrierException, TimeoutException {
        // Acquire the lock
        final ReentrantLock lock = this.lock;
        lock.lock();
        try {
            // Get the current generation
            final Generation g = generation;

            if (g.broken)
                throw new BrokenBarrierException();

            if (Thread.interrupted()) {
                breakBarrier();
                throw new InterruptedException();
            }

            // Important: decrement count. If it is now 0, enough threads have
            // reached the barrier point: run barrierCommand and reset the state
            int index = --count;
            if (index == 0) {  // tripped
                // ranAction records whether the run method of barrierCommand completed
                boolean ranAction = false;
                try {
                    final Runnable command = barrierCommand;
                    if (command != null)
                        command.run();
                    ranAction = true;
                    // Wake up the other threads blocked in await() and reset the CyclicBarrier
                    nextGeneration();
                    return 0;
                } finally {
                    if (!ranAction)
                        breakBarrier();
                }
            }

            // count has not reached 0
            for (;;) {
                try {
                    // No timeout set
                    if (!timed)
                        trip.await();
                    // Timeout set
                    else if (nanos > 0L)
                        nanos = trip.awaitNanos(nanos);
                } catch (InterruptedException ie) {
                    if (g == generation && ! g.broken) {
                        breakBarrier();
                        throw ie;
                    } else {
                        // We're about to finish waiting even if we had not
                        // been interrupted, so this interrupt is deemed to
                        // "belong" to subsequent execution.
                        Thread.currentThread().interrupt();
                    }
                }

                if (g.broken)
                    throw new BrokenBarrierException();

                if (g != generation)
                    return index;

                if (timed && nanos <= 0L) {
                    breakBarrier();
                    throw new TimeoutException();
                }
            }
        } finally {
            // Release the lock
            lock.unlock();
        }
    }

This long method is the core of CyclicBarrier. The generation variable marks which "generation" the barrier is in: one generation corresponds to one cycle in which the number of threads that have called await() grows from 0 to parties. Each call first decrements count. If count reaches 0, the thread runs the run method of barrierCommand and calls nextGeneration() to start the next round, which wakes up all threads blocked in await() on the condition queue and resets the state of the CyclicBarrier. If count has not reached 0, the thread calls trip.await() and is placed on the condition queue.

See if the code of nextGeneration is as we said above:

    private void nextGeneration() {
        // Wake up all threads waiting in the condition queue
        trip.signalAll();
        // Reset the state
        count = parties;
        generation = new Generation();
    }

Such is the case. This is the core code of the CyclicBarrier class.
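To make the lock, condition, count and generation mechanism easier to follow, here is a deliberately stripped-down sketch of the same idea. It is not the JDK implementation: the class MiniBarrier, its trips field and the runRounds helper are invented for this example, and the barrier action, timeouts and broken-barrier handling of the real class are all left out.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

class MiniBarrier {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition trip = lock.newCondition();
    private final int parties;
    private int count;                        // parties still to arrive
    private Object generation = new Object(); // identity marks the current round
    private int trips;                        // completed rounds (demo only)

    MiniBarrier(int parties) {
        this.parties = parties;
        this.count = parties;
    }

    // Blocks until `parties` threads have called await() in this generation.
    // Returns the arrival index; 0 means "last to arrive".
    int await() throws InterruptedException {
        lock.lock();
        try {
            Object g = generation;
            int index = --count;
            if (index == 0) {             // last arrival: trip the barrier
                trips++;
                trip.signalAll();         // wake everyone in the condition queue
                count = parties;          // reset for reuse
                generation = new Object();
                return 0;
            }
            while (g == generation) {     // wait until the generation changes
                trip.await();
            }
            return index;
        } finally {
            lock.unlock();
        }
    }

    int trips() {
        lock.lock();
        try {
            return trips;
        } finally {
            lock.unlock();
        }
    }

    // Hypothetical driver: runs `parties` threads through `rounds` rounds.
    static int runRounds(int parties, int rounds) {
        MiniBarrier barrier = new MiniBarrier(parties);
        Thread[] threads = new Thread[parties];
        for (int t = 0; t < parties; t++) {
            threads[t] = new Thread(() -> {
                try {
                    for (int r = 0; r < rounds; r++) {
                        barrier.await();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            threads[t].start();
        }
        try {
            for (Thread thread : threads) {
                thread.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return barrier.trips();
    }

    public static void main(String[] args) {
        System.out.println(runRounds(3, 5)); // 5
    }
}
```

Comparing the generation reference instead of re-reading count is what lets a fast thread enter the next round before the slower waiters of the previous round have woken up.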

Semaphore

The advantage of Semaphore over the other two synchronizers is that its counter can be incremented. The number of threads to synchronize does not need to be fixed up front; it is given as an argument when acquire is called, which makes Semaphore more flexible.

Let's use Semaphore to implement the first CountDownLatch example: start two sub-threads, let them run, and have the main thread continue only after both have finished:

    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;
    import java.util.concurrent.Semaphore;

    // Create a Semaphore with 0 initial permits
    private static Semaphore semaphore = new Semaphore(0);

    public static void main(String[] args) throws InterruptedException {
        ExecutorService executorService = Executors.newFixedThreadPool(2);

        // Submit task A to the thread pool
        executorService.submit(new Runnable() {
            @Override
            public void run() {
                try {
                    System.out.println(Thread.currentThread() + "over");
                    semaphore.release();
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        });

        // Submit task B to the thread pool
        executorService.submit(new Runnable() {
            @Override
            public void run() {
                try {
                    System.out.println(Thread.currentThread() + "over");
                    semaphore.release();
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        });

        // Block until both sub-threads have released a permit
        semaphore.acquire(2);
        System.out.println("all child thread over");
        executorService.shutdown();
    }

The release() method increases the semaphore's counter by 1. The acquire(2) call blocks the calling thread until 2 permits are available and then takes both of them, which brings the counter back to 0.
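Because the number passed to acquire is chosen at call time, the same pattern works for any number of workers. The sketch below generalizes the example under assumed names (SemaphoreWaitSketch and permitsLeftAfterWait are not from the article) and returns the remaining permit count so the effect of acquire can be inspected.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;

class SemaphoreWaitSketch {
    // Hypothetical helper: starts `workers` tasks that each release one
    // permit, waits for all of them with acquire(workers), and returns the
    // number of permits left afterwards.
    static int permitsLeftAfterWait(int workers) {
        Semaphore semaphore = new Semaphore(0);
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            for (int i = 0; i < workers; i++) {
                pool.execute(() -> semaphore.release()); // counter + 1 per task
            }
            semaphore.acquire(workers); // blocks until `workers` permits exist
            return semaphore.availablePermits();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(permitsLeftAfterWait(2)); // 0
        System.out.println(permitsLeftAfterWait(5)); // 0
    }
}
```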

Now let's look at the source code of Semaphore. Like CountDownLatch, it has an inner Sync class that extends the AQS abstract class. The difference is that this Sync is itself abstract and has two implementations: NonfairSync and FairSync. As the names suggest, they implement the non-fair strategy and the fair strategy respectively.

Here is the difference between the two strategies. Suppose one thread is already waiting in the blocking queue, and a second thread arrives just as a permit is released, so that the two compete for it. The fair strategy guarantees that the thread that has been waiting gets the permit first; the non-fair strategy makes no such guarantee. Semaphore is non-fair by default, as the constructor shows:

    public Semaphore(int permits) {
        sync = new NonfairSync(permits);
    }

To choose the fair or non-fair strategy explicitly:

    public Semaphore(int permits, boolean fair) {
        sync = fair ? new FairSync(permits) : new NonfairSync(permits);
    }

If the parameter is true, the semaphore is fair; otherwise, it is non-fair.
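The chosen strategy can be read back through the public isFair() method. A minimal sketch follows; the class FairnessFlagSketch and its helper are illustrative names only.

```java
import java.util.concurrent.Semaphore;

class FairnessFlagSketch {
    // Hypothetical helper: reports the fairness of a semaphore built with
    // the one-argument constructor.
    static boolean defaultIsFair() {
        return new Semaphore(1).isFair();
    }

    public static void main(String[] args) {
        System.out.println(defaultIsFair());                  // false
        System.out.println(new Semaphore(1, true).isFair());  // true
        System.out.println(new Semaphore(1, false).isFair()); // false
    }
}
```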

Next, let's see the implementation of several key methods.

acquire method: the current thread calls this method to obtain one permit. If the semaphore's current count is greater than 0, the count is reduced by 1 and the method returns immediately. If the count is 0, the current thread is placed in the AQS blocking queue.
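The counter behaviour just described can be traced step by step. In this sketch (the names PermitCountSketch and trace are assumptions), acquireUninterruptibly() stands in for acquire() only to avoid the checked InterruptedException, and the non-blocking tryAcquire() is used at a count of 0 so that the example does not hang where acquire() would block.

```java
import java.util.concurrent.Semaphore;

class PermitCountSketch {
    // Hypothetical helper: records the permit count after two acquisitions
    // and whether a third, non-blocking attempt succeeded.
    static String trace() {
        Semaphore semaphore = new Semaphore(2);

        semaphore.acquireUninterruptibly();      // count 2 -> 1, returns at once
        int afterFirst = semaphore.availablePermits();

        semaphore.acquireUninterruptibly();      // count 1 -> 0, returns at once
        int afterSecond = semaphore.availablePermits();

        // At count 0 a plain acquire() would block, so probe with tryAcquire()
        boolean third = semaphore.tryAcquire();

        return afterFirst + "," + afterSecond + "," + third;
    }

    public static void main(String[] args) {
        System.out.println(trace()); // 1,0,false
    }
}
```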

    public void acquire() throws InterruptedException {
        // Passing 1 means: obtain 1 permit
        sync.acquireSharedInterruptibly(1);
    }

    public final void acquireSharedInterruptibly(int arg)
            throws InterruptedException {
        // Throw an exception if the thread has been interrupted
        if (Thread.interrupted())
            throw new InterruptedException();
        // Otherwise, call the Sync subclass method to try to get the permits
        if (tryAcquireShared(arg) < 0)
            // Put the thread in the blocking queue if that failed
            doAcquireSharedInterruptibly(arg);
    }

The tryAcquireShared method in the above code is implemented by the two subclasses of Sync. The non-fair strategy is as follows:

    static final class NonfairSync extends Sync {
        private static final long serialVersionUID = -2694183684443567898L;

        NonfairSync(int permits) {
            super(permits);
        }

        protected int tryAcquireShared(int acquires) {
            // Delegates to the method below
            return nonfairTryAcquireShared(acquires);
        }
    }

    // Defined in the abstract class Sync
    final int nonfairTryAcquireShared(int acquires) {
        for (;;) {
            // Get the current permit count
            int available = getState();
            // Compute the count that would remain
            int remaining = available - acquires;
            // Return if there are not enough permits, or if the CAS succeeds
            if (remaining < 0 ||
                compareAndSetState(available, remaining))
                return remaining;
        }
    }

In other words, the non-fair version simply tries to grab the permits: whichever thread gets its CAS in first wins, regardless of how long other threads have been waiting. FairSync needs an extra check to guarantee fairness:

    protected int tryAcquireShared(int acquires) {
        for (;;) {
            // If other threads are already waiting ahead in the blocking queue, return -1 directly
            if (hasQueuedPredecessors())
                return -1;
            int available = getState();
            int remaining = available - acquires;
            if (remaining < 0 ||
                compareAndSetState(available, remaining))
                return remaining;
        }
    }

That is, it checks whether another thread is queued ahead of the current thread, waiting for permits. If so, the current thread gives up this attempt instead of competing with the waiting threads.

Finally, let's look at the release() method. Its logic is simple: add 1 to the semaphore's count, then check whether any threads are waiting in the blocking queue. If there are, wake up a waiting thread whose requested number of permits can now be satisfied.

    public void release() {
        // Without an argument, release one permit
        sync.releaseShared(1);
    }

    public final boolean releaseShared(int arg) {
        // Try to release the permits; if that succeeds
        if (tryReleaseShared(arg)) {
            // Wake up (unpark) the first suspended thread in the AQS queue
            doReleaseShared();
            return true;
        }
        return false;
    }

    protected final boolean tryReleaseShared(int releases) {
        for (;;) {
            // Get the current permit count
            int current = getState();
            int next = current + releases;
            // next < current means the addition overflowed: throw an error
            if (next < current) // overflow
                throw new Error("Maximum permit count exceeded");
            // Set the new permit count
            if (compareAndSetState(current, next))
                return true;
        }
    }
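Since tryReleaseShared only adds to the state and checks for overflow, release() is not capped at the initial permit count. The following sketch shows this with assumed names (ReleaseGrowsSketch and permitsAfterReleases are not part of the article).

```java
import java.util.concurrent.Semaphore;

class ReleaseGrowsSketch {
    // Hypothetical helper: creates a semaphore with `initial` permits, calls
    // release() `releases` times without any acquire, and returns the count.
    static int permitsAfterReleases(int initial, int releases) {
        Semaphore semaphore = new Semaphore(initial);
        for (int i = 0; i < releases; i++) {
            semaphore.release(); // state + 1 via CAS
        }
        return semaphore.availablePermits();
    }

    public static void main(String[] args) {
        System.out.println(permitsAfterReleases(1, 3)); // 4
        System.out.println(permitsAfterReleases(0, 2)); // 2
    }
}
```

This is the sense in which the counter is "incremental": a thread does not need to hold a permit in order to release one.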

The above covers the use of three thread synchronizers in JUC, together with an analysis of parts of their source code. Next time I will probably write some notes about AQS and its blocking queue. After all, almost all of the locks in JUC are built on AQS.