Problem description

- Use hint to specify the broadcast table, but cannot perform the specified broadcast;

preparation in advance

hive> select * from test.tmp_demo_small; OK tmp_demo_small.pas_phone tmp_demo_small.age 156 20 157 22 158 15 hive> analyze table test.tmp_demo_small compute statistics; Table test.tmp_demo_small stats: [numFiles=1, numRows=3, totalSize=21, rawDataSize=18] hive> select * from test.tmp_demo_big; OK tmp_demo_big.pas_phone tmp_demo_big.ord_id tmp_demo_big.dt 156 aa1 20191111 156 aa2 20191112 157 bb1 20191111 157 bb2 20191112 157 bb3 20191113 157 bb4 20191114 158 cc1 20191111 158 cc2 20191112 158 cc3 20191113 hive> analyze table test.tmp_demo_big compute statistics; Table test.tmp_demo_big stats: [numFiles=1, numRows=9, totalSize=153, rawDataSize=144]

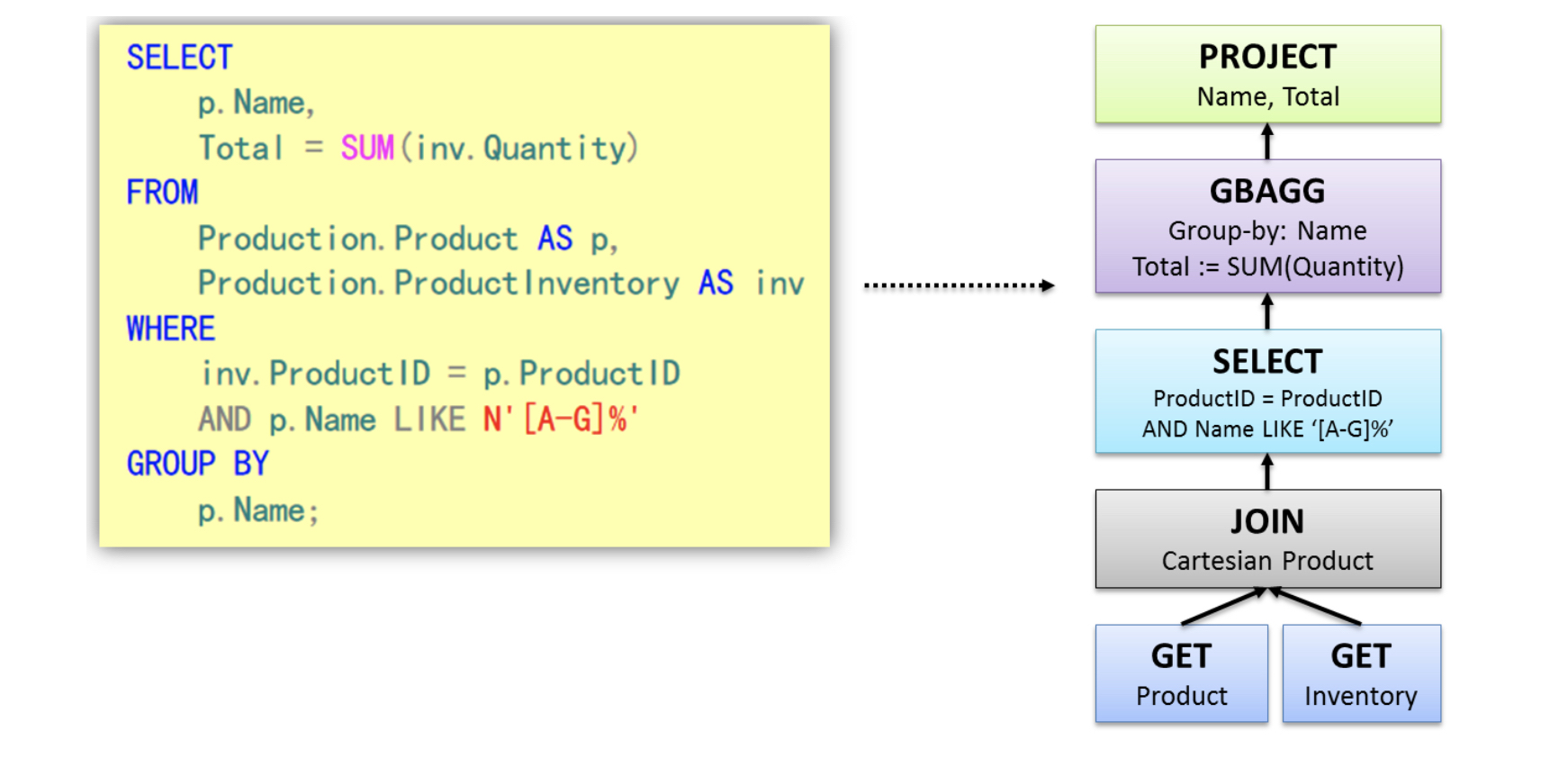

Please refer to: Apache Spark source code reading 11 - sql parsing and execution It's not the point of this article, but there is a parsed syntax tree that is useful. You can obviously show the left table and the right table. Otherwise, you may wonder what builderright is

Verification mode

Conclusion first: when a small table joins a small table (if it does not meet the default broadcast condition spark.sql.autoBroadcastJoinThreshold, 10M by default), when a broadcast object is specified, the right table takes precedence; that is to say, hint fails

- join auto broadcast using default mode

select big.pas_phone, big.ord_id, small.age, sum(1) over(partition by big.pas_phone) as ord_cnt from test.tmp_demo_small as small -- Small table 3 rows join test.tmp_demo_big as big -- Big table 9 rows on small.pas_phone = big.pas_phone where small.age > 21

- View execution plan (each execution process is read from bottom to top, simulation tree structure)

== Parsed Logical Plan == -- Abstract syntax tree, by ANTLR analysis

Project [pas_phone#39, ord_id#40, age#38, ord_cnt#35L]

+- Project [pas_phone#39, ord_id#40, age#38, ord_cnt#35L, ord_cnt#35L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#39, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#35L], [pas_phone#39]

+- Project [pas_phone#39, ord_id#40, age#38] - I only know that the attributes are selected, but I don't know which table these attributes belong to, let alone the data type

+- Filter (age#38 > 21)

+- Join Inner, (pas_phone#37 = pas_phone#39)

:- SubqueryAlias small

: +- SubqueryAlias tmp_demo_small

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#37, age#38]

+- SubqueryAlias big

+- SubqueryAlias tmp_demo_big

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#39, ord_id#40, dt#41]

== Analyzed Logical Plan == -- Logical syntax tree

pas_phone: int, ord_id: string, age: int, ord_cnt: bigint -- Data type resolution

Project [pas_phone#39, ord_id#40, age#38, ord_cnt#35L]

+- Project [pas_phone#39, ord_id#40, age#38, ord_cnt#35L, ord_cnt#35L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#39, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#35L], [pas_phone#39]

+- Project [pas_phone#39, ord_id#40, age#38]

+- Filter (age#38 > 21)

+- Join Inner, (pas_phone#37 = pas_phone#39)

:- SubqueryAlias small

: +- SubqueryAlias tmp_demo_small

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#37, age#38]

+- SubqueryAlias big

+- SubqueryAlias tmp_demo_big

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#39, ord_id#40, dt#41]

== Optimized Logical Plan == -- Logic optimization

Window [sum(1) windowspecdefinition(pas_phone#39, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#35L], [pas_phone#39]

+- Project [pas_phone#39, ord_id#40, age#38]

+- Join Inner, (pas_phone#37 = pas_phone#39)

:- Filter ((isnotnull(age#38) && (age#38 > 21)) && isnotnull(pas_phone#37)) -- predicate push down optimization

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#37, age#38]

+- Project [pas_phone#39, ord_id#40]

+- Filter isnotnull(pas_phone#39)

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#39, ord_id#40, dt#41]

== Physical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#39, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#35L], [pas_phone#39]

+- *Sort [pas_phone#39 ASC NULLS FIRST], false, 0

+- Exchange(coordinator id: 449256327) hashpartitioning(pas_phone#39, 1000), coordinator[target post-shuffle partition size: 67108864]

+- *Project [pas_phone#39, ord_id#40, age#38]

+- *BroadcastHashJoin [pas_phone#37], [pas_phone#39], inner, builderright -- builderright means to use the right table for broadcasting

:- *Filter ((isnotnull(age#38) && (age#38 > 21)) && isnotnull(pas_phone#37))

: +- HiveTableScan [pas_phone#37, age#38], HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#37, age#38]

+- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, false] as bigint)))

+- *Filter isnotnull(pas_phone#39)

+- HiveTableScan [pas_phone#39, ord_id#40], HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#39, ord_id#40, dt#41]

- Using hint to specify broadcast objects

select /*+ BROADCAST(small) */ big.pas_phone, big.ord_id, small.age, sum(1) over(partition by big.pas_phone) as ord_cnt from test.tmp_demo_small as small -- Small table 3 rows join test.tmp_demo_big as big -- Big table 9 rows on small.pas_phone = big.pas_phone where small.age > 21

- Execution plan

== Parsed Logical Plan ==

Project [pas_phone#61, ord_id#62, age#60, ord_cnt#57L]

+- Project [pas_phone#61, ord_id#62, age#60, ord_cnt#57L, ord_cnt#57L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#61, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#57L], [pas_phone#61]

+- Project [pas_phone#61, ord_id#62, age#60]

+- Filter (age#60 > 21)

+- Join Inner, (pas_phone#59 = pas_phone#61)

:- ResolvedHint isBroadcastable=true

: +- SubqueryAlias small

: +- SubqueryAlias tmp_demo_small

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#59, age#60]

+- SubqueryAlias big

+- SubqueryAlias tmp_demo_big

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#61, ord_id#62, dt#63]

== Analyzed Logical Plan ==

pas_phone: int, ord_id: string, age: int, ord_cnt: bigint

Project [pas_phone#61, ord_id#62, age#60, ord_cnt#57L]

+- Project [pas_phone#61, ord_id#62, age#60, ord_cnt#57L, ord_cnt#57L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#61, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#57L], [pas_phone#61]

+- Project [pas_phone#61, ord_id#62, age#60]

+- Filter (age#60 > 21)

+- Join Inner, (pas_phone#59 = pas_phone#61)

:- ResolvedHint isBroadcastable=true

: +- SubqueryAlias small

: +- SubqueryAlias tmp_demo_small

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#59, age#60]

+- SubqueryAlias big

+- SubqueryAlias tmp_demo_big

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#61, ord_id#62, dt#63]

== Optimized Logical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#61, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#57L], [pas_phone#61]

+- Project [pas_phone#61, ord_id#62, age#60]

+- Join Inner, (pas_phone#59 = pas_phone#61)

:- ResolvedHint isBroadcastable=true -- It can be seen here that this parameter is effective during logic optimization

: +- Filter ((isnotnull(age#60) && (age#60 > 21)) && isnotnull(pas_phone#59))

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#59, age#60]

+- Project [pas_phone#61, ord_id#62]

+- Filter isnotnull(pas_phone#61)

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#61, ord_id#62, dt#63]

== Physical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#61, ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ord_cnt#57L], [pas_phone#61]

+- *Sort [pas_phone#61 ASC NULLS FIRST], false, 0

+- Exchange(coordinator id: 1477200907) hashpartitioning(pas_phone#61, 1000), coordinator[target post-shuffle partition size: 67108864]

+- *Project [pas_phone#61, ord_id#62, age#60]

+- *BroadcastHashJoin [pas_phone#59], [pas_phone#61], inner, builderright -- builderright indicates that the right table is still used for broadcasting

:- *Filter ((isnotnull(age#60) && (age#60 > 21)) && isnotnull(pas_phone#59))

: +- HiveTableScan [pas_phone#59, age#60], HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#59, age#60]

+- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, false] as bigint)))

+- *Filter isnotnull(pas_phone#61)

+- HiveTableScan [pas_phone#61, ord_id#62], HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#61, ord_id#62, dt#63]

At the beginning, it felt normal. In addition, it was in line with the RBO optimization principle to push some filter conditions down during the logic optimization. However, there was a problem in the final generation of the physical execution plan. In theory, we should compare the two sub tables, which one was broadcast and which one was broadcast. Why did this problem occur? The problem is that we should locate the spark 2.2.0 source code in the selection method of Join in the physical execution plan, starting from apply

Location: spark-2.2.0/sql/core/src/main/scala/org/apache/spark/sql/execution/SparkStrategies.scala

object JoinSelection extends Strategy with PredicateHelper {

/**

* Matches a plan whose output should be small enough to be used in broadcast join.

*/

// 3. canBroadcast(right), the incoming right is a LogicalPlan object, that is, a logic plan, which contains the internal information of the subtree node table, including the meta information, as well as the parsed hint. Here, we will make a judgment. Only the hint statement or the information after the node tree (right table here) filter is greater than 0 and less than a threshold (default 10M) One of the conditions returns true

private def canBroadcast(plan: LogicalPlan): Boolean = {

plan.stats(conf).hints.isBroadcastable.getOrElse(false) ||

(plan.stats(conf).sizeInBytes >= 0 &&

plan.stats(conf).sizeInBytes <= conf.autoBroadcastJoinThreshold)

}

... Hide part of the code

// 2. Under the judgment of canbuilderright (jointype), return true

private def canBuildRight(joinType: JoinType): Boolean = joinType match {

case _: InnerLike | LeftOuter | LeftSemi | LeftAnti => true

case j: ExistenceJoin => true

case _ => false

}

private def canBuildLeft(joinType: JoinType): Boolean = joinType match {

case _: InnerLike | RightOuter => true

case _ => false

}

def apply(plan: LogicalPlan): Seq[SparkPlan] = plan match {

// --- BroadcastHashJoin --------------------------------------------------------------------

// 1. Broadcast judgment condition: first judge (2) canbuilderright (jointype); then judge (3) canBroadcast(right); when (2) and (3) are true, start to execute broadcast, and broadcast the right table, regardless of whether a broadcast table is made in hint

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if canBuildRight(joinType) && canBroadcast(right) =>

Seq(joins.BroadcastHashJoinExec(

leftKeys, rightKeys, joinType, BuildRight, condition, planLater(left), planLater(right)))

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if canBuildLeft(joinType) && canBroadcast(left) =>

Seq(joins.BroadcastHashJoinExec(

leftKeys, rightKeys, joinType, BuildLeft, condition, planLater(left), planLater(right)))

// --- ShuffledHashJoin ---------------------------------------------------------------------

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if !conf.preferSortMergeJoin && canBuildRight(joinType) && canBuildLocalHashMap(right)

&& muchSmaller(right, left) ||

!RowOrdering.isOrderable(leftKeys) =>

...

// --- SortMergeJoin ------------------------------------------------------------

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if RowOrdering.isOrderable(leftKeys) =>

...

// --- Without joining keys ------------------------------------------------------------

...

case _ => Nil

}

}

At this point, it explains why hint doesn't work in spark 2.2.0; put the same code in 2.4.3 to see how it works

select /*+ BROADCAST(small) */ big.pas_phone, big.ord_id, small.age, sum(1) over(partition by big.pas_phone) as ord_cnt from test.tmp_demo_small as small -- Small table 3 rows join test.tmp_demo_big as big -- Big table 9 rows on small.pas_phone = big.pas_phone where small.age > 21

- Execution plan

== Parsed Logical Plan ==

Project [pas_phone#4, ord_id#5, age#3, ord_cnt#0L]

+- Project [pas_phone#4, ord_id#5, age#3, ord_cnt#0L, ord_cnt#0L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#4, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#0L], [pas_phone#4]

+- Project [pas_phone#4, ord_id#5, age#3]

+- Filter (age#3 > 21)

+- Join Inner, (pas_phone#2 = pas_phone#4)

:- ResolvedHint (broadcast)

: +- SubqueryAlias `small`

: +- SubqueryAlias `test`.`tmp_demo_small`

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#2, age#3]

+- SubqueryAlias `big`

+- SubqueryAlias `test`.`tmp_demo_big`

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#4, ord_id#5, dt#6]

== Analyzed Logical Plan ==

pas_phone: int, ord_id: string, age: int, ord_cnt: bigint

Project [pas_phone#4, ord_id#5, age#3, ord_cnt#0L]

+- Project [pas_phone#4, ord_id#5, age#3, ord_cnt#0L, ord_cnt#0L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#4, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#0L], [pas_phone#4]

+- Project [pas_phone#4, ord_id#5, age#3]

+- Filter (age#3 > 21)

+- Join Inner, (pas_phone#2 = pas_phone#4)

:- ResolvedHint (broadcast)

: +- SubqueryAlias `small`

: +- SubqueryAlias `test`.`tmp_demo_small`

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#2, age#3]

+- SubqueryAlias `big`

+- SubqueryAlias `test`.`tmp_demo_big`

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#4, ord_id#5, dt#6]

== Optimized Logical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#4, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#0L], [pas_phone#4]

+- Project [pas_phone#4, ord_id#5, age#3]

+- Join Inner, (pas_phone#2 = pas_phone#4)

:- ResolvedHint (broadcast) -- analysis hint Statement, specifying broadcast table

: +- Filter ((isnotnull(age#3) && (age#3 > 21)) && isnotnull(pas_phone#2))

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#2, age#3]

+- Project [pas_phone#4, ord_id#5]

+- Filter isnotnull(pas_phone#4)

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#4, ord_id#5, dt#6]

== Physical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#4, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#0L], [pas_phone#4]

+- *(3) Sort [pas_phone#4 ASC NULLS FIRST], false, 0

+- Exchange(coordinator id: 632554218) hashpartitioning(pas_phone#4, 1000), coordinator[target post-shuffle partition size: 67108864]

+- *(2) Project [pas_phone#4, ord_id#5, age#3]

+- *(2) BroadcastHashJoin [pas_phone#2], [pas_phone#4] , inner, buildleft -- buildleft hint formulated and effective

:- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, false] as bigint)))

: +- *(1) Filter ((isnotnull(age#3) && (age#3 > 21)) && isnotnull(pas_phone#2))

: +- Scan hive test.tmp_demo_small [pas_phone#2, age#3], HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#2, age#3]

+- *(2) Filter isnotnull(pas_phone#4)

+- Scan hive test.tmp_demo_big [pas_phone#4, ord_id#5], HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#4, ord_id#5, dt#6]

- Do not specify broadcast table, default join

select big.pas_phone, big.ord_id, small.age, sum(1) over(partition by big.pas_phone) as ord_cnt from test.tmp_demo_small as small -- Small table 3 rows join test.tmp_demo_big as big -- Big table 9 rows on small.pas_phone = big.pas_phone where small.age > 21

- Execution plan

== Parsed Logical Plan ==

Project [pas_phone#15, ord_id#16, age#14, ord_cnt#11L]

+- Project [pas_phone#15, ord_id#16, age#14, ord_cnt#11L, ord_cnt#11L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#15, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#11L], [pas_phone#15]

+- Project [pas_phone#15, ord_id#16, age#14]

+- Filter (age#14 > 21)

+- Join Inner, (pas_phone#13 = pas_phone#15)

:- SubqueryAlias `small`

: +- SubqueryAlias `test`.`tmp_demo_small`

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#13, age#14]

+- SubqueryAlias `big`

+- SubqueryAlias `test`.`tmp_demo_big`

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#15, ord_id#16, dt#17]

== Analyzed Logical Plan ==

pas_phone: int, ord_id: string, age: int, ord_cnt: bigint

Project [pas_phone#15, ord_id#16, age#14, ord_cnt#11L]

+- Project [pas_phone#15, ord_id#16, age#14, ord_cnt#11L, ord_cnt#11L]

+- Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#15, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#11L], [pas_phone#15]

+- Project [pas_phone#15, ord_id#16, age#14]

+- Filter (age#14 > 21)

+- Join Inner, (pas_phone#13 = pas_phone#15)

:- SubqueryAlias `small`

: +- SubqueryAlias `test`.`tmp_demo_small`

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#13, age#14]

+- SubqueryAlias `big`

+- SubqueryAlias `test`.`tmp_demo_big`

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#15, ord_id#16, dt#17]

== Optimized Logical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#15, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#11L], [pas_phone#15]

+- Project [pas_phone#15, ord_id#16, age#14]

+- Join Inner, (pas_phone#13 = pas_phone#15)

:- Filter ((isnotnull(age#14) && (age#14 > 21)) && isnotnull(pas_phone#13))

: +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#13, age#14]

+- Project [pas_phone#15, ord_id#16]

+- Filter isnotnull(pas_phone#15)

+- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#15, ord_id#16, dt#17]

== Physical Plan ==

Window [sum(1) windowspecdefinition(pas_phone#15, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#11L], [pas_phone#15]

+- *(3) Sort [pas_phone#15 ASC NULLS FIRST], false, 0

+- Exchange(coordinator id: 1731877543) hashpartitioning(pas_phone#15, 1000), coordinator[target post-shuffle partition size: 67108864]

+- *(2) Project [pas_phone#15, ord_id#16, age#14]

+- *(2) BroadcastHashJoin [pas_phone#13], [pas_phone#15], Inner, BuildLeft -- broadcast left table succeeded

:- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, false] as bigint)))

: +- *(1) Filter ((isnotnull(age#14) && (age#14 > 21)) && isnotnull(pas_phone#13))

: +- Scan hive test.tmp_demo_small [pas_phone#13, age#14], HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#13, age#14]

+- *(2) Filter isnotnull(pas_phone#15)

+- Scan hive test.tmp_demo_big [pas_phone#15, ord_id#16], HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#15, ord_id#16, dt#17]

That's interesting. Look at the source code of 2.4.3

Location: spark-2.4.4/sql/core/src/main/scala/org/apache/spark/sql/execution/SparkStrategies.scala

object JoinSelection extends Strategy with PredicateHelper {

/**

* Matches a plan whose output should be small enough to be used in broadcast join.

*/

private def canBroadcast(plan: LogicalPlan): Boolean = {

plan.stats.sizeInBytes >= 0 && plan.stats.sizeInBytes <= conf.autoBroadcastJoinThreshold

}

/**

* Matches a plan whose single partition should be small enough to build a hash table.

*

* Note: this assume that the number of partition is fixed, requires additional work if it's

* dynamic.

*/

private def canBuildLocalHashMap(plan: LogicalPlan): Boolean = {

plan.stats.sizeInBytes < conf.autoBroadcastJoinThreshold * conf.numShufflePartitions

}

/**

* Returns whether plan a is much smaller (3X) than plan b.

*

* The cost to build hash map is higher than sorting, we should only build hash map on a table

* that is much smaller than other one. Since we does not have the statistic for number of rows,

* use the size of bytes here as estimation.

*/

private def muchSmaller(a: LogicalPlan, b: LogicalPlan): Boolean = {

a.stats.sizeInBytes * 3 <= b.stats.sizeInBytes

}

private def canBuildRight(joinType: JoinType): Boolean = joinType match {

case _: InnerLike | LeftOuter | LeftSemi | LeftAnti | _: ExistenceJoin => true

case _ => false

}

private def canBuildLeft(joinType: JoinType): Boolean = joinType match {

case _: InnerLike | RightOuter => true

case _ => false

}

// 3. Simply compare the sizes of the left and right tables,

private def broadcastSide(

canBuildLeft: Boolean,

canBuildRight: Boolean,

left: LogicalPlan,

right: LogicalPlan): BuildSide = {

def smallerSide =

if (right.stats.sizeInBytes <= left.stats.sizeInBytes) BuildRight else BuildLeft

if (canBuildRight && canBuildLeft) {

// Broadcast smaller side base on its estimated physical size

// if both sides have broadcast hint

smallerSide

} else if (canBuildRight) {

BuildRight

} else if (canBuildLeft) {

BuildLeft

} else {

// for the last default broadcast nested loop join

smallerSide

}

}

// 1. Judge canBroadcastByHints(joinType, left, right), and then judge canBuildLeft(joinType) and canbuildlight (jointype). Only one of them needs to be true, and the join type conditions are basically included. It is mainly to judge that the broadcast is made for the hint of left and right subtree tables

private def canBroadcastByHints(joinType: JoinType, left: LogicalPlan, right: LogicalPlan)

: Boolean = {

val buildLeft = canBuildLeft(joinType) && left.stats.hints.broadcast

val buildRight = canBuildRight(joinType) && right.stats.hints.broadcast

buildLeft || buildRight

}

// 2. The broadcastsidebyhints (join type, left, right) is hoisted to compare. (3) in fact, it is simply to compare the sizes of two tables

private def broadcastSideByHints(joinType: JoinType, left: LogicalPlan, right: LogicalPlan)

: BuildSide = {

val buildLeft = canBuildLeft(joinType) && left.stats.hints.broadcast

val buildRight = canBuildRight(joinType) && right.stats.hints.broadcast

broadcastSide(buildLeft, buildRight, left, right)

}

private def canBroadcastBySizes(joinType: JoinType, left: LogicalPlan, right: LogicalPlan)

: Boolean = {

val buildLeft = canBuildLeft(joinType) && canBroadcast(left)

val buildRight = canBuildRight(joinType) && canBroadcast(right)

buildLeft || buildRight

}

private def broadcastSideBySizes(joinType: JoinType, left: LogicalPlan, right: LogicalPlan)

: BuildSide = {

val buildLeft = canBuildLeft(joinType) && canBroadcast(left)

val buildRight = canBuildRight(joinType) && canBroadcast(right)

broadcastSide(buildLeft, buildRight, left, right)

}

def apply(plan: LogicalPlan): Seq[SparkPlan] = plan match {

// There are two kinds of differences: when making hint and when not making hint

// --- BroadcastHashJoin --------------------------------------------------------------------

// broadcast hints were specified

// In the case of hint, first judge that canBroadcastByHints(joinType, left, right) (1) is true, only that there is hint statement and the included join type meets the conditions; then lift broadcastSideByHints(joinType, left, right) to determine which table to broadcast (2)

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if canBroadcastByHints(joinType, left, right) =>

val buildSide = broadcastSideByHints(joinType, left, right)

Seq(joins.BroadcastHashJoinExec(

leftKeys, rightKeys, joinType, buildSide, condition, planLater(left), planLater(right)))

// broadcast hints were not specified, so need to infer it from size and configuration.

// In the case of no hint, go directly to judge the size of two tables to decide who is the broadcast table (of course, some preconditions are met)

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if canBroadcastBySizes(joinType, left, right) =>

val buildSide = broadcastSideBySizes(joinType, left, right)

Seq(joins.BroadcastHashJoinExec(

leftKeys, rightKeys, joinType, buildSide, condition, planLater(left), planLater(right)))

// --- ShuffledHashJoin ---------------------------------------------------------------------

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if !conf.preferSortMergeJoin && canBuildRight(joinType) && canBuildLocalHashMap(right)

&& muchSmaller(right, left) ||

!RowOrdering.isOrderable(leftKeys) =>

...

// --- SortMergeJoin ------------------------------------------------------------

case ExtractEquiJoinKeys(joinType, leftKeys, rightKeys, condition, left, right)

if RowOrdering.isOrderable(leftKeys) =>

...

// --- Without joining keys ----------------------------------------------------------

...

}

}

So to sum up

- In the version of spark 2.2.2, when the small table join small table (the two tables meet the broadcast conditions), the hint specified broadcast table will be invalid, and the default broadcast right table will be invalid; if not hint, the default broadcast right table will be invalid

- Spark version 2.4.3 can specify (inner join) broadcast table (even if it does not exceed the broadcast threshold); if it does not hit, it uses a smaller table to broadcast under the condition that it meets the broadcast threshold

- spark does not support full outer join; only the left table can be broadcasted for right outer join; only the right table can be broadcasted for left outer join, left semi join, left anti join, internal join, etc., and the inner join can specify the broadcast

- The rest of the join triggers require: SparkSQL - it's necessary to sit down and talk about Join,Join implementation of Spark SQL

by the way

Originally, there was a problem of having. There was no problem in local execution, but when it was packaged and submitted to the cluster using spark submit, it was inexplicably wrong;

select big.pas_phone, big.ord_id, small.age, sum(1) over(partition by big.pas_phone) as ord_cnt from test.tmp_demo_small as small -- Small table 3 rows join test.tmp_demo_big as big -- Big table 9 rows on small.pas_phone = big.pas_phone where small.age > 21 having ord_cnt > 2 Error in query: grouping expressions sequence is empty, and 'big.`pas_phone`' is not an aggregate function. Wrap '()' in windowing function(s) or wrap 'big.`pas_phone`' in first() (or first_value) if you don't care which value you get.;; 'Project [pas_phone#26, ord_id#27, age#25, ord_cnt#22L] +- 'Project [pas_phone#26, ord_id#27, age#25, ord_cnt#22L, ord_cnt#22L] +- 'Window [sum(cast(1 as bigint)) windowspecdefinition(pas_phone#26, specifiedwindowframe(RowFrame, unboundedpreceding$(), unboundedfollowing$())) AS ord_cnt#22L], [pas_phone#26] +- 'Filter ('ord_cnt > 2) +- Aggregate [pas_phone#26, ord_id#27, age#25] +- Filter (age#25 > 21) +- Join Inner, (pas_phone#24 = pas_phone#26) :- SubqueryAlias `small` : +- SubqueryAlias `test`.`tmp_demo_small` : +- HiveTableRelation `test`.`tmp_demo_small`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#24, age#25] +- SubqueryAlias `big` +- SubqueryAlias `test`.`tmp_demo_big` +- HiveTableRelation `test`.`tmp_demo_big`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [pas_phone#26, ord_id#27, dt#28]

The problem is also found. When I execute locally, I use the yarn client mode, so my driver is my server, and my server spark version is 2.2.2, so there is no problem in execution, because the driver is responsible for generating DAG, dividing task s, etc., which are executed after the sql is converted to rdd, so there is also a pre parsing sql work It's sql - > rdd, which is also done by the driver. The way to submit to the cluster is the yarn cluster mode. The driver is on a machine in the cluster, which is embarrassing. The company has upgraded to 2.4.3, which leads to the mismatch between the sql parsing environment and my local environment. Then check the new version of spark release note

In Spark version 2.3 and earlier, HAVING without GROUP BY is treated as WHERE. This means, SELECT 1 FROM range(10) HAVING true is executed as SELECT 1 FROM range(10) WHERE true and returns 10 rows. This violates SQL standard, and has been fixed in Spark 2.4. Since Spark 2.4, HAVING without GROUP BY is treated as a global aggregate, which means SELECT 1 FROM range(10) HAVING true will return only one row. To restore the previous behavior, set spark.sql.legacy.parser.havingWithoutGroupByAsWhere to true.

cool, the problem is solved, and the reason is found. If you don't want to change the whole code operation as before in 2.2, then add set spark. SQL. Legacy. Parser. Having without groupbyaswhere = true to solve the problem

set spark.sql.legacy.parser.havingWithoutGroupByAsWhere=true; select big.pas_phone, big.ord_id, small.age, sum(1) over(partition by big.pas_phone) as ord_cnt from test.tmp_demo_small as small -- Small table 3 rows join test.tmp_demo_big as big -- Big table 9 rows on small.pas_phone = big.pas_phone where small.age > 21 having ord_cnt > 2

appendix

/** * Select the proper physical plan for join based on joining keys and size of logical plan. * * At first, uses the [[ExtractEquiJoinKeys]] pattern to find joins where at least some of the * predicates can be evaluated by matching join keys. If found, join implementations are chosen * with the following precedence: * * - Broadcast hash join (BHJ): * BHJ is not supported for full outer join. For right outer join, we only can broadcast the * left side. For left outer, left semi, left anti and the internal join type ExistenceJoin, * we only can broadcast the right side. For inner like join, we can broadcast both sides. * Normally, BHJ can perform faster than the other join algorithms when the broadcast side is * small. However, broadcasting tables is a network-intensive operation. It could cause OOM * or perform worse than the other join algorithms, especially when the build/broadcast side * is big. * * For the supported cases, users can specify the broadcast hint (e.g. the user applied the * [[org.apache.spark.sql.functions.broadcast()]] function to a DataFrame) and session-based * [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]] threshold to adjust whether BHJ is used and * which join side is broadcast. * * 1) Broadcast the join side with the broadcast hint, even if the size is larger than * [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]]. If both sides have the hint (only when the type * is inner like join), the side with a smaller estimated physical size will be broadcast. * 2) Respect the [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]] threshold and broadcast the side * whose estimated physical size is smaller than the threshold. If both sides are below the * threshold, broadcast the smaller side. If neither is smaller, BHJ is not used. * * - Shuffle hash join: if the average size of a single partition is small enough to build a hash * table. * * - Sort merge: if the matching join keys are sortable. * * If there is no joining keys, Join implementations are chosen with the following precedence: * - BroadcastNestedLoopJoin (BNLJ): * BNLJ supports all the join types but the impl is OPTIMIZED for the following scenarios: * For right outer join, the left side is broadcast. For left outer, left semi, left anti * and the internal join type ExistenceJoin, the right side is broadcast. For inner like * joins, either side is broadcast. * * Like BHJ, users still can specify the broadcast hint and session-based * [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]] threshold to impact which side is broadcast. * * 1) Broadcast the join side with the broadcast hint, even if the size is larger than * [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]]. If both sides have the hint (i.e., just for * inner-like join), the side with a smaller estimated physical size will be broadcast. * 2) Respect the [[SQLConf.AUTO_BROADCASTJOIN_THRESHOLD]] threshold and broadcast the side * whose estimated physical size is smaller than the threshold. If both sides are below the * threshold, broadcast the smaller side. If neither is smaller, BNLJ is not used. * * - CartesianProduct: for inner like join, CartesianProduct is the fallback option. * * - BroadcastNestedLoopJoin (BNLJ): * For the other join types, BNLJ is the fallback option. Here, we just pick the broadcast * side with the broadcast hint. If neither side has a hint, we broadcast the side with * the smaller estimated physical size. */