Binder is ubiquitous in Android, and Binder transmissions happen constantly. A process rarely has just one Binder transmission in flight: it usually has several running concurrently, and they are often nested. Important processes such as system_server carry even more of them. When a system problem is traced to system_server, the threads involved are most often found in the middle of a Binder transmission. However many transmissions there are, and however deeply they nest, they all reduce to two kinds: synchronous and asynchronous. This article explains the Binder communication process using the simplest case of each.

Binder synchronous transmission

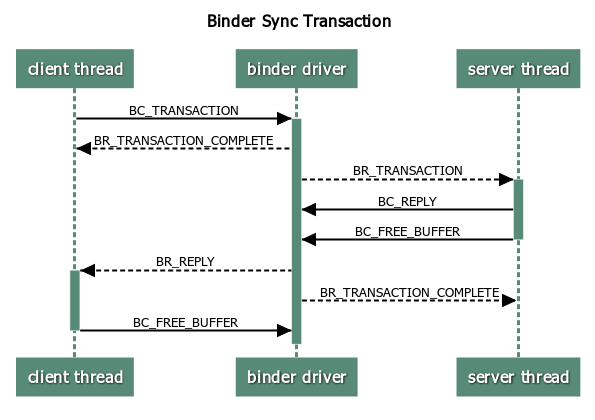

The most common Binder transmission is synchronous. In a synchronous transmission, the initiator of the IPC must wait until the other end has processed the message before it can continue. A complete synchronous transmission is shown in the figure above.

We skip Binder device initialization and look directly at the transmission itself. The client sends the BC_TRANSACTION command to the Binder driver through the BINDER_WRITE_READ ioctl.

drivers/staging/android/binder.c

    static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
    {
        ......
        switch (cmd) {
        case BINDER_WRITE_READ: {
            struct binder_write_read bwr;
            ......
            // Need to write data
            if (bwr.write_size > 0) {
                ret = binder_thread_write(proc, thread, bwr.write_buffer,
                                          bwr.write_size, &bwr.write_consumed);
                trace_binder_write_done(ret);
                if (ret < 0) {
                    bwr.read_consumed = 0;
                    if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                        ret = -EFAULT;
                    goto err;
                }
            }
            // Need to read data
            if (bwr.read_size > 0) {
                ret = binder_thread_read(proc, thread, bwr.read_buffer,
                                         bwr.read_size, &bwr.read_consumed,
                                         filp->f_flags & O_NONBLOCK);
                trace_binder_read_done(ret);
                if (!list_empty(&proc->todo))
                    wake_up_interruptible(&proc->wait);
                if (ret < 0) {
                    if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                        ret = -EFAULT;
                    goto err;
                }
            }
            ......
            break;
        }
        ......

1.BC_TRANSACTION

To start a Binder transmission, the client writes the BC_TRANSACTION command and waits for the command to return.

drivers/staging/android/binder.c

    static int binder_thread_write(struct binder_proc *proc,
                                   struct binder_thread *thread,
                                   binder_uintptr_t binder_buffer, size_t size,
                                   binder_size_t *consumed)
    {
        ......
        case BC_TRANSACTION:
        case BC_REPLY: {
            struct binder_transaction_data tr;

            if (copy_from_user(&tr, ptr, sizeof(tr)))
                return -EFAULT;
            ptr += sizeof(tr);
            binder_transaction(proc, thread, &tr, cmd == BC_REPLY);
            break;
        }
        ......
    }

Both BC_TRANSACTION and BC_REPLY call binder_transaction(); the only difference is whether the reply argument is set. binder_transaction() is also the core function for writing data. It is long and its logic is involved, so we walk through it piece by piece.

drivers/staging/android/binder.c

    static void binder_transaction(struct binder_proc *proc,
                                   struct binder_thread *thread,
                                   struct binder_transaction_data *tr, int reply)
    {
        ......
        if (reply) {
            ......
        } else {
            if (tr->target.handle) {
                // Find the corresponding binder entity according to handle
                struct binder_ref *ref;

                ref = binder_get_ref(proc, tr->target.handle, true);
                ......
                target_node = ref->node;
            } else {
                // A handle of 0 refers to the binder entity of the service manager
                target_node = binder_context_mgr_node;
                ......
            }
            e->to_node = target_node->debug_id;
            // binder_proc of the binder entity
            target_proc = target_node->proc;
            ......
            if (!(tr->flags & TF_ONE_WAY) && thread->transaction_stack) {
                struct binder_transaction *tmp;

                tmp = thread->transaction_stack;
                ......
                // For a synchronous transmission, check whether the transaction
                // stack holds a transmission from the opposite end; if so, use
                // that thread to process this transmission.
                while (tmp) {
                    if (tmp->from && tmp->from->proc == target_proc)
                        target_thread = tmp->from;
                    tmp = tmp->from_parent;
                }
            }
        }
        // If a target thread was found, use its todo list;
        // otherwise use the todo list of the target process
        if (target_thread) {
            e->to_thread = target_thread->pid;
            target_list = &target_thread->todo;
            target_wait = &target_thread->wait;
        } else {
            target_list = &target_proc->todo;
            target_wait = &target_proc->wait;
        }
        e->to_proc = target_proc->pid;

        // Allocate the binder transaction
        t = kzalloc(sizeof(*t), GFP_KERNEL);
        ......
        // Allocate the binder_work that reports transmission completion
        tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);
        ......
        // For a synchronous, non-reply transmission, record the current thread in from
        if (!reply && !(tr->flags & TF_ONE_WAY))
            t->from = thread;
        else
            t->from = NULL;
        t->sender_euid = proc->tsk->cred->euid;
        // Set the target process and thread of the transmission
        t->to_proc = target_proc;
        t->to_thread = target_thread;
        t->code = tr->code;
        t->flags = tr->flags;
        t->priority = task_nice(current);
        // Allocate the transmission buffer from the target process
        t->buffer = binder_alloc_buf(target_proc, tr->data_size,
                                     tr->offsets_size,
                                     !reply && (t->flags & TF_ONE_WAY));
        ......
        t->buffer->allow_user_free = 0;
        t->buffer->debug_id = t->debug_id;
        t->buffer->transaction = t;
        t->buffer->target_node = target_node;
        // Increase the reference count of the binder entity
        if (target_node)
            binder_inc_node(target_node, 1, 0, NULL);

        offp = (binder_size_t *)(t->buffer->data +
                                 ALIGN(tr->data_size, sizeof(void *)));

        // Copy the user data into the buffer allocated from the target process
        if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)
                           tr->data.ptr.buffer, tr->data_size)) {
            ......
        }
        // Copy the flat_binder_object offsets of the user data
        if (copy_from_user(offp, (const void __user *)(uintptr_t)
                           tr->data.ptr.offsets, tr->offsets_size)) {
            ......
        }
        ......
        off_end = (void *)offp + tr->offsets_size;
        off_min = 0;
        // Process each flat_binder_object
        for (; offp < off_end; offp++) {
            struct flat_binder_object *fp;
            ......
            fp = (struct flat_binder_object *)(t->buffer->data + *offp);
            off_min = *offp + sizeof(struct flat_binder_object);
            switch (fp->type) {
            // The object is a binder entity, as when a server registers itself
            case BINDER_TYPE_BINDER:
            case BINDER_TYPE_WEAK_BINDER: {
                struct binder_ref *ref;
                // Create the binder entity if it cannot be found
                struct binder_node *node = binder_get_node(proc, fp->binder);

                if (node == NULL) {
                    node = binder_new_node(proc, fp->binder, fp->cookie);
                    ......
                }
                ......
                // Create a reference in the target process
                ref = binder_get_ref_for_node(target_proc, node);
                ......
                // Change the type of the binder object to a handle
                if (fp->type == BINDER_TYPE_BINDER)
                    fp->type = BINDER_TYPE_HANDLE;
                else
                    fp->type = BINDER_TYPE_WEAK_HANDLE;
                fp->binder = 0;
                // Assign the reference handle to the object
                fp->handle = ref->desc;
                fp->cookie = 0;
                // Increase the reference count
                binder_inc_ref(ref, fp->type == BINDER_TYPE_HANDLE,
                               &thread->todo);
                ......
            } break;
            // The object is a binder reference, as when a client passes it to a server
            case BINDER_TYPE_HANDLE:
            case BINDER_TYPE_WEAK_HANDLE: {
                // Get the binder reference in the current process
                struct binder_ref *ref = binder_get_ref(
                        proc, fp->handle,
                        fp->type == BINDER_TYPE_HANDLE);
                ......
                if (ref->node->proc == target_proc) {
                    // The binder entity lives in the target process,
                    // so hand over the entity directly
                    if (fp->type == BINDER_TYPE_HANDLE)
                        fp->type = BINDER_TYPE_BINDER;
                    else
                        fp->type = BINDER_TYPE_WEAK_BINDER;
                    fp->binder = ref->node->ptr;
                    fp->cookie = ref->node->cookie;
                    binder_inc_node(ref->node,
                                    fp->type == BINDER_TYPE_BINDER, 0, NULL);
                    ......
                } else {
                    struct binder_ref *new_ref;

                    // Create a binder reference in the target process
                    new_ref = binder_get_ref_for_node(target_proc, ref->node);
                    ......
                    fp->binder = 0;
                    fp->handle = new_ref->desc;
                    fp->cookie = 0;
                    binder_inc_ref(new_ref,
                                   fp->type == BINDER_TYPE_HANDLE, NULL);
                    ......
                }
            } break;
            // The object is a file descriptor, used to share files or memory
            case BINDER_TYPE_FD: {
                ......
            } break;
            ......
            }
        }
        if (reply) {
            ......
        } else if (!(t->flags & TF_ONE_WAY)) {
            // Push the transaction onto the current thread's transaction stack
            t->need_reply = 1;
            t->from_parent = thread->transaction_stack;
            thread->transaction_stack = t;
        } else {
            // Asynchronous transmission uses the async_todo list
            if (target_node->has_async_transaction) {
                target_list = &target_node->async_todo;
                target_wait = NULL;
            } else
                target_node->has_async_transaction = 1;
        }
        // Add the transaction to the target queue
        t->work.type = BINDER_WORK_TRANSACTION;
        list_add_tail(&t->work.entry, target_list);
        // Add the completion work to the current thread's todo queue
        tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;
        list_add_tail(&tcomplete->entry, &thread->todo);
        // Wake up the target thread or process
        if (target_wait)
            wake_up_interruptible(target_wait);
        return;
        ......
    }

In short, BC_TRANSACTION does the following.

- Find the target process or thread.

- Copy the user-space data into a buffer allocated from the target process and translate each flat_binder_object.

- For a synchronous transmission, push the transaction onto the current thread's transaction stack.

- Add BINDER_WORK_TRANSACTION to the target queue and BINDER_WORK_TRANSACTION_COMPLETE to the current thread queue.

- Wake up the target process or thread for processing.
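
The first step, finding the target thread, is the least obvious one, so here is a small user-space model of it. This is a simplified sketch and not driver code: `struct proc`, `struct thread`, `struct txn`, and `pick_target_thread()` are hypothetical stand-ins that keep only the fields the stack walk reads.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, stripped-down stand-ins for binder_proc, binder_thread and
 * binder_transaction. Only the fields used by the stack walk remain. */
struct txn;
struct proc { int pid; };
struct thread { struct proc *proc; struct txn *transaction_stack; };
struct txn {
    struct thread *from;      /* sender thread, NULL for one-way or reply */
    struct txn *from_parent;  /* next entry down the stack */
};

/* Walk the caller's transaction stack and return a thread of target_proc
 * that already sent a transaction to this caller and is waiting for its
 * reply. Returns NULL when no such thread exists, in which case the work
 * would go to the process-wide todo list instead. */
struct thread *pick_target_thread(const struct thread *caller,
                                  const struct proc *target_proc)
{
    struct thread *target = NULL;
    struct txn *tmp;

    assert(caller != NULL && target_proc != NULL);
    for (tmp = caller->transaction_stack; tmp != NULL; tmp = tmp->from_parent) {
        if (tmp->from != NULL && tmp->from->proc == target_proc)
            target = tmp->from;  /* keep walking: the deepest match wins */
    }
    return target;
}
```

In a nested call, where process A calls B and B calls back into A while handling the request, this walk is what routes the callback to the A thread that is already blocked, rather than to an arbitrary idle thread of A.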

2.BR_TRANSACTION_COMPLETE

When the Client executes BINDER_WRITE_READ, it first writes data through binder_thread_write(), which puts BINDER_WORK_TRANSACTION_COMPLETE on the calling thread's todo queue. It then executes binder_thread_read() to read the returned data, and the driver returns the BR_TRANSACTION_COMPLETE command to the Client thread.

drivers/staging/android/binder.c

    static int binder_thread_read(struct binder_proc *proc,
                                  struct binder_thread *thread,
                                  binder_uintptr_t binder_buffer, size_t size,
                                  binder_size_t *consumed, int non_block)
    {
        ......
        // On the first read, BR_NOOP is inserted as the first command returned to the user
        if (*consumed == 0) {
            if (put_user(BR_NOOP, (uint32_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(uint32_t);
        }

    retry:
        // If the current thread has no transaction in progress and its todo
        // queue is empty, it handles the work queue of the process
        wait_for_proc_work = thread->transaction_stack == NULL &&
                             list_empty(&thread->todo);
        ......
        thread->looper |= BINDER_LOOPER_STATE_WAITING;
        // A thread that waits for process work counts as an idle thread
        if (wait_for_proc_work)
            proc->ready_threads++;
        ......
        // Wait for the process or thread work queue to wake up
        if (wait_for_proc_work) {
            ......
            ret = wait_event_freezable_exclusive(proc->wait,
                                                 binder_has_proc_work(proc, thread));
        } else {
            ......
            ret = wait_event_freezable(thread->wait,
                                       binder_has_thread_work(thread));
        }
        ......
        // After waking up, start processing and decrement the idle thread count
        if (wait_for_proc_work)
            proc->ready_threads--;
        thread->looper &= ~BINDER_LOOPER_STATE_WAITING;
        ......
        while (1) {
            ......
            // The thread work queue takes priority over the process work queue
            if (!list_empty(&thread->todo))
                w = list_first_entry(&thread->todo, struct binder_work, entry);
            else if (!list_empty(&proc->todo) && wait_for_proc_work)
                w = list_first_entry(&proc->todo, struct binder_work, entry);
            else {
                if (ptr - buffer == 4 &&
                    !(thread->looper & BINDER_LOOPER_STATE_NEED_RETURN))
                    /* no data added */
                    goto retry;
                break;
            }
            ......
            switch (w->type) {
            ......
            case BINDER_WORK_TRANSACTION_COMPLETE: {
                // Send the command BR_TRANSACTION_COMPLETE to the user
                cmd = BR_TRANSACTION_COMPLETE;
                if (put_user(cmd, (uint32_t __user *)ptr))
                    return -EFAULT;
                ptr += sizeof(uint32_t);
                ......
                list_del(&w->entry);
                kfree(w);
                binder_stats_deleted(BINDER_STAT_TRANSACTION_COMPLETE);
            } break;
            ......
            if (!t)
                continue;
            ......
    }
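
The queue-selection rule inside the read loop can be modeled in a few lines. The sketch below is an illustration under simplifying assumptions: `struct work`, the `WORK_*` constants, and `next_work()` are hypothetical names, the queues are plain singly linked lists, and the item is dequeued at selection time, whereas the driver removes it later inside each `case`.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical work-item kinds standing in for BINDER_WORK_*. */
enum work_type { WORK_TRANSACTION = 1, WORK_TRANSACTION_COMPLETE = 2 };

struct work {
    enum work_type type;
    struct work *next;
};

/* Pick the next work item the way the read loop does: the thread's own
 * todo queue always comes first, and the process queue is consulted only
 * when the thread was waiting for process work. Returns NULL when there
 * is nothing this thread may take. */
struct work *next_work(struct work **thread_todo, struct work **proc_todo,
                       int wait_for_proc_work)
{
    struct work **queue;
    struct work *w;

    assert(thread_todo != NULL && proc_todo != NULL);
    if (*thread_todo != NULL)
        queue = thread_todo;
    else if (*proc_todo != NULL && wait_for_proc_work)
        queue = proc_todo;
    else
        return NULL;

    w = *queue;          /* take the head of the chosen queue */
    *queue = w->next;
    w->next = NULL;
    return w;
}
```

This is why a Client thread that has just sent a synchronous transmission never picks up unrelated process work: its transaction stack is non-empty, so `wait_for_proc_work` is false and it only sees its own queue, which holds the completion item.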

3.BR_TRANSACTION

After a Server thread starts, it calls talkWithDriver() and waits for data to read. Once the Binder driver has processed the BC_TRANSACTION command sent by the Client, it wakes up the Server thread. The Server thread also reads and processes its data in binder_thread_read().

drivers/staging/android/binder.c

    static int binder_thread_read(struct binder_proc *proc,
                                  struct binder_thread *thread,
                                  binder_uintptr_t binder_buffer, size_t size,
                                  binder_size_t *consumed, int non_block)
    {
        ......
        while (1) {
            switch (w->type) {
            // binder_transaction() queued BINDER_WORK_TRANSACTION and then
            // woke up the target
            case BINDER_WORK_TRANSACTION: {
                t = container_of(w, struct binder_transaction, work);
            } break;
            ......
            // Only BINDER_WORK_TRANSACTION yields a transaction, so only it
            // continues past this point
            if (!t)
                continue;

            BUG_ON(t->buffer == NULL);
            // If target_node exists, the work came from BC_TRANSACTION and
            // BR_TRANSACTION is returned. Otherwise the work came from
            // BC_REPLY and BR_REPLY is returned.
            if (t->buffer->target_node) {
                struct binder_node *target_node = t->buffer->target_node;

                tr.target.ptr = target_node->ptr;
                tr.cookie = target_node->cookie;
                t->saved_priority = task_nice(current);
                if (t->priority < target_node->min_priority &&
                    !(t->flags & TF_ONE_WAY))
                    binder_set_nice(t->priority);
                else if (!(t->flags & TF_ONE_WAY) ||
                         t->saved_priority > target_node->min_priority)
                    binder_set_nice(target_node->min_priority);
                cmd = BR_TRANSACTION;
            } else {
                tr.target.ptr = 0;
                tr.cookie = 0;
                cmd = BR_REPLY;
            }
            tr.code = t->code;
            tr.flags = t->flags;
            tr.sender_euid = from_kuid(current_user_ns(), t->sender_euid);

            // For a synchronous transmission, sender_pid is the PID of the
            // calling process. For an asynchronous transmission it is 0.
            if (t->from) {
                struct task_struct *sender = t->from->proc->tsk;

                tr.sender_pid = task_tgid_nr_ns(sender,
                                                task_active_pid_ns(current));
            } else {
                tr.sender_pid = 0;
            }
            ......
            // Copy data to user space
            if (put_user(cmd, (uint32_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(uint32_t);
            if (copy_to_user(ptr, &tr, sizeof(tr)))
                return -EFAULT;
            ptr += sizeof(tr);
            ......
            // Remove the current work item from the queue
            list_del(&t->work.entry);
            t->buffer->allow_user_free = 1;
            if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) {
                // Synchronous BR_TRANSACTION: push the transaction onto the
                // stack of the receiving thread
                t->to_parent = thread->transaction_stack;
                t->to_thread = thread;
                thread->transaction_stack = t;
            } else {
                // In all other cases the transmission is complete and the
                // transaction is released
                t->buffer->transaction = NULL;
                kfree(t);
                binder_stats_deleted(BINDER_STAT_TRANSACTION);
            }
            break;
        }
        ......
    }

BR_REPLY follows the same path. The difference is that delivering BR_REPLY means the transmission is complete, so the transaction can be released right away.
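
The decisions made on delivery can be condensed into one small function. This is only a sketch of the branching described above: `enum br_cmd`, `struct txn_view`, `struct delivery`, and `classify()` are hypothetical names, and the three integer fields stand in for `t->buffer->target_node`, the TF_ONE_WAY flag, and the PID behind `t->from`.

```c
#include <assert.h>

/* Hypothetical stand-ins for the two return commands. */
enum br_cmd { CMD_BR_TRANSACTION, CMD_BR_REPLY };

/* The three facts about a queued transaction that drive delivery. */
struct txn_view {
    int has_target_node;  /* non-zero when the work came from BC_TRANSACTION */
    int one_way;          /* non-zero when TF_ONE_WAY is set */
    int from_pid;         /* PID of the sender, 0 when from is NULL */
};

struct delivery {
    enum br_cmd cmd;      /* command returned to user space */
    int sender_pid;       /* value reported as sender_pid */
    int keep_on_stack;    /* 1: push on receiver's stack, 0: release now */
};

struct delivery classify(struct txn_view t)
{
    struct delivery d;

    /* One-way transmissions never record a sender thread. */
    assert(!(t.one_way && t.from_pid != 0));

    d.cmd = t.has_target_node ? CMD_BR_TRANSACTION : CMD_BR_REPLY;
    d.sender_pid = t.from_pid;
    /* Only a synchronous request still needs a reply, so only it stays
     * alive on the receiving thread's transaction stack. */
    d.keep_on_stack = (d.cmd == CMD_BR_TRANSACTION && !t.one_way);
    return d;
}
```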

4.BC_REPLY

After receiving the BR_TRANSACTION command, the Server takes out the buffer for processing, and sends BC_REPLY to the Binder driver after completion.

frameworks/native/libs/binder/IPCThreadState.cpp

    status_t IPCThreadState::executeCommand(int32_t cmd)
    {
        ......
        case BR_TRANSACTION:
            {
                // Retrieve the transmitted data
                binder_transaction_data tr;
                result = mIn.read(&tr, sizeof(tr));
                ......
                Parcel reply;
                ......
                // BBinder parses data
                if (tr.target.ptr) {
                    sp<BBinder> b((BBinder*)tr.cookie);
                    error = b->transact(tr.code, buffer, &reply, tr.flags);
                } else {
                    error = the_context_object->transact(tr.code, buffer, &reply, tr.flags);
                }

                if ((tr.flags & TF_ONE_WAY) == 0) {
                    LOG_ONEWAY("Sending reply to %d!", mCallingPid);
                    if (error < NO_ERROR) reply.setError(error);
                    // Synchronized transmission requires BC_REPLY to be sent
                    sendReply(reply, 0);
                } else {
                    LOG_ONEWAY("NOT sending reply to %d!", mCallingPid);
                }
                ......
            }
            break;
        ......
    }

BC_REPLY is also handled by binder_transaction(), this time with the reply argument set. The analysis below covers only the parts that differ from the BC_TRANSACTION path.

drivers/staging/android/binder.c

    static void binder_transaction(struct binder_proc *proc,
                                   struct binder_thread *thread,
                                   struct binder_transaction_data *tr, int reply)
    {
        ......
        if (reply) {
            // Pop the transaction off the current thread's stack
            in_reply_to = thread->transaction_stack;
            ......
            thread->transaction_stack = in_reply_to->to_parent;
            // The target thread is the initiator thread
            target_thread = in_reply_to->from;
            ......
            target_proc = target_thread->proc;
        } else {
            ......
        }
        if (target_thread) {
            e->to_thread = target_thread->pid;
            target_list = &target_thread->todo;
            target_wait = &target_thread->wait;
        } else {
            ......
        }
        ......
        // from is NULL for a reply transmission
        if (!reply && !(tr->flags & TF_ONE_WAY))
            t->from = thread;
        else
            t->from = NULL;
        ......
        if (reply) {
            // Pop the transaction off the target thread's stack
            binder_pop_transaction(target_thread, in_reply_to);
        } else if (!(t->flags & TF_ONE_WAY)) {
            ......
        } else {
            ......
        }
        t->work.type = BINDER_WORK_TRANSACTION;
        list_add_tail(&t->work.entry, target_list);
        tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;
        list_add_tail(&tcomplete->entry, &thread->todo);
        if (target_wait)
            wake_up_interruptible(target_wait);
        return;
        ......
    }

When binder_transaction() handles BC_REPLY, it likewise queues the two work items and wakes up the target. BINDER_WORK_TRANSACTION_COMPLETE makes the driver return BR_TRANSACTION_COMPLETE to the current thread, which is the Server side. BINDER_WORK_TRANSACTION is processed by the target, which is the Client side. As the analysis of binder_thread_read() showed, the driver returns BR_REPLY to the Client in this case.
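
The stack bookkeeping across one synchronous round trip can be traced with a toy model. This is a sketch under stated assumptions, not driver code: the structs keep only the link fields, `client_send()`, `server_receive()`, and `server_reply()` are hypothetical helpers named after the step they imitate, and the last one folds the pop that the excerpt attributes to binder_pop_transaction() into the same function.

```c
#include <assert.h>
#include <stddef.h>

struct thread;

/* Hypothetical reduction of binder_transaction to its stack links. */
struct txn {
    struct thread *from;       /* initiating thread */
    struct thread *to_thread;  /* thread handling the request */
    struct txn *from_parent;   /* previous top of the initiator's stack */
    struct txn *to_parent;     /* previous top of the handler's stack */
};

struct thread { struct txn *transaction_stack; };

/* BC_TRANSACTION, synchronous: the client pushes the transaction. */
void client_send(struct thread *client, struct txn *t)
{
    t->from = client;
    t->from_parent = client->transaction_stack;
    client->transaction_stack = t;
}

/* BR_TRANSACTION: the server thread pushes the same transaction. */
void server_receive(struct thread *server, struct txn *t)
{
    t->to_parent = server->transaction_stack;
    t->to_thread = server;
    server->transaction_stack = t;
}

/* BC_REPLY: pop from the server, find the initiator through from, pop
 * from the initiator as well, and return the thread to wake up. */
struct thread *server_reply(struct thread *server)
{
    struct txn *in_reply_to = server->transaction_stack;
    struct thread *target;

    assert(in_reply_to != NULL && in_reply_to->to_thread == server);
    server->transaction_stack = in_reply_to->to_parent;

    target = in_reply_to->from;
    assert(target != NULL && target->transaction_stack == in_reply_to);
    target->transaction_stack = in_reply_to->from_parent;
    return target;
}
```

After the reply, both stacks are back to the state they had before the call, which is what allows nested transmissions to unwind in order.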

5.BC_FREE_BUFFER

For every Binder transmission, whether from Client to Server or from Server to Client, the receiving end releases the transmission buffer with BC_FREE_BUFFER once it has received and processed the data. A synchronous transmission consists of two transmissions: BC_TRANSACTION from the Client and BC_REPLY from the Server.

For BC_TRANSACTION, the Server receives the BR_TRANSACTION command and starts processing the Binder data; when processing is complete, BC_FREE_BUFFER is issued to release the buffer. The release command is not issued directly but through the release function of Parcel: freeBuffer is registered as the release function of the Parcel instance named buffer, so freeBuffer is called when that Parcel is destroyed.

frameworks/native/libs/binder/IPCThreadState.cpp

    status_t IPCThreadState::executeCommand(int32_t cmd)
    {
        ......
        case BR_TRANSACTION:
            {
                binder_transaction_data tr;
                result = mIn.read(&tr, sizeof(tr));
                ......
                Parcel buffer;
                // Set the release function of buffer to freeBuffer
                buffer.ipcSetDataReference(
                    reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                    tr.data_size,
                    reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                    tr.offsets_size/sizeof(binder_size_t), freeBuffer, this);
                ......
                sp<BBinder> b((BBinder*)tr.cookie);
                error = b->transact(tr.code, buffer, &reply, tr.flags);
                ......
            }
            break;
        ......
    }
    ......
    void IPCThreadState::freeBuffer(Parcel* parcel, const uint8_t* data,
                                    size_t /*dataSize*/,
                                    const binder_size_t* /*objects*/,
                                    size_t /*objectsSize*/, void* /*cookie*/)
    {
        ......
        if (parcel != NULL) parcel->closeFileDescriptors();
        // Send BC_FREE_BUFFER command
        IPCThreadState* state = self();
        state->mOut.writeInt32(BC_FREE_BUFFER);
        state->mOut.writePointer((uintptr_t)data);
    }

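For BC_REPLY, the Client receives BR_REPLY and either registers freeBuffer as the release function of the reply Parcel or, when the reply carries only a status code or the caller passed no reply Parcel, calls freeBuffer directly.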

frameworks/native/libs/binder/IPCThreadState.cpp

    status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
    {
        int32_t cmd;
        int32_t err;

        while (1) {
            ......
            case BR_REPLY:
                {
                    binder_transaction_data tr;
                    err = mIn.read(&tr, sizeof(tr));
                    ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");
                    if (err != NO_ERROR) goto finish;

                    if (reply) {
                        if ((tr.flags & TF_STATUS_CODE) == 0) {
                            // Set freeBuffer as the release function
                            reply->ipcSetDataReference(
                                reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                                tr.data_size,
                                reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                                tr.offsets_size/sizeof(binder_size_t),
                                freeBuffer, this);
                        } else {
                            // Call freeBuffer directly when an error occurs
                            err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);
                            freeBuffer(NULL,
                                reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                                tr.data_size,
                                reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                                tr.offsets_size/sizeof(binder_size_t), this);
                        }
                    } else {
                        freeBuffer(NULL,
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(binder_size_t), this);
                        continue;
                    }
                }
                goto finish;
            ......
        }
        ......

freeBuffer() sends the BC_FREE_BUFFER command to the Binder driver, which handles it in binder_thread_write() and releases the buffer with binder_free_buf().

drivers/staging/android/binder.c

    static void binder_free_buf(struct binder_proc *proc,
                                struct binder_buffer *buffer)
    {
        size_t size, buffer_size;

        // Get the size of the buffer
        buffer_size = binder_buffer_size(proc, buffer);
        size = ALIGN(buffer->data_size, sizeof(void *)) +
               ALIGN(buffer->offsets_size, sizeof(void *));
        ......
        // Update free_async_space for asynchronous transmission
        if (buffer->async_transaction) {
            proc->free_async_space += size + sizeof(struct binder_buffer);
            ......
        }
        // Release physical memory
        binder_update_page_range(proc, 0,
            (void *)PAGE_ALIGN((uintptr_t)buffer->data),
            (void *)(((uintptr_t)buffer->data + buffer_size) & PAGE_MASK),
            NULL);
        // Erase buffer from the allocated_buffers tree
        rb_erase(&buffer->rb_node, &proc->allocated_buffers);
        buffer->free = 1;
        // Merge with the following buffer if it is free
        if (!list_is_last(&buffer->entry, &proc->buffers)) {
            struct binder_buffer *next = list_entry(buffer->entry.next,
                                                    struct binder_buffer, entry);

            if (next->free) {
                rb_erase(&next->rb_node, &proc->free_buffers);
                binder_delete_free_buffer(proc, next);
            }
        }
        // Merge with the preceding buffer if it is free
        if (proc->buffers.next != &buffer->entry) {
            struct binder_buffer *prev = list_entry(buffer->entry.prev,
                                                    struct binder_buffer, entry);

            if (prev->free) {
                binder_delete_free_buffer(proc, buffer);
                rb_erase(&prev->rb_node, &proc->free_buffers);
                buffer = prev;
            }
        }
        // Insert the merged buffer into free_buffers
        binder_insert_free_buffer(proc, buffer);
    }
    ......
    static int binder_thread_write(struct binder_proc *proc,
                                   struct binder_thread *thread,
                                   binder_uintptr_t binder_buffer, size_t size,
                                   binder_size_t *consumed)
    {
        ......
        case BC_FREE_BUFFER: {
            binder_uintptr_t data_ptr;
            struct binder_buffer *buffer;

            // Get the user-space data pointer
            if (get_user(data_ptr, (binder_uintptr_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(binder_uintptr_t);

            // Find the corresponding binder_buffer in the buffer tree
            buffer = binder_buffer_lookup(proc, data_ptr);
            ......
            if (buffer->transaction) {
                buffer->transaction->buffer = NULL;
                buffer->transaction = NULL;
            }
            // When an asynchronous buffer is released, move the next pending
            // async_todo work item to the todo queue of the thread
            if (buffer->async_transaction && buffer->target_node) {
                BUG_ON(!buffer->target_node->has_async_transaction);
                if (list_empty(&buffer->target_node->async_todo))
                    buffer->target_node->has_async_transaction = 0;
                else
                    list_move_tail(buffer->target_node->async_todo.next,
                                   &thread->todo);
            }
            trace_binder_transaction_buffer_release(buffer);
            // Reduce the number of binder references
            binder_transaction_buffer_release(proc, buffer, NULL);
            // Release buffer memory space
            binder_free_buf(proc, buffer);
            break;
        }
        ......
    }
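
The merging of neighboring free buffers in binder_free_buf() is the classic coalescing step of a free-list allocator. The sketch below shows that step in isolation and makes several simplifying assumptions: `struct block` and `release_block()` are hypothetical, each block stores its size explicitly, and there are no trees or page handling, whereas the driver derives sizes through binder_buffer_size() and tracks buffers in rb-trees.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical block descriptor: an address-ordered doubly linked list. */
struct block {
    size_t size;
    int free;
    struct block *prev, *next;
};

/* Mark b as free and merge it with free neighbors on either side.
 * Returns the block that now describes the merged free region. */
struct block *release_block(struct block *b)
{
    assert(b != NULL && !b->free);
    b->free = 1;

    /* Absorb the following block if it is free. */
    if (b->next != NULL && b->next->free) {
        struct block *n = b->next;

        b->size += n->size;
        b->next = n->next;
        if (n->next != NULL)
            n->next->prev = b;
    }

    /* Let the preceding block absorb b if it is free. */
    if (b->prev != NULL && b->prev->free) {
        struct block *p = b->prev;

        p->size += b->size;
        p->next = b->next;
        if (b->next != NULL)
            b->next->prev = p;
        b = p;
    }
    return b;
}
```

Merging on every release keeps the free space in as few large regions as possible, so a later large transmission is less likely to fail because of fragmentation.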

Binder asynchronous transmission

In Binder communication, asynchronous transmission can be used when the Client only wants to send data and does not care about the result of execution on the Server. An asynchronous transmission sets the TF_ONE_WAY bit in the flags field of the transmission data. The simple transmission process is as follows.

Inside the Binder driver, an asynchronous transmission follows largely the same path as a synchronous one. We focus on the places where the TF_ONE_WAY flag changes the behavior.

drivers/staging/android/binder.c

    static struct binder_buffer *binder_alloc_buf(struct binder_proc *proc,
                                                  size_t data_size,
                                                  size_t offsets_size,
                                                  int is_async)
    {
        ......
        // Asynchronous transmission needs to consider free_async_space
        if (is_async &&
            proc->free_async_space < size + sizeof(struct binder_buffer)) {
            binder_debug(BINDER_DEBUG_BUFFER_ALLOC,
                         "%d: binder_alloc_buf size %zd failed, no async space left\n",
                         proc->pid, size);
            return NULL;
        }
        ......
        buffer->data_size = data_size;
        buffer->offsets_size = offsets_size;
        // Record the is_async flag in the buffer
        buffer->async_transaction = is_async;
        if (is_async) {
            // Update free_async_space
            proc->free_async_space -= size + sizeof(struct binder_buffer);
            binder_debug(BINDER_DEBUG_BUFFER_ALLOC_ASYNC,
                         "%d: binder_alloc_buf size %zd async free %zd\n",
                         proc->pid, size, proc->free_async_space);
        }
        return buffer;
    }
    ......
    static void binder_transaction(struct binder_proc *proc,
                                   struct binder_thread *thread,
                                   struct binder_transaction_data *tr, int reply)
    {
        ......
        // In an asynchronous transmission, the from field of the transaction is set to NULL
        if (!reply && !(tr->flags & TF_ONE_WAY))
            t->from = thread;
        else
            t->from = NULL;
        ......
        // Pass the asynchronous flag when allocating the buffer
        t->buffer = binder_alloc_buf(target_proc, tr->data_size,
                                     tr->offsets_size,
                                     !reply && (t->flags & TF_ONE_WAY));
        ......
        if (reply) {
            ......
        } else if (!(t->flags & TF_ONE_WAY)) {
            ......
        } else {
            // Asynchronous transmission uses the async_todo queue
            if (target_node->has_async_transaction) {
                target_list = &target_node->async_todo;
                target_wait = NULL;
            } else
                target_node->has_async_transaction = 1;
        }
        t->work.type = BINDER_WORK_TRANSACTION;
        list_add_tail(&t->work.entry, target_list);
        tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;
        list_add_tail(&tcomplete->entry, &thread->todo);
        if (target_wait)
            wake_up_interruptible(target_wait);
        return;
        ......
    }
    ......
    static int binder_thread_read(struct binder_proc *proc,
                                  struct binder_thread *thread,
                                  binder_uintptr_t binder_buffer, size_t size,
                                  binder_size_t *consumed, int non_block)
    {
        ......
        if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) {
            ......
        } else {
            // An asynchronous transmission is one-way and has no reply,
            // so the transaction is released here
            t->buffer->transaction = NULL;
            kfree(t);
            binder_stats_deleted(BINDER_STAT_TRANSACTION);
        }
        break;
        ......
    }
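
Two things distinguish the asynchronous path: the separate space budget and the per-node serialization through async_todo. The toy model below combines both. It is a sketch under simplifying assumptions: `struct proc`, `struct node`, `send_oneway()`, and `free_oneway()` are hypothetical, queues are reduced to counters, the `dispatched` counter stands in for work placed on a todo list, and the per-buffer header overhead that the driver adds to each size is ignored.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical reduction of binder_proc: async budget plus a counter of
 * work items that have been handed to a todo list. */
struct proc {
    size_t free_async_space;
    int dispatched;
};

/* Hypothetical reduction of binder_node: the serialization state. */
struct node {
    int has_async_transaction;  /* one async transmission is in flight */
    int async_todo;             /* number of async transmissions parked */
};

/* One-way send: charge the async budget, then either dispatch at once or
 * park behind the transmission already in flight on this node.
 * Returns 0 on success, -1 when the async budget is exhausted. */
int send_oneway(struct proc *p, struct node *n, size_t size)
{
    if (p->free_async_space < size)
        return -1;
    p->free_async_space -= size;

    if (n->has_async_transaction) {
        n->async_todo++;
    } else {
        n->has_async_transaction = 1;
        p->dispatched++;
    }
    return 0;
}

/* BC_FREE_BUFFER for an async buffer: refund the budget and release the
 * next parked transmission, or clear the in-flight flag if none waits. */
void free_oneway(struct proc *p, struct node *n, size_t size)
{
    assert(n->has_async_transaction);
    p->free_async_space += size;

    if (n->async_todo == 0) {
        n->has_async_transaction = 0;
    } else {
        n->async_todo--;
        p->dispatched++;
    }
}
```

The model makes the consequence visible: one-way calls to the same binder entity are delivered one at a time, and the next one is released only when the receiver frees the buffer of the previous one.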