preface:

Two months ago, I wrote the complete steps of TextCNN (less than 60 lines of code), but did not take into account the subsequent engineering deployment and large amount of data (unable to load all into memory), so today I made a transformation and optimization according to the actual case.

The operation steps of TextCNN can generally be divided into the following steps:

1. Data sorting: the text in daily work may not directly give you a csv file like the game, and you may need to integrate it yourself; In addition, textcnn does not recognize classified variables (such as Shanghai, Beijing, etc.) during training and prediction, so it must pass map or label_ The encoder method is modified, and the map is updated after the final sample prediction_ Reverse back.

2. Build Thesaurus: tokenizer.fit_on_texts, this step is very important. If the training accuracy is always in single digits, please go to this one to check carefully;

3. Making tf data set: if there are too many text and memory can't fit, it's recommended to go to batch (32 or 64). But it should be noted that if your train_data and valid_data is made into dataset, so test_data must also be made into a dataset. Although there is no label at present, it can be virtualized into 0;

4. Build TextCNN network: there's nothing to say about this, whether it's [2,3,4] or [3,4,5];

5. Setting weight weight: in classification tasks, most of them are unbalanced, especially multi classification, so setting weight weight is still necessary;

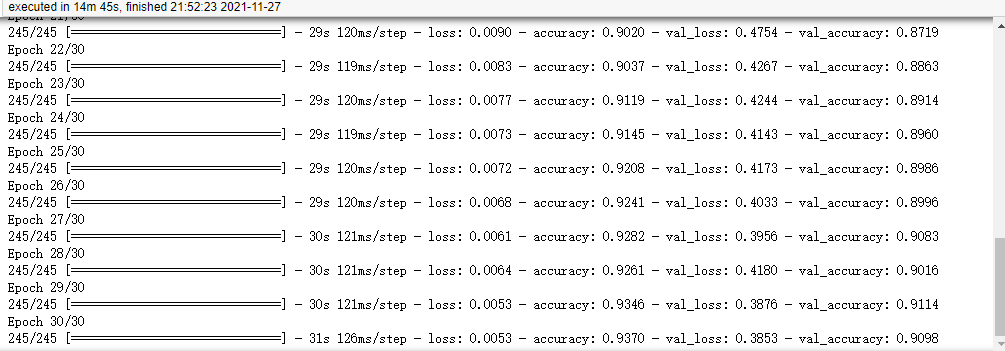

6. Training model: adjustable hyperparametric learning_rate (3e-4 recommended), epochs (30-40 recommended, early stop will be set anyway), optimizer (Adam is good), EARLY_STOP_PATIENCE (early stop times, 3 times);

7. Model solidification: model.save('.. / model/text_cnn.h5') can be directly used in tensorflow 2, which will not be demonstrated in this article;

8. Model loading: textcnn_model = tf.keras.models.load_model(‘service/model/text_cnn.h5’);

9. Sample forecast: text_cnn_model.predict(test_dataset). Note that the result is a floating-point number of 0-1. You need to select the correct label through np.argmax(predictions, axis=-1);

The specific codes are as follows:

1, Import data

import os import pandas as pd import numpy as np import tensorflow as tf from sklearn.utils import resample from sklearn.model_selection import train_test_split

#Training data import

train_type_list = []

train_text_list = []

train_dir_name_list = os.listdir('./train/')

train_dir_name_list.remove('.DS_Store')

for dir_name in train_dir_name_list:

for file in os.listdir('./train/'+dir_name+'/'):

train_type_list.append(dir_name.split('-')[1])

train_text_list.append(open('./train/'+str(dir_name)+'/'+str(file),'r',encoding='gb18030',errors='ignore').read().replace('\n', ' ').replace('\u3000', ''))

print(len(train_type_list))

#Label dictionary

cls_num = len(set(train_type_list))

cls_dict = {}

for k,v in enumerate(set(train_type_list)):

cls_dict[k] = v

cls_dict_reverse = {v:k for k,v in cls_dict.items()}

train_data = pd.DataFrame({'text':train_text_list,'target':train_type_list})

train_data['target'] = train_data['target'].map(cls_dict_reverse)

train_data = resample(train_data)

train_data.head()

#Forecast data import

test_text_list = []

test_filename = []

for file in os.listdir('./test'):

test_filename.append(file)

test_text_list.append(open('./test/'+file,'r', encoding='gb18030', errors='ignore').read().replace('\n',' '))

test_data = pd.DataFrame({'text':test_text_list, 'filename':test_filename})

test_data['target']=0

2, TF data preparation

X_train, X_val, y_train, y_val = train_test_split(train_data['text'], train_data['target'], test_size=0.1, random_state=27) #tokenizer NUM_LABEL = cls_num #Number of categories BATCH_SIZE = 32 MAX_LEN = 200 #Longest sequence length BUFFER_SIZE = tf.constant(train_data.shape[0], dtype=tf.int64) tokenizer = tf.keras.preprocessing.text.Tokenizer(char_level=True) tokenizer.fit_on_texts(X_train)

def build_tf_dataset(text, label, is_train=False):

'''make tf data set'''

sequence = tokenizer.texts_to_sequences(text)

sequence_padded = tf.keras.preprocessing.sequence.pad_sequences(sequence,padding='post',maxlen=MAX_LEN)

dataset = tf.data.Dataset.from_tensor_slices((sequence_padded, label))

if is_train:

dataset = dataset.shuffle(BUFFER_SIZE)

dataset = dataset.batch(BATCH_SIZE)

dataset = dataset.prefetch(BUFFER_SIZE)

else:

dataset = dataset.batch(BATCH_SIZE)

dataset = dataset.prefetch(BATCH_SIZE)

return dataset

train_dataset = build_tf_dataset(X_train, y_train, is_train=True) val_dataset = build_tf_dataset(X_val, y_val, is_train=False) test_dataset = build_tf_dataset(test_data['text'], test_data['target'], is_train=False)

3, Building TextCNN network

VOCAB_SIZE = len(tokenizer.index_word) + 1 print(VOCAB_SIZE) EMBEDDING_DIM = 100 FILTERS = [3, 4, 5] NUM_FILTERS = 128 #Size of convolution kernel DENSE_DIM = 256 #Full connection layer size CLASS_NUM = 20 #Number of categories DROPOUT_RATE = 0.5 #dropout scale

def build_text_cnn_model():

inputs = tf.keras.Input(shape=(None,))

embed = tf.keras.layers.Embedding(

input_dim=VOCAB_SIZE,

output_dim=EMBEDDING_DIM,

trainable=True,

mask_zero=True)(inputs)

embed = tf.keras.layers.Dropout(DROPOUT_RATE)(embed)

pool_outputs = []

for filter_size in FILTERS:

conv = tf.keras.layers.Conv1D(NUM_FILTERS,

filter_size,

padding='same',

activation='relu',

data_format='channels_last',

use_bias=True)(embed)

max_pool = tf.keras.layers.GlobalMaxPooling1D(data_format='channels_last')(conv)

pool_outputs.append(max_pool)

outputs = tf.keras.layers.concatenate(pool_outputs, axis=-1)

outputs = tf.keras.layers.Dense(DENSE_DIM, activation='relu')(outputs)

outputs = tf.keras.layers.Dropout(DROPOUT_RATE)(outputs)

outputs = tf.keras.layers.Dense(CLASS_NUM, activation='softmax')(outputs)

model = tf.keras.Model(inputs=inputs, outputs=outputs)

return model

text_cnn_model = build_text_cnn_model()

text_cnn_model.summary()

#Set weight weight

df_weight = train_data['target'].value_counts().sort_index().reset_index()

df_weight['weight'] = df_weight['target'].min() / df_weight['target']

df_weight_dict = {k:v for k,v in zip(df_weight['index'], df_weight['weight'])}

df_weight_dict

4, Start training

LR = 3e-4

EPOCHS = 30

EARLY_STOP_PATIENCE = 2

loss = tf.keras.losses.SparseCategoricalCrossentropy()

optimizer = tf.keras.optimizers.Adam(LR)

text_cnn_model.compile(loss=loss,

optimizer=optimizer,

metrics=['accuracy'])

callback = tf.keras.callbacks.EarlyStopping(monitor='val_accuracy',

patience=EARLY_STOP_PATIENCE,

restore_best_weights=True)

history = text_cnn_model.fit(train_dataset,

epochs=EPOCHS,

callbacks=[callback],

validation_data=val_dataset,

class_weight=df_weight_dict

)

The effect on CPU is not bad, and the accuracy can reach about 90%.

5, Forecast and export results

test_predict = text_cnn_model.predict(test_dataset)

preds = np.argmax(test_predict, axis=-1)

test_data['category'] = preds

test_data['category'] = test_data['category'].map(cls_dict)

test_data[['filename','category']].to_csv('zhanglei.csv', index=False)