For image tasks on custom datasets, the workflow generally consists of the following steps:

- Load data

- Split into training, validation and test sets

- Build a model

- Transfer learning

Most of the effort goes into data preparation and preprocessing. This article uses a fairly general data-processing pipeline and builds three models: a simple CNN, a deeper ResNet, and a transfer-learning model based on VGG19.

You can use this example to quickly build a network and get satisfactory training results.

1. Load data

The dataset is a five-class Pokemon dataset with more than 200 images per class, so it is fairly small.

Original project link:

https://www.pyimagesearch.com/2018/04/16/keras-and-convolutional-neural-networks-cnns/

1.1 Dataset Introduction

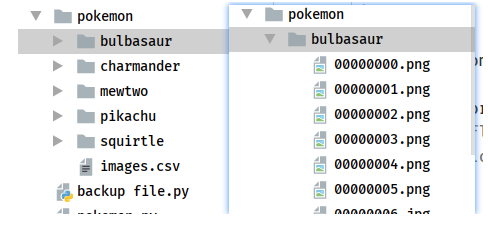

The pokemon folder contains five subfolders, each named after its category. The subfolders contain PNG and JPEG image files.
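The label table that `load_pokemon` builds depends only on this folder layout. Below is a minimal stdlib-only sketch that assumes the layout above. It creates a throwaway directory tree and numbers the subfolders in sorted order, skipping plain files such as the generated `images.csv`. The five class names come from the comment in `load_pokemon`. The helper `build_name2label` and the `00000000.png` placeholder files are hypothetical.

```python
import os
import tempfile


def build_name2label(root):
    """Number the subfolders of root in sorted order; plain files are skipped."""
    name2label = {}
    for name in sorted(os.listdir(root)):
        if os.path.isdir(os.path.join(root, name)):
            name2label[name] = len(name2label)
    return name2label


if __name__ == '__main__':
    with tempfile.TemporaryDirectory() as root:
        # One folder per class, created in arbitrary order
        for cls in ['pikachu', 'bulbasaur', 'squirtle', 'mewtwo', 'charmander']:
            os.makedirs(os.path.join(root, cls))
            # Hypothetical empty placeholder image
            open(os.path.join(root, cls, '00000000.png'), 'wb').close()
        # A plain file next to the class folders, like the generated images.csv
        open(os.path.join(root, 'images.csv'), 'w').close()
        print(build_name2label(root))
```

`os.listdir` returns entries in arbitrary order. Sorting first means the same class always receives the same number.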

1.2 Approach

- The folder is not split into training and test sets, so we first build a CSV file that lists every image file together with its category.

- Shuffle the dataset, then split it into training, validation and test sets.

- Preprocess the data: normalization, data augmentation and visualization.
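The first two steps can be sketched without TensorFlow. This is a minimal stdlib-only illustration, not the author's code. It does three things:

- writes shuffled `(path, label)` rows with the `csv` module;
- reads them back, converting labels from `str` to `int` as `load_csv` does;
- slices the list with the same 60/20/20 bounds as `load_pokemon`.

The helper names and generated file paths are hypothetical, and an in-memory `io.StringIO` stands in for `images.csv`.

```python
import csv
import io
import random


def write_index(paths_by_class, name2label, f, seed=None):
    """Shuffle all (path, label) pairs and write them as CSV rows to f."""
    rows = [(path, name2label[name])
            for name, paths in paths_by_class.items() for path in paths]
    random.Random(seed).shuffle(rows)  # shuffle once, before splitting
    csv.writer(f).writerows(rows)


def read_index(f):
    """Read the rows back; csv returns strings, so labels are converted to int."""
    images, labels = [], []
    for img, label in csv.reader(f):
        images.append(img)
        labels.append(int(label))
    return images, labels


def split(items, mode):
    """60% / 20% / 20% split by position, with the same bounds as load_pokemon."""
    n = len(items)
    if mode == 'train':
        return items[:int(0.6 * n)]
    if mode == 'valid':
        return items[int(0.6 * n):int(0.8 * n)]
    return items[int(0.8 * n):]


if __name__ == '__main__':
    name2label = {'bulbasaur': 0, 'charmander': 1, 'mewtwo': 2, 'pikachu': 3, 'squirtle': 4}
    # Hypothetical file names: 200 per class
    paths = {name: ['pokemon/%s/%08d.png' % (name, i) for i in range(200)]
             for name in name2label}
    buf = io.StringIO(newline='')  # stands in for images.csv
    write_index(paths, name2label, buf, seed=0)
    buf.seek(0)
    images, labels = read_index(buf)
    for mode in ('train', 'valid', 'test'):
        print(mode, len(split(images, mode)))
```

Shuffling before slicing matters. Without it, the files arrive grouped by class, and the training split would contain only the first few classes. Because the original code shuffles once, writes the CSV, and then reuses that file on later runs, every run sees the same split.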

```python
# pokemon.py: build the label encoding table, the CSV index and the input pipeline
import os
import glob
import random
import csv
import time

import tensorflow as tf


def load_csv(root, filename, name2label):
    """
    Convert images scattered across class folders into a CSV file of (image path, label) rows.
    :param root: dataset root path (the files in each subfolder belong to one class)
    :param filename: CSV file name
    :param name2label: class-name encoding table {'class name 1': 0, 'class name 2': 1, ...}
    :return: images, labels
    """
    # Generate the CSV file only if it does not exist yet
    if not os.path.exists(os.path.join(root, filename)):
        # os.path.join only builds the path string; it does not create anything on disk
        images = []  # image file paths
        for name in name2label.keys():
            # e.g. pokemon\pikachu\00000001.png
            # glob.glob() finds files matching a shell-style wildcard pattern
            images += glob.glob(os.path.join(root, name, '*'))  # png, jpg, jpeg
        print(name2label)
        print(len(images), images)

        random.shuffle(images)
        # newline='' stops csv from writing an extra blank line after each row on Windows
        with open(os.path.join(root, filename), 'w', newline='') as f:
            writer = csv.writer(f)
            for img in images:
                # The class name is the parent folder's name ('\' on Windows, '/' on Linux)
                name = os.path.basename(os.path.dirname(img))
                label = name2label[name]  # 0, 1, 2, ...
                # e.g. 'pokemon\\bulbasaur\\00000000.png', 0
                writer.writerow([img, label])
            print('write into csv file:', filename)

    # Read the existing CSV file
    images, labels = [], []
    with open(os.path.join(root, filename), newline='') as f:
        reader = csv.reader(f)
        for row in reader:
            # 'pokemon\\bulbasaur\\00000000.png', 0
            img, label = row
            label = int(label)  # str -> int
            images.append(img)
            labels.append(label)

    assert len(images) == len(labels)
    return images, labels


def load_pokemon(root, mode='train'):
    """
    Build the numeric encoding table and return one split of the dataset.
    :param root: root path
    :param mode: 'train', 'valid' or 'test'
    :return: images, labels, name2label
    """
    # e.g. {'bulbasaur': 0, 'charmander': 1, 'mewtwo': 2, 'pikachu': 3, 'squirtle': 4}
    name2label = {}
    # os.listdir() returns every entry (folders and files) in the path;
    # sorted() keeps the class numbering reproducible
    for name in sorted(os.listdir(root)):
        # Skip anything that is not a folder (e.g. images.csv)
        if not os.path.isdir(os.path.join(root, name)):
            continue
        # Give each class name a number
        name2label[name] = len(name2label)

    # Read image paths and labels
    images, labels = load_csv(root, 'images.csv', name2label)

    # Split the dataset 60% / 20% / 20% (len(images) == len(labels))
    n = len(images)
    if mode == 'train':
        images, labels = images[:int(0.6 * n)], labels[:int(0.6 * n)]
    elif mode == 'valid':
        images, labels = images[int(0.6 * n):int(0.8 * n)], labels[int(0.6 * n):int(0.8 * n)]
    else:  # 'test'
        images, labels = images[int(0.8 * n):], labels[int(0.8 * n):]
    return images, labels, name2label


# ImageNet per-channel mean and standard deviation (R, G, B)
img_mean = tf.constant([0.485, 0.456, 0.406])
img_std = tf.constant([0.229, 0.224, 0.225])


def normalization(x, mean=img_mean, std=img_std):
    # x: [224, 224, 3]; broadcasting applies the statistics per channel
    x = (x - mean) / std
    return x


def denormalization(x, mean=img_mean, std=img_std):
    x = x * std + mean
    return x


def preprocess(x, y):
    # x: image path, y: label
    x = tf.io.read_file(x)  # raw bytes
    # x = tf.image.decode_image(x)
    x = tf.image.decode_jpeg(x, channels=3)  # force 3 channels (RGB)
    x = tf.image.resize(x, [244, 244])  # returns float32 in [0, 255]

    # Data augmentation
    # x = tf.image.random_flip_up_down(x)
    x = tf.image.random_flip_left_right(x)
    # Randomly crop back to 224x224; do not crop away too much, or training gets harder
    x = tf.image.random_crop(x, [224, 224, 3])

    x = tf.cast(x, dtype=tf.float32) / 255.  # [0, 255] -> [0, 1]
    # Standardize each channel (roughly N(0, 1)), which helps training
    x = normalization(x)
    y = tf.convert_to_tensor(y)
    return x, y


def main():
    images, labels, name2label = load_pokemon('pokemon', 'train')
    print('images:', len(images), images)
    print('labels:', len(labels), labels)
    # print(name2label)

    # .map() must come before .batch(): preprocess() reads one file path at a time,
    # so mapping it over a batch of paths would make tf.io.read_file() fail
    db = tf.data.Dataset.from_tensor_slices((images, labels)).map(preprocess).shuffle(1000).batch(32)

    # tf.summary writes data produced during training (loss values, accuracy, variables,
    # images, ...) as log files in the given folder. TensorBoard reads these logs and draws
    # line charts, histograms, image grids and so on. Because the logs are updated
    # incrementally, TensorBoard can show them while training is still running.
    writer = tf.summary.create_file_writer('logs')
    for step, (x, y) in enumerate(db):
        with writer.as_default():
            x = denormalization(x)
            # step is the value on the horizontal axis (by default the training iteration)
            tf.summary.image('img', x, step=step, max_outputs=9)
        time.sleep(5)  # pause between batches to watch the images appear in TensorBoard


if __name__ == '__main__':
    main()
```

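`normalization` and `denormalization` are a per-channel affine map and its exact inverse. Broadcasting applies the three ImageNet means and standard deviations to the R, G and B channels. The pure-Python sketch below does the same for a single made-up pixel. Denormalizing before `tf.summary.image` matters because that function expects float images in [0, 1] and clips values outside that range.

```python
IMG_MEAN = (0.485, 0.456, 0.406)  # ImageNet per-channel mean (R, G, B)
IMG_STD = (0.229, 0.224, 0.225)   # ImageNet per-channel standard deviation


def normalize_pixel(rgb, mean=IMG_MEAN, std=IMG_STD):
    """(x - mean) / std for each channel of a pixel with values in [0, 1]."""
    return tuple((c - m) / s for c, m, s in zip(rgb, mean, std))


def denormalize_pixel(z, mean=IMG_MEAN, std=IMG_STD):
    """Inverse map: z * std + mean for each channel."""
    return tuple(c * s + m for c, m, s in zip(z, mean, std))


# A made-up uint8 RGB pixel, scaled to [0, 1] as in preprocess()
pixel = tuple(v / 255.0 for v in (255, 128, 0))
z = normalize_pixel(pixel)
restored = denormalize_pixel(z)
print('normalized:', [round(v, 4) for v in z])
print('restored:  ', [round(v, 4) for v in restored])
```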
2. Build and train models

2.1 Custom Small Networks

Because the dataset is small, large networks tend to overfit during training. Here we define a small network with two convolutional layers.

With an `EarlyStopping` callback, training stops once `val_loss` has not decreased by more than 0.001 for three consecutive epochs. The resulting model reaches an accuracy of 0.8547.
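The stopping rule can be illustrated with a simplified pure-Python re-implementation. It shows the idea only and is not Keras's source. An epoch counts as an improvement only if `val_loss` falls more than `min_delta` below the best value so far. Training stops after `patience` epochs in a row without improvement. The loss values in the example are made up.

```python
import math


def early_stopping_epoch(val_losses, patience=3, min_delta=0.001):
    """Return (stop_epoch, best_loss).

    stop_epoch is the 0-based epoch after which training would stop,
    or None if no stop is triggered.
    """
    best = math.inf
    wait = 0  # consecutive epochs without improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best
    return None, best


# Made-up validation losses: progress stalls after epoch 1
print(early_stopping_epoch([1.0, 0.8, 0.7995, 0.7996, 0.7993, 0.5]))
```

By default, Keras's `EarlyStopping` keeps the weights from the last epoch it ran. Pass `restore_best_weights=True` to roll back to the best epoch instead.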
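
```python
# 1. Custom small network
# db_train, db_val and db_test are built in Train_Scratch.py (see the appendix)
from tensorflow import keras
from tensorflow.keras import layers, optimizers, losses
from tensorflow.keras.callbacks import EarlyStopping

model = keras.Sequential([
    layers.Conv2D(16, 5, 3),   # 16 filters, 5x5 kernel, stride 3
    layers.MaxPool2D(3, 3),    # 3x3 window, stride 3
    layers.ReLU(),
    layers.Conv2D(64, 5, 3),
    layers.MaxPool2D(2, 2),
    layers.ReLU(),
    layers.Flatten(),
    layers.Dense(64),
    layers.ReLU(),
    layers.Dense(5),           # logits for the 5 classes
])
model.build(input_shape=(None, 224, 224, 3))
model.summary()

# Stop when val_loss has not improved by more than 0.001 for 3 epochs
early_stopping = EarlyStopping(monitor='val_loss', patience=3, min_delta=0.001)
model.compile(optimizer=optimizers.Adam(learning_rate=1e-3),
              loss=losses.CategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])
model.fit(db_train, validation_data=db_val, validation_freq=1,
          epochs=100, callbacks=[early_stopping])
model.evaluate(db_test)
```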

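Keras convolution and pooling layers default to `padding='valid'`. A window of size k and stride s therefore shrinks a side of length n to floor((n - k) / s) + 1. The stdlib-only arithmetic below traces the 224x224 input through the small network above and counts its parameters by hand. It is a cross-check for `model.summary()`, not its output. Most of the parameters sit in the first `Dense(64)` layer, which sees 576 flattened features.

```python
def valid_out(n, kernel, stride):
    """Side length after a 'valid' convolution or pooling window."""
    return (n - kernel) // stride + 1


def conv_params(kernel, c_in, c_out):
    """Weights plus biases of a Conv2D layer with a square kernel."""
    return kernel * kernel * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Weights plus biases of a Dense layer."""
    return n_in * n_out + n_out


# (kernel, stride) of Conv2D(16, 5, 3), MaxPool2D(3, 3), Conv2D(64, 5, 3), MaxPool2D(2, 2)
sizes = [224]
for kernel, stride in [(5, 3), (3, 3), (5, 3), (2, 2)]:
    sizes.append(valid_out(sizes[-1], kernel, stride))

flat = sizes[-1] * sizes[-1] * 64  # Flatten() of the final 64-channel map
total = (conv_params(5, 3, 16) + conv_params(5, 16, 64)
         + dense_params(flat, 64) + dense_params(64, 5))
print('side lengths:', sizes)
print('flattened features:', flat)
print('trainable parameters:', total)
```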
2.2 Custom ResNet

ResNet greatly improves the trainability of deep networks. Its identity shortcut connections let a block pass its input straight through, so in principle a deeper network should perform no worse than a shallower one.

ResNet is covered in detail elsewhere, so we skip the details here and build a ResNet-18-style network, which reaches an accuracy of 0.7607.
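In the `ResnetBlock` code below, the residual branch applies convolution, ReLU and batch normalization twice. The shortcut is the identity, unless the block downsamples; in that case it is a strided 1x1 convolution $W_d$. As equations, with $W_1, W_2$ the two 3x3 convolutions and $*$ denoting convolution:

$$
\begin{aligned}
F(x) &= \mathrm{BN}_2\Big(\mathrm{ReLU}\big(W_2 * \mathrm{BN}_1(\mathrm{ReLU}(W_1 * x))\big)\Big),\\
s(x) &= \begin{cases}
x, & \text{stride} = 1,\\
\mathrm{BN}_d\big(\mathrm{ReLU}(W_d * x)\big), & \text{stride} \neq 1,
\end{cases}\\
y &= \mathrm{ReLU}\big(F(x) + s(x)\big).
\end{aligned}
$$

Every block receives input that has already passed through a ReLU, so $x \ge 0$. Suppose the residual branch outputs $F(x) = 0$, for instance when $\mathrm{BN}_2$ learns a zero scale and shift. A stride-1 block then returns $y = \mathrm{ReLU}(x) = x$. This is the sense in which extra blocks need not make the network worse.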

```python
# resnet.py
import os

# Hide TensorFlow's C++ INFO and WARNING logs; must be set before TensorFlow is imported
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import numpy as np
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

tf.random.set_seed(22)
np.random.seed(22)
assert tf.__version__.startswith('2.')


class ResnetBlock(keras.Model):
    def __init__(self, channels, strides=1):
        super().__init__()
        self.channels = channels
        self.strides = strides

        # Two 3x3 convolutions; padding='same' gives ceil(n / strides) outputs per side
        self.conv1 = layers.Conv2D(channels, 3, strides=strides, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.conv2 = layers.Conv2D(channels, 3, strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()

        # A downsampling block needs a 1x1 convolution so the shortcut matches the new shape
        if strides != 1:
            self.down_conv = layers.Conv2D(channels, 1, strides=strides, padding='valid')
            self.down_bn = layers.BatchNormalization()

    def call(self, inputs, training=None):
        residual = inputs

        x = self.conv1(inputs)
        x = tf.nn.relu(x)
        x = self.bn1(x, training=training)
        x = self.conv2(x)
        x = tf.nn.relu(x)
        x = self.bn2(x, training=training)

        # Residual (shortcut) connection
        if self.strides != 1:
            residual = self.down_conv(inputs)
            residual = tf.nn.relu(residual)
            residual = self.down_bn(residual, training=training)

        x = x + residual
        x = tf.nn.relu(x)
        return x


class ResNet(keras.Model):
    def __init__(self, num_classes, initial_filters=16, **kwargs):
        super().__init__(**kwargs)
        self.stem = layers.Conv2D(initial_filters, 3, strides=3, padding='valid')
        self.blocks = keras.Sequential([
            ResnetBlock(initial_filters * 2, strides=3),
            ResnetBlock(initial_filters * 2, strides=1),
            # layers.Dropout(rate=0.5),
            ResnetBlock(initial_filters * 4, strides=3),
            ResnetBlock(initial_filters * 4, strides=1),
            ResnetBlock(initial_filters * 8, strides=2),
            ResnetBlock(initial_filters * 8, strides=1),
            ResnetBlock(initial_filters * 16, strides=2),
            ResnetBlock(initial_filters * 16, strides=1),
        ])
        self.final_bn = layers.BatchNormalization()
        self.global_pool = layers.GlobalMaxPool2D()  # global max pooling over height and width
        self.fc = layers.Dense(num_classes)

    def call(self, inputs, training=None):
        # Shapes below assume a 224x224 input and initial_filters=16
        out = self.stem(inputs)                     # [b, 224, 224, 3] -> [b, 74, 74, 16]
        out = tf.nn.relu(out)
        out = self.blocks(out, training=training)   # -> [b, 3, 3, 256]
        out = self.final_bn(out, training=training)
        # out = tf.nn.relu(out)
        out = self.global_pool(out)                 # -> [b, 256]
        out = self.fc(out)                          # -> [b, num_classes] logits
        return out


def main():
    num_classes = 5
    resnet18 = ResNet(num_classes)
    resnet18.build(input_shape=(None, 224, 224, 3))
    resnet18.summary()


if __name__ == '__main__':
    main()
```

```python
# 2. ResNet-18 trained from scratch; with so few images the result is only moderate.
#    Swap this in for the model in Train_Scratch.py (see the appendix).
from resnet import ResNet

resnet = ResNet(5)  # accuracy: 0.7607
resnet.build(input_shape=(None, 224, 224, 3))
resnet.summary()
```

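As a cross-check of the `ResNet` defined above, the stdlib-only sketch below traces the side length of the feature maps for a 224x224 input. It assumes each block's convolutions produce ceil(n / stride) outputs per side. That holds for `padding='same'` and also for one pixel of padding on each side of a 3x3 kernel. The 1x1 `padding='valid'` shortcut gives floor((n - 1) / stride) + 1, which is the same value, so the two branches can be added. The last block yields a 3x3x256 map, and `GlobalMaxPool2D` reduces it to a 256-dimensional vector before the final `Dense` layer. These numbers are hand-derived, not printed by Keras.

```python
import math


def valid_out(n, kernel, stride):
    """Side length after a 'valid' convolution: floor((n - kernel) / stride) + 1."""
    return (n - kernel) // stride + 1


def same_out(n, stride):
    """Side length after a 'same' convolution: ceil(n / stride)."""
    return math.ceil(n / stride)


initial_filters = 16
# Stem: Conv2D(initial_filters, 3, strides=3, padding='valid') on a 224x224 input
size = valid_out(224, 3, 3)
trace = [('stem', size, initial_filters)]

# (channel multiplier, stride) of the eight ResnetBlocks, in order
blocks = [(2, 3), (2, 1), (4, 3), (4, 1), (8, 2), (8, 1), (16, 2), (16, 1)]
for i, (mult, stride) in enumerate(blocks, start=1):
    size = same_out(size, stride)
    trace.append(('block%d' % i, size, initial_filters * mult))

for name, side, channels in trace:
    print('%s: %dx%dx%d' % (name, side, side, channels))
```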
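2.3 VGG19 Transfer Learning

Transfer learning exploits the similarity between datasets: features learned on a large dataset (here ImageNet) are reused for the new task. When the dataset is small, this gives much better results than the other two models, which are trained from scratch.

`include_top=False` removes VGG19's fully connected classification layers. A new `Dense(5)` layer is added on top and trained, while `net.trainable = False` keeps the pretrained convolutional base frozen. Accuracy: 0.9316.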
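Because `net.trainable = False` freezes the pretrained convolutional base, the code below updates only the new `Dense(5)` head. Counting parameters by hand shows how large the difference is. This stdlib-only sketch assumes VGG19's standard layout of sixteen 3x3 convolutions (2, 2, 4, 4 and 4 per block). It also assumes the 512-dimensional vector that `pooling='max'` produces. The totals should agree with the non-trainable and trainable counts reported by `resnet.summary()`, but they are computed here, not taken from Keras output.

```python
def conv3x3_params(c_in, c_out):
    """Weights plus biases of a 3x3 Conv2D layer."""
    return 3 * 3 * c_in * c_out + c_out


# Output channels of VGG19's 16 convolutional layers, grouped by block
vgg19_blocks = [[64] * 2, [128] * 2, [256] * 4, [512] * 4, [512] * 4]

base_params = 0
c_in = 3  # RGB input
for block in vgg19_blocks:
    for c_out in block:
        base_params += conv3x3_params(c_in, c_out)
        c_in = c_out

# pooling='max' turns the final 512-channel map into a 512-d vector
head_params = 512 * 5 + 5  # Dense(5): weights plus biases

print('frozen VGG19 base:', base_params)
print('trainable Dense(5) head:', head_params)
print('trainable share: %.4f%%' % (100 * head_params / (base_params + head_params)))
```

About 20 million pretrained weights are reused unchanged, and only a few thousand are fitted to the Pokemon images.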
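
```python
# 3. VGG19 transfer learning exploits the similarity between datasets
#    and gives much better results than the other two models
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, optimizers, losses
from tensorflow.keras.callbacks import EarlyStopping

# The variable is still called resnet only for convenience (same training code as before)
net = tf.keras.applications.VGG19(weights='imagenet', include_top=False, pooling='max')
net.trainable = False  # freeze the pretrained convolutional base

resnet = keras.Sequential([
    net,
    layers.Dense(5),  # new classification head for the 5 classes
])
resnet.build(input_shape=(None, 224, 224, 3))  # accuracy: 0.9316
resnet.summary()

# db_train, db_val and db_test are built in Train_Scratch.py (see the appendix)
early_stopping = EarlyStopping(monitor='val_loss', patience=3, min_delta=0.001)
resnet.compile(optimizer=optimizers.Adam(learning_rate=1e-3),
               loss=losses.CategoricalCrossentropy(from_logits=True),
               metrics=['accuracy'])
resnet.fit(db_train, validation_data=db_val, validation_freq=1,
           epochs=100, callbacks=[early_stopping])
resnet.evaluate(db_test)
```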

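All three models end in a `Dense(5)` layer with no activation, so they output raw scores (logits). `preprocess` in Train_Scratch.py (see the appendix) one-hot encodes the labels with `tf.one_hot(y, depth=5)`. That is why the loss is `CategoricalCrossentropy(from_logits=True)`, which applies the softmax inside the loss. The pure-Python sketch below computes that loss for a single image with made-up logits. It uses the log-sum-exp trick for numerical stability. By default, Keras averages the per-image values over the batch.

```python
import math


def one_hot(label, depth=5):
    """Pure-Python counterpart of tf.one_hot for a single integer label."""
    return [1.0 if i == label else 0.0 for i in range(depth)]


def categorical_crossentropy_from_logits(target, logits):
    """-sum(target_i * log(softmax(logits)_i)), computed via log-sum-exp."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(t * (z - log_sum_exp) for t, z in zip(target, logits))


logits = [2.0, 1.0, 0.1, -1.0, 0.5]  # made-up Dense(5) outputs for one image
loss = categorical_crossentropy_from_logits(one_hot(0), logits)
print(round(loss, 4))
```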
Appendix:

Train_Scratch.py code

```python
# Train_Scratch.py
import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import numpy as np
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, optimizers, losses
from tensorflow.keras.callbacks import EarlyStopping

from pokemon import load_pokemon, normalization
from resnet import ResNet

tf.random.set_seed(22)
np.random.seed(22)
assert tf.__version__.startswith('2.')

# Allocate GPU memory on demand
# gpus = tf.config.experimental.list_physical_devices('GPU')
# if gpus:
#     try:
#         # Currently, memory growth needs to be the same across GPUs
#         for gpu in gpus:
#             tf.config.experimental.set_memory_growth(gpu, True)
#         logical_gpus = tf.config.experimental.list_logical_devices('GPU')
#         print(len(gpus), "Physical GPUs,", len(logical_gpus), "Logical GPUs")
#     except RuntimeError as e:
#         # Memory growth must be set before GPUs have been initialized
#         print(e)


def preprocess(x, y):
    # x: image path, y: numeric label of the image
    x = tf.io.read_file(x)
    x = tf.image.decode_jpeg(x, channels=3)  # force 3 channels (RGB)
    # Resize the image
    # x = tf.image.resize(x, [244, 244])
    # Rotate the image
    # x = tf.image.rot90(x, 2)
    # Random horizontal flip
    x = tf.image.random_flip_left_right(x)
    # Random vertical flip
    # x = tf.image.random_flip_up_down(x)
    # First resize to a slightly larger size...
    x = tf.image.resize(x, [244, 244])
    # ...then randomly crop back to the input size
    x = tf.image.random_crop(x, [224, 224, 3])
    # [0, 255] -> [0, 1], then per-channel standardization
    x = tf.cast(x, dtype=tf.float32) / 255.
    x = normalization(x)
    y = tf.convert_to_tensor(y)
    y = tf.one_hot(y, depth=5)  # one-hot labels for CategoricalCrossentropy
    return x, y


batchsz = 32

# Training set
images1, labels1, table = load_pokemon('pokemon', 'train')
db_train = tf.data.Dataset.from_tensor_slices((images1, labels1))
db_train = db_train.shuffle(1000).map(preprocess).batch(batchsz)

# Validation set
images2, labels2, table = load_pokemon('pokemon', 'valid')
db_val = tf.data.Dataset.from_tensor_slices((images2, labels2))
db_val = db_val.map(preprocess).batch(batchsz)

# Test set
images3, labels3, table = load_pokemon('pokemon', mode='test')
db_test = tf.data.Dataset.from_tensor_slices((images3, labels3))
db_test = db_test.map(preprocess).batch(batchsz)

# 1. Custom small network
# resnet = keras.Sequential([
#     layers.Conv2D(16, 5, 3),
#     layers.MaxPool2D(3, 3),
#     layers.ReLU(),
#     layers.Conv2D(64, 5, 3),
#     layers.MaxPool2D(2, 2),
#     layers.ReLU(),
#     layers.Flatten(),
#     layers.Dense(64),
#     layers.ReLU(),
#     layers.Dense(5)
# ])  # 0.8547

# 2. ResNet-18: with so few images the result is only moderate
# resnet = ResNet(5)  # 0.7607
# resnet.build(input_shape=(None, 224, 224, 3))
# resnet.summary()

# 3. VGG19 transfer learning exploits the similarity between datasets
#    and gives much better results than the other two models
net = tf.keras.applications.VGG19(weights='imagenet', include_top=False, pooling='max')
net.trainable = False
resnet = keras.Sequential([
    net,
    layers.Dense(5),
])
resnet.build(input_shape=(None, 224, 224, 3))  # 0.9316
resnet.summary()

early_stopping = EarlyStopping(monitor='val_loss', patience=3, min_delta=0.001)
resnet.compile(optimizer=optimizers.Adam(learning_rate=1e-3),
               loss=losses.CategoricalCrossentropy(from_logits=True),
               metrics=['accuracy'])
resnet.fit(db_train, validation_data=db_val, validation_freq=1,
           epochs=100, callbacks=[early_stopping])
resnet.evaluate(db_test)
```