I am an amateur programmer who has recently been looking into artificial intelligence, starting with image search. I have no professional training, so my terminology may be imprecise. The code in this series comes from what I found by searching the Internet. My approach is to get something working first and study the principles carefully afterwards. I hope more people will join the discussion, and please bear with my level.

Explanation

This article assumes tensorflow and vgg16 are already installed. If the installation is unclear, let me know and I will write a separate setup article:

- Computer configuration: my personal machine is a Dell D630 with a dual-core CPU, 5 GB of memory, and 64-bit Windows 7;

- Tensorflow version: because the computer is very old, I am not using the latest tensorflow. Installing the latest version failed with an error, so I rolled back. Install whichever tensorflow version suits your own machine and deal with problems as they come up;

- Other packages: the code may depend on other packages. Run it, follow the error messages, and install whatever is missing;

- Other problems: I previously ran this on Ubuntu but found it a little inconvenient and went back to Windows. The code itself is unaffected; only the file paths inside it need to be changed.
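Instead of waiting for each import error in turn, the missing packages can be listed up front. This is only a minimal sketch: the helper name `find_missing` and the `REQUIRED` list are my own, built from the import names in the extraction script. `vgg16` and `utils` are left out because they appear to be local files next to the script rather than installable packages, which is an assumption.

```python
import importlib.util


def find_missing(modules):
    """Return the top-level module names from `modules` that cannot be found."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


# Import names used by the extraction script in this article (cv2 is OpenCV).
REQUIRED = ["tensorflow", "numpy", "scipy", "cv2"]

missing = find_missing(REQUIRED)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages were found.")
```

Note that an import name is not always the name you install: `cv2`, for example, is usually installed as `opencv-python`.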

Search logic

Working backwards: given a picture, we first need to generate an identifier (that is, a feature) for it, and then compare that identifier against a large library of identifiers to find the ones similar to it. The comparison returns a set of candidate results, which are then sorted and displayed. So the essence of image search comes down to:

1. Convert the picture into a digital identifier (feature).

2. Searching for pictures is actually a process of comparing identifiers (features).

The next step is to turn each picture into an identifier, that is, to extract its features and save them.
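The two steps above can be sketched with plain NumPy. This is a toy illustration, not the code used in this series: the 4-dimensional vectors stand in for real 4096-dimensional features, and the function names `normalize` and `search` are made up for the example. It assumes features are normalized to unit length, so that the dot product of two features equals their cosine similarity.

```python
import numpy as np


def normalize(vec):
    """Scale a feature vector to unit L2 length."""
    return vec / np.linalg.norm(vec)


def search(query_feat, library_feats, top_k=3):
    """Return (row index, score) pairs for the top_k most similar library rows.

    Both inputs are assumed to be L2-normalized, so the dot product
    is the cosine similarity.
    """
    scores = library_feats.dot(query_feat)
    order = np.argsort(scores)[::-1][:top_k]  # highest score first
    return [(int(i), float(scores[i])) for i in order]


# Toy feature library: one row per picture.
library = np.array([
    normalize(np.array(v, dtype=np.float64))
    for v in [[1, 0, 0, 0], [0, 1, 0, 0], [1, 1, 0, 0]]
])

# Toy query feature, closest in direction to row 0.
query = normalize(np.array([0.9, 0.1, 0.0, 0.0]))

results = search(query, library, top_k=2)
for index, score in results:
    print("picture %d, similarity %.4f" % (index, score))
```

Sorting by score gives the ranking to display; row 0 comes first here because it points in nearly the same direction as the query.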

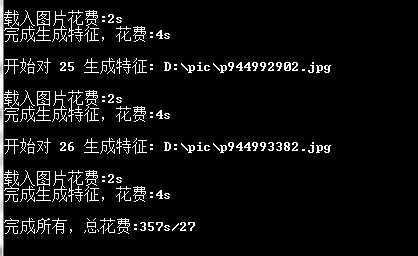

Feature Extraction of Folder Library

    # -*- coding: utf-8 -*-
    import os
    import time

    import numpy as np
    import tensorflow as tf
    from scipy.linalg import norm

    import utils
    import vgg16

    images = tf.placeholder(dtype=tf.float32, shape=[None, 224, 224, 3])
    vgg = vgg16.Vgg16()
    vgg.build(images)


    def get_feats_dirs(imagefold):
        # One row per picture: 27 is the number of pictures in my folder,
        # 4096 is the length of the fc6 feature.
        vgg16_feats = np.zeros((27, 4096))
        dict1 = {}  # row index -> image path
        for root, dirs, files in os.walk(imagefold):
            with tf.Session() as sess:
                # time.time() is used because time.clock() was removed in Python 3.8
                start00 = time.time()
                for i in range(len(files)):
                    imagePath = os.path.join(root, files[i])  # build the picture path
                    start1 = time.time()
                    print("Start generating features for image %d: %s\n" % (i, imagePath))
                    img_list = utils.load_image(imagePath)  # load the picture
                    print("Cost of loading picture: %ds" % (time.time() - start1))
                    # Reshape the picture into the input format vgg16 expects
                    batch = img_list.reshape((1, 224, 224, 3))
                    # Feed the picture and extract the features of the fc6 layer
                    feature = sess.run(vgg.fc6, feed_dict={images: batch})
                    feature = np.reshape(feature, [4096])
                    feature /= norm(feature)  # feature normalization
                    vgg16_feats[i, :] = feature  # each picture's feature vector is one row
                    dict1.update({i: imagePath})
                    print("Complete feature generation, cost: %ds\n" % (time.time() - start1))
                print("Complete all, total cost: %ds/%d\n" % (time.time() - start00, len(dict1)))
        # Save all features; np.save returns None, so keep the array itself to return
        np.save(r'd:\config\feats', vgg16_feats)
        return vgg16_feats


    if __name__ == '__main__':
        get_feats_dirs("D:\\pic")
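A small round trip shows how the saved feature file behaves. `np.save` returns `None` and appends `.npy` to the file name when the name does not already end in it, so the matrix has to be read back from `feats.npy`. The sketch below uses random vectors and a temporary folder instead of real features and `d:\config`. Saving the picture paths next to the features is my own addition (the file name `paths.json` and the sample paths are hypothetical), so that a row index can later be mapped back to a picture.

```python
import json
import os
import tempfile

import numpy as np

# Random stand-ins for three fc6 features, normalized row by row.
feats = np.random.rand(3, 4096)
feats /= np.linalg.norm(feats, axis=1, keepdims=True)

# Hypothetical picture paths; row i of feats belongs to paths[i].
paths = ["D:\\pic\\a.jpg", "D:\\pic\\b.jpg", "D:\\pic\\c.jpg"]

out_dir = tempfile.mkdtemp()
base_path = os.path.join(out_dir, "feats")

ret = np.save(base_path, feats)  # returns None and writes base_path + ".npy"
with open(os.path.join(out_dir, "paths.json"), "w") as f:
    json.dump(paths, f)

# Read both files back, as a search script would.
loaded = np.load(base_path + ".npy")
with open(os.path.join(out_dir, "paths.json")) as f:
    loaded_paths = json.load(f)

print(ret, loaded.shape, loaded_paths[0])
```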

Remaining problems

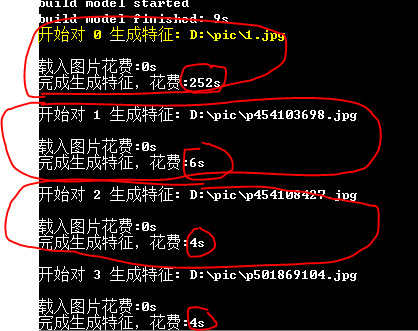

The time to generate features for the first image is extraordinarily long; after that it drops and then stabilizes. I don't know why. The model is already loaded before the program calls the method, so what is the reason? Is there a way to make the first image take about the same time as the later ones? Advice is welcome.
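One possible explanation, which I have not measured on this setup, is that the first `sess.run` call pays one-off costs (setting up the graph for execution, allocating memory, touching the model weights for the first time) that later calls do not. If that is the cause, a common workaround is a throwaway warm-up call before the timed loop. The sketch below only simulates the effect: `FakeExtractor` is a made-up stand-in for the real feature extraction, with sleeps in place of real work.

```python
import time


def timed(fn, *args):
    """Call fn(*args) and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


class FakeExtractor:
    """Stand-in for feature extraction: the first call pays a one-off setup cost."""

    def __init__(self):
        self.ready = False

    def __call__(self, batch):
        if not self.ready:
            time.sleep(0.2)  # simulated one-off initialization
            self.ready = True
        time.sleep(0.01)  # simulated per-image work
        return len(batch)


extract = FakeExtractor()

# Throwaway warm-up call: its cost is paid before any real image is timed.
_, warmup_cost = timed(extract, [0])
_, first_real = timed(extract, [1])
_, second_real = timed(extract, [2])

print("warm-up: %.3fs, first: %.3fs, second: %.3fs"
      % (warmup_cost, first_real, second_real))
```

In the real script the warm-up would be one extra feature extraction on a dummy 224x224x3 batch before the loop starts; whether it actually evens out the timings here would need to be checked by measuring.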