Preface:

This post uses the TensorFlow Object Detection API to build a custom dataset and train a fire detector. For detailed steps and video walkthroughs, see this expert blogger's post:

https://blog.csdn.net/dy_guox/article/details/79111949

Environment:

Windows 7 64-bit

Anaconda 3 + Python 3.5.2 + TensorFlow 1.9.0 (CPU) + TensorFlow Object Detection API

(1) Building the dataset

A: Label the dataset: use the small tool LabelImg to annotate the pictures. It generates an .xml file with the same name for each picture. (The blogger keeps the pictures and their .xml files in the same folder.)

B: Convert the dataset format: TensorFlow has its own data format, and the Object Detection API requires its input in TFRecord format.
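The TFRecord container itself is simple, which helps when checking the .record files produced in this step. As I understand the format, each record is framed as a little-endian uint64 length, a masked CRC-32C of those 8 bytes, the payload, and a masked CRC-32C of the payload. The sketch below writes and reads that framing in pure Python; it treats the payload as opaque bytes (in this workflow they are serialized tf.train.Example protos), assumes the file is uncompressed, and the function names are hypothetical.

```python
import os
import struct
import tempfile


def crc32c(data):
    """Bitwise CRC-32C (Castagnoli, reflected polynomial 0x82F63B78): slow but dependency-free."""
    crc = 0xFFFFFFFF
    for byte in bytearray(data):
        crc ^= byte
        for _ in range(8):
            crc = (crc >> 1) ^ (0x82F63B78 if crc & 1 else 0)
    return crc ^ 0xFFFFFFFF


def masked_crc(data):
    """TFRecord stores a rotated CRC plus a constant instead of the raw CRC."""
    crc = crc32c(data)
    rotated = ((crc >> 15) | (crc << 17)) & 0xFFFFFFFF
    return (rotated + 0xA282EAD8) & 0xFFFFFFFF


def write_records(path, payloads):
    """Frame each payload as: uint64 length, masked CRC of length, data, masked CRC of data."""
    with open(path, 'wb') as f:
        for data in payloads:
            header = struct.pack('<Q', len(data))
            f.write(header + struct.pack('<I', masked_crc(header)))
            f.write(data + struct.pack('<I', masked_crc(data)))


def read_records(path):
    """Return the raw payloads of an uncompressed TFRecord file, verifying both CRCs."""
    records = []
    with open(path, 'rb') as f:
        while True:
            header = f.read(8)
            if not header:
                break
            (length,) = struct.unpack('<Q', header)
            (header_crc,) = struct.unpack('<I', f.read(4))
            if header_crc != masked_crc(header):
                raise ValueError('corrupt length header')
            data = f.read(length)
            (data_crc,) = struct.unpack('<I', f.read(4))
            if data_crc != masked_crc(data):
                raise ValueError('corrupt record payload')
            records.append(data)
    return records


# Round trip with placeholder payloads (real ones would be serialized tf.train.Example bytes).
demo_path = os.path.join(tempfile.mkdtemp(), 'demo.record')
write_records(demo_path, [b'first', b'second'])
print(len(read_records(demo_path)))  # 2
```

For example, len(read_records('data/train.record')) should equal the number of distinct pictures in train_labels.csv, assuming the script writes one example per picture. In TF 1.x, tf.python_io.tf_record_iterator is the TensorFlow-native way to iterate over the same records.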

The blogger wrote two small Python scripts (see the blogger's GitHub): xml_to_csv.py, which collects the annotation information from the XML files in a folder into a .csv table (change the data path and the .csv file name in the script before running it), and generate_tfrecord.py, which creates a TFRecord file from the .csv table.

Note: the filenames recorded in the generated .csv file must match the image files in the dataset. Store the original images in the \models\research\object_detection\images folder (create it if it doesn't exist). The training and test sets must not overlap, so they go in two separate folders; I put both of them inside the images folder. The picture below shows where I, as a beginner, saved my code.

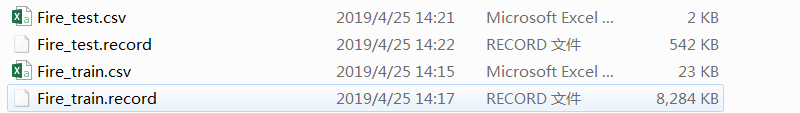

-data/
--test_labels.csv
--test.record
--train_labels.csv
--train.record

-images/
--test/*.jpg
--train/*.jpg
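A minimal sketch of splitting the pictures into two non-overlapping sets for the images/train and images/test folders. The 80/20 ratio, the fixed seed, the helper name, and the file names are assumptions for illustration. Whatever split you use, move each picture's .xml file along with it, then run xml_to_csv.py on each folder separately to get train_labels.csv and test_labels.csv.

```python
import random


def split_train_test(filenames, test_fraction=0.2, seed=0):
    """Shuffle deterministically and cut into two disjoint lists: (train, test)."""
    names = sorted(set(filenames))  # dedupe and fix the order so the seed is reproducible
    random.Random(seed).shuffle(names)
    n_test = int(round(len(names) * test_fraction))
    return names[n_test:], names[:n_test]


images = ['fire_%03d.jpg' % i for i in range(1, 11)]  # hypothetical file names
train, test = split_train_test(images)
print(len(train), len(test))  # 8 2
```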

Store the converted .csv files in the object_detection\data folder. As the blogger suggests, put generate_tfrecord.py in the object_detection\ folder, adapt the code to your own data, and then generate the corresponding .record files.
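Roughly, generate_tfrecord.py groups the .csv rows by filename (one example per picture) and divides each box coordinate by the picture's width or height before writing it. The sketch below mirrors that grouping in plain Python so it can be checked without TensorFlow, and adds a check for the filename mismatch the earlier note warns about. The function names and sample rows are hypothetical, and the column names assume the header that xml_to_csv.py writes.

```python
import csv
import io
import os
import tempfile
from collections import OrderedDict


def group_boxes_by_image(csv_text):
    """Map filename -> list of (class, xmin, ymin, xmax, ymax) with coordinates scaled to 0..1."""
    grouped = OrderedDict()
    for row in csv.DictReader(io.StringIO(csv_text)):
        width, height = float(row['width']), float(row['height'])
        grouped.setdefault(row['filename'], []).append((
            row['class'],
            float(row['xmin']) / width, float(row['ymin']) / height,
            float(row['xmax']) / width, float(row['ymax']) / height,
        ))
    return grouped


def missing_images(grouped, image_dir):
    """CSV filenames that have no matching file in image_dir."""
    return [name for name in grouped
            if not os.path.isfile(os.path.join(image_dir, name))]


SAMPLE_CSV = """filename,width,height,class,xmin,ymin,xmax,ymax
fire_001.jpg,640,480,fire,100,120,300,360
fire_001.jpg,640,480,fire,400,60,560,240
fire_002.jpg,800,600,fire,0,0,400,300
"""

grouped = group_boxes_by_image(SAMPLE_CSV)
print(grouped['fire_001.jpg'][0])  # ('fire', 0.15625, 0.25, 0.46875, 0.75)

image_dir = tempfile.mkdtemp()  # stands in for images/train
open(os.path.join(image_dir, 'fire_001.jpg'), 'wb').close()
print(missing_images(grouped, image_dir))  # ['fire_002.jpg']
```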

The parts of generate_tfrecord.py to change:

# Excerpt from generate_tfrecord.py; the rest of the script stays as it is.
import os
import tensorflow as tf

# Change this to your own object_detection folder (the .csv paths are relative to it)
os.chdir('D:\\Python3.6.1\\TF_models\\models\\research\\object_detection\\')

flags = tf.app.flags
flags.DEFINE_string('csv_input', '', 'Path to the CSV input')
flags.DEFINE_string('output_path', '', 'Path to output TFRecord')
FLAGS = flags.FLAGS

# Change row_label to your own label names, with one branch per category.
# TO-DO replace this with label map
def class_text_to_int(row_label):
    if row_label == 'fire':
        return 1
    # elif row_label == 'vehicle':
    #     return 2
    else:
        return None

Result diagram:

(2) Configuration file and model

(1) Next, set up a configuration file. On the Object Detection GitHub page, find the sample configuration file that matches the model you want to use (the samples are in object_detection/samples/configs) and download it.

Take faster_rcnn_resnet50_coco.config as an example: put it in the training folder (create the folder if it doesn't exist), open it in a text editor (I use Notepad), and make the following changes:

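(1) Set num_classes to the number of categories (only fire here):

num_classes: 1

(2) Set the batch size:

batch_size: 1

(3) Comment out these two lines (without a fine-tune checkpoint, training starts from scratch instead of from pre-trained weights):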

# fine_tune_checkpoint: "PATH_TO_BE_CONFIGURED/model.ckpt"

# from_detection_checkpoint: true

(4) Change the input paths to your own .record files and label map:

# in train_input_reader:
input_path: "data/Fire_train.record"
label_map_path: "data/Fire.pbtxt"

# in eval_input_reader:
input_path: "data/Fire_test.record"
label_map_path: "data/Fire.pbtxt"

(2) In the data folder, create the file named by label_map_path in the previous step, data/Fire.pbtxt: copy an existing .pbtxt file, rename the copy to Fire.pbtxt, and change its contents to:

item {

id: 1

name: 'fire'

}

#item {

# id: 2

# name: 'blabla'

#}

With the configuration complete, you can train the model!
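The TO-DO comment in class_text_to_int suggests driving it from the label map instead of an if/elif chain. A minimal sketch, assuming a label map as simple as Fire.pbtxt (one id and one quoted name per item, '#' comment lines); it is a regex parser, not the protobuf parser the API itself uses, and the helper names are hypothetical. The check is stricter than required: the API reserves id 0 for the background, so ids start at 1, and here they are also expected to run consecutively up to num_classes from the config.

```python
import re

FIRE_PBTXT = """
item {
  id: 1
  name: 'fire'
}
#item {
#  id: 2
#  name: 'blabla'
#}
"""


def parse_label_map(text):
    """Return {name: id} from a simple .pbtxt label map; '#' lines are treated as comments."""
    body = '\n'.join(line for line in text.splitlines()
                     if not line.strip().startswith('#'))
    label_map = {}
    for block in re.findall(r'item\s*\{(.*?)\}', body, flags=re.S):
        item_id = int(re.search(r'\bid\s*:\s*(\d+)', block).group(1))
        name = re.search(r'\bname\s*:\s*[\'"]([^\'"]+)[\'"]', block).group(1)
        label_map[name] = item_id
    return label_map


def check_label_map(label_map, num_classes):
    """Expect ids exactly 1..num_classes (0 is the background class)."""
    ids = sorted(label_map.values())
    if ids != list(range(1, num_classes + 1)):
        raise ValueError('expected ids 1..%d, got %s' % (num_classes, ids))


def class_text_to_int_from(label_map):
    """Build a class_text_to_int replacement; unknown labels map to None, as before."""
    return label_map.get


label_map = parse_label_map(FIRE_PBTXT)
check_label_map(label_map, num_classes=1)
class_text_to_int = class_text_to_int_from(label_map)
print(label_map, class_text_to_int('fire'), class_text_to_int('smoke'))
# {'fire': 1} 1 None
```

With this approach, adding a second class means one more item in Fire.pbtxt and num_classes: 2 in the config, with no change to the conversion code.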

(3) Training

(1) Open a command prompt in the models\research\object_detection folder and run the following command (adjust the path arguments to your own setup; the relative paths below assume you run it from object_detection):

python ./legacy/train.py --logtostderr --train_dir=training/ --pipeline_config_path=training/faster_rcnn_resnet50_coco.config

Note: it doesn't matter if training is interrupted. Run the same command again and it continues from the last checkpoint.

(2) Use TensorBoard to visualize the training process (run this from the models\research\object_detection folder, then open the URL it prints in a browser):

tensorboard --logdir=training

(3) Save the model

export_inference_graph.py is in the models\research\object_detection folder. To run it, you also need to pass in the config file and a checkpoint. For convenience, create a folder named Fire_detection under models\research\object_detection to hold the exported model and the test data for this detection task, then open a command prompt in the object_detection folder and run:

python export_inference_graph.py --input_type image_tensor --pipeline_config_path training/faster_rcnn_resnet50_coco.config --trained_checkpoint_prefix training/model.ckpt-150 --output_directory Fire_detection

--trained_checkpoint_prefix training/model.ckpt-150: the number after model.ckpt- is the training step at which the checkpoint was saved. Look in the training folder for the checkpoints your own run produced and fill in that number; if there are several, choose the largest.

--output_directory Fire_detection: the folder where the exported model (including frozen_inference_graph.pb) is written.
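Picking the largest checkpoint number by eye is easy to get wrong, because comparing the numbers as text puts model.ckpt-99 after model.ckpt-1000. A small sketch that scans the training folder and compares the steps as integers; it assumes TensorFlow's usual model.ckpt-N.index / .meta / .data-... file naming, and the helper name is hypothetical. In TF 1.x, tf.train.latest_checkpoint('training') returns the newest checkpoint prefix recorded in the checkpoint file the trainer writes, which is the TensorFlow-native alternative.

```python
import os
import re
import tempfile


def latest_checkpoint_step(train_dir):
    """Largest N among files named model.ckpt-N.* in train_dir, or None if there are none."""
    steps = set()
    for name in os.listdir(train_dir):
        match = re.match(r'model\.ckpt-(\d+)\.', name)
        if match:
            steps.add(int(match.group(1)))  # compare as integers, not strings
    return max(steps) if steps else None


# Hypothetical training folder: as strings '99' > '150' > '1000', as integers 1000 wins.
train_dir = tempfile.mkdtemp()
for step in (99, 150, 1000):
    for suffix in ('index', 'meta', 'data-00000-of-00001'):
        open(os.path.join(train_dir, 'model.ckpt-%d.%s' % (step, suffix)), 'w').close()

step = latest_checkpoint_step(train_dir)
print('--trained_checkpoint_prefix training/model.ckpt-%d' % step)
# --trained_checkpoint_prefix training/model.ckpt-1000
```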

(4) Testing

This step is adapted from object_detection_tutorial.ipynb.

My test code is below, converted to a .py file with some unnecessary comments removed.

# coding: utf-8
import os
import sys
from distutils.version import StrictVersion

import numpy as np
import tensorflow as tf
from matplotlib import pyplot as plt
from PIL import Image

# This is needed since the script is stored in the object_detection folder.
sys.path.append("..")
from object_detection.utils import ops as utils_ops

# Compare versions numerically; a plain string comparison misorders e.g. '1.10.0' and '1.4.0'.
if StrictVersion(tf.__version__) < StrictVersion('1.4.0'):
    raise ImportError('Please upgrade your tensorflow installation to v1.4.* or later!')

from utils import label_map_util
from utils import visualization_utils as vis_util

# modify: the folder passed to export_inference_graph.py as --output_directory
MODEL_NAME = 'Fire_detection'
PATH_TO_FROZEN_GRAPH = MODEL_NAME + '/frozen_inference_graph.pb'
PATH_TO_LABELS = os.path.join('data', 'Fire.pbtxt')
# modify
NUM_CLASSES = 1

# Load the exported (frozen) detection graph into memory.
detection_graph = tf.Graph()
with detection_graph.as_default():
    od_graph_def = tf.GraphDef()
    with tf.gfile.GFile(PATH_TO_FROZEN_GRAPH, 'rb') as fid:
        serialized_graph = fid.read()
        od_graph_def.ParseFromString(serialized_graph)
        tf.import_graph_def(od_graph_def, name='')

# Map the class ids predicted by the model to the names in Fire.pbtxt.
label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
categories = label_map_util.convert_label_map_to_categories(
    label_map, max_num_classes=NUM_CLASSES, use_display_name=True)
category_index = label_map_util.create_category_index(categories)


def load_image_into_numpy_array(image):
    # Convert first so grayscale or RGBA pictures also reshape to 3 channels.
    image = image.convert('RGB')
    (im_width, im_height) = image.size
    return np.array(image.getdata()).reshape(
        (im_height, im_width, 3)).astype(np.uint8)


# modify
BASE_DIR = 'D:\\Python3.6.1\\TF_models\\models\\research\\object_detection\\Fire_detection'
PATH_TO_TEST_IMAGES_DIR = os.path.join(BASE_DIR, 'test_images')
PATH_TO_OUTPUT_DIR = os.path.join(BASE_DIR, 'output_images')
TEST_IMAGE_PATHS = [os.path.join(PATH_TO_TEST_IMAGES_DIR, name)
                    for name in sorted(os.listdir(PATH_TO_TEST_IMAGES_DIR))]
# Figure size in inches (not pixels).
IMAGE_SIZE = (12, 8)


def run_inference_for_single_image(image, graph):
    with graph.as_default():
        with tf.Session() as sess:
            # Get handles to input and output tensors
            ops = tf.get_default_graph().get_operations()
            all_tensor_names = {output.name for op in ops for output in op.outputs}
            tensor_dict = {}
            for key in ['num_detections', 'detection_boxes', 'detection_scores',
                        'detection_classes', 'detection_masks']:
                tensor_name = key + ':0'
                if tensor_name in all_tensor_names:
                    tensor_dict[key] = tf.get_default_graph().get_tensor_by_name(tensor_name)
            if 'detection_masks' in tensor_dict:
                # The following processing is only for single image
                detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])
                detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])
                # Reframe is required to translate mask from box coordinates to image coordinates and fit the image size.
                real_num_detection = tf.cast(tensor_dict['num_detections'][0], tf.int32)
                detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])
                detection_masks = tf.slice(detection_masks, [0, 0, 0], [real_num_detection, -1, -1])
                detection_masks_reframed = utils_ops.reframe_box_masks_to_image_masks(
                    detection_masks, detection_boxes, image.shape[0], image.shape[1])
                detection_masks_reframed = tf.cast(
                    tf.greater(detection_masks_reframed, 0.5), tf.uint8)
                # Follow the convention by adding back the batch dimension
                tensor_dict['detection_masks'] = tf.expand_dims(detection_masks_reframed, 0)
            image_tensor = tf.get_default_graph().get_tensor_by_name('image_tensor:0')

            # Run inference; the model expects a batch of shape [1, height, width, 3].
            output_dict = sess.run(tensor_dict,
                                   feed_dict={image_tensor: np.expand_dims(image, 0)})

            # all outputs are float32 numpy arrays, so convert types as appropriate
            output_dict['num_detections'] = int(output_dict['num_detections'][0])
            output_dict['detection_classes'] = output_dict[
                'detection_classes'][0].astype(np.uint8)
            output_dict['detection_boxes'] = output_dict['detection_boxes'][0]
            output_dict['detection_scores'] = output_dict['detection_scores'][0]
            if 'detection_masks' in output_dict:
                output_dict['detection_masks'] = output_dict['detection_masks'][0]
    return output_dict


if not os.path.isdir(PATH_TO_OUTPUT_DIR):
    os.makedirs(PATH_TO_OUTPUT_DIR)

for i, image_path in enumerate(TEST_IMAGE_PATHS, start=1):
    image = Image.open(image_path)
    # the array based representation of the image will be used later in order to prepare the
    # result image with boxes and labels on it.
    image_np = load_image_into_numpy_array(image)
    # Actual detection.
    output_dict = run_inference_for_single_image(image_np, detection_graph)
    # Visualization of the results of a detection.
    vis_util.visualize_boxes_and_labels_on_image_array(
        image_np,
        output_dict['detection_boxes'],
        output_dict['detection_classes'],
        output_dict['detection_scores'],
        category_index,
        instance_masks=output_dict.get('detection_masks'),
        use_normalized_coordinates=True,
        line_thickness=8)
    plt.figure(figsize=IMAGE_SIZE)
    plt.imshow(image_np)
    plt.axis('off')
    plt.savefig(os.path.join(PATH_TO_OUTPUT_DIR, '%d.jpg' % i))
    # Close each figure so memory does not grow with the number of test pictures.
    plt.close()
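To use the detections beyond drawing them (for example, to log where fire was found in each picture), the raw outputs can be filtered and converted to pixel coordinates. A minimal sketch, assuming detection_boxes holds normalized [ymin, xmin, ymax, xmax] rows as in the Object Detection API, and using a 0.5 score threshold, the same default that visualize_boxes_and_labels_on_image_array uses; the function name and sample values are hypothetical.

```python
def detections_to_pixels(boxes, scores, classes, image_width, image_height, min_score=0.5):
    """Keep detections with score >= min_score.

    Converts normalized [ymin, xmin, ymax, xmax] boxes into
    (class_id, score, xmin, ymin, xmax, ymax) tuples in pixels.
    """
    kept = []
    for (ymin, xmin, ymax, xmax), score, cls in zip(boxes, scores, classes):
        if score < min_score:
            continue
        kept.append((int(cls), float(score),
                     int(round(xmin * image_width)), int(round(ymin * image_height)),
                     int(round(xmax * image_width)), int(round(ymax * image_height))))
    return kept


# Hypothetical output for a 640x480 picture: one confident box and one weak one.
boxes = [[0.25, 0.15625, 0.75, 0.46875], [0.10, 0.10, 0.20, 0.20]]
scores = [0.9, 0.3]
classes = [1, 1]
print(detections_to_pixels(boxes, scores, classes, 640, 480))
# [(1, 0.9, 100, 120, 300, 360)]
```

Inside the test loop it would be called as detections_to_pixels(output_dict['detection_boxes'], output_dict['detection_scores'], output_dict['detection_classes'], image.size[0], image.size[1]), since PIL's image.size is (width, height).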

After 200 training iterations on 300 pictures, the output is already good. Faster R-CNN is slower than SSD, however.