Recently we worked through several introductory projects from deeplearning.ai and learned a great deal from them. These notes record what we learned, so that revisiting the old material can lead to new insights.

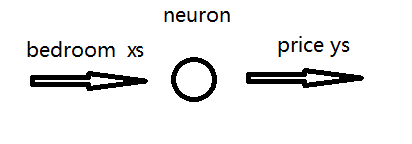

(1) Predicting house prices: linear regression

Given market prices of 100k for a one-bedroom house and 150k for a two-bedroom house, predict the price of a seven-bedroom house.
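Assuming the price is linear in the number of bedrooms n (the rule the model ends up fitting), the two given prices fix the slope w and intercept b, and the seven-bedroom price follows by hand:

$$
w = \frac{150\text{k} - 100\text{k}}{2 - 1} = 50\text{k}, \qquad b = 100\text{k} - w \cdot 1 = 50\text{k}
$$

$$
\text{price}(n) = 50\text{k} + 50\text{k}\cdot n \quad\Rightarrow\quad \text{price}(7) = 50\text{k} + 350\text{k} = 400\text{k}
$$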

The model is a single neuron, trained with the SGD optimizer.

Single neuron: equivalent to a linear model, because its activation g is the identity, g(z) = z (i.e. a linear activation function).
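Written out, a single neuron with input x (the number of bedrooms) computes the expression below; with the identity activation it is exactly a straight line. If prices are expressed in units of 100k, as the training code does, the line price = 50k + 50k·n corresponds to w = 0.5 and b = 0.5:

$$
\hat{y} = g(wx + b), \qquad g(z) = z \;\Rightarrow\; \hat{y} = wx + b
$$

$$
\hat{y}(7) = 0.5 \cdot 7 + 0.5 = 4.0 \quad (\times 100\text{k} = 400\text{k})
$$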


SGD: stochastic gradient descent.
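A minimal NumPy sketch of what the optimizer does for this single neuron: repeatedly compute the gradient of the mean squared error with respect to w and b, and step against it. Assumptions not taken from the course: the helper name `train_linear_neuron` is hypothetical, the weights start at zero (Keras starts from a random weight), and the loop runs 10,000 steps instead of 500 so that it converges tightly. The learning rate of 0.01 matches Keras's default for SGD. Every step uses all six samples (full-batch gradient descent); in the Keras version the same thing happens in practice, because the default batch size of 32 puts all six samples into one batch per epoch.

```python
import numpy as np

xs = np.array([1, 2, 3, 4, 5, 6], dtype=float)      # number of bedrooms
ys = np.array([1.0, 1.5, 2.0, 2.5, 3.0, 3.5])       # prices in units of 100k


def train_linear_neuron(xs, ys, lr=0.01, steps=10_000):
    """Hypothetical helper: fit y = w*x + b by full-batch gradient descent on the MSE."""
    w, b = 0.0, 0.0                      # assumed zero init (Keras uses a random weight)
    for _ in range(steps):
        error = w * xs + b - ys          # prediction minus target for every sample
        grad_w = 2.0 * np.mean(error * xs)
        grad_b = 2.0 * np.mean(error)
        w -= lr * grad_w                 # step against the gradient
        b -= lr * grad_b
    return w, b


w, b = train_linear_neuron(xs, ys)
print(f"w={w:.4f}, b={b:.4f}, prediction for 7 bedrooms: {w * 7 + b:.4f}")
# w=0.5000, b=0.5000, prediction for 7 bedrooms: 4.0000
```

The two gradient lines come from differentiating L = mean((w*x + b - y)^2): dL/dw = 2*mean(error*x) and dL/db = 2*mean(error).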

Code:

```python
import tensorflow as tf
import numpy as np
from tensorflow import keras

# Single neuron: one Dense layer with units=1 and the default (linear) activation
model = tf.keras.Sequential([keras.layers.Dense(units=1, input_shape=[1])])

# With Keras's default batch size of 32, all 6 samples form a single batch,
# so each epoch runs one gradient-descent step on the mean squared error
model.compile(optimizer='sgd', loss='mean_squared_error')

xs = np.array([1, 2, 3, 4, 5, 6], dtype=float)             # number of bedrooms
ys = np.array([1.0, 1.5, 2.0, 2.5, 3.0, 3.5], dtype=float)  # prices / 100k; scaling helps convergence

model.fit(xs, ys, epochs=500)          # train for 500 epochs
print(model.predict(np.array([7.0])))  # predicted price (in units of 100k) for 7 bedrooms
```

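Result: after 500 epochs the model outputs 3.998 (in units of 100k), i.e. about 399.8k for seven bedrooms, so it has essentially learned the rule price = 50k + 50k*n (which gives 400k for n = 7). The prediction is close to, but not exactly, 400k: with only six samples and 500 epochs of training, the loss is small but not zero.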

```
...
Epoch 497/500
6/6 [==============================] - 0s 509us/sample - loss: 1.3851e-06
Epoch 498/500
6/6 [==============================] - 0s 325us/sample - loss: 1.3749e-06
Epoch 499/500
6/6 [==============================] - 0s 305us/sample - loss: 1.3649e-06
Epoch 500/500
6/6 [==============================] - 0s 388us/sample - loss: 1.3549e-06
[[3.998321]]
```
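As a sanity check on that result, an exact least-squares line through the same six scaled samples can be computed with NumPy's `np.polyfit` (degree 1 returns slope, then intercept) and compared with the Keras prediction of 3.998321 printed in the log. This is a closed-form fit, not what the neural network does internally.

```python
import numpy as np

xs = np.array([1, 2, 3, 4, 5, 6], dtype=float)
ys = np.array([1.0, 1.5, 2.0, 2.5, 3.0, 3.5])      # prices in units of 100k

slope, intercept = np.polyfit(xs, ys, deg=1)         # exact least-squares line
exact = slope * 7 + intercept                        # closed-form prediction for 7 bedrooms
keras_prediction = 3.998321                          # value printed by model.predict in the log

print(f"exact fit: price = {intercept:.2f} + {slope:.2f} * n (units of 100k)")
print(f"7 bedrooms: exact {exact * 100:.1f}k vs. Keras {keras_prediction * 100:.1f}k")
# exact fit: price = 0.50 + 0.50 * n (units of 100k)
# 7 bedrooms: exact 400.0k vs. Keras 399.8k
```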

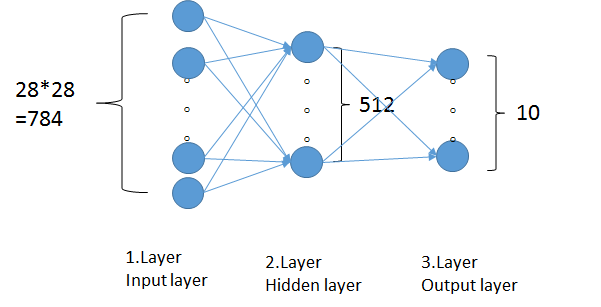

(2) Handwritten digit recognition: deep neural network (DNN)

The model is trained on the 60,000-image MNIST training set that ships with Keras (tf.keras.datasets.mnist).

Key point: a custom callback interrupts training as soon as the target accuracy is reached.

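The DNN flattens each 28x28 image into a 784-element vector, passes it through a hidden Dense layer of 512 ReLU units, and ends in a 10-unit softmax output layer, one unit per digit.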
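To get a feel for the size of this network, the trainable parameters can be counted by hand: a fully connected layer has one weight per input per unit plus one bias per unit, and Flatten only reshapes. The helper `dense_params` below is hypothetical, written for illustration; the totals should match what `model.summary()` reports for the model in the code.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Hypothetical helper: weights (inputs x units) plus one bias per unit."""
    return n_inputs * n_units + n_units


flattened = 28 * 28                    # Flatten has no parameters; it outputs 784 values
hidden = dense_params(flattened, 512)  # Dense(512, relu)
output = dense_params(512, 10)         # Dense(10, softmax)

print(f"hidden: {hidden:,}  output: {output:,}  total: {hidden + output:,}")
# hidden: 401,920  output: 5,130  total: 407,050
```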

Code:

```python
import tensorflow as tf


class myCallback(tf.keras.callbacks.Callback):  # callback object
    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        # TF 2.x logs the metric as 'accuracy'; older TF 1.x (as in the log below) used 'acc'
        acc = logs.get('accuracy', logs.get('acc', 0.0))
        if acc > 0.99:
            print("\nReached 99% accuracy so cancelling training!")
            self.model.stop_training = True


mnist = tf.keras.datasets.mnist  # import the MNIST dataset
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0  # scale pixel values from 0-255 to 0-1

callbacks = myCallback()

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),       # flatten each 28x28 image into 784 values
    tf.keras.layers.Dense(512, activation=tf.nn.relu),   # hidden layer
    tf.keras.layers.Dense(10, activation=tf.nn.softmax)  # one output per digit 0-9
])

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

model.fit(x_train, y_train, epochs=10, callbacks=[callbacks])
```

Result:

```
Epoch 1/10
60000/60000 [==============================] - 7s 119us/sample - loss: 0.2020 - acc: 0.9411
Epoch 2/10
60000/60000 [==============================] - 7s 116us/sample - loss: 0.0803 - acc: 0.9753
Epoch 3/10
60000/60000 [==============================] - 7s 124us/sample - loss: 0.0536 - acc: 0.9833
Epoch 4/10
60000/60000 [==============================] - 7s 122us/sample - loss: 0.0373 - acc: 0.9879
Epoch 5/10
59872/60000 [============================>.] - ETA: 0s - loss: 0.0264 - acc: 0.9919
Reached 99% accuracy so cancelling training!
60000/60000 [==============================] - 7s 125us/sample - loss: 0.0263 - acc: 0.9920
<tensorflow.python.keras.callbacks.History at 0x7f67010de8d0>
```
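The early-stopping mechanism can be illustrated without TensorFlow. The sketch below is a toy loop that imitates, in a simplified way, how fit() hands each epoch's metrics to on_epoch_end and then checks a stop flag; it is not Keras's actual implementation. The names `StopAtAccuracy` and `run_epochs` are hypothetical, and for simplicity the stop flag lives on the callback rather than on the model. The per-epoch accuracies are copied from the training log.

```python
class StopAtAccuracy:
    """Hypothetical stand-in for the Keras callback: raise a stop flag above a threshold."""

    def __init__(self, threshold=0.99):
        self.threshold = threshold
        self.stop_training = False       # plays the role of model.stop_training

    def on_epoch_end(self, epoch, logs):
        if logs.get("acc", 0.0) > self.threshold:
            self.stop_training = True


def run_epochs(epoch_accuracies, callback, max_epochs=10):
    """Toy training loop: report each epoch's accuracy, stop when the callback asks."""
    completed = 0
    for epoch, acc in enumerate(epoch_accuracies[:max_epochs]):
        completed = epoch + 1
        callback.on_epoch_end(epoch, {"acc": acc})
        if callback.stop_training:       # checked after every epoch
            break
    return completed


logged = [0.9411, 0.9753, 0.9833, 0.9879, 0.9920]  # 'acc' values from the log
print(run_epochs(logged, StopAtAccuracy(0.99)))     # 5
print(run_epochs(logged, StopAtAccuracy(0.98)))     # 3
```

With the 0.99 threshold the flag is raised at epoch 5 (accuracy 0.9920), matching the log; lowering the threshold to 0.98 would have stopped training after epoch 3.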