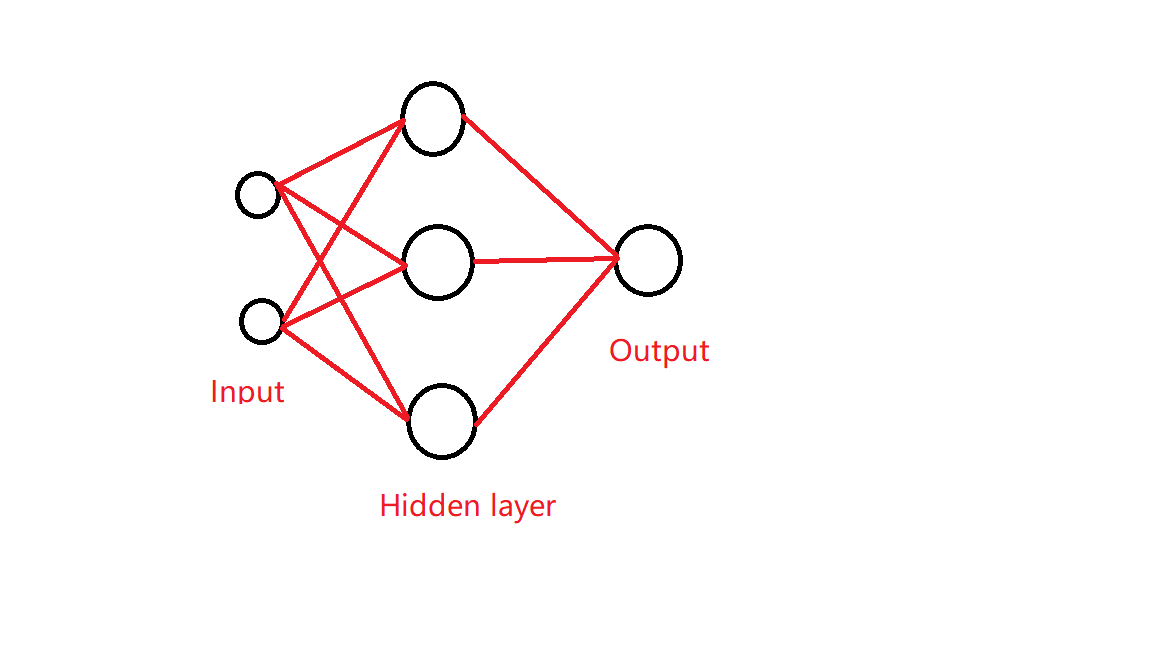

Consider a neural network with two inputs, one hidden layer containing three neurons, and one output.

The following is an example:

Before implementing forward propagation for this network, let's cover some background.

1. Initialization of the weights w and the input

We initialize the weights w randomly. The usual choice is to draw them from a normal distribution, which is also the most natural way to generate random starting values:

    import tensorflow as tf

    # This code uses the TensorFlow 1.x API.
    # The parameters of a neural network are usually stored as arrays of
    # weights w, which we typically initialize with random numbers.
    # [2, 3] is the shape of the tensor, stddev is the standard deviation,
    # mean is the mean, and seed makes the random draw reproducible.
    w = tf.Variable(tf.random_normal([2, 3], stddev=2, mean=0, seed=1))
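To make the shape, mean, and standard deviation parameters concrete, here is a minimal sketch of the same idea in NumPy. NumPy is an assumption used only for illustration: it is not what the article uses, and the values it draws will not match those of `tf.random_normal`, even with the same seed.

```python
import numpy as np

# Assumption: NumPy stands in for TensorFlow here; the drawn values
# differ from tf.random_normal even when the seed is the same.
rng = np.random.default_rng(seed=1)

# A 2x3 weight matrix drawn from a normal distribution
# with mean 0 (loc) and standard deviation 2 (scale).
w = rng.normal(loc=0.0, scale=2.0, size=(2, 3))
print(w.shape)  # (2, 3)

# Using the same seed again reproduces exactly the same matrix.
w_again = np.random.default_rng(seed=1).normal(loc=0.0, scale=2.0, size=(2, 3))
print(np.array_equal(w, w_again))  # True
```

Fixing the seed is what makes the outputs later in this article reproducible from run to run.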

With the weights generated, we turn to the input. Besides random generation, there are several ways to create a tensor with fixed values:

    tf.constant([1, 2, 3])        # a tensor holding the given values
    tf.zeros([2, 3], tf.int32)    # a 2x3 tensor of all zeros
    tf.ones([2, 3], tf.int32)     # a 2x3 tensor of all ones
    tf.fill([3, 2], 6)            # a 3x2 tensor filled with the value 6
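For readers who want to see what these constructors produce without starting a session, the following sketch shows rough NumPy counterparts. The mapping is an assumption made for illustration; the NumPy functions return arrays, not TensorFlow tensors.

```python
import numpy as np

c = np.array([1, 2, 3])                # like tf.constant([1, 2, 3])
z = np.zeros((2, 3), dtype=np.int32)   # like tf.zeros([2, 3], tf.int32)
o = np.ones((2, 3), dtype=np.int32)    # like tf.ones([2, 3], tf.int32)
f = np.full((3, 2), 6)                 # like tf.fill([3, 2], 6)

for name, arr in [("constant", c), ("zeros", z), ("ones", o), ("fill", f)]:
    print(name, arr.shape)
    print(arr)
```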

Next, we build a network that takes a single input sample and use TensorFlow to propagate it forward. There are two ways to feed in a single sample; let's look at the first one:

2. Forward propagation of neural networks (with only one initial value, method 1)

    import tensorflow as tf

    # Note the double brackets: x is a 1x2 matrix, not a plain vector.
    x = tf.constant([[0.7, 0.5]])
    w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
    w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))

    # Define the forward propagation.
    a = tf.matmul(x, w1)
    y = tf.matmul(a, w2)

    # Use a session to compute the result of the forward propagation.
    with tf.Session() as sess:
        init_op = tf.global_variables_initializer()
        sess.run(init_op)
        # Run y, because y is the final output we defined.
        print(sess.run(y))

Output:

[[3.0904665]]
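The two `tf.matmul` calls are ordinary matrix products, so the shapes go from (1, 2) to (1, 3) to (1, 1). The sketch below traces this in NumPy with hypothetical weights chosen so the arithmetic can be checked by hand; they are not the random weights that produced the output above.

```python
import numpy as np

# One sample with two features; the double brackets give shape (1, 2).
x = np.array([[0.7, 0.5]])

# Hypothetical weights, picked only to make the arithmetic easy to follow.
w1 = np.array([[1.0, 0.0, 2.0],
               [0.0, 1.0, 1.0]])        # shape (2, 3): input -> hidden
w2 = np.array([[1.0], [1.0], [1.0]])    # shape (3, 1): hidden -> output

a = x @ w1   # hidden layer, about [[0.7, 0.5, 1.9]]
y = a @ w2   # output, about [[3.1]]

print(x.shape, a.shape, y.shape)  # (1, 2) (1, 3) (1, 1)
print(a, y)
```

Note that no activation function is applied between the two products, so this network is purely linear.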

3. Forward propagation of neural networks (with only one initial value, method 2)

Here a placeholder stands in for the input, and the actual data is supplied through feed_dict when the graph is run. A placeholder can be fed one sample or many, which is why it is the more common approach. The code is as follows:

    import tensorflow as tf

    # x is a placeholder for one sample with two features.
    x = tf.placeholder(tf.float32, shape=(1, 2))
    w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
    w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))

    # As before, define the forward propagation.
    a = tf.matmul(x, w1)
    y = tf.matmul(a, w2)

    # Use a session to compute the result of the forward propagation.
    with tf.Session() as sess:
        init_op = tf.global_variables_initializer()
        sess.run(init_op)
        # Run y, feeding the actual input through feed_dict.
        print(sess.run(y, feed_dict={x: [[0.7, 0.5]]}))

Output:

[[3.0904665]]

The result is the same as with method 1. With a placeholder, we can now propagate several samples forward at once.
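One way to think about a placeholder is as a function argument: the computation is defined once and the data is passed in later. The sketch below expresses that idea in NumPy. The function name `forward` and the NumPy weights are hypothetical, and the numbers will not match the TensorFlow outputs in this article.

```python
import numpy as np

def forward(x, w1, w2):
    """Two matrix products with no activation, mirroring y = (x w1) w2."""
    x = np.asarray(x, dtype=np.float32)
    return x @ w1 @ w2

# Hypothetical weights; NumPy's generator differs from tf.random_normal.
rng = np.random.default_rng(1)
w1 = rng.normal(size=(2, 3)).astype(np.float32)
w2 = rng.normal(size=(3, 1)).astype(np.float32)

# The same definition accepts one sample or several, much like feed_dict.
one = forward([[0.7, 0.5]], w1, w2)
batch = forward([[0.7, 0.5], [0.2, 0.3], [0.5, 0.5]], w1, w2)

print(one.shape, batch.shape)  # (1, 1) (3, 1)
```

The first row of `batch` equals `one`, since each row is propagated independently of the others.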

4. Forward propagation of neural networks (multiple initial values)

The code is as follows:

    import tensorflow as tf

    # None leaves the number of samples open; each sample has two features.
    x = tf.placeholder(tf.float32, shape=(None, 2))
    w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
    w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))

    # As before, define the forward propagation.
    a = tf.matmul(x, w1)
    y = tf.matmul(a, w2)

    # Use a session to compute the result of the forward propagation.
    with tf.Session() as sess:
        init_op = tf.global_variables_initializer()
        sess.run(init_op)
        # Feed three samples at once; the output has one row per sample.
        print(sess.run(y, feed_dict={x: [[0.7, 0.5], [0.2, 0.3], [0.5, 0.5]]}))

Output:

    [[3.0904665]
     [1.2236414]
     [2.5171587]]
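Because there is no activation function, the whole network reduces to a single linear map, y = x (w1 w2), where the product w1 w2 is a 2x1 matrix. As a sanity check, the sketch below recovers that effective matrix from the first two printed outputs and uses it to predict the third. The variable names are hypothetical, and NumPy is used only for the arithmetic.

```python
import numpy as np

# The first two inputs and their printed outputs from the run above.
X = np.array([[0.7, 0.5],
              [0.2, 0.3]])
Y = np.array([3.0904665, 1.2236414])

# Solve X @ w_eff = Y for the effective 2x1 weight vector w1 @ w2.
w_eff = np.linalg.solve(X, Y)

# Predict the output for the third input, [0.5, 0.5].
pred = np.array([0.5, 0.5]) @ w_eff
print(pred)  # approximately 2.51716, matching the third printed output
```

The agreement with the third output confirms that each row is processed independently by the same linear map.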

Done! It looks very simple. TensorFlow is still widely used in industry, so readers who plan to start a business or work in the field would do well to understand it.