APM is the abbreviation of Application Performance Monitoring, which monitors and manages the performance and availability of software applications. Application performance management is very important for the continuous and stable operation of an application. So this article talks about how to accurately monitor and report data from the perspective of iOS App performance management

The performance of App is one of the important factors that affect user experience. Performance problems mainly include: Crash, network request error or timeout, slow UI response speed, main thread stuck, high CPU and memory utilization, large power consumption, etc. Most of the problems are caused by developers' misuse of thread locks, system functions, programming specifications, data structures, and so on. The key to solve the problem is to find and locate the problem as early as possible.

This article focuses on the reasons for APM and how to collect data. After APM data collection, combined with data reporting mechanism, upload data to the server according to a certain strategy. The server consumes this information and produces reports. Please combine Sisters It summarizes how to build a flexible, configurable and powerful data reporting component.

1, Caton monitoring

The Caton problem is the problem that the main thread cannot respond to user interaction. It affects the user's direct experience, so the carton monitoring for App is an important part of APM.

FPS (frame per second) the number of frame refreshes per second. For iPhone, 60 is the best. For some iPad models, 120 is also a reference parameter for Caton monitoring. Why is it a reference parameter? Because it's not accurate. Let's talk about how to get FPS first. Cadisplaylink is a system timer that refreshes the view at the same rate as the frame refresh rate. [CADisplayLink displayLinkW ithTarget:self selector :@selector (###:)]. As for why not let's look at the following example code

_displayLink = [CADisplayLink displayLinkWithTarget:self selector:@selector(p_displayLinkTick:)]; [_displayLink setPaused:YES]; [_displayLink addToRunLoop:[NSRunLoop currentRunLoop] forMode:NSRunLoopCommonModes];

As shown in the code, the CADisplayLink object is added to a Mode of the specified RunLoop. So it's still CPU level operation. The experience of carton is the result of the whole image rendering: CPU + GPU. Please keep looking down

1. Screen drawing principle

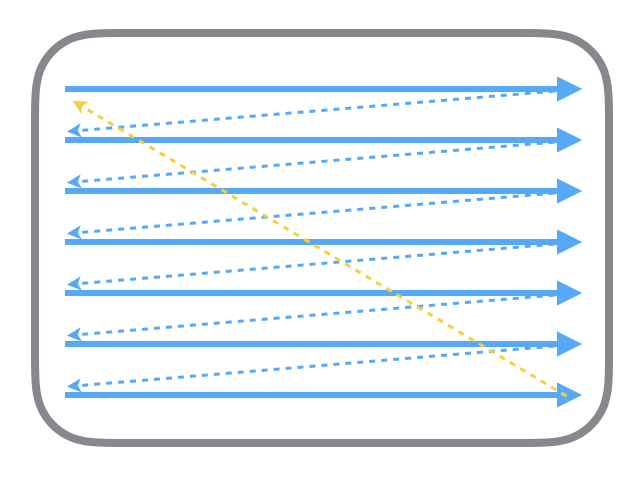

Talk about the principle of the old CRT display. The CRT electron gun scans from the top to the next line according to the above method. After the scanning is completed, the display will show a frame picture, and then the electron gun returns to the initial position to continue the next scanning. In order to synchronize the display process of the display with the video controller of the system, the display (or other hardware) will use the hardware clock to generate a series of timing signals. When the gun changes to a new line and is ready for scanning, the display will send out a horizontal synchronization signal (HSync for short); when a frame of picture is drawn, the gun will return to its original position, and before the next frame is ready to be drawn, the display will send out a Vertical synchronization signal (VSync for short). The display is usually refreshed at a fixed frequency, which is the frequency of VSync signal generation. Although the current display is basically LCD, but the principle remains the same.

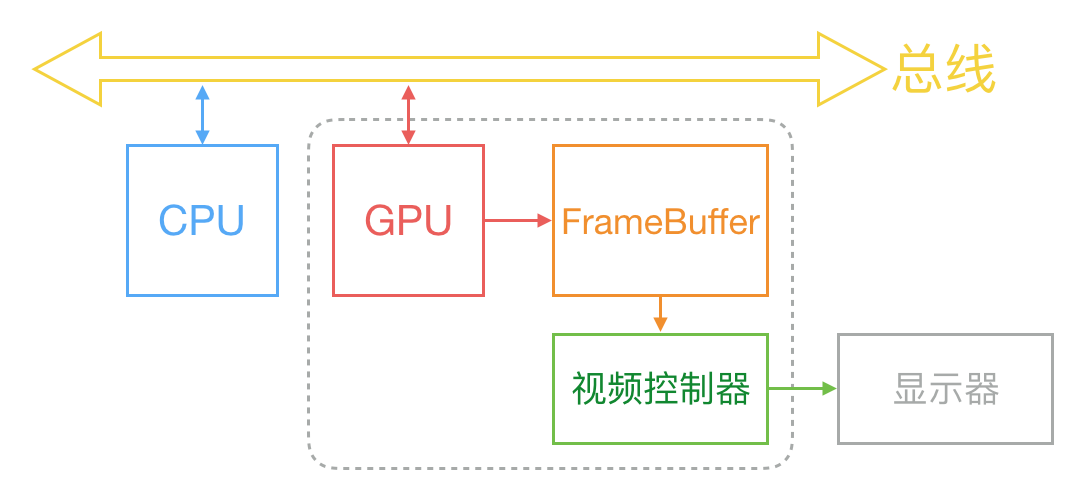

Generally, the display of a picture on the screen is coordinated by CPU, GPU and display according to the above figure. CPU calculates the real content (such as view creation, layout calculation, picture decoding, text drawing, etc.) according to the code written by the engineer, and then submits the calculation results to GPU, which is responsible for layer synthesis and texture rendering, and then GPU submits the rendering results to the frame buffer. Then the video controller will read the data of the frame buffer line by line according to the VSync signal, and pass it to the display through the digital to analog conversion.

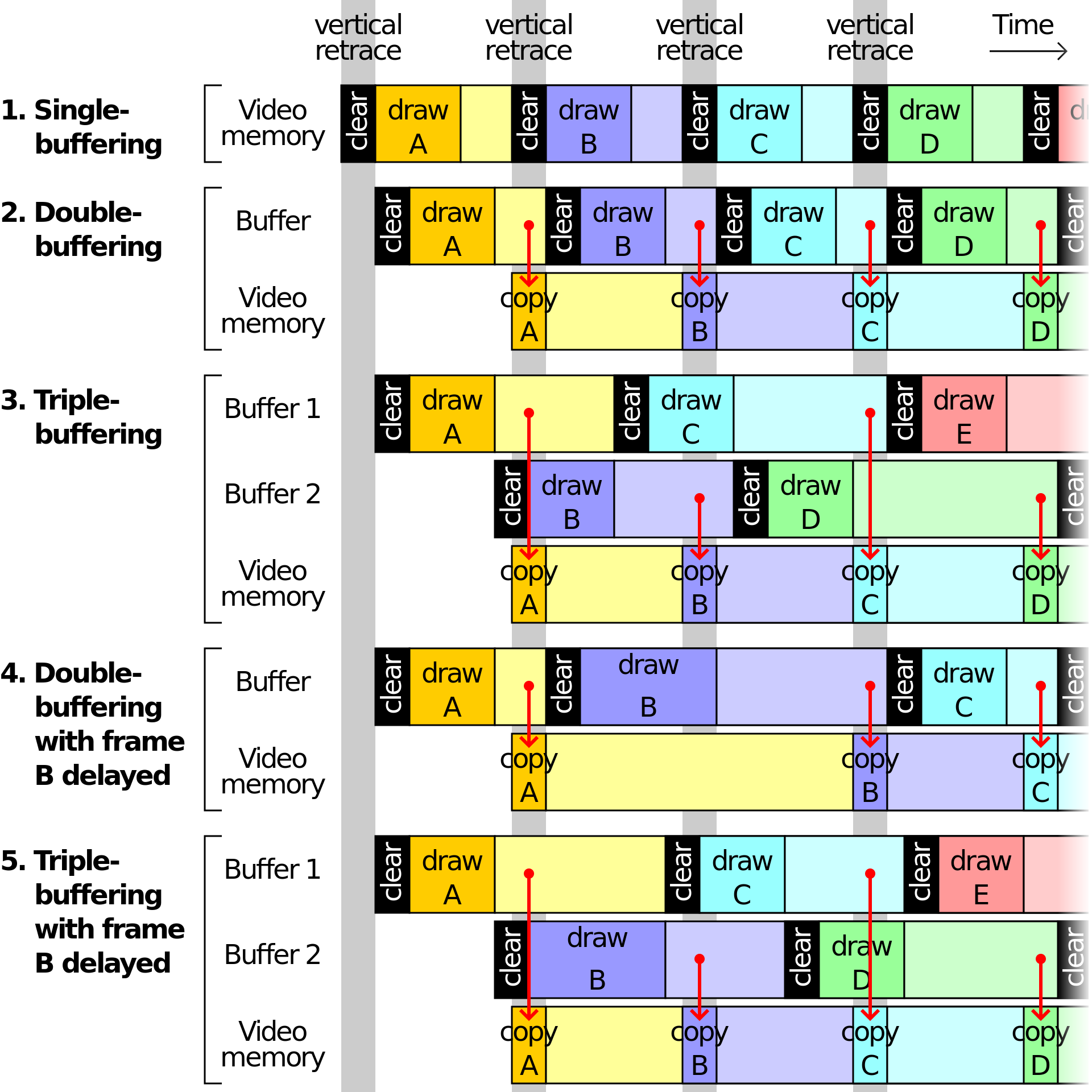

In the case of only one frame buffer, there are efficiency problems in reading and refreshing the frame buffer. In order to solve the efficiency problems, the display system will introduce two buffers, namely double buffer mechanism. In this case, GPU will pre render a frame and put it into the frame buffer for the video controller to read. After the next frame is rendered, GPU will directly point the pointer of the video controller to the second buffer. Improved efficiency.

At present, the double buffer improves the efficiency, but brings new problems: when the video controller has not finished reading, that is, the display part of the screen content, GPU Submit a new rendered frame to another frame buffer and point the pointer of the video controller to the new frame buffer. The video controller will display the lower half of the new frame data on the screen, causing the picture tearing.

To solve this problem, GPU usually has a mechanism called V-Sync. When the V-Sync signal is turned on, GPU will wait until the video controller sends the V-Sync signal before rendering a new frame and updating the frame buffer. These mechanisms solve the problem of tearing the picture and increase the smoothness of the picture. But more computing resources are needed

answering question

Some people may see that "when the vertical sync signal is turned on, the GPU will wait until the video controller sends the V-Sync signal before rendering a new frame and updating the frame buffer". Here they think that the GPU will only render a new frame and update the frame buffer after receiving the V-Sync signal. Does the double buffer lose its meaning?

Imagine a process in which a display shows the first image and the second image. First, in the case of double buffer, GPU first renders a frame image and stores it in the frame buffer, and then makes the pointer of video controller directly in the buffer to display the first frame image. After the content display of the first frame image is completed, the video controller sends V-Sync signal, and the GPU renders the second frame image after receiving the V-Sync signal and points the pointer of the video controller to the second frame buffer.

It seems that the second image is a V-Sync signal sent by the video controller after waiting for the first frame to be displayed. Is it? Is it true? 😭 Of course not. 🐷 Otherwise, double buffer has no meaning

Uncover the secrets. See the picture below

When the first V-Sync signal arrives, first render a frame image and put it into the frame buffer, but do not display it. When the second V-Sync signal is received, read the first rendered result (the video controller's pointer points to the first frame buffer), render a new frame image at the same time and store the result in the second frame buffer, etc. receive the third V-Sync After the signal, read the content of the second frame buffer (the pointer of the video controller points to the second frame buffer), and start the rendering of the third frame image and send it to the first frame buffer, and continue to cycle in turn.

Please check the information, ladder is required: Multiple buffering

2. Causes of carton

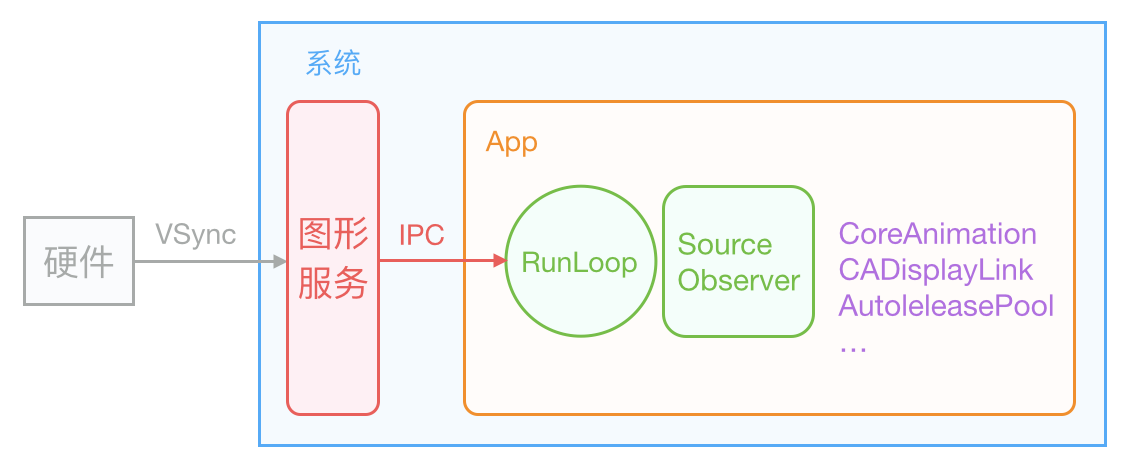

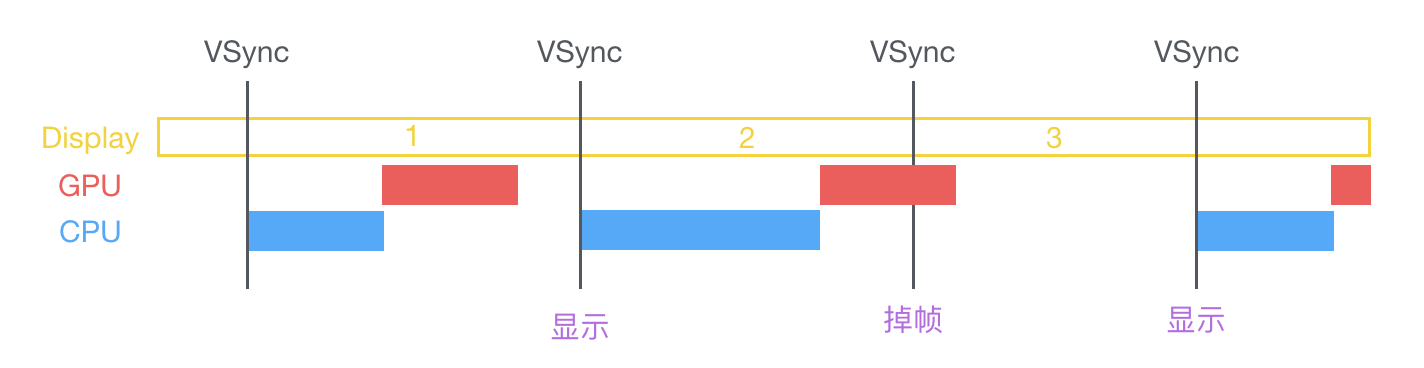

After the VSync signal arrives, the system graphics service will notify the App through CADisplayLink and other mechanisms, and the App main thread starts to calculate the display content (view creation, layout calculation, picture decoding, text drawing, etc.) in the CPU. Then the calculation content is submitted to GPU, which is transformed, synthesized and rendered by layers. Then GPU submits the rendering results to the frame buffer, waiting for the next VSync signal to come and display the rendered results. In the case of the vertical synchronization mechanism, if the CPU or GPU fails to submit the content within a VSync time cycle, the frame will be discarded, waiting for the next opportunity to display again. At this time, the screen is still the previously rendered image, so this is the reason why the CPU and GPU layer interface is stuck.

At present, there are two caching mechanisms and three buffering mechanisms in iOS devices. Android is now the mainstream of three buffering mechanism, and in the early stage, it was a single buffering mechanism.

An example of iOS three buffer mechanism

There are many reasons for CPU and GPU resource consumption, such as frequent creation of objects, attribute adjustment, file reading, view level adjustment, layout calculation (AutoLayout When there are more views, it is more difficult to solve linear equation, picture decoding (reading optimization of large picture), image drawing, text rendering, database reading (reading or writing optimistic lock, pessimistic lock scene), lock use (for example, improper use of spin lock will waste CPU), etc. Developers find the best solution based on their own experience (this is not the focus of this article).

3. How APM monitors and reports Caton

CADisplayLink is definitely not used. This FPS is only for reference. Generally speaking, there are two ways to monitor Caton: monitoring RunLoop status callback and sub thread ping main thread

3.1 RunLoop status monitoring mode

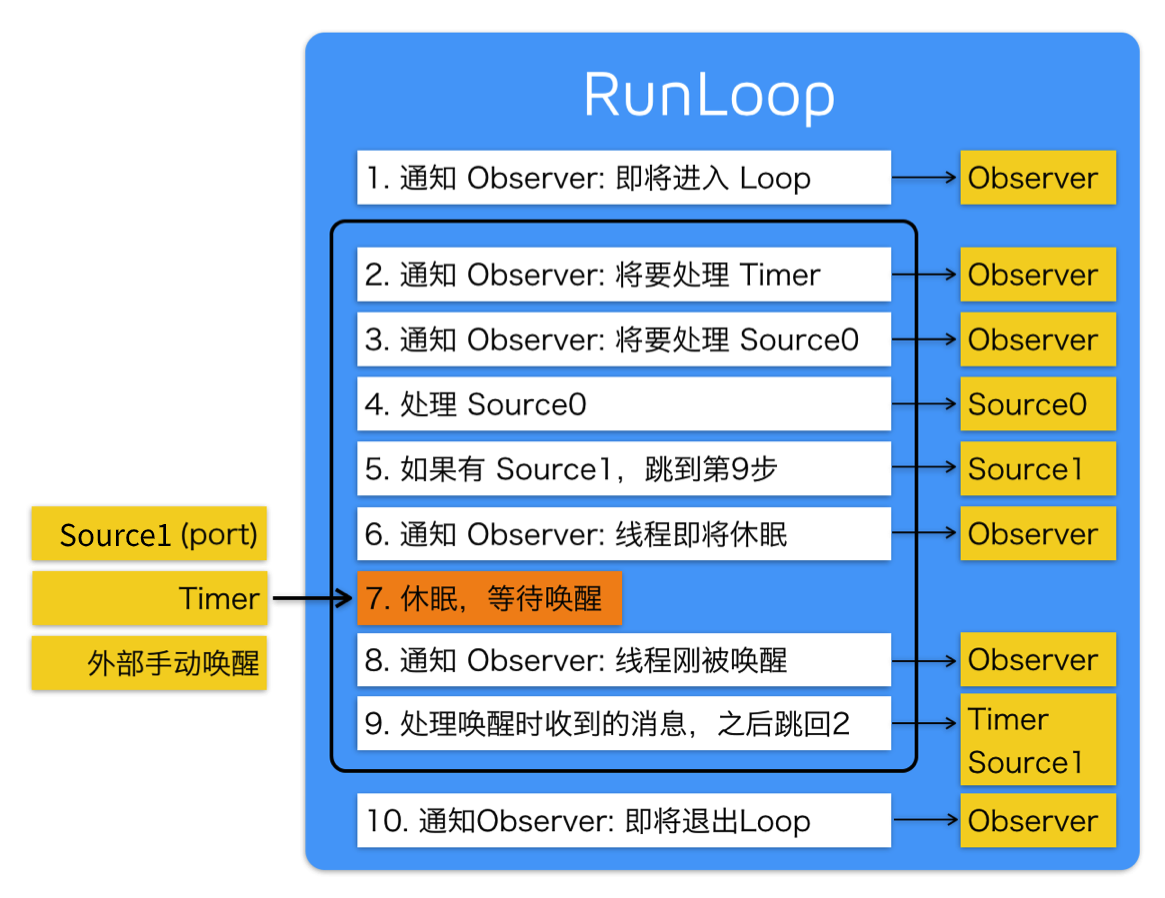

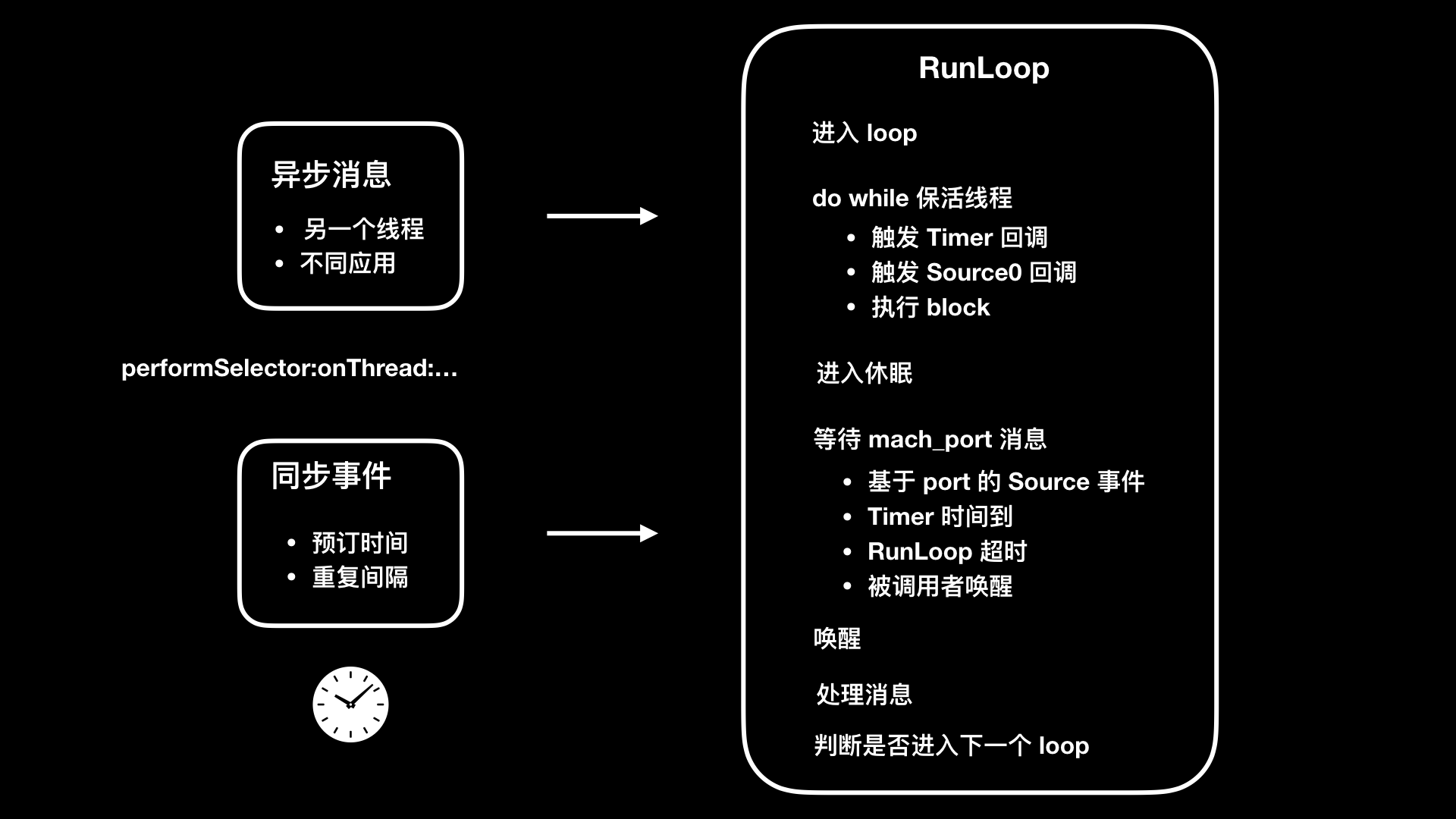

RunLoop is responsible for monitoring the input source for scheduling. For example, network, input device, periodic or delayed event, asynchronous callback, etc. RunLoop receives two types of input sources: one is an asynchronous message (source0 event) from another thread or from different applications, and the other is an event from a scheduled or repeated interval.

The RunLoop status is as follows

Step 1: inform the Observers that the RunLoop will start to enter the loop, and then enter the loop

if (currentMode->_observerMask & kCFRunLoopEntry ) // Notify observers that runloop is about to enter the loop __CFRunLoopDoObservers(rl, currentMode, kCFRunLoopEntry); // Enter loop result = __CFRunLoopRun(rl, currentMode, seconds, returnAfterSourceHandled, previousMode);

Step 2: start the do while loop to keep the thread alive, notify the Observers, run loop to trigger Timer callback and Source0 callback, and then execute the added block

if (rlm->_observerMask & kCFRunLoopBeforeTimers) // Notify Observers: RunLoop is about to trigger Timer callback __CFRunLoopDoObservers(rl, rlm, kCFRunLoopBeforeTimers); if (rlm->_observerMask & kCFRunLoopBeforeSources) // Notify Observers: RunLoop is about to trigger the Source callback __CFRunLoopDoObservers(rl, rlm, kCFRunLoopBeforeSources); // Execute the added block __CFRunLoopDoBlocks(rl, rlm);

Step 3: after the RunLoop triggers the Source0 callback, if Source1 is ready, it will jump to handle_msg to process the message.

// If Source1 (port based) is in the ready state, process this Source1 directly and jump to process the message if (MACH_PORT_NULL != dispatchPort && !didDispatchPortLastTime) { #if DEPLOYMENT_TARGET_MACOSX || DEPLOYMENT_TARGET_EMBEDDED || DEPLOYMENT_TARGET_EMBEDDED_MINI msg = (mach_msg_header_t *)msg_buffer; if (__CFRunLoopServiceMachPort(dispatchPort, &msg, sizeof(msg_buffer), &livePort, 0, &voucherState, NULL)) { goto handle_msg; } #elif DEPLOYMENT_TARGET_WINDOWS if (__CFRunLoopWaitForMultipleObjects(NULL, &dispatchPort, 0, 0, &livePort, NULL)) { goto handle_msg; } #endif }

Step 4: after the callback is triggered, notify the Observers that they are going to sleep

Boolean poll = sourceHandledThisLoop || (0ULL == timeout_context->termTSR); // Notify observers that the thread of runloop is about to enter sleep if (!poll && (rlm->_observerMask & kCFRunLoopBeforeWaiting)) __CFRunLoopDoObservers(rl, rlm, kCFRunLoopBeforeWaiting); __CFRunLoopSetSleeping(rl);

Step 5: after entering sleep, you will wait for mach_port message to wake up again. Only the following four situations can be awakened again.

- port based source events

- Timer time is up

- RunLoop timeout

- Awakened by callee

do { if (kCFUseCollectableAllocator) { // objc_clear_stack(0); // <rdar://problem/16393959> memset(msg_buffer, 0, sizeof(msg_buffer)); } msg = (mach_msg_header_t *)msg_buffer; __CFRunLoopServiceMachPort(waitSet, &msg, sizeof(msg_buffer), &livePort, poll ? 0 : TIMEOUT_INFINITY, &voucherState, &voucherCopy); if (modeQueuePort != MACH_PORT_NULL && livePort == modeQueuePort) { // Drain the internal queue. If one of the callout blocks sets the timerFired flag, break out and service the timer. while (_dispatch_runloop_root_queue_perform_4CF(rlm->_queue)); if (rlm->_timerFired) { // Leave livePort as the queue port, and service timers below rlm->_timerFired = false; break; } else { if (msg && msg != (mach_msg_header_t *)msg_buffer) free(msg); } } else { // Go ahead and leave the inner loop. break; } } while (1);

Step 6: notify the Observer when waking up. The thread of RunLoop has just been woken up

// Notify observers that the thread of runloop has just been woken up if (!poll && (rlm->_observerMask & kCFRunLoopAfterWaiting)) __CFRunLoopDoObservers(rl, rlm, kCFRunLoopAfterWaiting); // Processing messages handle_msg:; __CFRunLoopSetIgnoreWakeUps(rl);

Step 7: after RunLoop wakes up, process the message received during wakeup

- If Timer time is up, trigger Timer's callback

- If it is a dispatch, execute block

- If it is a source1 event, handle this event

#if USE_MK_TIMER_TOO // If a Timer reaches the time, trigger the Timer's callback else if (rlm->_timerPort != MACH_PORT_NULL && livePort == rlm->_timerPort) { CFRUNLOOP_WAKEUP_FOR_TIMER(); // On Windows, we have observed an issue where the timer port is set before the time which we requested it to be set. For example, we set the fire time to be TSR 167646765860, but it is actually observed firing at TSR 167646764145, which is 1715 ticks early. The result is that, when __CFRunLoopDoTimers checks to see if any of the run loop timers should be firing, it appears to be 'too early' for the next timer, and no timers are handled. // In this case, the timer port has been automatically reset (since it was returned from MsgWaitForMultipleObjectsEx), and if we do not re-arm it, then no timers will ever be serviced again unless something adjusts the timer list (e.g. adding or removing timers). The fix for the issue is to reset the timer here if CFRunLoopDoTimers did not handle a timer itself. 9308754 if (!__CFRunLoopDoTimers(rl, rlm, mach_absolute_time())) { // Re-arm the next timer __CFArmNextTimerInMode(rlm, rl); } } #endif // If there is a dispatch to main_ Block of queue, execute block else if (livePort == dispatchPort) { CFRUNLOOP_WAKEUP_FOR_DISPATCH(); __CFRunLoopModeUnlock(rlm); __CFRunLoopUnlock(rl); _CFSetTSD(__CFTSDKeyIsInGCDMainQ, (void *)6, NULL); #if DEPLOYMENT_TARGET_WINDOWS void *msg = 0; #endif __CFRUNLOOP_IS_SERVICING_THE_MAIN_DISPATCH_QUEUE__(msg); _CFSetTSD(__CFTSDKeyIsInGCDMainQ, (void *)0, NULL); __CFRunLoopLock(rl); __CFRunLoopModeLock(rlm); sourceHandledThisLoop = true; didDispatchPortLastTime = true; } // If a source 1 (port based) issues an event, handle the event else { CFRUNLOOP_WAKEUP_FOR_SOURCE(); // If we received a voucher from this mach_msg, then put a copy of the new voucher into TSD. CFMachPortBoost will look in the TSD for the voucher. By using the value in the TSD we tie the CFMachPortBoost to this received mach_msg explicitly without a chance for anything in between the two pieces of code to set the voucher again. voucher_t previousVoucher = _CFSetTSD(__CFTSDKeyMachMessageHasVoucher, (void *)voucherCopy, os_release); CFRunLoopSourceRef rls = __CFRunLoopModeFindSourceForMachPort(rl, rlm, livePort); if (rls) { #if DEPLOYMENT_TARGET_MACOSX || DEPLOYMENT_TARGET_EMBEDDED || DEPLOYMENT_TARGET_EMBEDDED_MINI mach_msg_header_t *reply = NULL; sourceHandledThisLoop = __CFRunLoopDoSource1(rl, rlm, rls, msg, msg->msgh_size, &reply) || sourceHandledThisLoop; if (NULL != reply) { (void)mach_msg(reply, MACH_SEND_MSG, reply->msgh_size, 0, MACH_PORT_NULL, 0, MACH_PORT_NULL); CFAllocatorDeallocate(kCFAllocatorSystemDefault, reply); } #elif DEPLOYMENT_TARGET_WINDOWS sourceHandledThisLoop = __CFRunLoopDoSource1(rl, rlm, rls) || sourceHandledThisLoop; #endif

Step 8: judge whether to enter the next loop according to the current RunLoop status. When it is forced to stop or the loop times out, it will not continue to the next loop, otherwise it will enter the next loop

if (sourceHandledThisLoop && stopAfterHandle) { // When entering the loop, the parameter says that after handling the event, it will return retVal = kCFRunLoopRunHandledSource; } else if (timeout_context->termTSR < mach_absolute_time()) { // Timeout exceeded for incoming parameter token retVal = kCFRunLoopRunTimedOut; } else if (__CFRunLoopIsStopped(rl)) { __CFRunLoopUnsetStopped(rl); // Forced to stop by an external caller retVal = kCFRunLoopRunStopped; } else if (rlm->_stopped) { rlm->_stopped = false; retVal = kCFRunLoopRunStopped; } else if (__CFRunLoopModeIsEmpty(rl, rlm, previousMode)) { // No source/timer retVal = kCFRunLoopRunFinished; }

For complete and annotated RunLoop code, see here . Source1 is used by RunLoop to handle system events from Mach port, and Source0 is used to handle user events. After receiving the system event of source1, it essentially calls the handler of Source0 event.

RunLoop 6 states

typedef CF_OPTIONS(CFOptionFlags, CFRunLoopActivity) { kCFRunLoopEntry , // Enter loop kCFRunLoopBeforeTimers , // Trigger Timer callback kCFRunLoopBeforeSources , // Trigger Source0 callback kCFRunLoopBeforeWaiting , // Wait for mach_port message kCFRunLoopAfterWaiting ), // Receive mach_port message kCFRunLoopExit , // Exit loop kCFRunLoopAllActivities // All status changes of loop }

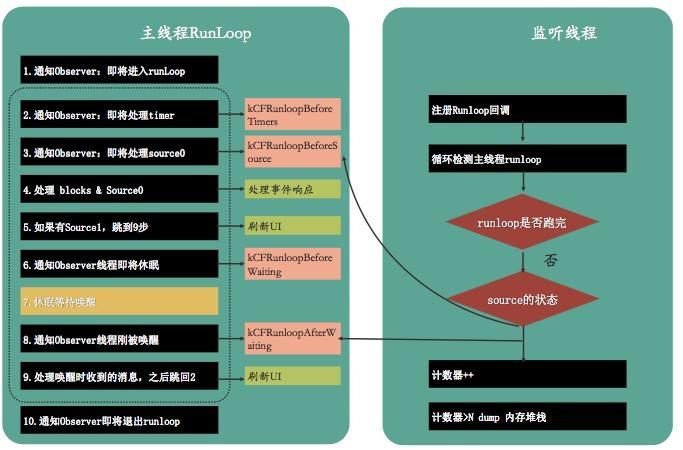

RunLoop will block the thread if the method before entering sleep takes too long to execute, or if the thread wakes up and receives messages for too long to enter the next step. If it's the main thread, it's stuck.

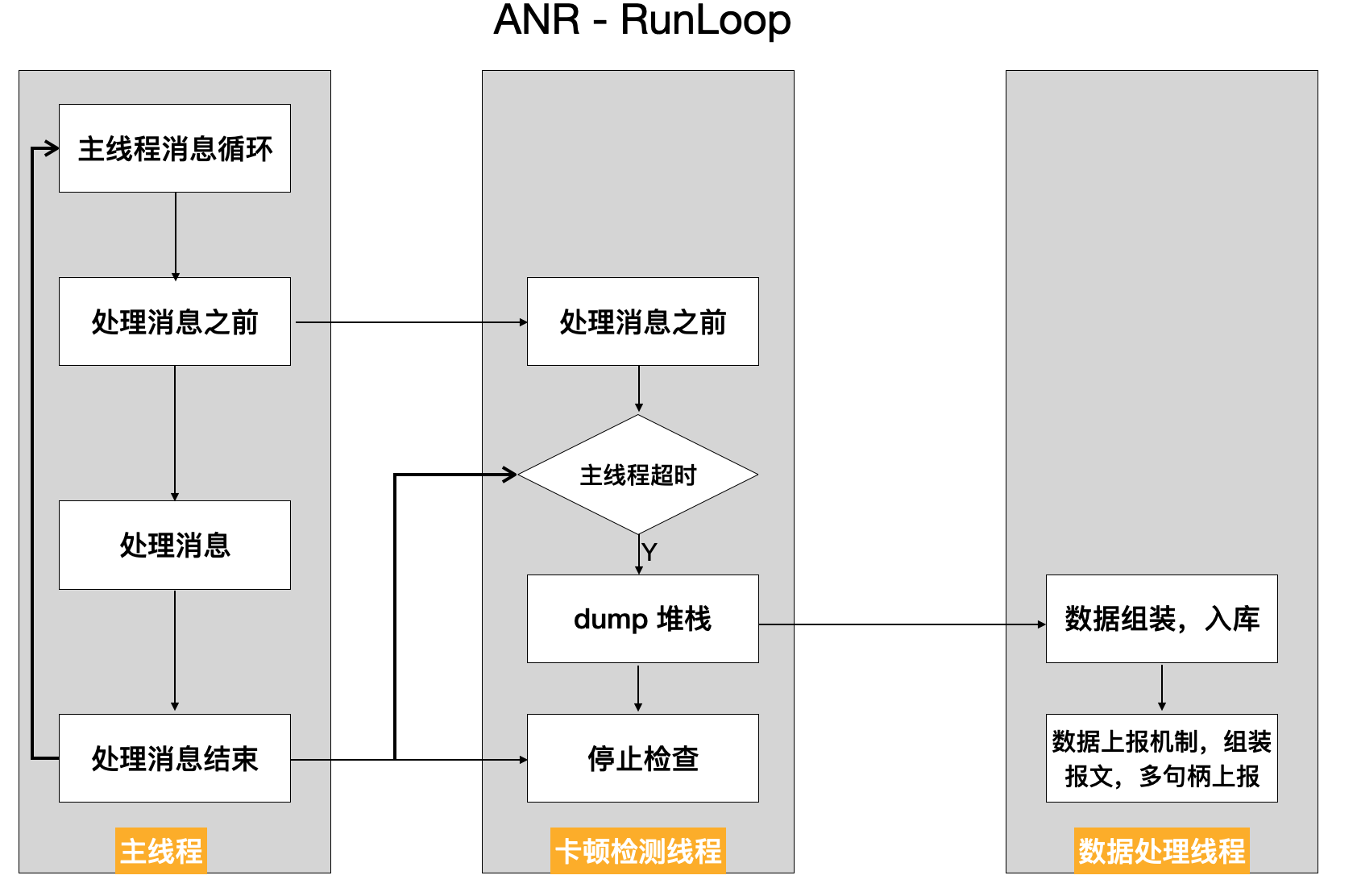

Once it is found that the state of KCFRunLoopBeforeSources before sleep or KCFRunLoopAfterWaiting after wake-up does not change within the set time threshold, it can be judged as stuck. At this time, dump the stack information, restore the crime scene, and then solve the problem of stuck.

Start a subthread to continuously loop to check whether it is stuck. It is considered to be stuck after n times of exceeding the threshold value. After being stuck, stack dump and report (with certain mechanism, data processing will be discussed in the next part).

WatchDog has different values in different states.

- Launch: 20s

- Resume: 10s

- Suspend: 10s

- Quit: 6s

- Background: 3min (10min can be applied before iOS7; changed to 3min later; can be applied continuously, up to 10min)

The Caton threshold is set based on the WatchDog mechanism. The threshold value in the APM system needs to be less than the WatchDog value, so the value range is between [1, 6]. The industry usually chooses 3 seconds.

Through long dispatch_ semaphore_ wait(dispatch_ semaphore_ t dsema, dispatch_ time_ Return zero on success, or non zero if the timeout occurred.

Many people may wonder why KCFRunLoopBeforeSources and KCFRunLoopAfterWaiting are selected when there are so many RunLoop states? Because most of them are between kcfrunloop before sources and kcfrunloop after waiting. For example, App internal events of type Source0

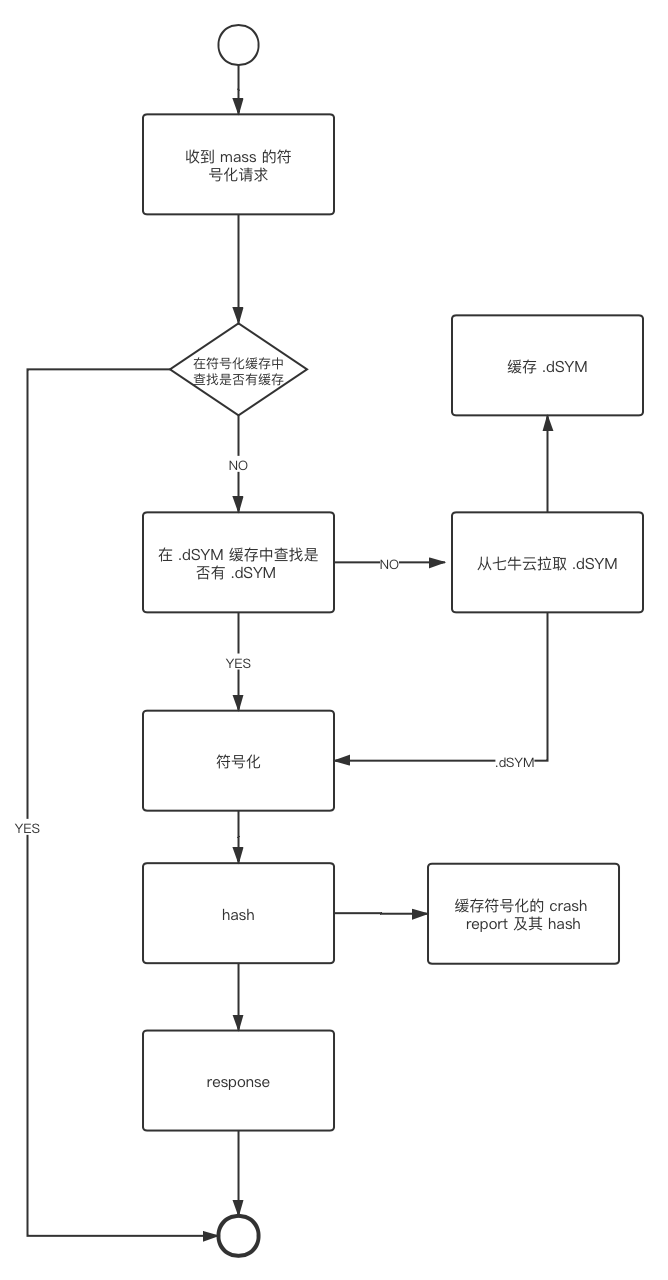

The flow chart of Runloop detection is as follows:

The key codes are as follows:

// Set the running environment of Runloop observer CFRunLoopObserverContext context = {0, (__bridge void *)self, NULL, NULL}; // Create a Runloop observer object _observer = CFRunLoopObserverCreate(kCFAllocatorDefault, kCFRunLoopAllActivities, YES, 0, &runLoopObserverCallBack, &context); // Add the new observer to the runloop of the current thread CFRunLoopAddObserver(CFRunLoopGetMain(), _observer, kCFRunLoopCommonModes); // Create signal _semaphore = dispatch_semaphore_create(0); __weak __typeof(self) weakSelf = self; // Monitoring time in child thread dispatch_async(dispatch_get_global_queue(0, 0), ^{ __strong __typeof(weakSelf) strongSelf = weakSelf; if (!strongSelf) { return; } while (YES) { if (strongSelf.isCancel) { return; } // N times of stuck over threshold T is recorded as one stuck long semaphoreWait = dispatch_semaphore_wait(self->_semaphore, dispatch_time(DISPATCH_TIME_NOW, strongSelf.limitMillisecond * NSEC_PER_MSEC)); if (semaphoreWait != 0) { if (self->_activity == kCFRunLoopBeforeSources || self->_activity == kCFRunLoopAfterWaiting) { if (++strongSelf.countTime < strongSelf.standstillCount){ continue; } // Stack information dump and data reporting mechanism are combined to upload data to the server according to certain strategies. Stack dump is explained below. Data reporting will create powerful, flexible and configurable data reporting components in( https://github.com/FantasticLBP/knowledge-kit/blob/master/Chapter1%20-%20iOS/1.80.md )Talk about } } strongSelf.countTime = 0; } });

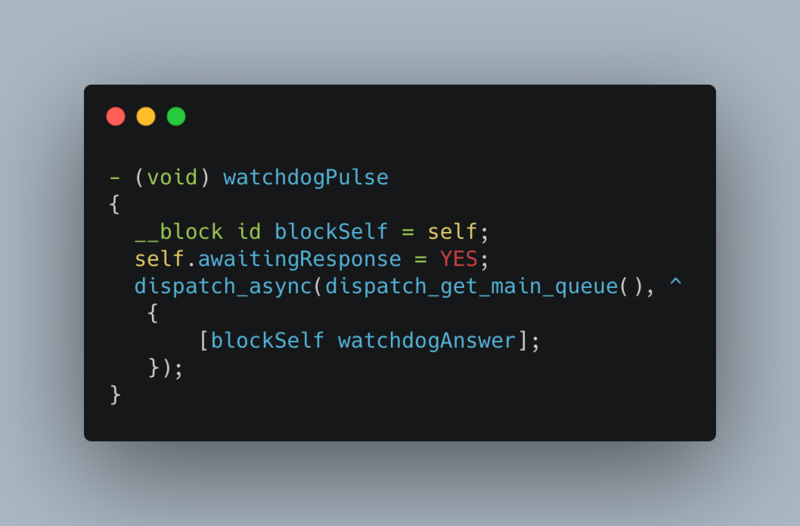

3.2 sub thread ping main thread listening mode

Start a sub thread and create a semaphore with an initial value of 0 and a boolean type flag bit with an initial value of YES. Send the task with flag set to NO to the main thread, and determine whether the flag is successful by the main thread (the value is NO) after the time is up. If it is not successful, it is considered that the pig thread is stuck. At this time, dump stack information and data reporting mechanism are combined to upload data to the server according to certain policies. Data reporting will be done in Build a powerful, flexible and configurable data reporting component speak

while (self.isCancelled == NO) { @autoreleasepool { __block BOOL isMainThreadNoRespond = YES; dispatch_semaphore_t semaphore = dispatch_semaphore_create(0); dispatch_async(dispatch_get_main_queue(), ^{ isMainThreadNoRespond = NO; dispatch_semaphore_signal(semaphore); }); [NSThread sleepForTimeInterval:self.threshold]; if (isMainThreadNoRespond) { if (self.handlerBlock) { self.handlerBlock(); // External dump stack inside the block (to be discussed below), data reporting } } dispatch_semaphore_wait(semaphore, DISPATCH_TIME_FOREVER); } }

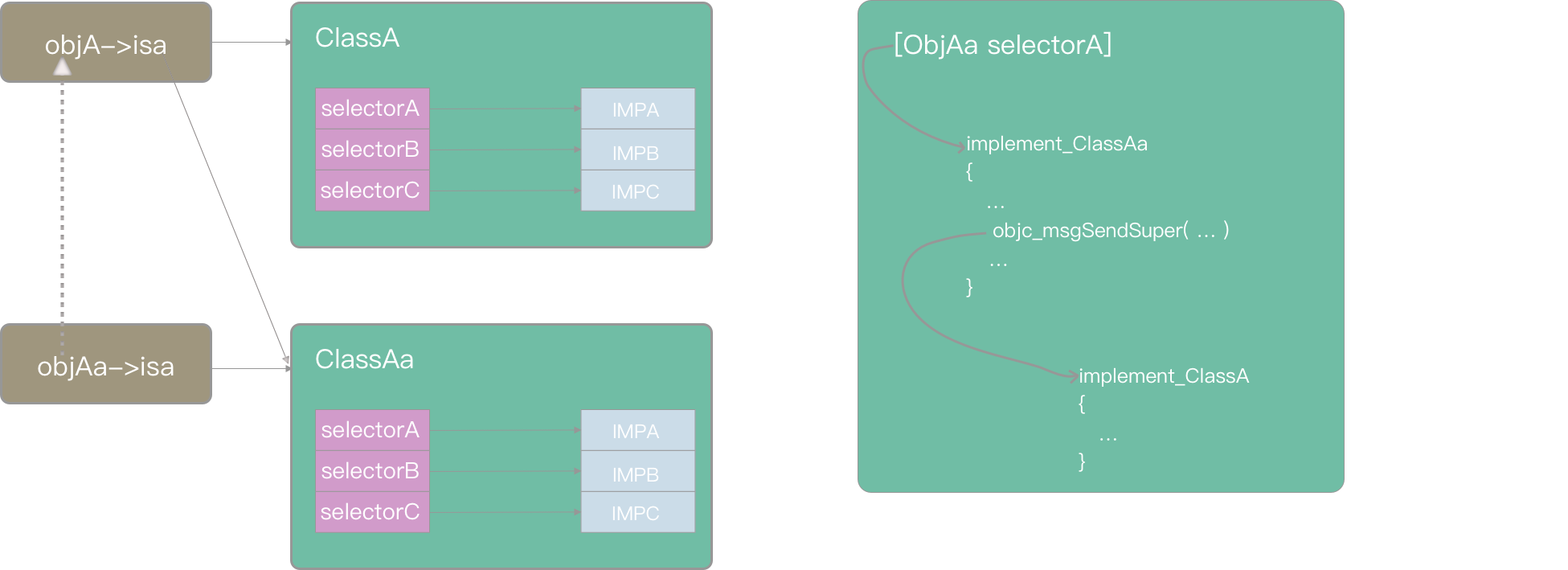

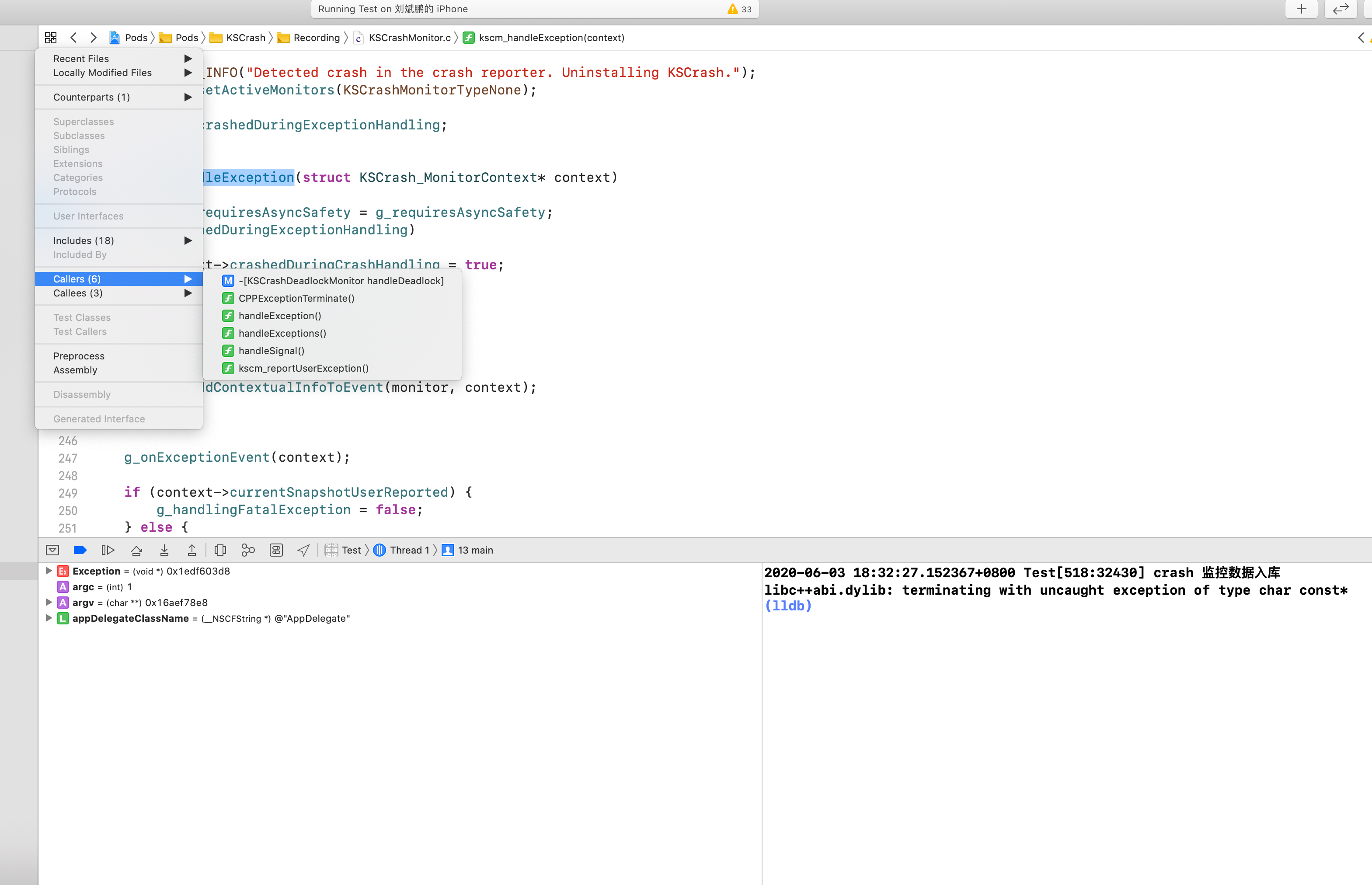

4. Stack dump

Getting the method stack is a hassle. Think about it. [NSThread callStackSymbols] can get the call stack of the current thread. But when monitoring the occurrence of Caton, there is no way to get the stack information of the main thread. The path from any thread back to the main thread doesn't work. Do a knowledge review first.

In computer science, call stack is a stack type data structure used to store thread information about computer programs. This kind of stack is also called execution stack, program stack, control stack, runtime stack, machine stack, etc. The subroutine used by the call stack to trace each activity should return a point of control after execution.

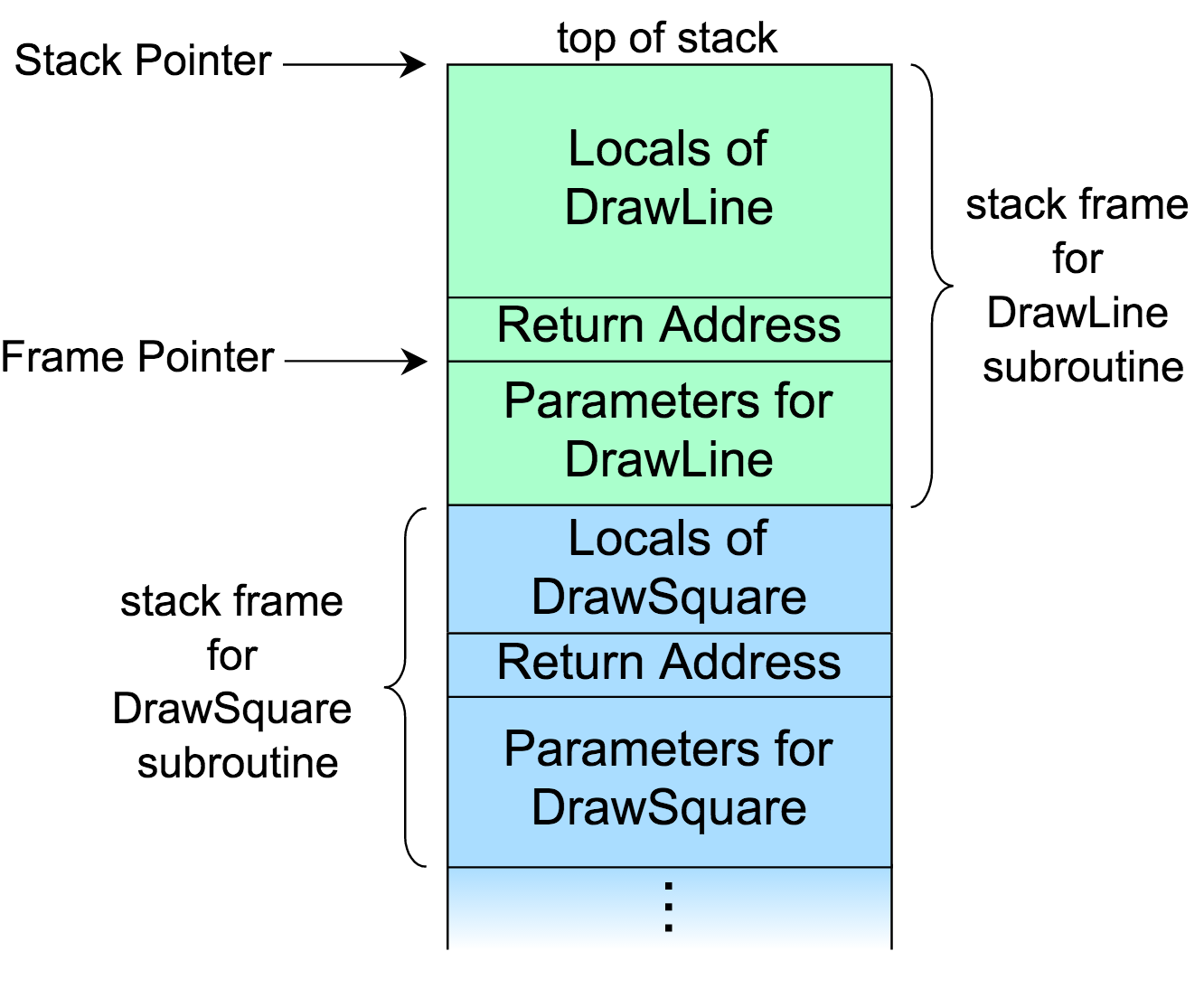

Wikipedia found a picture and an example of "Call Stack", as follows

The image above shows a stack. It is divided into several stack frames, each of which corresponds to a function call. The blue part below represents the DrawSquare function, which invokes the DrawLine function in the process of execution and uses the green part.

It can be seen that stack frame consists of three parts: function parameter, return address and local variable. For example, DrawLine function is called in DrawSquare: first, the parameters required by DrawLine function are pushed into the stack; second, the return address (control information). For example: function A calls function B, and the address of the next line of code that calls function B is the return address) is pushed into the stack; local variables in the third function are also stored in the stack.

Stack Pointer indicates the top of the current stack. Most operating systems are stack down, so Stack Pointer is the minimum value. In the address pointed to by Frame Pointer, the value of the last Stack Pointer is stored, that is, the return address.

In most operating systems, each stack frame also stores the Frame Pointer of the previous stack frame. Therefore, if you know the stack point and frame point of the current stack frame, you can continuously backtrack and recursively obtain the frame at the bottom of the stack.

The next step is to get the stack point and frame point of all threads. Then go back to the scene of the crime.

5. Mach Task knowledge

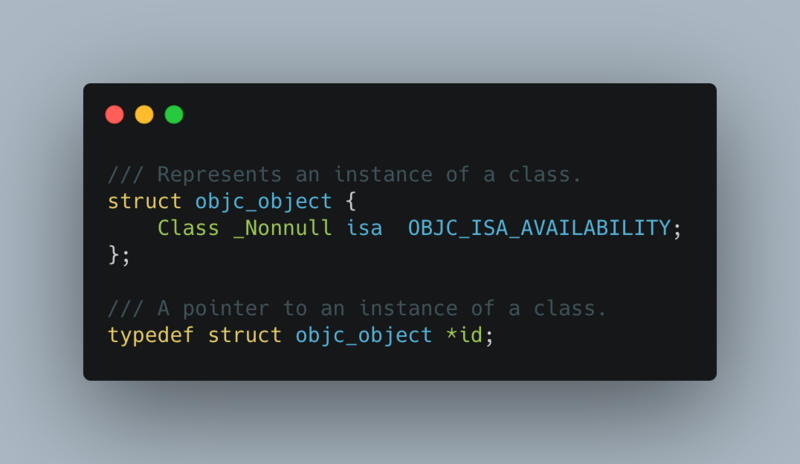

Mach task:

When an App is running, it corresponds to a Mach Task, and there may be multiple threads executing tasks at the same time under the Task. In OS X and iOS Kernel Programming, Mach Task is described as: Task is a container object, through which virtual memory space and other resources are managed, including devices and other handles. Simply summarized as: Mack task is a machine independent thread execution environment abstraction.

Function: task can be understood as a process, including its thread list.

Structure: task_threads, set target_ All threads under the task task are saved in Act_ In the list array, the number of arrays is act_listCnt

kern_return_t task_threads ( task_t traget_task, thread_act_array_t *act_list, //Thread pointer list mach_msg_type_number_t *act_listCnt //Number of threads )

thread_info:

kern_return_t thread_info ( thread_act_t target_act, thread_flavor_t flavor, thread_info_t thread_info_out, mach_msg_type_number_t *thread_info_outCnt );

How to get the stack data of a thread:

System method kern_return_t task_threads(task_inspect_t target_task, thread_act_array_t *act_list, mach_msg_type_number_t *act_listCnt); all threads can be obtained, but the thread information obtained by this method is the lowest Mach thread.

For each thread, Kern can be used_ return_ t thread_ get_ state(thread_ act_ t target_ act, thread_ state_ flavor_ t flavor, thread_ state_ t old_ state, mach_ msg_ type_ number_ t *old_ Statecnt); method gets all its information, which is filled in the_ STRUCT_ Among the parameters of type mcontext, there are two parameters in this method that vary with the CPU architecture. So we need to define the difference between different CPUs of macro mask.

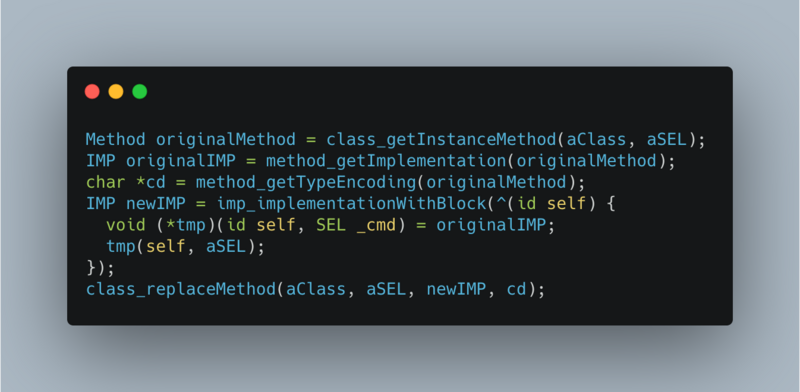

_STRUCT_MCONTEXTIn the structure, the current thread's Stack Pointer And the top stack frame Frame pointer,It then traces back the entire thread call stack.But the above method gets the kernel thread, and the information we need is NSThread,So you need to convert the kernel thread to NSThread.

pthread Of p yes POSIX Abbreviation for「Portable operating system interface」(Portable Operating System Interface). The original intention of the design is that each system has its own thread model, and different systems operate on threads API It's not the same. therefore POSIX The goal is to provide abstract pthread And related API. these ones here API There are different implementations in different operating systems, but the functions are the same.

Unix System provided

task_threadsandthread_get_stateThe kernel system is operated by each kernel thread thread_t Of type id Unique identification. pthread The only identification of is pthread_t Type. Where kernel threads and pthread Transformation of (i.e thread_t and pthread_t)It's easy because pthread The original intention of the design is「Abstract kernel thread」.

memorystatus_action_neededpthread_createMethod to create a thread nsthreadLauncher.static void *nsthreadLauncher(void* thread) { NSThread *t = (NSThread*)thread; [nc postNotificationName: NSThreadDidStartNotification object:t userInfo: nil]; [t _setName: [t name]]; [t main]; [NSThread exit]; return NULL; }

Nsthreaddidstartnotification is actually the string @ "_ NSThreadDidStartNotification".

<NSThread: 0x...>{number = 1, name = main}

In order to match the NSThread with the kernel thread, you can only match one by one through name. Pthread API pthread_getname_np can also get the kernel thread name. np stands for not POSIX, so it cannot be used across platforms.

The idea is as follows: store the original name of NSThread, change the name to a random number (time stamp), and then traverse the name of kernel thread pthread. When the name matches, NSThread corresponds to kernel thread. When found, the thread name will be restored to its original name. For the main thread, pthread cannot be used_ getname_ NP, so get the thread in the load method of the current code_ t. Then match the name.

static mach_port_t main_thread_id; + (void)load { main_thread_id = mach_thread_self(); }

2, App start time monitoring

1. Monitoring of APP startup time

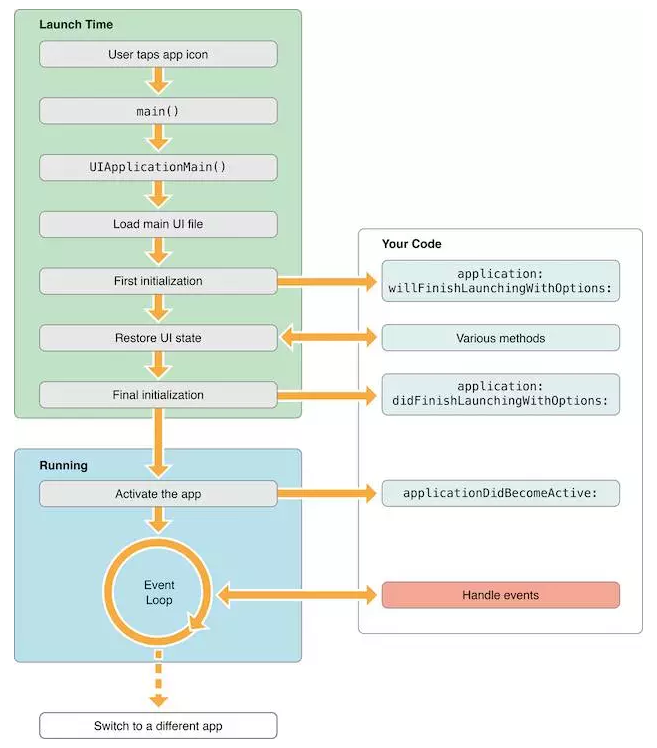

Application startup time is one of the important factors affecting user experience, so we need to quantify how fast an App starts. Start up is divided into cold start and hot start.

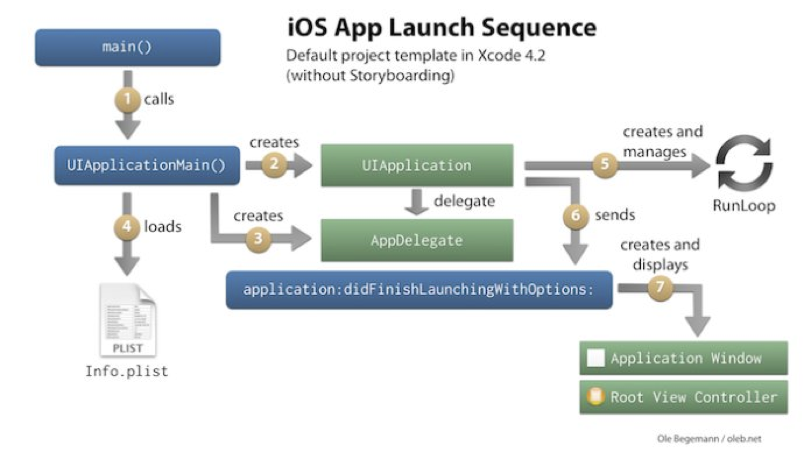

Cold start: App is not running, you must load and build the whole App. Finish initializing the App. There is a large optimization space for cold start. The cold start time is calculated from the application: didFinishLaunchingWithOptions: method. The App usually performs basic initialization of various SDK s and apps here.

Hot start: the application has been running in the background (common scenarios: for example, the user clicks the Home key and then opens the App when using the App). Because some events wake up the App to the foreground, the App will accept the events that the App enters the foreground in the applicationwillenterforegroup: method

The idea is relatively simple. as follows

- Get the current time value in the load method of the monitoring class

- Listen for the notice uiapplicationdidfinishlaunchinginotification after the App is started

- Get the current time after receiving the notice

- The time difference between steps 1 and 3 is the App startup time.

mach_absolute_time is a CPU / bus dependent function that returns the number of CPU clock cycles. It does not increase when the system sleeps. It's a nanosecond number. After 2 nanoseconds before and after acquisition, it needs to be converted to second. Need a system time-based benchmark, via mach_timebase_info.

mach_timebase_info_data_t g_cmmStartupMonitorTimebaseInfoData = 0; mach_timebase_info(&g_cmmStartupMonitorTimebaseInfoData); uint64_t timelapse = mach_absolute_time() - g_cmmLoadTime; double timeSpan = (timelapse * g_cmmStartupMonitorTimebaseInfoData.numer) / (g_cmmStartupMonitorTimebaseInfoData.denom * 1e9);

2. Online monitoring of start-up time is good, but the start-up time needs to be optimized in the development stage.

To optimize the start-up time, we need to know what has been done in the start-up phase, and make plans for the current situation.

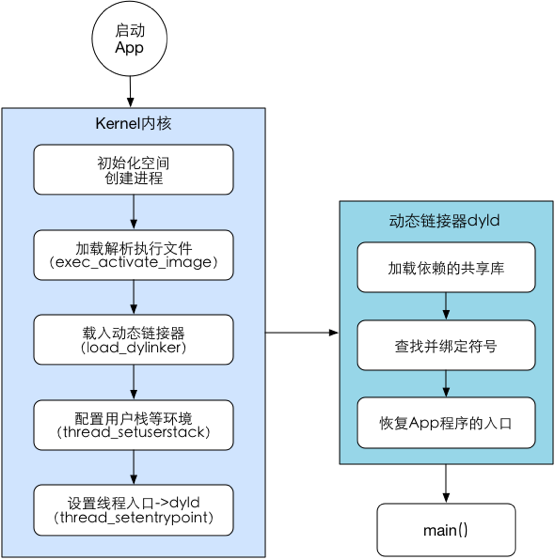

The pre main stage is defined as the stage from the start of App to the system call of main function; the main stage is defined as the viewdidappearance from the main function entry to the main UI framework.

App launch process:

- Analysis Info.plist : load relevant information, such as flash screen; sandbox establishment and permission check;

- Mach-O loading: if it is a fat binary file, look for the part suitable for the current CPU architecture; load all dependent Mach-O files (recursively call the method of Mach-O loading); define internal and external pointer references, such as strings, functions, etc.; load the methods in the classification; load c + + static objects, call the + load() function of Objc; execute the declaration as__ attribute_ c function of ((constructor));

- Program execution: call main(); call UIApplicationMain(); call applicationWillFinishLaunching();

Pre main stage

Main stage

2.1 loading Dylib

For the loading of each dynamic library, dyld needs to

- Dynamic library on which analysis depends

- Find the Mach-O file for the dynamic library

- Open file

- Validation file

- Register file signature in system core

- Call mmap() on each segment of the dynamic library

Optimization:

- Reduce dependence on non system libraries

- Use static rather than dynamic libraries

- Merge non system dynamic library into a dynamic library

2.2 Rebase && Binding

Optimization:

- Reduce the number of Objc classes and selector s, and delete unused classes and functions

- Reduce the number of c + + virtual functions

- Switch to Swift struct (essentially reducing the number of symbols)

2.3 Initializers

Optimization:

- Use + initialize instead of + load

- Do not use attribute*((constructor)) to mark the method display as an initializer, but let it execute when the method is called. For example, using dispatch_one,pthread_once() or std::once(). In other words, it is initialized only when it is used for the first time, which delays part of the work time and tries not to use static objects of c + +

2.4 pre main stage influencing factors

- The more dynamic libraries are loaded, the slower they start.

- The more ObjC classes and functions, the slower the startup.

- The larger the executable, the slower the startup.

- The more constructor functions in C, the slower the startup.

- The more static objects in C + +, the slower to start.

- The more + load ObjC has, the slower it starts.

Optimization means:

- Reduce dependence on unnecessary libraries, whether dynamic or static; transform dynamic libraries into static ones if possible; merge multiple non system dynamic libraries into one

- Check that the framework should be set to optional and required. If the framework exists in all iOS system versions supported by the current App, then it should be set to required. Otherwise, it should be set to optional, because there will be some additional checks

- Merge or delete some OC classes and functions. For cleaning up the classes not used in the project, use the AppCode code code check function to find the classes not used in the current project (it can also be analyzed according to the linkmap file, but the accuracy is not very high)

There is one called FUI The only problem is that it can't deal with the classes provided by dynamic and static libraries, and can't deal with the class templates of C + +

- Delete some useless static variables

- Prune methods that have not been called or have been discarded

- Delay what you don't have to do in the + load method to + initialize, and try not to use C + + virtual functions (creating virtual function tables is expensive)

- Class and method names should not be too long: each class and method name in iOS__ The corresponding string values are stored in the cstring segment, so the length of class and method names also affects the size of the executable

Because it is also the dynamic feature of Object-c, it needs to find the class / method through class / method name reflection to call, and the Object-c object model will save the class / method name string;

- Using dispatch_once() replaces all attribute((constructor)) functions, C + + static object initialization, and ObjC + load functions;

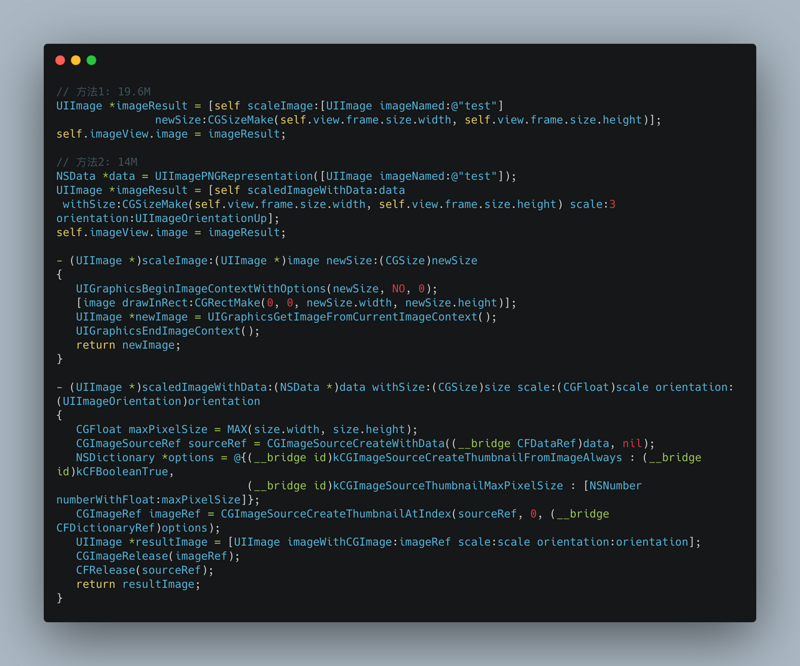

- Compressing the size of the image within the acceptable range of the designer will bring unexpected benefits.

Why can compressed pictures speed up startup? Because it's normal to load a dozen or so large and small images at startup,

If the image is small, the IO operation will be small, and the startup will be fast. The more reliable compression algorithm is TinyPNG.

2.5 main stage optimization

- Reduce the process of initiating initialization. If it can be lazy, it will be lazy. If it can be lazy, it will be lazy. If it can be delayed, it will be delayed. It is not necessary to start the main thread of the card. The business code that has been offline will be deleted directly

- Optimize code logic. Remove unnecessary logic and code to reduce the time consumed by each process

- In the start-up phase, multithreading is used to initialize to maximize CPU performance

- Use pure code instead of xib or storyboard to describe the UI, especially the main UI framework, such as TabBarController. Because xib and storyboard still need to be parsed into code to render the page, a step more.

3, CPU usage monitoring

1. CPU architecture

CPU (Central Processing Unit) central processor. The mainstream architectures in the market include ARM (arm64), Intel (x86), AMD, etc. Intel uses CISC (Complex Instruction Set Computer) and ARM uses RISC (Reduced Instruction Set Computer). The difference lies in different CPU design concepts and methods.

In the early days, all CPUs were CISC architecture, which was designed to complete the required computing tasks with the least machine language instructions. For example, for multiplication, on the CPU of CISC architecture. An instruction MUL ADDRA, ADDRB can multiply the memory ADDRA and the data in the memory ADDRB, and store the result in the ADDRA. What we do is: read the data in ADDRA and ADDRB into the register, and the operation of writing the result of multiplication into the memory depends on the CPU design, so CISC architecture will increase the complexity of CPU and the requirements of CPU technology.

RISC architecture requires software to specify each operation step. For example, the above multiplication instructions are implemented as MOVE A, ADDRA; MOVE B, ADDRB; MUL A, B; STR ADDRA, A;. This architecture can reduce the complexity of CPU and allow more powerful CPU to be produced at the same level of technology, but it has higher requirements for compiler design.

At present, most iPhone s in the market are based on arm64 architecture. And the energy consumption of arm architecture is low.

2. Get thread information

After that, how to monitor CPU utilization

- Turn on the timer and continue to execute the following logic according to the set cycle

- Get the current task. Get all thread information (number of threads, thread array) from the current task

- Traverse all thread information to determine whether the CPU utilization of any thread exceeds the set threshold

- dump stack if thread usage exceeds threshold

- Assembly data, reporting data

Thread information structure

struct thread_basic_info { time_value_t user_time; /* user run time(User run time) */ time_value_t system_time; /* system run time(System operation time) */ integer_t cpu_usage; /* scaled cpu usage percentage(CPU Usage, up to 1000) */ policy_t policy; /* scheduling policy in effect(Effective scheduling strategy) */ integer_t run_state; /* run state (Operation status, see below) */ integer_t flags; /* various flags (All kinds of marks) */ integer_t suspend_count; /* suspend count for thread(Thread hangs) */ integer_t sleep_time; /* number of seconds that thread * has been sleeping(Sleep time) */ };

When talking about stack restore, I've talked about the above analysis

thread_act_array_t threads; mach_msg_type_number_t threadCount = 0; const task_t thisTask = mach_task_self(); kern_return_t kr = task_threads(thisTask, &threads, &threadCount); if (kr != KERN_SUCCESS) { return ; } for (int i = 0; i < threadCount; i++) { thread_info_data_t threadInfo; thread_basic_info_t threadBaseInfo; mach_msg_type_number_t threadInfoCount; kern_return_t kr = thread_info((thread_inspect_t)threads[i], THREAD_BASIC_INFO, (thread_info_t)threadInfo, &threadInfoCount); if (kr == KERN_SUCCESS) { threadBaseInfo = (thread_basic_info_t)threadInfo; // todo: conditional judgment, can't understand if (!(threadBaseInfo->flags & TH_FLAGS_IDLE)) { integer_t cpuUsage = threadBaseInfo->cpu_usage / 10; if (cpuUsage > CPUMONITORRATE) { NSMutableDictionary *CPUMetaDictionary = [NSMutableDictionary dictionary]; NSData *CPUPayloadData = [NSData data]; NSString *backtraceOfAllThread = [BacktraceLogger backtraceOfAllThread]; // 1. Meta information of assembly carton CPUMetaDictionary[@"MONITOR_TYPE"] = CMMonitorCPUType; // 2. Assemble the Payload information of carton (a JSON object whose Key is the agreed STACK_TRACE, stack information after value is base64) NSData *CPUData = [SAFE_STRING(backtraceOfAllThread) dataUsingEncoding:NSUTF8StringEncoding]; NSString *CPUDataBase64String = [CPUData base64EncodedStringWithOptions:0]; NSDictionary *CPUPayloadDictionary = @{@"STACK_TRACE": SAFE_STRING(CPUDataBase64String)}; NSError *error; // The NSJSONWritingOptions parameter must be passed to 0, because the server needs to process logic according to \ n, and the json string generated by passing 0 does not contain \ n NSData *parsedData = [NSJSONSerialization dataWithJSONObject:CPUPayloadDictionary options:0 error:&error]; if (error) { CMMLog(@"%@", error); return; } CPUPayloadData = [parsedData copy]; // 3. Data reporting will create powerful, flexible and configurable data reporting components in( https://github.com/FantasticLBP/knowledge-kit/blob/master/Chapter1%20-%20iOS/1.80.md )Talk about [[PrismClient sharedInstance] sendWithType:CMMonitorCPUType meta:CPUMetaDictionary payload:CPUPayloadData]; } } } }

4, OOM problem

1. Basic knowledge preparation

Hard disk: also called disk, used to store data. The songs, pictures and videos you store are all on your hard disk.

Memory: due to the slow reading speed of the hard disk, if all the data are read directly from the hard disk during the CPU running the program, the efficiency will be greatly affected. So the CPU will read the data needed by the program from the hard disk to the memory. Then CPU and data in memory are calculated and exchanged. Memory is volatile memory (data disappears after power failure). The memory module area is some memory inside the computer (on the main board), which is used to store the intermediate data and results of CPU operation. Memory is the bridge between program and CPU. Read data from hard disk or run program to provide CPU.

Virtual memory is a technology of memory management in computer system. It makes the program think that it has continuous available memory, but in fact, it is usually divided into multiple physical memory fragments, which may be temporarily stored on the external disk (hard disk) memory (when needed, the data in the hard disk is exchanged into memory). It is called "virtual memory" in Windows system and "swap space" in Linux/Unix system.

Does iOS support swap space? Not only does iOS not support switching space, but most mobile systems do not. Because a large amount of memory of mobile devices is flash memory, its reading and writing speed is far smaller than the hard disk used by computers, that is to say, even if the mobile phone uses switching space technology, because of the problem of slow flash memory, it can not improve performance, so there is no switching space technology.

2. iOS memory knowledge

Memory (RAM), like CPU, is the most scarce resource in the system, and it is also easy to compete. Application memory is directly related to performance. iOS has no swap space as an alternative resource, so memory resources are particularly important.

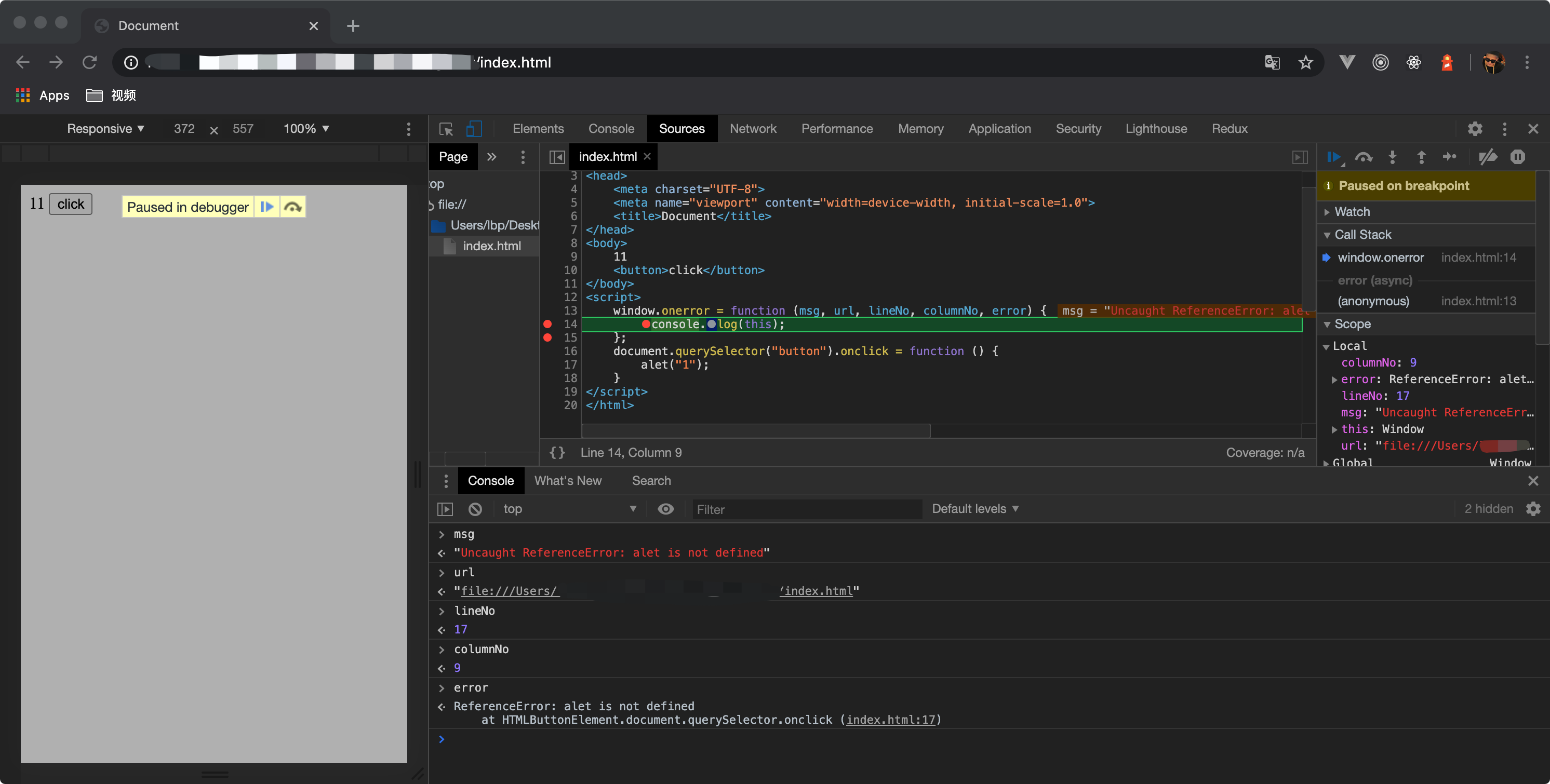

What is oom? Is the abbreviation of out of memory, which literally means that the memory limit is exceeded. It is divided into FOOM (background OOM) and BOOM (background OOM). It is a kind of non mainstream Crash caused by the Jetsam mechanism of iOS. It cannot be captured by the monitoring scheme of Signal.

What is the jetsam mechanism? Jetsam mechanism can be understood as a management mechanism adopted by the system to control the excessive use of memory resources. Jetsam mechanism runs in an independent process, each process has a memory threshold, once the memory threshold is exceeded, jetsam will kill the process immediately.

Why design Jetsam mechanism? Because the memory of the device is limited, memory resources are very important. The system process and other used apps will seize this resource. Because iOS doesn't support switching space, Jetsam will release as much memory as possible once a low memory event is triggered, so that when there is insufficient memory on iOS system, the App will be killed by the system and turned into crash.

Two situations trigger OOM: the system will kill the App with lower priority based on priority policy because the overall memory usage is too high; the current App reaches "high water mark", and the system will also kill the current App (exceeding the memory limit of the system for the current single App).

Read the source code (xnu/bsd/kern/kern_memorystatus.c) will find that there are two mechanisms for memory killing, as follows

highwater processing - > our App can't use more memory than a single limit

- Loop through the priority list to find threads

- Judge whether it meets p_ memstat_ The limitation of memlimit

- Diamonoseactive, FREEZE filtration

- Kill the process, if successful, exit, otherwise loop

memorystatus_act_aggressive processing - > high memory consumption, kill according to priority

- According to the policy home in jld_bucket_count, used to determine whether to be killed

- From JETSAM_PRIORITY_ELEVATED_INACTIVE starts to kill

- Old_bucket_count and memorystatus_jld_eval_period_msecs to determine whether to start killing

- Kill according to priority from low to high until memorystatus_avail_pages_below_pressure

Some cases of excessive memory

- App memory consumption is low, and other app memory management is also great. Even if we switch to other apps, our own app is still "alive" and retains the user status. Good experience

- App memory consumption is low, but other app memory consumption is too large (it may be memory management is poor, or it may itself consume resources, such as games). Then, in addition to the threads in the foreground, other apps will be killed by the system, and memory resources will be recycled to provide memory for active processes.

- App memory consumption is large. After switching to other apps, even if the memory applied by other apps to the system is small, the system will give priority to killing apps with large memory consumption because of memory resource shortage. It means that the user exits the app to the background and later opens it again to find that the app is reloaded and started.

- App memory consumption is very large, which is killed by the system when running in the foreground, causing flash back.

When the App runs out of memory, the system will make more space for use according to a certain strategy. A common method is to move some data with low priority to disk, which is called page out. When the data is accessed again later, the system will be responsible for moving it back to memory. This operation is called page in.

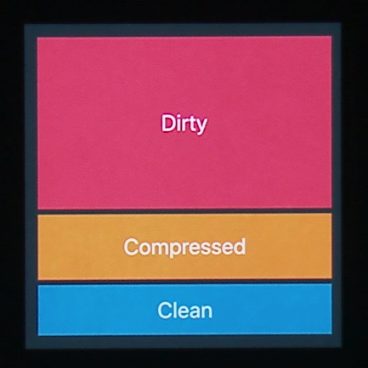

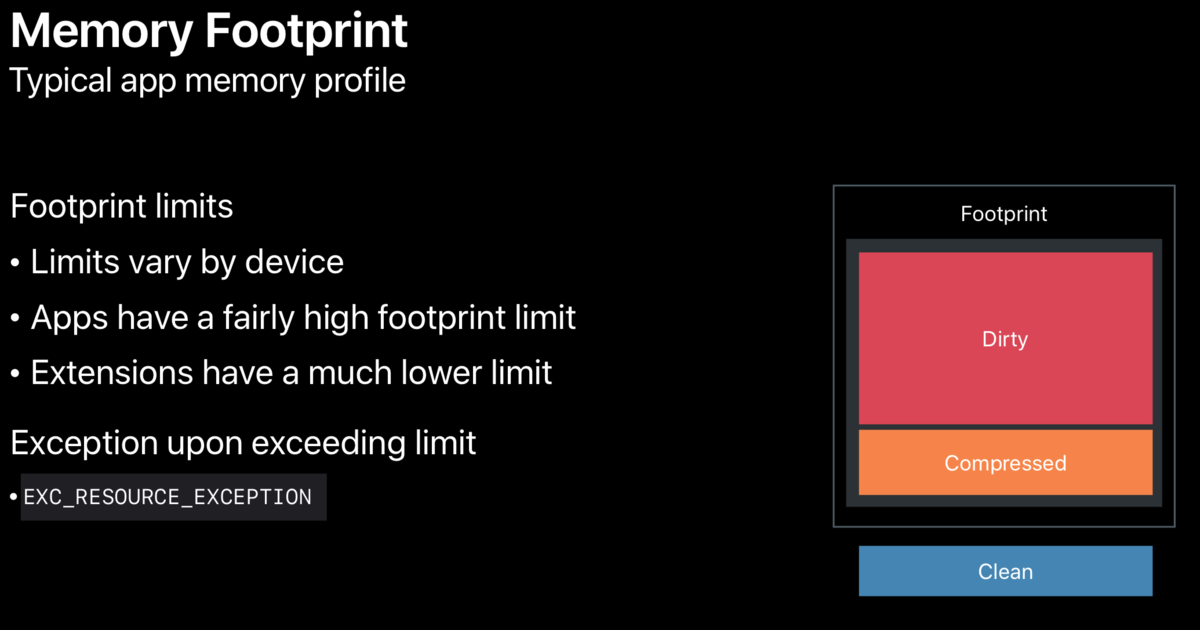

Memory page * * is the smallest unit in memory management. It is allocated by the system. A page may hold multiple objects, or a large object may span multiple pages. Usually it is 16KB in size and has three types of pages.

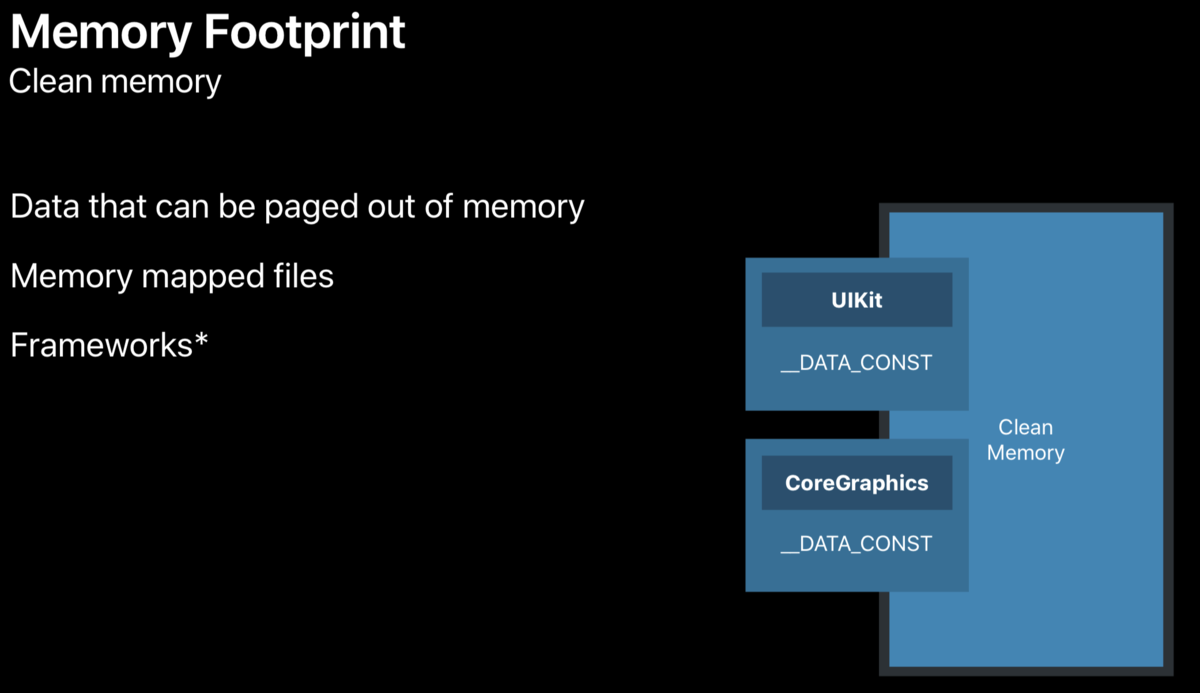

- Clean Memory

Clean memory includes three types: memory that can be paged out, memory mapping file, and framework used by App (each framework has_ DATA_CONST segment, usually in clean state, but with runtime swilling, it becomes dirty).At the beginning, the allocated pages are clean (except for the allocation of objects in the heap). When we write the App data, it becomes dirty. The files read into memory from the hard disk are also read-only and clean page s.

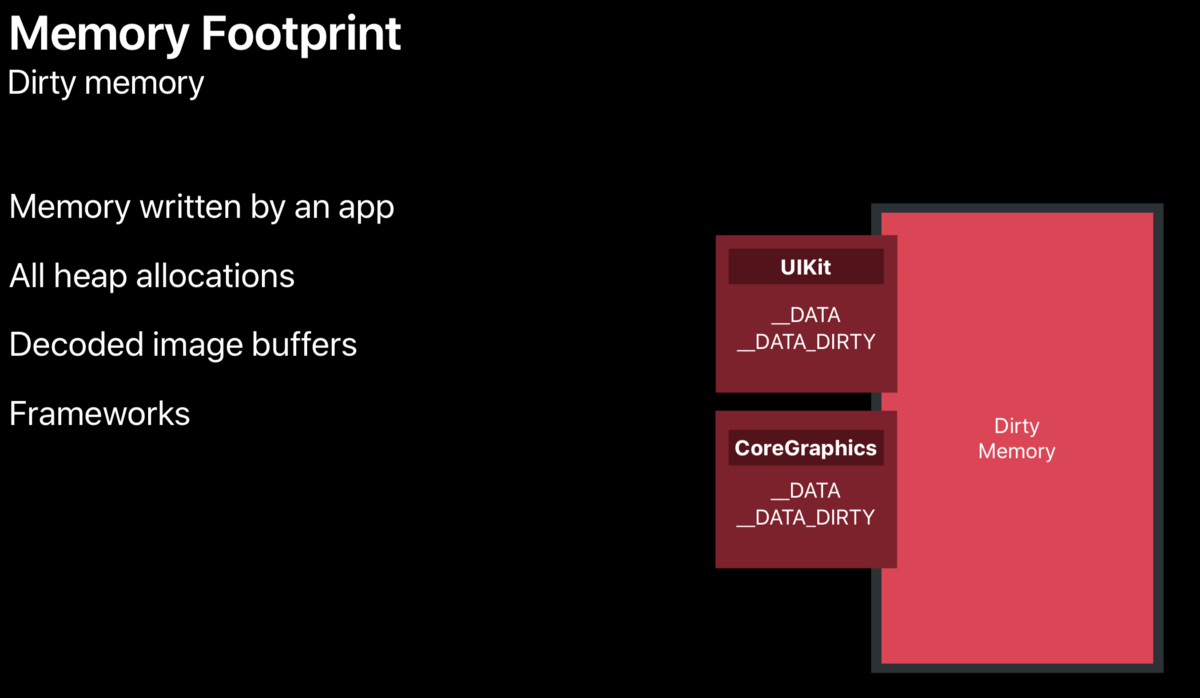

- Dirty Memory

Dirty memory includes four types: memory written by App, all heap allocated objects, image decoding buffer, framework (framework has_ Data segments and_ DATA_DIRTY segments, their memory is dirty).

In the process of using framework, Dirty memory will be generated. Using single instance or global initialization method can help reduce Dirty memory (because once a single instance is created, it will not be destroyed. It is always in memory, and the system does not think of it as Dirty memory).

- Compressed Memory

Due to the limitation of flash memory capacity and read / write, iOS does not have the mechanism of switching space, but introduces memory compressor in iOS 7. It is able to compress the memory objects that have not been used in recent period when the memory is tight. The memory compressor will compress the objects and release more page s. The memory compressor decompresses and reuses it when needed. It can save memory and improve response speed.

For example, when an App uses a Framework, it has an NSDictionary attribute to store data and uses 3 pages of memory. When it is not accessed recently, the memory compressor compresses it to 1 page, and when it is used again, it reverts to 3 pages.

App running memory = pageNumbers * pageSize. Because Compressed Memory belongs to Dirty memory. So Memory footprint = dirtySize + CompressedSize

Different devices have different upper limit of memory occupation, higher upper limit of App, lower upper limit of extension, crash to exc beyond the upper limit_ RESOURCE_ EXCEPTION.

Next, let's talk about how to get the upper limit of memory, and how to monitor whether the App is forced to kill because it occupies too much memory.

3. Get memory information

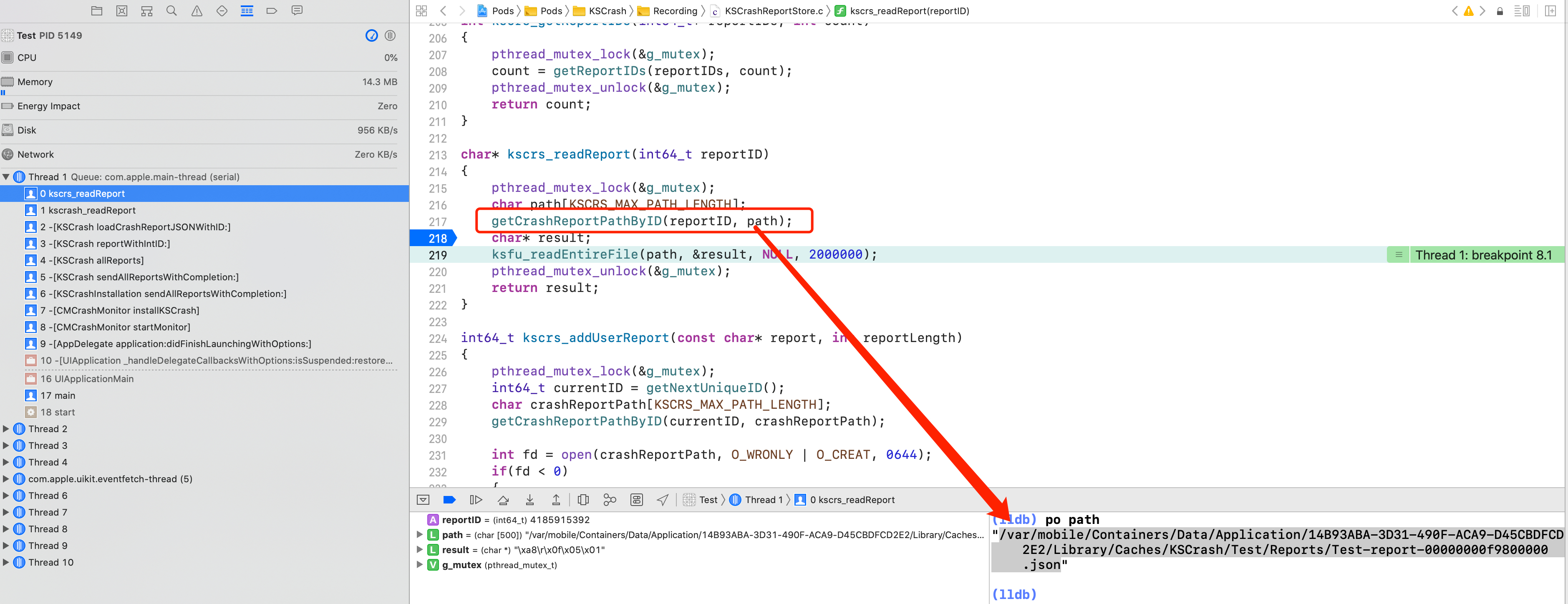

3.1 calculating memory limit through JetsamEvent log

When the App is killed by Jetsam mechanism, the phone will generate a system log. View path: settings privacy Analytics & improvements analytics data. You can see the logs in the form of JetsamEvent-2020-03-14-161828.ips, starting with JetsamEvent. These JetsamEvent logs are all left by the iOS system kernel to kill apps that have low priority (idle, frontmost, suspended) and occupy more memory than the system memory limit.

The log contains App memory information. You can see that there is a pageSize field at the top of the log, and find the per process limit. The rpages in the structure where the node is located can be obtained by rpages * pageSize.

In the log, the largestProcess field represents the App name; the reason field represents the memory reason; and the states field represents the status of the App (idle, suspended, frontmost...) when it crashes.

In order to test the accuracy of the data, I will test all the apps of the two devices (iPhone 6s plus/13.3.1, iPhone 11 Pro/13.3.1) and quit completely. I only ran a Demo App to test the memory threshold. The ViewController code is as follows

- (void)viewDidLoad { [super viewDidLoad]; NSMutableArray *array = [NSMutableArray array]; for (NSInteger index = 0; index < 10000000; index++) { UIImageView *imageView = [[UIImageView alloc] initWithFrame:CGRectMake(0, 0, 100, 100)]; UIImage *image = [UIImage imageNamed:@"AppIcon"]; imageView.image = image; [array addObject:imageView]; } }

iPhone 6s plus/13.3.1 data are as follows:

{"bug_type":"298","timestamp":"2020-03-19 17:23:45.94 +0800","os_version":"iPhone OS 13.3.1 (17D50)","incident_id":"DA8AF66D-24E8-458C-8734-981866942168"} { "crashReporterKey" : "fc9b659ce486df1ed1b8062d5c7c977a7eb8c851", "kernel" : "Darwin Kernel Version 19.3.0: Thu Jan 9 21:10:44 PST 2020; root:xnu-6153.82.3~1\/RELEASE_ARM64_S8000", "product" : "iPhone8,2", "incident" : "DA8AF66D-24E8-458C-8734-981866942168", "date" : "2020-03-19 17:23:45.93 +0800", "build" : "iPhone OS 13.3.1 (17D50)", "timeDelta" : 332, "memoryStatus" : { "compressorSize" : 48499, "compressions" : 7458651, "decompressions" : 5190200, "zoneMapCap" : 744407040, "largestZone" : "APFS_4K_OBJS", "largestZoneSize" : 41402368, "pageSize" : 16384, "uncompressed" : 104065, "zoneMapSize" : 141606912, "memoryPages" : { "active" : 26214, "throttled" : 0, "fileBacked" : 14903, "wired" : 20019, "anonymous" : 37140, "purgeable" : 142, "inactive" : 23669, "free" : 2967, "speculative" : 2160 } }, "largestProcess" : "Test", "genCounter" : 0, "processes" : [ { "uuid" : "39c5738b-b321-3865-a731-68064c4f7a6f", "states" : [ "daemon", "idle" ], "lifetimeMax" : 188, "age" : 948223699030, "purgeable" : 0, "fds" : 25, "coalition" : 422, "rpages" : 177, "pid" : 282, "idleDelta" : 824711280, "name" : "com.apple.Safari.SafeBrowsing.Se", "cpuTime" : 10.275422000000001 }, // ... { "uuid" : "83dbf121-7c0c-3ab5-9b66-77ee926e1561", "states" : [ "frontmost" ], "killDelta" : 2592, "genCount" : 0, "age" : 1531004794, "purgeable" : 0, "fds" : 50, "coalition" : 1047, "rpages" : 92806, "reason" : "per-process-limit", "pid" : 2384, "cpuTime" : 59.464373999999999, "name" : "Test", "lifetimeMax" : 92806 }, // ... ] }

The OOM threshold of iPhone 6s plus/13.3.1 is (16384*92806)/(1024*1024)=1450.09375M

iPhone 11 Pro/13.3.1 data are as follows:

{"bug_type":"298","timestamp":"2020-03-19 17:30:28.39 +0800","os_version":"iPhone OS 13.3.1 (17D50)","incident_id":"7F111601-BC7A-4BD7-A468-CE3370053057"} { "crashReporterKey" : "bc2445adc164c399b330f812a48248e029e26276", "kernel" : "Darwin Kernel Version 19.3.0: Thu Jan 9 21:11:10 PST 2020; root:xnu-6153.82.3~1\/RELEASE_ARM64_T8030", "product" : "iPhone12,3", "incident" : "7F111601-BC7A-4BD7-A468-CE3370053057", "date" : "2020-03-19 17:30:28.39 +0800", "build" : "iPhone OS 13.3.1 (17D50)", "timeDelta" : 189, "memoryStatus" : { "compressorSize" : 66443, "compressions" : 25498129, "decompressions" : 15532621, "zoneMapCap" : 1395015680, "largestZone" : "APFS_4K_OBJS", "largestZoneSize" : 41222144, "pageSize" : 16384, "uncompressed" : 127027, "zoneMapSize" : 169639936, "memoryPages" : { "active" : 58652, "throttled" : 0, "fileBacked" : 20291, "wired" : 45838, "anonymous" : 96445, "purgeable" : 4, "inactive" : 54368, "free" : 5461, "speculative" : 3716 } }, "largestProcess" : "Hangzhou Xiaoliu", "genCounter" : 0, "processes" : [ { "uuid" : "2dd5eb1e-fd31-36c2-99d9-bcbff44efbb7", "states" : [ "daemon", "idle" ], "lifetimeMax" : 171, "age" : 5151034269954, "purgeable" : 0, "fds" : 50, "coalition" : 66, "rpages" : 164, "pid" : 11276, "idleDelta" : 3801132318, "name" : "wcd", "cpuTime" : 3.430787 }, // ... { "uuid" : "63158edc-915f-3a2b-975c-0e0ac4ed44c0", "states" : [ "frontmost" ], "killDelta" : 4345, "genCount" : 0, "age" : 654480778, "purgeable" : 0, "fds" : 50, "coalition" : 1718, "rpages" : 134278, "reason" : "per-process-limit", "pid" : 14206, "cpuTime" : 23.955463999999999, "name" : "Hangzhou Xiaoliu", "lifetimeMax" : 134278 }, // ... ] }

The OOM threshold of iPhone 11 Pro/13.3.1 is: (16384*134278)/(1024*1024)=2098.09375M

How does iOS discover Jetsam?

Mac OS / IOS is a BSD derived system, its kernel is Mach, but the interface exposed to the upper layer is generally based on the packaging of Mach by BSD layer. Mach is a microkernel architecture, in which real virtual memory management is also carried out. BSD provides the upper interface for memory management. Jetsam events are also generated by BSD. bsd_init function is the entry, which basically initializes each subsystem, such as virtual memory management.

// 1. Initialize the kernel memory allocator. Initialize the BSD memory zone. This zone is built based on the zone of the Mach kernel kmeminit(); // 2. Initialize background freezing, a unique feature on IOS, is a resident monitoring thread for memory and process sleep #if CONFIG_FREEZE #ifndef CONFIG_MEMORYSTATUS #error "CONFIG_FREEZE defined without matching CONFIG_MEMORYSTATUS" #endif /* Initialise background freezing */ bsd_init_kprintf("calling memorystatus_freeze_init\n"); memorystatus_freeze_init(); #endif> // 3. iOS unique, JetSAM (i.e. resident monitoring thread for low memory events) #if CONFIG_MEMORYSTATUS /* Initialize kernel memory status notifications */ bsd_init_kprintf("calling memorystatus_init\n"); memorystatus_init(); #endif /* CONFIG_MEMORYSTATUS */

The main function is to open two threads with the highest priority to monitor the memory of the whole system.

CONFIG_ When freeze is on, the kernel freezes rather than kills the process. The freezing function is to start a MEMORYSTATUS in the kernel_ freeze_ Thread performs the process, calling memorystatus_ after receiving the signal. freeze_ top_ Process is frozen.

iOS system will start the highest priority thread vm_pressure_monitor to monitor the memory pressure of the system and maintain all App processes through a stack. The iOS system also maintains a memory snapshot table, which is used to save the consumption of each process memory page. The logic related to Jetsam, or memorystatus, can be found in Kern in XNU project_ memorystatus. H and Kern_ See the source code of memorystatus. C.

Before the iOS system forcibly kills the App due to high memory consumption, at least 6 seconds can be used for priority judgment, and the JetsamEvent log is also generated in these 6 seconds.

As mentioned above, iOS system has no exchange space, so memory status mechanism (also known as Jetsam) is introduced. That is to say, free as much memory as possible on iOS system for current App. This mechanism is manifested in priority, which is to kill the background application first; if there is still not enough memory, it will kill the current application. In Mac OS, MemoryStatus only kills processes marked as idle exits.

The MemoryStatus mechanism will open a memorystatus_jetsam_thread, which is responsible for killing apps and logging, does not send messages, so the memory pressure detection thread cannot get the messages of killing apps.

When the monitoring thread finds that an App has memory pressure, it will issue a notification, and the App with memory will execute the didReceiveMemoryWarning agent method. At this time, we still have the opportunity to do some memory resource release logic, which may prevent the App from being killed by the system.

Source code view

The iOS system kernel has an array dedicated to maintaining thread priority. Each item in the array is a structure containing a list of processes. The structure is as follows:

#define MEMSTAT_BUCKET_COUNT (JETSAM_PRIORITY_MAX + 1) typedef struct memstat_bucket { TAILQ_HEAD(, proc) list; int count; } memstat_bucket_t; memstat_bucket_t memstat_bucket[MEMSTAT_BUCKET_COUNT];

At Kern_ Priority information can be seen in MEMORYSTATUS. H

#define JETSAM_PRIORITY_IDLE_HEAD -2 /* The value -1 is an alias to JETSAM_PRIORITY_DEFAULT */ #define JETSAM_PRIORITY_IDLE 0 #define JETSAM_PRIORITY_IDLE_DEFERRED 1 /* Keeping this around till all xnu_quick_tests can be moved away from it.*/ #define JETSAM_PRIORITY_AGING_BAND1 JETSAM_PRIORITY_IDLE_DEFERRED #define JETSAM_PRIORITY_BACKGROUND_OPPORTUNISTIC 2 #define JETSAM_PRIORITY_AGING_BAND2 JETSAM_PRIORITY_BACKGROUND_OPPORTUNISTIC #define JETSAM_PRIORITY_BACKGROUND 3 #define JETSAM_PRIORITY_ELEVATED_INACTIVE JETSAM_PRIORITY_BACKGROUND #define JETSAM_PRIORITY_MAIL 4 #define JETSAM_PRIORITY_PHONE 5 #define JETSAM_PRIORITY_UI_SUPPORT 8 #define JETSAM_PRIORITY_FOREGROUND_SUPPORT 9 #define JETSAM_PRIORITY_FOREGROUND 10 #define JETSAM_PRIORITY_AUDIO_AND_ACCESSORY 12 #define JETSAM_PRIORITY_CONDUCTOR 13 #define JETSAM_PRIORITY_HOME 16 #define JETSAM_PRIORITY_EXECUTIVE 17 #define JETSAM_PRIORITY_IMPORTANT 18 #define JETSAM_PRIORITY_CRITICAL 19 #define JETSAM_PRIORITY_MAX 21

It can be seen clearly that the background App priority is jetsam_ PRIORITY_ Backgroup is 3, the priority of foreground App is JETSAM_PRIORITY_FOREGROUND is 10.

The priority rules are: kernel thread priority > operating system priority > App priority. And the priority of foreground App is higher than that of background App; when the priority of threads is the same, the priority of threads with more CPU will be reduced.

At Kern_ You can see the possible reasons for OOM in MEMORYSTATUS. C:

/* For logging clarity */ static const char *memorystatus_kill_cause_name[] = { "" , /* kMemorystatusInvalid */ "jettisoned" , /* kMemorystatusKilled */ "highwater" , /* kMemorystatusKilledHiwat */ "vnode-limit" , /* kMemorystatusKilledVnodes */ "vm-pageshortage" , /* kMemorystatusKilledVMPageShortage */ "proc-thrashing" , /* kMemorystatusKilledProcThrashing */ "fc-thrashing" , /* kMemorystatusKilledFCThrashing */ "per-process-limit" , /* kMemorystatusKilledPerProcessLimit */ "disk-space-shortage" , /* kMemorystatusKilledDiskSpaceShortage */ "idle-exit" , /* kMemorystatusKilledIdleExit */ "zone-map-exhaustion" , /* kMemorystatusKilledZoneMapExhaustion */ "vm-compressor-thrashing" , /* kMemorystatusKilledVMCompressorThrashing */ "vm-compressor-space-shortage" , /* kMemorystatusKilledVMCompressorSpaceShortage */ };

View memorystatus_init is the key code to initialize Jetsam thread in this function

__private_extern__ void memorystatus_init(void) { // ... /* Initialize the jetsam_threads state array */ jetsam_threads = kalloc(sizeof(struct jetsam_thread_state) * max_jetsam_threads); /* Initialize all the jetsam threads */ for (i = 0; i < max_jetsam_threads; i++) { result = kernel_thread_start_priority(memorystatus_thread, NULL, 95 /* MAXPRI_KERNEL */, &jetsam_threads[i].thread); if (result == KERN_SUCCESS) { jetsam_threads[i].inited = FALSE; jetsam_threads[i].index = i; thread_deallocate(jetsam_threads[i].thread); } else { panic("Could not create memorystatus_thread %d", i); } } }

/* * High-level priority assignments * ************************************************************************* * 127 Reserved (real-time) * A * + * (32 levels) * + * V * 96 Reserved (real-time) * 95 Kernel mode only * A * + * (16 levels) * + * V * 80 Kernel mode only * 79 System high priority * A * + * (16 levels) * + * V * 64 System high priority * 63 Elevated priorities * A * + * (12 levels) * + * V * 52 Elevated priorities * 51 Elevated priorities (incl. BSD +nice) * A * + * (20 levels) * + * V * 32 Elevated priorities (incl. BSD +nice) * 31 Default (default base for threads) * 30 Lowered priorities (incl. BSD -nice) * A * + * (20 levels) * + * V * 11 Lowered priorities (incl. BSD -nice) * 10 Lowered priorities (aged pri's) * A * + * (11 levels) * + * V * 0 Lowered priorities (aged pri's / idle) ************************************************************************* */

It can be seen that the threads of user state applications cannot be higher than the operating system and kernel. Moreover, there are also differences in the priority allocation of threads among user applications, such as the priority of applications in the foreground is higher than that in the background. The highest priority of applications on iOS is SpringBoard; in addition, the priority of threads is not constant. Mach dynamically adjusts thread priority based on thread utilization and overall system load. If the CPU is consumed too much, the priority of the thread will be reduced. If the thread is starved too much, the priority of the thread will be increased. However, no matter how it changes, the program cannot exceed the priority range of its thread.

It can be seen that the system will turn on Max according to the kernel startup parameters and device performance_ jetsam_ Threads (1 in general, 3 in special) jetsam threads, and the priority of these threads is 95, that is, MAXPRI_KERNEL (note that 95 here is the thread priority, and XNU's thread priority range is 0-127. The macro definition above is the process priority, with the range: - 2-19).

Next, analyze the memory status_ Thread function, mainly responsible for the initialization of thread startup

static void memorystatus_thread(void *param __unused, wait_result_t wr __unused) { //... while (memorystatus_action_needed()) { boolean_t killed; int32_t priority; uint32_t cause; uint64_t jetsam_reason_code = JETSAM_REASON_INVALID; os_reason_t jetsam_reason = OS_REASON_NULL; cause = kill_under_pressure_cause; switch (cause) { case kMemorystatusKilledFCThrashing: jetsam_reason_code = JETSAM_REASON_MEMORY_FCTHRASHING; break; case kMemorystatusKilledVMCompressorThrashing: jetsam_reason_code = JETSAM_REASON_MEMORY_VMCOMPRESSOR_THRASHING; break; case kMemorystatusKilledVMCompressorSpaceShortage: jetsam_reason_code = JETSAM_REASON_MEMORY_VMCOMPRESSOR_SPACE_SHORTAGE; break; case kMemorystatusKilledZoneMapExhaustion: jetsam_reason_code = JETSAM_REASON_ZONE_MAP_EXHAUSTION; break; case kMemorystatusKilledVMPageShortage: /* falls through */ default: jetsam_reason_code = JETSAM_REASON_MEMORY_VMPAGESHORTAGE; cause = kMemorystatusKilledVMPageShortage; break; } /* Highwater */ boolean_t is_critical = TRUE; if (memorystatus_act_on_hiwat_processes(&errors, &hwm_kill, &post_snapshot, &is_critical)) { if (is_critical == FALSE) { /* * For now, don't kill any other processes. */ break; } else { goto done; } } jetsam_reason = os_reason_create(OS_REASON_JETSAM, jetsam_reason_code); if (jetsam_reason == OS_REASON_NULL) { printf("memorystatus_thread: failed to allocate jetsam reason\n"); } if (memorystatus_act_aggressive(cause, jetsam_reason, &jld_idle_kills, &corpse_list_purged, &post_snapshot)) { goto done; } /* * memorystatus_kill_top_process() drops a reference, * so take another one so we can continue to use this exit reason * even after it returns */ os_reason_ref(jetsam_reason); /* LRU */ killed = memorystatus_kill_top_process(TRUE, sort_flag, cause, jetsam_reason, &priority, &errors); sort_flag = FALSE; if (killed) { if (memorystatus_post_snapshot(priority, cause) == TRUE) { post_snapshot = TRUE; } /* Jetsam Loop Detection */ if (memorystatus_jld_enabled == TRUE) { if ((priority == JETSAM_PRIORITY_IDLE) || (priority == system_procs_aging_band) || (priority == applications_aging_band)) { jld_idle_kills++; } else { /* * We've reached into bands beyond idle deferred. * We make no attempt to monitor them */ } } if ((priority >= JETSAM_PRIORITY_UI_SUPPORT) && (total_corpses_count() > 0) && (corpse_list_purged == FALSE)) { /* * If we have jetsammed a process in or above JETSAM_PRIORITY_UI_SUPPORT * then we attempt to relieve pressure by purging corpse memory. */ task_purge_all_corpses(); corpse_list_purged = TRUE; } goto done; } if (memorystatus_avail_pages_below_critical()) { /* * Still under pressure and unable to kill a process - purge corpse memory */ if (total_corpses_count() > 0) { task_purge_all_corpses(); corpse_list_purged = TRUE; } if (memorystatus_avail_pages_below_critical()) { /* * Still under pressure and unable to kill a process - panic */ panic("memorystatus_jetsam_thread: no victim! available pages:%llu\n", (uint64_t)memorystatus_available_pages); } } done: }

You can see that it opens a loop, memorystatus_action_needed() as a loop condition to continuously free memory.

static boolean_t memorystatus_action_needed(void) { #if CONFIG_EMBEDDED return (is_reason_thrashing(kill_under_pressure_cause) || is_reason_zone_map_exhaustion(kill_under_pressure_cause) || memorystatus_available_pages <= memorystatus_available_pages_pressure); #else /* CONFIG_EMBEDDED */ return (is_reason_thrashing(kill_under_pressure_cause) || is_reason_zone_map_exhaustion(kill_under_pressure_cause)); #endif /* CONFIG_EMBEDDED */ }

It's via VM_ The memory pressure sent by pagepout is used to judge whether the current memory resource is tight. Several situations: frequent page exchange in and out is_reason_thrashing, Mach Zone runs out of is_ reason_ zone_ map_ Exhausion, and available pages are lower than memory status_available_pages is the threshold.

Continue with memorystatus_thread, when memory is tight, will trigger high water type OOM first, that is to say, OOM will occur if a process exceeds its maximum limit of using memory in the process of using high water mark. In MEMORYSTATUS_ act_ On_ hiwat_ In processes(), through memorystatus_kill_hiwat_proc() in priority array memstat_ Find the process with the lowest priority in bucket, if the memory of the process is less than the threshold (Footprint_ In_ bytes <= memlimit_ In_ Bytes) will continue to look for processes with lower secondary priority until processes that occupy more than the threshold memory are found and killed.

Generally speaking, it's hard for a single App to touch the high water mark. If it can't finish any process, it will eventually reach the memorystatus_act_aggressive, which is where most OOM s happen.

static boolean_t memorystatus_act_aggressive(uint32_t cause, os_reason_t jetsam_reason, int *jld_idle_kills, boolean_t *corpse_list_purged, boolean_t *post_snapshot) { // ... if ( (jld_bucket_count == 0) || (jld_now_msecs > (jld_timestamp_msecs + memorystatus_jld_eval_period_msecs))) { /* * Refresh evaluation parameters */ jld_timestamp_msecs = jld_now_msecs; jld_idle_kill_candidates = jld_bucket_count; *jld_idle_kills = 0; jld_eval_aggressive_count = 0; jld_priority_band_max = JETSAM_PRIORITY_UI_SUPPORT; } //... }

From the above code, it can be seen that whether to actually execute kill or not is determined according to a certain period of time, provided that JLD_ now_ msecs > (jld_ timestamp_ msecs + memorystatus_jld_eval_period_msecs. That is, in MEMORYSTATUS_ jld_ eval_ period_ Kill in the condition occurs after MSECS.

/* Jetsam Loop Detection */ if (max_mem <= (512 * 1024 * 1024)) { /* 512 MB devices */ memorystatus_jld_eval_period_msecs = 8000; /* 8000 msecs == 8 second window */ } else { /* 1GB and larger devices */ memorystatus_jld_eval_period_msecs = 6000; /* 6000 msecs == 6 second window */ }

Among them, memorystatus_jld_eval_period_msecs takes a minimum value of 6 seconds. So we can do something in six seconds.

3.2 developers' income

stackoverflow There is a piece of data on which the OOM critical values of various devices are sorted out

| device | crash amount:MB | total amount:MB | percentage of total |

|---|---|---|---|

| iPad1 | 127 | 256 | 49% |

| iPad2 | 275 | 512 | 53% |

| iPad3 | 645 | 1024 | 62% |

| iPad4(iOS 8.1) | 585 | 1024 | 57% |

| Pad Mini 1st Generation | 297 | 512 | 58% |

| iPad Mini retina(iOS 7.1) | 696 | 1024 | 68% |

| iPad Air | 697 | 1024 | 68% |

| iPad Air 2(iOS 10.2.1) | 1383 | 2048 | 68% |

| iPad Pro 9.7"(iOS 10.0.2 (14A456)) | 1395 | 1971 | 71% |

| iPad Pro 10.5"(iOS 11 beta4) | 3057 | 4000 | 76% |

| iPad Pro 12.9" (2015)(iOS 11.2.1) | 3058 | 3999 | 76% |

| iPad 10.2(iOS 13.2.3) | 1844 | 2998 | 62% |

| iPod touch 4th gen(iOS 6.1.1) | 130 | 256 | 51% |

| iPod touch 5th gen | 286 | 512 | 56% |

| iPhone4 | 325 | 512 | 63% |

| iPhone4s | 286 | 512 | 56% |

| iPhone5 | 645 | 1024 | 62% |

| iPhone5s | 646 | 1024 | 63% |

| iPhone6(iOS 8.x) | 645 | 1024 | 62% |

| iPhone6 Plus(iOS 8.x) | 645 | 1024 | 62% |

| iPhone6s(iOS 9.2) | 1396 | 2048 | 68% |

| iPhone6s Plus(iOS 10.2.1) | 1396 | 2048 | 68% |

| iPhoneSE(iOS 9.3) | 1395 | 2048 | 68% |

| iPhone7(iOS 10.2) | 1395 | 2048 | 68% |

| iPhone7 Plus(iOS 10.2.1) | 2040 | 3072 | 66% |

| iPhone8(iOS 12.1) | 1364 | 1990 | 70% |

| iPhoneX(iOS 11.2.1) | 1392 | 2785 | 50% |

| iPhoneXS(iOS 12.1) | 2040 | 3754 | 54% |

| iPhoneXS Max(iOS 12.1) | 2039 | 3735 | 55% |

| iPhoneXR(iOS 12.1) | 1792 | 2813 | 63% |

| iPhone11(iOS 13.1.3) | 2068 | 3844 | 54% |

| iPhone11 Pro Max(iOS 13.2.3) | 2067 | 3740 | 55% |

3.3 trigger the high water mark of the current App

We can write timers, constantly apply for memory, and then use physics_ Footprint printing currently occupies memory. In principle, constantly applying for memory can trigger the Jetsam mechanism to kill the App. Then the memory occupation of the last printing is the upper limit of the current device's memory.

timer = [NSTimer scheduledTimerWithTimeInterval:0.01 target:self selector:@selector(allocateMemory) userInfo:nil repeats:YES]; - (void)allocateMemory { UIImageView *imageView = [[UIImageView alloc] initWithFrame:CGRectMake(0, 0, 100, 100)]; UIImage *image = [UIImage imageNamed:@"AppIcon"]; imageView.image = image; [array addObject:imageView]; memoryLimitSizeMB = [self usedSizeOfMemory]; if (memoryWarningSizeMB && memoryLimitSizeMB) { NSLog(@"----- memory warnning:%dMB, memory limit:%dMB", memoryWarningSizeMB, memoryLimitSizeMB); } } - (int)usedSizeOfMemory { task_vm_info_data_t taskInfo; mach_msg_type_number_t infoCount = TASK_VM_INFO_COUNT; kern_return_t kernReturn = task_info(mach_task_self(), TASK_VM_INFO, (task_info_t)&taskInfo, &infoCount); if (kernReturn != KERN_SUCCESS) { return 0; } return (int)(taskInfo.phys_footprint/1024.0/1024.0); }

3.4 acquisition method applicable to IOS 13 system

iOS13 start < OS / proc. H > medium size_t os_proc_available_memory(void); you can view the currently available memory.

Return Value

The number of bytes that the app may allocate before it hits its memory limit. If the calling process isn't an app, or if the process has already exceeded its memory limit, this function returns 0.

Discussion

Call this function to determine the amount of memory available to your app. The returned value corresponds to the current memory limit minus the memory footprint of your app at the time of the function call. Your app's memory footprint consists of the data that you allocated in RAM, and that must stay in RAM (or the equivalent) at all times. Memory limits can change during the app life cycle and don't necessarily correspond to the amount of physical memory available on the device.

Use the returned value as advisory information only and don't cache it. The precise value changes when your app does any work that affects memory, which can happen frequently.

Although this function lets you determine the amount of memory your app may safely consume, don't use it to maximize your app's memory usage. Significant memory use, even when under the current memory limit, affects system performance. For example, when your app consumes all of its available memory, the system may need to terminate other apps and system processes to accommodate your app's requests. Instead, always consume the smallest amount of memory you need to be responsive to the user's needs.

If you need more detailed information about the available memory resources, you can call task_info. However, be aware that task_info is an expensive call, whereas this function is much more efficient.

if (@available(iOS 13.0, *)) { return os_proc_available_memory() / 1024.0 / 1024.0; }

The API of App memory information can be found in Mach layer_ task_ basic_ The info structure stores the memory usage information of Mach task, where phys_footprint is the physical memory size used by the application_ Size is the virtual memory size.

#define MACH_TASK_BASIC_INFO 20 /* always 64-bit basic info */ struct mach_task_basic_info { mach_vm_size_t virtual_size; /* virtual memory size (bytes) */ mach_vm_size_t resident_size; /* resident memory size (bytes) */ mach_vm_size_t resident_size_max; /* maximum resident memory size (bytes) */ time_value_t user_time; /* total user run time for terminated threads */ time_value_t system_time; /* total system run time for terminated threads */ policy_t policy; /* default policy for new threads */ integer_t suspend_count; /* suspend count for task */ };

So get the code as

task_vm_info_data_t vmInfo; mach_msg_type_number_t count = TASK_VM_INFO_COUNT; kern_return_t kr = task_info(mach_task_self(), TASK_VM_INFO, (task_info_t)&vmInfo, &count); if (kr != KERN_SUCCESS) { return ; } CGFloat memoryUsed = (CGFloat)(vmInfo.phys_footprint/1024.0/1024.0);

Maybe some people are curious that it shouldn't be resident_ Does the size field get the memory usage? Found resident at the beginning of the test_ There is a big gap between size and Xcode measurement results. Using physics instead_ Footprint is close to the result of Xcode. And from WebKit source code It is confirmed in.

So on IOS 13, we can use OS_ proc_ available_ The available memory is obtained from memory, and the current available memory is obtained through phys_footprint gets the memory occupied by the current App. The sum of the two is the upper memory limit of the current device. If the sum exceeds, the Jetsam mechanism will be triggered.

- (CGFloat)limitSizeOfMemory { if (@available(iOS 13.0, *)) { task_vm_info_data_t taskInfo; mach_msg_type_number_t infoCount = TASK_VM_INFO_COUNT; kern_return_t kernReturn = task_info(mach_task_self(), TASK_VM_INFO, (task_info_t)&taskInfo, &infoCount); if (kernReturn != KERN_SUCCESS) { return 0; } return (CGFloat)((taskInfo.phys_footprint + os_proc_available_memory()) / (1024.0 * 1024.0); } return 0; }

Currently available memory: 1435.936752MB; currently occupied memory of App: 14.5MB, critical value: 1435.936752MB + 14.5MB= 1450.436MB, the same as the memory critical value obtained in 3.1 method: "iPhone 6s plus/13.3.1 phone OOM critical value is: (16384*92806)/(1024*1024)=1450.09375M".

3.5 get the memory limit value through XNU

In XNU, there are functions and macros specifically used to get the upper memory limit value, which can be accessed through MEMORYSTATUS_ priority_ The entry structure gets the priority and memory limit values of all processes.

typedef struct memorystatus_priority_entry { pid_t pid; int32_t priority; uint64_t user_data; int32_t limit; uint32_t state; } memorystatus_priority_entry_t;

Among them, priority represents the priority of the process, and limit represents the memory limit of the process. But this way requires root permission. I haven't tried it because there is no jailbreak device.

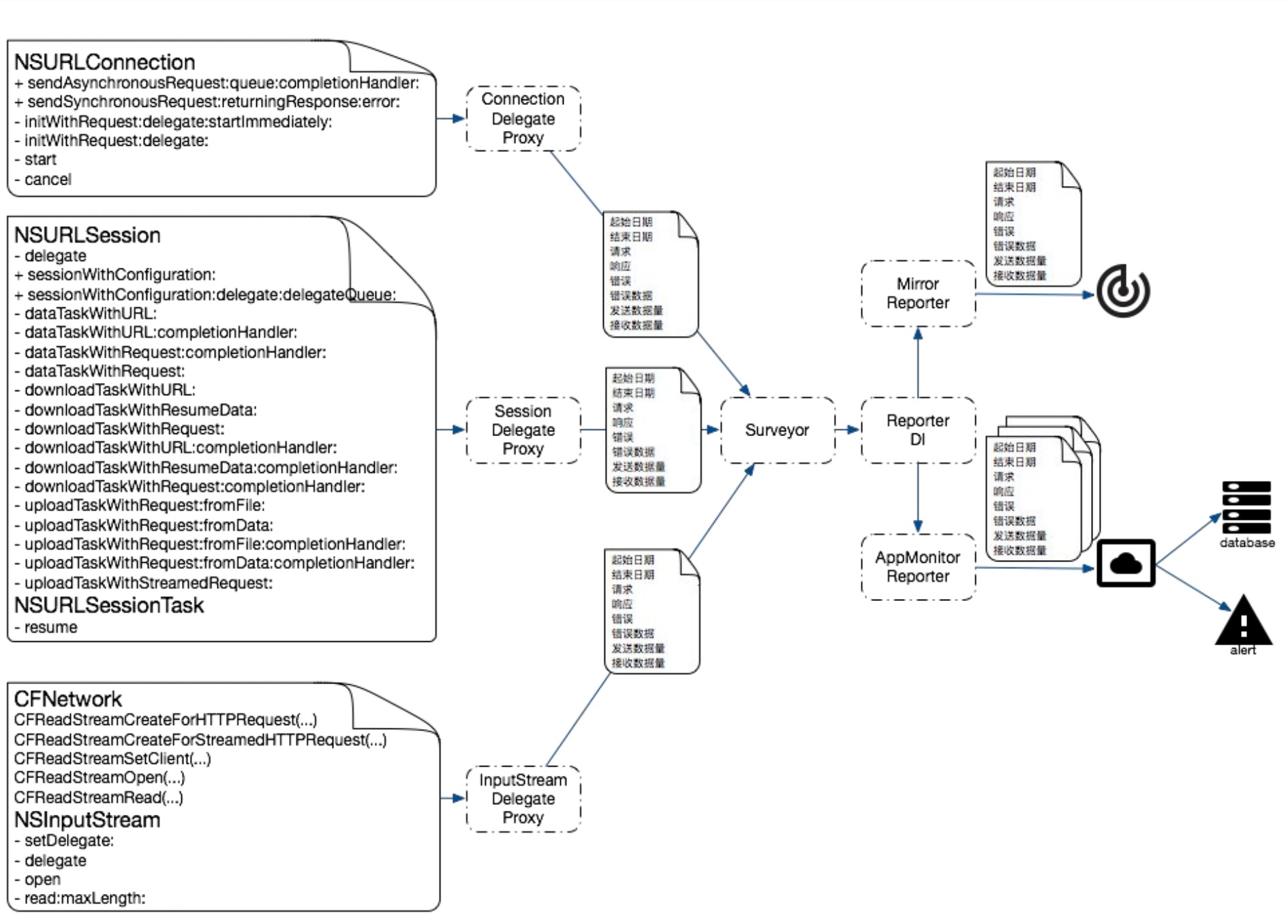

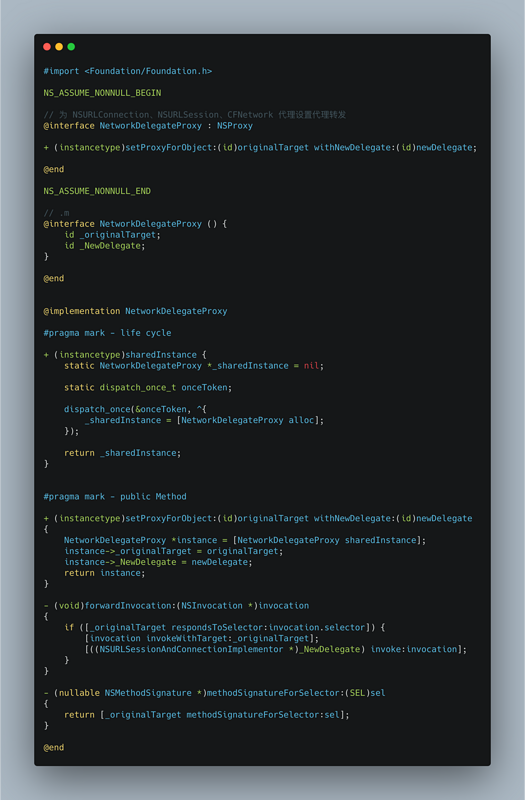

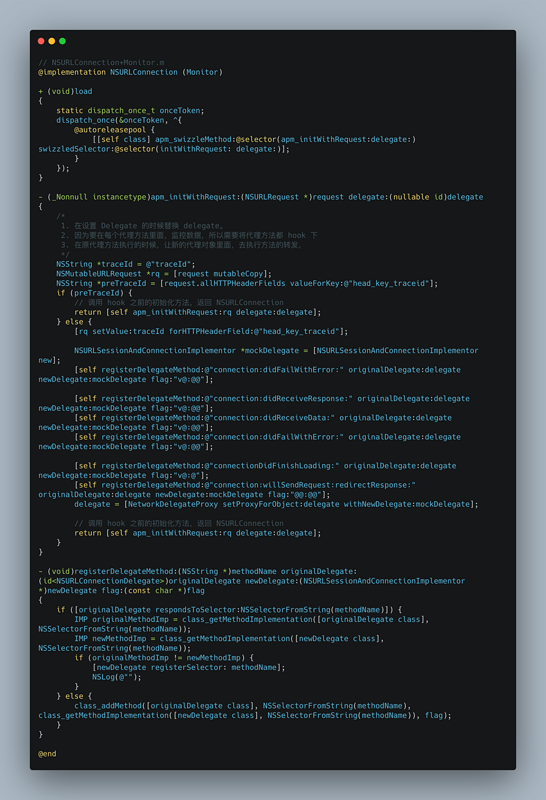

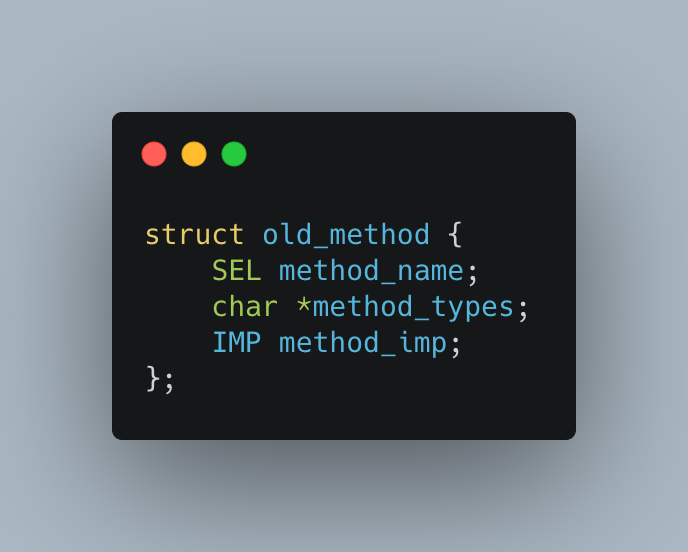

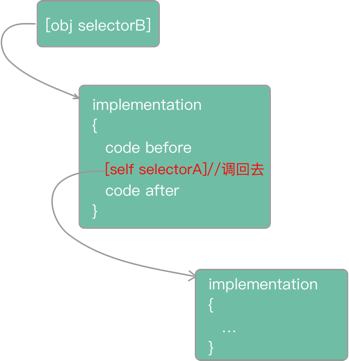

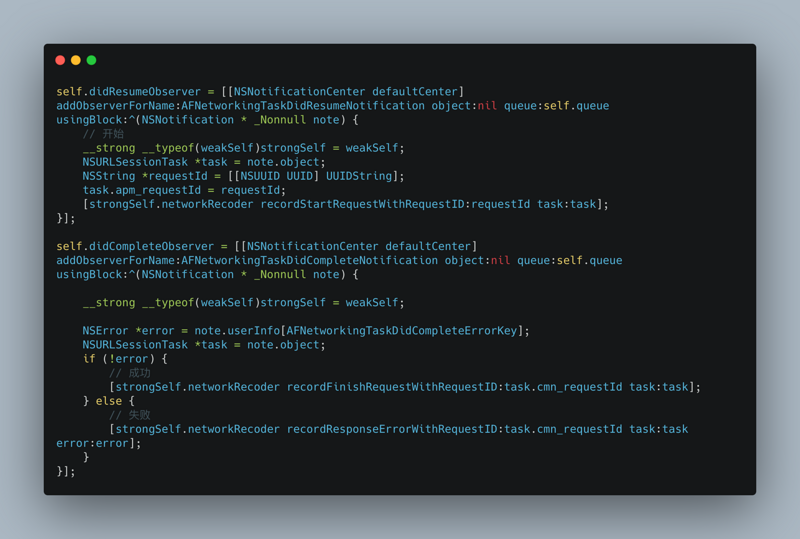

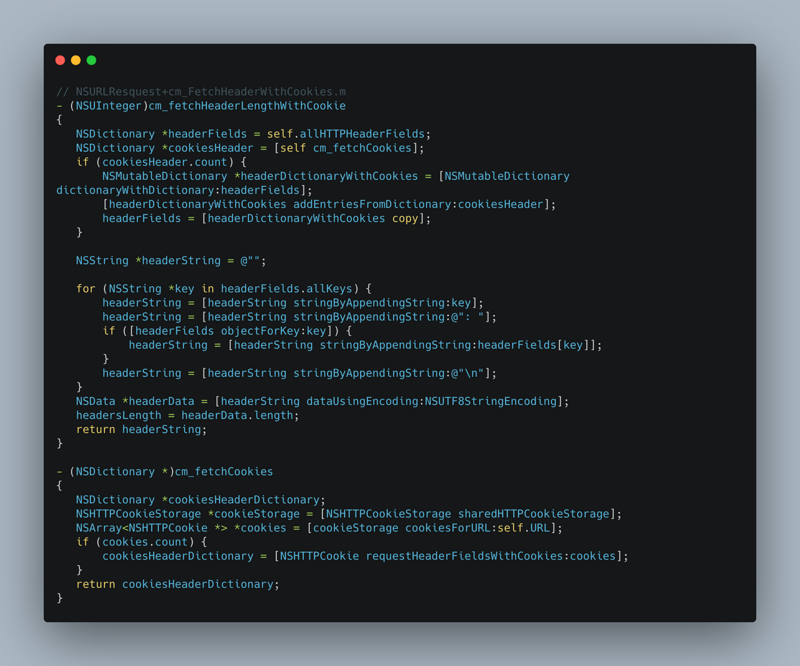

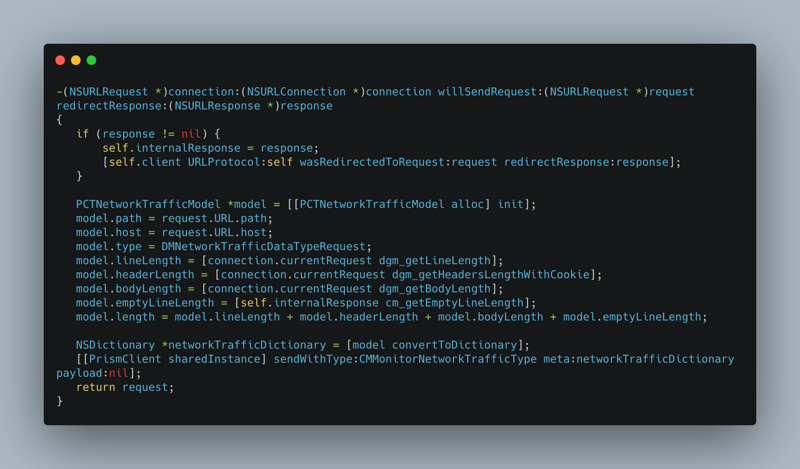

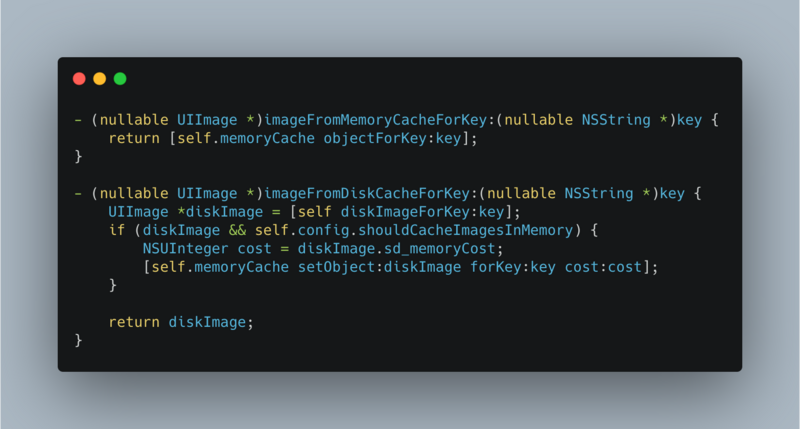

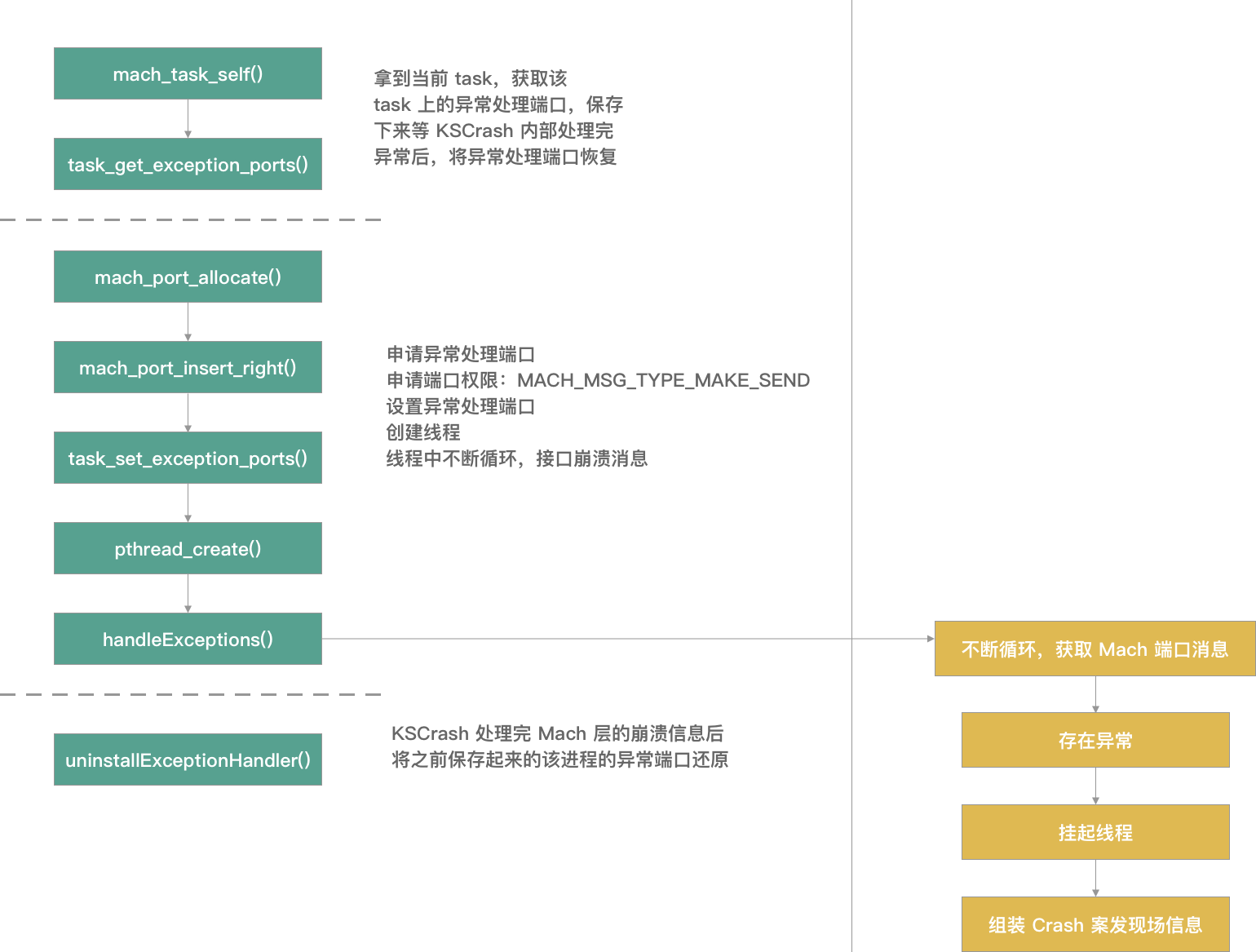

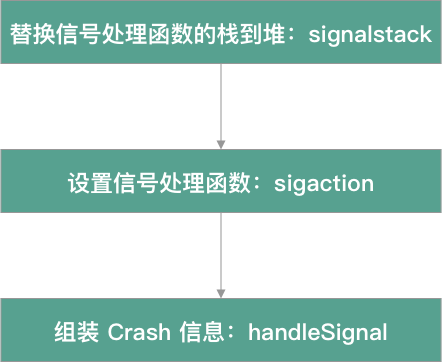

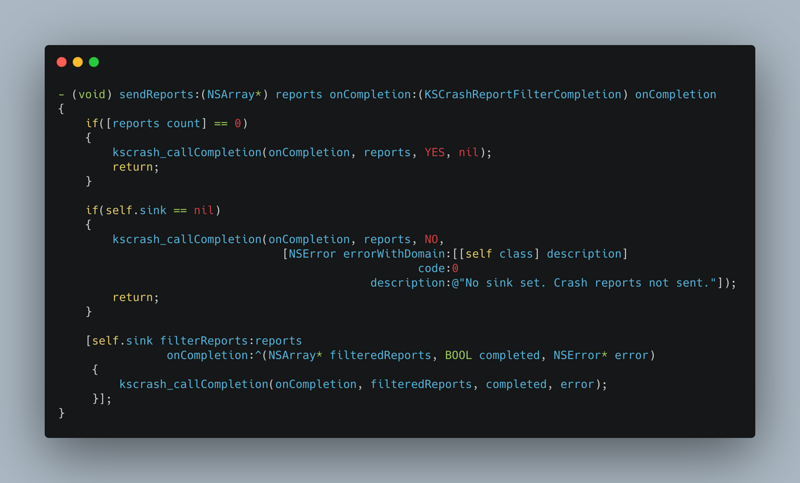

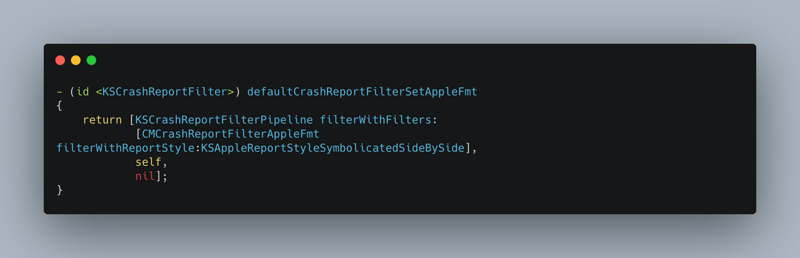

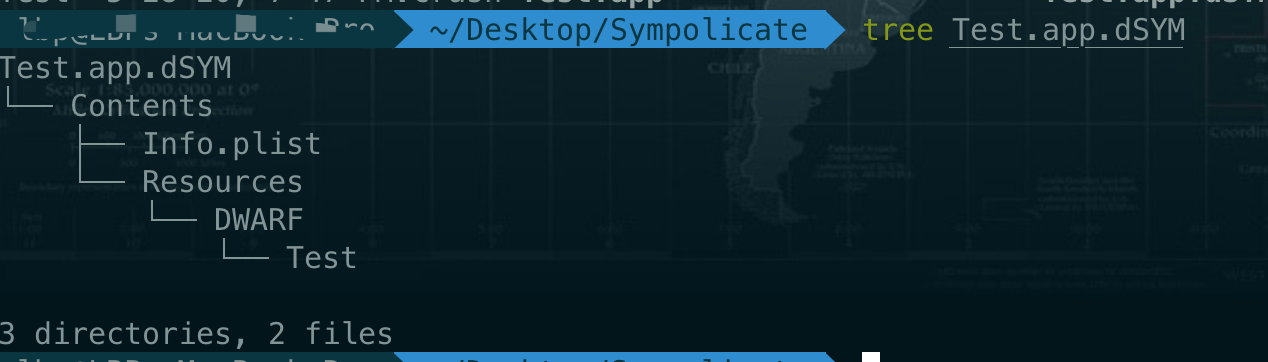

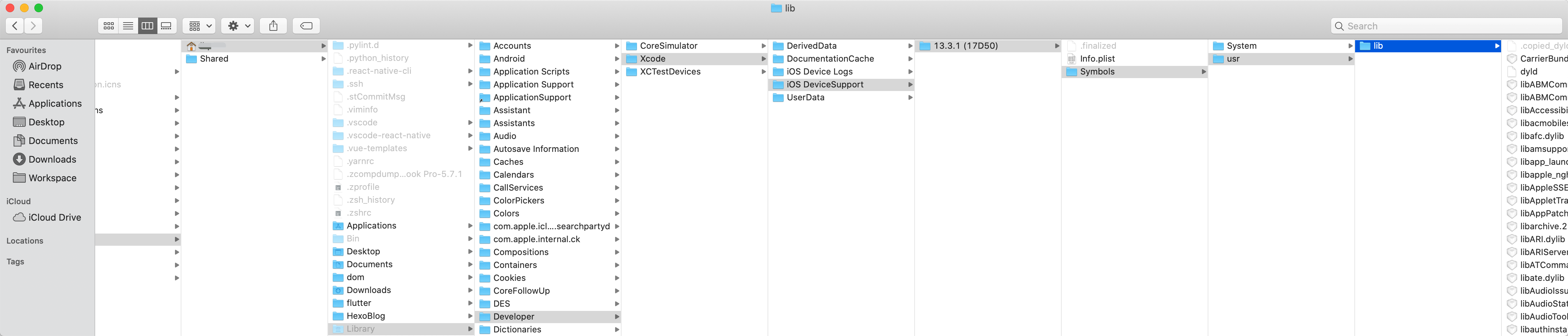

Relevant codes can be found in kern_memorystatus.h file. Function int MEMORYSTATUS is required_ control(uint32_ t command, int32_ t pid, uint32_ t flags, void *buffer, size_ t buffersize);