1. Foreword

Following the previous article on task_struct, this article explains mm_struct, the structure that manages a process's address space. It also gives a brief introduction to physical and virtual memory. For the detailed background and concepts, refer to CSAPP. They are not elaborated here, and the reader is assumed to already understand how virtual memory maps to physical memory. Later articles will cover the management of physical memory and memory mapping in user space and kernel space.

2. Basic concepts

- Storage is organized as a hierarchy of CPU, cache, main memory and disk because the faster a device is, the more expensive it is. Layering keeps the cost down. The MMU (memory management unit) is part of the CPU.

- Physical memory is limited, and letting multiple processes share physical memory directly is unsafe. Virtual memory was introduced to solve both problems.

- The layout of virtual memory follows the structure of the ELF file, with a data segment, heap, memory-mapping area, stack and so on.

- Within this layout, memory requested from the heap at run time is called dynamic memory.

- Virtual memory gives each process its own address space, which must be mapped onto physical memory for execution. The unit of this mapping between virtual and physical memory is the page.

- To manage the mapping, there are page tables, including multi-level page tables.

- To speed up translation, the CPU has a TLB (translation lookaside buffer) that caches recent translations.

- To meet the need for sharing, memory mapping supports shared memory.

- Because memory becomes fragmented, fragment management and garbage collectors were designed.
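The sketch below makes pages and multi-level page tables concrete by showing how a virtual address is split during a page-table walk. It assumes x86-64 4-level paging with 4 KiB pages. Under that scheme a 48-bit address consists of a 12-bit page offset plus four 9-bit table indices. The function names are hypothetical; the kernel has its own helpers such as pgd_index().

```c
#include <assert.h>
#include <stdint.h>

/* Assumed: x86-64 4-level paging, 4 KiB pages.
 * Bits 0-11 page offset, then four 9-bit indices:
 * PTE (12-20), PMD (21-29), PUD (30-38), PGD (39-47). */
#define PAGE_SHIFT  12
#define LEVEL_BITS  9
#define LEVEL_MASK  ((UINT64_C(1) << LEVEL_BITS) - 1)

/* Index into the page table at `level`: 0 = PGD (top) ... 3 = PTE (bottom). */
static unsigned table_index(uint64_t vaddr, int level)
{
    assert(level >= 0 && level < 4);
    unsigned shift = PAGE_SHIFT + LEVEL_BITS * (unsigned)(3 - level);
    return (unsigned)((vaddr >> shift) & LEVEL_MASK);
}

/* Byte offset inside the final 4 KiB page. */
static unsigned page_offset(uint64_t vaddr)
{
    return (unsigned)(vaddr & ((UINT64_C(1) << PAGE_SHIFT) - 1));
}
```

Consider an address built as (3 << 39) | (5 << 30) | (7 << 21) | (9 << 12) | 0x123. It selects entry 3 in the top-level table, then entries 5, 7 and 9 in the next levels, and byte 0x123 within the final page. Each level has only 512 entries, so tables need to exist only for the parts of the address space actually in use. That is what makes multi-level tables smaller than one flat table.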

3. Process memory management

User space contains:

- Code segment

- Global variables

- Constant strings

- Function stacks, holding function call frames, local variables, function arguments, etc.

- Heap: memory allocated by malloc, etc.

- Memory mappings: for example, glibc's code lives in a .so file and must also be mapped into memory before it can be called.

Kernel space contains:

- Kernel code

- Kernel global variables

- task_struct

- Kernel stacks

- Memory allocated dynamically in the kernel

- Page tables that map virtual addresses to physical addresses

A process's address space is managed by mm_struct. task_struct has the following memory-related member variables:

    struct mm_struct *mm;
    struct mm_struct *active_mm;

    /* Per-thread vma caching: */
    struct vmacache vmacache;

mm_struct structure is also more complex. We will introduce it step by step. First let's look at the address division of kernel state and user state. This is the highest_vm_end stores the maximum address of the current virtual memory address, while task_size is the size of user status.

    struct mm_struct {
        ......
        unsigned long task_size;        /* size of task vm space */
        unsigned long highest_vm_end;   /* highest vma end address */
        ......
    }

task_size is defined as follows. As the comments show, on 32-bit systems user space gets 3 GB of the 4 GB virtual address space. The 64-bit address space is huge, so a large unused gap is left between kernel space and user space for isolation. User space uses only the low 47 bits, i.e. 128 TB. Kernel space also gets 128 TB, at the top of the address space.

    #ifdef CONFIG_X86_32
    /*
     * User space process size: 3GB (default).
     */
    #define TASK_SIZE       PAGE_OFFSET
    #define TASK_SIZE_MAX   TASK_SIZE
    /*
    config PAGE_OFFSET
            hex
            default 0xC0000000
            depends on X86_32
    */
    #else
    /*
     * User space process size. 47bits minus one guard page.
     */
    #define TASK_SIZE_MAX   ((1UL << 47) - PAGE_SIZE)
    #define TASK_SIZE       (test_thread_flag(TIF_ADDR32) ? \
                             IA32_PAGE_OFFSET : TASK_SIZE_MAX)
    ......
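The limits above are plain arithmetic, and the sketch below recomputes them, assuming 4 KiB pages. The macro names TASK_SIZE_32 and TASK_SIZE_MAX_64 are hypothetical stand-ins for the two branches of the #ifdef. The sketch also adds a hypothetical helper, user_range_ok(). It checks whether a buffer lies entirely in user space, using the overflow-safe form such checks usually take. It is a simplified model, not the kernel's access_ok().

```c
#include <assert.h>
#include <stdint.h>

/* Assumed: 4 KiB pages and the default x86 constants quoted above. */
#define MODEL_PAGE_SIZE   UINT64_C(4096)
#define TASK_SIZE_32      UINT64_C(0xC0000000)                    /* PAGE_OFFSET: 3 GiB */
#define TASK_SIZE_MAX_64  ((UINT64_C(1) << 47) - MODEL_PAGE_SIZE) /* 128 TiB - 4 KiB */

/* Overflow-safe check that [addr, addr + len) lies entirely below task_size,
 * i.e. inside the user part of the address space. Writing it as
 * addr <= task_size - len avoids computing addr + len, which could wrap. */
static int user_range_ok(uint64_t addr, uint64_t len, uint64_t task_size)
{
    assert(task_size % MODEL_PAGE_SIZE == 0);   /* both limits are page aligned */
    return len <= task_size && addr <= task_size - len;
}
```

TASK_SIZE_MAX_64 evaluates to 0x00007ffffffff000, which is 128 TiB minus the one 4 KiB guard page. The first kernel address (0xffff800000000000 on 64-bit) is far above it.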

3.1 User space memory structure

For the user part of the address space, mm_struct has the following member variables:

- mmap_base: the starting address of the memory-mapping area

- mmap_legacy_base: the base address of the mapping area for bottom-up allocation. On 32-bit it is the fixed TASK_UNMAPPED_BASE. On 64-bit, where virtual addresses are randomized, it is TASK_UNMAPPED_BASE + mmap_rnd()

- hiwater_rss: high-water mark of RSS (resident set size) usage

- hiwater_vm: high-water mark of virtual memory usage

- total_vm: total number of pages mapped

- locked_vm: number of locked pages, which cannot be swapped out

- pinned_vm: number of pages that can be neither swapped out nor moved

- data_vm: number of pages used for data (private, writable, non-stack mappings)

- exec_vm: number of pages of executable mappings

- stack_vm: number of pages in the stack

- arg_lock: a spinlock that protects concurrent access to the fields below

- start_code and end_code: start and end of the executable code

- start_data and end_data: start and end of the initialized data

- start_brk: start of the heap

- brk: current end of the heap

- start_stack: start (bottom) of the stack. The current top of the stack is kept in the stack-pointer register, not in mm_struct

- arg_start and arg_end: location of the argument list, at the highest addresses of the stack

- env_start and env_end: location of the environment variables, also at the highest addresses of the stack

The corresponding fields in mm_struct:

    struct mm_struct {
        ......
        unsigned long mmap_base;        /* base of mmap area */
        unsigned long mmap_legacy_base; /* base of mmap area in bottom-up allocations */
        ......
        unsigned long hiwater_rss;      /* High-watermark of RSS usage */
        unsigned long hiwater_vm;       /* High-water virtual memory usage */

        unsigned long total_vm;         /* Total pages mapped */
        unsigned long locked_vm;        /* Pages that have PG_mlocked set */
        atomic64_t    pinned_vm;        /* Refcount permanently increased */
        unsigned long data_vm;          /* VM_WRITE & ~VM_SHARED & ~VM_STACK */
        unsigned long exec_vm;          /* VM_EXEC & ~VM_WRITE & ~VM_STACK */
        unsigned long stack_vm;         /* VM_STACK */

        spinlock_t arg_lock;            /* protect the below fields */
        unsigned long start_code, end_code, start_data, end_data;
        unsigned long start_brk, brk, start_stack;
        unsigned long arg_start, arg_end, env_start, env_end;

        unsigned long saved_auxv[AT_VECTOR_SIZE]; /* for /proc/PID/auxv */
        ......
    }
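The layout these fields describe can be observed indirectly from user space. The following sketch assumes a typical x86-64 or AArch64 Linux process. It records the address of:

- a function (code)
- an initialized global (data)
- an uninitialized global (BSS)
- the current program break returned by sbrk(0), which corresponds to mm->brk
- a local variable (stack)

The struct and variable names are hypothetical. The exact values change from run to run because of address randomization, but the ordering should match the layout described by start_code, start_data, start_brk and start_stack.

```c
#define _DEFAULT_SOURCE          /* expose sbrk() in <unistd.h> */
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <unistd.h>

int initialized_global = 42;     /* .data: between start_data and end_data */
int uninitialized_global;        /* .bss: after the data, below start_brk */

struct layout {
    uintptr_t code, data, bss, brk, stack;
};

static struct layout probe_layout(void)
{
    int local = 0;               /* lives on the current thread's stack */
    struct layout l;
    void *brk_now = sbrk(0);     /* current program break, i.e. mm->brk */

    assert(brk_now != (void *)-1);
    l.code  = (uintptr_t)&probe_layout;
    l.data  = (uintptr_t)&initialized_global;
    l.bss   = (uintptr_t)&uninitialized_global;
    l.brk   = (uintptr_t)brk_now;
    l.stack = (uintptr_t)&local;
    return l;
}

static void print_layout(const struct layout *l)
{
    printf("code  %#lx\ndata  %#lx\nbss   %#lx\nbrk   %#lx\nstack %#lx\n",
           (unsigned long)l->code, (unsigned long)l->data,
           (unsigned long)l->bss, (unsigned long)l->brk,
           (unsigned long)l->stack);
}
```

Calling print_layout() on the result of probe_layout() should print five increasing addresses: the code and data near the bottom, the heap just above the BSS, and the stack near the top of the user address space.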

From these member variables we can work out where each part of user space lies. We also need a structure that describes the attributes of each area: vm_area_struct.

    struct mm_struct {
        ......
        struct vm_area_struct *mmap;    /* list of VMAs */
        struct rb_root mm_rb;
        ......
    }

vm_area_struct is defined as follows. Through vm_next and vm_prev, a series of vm_area_struct objects forms a doubly linked list sorted by address. Each entry describes one area the process has allocated in user space.

- vm_start and vm_end: the start of the area and the first byte after its end

- vm_rb: this area's node in a red-black tree that contains all of the process's vm_area_struct objects, which makes lookup, insertion and deletion fast

- rb_subtree_gap: the largest free gap to the left of this area, either between this area and vm_prev or within its subtree of the tree. It is used to find a free range of the right size for later allocations

- vm_mm: the mm_struct this area belongs to

- vm_page_prot: access permissions for the area's pages; vm_flags: flag bits

- shared.rb and shared.rb_subtree_last: link areas that have a backing store (a mapped file) into the address_space->i_mmap interval tree

- anon_vma and anon_vma_chain: used for anonymous mappings. A virtual memory area can be mapped either to plain physical memory or to a file. A mapping backed only by physical memory is called an anonymous mapping. A file mapping uses vm_file to specify the mapped file and vm_pgoff to store the offset within it, in pages

- vm_ops: a pointer to a table of function pointers for operating on this area

- vm_private_data: private data

The full definition:

    /*
     * This struct defines a memory VMM memory area. There is one of these
     * per VM-area/task. A VM area is any part of the process virtual memory
     * space that has a special rule for the page-fault handlers (ie a shared
     * library, the executable area etc).
     */
    struct vm_area_struct {
        /* The first cache line has the info for VMA tree walking. */

        unsigned long vm_start;     /* Our start address within vm_mm. */
        unsigned long vm_end;       /* The first byte after our end address within vm_mm. */

        /* linked list of VM areas per task, sorted by address */
        struct vm_area_struct *vm_next, *vm_prev;

        struct rb_node vm_rb;

        /*
         * Largest free memory gap in bytes to the left of this VMA.
         * Either between this VMA and vma->vm_prev, or between one of the
         * VMAs below us in the VMA rbtree and its ->vm_prev. This helps
         * get_unmapped_area find a free area of the right size.
         */
        unsigned long rb_subtree_gap;

        /* Second cache line starts here. */

        struct mm_struct *vm_mm;    /* The address space we belong to. */
        pgprot_t vm_page_prot;      /* Access permissions of this VMA. */
        unsigned long vm_flags;     /* Flags, see mm.h. */

        /*
         * For areas with an address space and backing store,
         * linkage into the address_space->i_mmap interval tree.
         */
        struct {
            struct rb_node rb;
            unsigned long rb_subtree_last;
        } shared;

        /*
         * A file's MAP_PRIVATE vma can be in both i_mmap tree and anon_vma
         * list, after a COW of one of the file pages. A MAP_SHARED vma
         * can only be in the i_mmap tree. An anonymous MAP_PRIVATE, stack
         * or brk vma (with NULL file) can only be in an anon_vma list.
         */
        struct list_head anon_vma_chain; /* Serialized by mmap_sem & page_table_lock */
        struct anon_vma *anon_vma;  /* Serialized by page_table_lock */

        /* Function pointers to deal with this struct. */
        const struct vm_operations_struct *vm_ops;

        /* Information about our backing store: */
        unsigned long vm_pgoff;     /* Offset (within vm_file) in PAGE_SIZE units */
        struct file * vm_file;      /* File we map to (can be NULL). */
        void * vm_private_data;     /* was vm_pte (shared mem) */

        atomic_long_t swap_readahead_info;
    #ifndef CONFIG_MMU
        struct vm_region *vm_region;    /* NOMMU mapping region */
    #endif
    #ifdef CONFIG_NUMA
        struct mempolicy *vm_policy;    /* NUMA policy for the VMA */
    #endif
        struct vm_userfaultfd_ctx vm_userfaultfd_ctx;
    } __randomize_layout;
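The list-plus-tree design serves two common queries: "which area contains this address?" and "where is a free gap of this size?". The sketch below answers both with a linear walk. It uses a trimmed, hypothetical stand-in for vm_area_struct that keeps only vm_start, vm_end and the list pointers.

- toy_find_vma() follows the contract of the kernel's find_vma(): it returns the first area whose vm_end is above the address.
- toy_first_fit() is a bottom-up first-fit search in the spirit of get_unmapped_area().

The kernel does both in logarithmic time using vm_rb and rb_subtree_gap instead of walking the list.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, heavily trimmed stand-in for vm_area_struct. */
struct vma {
    unsigned long vm_start;        /* first address inside the area */
    unsigned long vm_end;          /* first address after the area */
    struct vma *vm_next, *vm_prev; /* list sorted by address */
};

/* Return the first area with addr < vm_end (addr may lie in the gap
 * just below it), or NULL if addr is above every area. */
static struct vma *toy_find_vma(struct vma *head, unsigned long addr)
{
    for (struct vma *v = head; v != NULL; v = v->vm_next)
        if (addr < v->vm_end)
            return v;
    return NULL;
}

/* Lowest address in [low, high) where len bytes fit between areas,
 * or 0 if there is none (so callers should use low > 0). */
static unsigned long toy_first_fit(struct vma *head, unsigned long low,
                                   unsigned long high, unsigned long len)
{
    assert(low <= high && len > 0);
    unsigned long candidate = low;

    for (struct vma *v = head; v != NULL && candidate < high; v = v->vm_next) {
        if (v->vm_end <= candidate)
            continue;                  /* area lies wholly below candidate */
        if (v->vm_start >= candidate && v->vm_start - candidate >= len)
            break;                     /* the gap before v is big enough */
        candidate = v->vm_end;         /* skip past v and keep looking */
    }
    return (candidate <= high && high - candidate >= len) ? candidate : 0;
}
```

Take areas [0x1000, 0x3000), [0x4000, 0x5000) and [0x8000, 0x9000). Looking up 0x3000 returns the second area, because the address lies in the gap below it. A 0x2000-byte request that starts the search at 0x1000 lands at 0x5000, the first gap large enough.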

An mm_struct has many vm_area_struct objects. They are built when the ELF file is loaded, in load_elf_binary(). After parsing the ELF file format, this function sets up the memory mappings, mainly by:

- Calling setup_new_exec to set mmap_base, the base of the memory-mapping area

- Calling setup_arg_pages to set up the stack's vm_area_struct. Here mm->arg_start is set to the bottom of the stack, and current->mm->start_stack is also the bottom of the stack

- Calling elf_map to map the code (and the other loadable segments) of the ELF file into memory

- Calling set_brk to set up the heap's vm_area_struct. It sets current->mm->start_brk = current->mm->brk, i.e. the heap starts out empty

- Calling load_elf_interp to map the program interpreter (the dynamic linker, such as ld-linux.so) into the memory-mapping area. The dynamic linker then maps the other shared libraries the program depends on

The relevant excerpt from load_elf_binary():

    static int load_elf_binary(struct linux_binprm *bprm)
    {
        ......
        setup_new_exec(bprm);
        ......
        /* Do this so that we can load the interpreter, if need be. We will
           change some of these later */
        retval = setup_arg_pages(bprm, randomize_stack_top(STACK_TOP),
                                 executable_stack);
        ......
        error = elf_map(bprm->file, load_bias + vaddr, elf_ppnt,
                        elf_prot, elf_flags, total_size);
        ......
        /* Calling set_brk effectively mmaps the pages that we need
         * for the bss and break sections. We must do this before
         * mapping in the interpreter, to make sure it doesn't wind
         * up getting placed where the bss needs to go.
         */
        retval = set_brk(elf_bss, elf_brk, bss_prot);
        ......
        elf_entry = load_elf_interp(&loc->interp_elf_ex,
                                    interpreter,
                                    &interp_map_addr,
                                    load_bias, interp_elf_phdata);
        ......
        current->mm->end_code = end_code;
        current->mm->start_code = start_code;
        current->mm->start_data = start_data;
        current->mm->end_data = end_data;
        current->mm->start_stack = bprm->p;
        ......
    }
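The result of load_elf_binary() can be seen in /proc/self/maps, where each line describes one vm_area_struct of the calling process. This sketch assumes Linux with procfs mounted. count_own_vmas() is a hypothetical helper that echoes the lines and counts them. A typical dynamically linked program should show:

- the program's own code and data mappings (from elf_map)
- a [heap] area once the heap has grown
- the dynamic linker and shared libraries such as libc in the memory-mapping area
- the [stack] area created by setup_arg_pages()

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Reads /proc/self/maps, optionally echoes each line to `out`, and returns
 * the number of VMAs, or -1 if the file cannot be opened. Each line is
 * "start-end perms offset dev inode path" for one vm_area_struct.
 * *saw_stack is set to 1 if the "[stack]" area is present. */
static int count_own_vmas(FILE *out, int *saw_stack)
{
    char line[4352];                  /* fixed fields plus a PATH_MAX path */
    int count = 0;

    assert(saw_stack != NULL);
    *saw_stack = 0;

    FILE *maps = fopen("/proc/self/maps", "r");
    if (maps == NULL)
        return -1;

    while (fgets(line, sizeof line, maps) != NULL) {
        if (out != NULL)
            fputs(line, out);
        if (strchr(line, '\n') != NULL)   /* count complete lines only */
            count++;
        if (strstr(line, "[stack]") != NULL)
            *saw_stack = 1;
    }
    fclose(maps);
    return count;
}
```

Calling count_own_vmas(stdout, &saw_stack) prints the process's VMA table. Passing NULL for out only counts the entries.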

3.2 Kernel space structure

The address spaces of 32-bit and 64-bit systems differ enormously in size, so their kernel layouts also differ. We discuss each in turn.

3.2.1 32-bit kernel structure

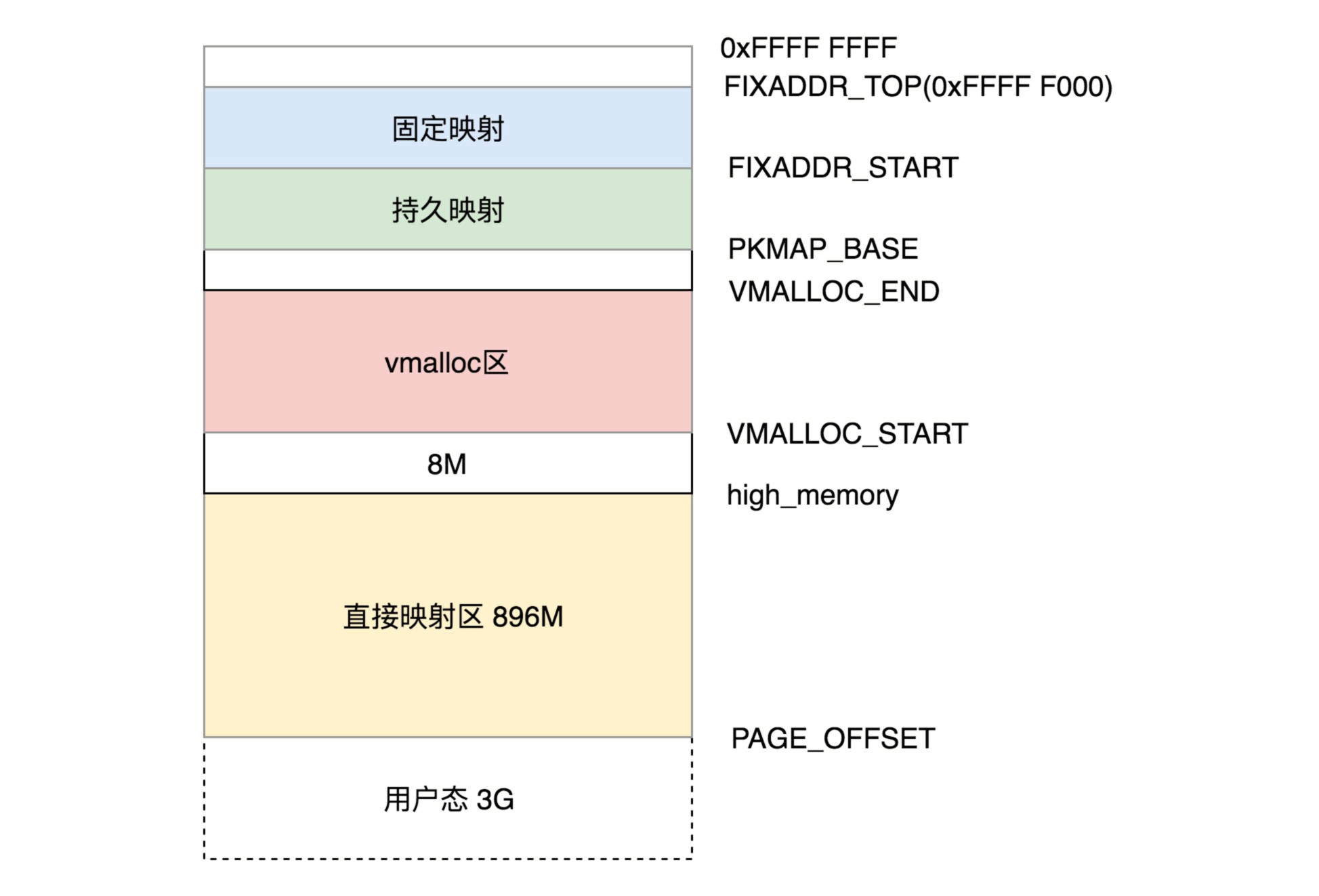

The kernel's virtual address space is independent of the process. Whichever process enters the kernel through a system call, it sees the same virtual address space. The figure above shows the layout of the 32-bit kernel virtual address space.

- Direct mapping area

The first 896 MB is the direct mapping area, which maps physical memory directly. Subtracting 3 GB (0xC0000000) from a virtual address in this area gives the corresponding physical address. The kernel provides two macros for this:

- __pa(vaddr) returns the physical address corresponding to the virtual address vaddr;

- __va(paddr) computes the virtual address corresponding to the physical address paddr.

In this way, the kernel's code segment, global variables and BSS are also mapped into the virtual address space above 3 GB. The actual physical memory layout can be viewed in /proc/iomem. It differs with each system and configuration.
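The __pa()/__va() arithmetic for the direct mapping area can be modelled in user space. This sketch assumes the default 3G/1G split (PAGE_OFFSET = 0xC0000000) and the 896 MB direct-mapped window described above. model_pa() and model_va() are hypothetical names. Unlike the kernel macros, they assert that the address is inside that window.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed: default 3G/1G split and an 896 MiB direct-mapped (lowmem) window. */
#define PAGE_OFFSET_32  UINT32_C(0xC0000000)
#define LOWMEM_SIZE     (UINT32_C(896) << 20)

/* Like __pa() for a direct-mapped kernel virtual address. */
static uint32_t model_pa(uint32_t vaddr)
{
    assert(vaddr >= PAGE_OFFSET_32 && vaddr - PAGE_OFFSET_32 < LOWMEM_SIZE);
    return vaddr - PAGE_OFFSET_32;
}

/* Like __va() for a physical address inside the direct-mapped window. */
static uint32_t model_va(uint32_t paddr)
{
    assert(paddr < LOWMEM_SIZE);
    return paddr + PAGE_OFFSET_32;
}
```

For example, kernel virtual address 0xC0100000 corresponds to physical address 0x00100000. The last direct-mapped physical byte, at 896 MB - 1, appears at 0xF7FFFFFF. Physical memory beyond that has no permanent kernel virtual address.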

- High memory (high_memory)

The term "high memory" comes from the x86 division of physical memory into three zones: ZONE_DMA, ZONE_NORMAL and ZONE_HIGHMEM. ZONE_HIGHMEM is high memory.

From the memory management module's point of view, high memory is the physical memory above what the 896 MB direct mapping area covers. **Apart from the memory management module, the rest of the kernel works only with virtual addresses.** The memory management module operates on physical addresses directly, allocating them and mapping them to virtual addresses.

High memory lets a 32-bit kernel, with its limited virtual address space, reach more physical memory than that space can cover. The kernel borrows part of its own address space and maps the physical page it wants there (by filling in the kernel page table). It uses the page for a while, then gives the address range back when done.
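The zone boundaries can be sketched as follows. The sketch assumes the classic 32-bit x86 defaults (ZONE_DMA below 16 MB, ZONE_NORMAL up to 896 MB, ZONE_HIGHMEM above). It also assumes RAM that starts at physical address 0 with no holes. The names are hypothetical. The point is how much of a machine's memory the kernel can map permanently.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed classic x86-32 defaults, 4 KiB pages. */
#define PAGE_SIZE_4K  UINT64_C(4096)
#define MiB           (UINT64_C(1) << 20)
#define DMA_LIMIT     (16 * MiB)
#define LOWMEM_LIMIT  (896 * MiB)

enum zone_model { MODEL_ZONE_DMA, MODEL_ZONE_NORMAL, MODEL_ZONE_HIGHMEM };

/* Which zone a physical address falls into. */
static enum zone_model classify_phys(uint64_t paddr)
{
    if (paddr < DMA_LIMIT)
        return MODEL_ZONE_DMA;
    if (paddr < LOWMEM_LIMIT)
        return MODEL_ZONE_NORMAL;
    return MODEL_ZONE_HIGHMEM;
}

/* Split RAM into pages that sit in the direct mapping area (lowmem) and
 * pages that need a temporary or persistent mapping first (highmem). */
static void split_ram(uint64_t total_bytes, uint64_t *lowmem_pages,
                      uint64_t *highmem_pages)
{
    assert(total_bytes % PAGE_SIZE_4K == 0);
    uint64_t low = total_bytes < LOWMEM_LIMIT ? total_bytes : LOWMEM_LIMIT;
    *lowmem_pages = low / PAGE_SIZE_4K;
    *highmem_pages = (total_bytes - low) / PAGE_SIZE_4K;
}
```

Consider a machine with 2 GB of RAM. This gives 229376 directly mapped pages (896 MB). The remaining 294912 pages (1152 MB) are high memory, which must be mapped before the kernel can touch them.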

- Kernel dynamic mapping space

The area between VMALLOC_START and VMALLOC_END is called the kernel dynamic mapping space. It plays the role in the kernel that malloc plays in a user process: kernel code requests memory from it with vmalloc. The kernel manages this area with its own page tables, separate from those of user space.

- Persistent kernel mapping

The space from PKMAP_BASE to FIXADDR_START is called the persistent kernel mapping area. Its address range is between 4G-8M and 4G-4M. When alloc_pages() returns a struct page for a page in high memory, kmap() can be called to map that page into this area. Only a limited number of persistent mappings is available. The mapping should therefore be removed with kunmap() once the high-memory page is no longer needed.
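The slot arithmetic behind persistent mappings is simple. This sketch assumes PKMAP_BASE = 4G - 8M (0xFF800000), as the range above suggests. It also assumes 1024 slots of 4 KB, the non-PAE value of LAST_PKMAP. It reproduces the kernel's PKMAP_ADDR()/PKMAP_NR() conversions and adds a toy slot allocator. toy_kmap() and toy_kunmap() are hypothetical and only model slot bookkeeping. The real kmap() also records which page is in each slot, keeps reference counts, and sleeps when all slots are busy.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT  12
#define PKMAP_BASE  UINT32_C(0xFF800000)   /* assumed: 4G - 8M, per the text */
#define LAST_PKMAP  1024                   /* 4 MiB window / 4 KiB pages (non-PAE) */

/* Slot number <-> virtual address, in the same form as the kernel macros. */
#define PKMAP_ADDR(nr)  (PKMAP_BASE + ((uint32_t)(nr) << PAGE_SHIFT))
#define PKMAP_NR(virt)  (((uint32_t)(virt) - PKMAP_BASE) >> PAGE_SHIFT)

/* Toy slot table: nonzero means the slot currently holds a mapping. */
static unsigned char slot_used[LAST_PKMAP];

/* Take the first free slot; returns its virtual address, or 0 if all busy. */
static uint32_t toy_kmap(void)
{
    for (int nr = 0; nr < LAST_PKMAP; nr++) {
        if (!slot_used[nr]) {
            slot_used[nr] = 1;
            return PKMAP_ADDR(nr);
        }
    }
    return 0;
}

/* Release the slot that backs vaddr. */
static void toy_kunmap(uint32_t vaddr)
{
    uint32_t nr = PKMAP_NR(vaddr);
    assert(nr < LAST_PKMAP && slot_used[nr]);
    slot_used[nr] = 0;
}
```

The window ends at PKMAP_ADDR(LAST_PKMAP) = 0xFFC00000, i.e. 4G - 4M. At most 1024 pages can be mapped this way at once, which is why releasing mappings with kunmap() matters.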

- Fixed mapping area

The space from FIXADDR_START to FIXADDR_TOP (0xFFFFF000) is called the fixed mapping area. It is mainly used for special purposes.

- Temporary kernel mapping

Temporary kernel mappings are created with kmap_atomic() and removed with kunmap_atomic(). They are mainly used for short operations on a physical page, for example when writing files.

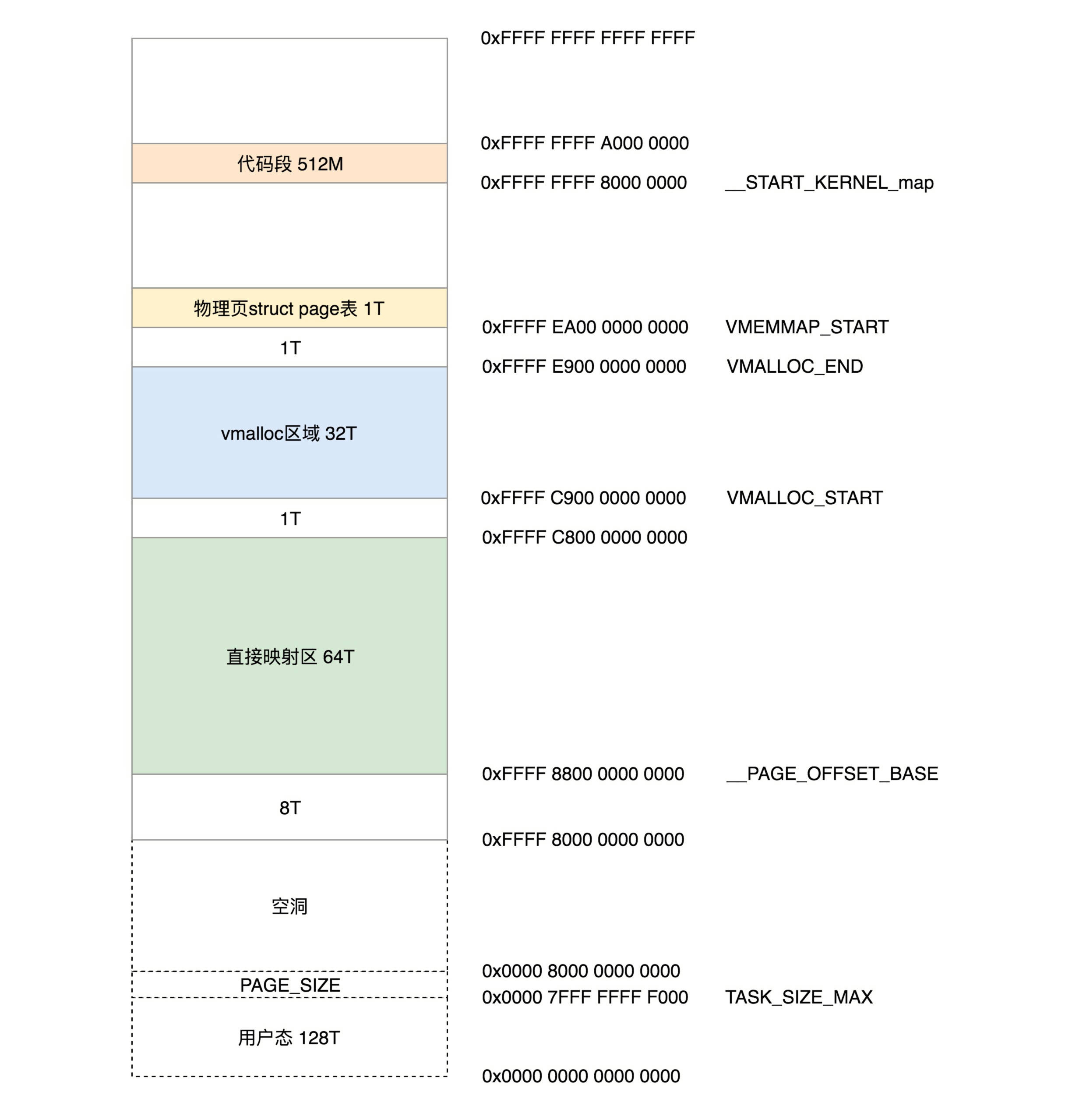

3.2.2 64-bit kernel structure

The 64-bit kernel address space is huge, so it does not need to be laid out as tightly as the 32-bit one. It simply leaves large unused holes between regions as guards. The layout is as follows:

- Kernel space starts at 0xffff800000000000, but its first 8 TB is an unused hole.

- The 64 TB of virtual address space starting at __PAGE_OFFSET_BASE (0xffff880000000000) is the direct mapping area: subtracting PAGE_OFFSET from a virtual address gives the physical address. Even so, in most cases the CPU still performs the translation through page tables set up for this area.

- The 32 TB from VMALLOC_START (0xffffc90000000000) up to VMALLOC_END (0xffffe8ffffffffff) is used by vmalloc.

- The 1 TB starting at VMEMMAP_START (0xffffea0000000000) stores the struct page structures that describe physical pages.

- The 512 MB starting at __START_KERNEL_map (0xffffffff80000000) holds the kernel code segment, global variables, BSS, etc. It maps the beginning of physical memory, so subtracting __START_KERNEL_map gives the physical address. This looks similar to the direct mapping area, but there is no conflict. The physical pages that hold the kernel image are simply reachable through both virtual ranges, which lie far apart in the address space.
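The two 64-bit translations can be sketched as below. The sketch is a simplified model of what __pa() does on x86-64 (the kernel's version is __phys_addr_nodebug()). It assumes 4-level paging without KASLR and the addresses listed above. phys_base is the offset by which the kernel image was relocated in physical memory, 0 if it was not. The 64-byte struct page used for the vmemmap calculation is also an assumption. All MODEL_* names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed: 4-level paging, no KASLR, the layout listed above. */
#define MODEL_PAGE_OFFSET       UINT64_C(0xffff880000000000) /* __PAGE_OFFSET_BASE */
#define MODEL_VMALLOC_START     UINT64_C(0xffffc90000000000)
#define MODEL_VMEMMAP_START     UINT64_C(0xffffea0000000000)
#define MODEL_START_KERNEL_MAP  UINT64_C(0xffffffff80000000) /* __START_KERNEL_map */
#define MODEL_STRUCT_PAGE_SIZE  UINT64_C(64)  /* assumed sizeof(struct page) */

/* Simplified __pa(): addresses in the kernel-image mapping are translated
 * relative to __START_KERNEL_map plus phys_base; anything else must be in
 * the direct mapping area (the hole before VMALLOC_START is not modelled). */
static uint64_t model_phys_addr(uint64_t vaddr, uint64_t phys_base)
{
    if (vaddr >= MODEL_START_KERNEL_MAP)
        return vaddr - MODEL_START_KERNEL_MAP + phys_base;
    assert(vaddr >= MODEL_PAGE_OFFSET && vaddr < MODEL_VMALLOC_START);
    return vaddr - MODEL_PAGE_OFFSET;
}

/* vmemmap is an array of struct page indexed by page frame number. */
static uint64_t model_pfn_to_page(uint64_t pfn)
{
    return MODEL_VMEMMAP_START + pfn * MODEL_STRUCT_PAGE_SIZE;
}
```

With phys_base = 0, the kernel-image address 0xffffffff81000000 translates to physical 0x1000000. So does the direct-map address 0xffff880001000000. This is the same physical page seen through two virtual ranges.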


Code information

[1] linux/include/linux/mm_types.h
