1, Use of Consumer

The source code analysis of Consumer focuses on KafkaConsumer, the implementation class of the Consumer interface. KafkaConsumer provides a well-encapsulated API that lets developers easily pull messages from the Kafka server. Developers do not have to handle the underlying operations with the Kafka server, such as network connection management, heartbeat detection, and request timeouts and retries. They also do not need to know Kafka-specific details, such as the partitions of the subscribed Topic, the network topology of the partition replicas, or the Consumer Group's Rebalance. KafkaConsumer can also commit offsets automatically, so developers can concentrate on business logic and work more efficiently.

Let's take a look at an example program of KafkaConsumer:

```java
import java.time.Duration;
import java.util.Arrays;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

/**
 * @author: WeChat official account
 */
public class KafkaConsumerTest {

    public static void main(String[] args) {
        Properties props = new Properties();
        // Kafka addresses, in the form host1:port1,host2:port2,...
        // You do not need to list the whole cluster: the client discovers the other brokers from the ones given
        // (listing several is still recommended in case one of them is down)
        props.put("bootstrap.servers", "localhost:9092");
        // Key deserializer, must be set
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        // Value deserializer, must be set
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("group.id", "consumer_riemann_test");

        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
        // Several topics can be consumed by passing them as a list
        String topic = "riemann_kafka_test";
        consumer.subscribe(Arrays.asList(topic));

        while (true) {
            ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
            for (ConsumerRecord<String, String> record : records) {
                System.out.printf("offset = %d, key = %s, value = %s%n", record.offset(), record.key(), record.value());
                try {
                    Thread.sleep(100);
                } catch (InterruptedException e) {
                    // Restore the interrupt flag, release the consumer's resources and stop consuming
                    Thread.currentThread().interrupt();
                    consumer.close();
                    return;
                }
            }
        }
    }
}
```

As the example shows, the core method of KafkaConsumer is poll(), which pulls messages from the Kafka server. I will cover the details of this method, namely the communication model between the Consumer-side client and the Kafka server, in the next article. This article analyzes the Consumer source code from a macro perspective.

2, KafkaConsumer analysis

Let's first look at the Consumer interface, which defines the external API of KafkaConsumer. Its core methods can be divided into the following six categories:

- subscribe() method: subscribe to the specified Topic and automatically allocate partitions for consumers.

- assign() method: the user manually assigns specific partitions to the consumer. This method is mutually exclusive with the subscribe() method.

- poll() method: responsible for obtaining messages from the server.

- commit*() methods: commit the offsets the consumer has consumed.

- seek*() methods: specify the position from which the consumer starts consuming.

- pause() and resume() methods: pause and resume consumption of the given partitions. While a partition is paused, poll() returns no records from it (an empty result, not null) until it is resumed.
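The exclusivity of subscribe() and assign(), and the behavior of pause(), can be illustrated with a small in-memory model. It does not use the Kafka client library. The class ToyConsumer, its append() helper and the "topic-partition" strings are hypothetical. The model only demonstrates two rules. First, mixing subscribe() and assign() fails with an IllegalStateException, which is what the real KafkaConsumer does. Second, poll() skips paused partitions and returns an empty result rather than null.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical in-memory model of two Consumer API rules (not Kafka's code):
 * subscribe() and assign() are mutually exclusive, and poll() skips paused partitions.
 */
class ToyConsumer {
    private enum Mode { NONE, SUBSCRIBED, ASSIGNED }

    private Mode mode = Mode.NONE;
    // partition name -> records waiting in that partition
    private final Map<String, List<String>> partitions = new LinkedHashMap<>();
    private final Set<String> paused = new HashSet<>();

    void subscribe(List<String> topics) {
        setMode(Mode.SUBSCRIBED);
        // A real consumer gets its partitions from the group coordinator; here each topic gets one partition
        for (String topic : topics) partitions.putIfAbsent(topic + "-0", new ArrayList<>());
    }

    void assign(List<String> topicPartitions) {
        setMode(Mode.ASSIGNED);
        for (String tp : topicPartitions) partitions.putIfAbsent(tp, new ArrayList<>());
    }

    private void setMode(Mode requested) {
        if (mode != Mode.NONE && mode != requested)
            throw new IllegalStateException("subscribe() and assign() are mutually exclusive");
        mode = requested;
    }

    // Hypothetical helper: simulate a record arriving in a partition
    void append(String topicPartition, String record) {
        partitions.get(topicPartition).add(record);
    }

    void pause(String topicPartition) { paused.add(topicPartition); }

    void resume(String topicPartition) { paused.remove(topicPartition); }

    /** Drains and returns records from non-paused partitions; never returns null. */
    List<String> poll() {
        List<String> result = new ArrayList<>();
        for (Map.Entry<String, List<String>> e : partitions.entrySet()) {
            if (paused.contains(e.getKey())) continue; // paused partitions contribute nothing
            result.addAll(e.getValue());
            e.getValue().clear();
        }
        return result;
    }

    public static void main(String[] args) {
        ToyConsumer consumer = new ToyConsumer();
        consumer.assign(List.of("orders-0", "orders-1"));
        consumer.append("orders-0", "a");
        consumer.append("orders-1", "b");
        consumer.pause("orders-1");
        System.out.println(consumer.poll()); // [a]
        consumer.resume("orders-1");
        System.out.println(consumer.poll()); // [b]
    }
}
```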

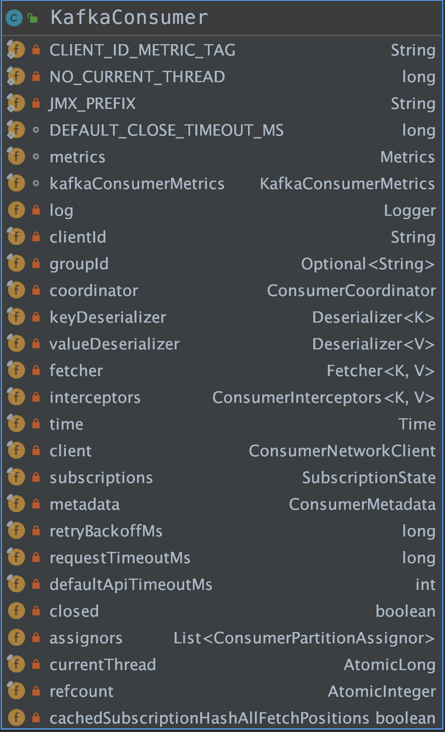

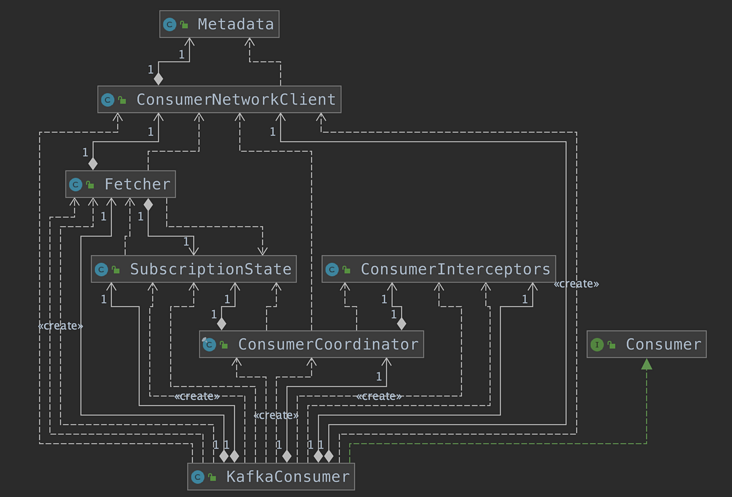

Next, let's look at the important attributes of KafkaConsumer and its UML structure diagram.

- clientId: unique identifier of the Consumer.

- groupId: unique identifier of the consumer group.

- coordinator: a ConsumerCoordinator that controls the communication logic between the Consumer and the server-side GroupCoordinator. You can think of it as the facade for that communication.

- keyDeserializer, valueDeserializer: deserializer for key and value.

- fetcher: responsible for getting messages from the server.

- interceptors: a ConsumerInterceptors collection. ConsumerInterceptors.onConsume() can intercept or modify records before poll() returns them to the user. ConsumerInterceptors.onCommit() is invoked after offsets have been committed successfully, so it can observe the committed offsets.

- client: ConsumerNetworkClient is responsible for the network communication between consumers and Kafka server.

- subscriptions: SubscriptionState maintains the consumption status of consumers.

- metadata: ConsumerMetadata records the meta information of the entire Kafka cluster.

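- currentThread and refcount: respectively, the ID of the thread currently using the KafkaConsumer and its reentrancy count. KafkaConsumer is not thread-safe, and these two fields are used to detect access from multiple threads.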
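KafkaConsumer's acquire()/release() pair uses these two fields as a cheap guard against multi-threaded use. The sketch below reconstructs that idea as a standalone class. The class name SingleThreadGuard and the depth() accessor are hypothetical. The owning thread may re-enter, with refcount tracking the nesting depth. Any other thread gets a ConcurrentModificationException instead of blocking.

```java
import java.util.ConcurrentModificationException;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

/**
 * Sketch of a single-thread access guard built from currentThread and refcount.
 * Modeled on the idea described above; the class name is hypothetical.
 */
class SingleThreadGuard {
    private static final long NO_CURRENT_THREAD = -1L;
    // ID of the thread that currently owns the guarded object, or NO_CURRENT_THREAD
    private final AtomicLong currentThread = new AtomicLong(NO_CURRENT_THREAD);
    // Number of nested acquire() calls made by the owning thread
    private final AtomicInteger refcount = new AtomicInteger(0);

    void acquire() {
        long threadId = Thread.currentThread().getId();
        // The owner may re-enter; any other thread must win the CAS from "no owner"
        if (threadId != currentThread.get() && !currentThread.compareAndSet(NO_CURRENT_THREAD, threadId))
            throw new ConcurrentModificationException("not safe for multi-threaded access");
        refcount.incrementAndGet();
    }

    void release() {
        // The last release() gives up ownership
        if (refcount.decrementAndGet() == 0)
            currentThread.set(NO_CURRENT_THREAD);
    }

    int depth() { return refcount.get(); }

    public static void main(String[] args) throws InterruptedException {
        SingleThreadGuard guard = new SingleThreadGuard();
        guard.acquire();
        guard.acquire(); // reentrant call from the same thread
        Thread other = new Thread(() -> {
            try {
                guard.acquire();
                System.out.println("unexpected: second thread acquired");
            } catch (ConcurrentModificationException e) {
                System.out.println("second thread rejected");
            }
        });
        other.start();
        other.join();
        guard.release();
        guard.release();
        System.out.println("depth after release: " + guard.depth());
    }
}
```

Unlike a lock, the guard never makes a second thread wait. It fails fast, so misuse shows up immediately instead of being hidden as contention.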

3, ConsumerNetworkClient

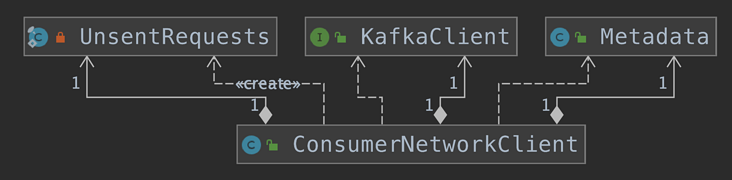

ConsumerNetworkClient wraps NetworkClient, providing higher-level functionality and a simpler API.

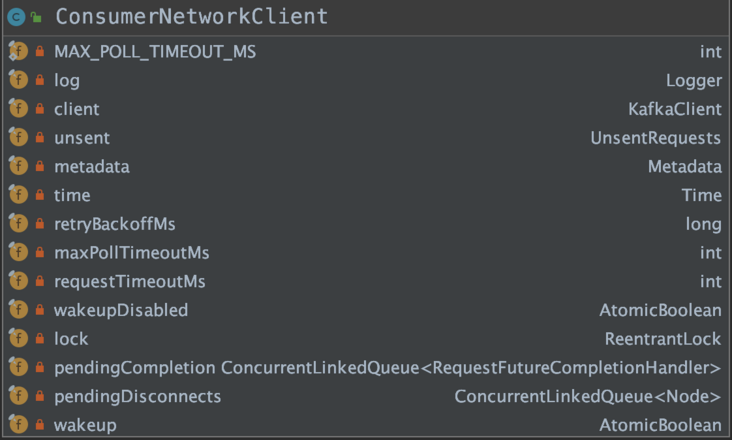

Let's take a look at the important properties of ConsumerNetworkClient and the UML structure diagram.

- client: NetworkClient object.

- unsent: buffer of requests waiting to be sent, implemented as an UnsentRequests object. Internally it maintains an unsent field of type ConcurrentMap<Node, ConcurrentLinkedQueue<ClientRequest>>, whose key is the target Node and whose value is the queue of ClientRequests for that Node.

- metadata: used to manage Kafka cluster metadata.

- retryBackoffMs: the time to wait before retrying a failed request to a given topic partition. This avoids repeatedly sending requests in a tight loop in some failure scenarios. Corresponds to the retry.backoff.ms configuration, 100 ms by default.

- maxPollTimeoutMs: the upper bound on how long a single poll may block. KafkaConsumer passes in the heartbeat.interval.ms configuration: the expected time between heartbeats to the consumer coordinator when using Kafka's group management facilities. Heartbeats keep the consumer's session alive and facilitate rebalancing when consumers join or leave the group. heartbeat.interval.ms must be lower than session.timeout.ms and typically should be no higher than 1/3 of that value. It can be lowered to control the expected time for normal rebalances, and it defaults to 3000 ms. In the constructor, maxPollTimeoutMs is set to the minimum of the passed-in value and MAX_POLL_TIMEOUT_MS, which is 5000 ms.

- requestTimeoutMs: the maximum time the client waits for the response to a request. If no response arrives before the timeout, the client resends the request if necessary, or fails the request once retries are exhausted. Corresponds to the request.timeout.ms configuration. The default is 30000 ms in recent versions (305000 ms before Kafka 2.0).

- wakeupDisabled: when true, pending wakeup requests are not acted on. It marks that an operation that must not be interrupted is in progress.

- lock: high throughput is not needed here, so a fair lock is used to avoid thread starvation as much as possible.

- pendingCompletion: completed requests are moved to this queue before their callbacks are invoked. This prevents callbacks from running while this object's monitor is held, which could open the door to deadlocks.

- pendingDisconnects: a queue of nodes (such as the coordinator) whose connections should be closed; they are disconnected during the next poll().

- wakeup: a flag set when another thread asks the consumer to wake up, for example through KafkaConsumer.wakeup(). It lets the client be woken up safely without waiting for the lock above. It is atomic, so it can be set concurrently without acquiring that lock.
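The unsent buffer described above can be sketched with standard Java concurrent collections. This is a simplified stand-in, not Kafka's UnsentRequests class. Nodes are plain String ids, and PendingRequest is a hypothetical record holding only a name, a creation time and a timeout. removeExpiredRequests() follows the logic of the source excerpt in 3.7, including the early break. That break relies on each per-node queue being in creation order.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ConcurrentMap;

/**
 * Simplified stand-in for ConsumerNetworkClient's UnsentRequests buffer.
 * Nodes are String ids and PendingRequest is a hypothetical request type.
 */
class UnsentBuffer {
    record PendingRequest(String name, long createdTimeMs, long requestTimeoutMs) {}

    // key: destination node, value: FIFO queue of requests for that node
    private final ConcurrentMap<String, ConcurrentLinkedQueue<PendingRequest>> unsent = new ConcurrentHashMap<>();

    void put(String node, PendingRequest request) {
        unsent.computeIfAbsent(node, n -> new ConcurrentLinkedQueue<>()).add(request);
    }

    Collection<String> nodes() {
        return unsent.keySet();
    }

    /** Removes and returns every request queued for a node, e.g. after its connection failed. */
    Collection<PendingRequest> remove(String node) {
        ConcurrentLinkedQueue<PendingRequest> requests = unsent.remove(node);
        return requests == null ? Collections.<PendingRequest>emptyList() : requests;
    }

    /**
     * Removes requests that have waited longer than their timeout. Each queue is in creation
     * order, so scanning stops at the first request that has not expired
     * (this assumes the requests in one queue share the same timeout).
     */
    List<PendingRequest> removeExpiredRequests(long nowMs) {
        List<PendingRequest> expired = new ArrayList<>();
        for (ConcurrentLinkedQueue<PendingRequest> requests : unsent.values()) {
            Iterator<PendingRequest> it = requests.iterator();
            while (it.hasNext()) {
                PendingRequest request = it.next();
                long elapsedMs = Math.max(0, nowMs - request.createdTimeMs());
                if (elapsedMs > request.requestTimeoutMs()) {
                    expired.add(request);
                    it.remove();
                } else {
                    break;
                }
            }
        }
        return expired;
    }

    public static void main(String[] args) {
        UnsentBuffer buffer = new UnsentBuffer();
        buffer.put("node-1", new PendingRequest("fetch-A", 0, 100));
        buffer.put("node-1", new PendingRequest("fetch-B", 50, 100));
        buffer.put("node-2", new PendingRequest("commit-C", 10, 100));
        // At t=120, fetch-A (age 120) and commit-C (age 110) exceed 100 ms; fetch-B (age 70) does not
        System.out.println(buffer.removeExpiredRequests(120));
    }
}
```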

The core method of ConsumerNetworkClient is poll(). It has many overloads, and all of them eventually call poll(Timer timer, PollCondition pollCondition, boolean disableWakeup). The three parameters mean the following. timer limits how long the method may block. pollCondition is an optional (nullable) blocking condition. disableWakeup, if true, prevents a wakeup from being triggered during this call.
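The overall order of the steps described in 3.1 to 3.7 can be summarized in a skeleton. Everything here is hypothetical. PollFlowSketch only records step names, so its output shows the sequence, not real network activity. The real method also handles pendingDisconnects and fires the pendingCompletion callbacks; both are omitted here. The wakeup handling mirrors the maybeTriggerWakeup() source shown in 3.5, including how disableWakeup skips it.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicBoolean;

/**
 * Hypothetical skeleton of the step order inside poll(timer, pollCondition, disableWakeup).
 * Each step is a stub that only records its name; wakeup handling mirrors maybeTriggerWakeup().
 */
class PollFlowSketch {
    static class WakeupException extends RuntimeException {}

    final List<String> steps = new ArrayList<>();
    final AtomicBoolean wakeup = new AtomicBoolean(false);
    final AtomicBoolean wakeupDisabled = new AtomicBoolean(false);

    void poll(boolean disableWakeup) {
        steps.clear();
        steps.add("trySend");             // 3.1 send whatever can be sent now
        steps.add("computeTimeout");      // 3.2 bound how long the network poll may block
        steps.add("networkClientPoll");   // 3.3 socket I/O and response handling
        steps.add("checkDisconnects");    // 3.4 fail unsent requests to disconnected nodes
        if (!disableWakeup)
            maybeTriggerWakeup();         // 3.5 may abort the call with WakeupException
        steps.add("trySend");             // 3.6 retry sends after the I/O step
        steps.add("failExpiredRequests"); // 3.7 time out requests that could not be sent
    }

    /** Called from another thread to request a wakeup. */
    void wakeup() {
        wakeup.set(true);
    }

    void maybeTriggerWakeup() {
        steps.add("maybeTriggerWakeup");
        if (!wakeupDisabled.get() && wakeup.get()) {
            wakeup.set(false); // reset so the next poll() is not interrupted again
            throw new WakeupException();
        }
    }

    public static void main(String[] args) {
        PollFlowSketch client = new PollFlowSketch();
        client.poll(false);
        System.out.println(client.steps);
        client.wakeup();
        try {
            client.poll(false);
        } catch (WakeupException e) {
            System.out.println("woken up after " + client.steps);
        }
    }
}
```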

Let's briefly walk through what this poll() method does, step by step:

3.1 org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient#trySend

Iterate over the requests cached in unsent. For each Node, iterate over its queue of ClientRequests and call NetworkClient.ready() for each request. This checks whether the connection between the consumer and this Node is ready for sending. If it is, call NetworkClient.send(). This places the request in InFlightRequests to await the response and in the send field of the KafkaChannel to await transmission; the request is then removed from the queue. If the Node is not ready, move on to the next Node. The code is as follows:

```java
long trySend(long now) {
    long pollDelayMs = maxPollTimeoutMs;

    // send any requests that can be sent now
    // Traverse the unsent collection
    for (Node node : unsent.nodes()) {
        Iterator<ClientRequest> iterator = unsent.requestIterator(node);
        if (iterator.hasNext())
            pollDelayMs = Math.min(pollDelayMs, client.pollDelayMs(node, now));

        while (iterator.hasNext()) {
            ClientRequest request = iterator.next();
            // Call NetworkClient.ready() to check whether the request can be sent
            if (client.ready(node, now)) {
                // Call NetworkClient.send() so the request waits to be sent
                client.send(request, now);
                // Remove this request from the unsent collection
                iterator.remove();
            } else {
                // try next node when current node is not ready
                break;
            }
        }
    }
    return pollDelayMs;
}
```

3.2 calculate timeout

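The blocking time is bounded by the time remaining on the timer and by the delay returned by trySend(). In addition, if no request is in flight, the blocking time must not exceed the retry backoff time (retryBackoffMs).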
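The calculation can be sketched as two small functions. The names are hypothetical. The arithmetic follows the description above and the maxPollTimeoutMs cap described in the attribute list. In the source, this bound applies only when the caller actually wants to block: there are no pending completions, and pollCondition, if present, says to block. Otherwise NetworkClient.poll() is called with a timeout of 0, a case this sketch leaves out.

```java
/**
 * Sketch of how the blocking time for the network poll is bounded (step 3.2),
 * plus the maxPollTimeoutMs cap applied in the constructor. Method names are hypothetical.
 */
class PollTimeoutSketch {
    static final int MAX_POLL_TIMEOUT_MS = 5000;

    /** Constructor-time cap on the value passed in (heartbeat.interval.ms). */
    static int effectiveMaxPollTimeoutMs(int configuredMs) {
        return Math.min(configuredMs, MAX_POLL_TIMEOUT_MS);
    }

    /**
     * @param timerRemainingMs time left on the caller's timer
     * @param pollDelayMs      delay returned by trySend() (at most maxPollTimeoutMs)
     * @param inFlightRequests number of requests sent but not yet answered
     * @param retryBackoffMs   retry.backoff.ms
     */
    static long pollTimeoutMs(long timerRemainingMs, long pollDelayMs, int inFlightRequests, long retryBackoffMs) {
        long timeout = Math.min(timerRemainingMs, pollDelayMs);
        // If there are no requests in flight, do not block longer than the retry backoff
        if (inFlightRequests == 0)
            timeout = Math.min(timeout, retryBackoffMs);
        return timeout;
    }

    public static void main(String[] args) {
        System.out.println(effectiveMaxPollTimeoutMs(3000));     // 3000 (default heartbeat.interval.ms)
        System.out.println(pollTimeoutMs(10_000, 3000, 2, 100)); // 3000: requests in flight
        System.out.println(pollTimeoutMs(10_000, 3000, 0, 100)); // 100: nothing in flight
        System.out.println(pollTimeoutMs(50, 3000, 0, 100));     // 50: timer almost expired
    }
}
```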

3.3 org.apache.kafka.clients.NetworkClient#poll

- Decide whether the metadata needs to be updated

- Call Selector.poll() to perform socket-related I/O

- Post-process the results: a series of handle*() methods deal with request responses, disconnections, timeouts and so on, and then the callback of each completed request is invoked

3.4 call the checkDisconnects() method to check the connection status

The checkDisconnects() method checks the connection state between the consumer and each Node that still has unsent requests. When it detects a failed connection to a Node, it removes all ClientRequest objects for that Node from unsent. It then invokes their callbacks with a response marked as disconnected.

```java
private void checkDisconnects(long now) {
    // any disconnects affecting requests that have already been transmitted will be handled
    // by NetworkClient, so we just need to check whether connections for any of the unsent
    // requests have been disconnected; if they have, then we complete the corresponding future
    // and set the disconnect flag in the ClientResponse
    for (Node node : unsent.nodes()) {
        // Detect the connection status between the consumer and each Node
        if (client.connectionFailed(node)) {
            // Remove entry before invoking request callback to avoid callbacks handling
            // coordinator failures traversing the unsent list again.
            Collection<ClientRequest> requests = unsent.remove(node);
            for (ClientRequest request : requests) {
                RequestFutureCompletionHandler handler = (RequestFutureCompletionHandler) request.callback();
                AuthenticationException authenticationException = client.authenticationException(node);
                // Call the callback function of ClientRequest
                handler.onComplete(new ClientResponse(request.makeHeader(request.requestBuilder().latestAllowedVersion()),
                        request.callback(), request.destination(), request.createdTimeMs(), now, true,
                        null, authenticationException, null));
            }
        }
    }
}
```

3.5 org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient#maybeTriggerWakeup

Check wakeupDisabled and wakeup to see whether another thread has requested a wakeup. If a wakeup was requested and wakeups are not disabled, reset the flag and throw a WakeupException, which interrupts the current ConsumerNetworkClient.poll() call.

```java
public void maybeTriggerWakeup() {
    // wakeupDisabled tells whether a non-interruptible operation is in progress;
    // wakeup tells whether a wakeup has been requested
    if (!wakeupDisabled.get() && wakeup.get()) {
        log.debug("Raising WakeupException in response to user wakeup");
        // Reset the wakeup flag
        wakeup.set(false);
        throw new WakeupException();
    }
}
```

3.6 call the trySend() method again

trySend() is called again. During the NetworkClient.poll() call in step 3.3, the requests in the KafkaChannel.send field may have been sent, or connections to some Nodes may have been established. trySend() is therefore tried once more.

3.7 org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient#failExpiredRequests

Handle the expired requests in unsent. The method iterates over the entire unsent collection and checks whether each ClientRequest has timed out. Expired requests are moved into the expiredRequests collection and removed from unsent, and then the onFailure() callback of each expired ClientRequest is invoked.

```java
private void failExpiredRequests(long now) {
    // clear all expired unsent requests and fail their corresponding futures
    Collection<ClientRequest> expiredRequests = unsent.removeExpiredRequests(now);
    for (ClientRequest request : expiredRequests) {
        RequestFutureCompletionHandler handler = (RequestFutureCompletionHandler) request.callback();
        // Call the callback function
        handler.onFailure(new TimeoutException("Failed to send request after " + request.requestTimeoutMs() + " ms."));
    }
}

// In UnsentRequests:
private Collection<ClientRequest> removeExpiredRequests(long now) {
    List<ClientRequest> expiredRequests = new ArrayList<>();
    for (ConcurrentLinkedQueue<ClientRequest> requests : unsent.values()) {
        Iterator<ClientRequest> requestIterator = requests.iterator();
        while (requestIterator.hasNext()) {
            ClientRequest request = requestIterator.next();
            // Check for timeout
            long elapsedMs = Math.max(0, now - request.createdTimeMs());
            if (elapsedMs > request.requestTimeoutMs()) {
                // Add expired requests to the expiredRequests collection
                expiredRequests.add(request);
                requestIterator.remove();
            } else
                break;
        }
    }
    return expiredRequests;
}
```

4, RequestFutureCompletionHandler

Before discussing RequestFutureCompletionHandler, let's look at ConsumerNetworkClient.send(). It wraps the request to be sent into a ClientRequest and stores it in the unsent collection until it can be sent. The code is as follows:

public RequestFuture<ClientResponse> send(Node node,

AbstractRequest.Builder<?> requestBuilder,

int requestTimeoutMs) {

long now = time.milliseconds();

RequestFutureCompletionHandler completionHandler = new RequestFutureCompletionHandler();

ClientRequest clientRequest = client.newClientRequest(node.idString(), requestBuilder, now, true,

requestTimeoutMs, completionHandler);

// Create a clientRequest object and save it to the unsent collection.

unsent.put(node, clientRequest);

// wakeup the client in case it is blocking in poll so that we can send the queued request

// Wake up the client in case it blocks in polling so that we can send queued requests.

client.wakeup();

return completionHandler.future;

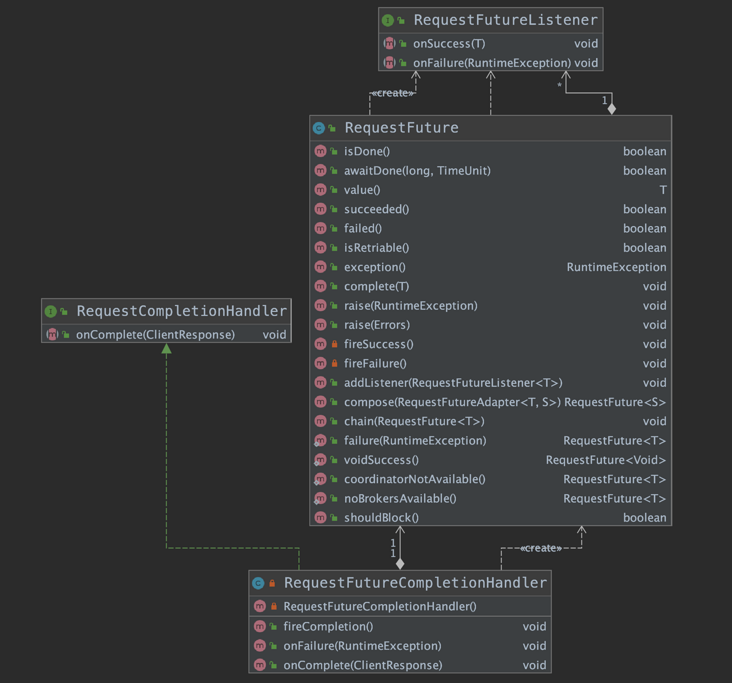

}Let's focus on the callback object used in ConsumerNetworkClient - RequestFutureCompletionHandler. Its inheritance relationship is as follows:

The inheritance diagram shows that RequestFutureCompletionHandler implements the RequestCompletionHandler interface and also holds a RequestFuture through composition. RequestFuture is a generic class, and its core fields and methods are as follows:

- listeners: the RequestFutureListener queue, which is used to listen for the completion of requests. The RequestFutureListener interface has two methods, onSuccess() and onFailure(), corresponding to the normal completion of requests and exceptions.

- isDone(): whether the request has completed. It returns true whether the request completed normally or with an exception.

- value(): the response received when the request completes normally. It is mutually exclusive with exception(): if a value is present, the request completed normally; otherwise it failed.

- exception(): the exception that caused the request to fail. It is mutually exclusive with value(): if an exception is present, the request failed; otherwise it completed normally.
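The mutually exclusive value/exception pair and the listener queue can be illustrated with a minimal single-threaded future. MiniRequestFuture and MiniFutureListener are hypothetical names, not Kafka's classes. Like RequestFuture, the sketch stores either a value or an exception in a single result slot, so both can never be set. It notifies listeners on completion and fires listeners added after completion immediately.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicReference;

/**
 * Minimal, single-threaded sketch of the RequestFuture idea (not Kafka's class):
 * one result slot holding either a value or an exception, plus a listener queue.
 */
class MiniRequestFuture<T> {
    private static final Object INCOMPLETE = new Object();
    private final AtomicReference<Object> result = new AtomicReference<>(INCOMPLETE);
    private final List<MiniFutureListener<T>> listeners = new ArrayList<>();

    boolean isDone() { return result.get() != INCOMPLETE; }

    boolean failed() { return result.get() instanceof RuntimeException; }

    @SuppressWarnings("unchecked")
    T value() {
        if (!isDone() || failed()) throw new IllegalStateException("no value available");
        return (T) result.get();
    }

    RuntimeException exception() {
        if (!failed()) throw new IllegalStateException("no exception available");
        return (RuntimeException) result.get();
    }

    void complete(T value) {
        if (value instanceof RuntimeException) throw new IllegalArgumentException("use raise() for exceptions");
        // The CAS guarantees the result slot is written at most once
        if (!result.compareAndSet(INCOMPLETE, value)) throw new IllegalStateException("already completed");
        for (MiniFutureListener<T> l : listeners) l.onSuccess(value);
    }

    void raise(RuntimeException e) {
        if (!result.compareAndSet(INCOMPLETE, e)) throw new IllegalStateException("already completed");
        for (MiniFutureListener<T> l : listeners) l.onFailure(e);
    }

    /** Listeners added after completion are fired immediately. */
    void addListener(MiniFutureListener<T> listener) {
        listeners.add(listener);
        if (isDone()) {
            if (failed()) listener.onFailure(exception());
            else listener.onSuccess(value());
        }
    }

    public static void main(String[] args) {
        MiniRequestFuture<String> future = new MiniRequestFuture<>();
        future.addListener(new MiniFutureListener<String>() {
            @Override public void onSuccess(String value) { System.out.println("success: " + value); }
            @Override public void onFailure(RuntimeException e) { System.out.println("failure: " + e.getMessage()); }
        });
        future.complete("offset committed");
        System.out.println(future.isDone() + " " + future.failed());
    }
}

/** Listener with the two callbacks described above. */
interface MiniFutureListener<T> {
    void onSuccess(T value);
    void onFailure(RuntimeException e);
}
```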

One reason to analyze source code is that it contains many design patterns you can borrow and apply in your own work. RequestFuture contains two typical ones:

- compose() method: uses the Adapter pattern.

- chain() method: uses the Chain of Responsibility pattern.

4.1 RequestFuture.compose()

/**

* Adapter

* Adapt from a request future of one type to another.

*

* @param <F> Type to adapt from

* @param <T> Type to adapt to

*/

public abstract class RequestFutureAdapter<F, T> {

public abstract void onSuccess(F value, RequestFuture<T> future);

public void onFailure(RuntimeException e, RequestFuture<T> future) {

future.raise(e);

}

}

/**

* RequestFuture<T> Adapt to requestfuture < s >

* Convert from a request future of one type to another type

* @param adapter The adapter which does the conversion

* @param <S> The type of the future adapted to

* @return The new future

*/

public <S> RequestFuture<S> compose(final RequestFutureAdapter<T, S> adapter) {

// Results after adaptation

final RequestFuture<S> adapted = new RequestFuture<>();

// Add listener on current RequestFuture

addListener(new RequestFutureListener<T>() {

@Override

public void onSuccess(T value) {

adapter.onSuccess(value, adapted);

}

@Override

public void onFailure(RuntimeException e) {

adapter.onFailure(e, adapted);

}

});

return adapted;

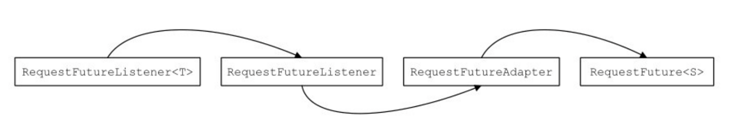

}After adaptation using the compose() method, the calling process during callback can also be regarded as the event propagation process of request completion. When calling the complete() or raise() method of the requestfuture < T > object, the onSuccess() or onFailure() of requestfuturelistener < T > will be called Method, then invoke the corresponding method of RequestFutureAdapter<T, S>, and finally call the corresponding method of RequestFuture<S> object.
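This event propagation can be traced with a self-contained sketch. ComposeSketch and its nested MiniFuture, Listener and Adapter types are hypothetical stand-ins for RequestFuture, RequestFutureListener and RequestFutureAdapter. The Integer-to-String conversion is an arbitrary example. trace() records each callback in the order it fires.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Self-contained sketch of compose(): a listener on the source future forwards each event
 * to an adapter, which completes or fails the adapted future. All names are hypothetical.
 */
class ComposeSketch {
    interface Listener<T> {
        void onSuccess(T value);
        void onFailure(RuntimeException e);
    }

    static abstract class Adapter<F, T> {
        abstract void onSuccess(F value, MiniFuture<T> future);

        // Default: forward failures unchanged, like RequestFutureAdapter.onFailure()
        void onFailure(RuntimeException e, MiniFuture<T> future) {
            future.raise(e);
        }
    }

    static class MiniFuture<T> {
        private final List<Listener<T>> listeners = new ArrayList<>();

        void addListener(Listener<T> listener) { listeners.add(listener); }

        void complete(T value) { for (Listener<T> l : listeners) l.onSuccess(value); }

        void raise(RuntimeException e) { for (Listener<T> l : listeners) l.onFailure(e); }

        // Adapter pattern: the returned future is driven by the events of this one
        <S> MiniFuture<S> compose(Adapter<T, S> adapter) {
            MiniFuture<S> adapted = new MiniFuture<>();
            addListener(new Listener<T>() {
                @Override
                public void onSuccess(T value) { adapter.onSuccess(value, adapted); }

                @Override
                public void onFailure(RuntimeException e) { adapter.onFailure(e, adapted); }
            });
            return adapted;
        }
    }

    /** Builds source -> adapted and records the order in which callbacks fire. */
    static List<String> trace(boolean succeed) {
        List<String> log = new ArrayList<>();
        MiniFuture<Integer> source = new MiniFuture<>();
        MiniFuture<String> adapted = source.compose(new Adapter<Integer, String>() {
            @Override
            void onSuccess(Integer value, MiniFuture<String> future) {
                log.add("adapter.onSuccess(" + value + ")");
                future.complete("converted " + value);
            }
        });
        adapted.addListener(new Listener<String>() {
            @Override
            public void onSuccess(String value) { log.add("adapted.onSuccess(" + value + ")"); }

            @Override
            public void onFailure(RuntimeException e) { log.add("adapted.onFailure(" + e.getMessage() + ")"); }
        });
        if (succeed) source.complete(3);
        else source.raise(new IllegalStateException("disconnected"));
        return log;
    }

    public static void main(String[] args) {
        System.out.println(trace(true));  // [adapter.onSuccess(3), adapted.onSuccess(converted 3)]
        System.out.println(trace(false)); // [adapted.onFailure(disconnected)]
    }
}
```

On failure, the adapter's default onFailure() just forwards the exception, so only the adapted future's listener appears in the second trace. This lets callers convert between future types while writing conversion code only for the success path.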

4.2 RequestFuture.chain()

The chain() method is similar to compose(): it also passes events between RequestFuture objects through a RequestFutureListener. The code is as follows:

```java
public void chain(final RequestFuture<T> future) {
    // Add a listener
    addListener(new RequestFutureListener<T>() {
        @Override
        public void onSuccess(T value) {
            // Pass the value to the next RequestFuture object through the listener
            future.complete(value);
        }

        @Override
        public void onFailure(RuntimeException e) {
            // Pass the exception to the next RequestFuture object through the listener
            future.raise(e);
        }
    });
}
```

Well, that concludes the source code analysis of ConsumerNetworkClient. I hope this article helps you. See you next time.