What it does

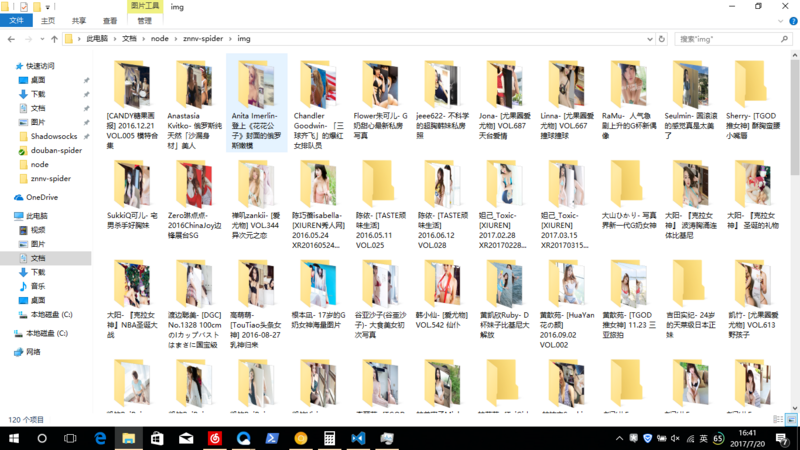

A crawler that downloads the photo galleries of girls on www.nvshens.com. If this infringes any rights, it will be taken down immediately.

Reason

Saving the pictures one by one by hand is too tedious.

How to use it

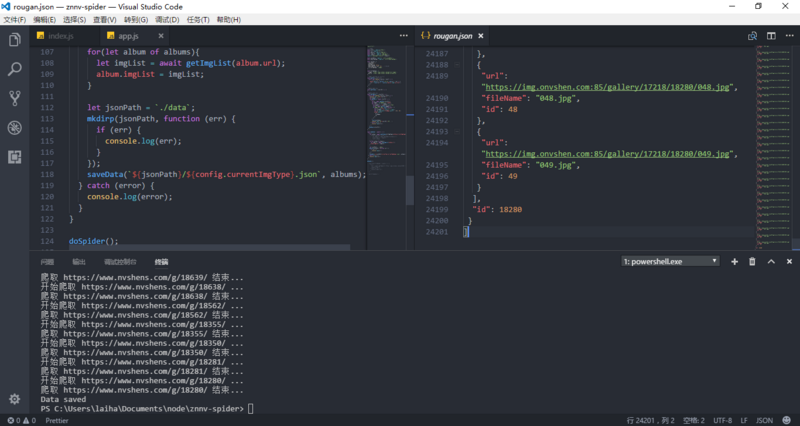

0. node -v >= 7.6
1. git clone https://github.com/laihaibo/beauty-spider.git
2. npm i
3. npm run start
4. npm run calc (get the number of albums and files crawled)
5. npm run download

Updates

Dealing with anti-crawler measures

After downloading, the pictures turned out to be the site's anti-hotlinking placeholder image. After observing how the browser requests images during normal browsing, I set the Referer, Accept and User-Agent headers on the request, which solved the problem.

```js
request
  .get(url)
  .set({
    'Referer': 'https://www.google.com',
    'Accept': 'image/webp,image/*,*/*;q=0.8',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/60.0.3091.0 Safari/537.36'
  })
  .end((err, res) => {
    // handle the response here
  });
```

Resuming downloads after a disconnect

After about 700 files have been downloaded, the connection is often dropped. This is probably the site's anti-crawler mechanism at work, and I can't solve it for now. So when downloading again, files that were already downloaded should be skipped: before saving a picture, first check whether it already exists.

```js
// path: target file of the picture; args: the arguments passed to saveOne
let isExist = fs.existsSync(path);
if (!isExist) {
  saveOne(...args);
}
```
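The existence check can be wrapped in a small reusable helper. This is a minimal sketch, assuming the save function returns a Promise the way saveOne does; the name `saveIfMissing` and its parameters are hypothetical and not taken from the repository.

```js
const fs = require('fs');

/**
 * Hypothetical helper: run `save` only when `filePath` does not exist yet,
 * so a re-run after a disconnect skips files downloaded in an earlier run.
 * @param {string} filePath target file path
 * @param {() => Promise<void>} save function that downloads and writes the file
 * @returns {Promise<boolean>} true if `save` was called, false if skipped
 */
const saveIfMissing = async (filePath, save) => {
  if (fs.existsSync(filePath)) {
    return false; // already on disk, skip it
  }
  await save();
  return true;
};
```

Inside an async loop it would be called as `await saveIfMissing(path, () => saveOne(title, url, fileName));`. Awaiting the call keeps downloads sequential, which matches how saveImg already processes an album.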

Get the number of albums and files that should be downloaded

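```js
const fs = require('fs');
const path = './data/hanguo.json'; // JSON written by saveData for the current tag
let data = JSON.parse(fs.readFileSync(path, 'utf8'));
// Sum the number of pictures over all albums
let count = data.reduce((prev, cur) => prev + cur.imgList.length, 0);
console.log(`${data.length} albums, ${count} pictures in total`);
```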

Steps


Import the required libraries

```js
const fs = require('fs');
const mkdirp = require('mkdirp');
const cheerio = require('cheerio');
const request = require('superagent');
require('superagent-charset')(request); // adds .charset() to superagent requests
```

Analyse the pages and set up the config

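Analyse the album list URLs. Taking Korea (hanguo) as an example, the first page is https://www.nvshens.com/gallery/hanguo/ and the second page is https://www.nvshens.com/gallery/hanguo/2.html.

```js
const config = {
  current: 'hanguo', // tag to crawl
  allTags: {
    rougan: 'https://www.nvshens.com/gallery/rougan/',
    hanguo: 'https://www.nvshens.com/gallery/hanguo/'
  }
};
// Names used by the functions below
const { current, allTags } = config;
const currentImgType = current; // used for the image folder and JSON file names
```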
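The URL scheme above can be captured in a tiny helper. This is a sketch only; `pageUrlFor` is a hypothetical name. Note that getAlbums builds `${startUrl}${i}.html` for every page including the first, so it requests `1.html` rather than the bare tag URL; the helper below encodes the scheme exactly as described in the text.

```js
/**
 * Hypothetical helper: URL of page `n` of a tag listing.
 * Page 1 is the bare tag URL; page n >= 2 is `${tagUrl}${n}.html`.
 * @param {string} tagUrl tag URL ending in '/'
 * @param {number} n 1-based page number
 * @returns {string}
 */
const pageUrlFor = (tagUrl, n) => (n <= 1 ? tagUrl : `${tagUrl}${n}.html`);

console.log(pageUrlFor('https://www.nvshens.com/gallery/hanguo/', 2));
// prints https://www.nvshens.com/gallery/hanguo/2.html
```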

Wrap a function that fetches the HTML of a given URL

```js
// The website is encoded in utf-8
const getHtml = url => {
  return new Promise((resolve, reject) => {
    request.get(url).charset('utf-8').end((err, res) => {
      // Resolve with a cheerio instance so callers can query the page with $
      err ? reject(err) : resolve(cheerio.load(res.text));
    });
  });
};
```

Get all albums under this tag

```js
/**
 * Collect basic information for every album under a tag.
 * @param {string} startUrl URL of the tag's first page
 * @returns {Promise<Array>} album objects: { title, url, imgList, id }
 */
const getAlbums = async startUrl => {
  const albums = []; // all album information for this tag
  let $ = await getHtml(startUrl);
  let pages = $('#listdiv .pagesYY a').length; // initial page count
  for (let i = 1; i <= pages; i++) {
    const pageUrl = `${startUrl}${i}.html`; // URL of page i
    $ = await getHtml(pageUrl);
    // The pager only shows a window of links, so update the page count
    // from the largest page number visible on this page
    const compare = $('#listdiv .pagesYY a')
      .map((idx, el) => parseInt($(el).text(), 10))
      .get()
      .filter(x => x > 0);
    pages = compare.length < 2 ? pages : Math.max(...compare);
    $('.galleryli_title a').each((idx, el) => {
      const href = $(el).attr('href');
      albums.push({
        title: $(el).text(),
        url: `https://www.nvshens.com${href}`,
        imgList: [],
        id: parseInt(href.split('/')[2], 10)
      });
    });
  }
  // Errors propagate to the caller instead of leaving the Promise pending
  return albums;
};
```
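The page-count update is the subtlest part of getAlbums: the loop bound grows as more of the pager becomes visible. Pulling it out as a pure function makes it easy to check in isolation. This is a hypothetical refactoring (`updatePageCount` is not in the repository); it takes the link texts instead of a cheerio selection, and "Next" below stands in for whatever non-numeric links the real pager contains.

```js
/**
 * Hypothetical pure version of the page-count update in getAlbums.
 * Replaces the estimate with the largest page number among the pager
 * links, unless fewer than two numbered links were found.
 * @param {number} pages current page-count estimate
 * @param {string[]} linkTexts text of every pager link on the page
 * @returns {number} updated estimate
 */
const updatePageCount = (pages, linkTexts) => {
  const numbers = linkTexts
    .map(text => parseInt(text, 10))
    .filter(x => x > 0); // drops NaN from non-numeric links such as "Next"
  return numbers.length < 2 ? pages : Math.max(...numbers);
};
```

For example, `updatePageCount(5, ['1', '2', '3', '4', '5', '6', '7', 'Next'])` returns 7, so the loop keeps going past page 5.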

Get the picture information of an album

```js
/**
 * Collect every picture in one album.
 * @param {string} startUrl URL of the album's first page
 * @returns {Promise<Array>} picture objects: { url, fileName, id }
 */
const getImgList = async startUrl => {
  const imgList = []; // all picture information for this album
  let $ = await getHtml(startUrl);
  const pages = $('#pages a').length; // number of pager links
  for (let i = 1; i <= pages; i++) {
    const pageUrl = `${startUrl}${i}.html`;
    $ = await getHtml(pageUrl);
    $('#hgallery img').each((idx, el) => {
      const url = $(el).attr('src'); // picture address
      const fileName = url.split('/').pop(); // file name
      const id = parseInt(fileName.split('.')[0], 10); // numeric id
      imgList.push({ url, fileName, id });
    });
  }
  return imgList;
};
```
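The file name and id parsing in getImgList can be pulled out and tested on its own. A sketch, with the hypothetical helper name `parseImg` and a made-up example URL; it assumes picture file names are numeric (otherwise `id` comes out as `NaN`).

```js
/**
 * Hypothetical helper mirroring the parsing in getImgList:
 * the last path segment is the file name, and the part before
 * the first '.' is parsed as a base-10 id.
 * @param {string} url picture URL
 * @returns {{url: string, fileName: string, id: number}}
 */
const parseImg = url => {
  const fileName = url.split('/').pop();
  const id = parseInt(fileName.split('.')[0], 10);
  return { url, fileName, id };
};
```

For `parseImg('https://example.com/gallery/123/001.jpg')`, `fileName` is `'001.jpg'` and `id` is `1`, since `parseInt` drops the leading zeros. Keeping `fileName` as-is means the saved file keeps its original name.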

Save album information

```js
/**
 * @param {string} path   Path to save data
 * @param {array}  albums Album information array
 */
const saveData = (path, albums) => {
  fs.writeFile(path, JSON.stringify(albums, null, ' '), function (err) {
    err ? console.log(err) : console.log('Data saved');
  });
};
```
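saveData reports completion only through its callback, so a caller cannot wait for the JSON to be written before moving on. A minimal Promise-returning variant could look like this; `saveDataAsync` is a hypothetical name, and fs.writeFile is wrapped by hand because `util.promisify` needs Node 8, above the node >= 7.6 stated earlier.

```js
const fs = require('fs');

/**
 * Hypothetical Promise-returning variant of saveData.
 * @param {string} path   path to save data
 * @param {Array}  albums album information array
 * @returns {Promise<void>} resolves once the file has been written
 */
const saveDataAsync = (path, albums) =>
  new Promise((resolve, reject) => {
    fs.writeFile(path, JSON.stringify(albums, null, ' '), err => {
      if (err) return reject(err); // let the caller's try/catch handle it
      console.log('Data saved');
      resolve();
    });
  });
```

Inside doSpider it would be awaited as `await saveDataAsync(`${jsonPath}/${currentImgType}.json`, albums);`, so any write error lands in doSpider's catch block.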

Save pictures

```js
/**
 * Save a single picture.
 * @param {string} title    Name of the folder where the picture is saved
 * @param {string} url      Picture URL
 * @param {string} fileName Picture file name
 */
const saveOne = (title, url, fileName) => {
  return new Promise(resolve => {
    const path = `./img/${currentImgType}/${title}/${fileName}`;
    request.get(url).end((err, res) => {
      if (err) {
        // Log and move on: there is no response body to write
        console.log(`Error: ${err} in getting ${url}`);
        return resolve();
      }
      fs.writeFile(path, res.body, writeErr => {
        if (writeErr) console.log(`Error: ${writeErr} in downloading ${url}`);
        resolve(); // resolve only after the file has been written
      });
    });
  });
};

/**
 * Save all pictures of one album, one after another.
 * @param {object} album Album with title and imgList (picture information)
 */
const saveImg = async ({ title, imgList }) => {
  try {
    // Create the folder synchronously so it exists before the first write
    mkdirp.sync(`./img/${currentImgType}/${title}`);
    const label = `download ${title}`;
    console.time(label); // time taken to download this album
    for (const { url, fileName } of imgList) {
      await saveOne(title, url, fileName);
    }
    console.timeEnd(label);
  } catch (error) {
    console.log(`Error: ${error} in saving ${title}`);
  }
};
```

Run the crawler

```js
const doSpider = async () => {
  try {
    // Get album information
    const albums = await getAlbums(allTags[current]);
    // Get the picture information of each album
    for (const album of albums) {
      album.imgList = await getImgList(album.url);
    }
    // Save JSON; create the folder synchronously so it exists before writing
    const jsonPath = './data';
    mkdirp.sync(jsonPath);
    saveData(`${jsonPath}/${currentImgType}.json`, albums);
    // Save pictures, one album at a time
    for (const album of albums) {
      await saveImg(album);
    }
  } catch (error) {
    console.log(error);
  }
};
```

Lessons learned

Some pitfalls, such as those in cheerio and fs operations, you don't really appreciate until you have fallen into them yourself.

Just do it.

Thanks

This article draws on nieheyong's HanhandeSpider and other crawler articles, which gave me a lot of inspiration.