Background

Most of us did dictation exercises when we first learned a language. For primary school students learning Chinese today, dictating the new words from each lesson is a regular homework task, and many parents know it well. Reading words aloud is simple, repetitive work, yet it takes up parents' valuable time. There are many after-class dictation recordings on the market: announcers record the dictation words from the Chinese textbooks, and parents download the recordings to use. However, recordings are not flexible. If the teacher assigns extra words that are not in the after-class exercises, the recording cannot meet the needs of parents and children.

This article introduces an app that uses the general text recognition and speech synthesis functions of our ML Kit to read words aloud automatically. You only need to photograph the dictation words or text, and the app reads the words in the photo aloud. The timbre and tone of the voice can be adjusted.

Preparation before development

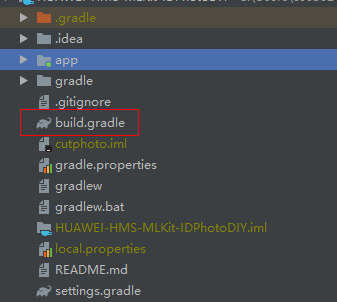

Open the project-level build.gradle file in Android Studio.

Configure the Maven address of the HMS SDK in allprojects > repositories:

    allprojects {
        repositories {
            google()
            jcenter()
            maven { url 'http://developer.huawei.com/repo/' }
        }
    }

Configure the Maven address of the HMS SDK in buildscript > repositories:

    buildscript {
        repositories {
            google()
            jcenter()
            maven { url 'http://developer.huawei.com/repo/' }
        }
    }

In buildscript > dependencies, configure the AGC plug-in:

    dependencies {
        classpath 'com.huawei.agconnect:agcp:1.2.1.301'
    }

Add compilation dependency

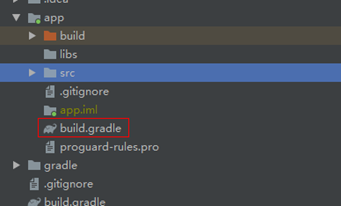

Open the application-level build.gradle file.

Integrate the SDK:

    dependencies {
        implementation 'com.huawei.hms:ml-computer-voice-tts:1.0.4.300'
        implementation 'com.huawei.hms:ml-computer-vision-ocr:1.0.4.300'
        implementation 'com.huawei.hms:ml-computer-vision-ocr-cn-model:1.0.4.300'
    }

Apply the AGC plug-in by adding it to the file header:

    apply plugin: 'com.huawei.agconnect'

Declare the permissions and features in AndroidManifest.xml:

    <uses-permission android:name="android.permission.CAMERA" />
    <uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />
    <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
    <uses-feature android:name="android.hardware.camera" />
    <uses-feature android:name="android.hardware.camera.autofocus" />

Key steps of the read-aloud code

The app has two main functions: recognizing the text of the assignment and reading the assignment aloud. Reading aloud is implemented with OCR + TTS: after taking a photo, tap the play button to hear the text.

- Request permissions dynamically

    private static final int PERMISSION_REQUESTS = 1;

    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        // Request the camera and storage permissions at runtime if any is missing.
        if (!allPermissionsGranted()) {
            getRuntimePermissions();
        }
    }
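The helpers allPermissionsGranted() and getRuntimePermissions() are not shown in this article. The following is only a minimal sketch of the logic they might implement, written in plain Java so it can run outside Android. The class name PermissionCheckSketch, the method missingPermissions(), and the isGranted predicate are hypothetical stand-ins; in a real Activity the predicate would typically wrap ContextCompat.checkSelfPermission(...) == PackageManager.PERMISSION_GRANTED, and the missing permissions would be passed to ActivityCompat.requestPermissions(...) together with PERMISSION_REQUESTS.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical helper class, not part of the original app or of the HMS SDK.
final class PermissionCheckSketch {
    // The permissions declared in AndroidManifest.xml in this article.
    static final List<String> REQUIRED = Arrays.asList(
            "android.permission.CAMERA",
            "android.permission.READ_EXTERNAL_STORAGE",
            "android.permission.WRITE_EXTERNAL_STORAGE");

    // Returns the required permissions for which isGranted reports false.
    static List<String> missingPermissions(List<String> required, Predicate<String> isGranted) {
        List<String> missing = new ArrayList<>();
        for (String permission : required) {
            if (!isGranted.test(permission)) {
                missing.add(permission);
            }
        }
        return missing;
    }

    // True only when nothing is missing; mirrors the check done in onCreate().
    static boolean allPermissionsGranted(List<String> required, Predicate<String> isGranted) {
        return missingPermissions(required, isGranted).isEmpty();
    }

    public static void main(String[] args) {
        // Simulate a device where only the camera permission has been granted.
        Predicate<String> onlyCamera = p -> p.equals("android.permission.CAMERA");
        System.out.println(missingPermissions(REQUIRED, onlyCamera));
    }
}
```

Keeping the check as a pure function of a predicate makes it easy to test without a device; the Android-specific calls stay in the Activity.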

- Open the read-aloud screen

    public void takePhoto(View view) {
        Intent intent = new Intent(MainActivity.this, ReadPhotoActivity.class);
        startActivity(intent);
    }

- Call createLocalTextAnalyzer() in the onCreate() method to create the on-device text recognizer.

    private void createLocalTextAnalyzer() {
        MLLocalTextSetting setting = new MLLocalTextSetting.Factory()
                .setOCRMode(MLLocalTextSetting.OCR_DETECT_MODE)
                .setLanguage("zh")
                .create();
        this.textAnalyzer = MLAnalyzerFactory.getInstance().getLocalTextAnalyzer(setting);
    }

- In the onCreate() method, call createTtsEngine() to create the speech synthesis engine. Create a speech synthesis callback to handle the synthesis results, and pass it to the new engine.

    private void createTtsEngine() {
        MLTtsConfig mlConfigs = new MLTtsConfig()
                .setLanguage(MLTtsConstants.TTS_ZH_HANS)
                .setPerson(MLTtsConstants.TTS_SPEAKER_FEMALE_ZH)
                .setSpeed(0.2f)
                .setVolume(1.0f);
        this.mlTtsEngine = new MLTtsEngine(mlConfigs);
        MLTtsCallback callback = new MLTtsCallback() {
            @Override
            public void onError(String taskId, MLTtsError err) {
            }

            @Override
            public void onWarn(String taskId, MLTtsWarn warn) {
            }

            @Override
            public void onRangeStart(String taskId, int start, int end) {
            }

            @Override
            public void onEvent(String taskId, int eventName, Bundle bundle) {
                // Show a toast when playback ends without being interrupted.
                if (eventName == MLTtsConstants.EVENT_PLAY_STOP) {
                    if (!bundle.getBoolean(MLTtsConstants.EVENT_PLAY_STOP_INTERRUPTED)) {
                        Toast.makeText(ReadPhotoActivity.this.getApplicationContext(),
                                R.string.read_finish, Toast.LENGTH_SHORT).show();
                    }
                }
            }
        };
        mlTtsEngine.setTtsCallback(callback);
    }

- Set up the load-photo, take-photo, and read-aloud buttons

    this.relativeLayoutLoadPhoto.setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            ReadPhotoActivity.this.selectLocalImage(ReadPhotoActivity.this.REQUEST_CHOOSE_ORIGINPIC);
        }
    });
    this.relativeLayoutTakePhoto.setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            ReadPhotoActivity.this.takePhoto(ReadPhotoActivity.this.REQUEST_TAKE_PHOTO);
        }
    });

- In the callbacks for taking a photo and loading a photo, call startTextAnalyzer() to start text recognition.

    private void startTextAnalyzer() {
        if (this.isChosen(this.originBitmap)) {
            MLFrame mlFrame = new MLFrame.Creator().setBitmap(this.originBitmap).create();
            Task<MLText> task = this.textAnalyzer.asyncAnalyseFrame(mlFrame);
            task.addOnSuccessListener(new OnSuccessListener<MLText>() {
                @Override
                public void onSuccess(MLText mlText) {
                    // Handle a successful recognition result.
                    if (mlText != null) {
                        ReadPhotoActivity.this.remoteDetectSuccess(mlText);
                    } else {
                        ReadPhotoActivity.this.displayFailure();
                    }
                }
            }).addOnFailureListener(new OnFailureListener() {
                @Override
                public void onFailure(Exception e) {
                    // Handle a recognition failure.
                    ReadPhotoActivity.this.displayFailure();
                }
            });
        } else {
            Toast.makeText(this.getApplicationContext(), R.string.please_select_picture,
                    Toast.LENGTH_SHORT).show();
        }
    }
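The remoteDetectSuccess() method is not shown in this article; presumably it turns the recognition result into the sourceText string that is read aloud later. The following plain-Java sketch shows one way that step could look. The class RecognizedTextSketch and the method joinBlocks() are hypothetical, and the input list stands in for the text of each recognized block. It is an assumption, to be checked against the ML Kit API reference, that those strings can be read from the MLText result block by block.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper class, not part of the original app or of the HMS SDK.
final class RecognizedTextSketch {
    // Joins the text of each recognized block into one string, one block per line.
    // Null and blank entries are skipped so that empty blocks are not read aloud.
    static String joinBlocks(List<String> blockTexts) {
        StringBuilder builder = new StringBuilder();
        for (String text : blockTexts) {
            if (text == null || text.trim().isEmpty()) {
                continue;
            }
            if (builder.length() > 0) {
                builder.append('\n');
            }
            builder.append(text.trim());
        }
        return builder.toString();
    }

    public static void main(String[] args) {
        // Stand-in for the block texts of one recognized photo.
        List<String> blocks = Arrays.asList(" apple ", "", "banana");
        System.out.println(joinBlocks(blocks));
    }
}
```

In the app, the returned string would be stored in sourceText, which the play button handler checks for null before reading.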

- After recognition succeeds, tap the play button to start reading aloud

    this.relativeLayoutRead.setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            if (ReadPhotoActivity.this.sourceText == null) {
                // Nothing has been recognized yet, so ask the user to pick a picture.
                Toast.makeText(ReadPhotoActivity.this.getApplicationContext(),
                        R.string.please_select_picture, Toast.LENGTH_SHORT).show();
            } else {
                ReadPhotoActivity.this.mlTtsEngine.speak(sourceText, MLTtsEngine.QUEUE_APPEND);
                Toast.makeText(ReadPhotoActivity.this.getApplicationContext(),
                        R.string.read_start, Toast.LENGTH_SHORT).show();
            }
        }
    });
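The handler above passes the whole sourceText to a single speak() call. For dictation it may be more useful to queue each word as its own utterance. The sketch below is a possible variation, not the original app's behavior: the class DictationItemsSketch and the method splitIntoItems() are hypothetical, and the set of separators (whitespace plus common ASCII and Chinese punctuation, written as Unicode escapes) is an assumption about how dictation lists are laid out.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class, not part of the original app or of the HMS SDK.
final class DictationItemsSketch {
    // Whitespace, ASCII punctuation, and the full-width comma, full stop, semicolon,
    // colon, exclamation mark, question mark, and enumeration comma.
    private static final String SEPARATORS =
            "[\\s,.;:!?\uFF0C\u3002\uFF1B\uFF1A\uFF01\uFF1F\u3001]+";

    // Splits recognized text into individual dictation items, dropping empty pieces.
    static List<String> splitIntoItems(String sourceText) {
        List<String> items = new ArrayList<>();
        if (sourceText == null) {
            return items;
        }
        for (String part : sourceText.split(SEPARATORS)) {
            if (!part.isEmpty()) {
                items.add(part);
            }
        }
        return items;
    }

    public static void main(String[] args) {
        for (String item : splitIntoItems("apple, banana\ncherry")) {
            System.out.println(item);
        }
    }
}
```

Each item could then be passed to mlTtsEngine.speak(item, MLTtsEngine.QUEUE_APPEND), the same call used in the handler, so the engine plays the words one after another.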

Demo effect

Previous article: Ultra-simple integration of the HMS Scan Kit code scanning SDK, making scan-to-purchase easy to implement

Link to the original text: https://developer.huawei.com/consumer/cn/forum/topicview?tid=0201283755975150303&fid=18

Original author: littlewhite