Introduction to Android Network Libraries

1. HttpURLConnection

Its API is small and simple, which makes it well suited to Android projects. However, HttpURLConnection has some bugs on Android 2.2 and earlier, so the recommendation is to use HttpURLConnection on Android 2.3 and later, and HttpClient before that.
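As a rough illustration of the API, a plain-JDK GET over HttpURLConnection might look like the sketch below. The class and method names are hypothetical, the 10-second timeouts are arbitrary, and the body is assumed to be UTF-8 rather than decoded from the response headers.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URI;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of any library: a minimal GET over HttpURLConnection.
final class SimpleHttpGet {
    private SimpleHttpGet() {}

    /** Creates and configures the connection; no network I/O happens yet. */
    static HttpURLConnection open(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
        conn.setRequestMethod("GET");
        conn.setConnectTimeout(10_000); // milliseconds, an arbitrary choice
        conn.setReadTimeout(10_000);
        return conn;
    }

    /** Reads a stream to the end and decodes it as UTF-8 (assumed, not negotiated). */
    static String readAll(InputStream in) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[4096];
        int n;
        while ((n = in.read(buf)) != -1) {
            out.write(buf, 0, n);
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }

    /** Blocking GET; on Android this must not run on the main thread. */
    static String get(String url) throws IOException {
        HttpURLConnection conn = open(url);
        try {
            int code = conn.getResponseCode(); // connects and sends the request
            if (code < 200 || code > 299) {
                throw new IOException("HTTP " + code);
            }
            try (InputStream in = conn.getInputStream()) {
                return readAll(in);
            }
        } finally {
            conn.disconnect();
        }
    }
}
```

Note that open() only configures the connection; the network I/O starts at getResponseCode().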

2. HttpClient (Apache)

Efficient and stable, but costly to maintain. The Android team was reluctant to keep maintaining it and preferred the lightweight HttpURLConnection. HttpClient was deprecated in Android 5.1 and removed from the platform in Android 6.0.

3. OkHttp

A Square product. OkHttp is more powerful than both HttpURLConnection and HttpClient.

4. Volley

Volley is a network communication framework that Google introduced at Google I/O 2013. Internally it wraps HttpURLConnection and HttpClient, and it takes care of parsing network data and switching threads.

It is designed for frequent requests that each carry a small amount of data. For large transfers such as file downloads it performs poorly, because each response body is read completely into memory before it is parsed.

Using Volley is simple: you create an HTTP request and add it to a RequestQueue. The RequestQueue is a request queue object that holds all pending HTTP requests and sends them out concurrently according to its internal scheduling. Because RequestQueue is designed for high concurrency, there is no need to create a new RequestQueue for every HTTP request, which would waste resources. Generally, one RequestQueue per Activity that talks to the network is enough.
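A minimal sketch of the "create once, reuse" advice in plain Java. SharedQueue is a hypothetical stand-in for RequestQueue: a fixed pool of worker threads behind a lazily created singleton, so every caller shares the same threads instead of starting new ones per request.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical stand-in for a RequestQueue: one pool of daemon worker threads,
// created once and shared by every caller instead of per request.
final class SharedQueue {
    private static volatile SharedQueue instance;
    private final ExecutorService workers;

    private SharedQueue(int threads) {
        workers = Executors.newFixedThreadPool(threads, r -> {
            Thread t = new Thread(r, "shared-queue-worker");
            t.setDaemon(true); // do not keep the JVM alive
            return t;
        });
    }

    /** Lazily creates the single instance (double-checked locking on a volatile field). */
    static SharedQueue get() {
        SharedQueue local = instance;
        if (local == null) {
            synchronized (SharedQueue.class) {
                local = instance;
                if (local == null) {
                    local = new SharedQueue(4); // 4 mirrors Volley's default network thread count
                    instance = local;
                }
            }
        }
        return local;
    }

    /** Hands a unit of work to the shared pool. */
    void add(Runnable request) {
        workers.execute(request);
    }
}
```

Here the instance lives for the whole process; keeping one per Activity, as described above, would instead tie it to that Activity's lifetime.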

Typical Volley usage comes down to one line, Volley.newRequestQueue(context).add(request), which breaks into three steps:

1. Create a RequestQueue object.

2. Create a StringRequest object.

3. Add StringRequest object to RequestQueue.

Below, we follow these three steps through the Volley source code to see how it is implemented.

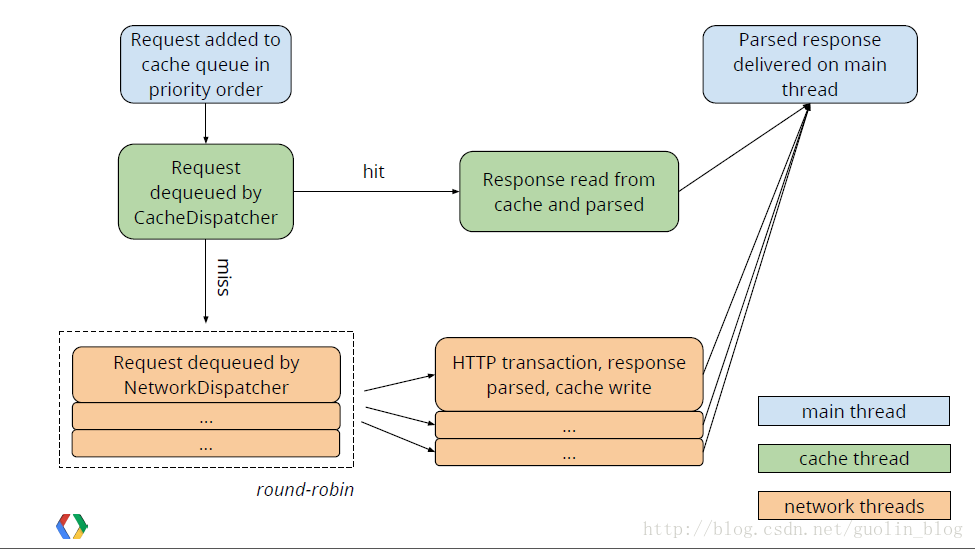

This is the official Volley workflow diagram. The blue part represents the main thread, the green part the cache thread, and the orange part the network threads. When we call RequestQueue's add() method on the main thread, the request is first placed in the cache queue. If a matching cached result is found, it is read and parsed directly, and the result is delivered back to the main thread. If nothing is found in the cache, the request is added to the network request queue; a network thread then sends the HTTP request, parses the response, writes it to the cache, and delivers the result back to the main thread.

Step 1: Volley.newRequestQueue(context)

```java
public static RequestQueue newRequestQueue(Context context) {
    return newRequestQueue(context, null); // Delegate to the two-argument overload
}
```

This method has only one line: it calls the two-argument overload of newRequestQueue(), passing null as the second argument. The two-argument version looks like this:

```java
public static RequestQueue newRequestQueue(Context context, HttpStack stack) {
    File cacheDir = new File(context.getCacheDir(), DEFAULT_CACHE_DIR);

    String userAgent = "volley/0";
    try {
        String packageName = context.getPackageName();
        PackageInfo info = context.getPackageManager().getPackageInfo(packageName, 0);
        userAgent = packageName + "/" + info.versionCode;
    } catch (NameNotFoundException e) {
    }

    if (stack == null) { // No HttpStack was passed in, so create one
        if (Build.VERSION.SDK_INT >= 9) {
            stack = new HurlStack();
        } else {
            // Prior to Gingerbread, HttpUrlConnection was unreliable.
            // See: http://android-developers.blogspot.com/2011/09/androids-http-clients.html
            stack = new HttpClientStack(AndroidHttpClient.newInstance(userAgent));
        }
    }

    Network network = new BasicNetwork(stack);

    RequestQueue queue = new RequestQueue(new DiskBasedCache(cacheDir), network);
    queue.start();

    return queue;
}
```

As the code shows, if stack is null, an HttpStack is created. If the device's SDK version (API level) is 9 or higher, a HurlStack is created; otherwise an HttpClientStack is created. HurlStack uses HttpURLConnection for network communication, while HttpClientStack uses HttpClient. The threshold is 9 because API level 9 corresponds to Android 2.3, from which HttpURLConnection is the recommended client.
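The two decisions in newRequestQueue() can be modeled as pure functions. This is a hypothetical sketch: strings stand in for the HurlStack and HttpClientStack classes, and versionCode == null stands in for a NameNotFoundException during the package lookup.

```java
// Hypothetical model of the two decisions newRequestQueue() makes. Strings stand
// in for the real HurlStack and HttpClientStack classes.
final class QueueSetupModel {
    private QueueSetupModel() {}

    /** API level of Android 2.3 (android.os.Build.VERSION_CODES.GINGERBREAD). */
    static final int GINGERBREAD = 9;

    /** Mirrors the SDK check: API 9+ uses HttpURLConnection, older uses HttpClient. */
    static String chooseStack(int sdkInt) {
        return sdkInt >= GINGERBREAD ? "HurlStack" : "HttpClientStack";
    }

    /** Mirrors the User-Agent fallback: "package/versionCode", or "volley/0" if lookup failed. */
    static String userAgent(String packageName, Integer versionCode) {
        if (packageName == null || versionCode == null) {
            return "volley/0";
        }
        return packageName + "/" + versionCode;
    }
}
```

In the listing above, the user agent only matters on the HttpClientStack branch, where it is passed to AndroidHttpClient.newInstance().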

After the HttpStack is created, it is used to build a Network object (a BasicNetwork) that processes network requests. Next, a RequestQueue is created from a DiskBasedCache and that Network, its start() method is called, and the queue is returned. That completes newRequestQueue(). Now let's look at what RequestQueue's start() method does:

```java
/** Number of network request dispatcher threads to start. */
private static final int DEFAULT_NETWORK_THREAD_POOL_SIZE = 4; // Default number of network request threads

/**
 * Starts the dispatchers in this queue.
 */
public void start() {
    stop();  // Make sure any currently running dispatchers are stopped.
    // Create the cache dispatcher and start it.
    mCacheDispatcher = new CacheDispatcher(mCacheQueue, mNetworkQueue, mCache, mDelivery);
    mCacheDispatcher.start();

    // Create network dispatchers (and corresponding threads) up to the pool size.
    for (int i = 0; i < mDispatchers.length; i++) {
        NetworkDispatcher networkDispatcher = new NetworkDispatcher(mNetworkQueue, mNetwork,
                mCache, mDelivery);
        mDispatchers[i] = networkDispatcher;
        networkDispatcher.start();
    }
}
```

Here a CacheDispatcher is created and its start() method is called; then a for loop creates NetworkDispatcher instances and starts each one. CacheDispatcher and NetworkDispatcher both extend Thread, and by default the loop runs four times (DEFAULT_NETWORK_THREAD_POOL_SIZE). So after Volley.newRequestQueue(context) is called, five threads are running in the background waiting for network requests: CacheDispatcher is the cache thread and each NetworkDispatcher is a network request thread.
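The thread layout that start() creates can be modeled with java.util.concurrent.BlockingQueue, whose take() blocks until an element is available, the same kind of blocking call the dispatchers make. This is a simplified, hypothetical model: requests are plain strings, every cache lookup is treated as a miss, and threads are stopped by interruption alone (Volley's dispatchers also check an mQuit flag).

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical model of RequestQueue.start(): one cache thread and N network
// threads, each blocking in take() until a request arrives.
final class DispatcherModel {
    final BlockingQueue<String> cacheQueue = new LinkedBlockingQueue<>();
    final BlockingQueue<String> networkQueue = new LinkedBlockingQueue<>();
    final List<String> handled = Collections.synchronizedList(new ArrayList<>());
    private final List<Thread> threads = new ArrayList<>();

    /** One pass of a dispatcher's loop body; may block. */
    interface Step {
        void run() throws InterruptedException;
    }

    void start(int networkThreads) {
        stop(); // like Volley: stop any dispatchers that are already running
        threads.add(startLoop("cache-dispatcher", () -> {
            String request = cacheQueue.take();
            networkQueue.put(request); // pretend every lookup is a cache miss
        }));
        for (int i = 0; i < networkThreads; i++) {
            threads.add(startLoop("network-dispatcher-" + i, () -> {
                String request = networkQueue.take();
                handled.add(request + "@" + Thread.currentThread().getName());
            }));
        }
    }

    void stop() {
        for (Thread t : threads) {
            t.interrupt(); // a blocked take() throws InterruptedException, ending the loop
        }
        threads.clear();
    }

    int threadCount() {
        return threads.size();
    }

    /** Polls until at least n requests were handled or the timeout elapses. */
    boolean awaitHandled(int n, long timeoutMs) {
        long deadline = System.currentTimeMillis() + timeoutMs;
        while (handled.size() < n && System.currentTimeMillis() < deadline) {
            try {
                Thread.sleep(10);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
        return handled.size() >= n;
    }

    private static Thread startLoop(String name, Step step) {
        Thread t = new Thread(() -> {
            try {
                while (true) {
                    step.run(); // endless loop, like the dispatchers' while (true)
                }
            } catch (InterruptedException e) {
                // Interrupted by stop(): let the thread exit.
            }
        }, name);
        t.setDaemon(true);
        t.start();
        return t;
    }
}
```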

Once we have the RequestQueue, we only need to construct the appropriate Request and pass it to RequestQueue's add() method to send the network request. Let's analyze the internal logic of add().

```java
/**
 * Adds a Request to the dispatch queue.
 * @param request The request to service
 * @return The passed-in request
 */
public <T> Request<T> add(Request<T> request) {
    // Tag the request as belonging to this queue and add it to the set of current requests.
    request.setRequestQueue(this);
    synchronized (mCurrentRequests) {
        mCurrentRequests.add(request);
    }

    // Process requests in the order they are added.
    request.setSequence(getSequenceNumber());
    request.addMarker("add-to-queue");

    // If the request is uncacheable, skip the cache queue and go straight to the network.
    if (!request.shouldCache()) { // May this request be cached?
        mNetworkQueue.add(request); // No: add it straight to the network request queue
        return request;
    }

    // Insert request into stage if there's already a request with the same cache key in flight.
    synchronized (mWaitingRequests) {
        String cacheKey = request.getCacheKey();
        if (mWaitingRequests.containsKey(cacheKey)) {
            // There is already a request in flight. Queue up.
            Queue<Request<?>> stagedRequests = mWaitingRequests.get(cacheKey);
            if (stagedRequests == null) {
                stagedRequests = new LinkedList<Request<?>>();
            }
            stagedRequests.add(request);
            mWaitingRequests.put(cacheKey, stagedRequests);
            if (VolleyLog.DEBUG) {
                VolleyLog.v("Request for cacheKey=%s is in flight, putting on hold.", cacheKey);
            }
        } else {
            // Insert 'null' queue for this cacheKey, indicating there is now a request in
            // flight.
            mWaitingRequests.put(cacheKey, null);
            mCacheQueue.add(request); // Cacheable: add it to the cache queue
        }
        return request;
    }
}
```

As you can see, add() first checks whether the request may be cached. If not, the request goes straight to the network request queue. If it may, add() checks mWaitingRequests: when a request with the same cache key is already in flight, the new request is parked there; otherwise the key is marked as in flight and the request is added to the cache queue. By default every request is cacheable; you can change this by calling setShouldCache(false) on the Request.
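The routing in add() can be reproduced in a small single-threaded model. AddModel and Req are hypothetical names, and the synchronization and sequence numbers are left out. As far as I know, Volley's RequestQueue.finish() later moves staged requests into the cache queue once the in-flight request completes; that step is also omitted here.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;

// Hypothetical, single-threaded model of the routing in RequestQueue.add().
final class AddModel {
    static final class Req {
        final String cacheKey;
        final boolean shouldCache;

        Req(String cacheKey, boolean shouldCache) {
            this.cacheKey = cacheKey;
            this.shouldCache = shouldCache;
        }
    }

    final List<Req> networkQueue = new ArrayList<>();
    final List<Req> cacheQueue = new ArrayList<>();
    // cacheKey -> requests parked behind the one in flight (null value = key in flight, none parked)
    final Map<String, Queue<Req>> waiting = new HashMap<>();

    void add(Req r) {
        if (!r.shouldCache) {              // setShouldCache(false): bypass the cache entirely
            networkQueue.add(r);
            return;
        }
        if (waiting.containsKey(r.cacheKey)) {
            Queue<Req> staged = waiting.get(r.cacheKey);
            if (staged == null) {
                staged = new ArrayDeque<>();
            }
            staged.add(r);                 // same key already in flight: park this one
            waiting.put(r.cacheKey, staged);
        } else {
            waiting.put(r.cacheKey, null); // mark the key as in flight
            cacheQueue.add(r);
        }
    }
}
```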

Since every request is cacheable by default, it normally lands in the cache queue, which wakes the cache thread that has been waiting in the background. Let's look at CacheDispatcher's run() method:

```java
public class CacheDispatcher extends Thread {

    ...... // Code omitted

    @Override
    public void run() {
        if (DEBUG) VolleyLog.v("start new dispatcher");
        Process.setThreadPriority(Process.THREAD_PRIORITY_BACKGROUND);

        // Make a blocking call to initialize the cache.
        mCache.initialize();

        while (true) { // Infinite loop
            try {
                // Get a request from the cache triage queue, blocking until
                // at least one is available.
                final Request<?> request = mCacheQueue.take();
                request.addMarker("cache-queue-take");

                // If the request has been canceled, don't bother dispatching it.
                if (request.isCanceled()) {
                    request.finish("cache-discard-canceled");
                    continue;
                }

                // Attempt to retrieve this item from cache.
                Cache.Entry entry = mCache.get(request.getCacheKey()); // Look up the cached response
                if (entry == null) { // Nothing cached: send the request to the network queue
                    request.addMarker("cache-miss");
                    // Cache miss; send off to the network dispatcher.
                    mNetworkQueue.put(request);
                    continue;
                }

                // If it is completely expired, just send it to the network.
                if (entry.isExpired()) { // Cached but expired: also send it to the network queue
                    request.addMarker("cache-hit-expired");
                    request.setCacheEntry(entry);
                    mNetworkQueue.put(request);
                    continue;
                }

                // We have a cache hit; parse its data for delivery back to the request.
                request.addMarker("cache-hit");
                Response<?> response = request.parseNetworkResponse(
                        new NetworkResponse(entry.data, entry.responseHeaders)); // Parse the cached data
                request.addMarker("cache-hit-parsed");

                if (!entry.refreshNeeded()) {
                    // Completely unexpired cache hit. Just deliver the response.
                    mDelivery.postResponse(request, response);
                } else {
                    // Soft-expired cache hit. We can deliver the cached response,
                    // but we need to also send the request to the network for
                    // refreshing.
                    request.addMarker("cache-hit-refresh-needed");
                    request.setCacheEntry(entry);

                    // Mark the response as intermediate.
                    response.intermediate = true;

                    // Post the intermediate response back to the user and have
                    // the delivery then forward the request along to the network.
                    mDelivery.postResponse(request, response, new Runnable() {
                        @Override
                        public void run() {
                            try {
                                mNetworkQueue.put(request);
                            } catch (InterruptedException e) {
                                // Not much we can do about this.
                            }
                        }
                    });
                }

            } catch (InterruptedException e) {
                // We may have been interrupted because it was time to quit.
                if (mQuit) {
                    return;
                }
                continue;
            }
        }
    }
}
```
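The per-request decision in CacheDispatcher's run() can be isolated into one small function. This sketch assumes, as in the Volley versions I am familiar with, that Cache.Entry stores two absolute timestamps: ttl (hard expiry, checked by isExpired()) and softTtl (checked by refreshNeeded()). CacheRouting is hypothetical, and the current time is passed in explicitly to keep the function testable.

```java
// Hypothetical model of the decision CacheDispatcher makes for one request.
final class CacheRouting {
    enum Route { NETWORK_MISS, NETWORK_EXPIRED, DELIVER, DELIVER_THEN_REFRESH }

    /** Stand-in for Cache.Entry: absolute expiry times in epoch milliseconds. */
    static final class Entry {
        final long ttl;     // hard expiry: after this the entry is unusable
        final long softTtl; // soft expiry: after this the entry is usable but stale

        Entry(long ttl, long softTtl) {
            this.ttl = ttl;
            this.softTtl = softTtl;
        }

        boolean isExpired(long now) { return ttl < now; }
        boolean refreshNeeded(long now) { return softTtl < now; }
    }

    static Route route(Entry entry, long now) {
        if (entry == null) return Route.NETWORK_MISS;           // "cache-miss"
        if (entry.isExpired(now)) return Route.NETWORK_EXPIRED; // "cache-hit-expired"
        if (!entry.refreshNeeded(now)) return Route.DELIVER;    // fresh hit, no network
        return Route.DELIVER_THEN_REFRESH; // intermediate response, then network refresh
    }
}
```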

The while(true) loop keeps the cache thread running. For each request it looks up the cached response. If there is none, the request is added to the network request queue. If there is an entry but it has expired, the request is also added to the network request queue. Otherwise the cached data is used directly, which avoids sending a redundant network request: Request's parseNetworkResponse() parses the data and the parsed result is delivered back. If the entry is only soft-expired (refreshNeeded() returns true), the cached response is delivered as an intermediate response and the request is then also forwarded to the network for a refresh. This second half of the logic is essentially the same as the second half of NetworkDispatcher. Let's see how NetworkDispatcher processes the network request queue:

```java
public class NetworkDispatcher extends Thread {

    ....... // Code omitted

    @Override
    public void run() {
        Process.setThreadPriority(Process.THREAD_PRIORITY_BACKGROUND);
        while (true) { // Infinite loop
            long startTimeMs = SystemClock.elapsedRealtime();
            Request<?> request;
            try {
                // Take a request from the queue.
                request = mQueue.take();
            } catch (InterruptedException e) {
                // We may have been interrupted because it was time to quit.
                if (mQuit) {
                    return;
                }
                continue;
            }

            try {
                request.addMarker("network-queue-take");

                // If the request was cancelled already, do not perform the
                // network request.
                if (request.isCanceled()) {
                    request.finish("network-discard-cancelled");
                    continue;
                }

                addTrafficStatsTag(request);

                // Perform the network request.
                NetworkResponse networkResponse = mNetwork.performRequest(request); // Send the HTTP request
                request.addMarker("network-http-complete");

                // If the server returned 304 AND we delivered a response already,
                // we're done -- don't deliver a second identical response.
                if (networkResponse.notModified && request.hasHadResponseDelivered()) {
                    request.finish("not-modified");
                    continue;
                }

                // Parse the response here on the worker thread.
                Response<?> response = request.parseNetworkResponse(networkResponse); // Parse the network data
                request.addMarker("network-parse-complete");

                // Write to cache if applicable.
                // TODO: Only update cache metadata instead of entire record for 304s.
                if (request.shouldCache() && response.cacheEntry != null) {
                    mCache.put(request.getCacheKey(), response.cacheEntry);
                    request.addMarker("network-cache-written");
                }

                // Post the response back.
                request.markDelivered();
                mDelivery.postResponse(request, response);
            } catch (VolleyError volleyError) {
                volleyError.setNetworkTimeMs(SystemClock.elapsedRealtime() - startTimeMs);
                parseAndDeliverNetworkError(request, volleyError);
            } catch (Exception e) {
                VolleyLog.e(e, "Unhandled exception %s", e.toString());
                VolleyError volleyError = new VolleyError(e);
                volleyError.setNetworkTimeMs(SystemClock.elapsedRealtime() - startTimeMs);
                mDelivery.postError(request, volleyError);
            }
        }
    }

    private void parseAndDeliverNetworkError(Request<?> request, VolleyError error) {
        error = request.parseNetworkError(error);
        mDelivery.postError(request, error);
    }
}
```

NetworkDispatcher runs a similar while(true) loop, so the network request threads also run continuously. Inside the loop, mNetwork.performRequest() sends the network request. Network is an interface; the instance passed in was created in newRequestQueue() by Network network = new BasicNetwork(stack);, so the concrete implementation is BasicNetwork. Here is BasicNetwork's performRequest() method:

```java
public class BasicNetwork implements Network {

    ..... // Code omitted

    @Override
    public NetworkResponse performRequest(Request<?> request) throws VolleyError {
        long requestStart = SystemClock.elapsedRealtime();
        while (true) {
            HttpResponse httpResponse = null;
            byte[] responseContents = null;
            Map<String, String> responseHeaders = Collections.emptyMap();
            try {
                // Gather headers.
                Map<String, String> headers = new HashMap<String, String>();
                addCacheHeaders(headers, request.getCacheEntry());
                httpResponse = mHttpStack.performRequest(request, headers); // Delegate to HttpStack.performRequest()
                StatusLine statusLine = httpResponse.getStatusLine();
                int statusCode = statusLine.getStatusCode();

                responseHeaders = convertHeaders(httpResponse.getAllHeaders());
                // Handle cache validation.
                if (statusCode == HttpStatus.SC_NOT_MODIFIED) {

                    Entry entry = request.getCacheEntry();
                    if (entry == null) {
                        return new NetworkResponse(HttpStatus.SC_NOT_MODIFIED, null,
                                responseHeaders, true,
                                SystemClock.elapsedRealtime() - requestStart); // Wrap the result in a NetworkResponse
                    }

                    // http://www.w3.org/Protocols/rfc2616/rfc2616-sec10.html#sec10.3.5
                    entry.responseHeaders.putAll(responseHeaders);
                    return new NetworkResponse(HttpStatus.SC_NOT_MODIFIED, entry.data,
                            entry.responseHeaders, true,
                            SystemClock.elapsedRealtime() - requestStart); // Wrap the result in a NetworkResponse
                }

                // Some responses such as 204s do not have content.  We must check.
                if (httpResponse.getEntity() != null) {
                    responseContents = entityToBytes(httpResponse.getEntity());
                } else {
                    // Add 0 byte response as a way of honestly representing a
                    // no-content request.
                    responseContents = new byte[0];
                }

                // if the request is slow, log it.
                long requestLifetime = SystemClock.elapsedRealtime() - requestStart;
                logSlowRequests(requestLifetime, request, responseContents, statusLine);

                if (statusCode < 200 || statusCode > 299) {
                    throw new IOException();
                }
                return new NetworkResponse(statusCode, responseContents, responseHeaders, false,
                        SystemClock.elapsedRealtime() - requestStart);
            } catch (SocketTimeoutException e) {
                ...... // Code omitted
            }
        }
    }
}
```

This code handles the details of the network request. Note that it calls HttpStack's performRequest(). The HttpStack here is the instance created in newRequestQueue() at the beginning: by default a HurlStack if the SDK version is 9 or higher, otherwise an HttpClientStack. Internally these use HttpURLConnection and HttpClient, respectively, to send the request. The data returned by the server is then wrapped in a NetworkResponse object and returned.
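The status-code handling in performRequest() reduces to a small function. This hypothetical model drops the HttpStack call, header merging, and retry logic and keeps only the three outcomes visible in the listing: a 304 reuses the cached bytes, a 2xx returns the body (zero bytes when there is none), and any other status becomes an IOException, which Volley handles in catch blocks omitted from the listing.

```java
import java.io.IOException;

// Hypothetical model of the status-code handling in BasicNetwork.performRequest().
final class StatusHandling {
    static final int SC_NOT_MODIFIED = 304;

    /** Simplified stand-in for NetworkResponse. */
    static final class Result {
        final int statusCode;
        final byte[] data;
        final boolean notModified;

        Result(int statusCode, byte[] data, boolean notModified) {
            this.statusCode = statusCode;
            this.data = data;
            this.notModified = notModified;
        }
    }

    /**
     * @param body       response body, or null when the response had no entity
     * @param cachedData bytes of the existing cache entry, or null if there is none
     */
    static Result toResponse(int statusCode, byte[] body, byte[] cachedData) throws IOException {
        if (statusCode == SC_NOT_MODIFIED) {
            // The server says our copy is still valid: reuse the cached bytes (may be null).
            return new Result(SC_NOT_MODIFIED, cachedData, true);
        }
        // Responses such as 204 have no body; represent that as zero bytes.
        byte[] contents = body != null ? body : new byte[0];
        if (statusCode < 200 || statusCode > 299) {
            throw new IOException("Unexpected status " + statusCode);
        }
        return new Result(statusCode, contents, false);
    }
}
```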

After NetworkDispatcher receives the NetworkResponse, it calls Request's parseNetworkResponse() to parse the data and then writes the result to the cache. This method is implemented by subclasses of Request, because each kind of Request parses its data differently. If you write a custom Request, you must override parseNetworkResponse().
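A custom request mainly supplies the parse step. The sketch below uses hypothetical MiniRequest and MiniStringRequest classes, not Volley's types, to show the shape: raw bytes plus headers in, a typed value out. The charset handling (read charset= from Content-Type, fall back to ISO-8859-1) follows what I understand Volley's StringRequest to do, but treat it as an assumption.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Map;

// Hypothetical model of the hook a custom Request overrides: bytes + headers -> T.
abstract class MiniRequest<T> {
    /** In Volley's design this runs on a worker thread, so heavy parsing is fine. */
    abstract T parseNetworkResponse(byte[] data, Map<String, String> headers);
}

final class MiniStringRequest extends MiniRequest<String> {
    @Override
    String parseNetworkResponse(byte[] data, Map<String, String> headers) {
        return new String(data, charsetOf(headers));
    }

    /** Reads "charset=..." from Content-Type; falls back to ISO-8859-1. */
    static Charset charsetOf(Map<String, String> headers) {
        String contentType = headers.get("Content-Type");
        if (contentType != null) {
            for (String param : contentType.split(";")) {
                String[] pair = param.trim().split("=", 2);
                if (pair.length == 2 && pair[0].equalsIgnoreCase("charset")) {
                    try {
                        return Charset.forName(pair[1].trim());
                    } catch (IllegalArgumentException e) {
                        break; // unknown or malformed charset name: use the fallback
                    }
                }
            }
        }
        return StandardCharsets.ISO_8859_1;
    }
}
```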

After the data in NetworkResponse has been parsed, ExecutorDelivery's postResponse() is called to deliver the parsed result. The code is as follows:

1. In the NetworkDispatcher class:

```java
private final ResponseDelivery mDelivery;

// Parse the response here on the worker thread.
Response<?> response = request.parseNetworkResponse(networkResponse); // Parse the data
request.addMarker("network-parse-complete");
mDelivery.postResponse(request, response); // Deliver the parsed data (implemented below)
```

2. In the ExecutorDelivery class, the parsed data is posted back:

```java
public class ExecutorDelivery implements ResponseDelivery {
    @Override
    public void postResponse(Request<?> request, Response<?> response) {
        postResponse(request, response, null);
    }

    @Override
    public void postResponse(Request<?> request, Response<?> response, Runnable runnable) {
        request.markDelivered();
        request.addMarker("post-response");
        mResponsePoster.execute(new ResponseDeliveryRunnable(request, response, runnable));
    }
}
```
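ExecutorDelivery does not call the listener itself; it hands a runnable to mResponsePoster, an Executor. In the standard Volley setup that Executor posts to a Handler on the main Looper (from my reading of Volley, not shown in the listing above). The plain-JDK sketch below models the same thread hop with a hypothetical DeliveryModel class, using a single-thread executor named "fake-main" in place of Android's main thread.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Consumer;

// Hypothetical model of ExecutorDelivery: work finished on a worker thread is
// handed to an executor that runs every callback on one designated thread.
final class DeliveryModel {
    private final ExecutorService poster = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "fake-main"); // stands in for Android's main thread
        t.setDaemon(true);
        return t;
    });

    /** May be called from any thread; the listener always runs on "fake-main". */
    <T> Future<?> postResponse(T result, Consumer<T> listener) {
        return poster.submit(() -> listener.accept(result));
    }
}
```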

A ResponseDeliveryRunnable is passed to mResponsePoster's execute() method, which ensures that the runnable's run() method executes on the main thread. Let's see what run() looks like:

```java
private class ResponseDeliveryRunnable implements Runnable {

    @SuppressWarnings("unchecked")
    @Override
    public void run() {
        // If this request has canceled, finish it and don't deliver.
        if (mRequest.isCanceled()) {
            mRequest.finish("canceled-at-delivery");
            return;
        }

        // Deliver a normal response or error, depending.
        if (mResponse.isSuccess()) {
            mRequest.deliverResponse(mResponse.result);
        } else {
            mRequest.deliverError(mResponse.error);
        }

        // If this is an intermediate response, add a marker, otherwise we're done
        // and the request can be finished.
        if (mResponse.intermediate) {
            mRequest.addMarker("intermediate-response");
        } else {
            mRequest.finish("done");
        }

        // If we have been provided a post-delivery runnable, run it.
        if (mRunnable != null) {
            mRunnable.run();
        }
    }
}
```

The key call here is Request's deliverResponse(), the other method you must override when writing a custom Request. Every successful network response is passed to this method, and it in turn passes the response data to the onResponse() method of Response.Listener.
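The branching in ResponseDeliveryRunnable.run() can be checked in isolation. DeliveryDecision, FakeRequest, and Listener are hypothetical simplifications: a null error means success (mirroring Response.isSuccess()), and finish() only records a marker.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simplification of ResponseDeliveryRunnable.run().
final class DeliveryDecision {
    /** Stand-in for Response.Listener plus Response.ErrorListener. */
    interface Listener<T> {
        void onResponse(T response);
        void onError(String error);
    }

    /** Stand-in for a Request: a cancel flag, a listener, and recorded markers. */
    static final class FakeRequest<T> {
        boolean canceled;
        final List<String> markers = new ArrayList<>();
        final Listener<T> listener;

        FakeRequest(Listener<T> listener) {
            this.listener = listener;
        }

        void finish(String tag) {
            markers.add("finish:" + tag);
        }
    }

    /** error == null means success. */
    static <T> void deliver(FakeRequest<T> request, T result, String error,
                            boolean intermediate, Runnable after) {
        if (request.canceled) {
            request.finish("canceled-at-delivery"); // never reaches the listener
            return;
        }
        if (error == null) {
            request.listener.onResponse(result); // deliverResponse -> onResponse
        } else {
            request.listener.onError(error);     // deliverError -> error listener
        }
        if (intermediate) {
            request.markers.add("intermediate-response"); // request stays open
        } else {
            request.finish("done");
        }
        if (after != null) {
            after.run(); // e.g. forward a soft-expired hit to the network queue
        }
    }
}
```

For a soft-expired cache hit, the intermediate flag keeps the request open and the after runnable forwards it to the network, matching the CacheDispatcher branch shown earlier.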

5. Retrofit

Also a Square product. Retrofit wraps OkHttp internally and solves the problems of network data parsing and thread switching.