Processes, Threads, Programs

A process is the smallest unit of resource allocation in the system. It is a running instance of a program, and it also serves as a container for threads.

A thread is the smallest unit that the operating system can schedule. Threads are the actual units of execution within a process; each thread is one path of execution through the process. A process can have multiple threads.

A program is static code stored on disk. It becomes a process only when it is loaded and run.
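
As a minimal sketch of "one process, several threads": the JVM below is the process, and `runWorkers` starts a few threads inside it. The class name `ThreadsInOneProcess`, the method `runWorkers`, and the `worker-N` thread names are made up for this illustration.

```java
import java.util.ArrayList;
import java.util.List;

class ThreadsInOneProcess {
    // Starts n threads inside the current process (the JVM) and
    // returns the names of the threads that ran.
    static List<String> runWorkers(int n) {
        List<String> names = new ArrayList<>();
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            Thread t = new Thread(() -> {
                synchronized (names) { // all threads share the process's heap
                    names.add(Thread.currentThread().getName());
                }
            }, "worker-" + i);
            workers.add(t);
            t.start();
        }
        for (Thread t : workers) {
            try {
                t.join(); // wait for each thread to finish
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return names;
    }

    public static void main(String[] args) {
        // The order of the names may differ from run to run.
        System.out.println(runWorkers(3));
    }
}
```

All three threads write to the same list, which is possible because threads in one process share its memory.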

Parallelism and Concurrency

Parallelism means that multiple tasks are executing at the same instant, usually on a multicore processor.

Concurrency means that multiple tasks make progress within the same period of time, but at any single instant only one of them is actually executing.

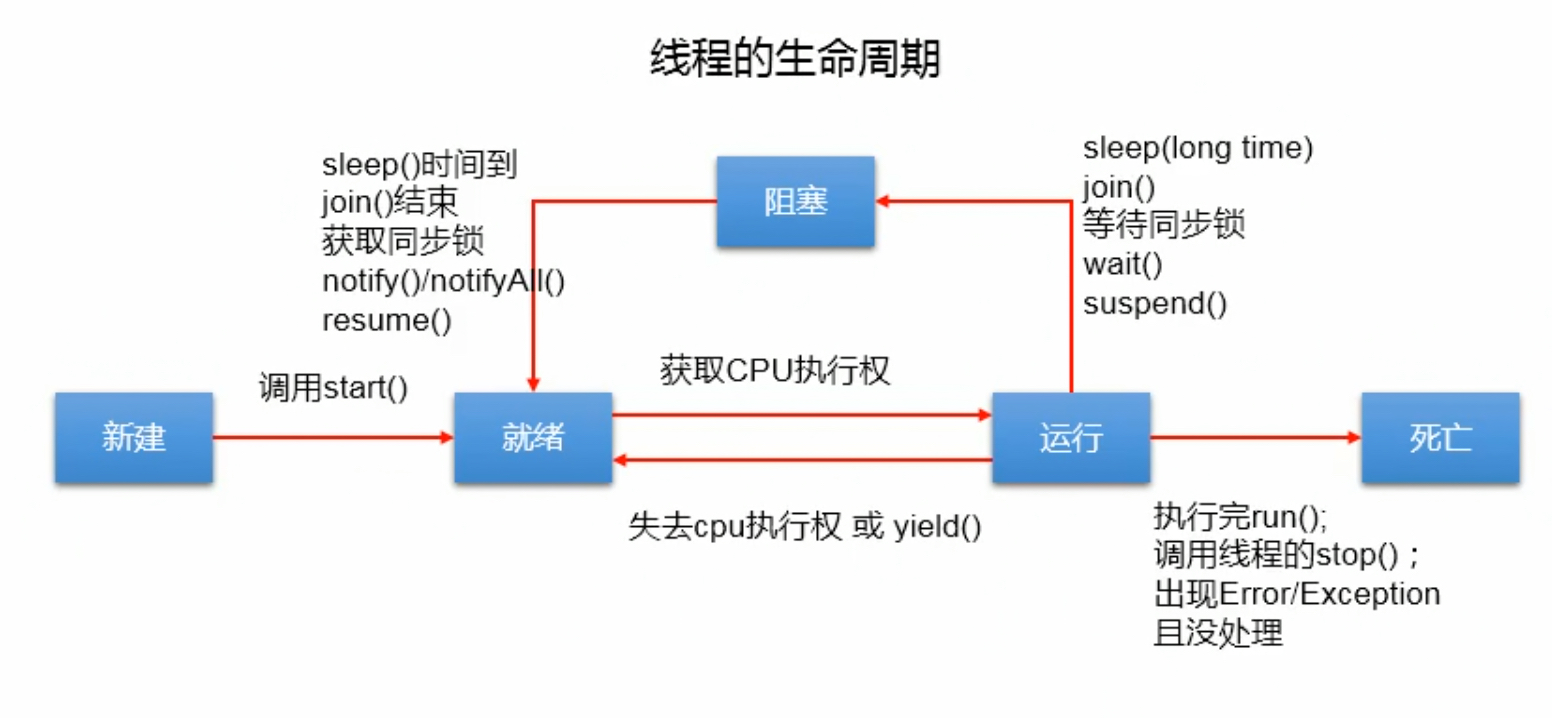

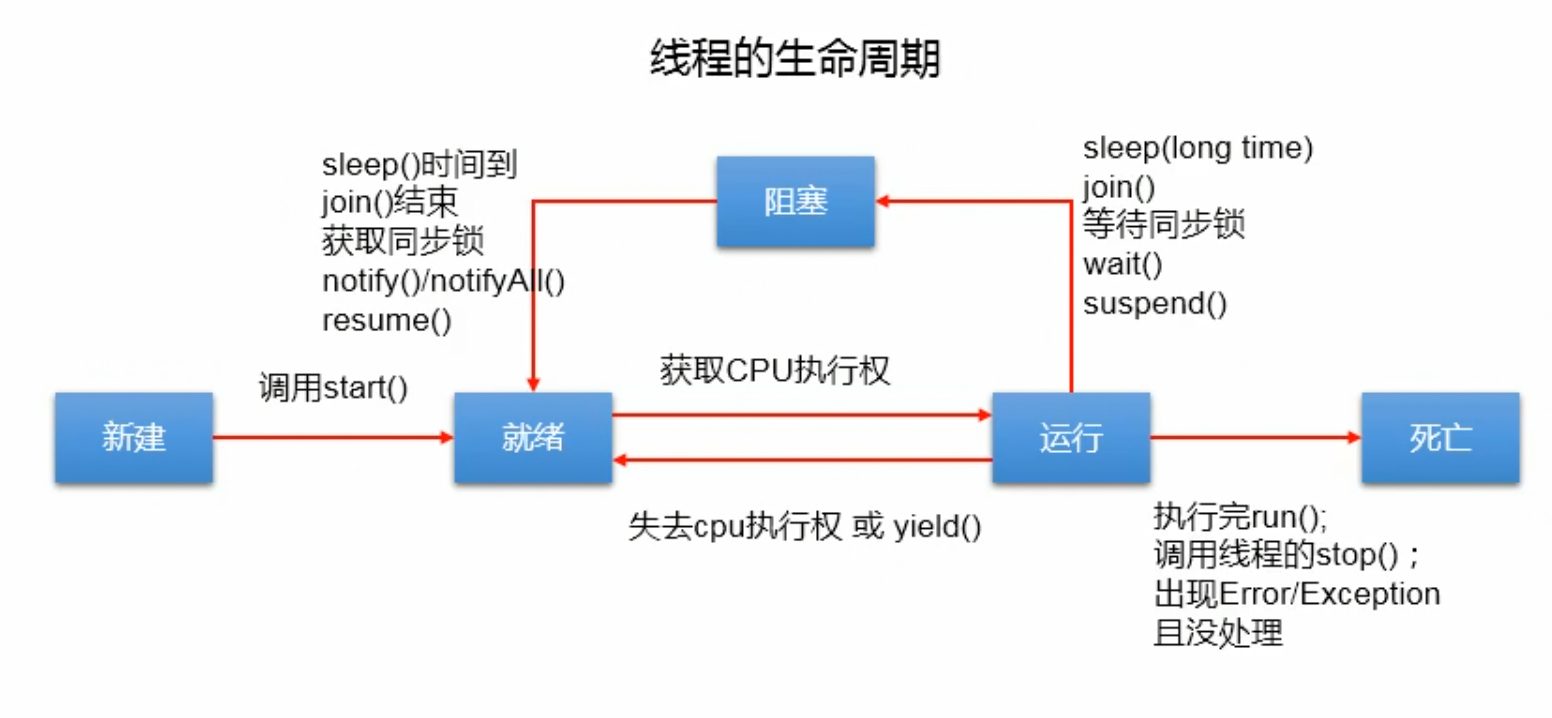

The state of the thread

Similarities and differences between sleep and wait

Same: both block the current thread, and both can be interrupted (they throw InterruptedException).

Different: where they are declared. sleep() is a static method of the Thread class; wait() is a method of the Object class.

The call requirements are different. sleep() can be called anywhere, because any running code executes on some thread. wait() can only be called while holding the object's lock, that is, inside a synchronized block or method.

sleep() does not release the lock; wait() releases the lock.
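
The two wait() rules above can be checked with a small sketch. The class `SleepVsWait` and both method names are hypothetical; only `Object.wait`, `Object.notifyAll`, and `IllegalMonitorStateException` come from the JDK.

```java
class SleepVsWait {
    // Returns true if calling wait() without holding the lock is rejected.
    static boolean waitNeedsMonitor() {
        Object lock = new Object();
        try {
            lock.wait(10); // not inside synchronized (lock)
            return false;
        } catch (IllegalMonitorStateException e) {
            return true;   // the JVM refuses the call
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    // Returns true if another thread was able to take the lock
    // while the calling thread was inside wait().
    static boolean waitReleasesLock() {
        Object lock = new Object();
        boolean[] entered = {false}; // guarded by lock
        Thread other = new Thread(() -> {
            synchronized (lock) {    // succeeds only once the waiter gives up the lock
                entered[0] = true;
                lock.notifyAll();
            }
        });
        synchronized (lock) {
            other.start();
            try {
                while (!entered[0]) {
                    lock.wait();     // releases the lock until notified
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
            return entered[0];
        }
    }

    public static void main(String[] args) {
        System.out.println(waitNeedsMonitor());
        System.out.println(waitReleasesLock());
    }
}
```

If `lock.wait()` were replaced by `Thread.sleep(...)` in `waitReleasesLock`, the other thread could never enter the synchronized block, because sleep() keeps the lock.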

Communication between threads

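Inter-thread communication with synchronized

wait(): releases the lock and blocks the current thread

notify(): wakes up one thread waiting on the object; which one is chosen is arbitrary and does not depend on priority

notifyAll(): wakes up all threads waiting on the object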

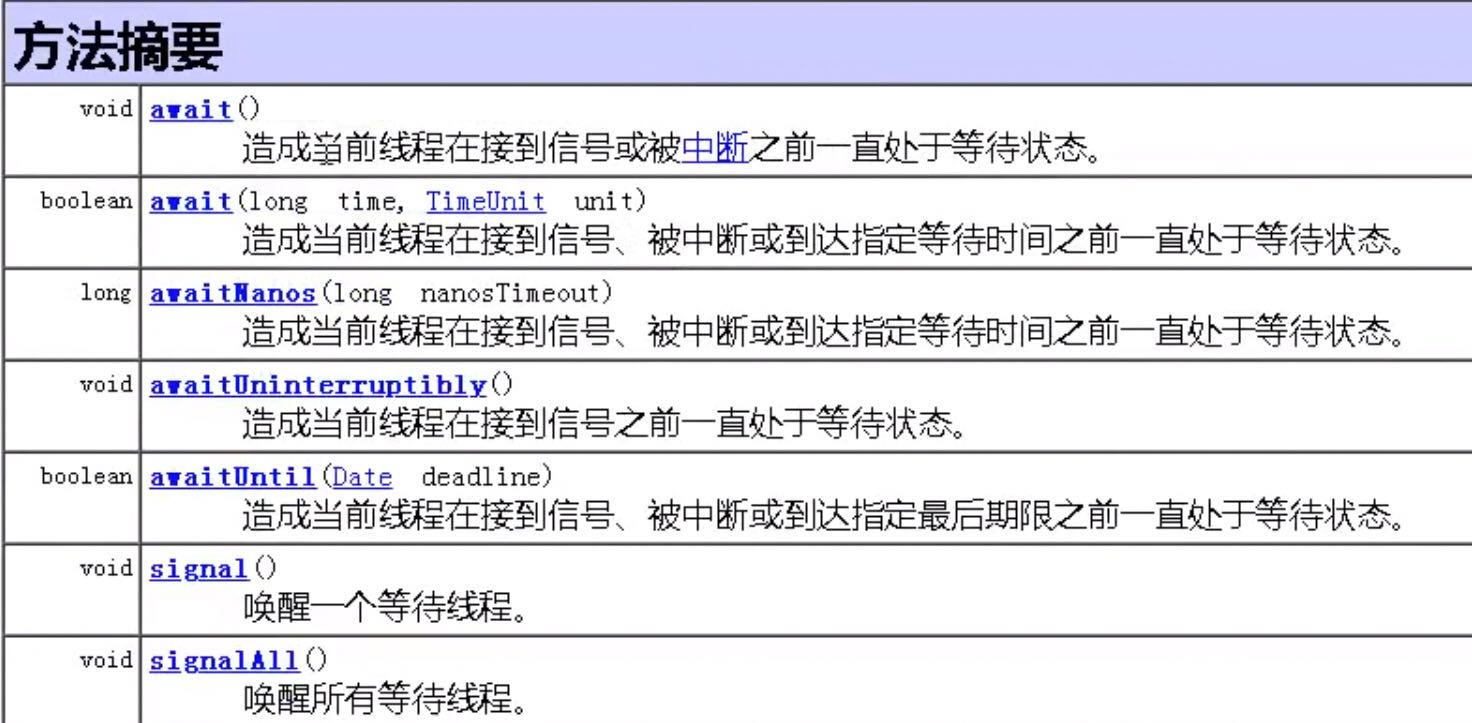
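
A minimal sketch of wait()/notifyAll() in use, assuming a one-slot mailbox shared by a producer and a consumer. `Mailbox` and `WaitNotifyDemo` are hypothetical names, not taken from the image above.

```java
class Mailbox {
    private String message; // guarded by this; null means "empty"

    synchronized void put(String m) throws InterruptedException {
        while (message != null) {
            wait();         // slot is full: release the lock and block
        }
        message = m;
        notifyAll();        // wake up any thread waiting in take()
    }

    synchronized String take() throws InterruptedException {
        while (message == null) {
            wait();         // slot is empty: release the lock and block
        }
        String m = message;
        message = null;
        notifyAll();        // wake up any thread waiting in put()
        return m;
    }
}

class WaitNotifyDemo {
    // Hands one message from a producer thread to the calling thread.
    static String exchange(String text) {
        Mailbox box = new Mailbox();
        Thread producer = new Thread(() -> {
            try {
                box.put(text);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        try {
            String got = box.take();
            producer.join();
            return got;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(exchange("hello"));
    }
}
```

The condition is re-checked in a `while` loop rather than an `if`, because a thread can wake up without the condition it was waiting for being true.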

Thread communication with Lock

With a Lock, obtain a Condition from newCondition() and call its await(), signal(), and signalAll() methods.

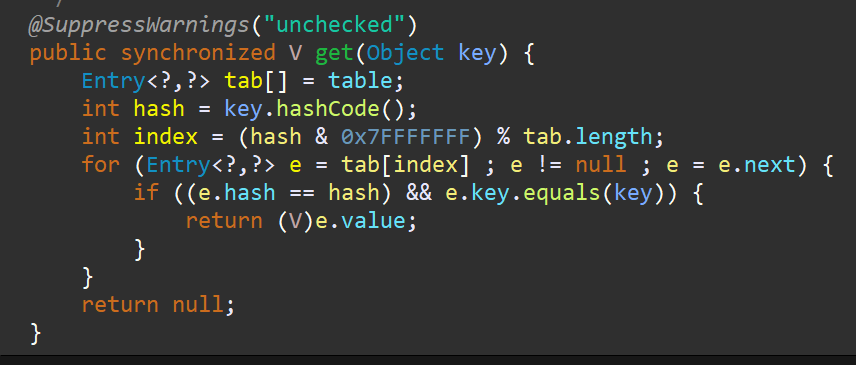
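
As a sketch of the Condition methods, the example below makes two threads take turns appending to a shared buffer. `Alternator`, `ConditionDemo`, and the field names are assumptions made for this illustration; `ReentrantLock` is used as the Lock implementation.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class Alternator {
    private final Lock lock = new ReentrantLock();
    private final Condition turnChanged = lock.newCondition();
    private final StringBuilder out = new StringBuilder(); // guarded by lock
    private boolean firstTurn = true;                      // guarded by lock

    // Appends c once per round, but only when it is this caller's turn.
    void append(char c, boolean isFirst, int rounds) {
        for (int i = 0; i < rounds; i++) {
            lock.lock();
            try {
                while (firstTurn != isFirst) {
                    turnChanged.await();   // releases the lock while waiting
                }
                out.append(c);
                firstTurn = !isFirst;      // hand the turn to the other thread
                turnChanged.signalAll();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            } finally {
                lock.unlock();
            }
        }
    }

    String result() {
        lock.lock();
        try {
            return out.toString();
        } finally {
            lock.unlock();
        }
    }
}

class ConditionDemo {
    static String run(int rounds) {
        Alternator alt = new Alternator();
        Thread a = new Thread(() -> alt.append('A', true, rounds));
        Thread b = new Thread(() -> alt.append('B', false, rounds));
        b.start(); // start order does not matter; the turn flag decides
        a.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return alt.result();
    }

    public static void main(String[] args) {
        System.out.println(run(3)); // prints ABABAB
    }
}
```

await() plays the role of wait(), and signalAll() the role of notifyAll(). The difference is that one Lock can hand out several Condition objects, so different groups of waiting threads can be woken separately.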

Thread safety of collections

Vector and Hashtable

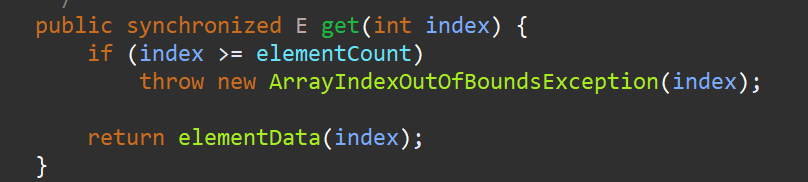

Vector is made thread-safe with the synchronized keyword, and it performs relatively poorly because every synchronized call pays the cost of acquiring the lock.

The same is true of Hashtable.

Vector and Hashtable put the synchronized keyword on their read methods as well as on write methods such as add, so even two reads exclude each other.

To solve this problem, the CopyOnWriteArrayList and ConcurrentHashMap collections were introduced. They read without locking or lock at a finer granularity, and they generally perform better when reads dominate.
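
A small sketch of the two replacement collections under concurrent writes. The class `ConcurrentCollectionsDemo`, the method `countHits`, and the `"page"` key are invented for the example.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

class ConcurrentCollectionsDemo {
    // Each of `threads` threads records `perThread` hits under the same key.
    // Returns the final counter value.
    static int countHits(int threads, int perThread) {
        Map<String, Integer> hits = new ConcurrentHashMap<>();
        List<String> finished = new CopyOnWriteArrayList<>();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    hits.merge("page", 1, Integer::sum); // atomic read-modify-write
                }
                finished.add(Thread.currentThread().getName()); // copies the array on write
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return hits.getOrDefault("page", 0);
    }

    public static void main(String[] args) {
        System.out.println(countHits(4, 1000)); // prints 4000
    }
}
```

CopyOnWriteArrayList copies its backing array on every write, so it suits lists that are read far more often than they are modified.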

Auxiliary Classes in JUC

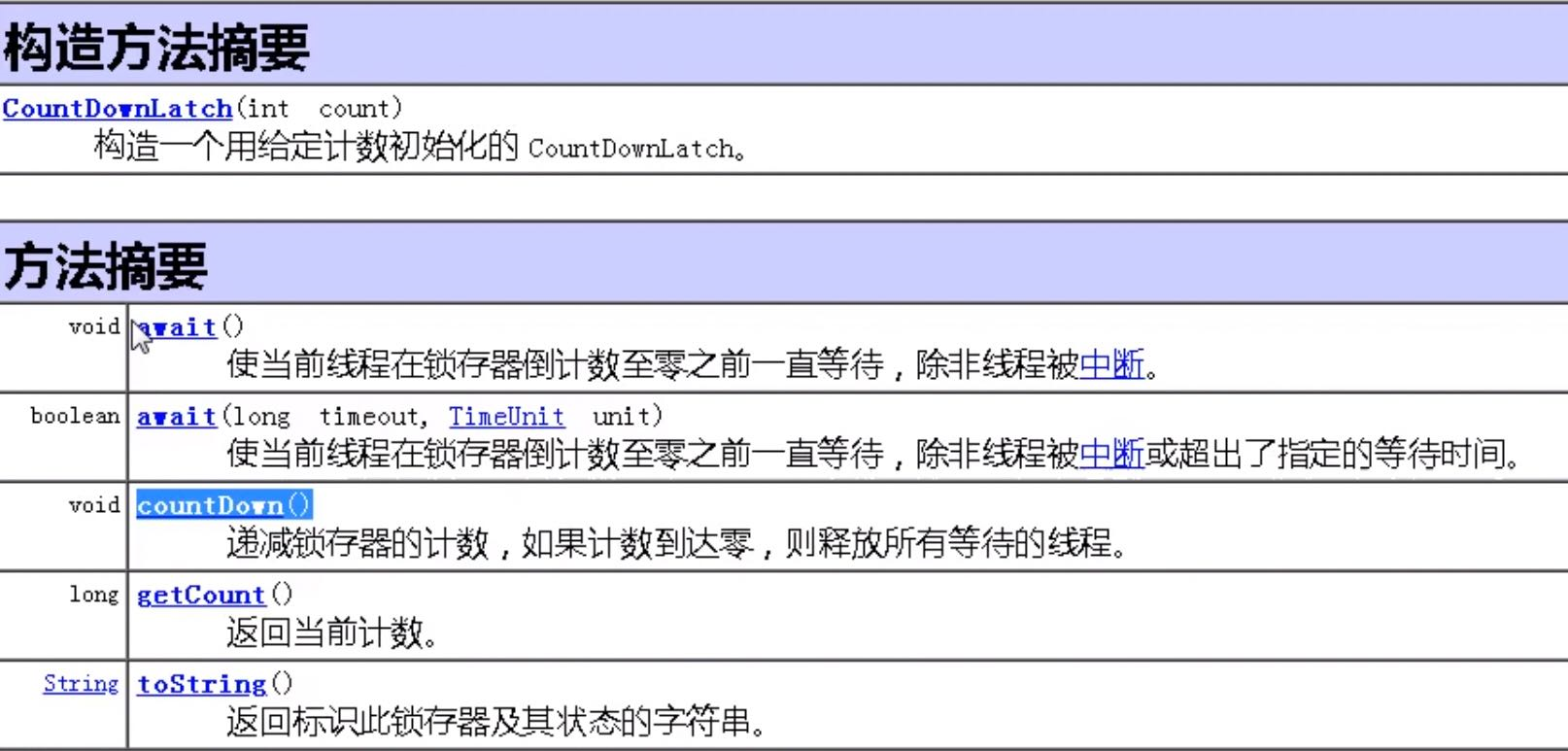

CountDownLatch

Method Summary:

The idea is to set a counter and then call await(), which blocks until the counter has been counted down to zero before execution continues.

    package juc.second;

    import java.util.concurrent.CountDownLatch;

    class Foo2 {
        // The latch opens once countDown() has been called twice.
        private final CountDownLatch count = new CountDownLatch(2);

        // Blocks until the latch reaches zero, then continues.
        public void first() {
            try {
                count.await();
                System.out.println(Thread.currentThread().getName() + " Start running");
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt status
            }
        }

        // Counts down x times, pausing one second after each step.
        // Calls made after the count has reached zero have no effect.
        public void down(int x) {
            for (int i = 0; i < x; i++) {
                count.countDown();
                System.out.println(count.getCount());
                System.out.println(Thread.currentThread().getName() + " Take a break");
                try {
                    Thread.sleep(1000);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
            }
        }
    }

    public class CountDownLatchDemo {
        public static void main(String[] args) {
            Foo2 foo = new Foo2();
            new Thread(foo::first).start();          // waits on the latch
            new Thread(() -> foo.down(10)).start();  // opens it after two steps
        }
    }

CyclicBarrier

Set a value x. Once x threads are waiting at the barrier, they all break through it together.

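    package juc.second;

    import java.util.concurrent.BrokenBarrierException;
    import java.util.concurrent.CyclicBarrier;

    class Foo3 {
        // Announces arrival, then waits until enough threads have reached the barrier.
        public void first(CyclicBarrier barrier) {
            try {
                System.out.println(Thread.currentThread().getName() + " Here we are");
                barrier.await();
                // System.out.println(Thread.currentThread().getName() + " Gone");
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt status
            } catch (BrokenBarrierException e) {
                e.printStackTrace();
            }
        }
    }

    public class CyclicBarrierDemo {
        public static void main(String[] args) {
            Foo3 foo = new Foo3();
            // The barrier action runs each time 7 threads have arrived.
            CyclicBarrier barrier = new CyclicBarrier(7, () -> System.out.println("break through"));
            // 14 threads, so the barrier trips twice (it resets after each trip).
            for (int i = 0; i < 14; i++) {
                new Thread(() -> foo.first(barrier)).start();
            }
        }
    }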

Semaphore

Semaphore is a synchronization tool introduced in Java 1.5. It maintains a set of permits that limits how many threads can access a resource at the same time. It has two main methods.

acquire() obtains a permit, blocking until one is available.

release() returns a permit.

As a simple example, an Internet cafe has 5 machines but 10 customers. When the cafe is full, someone has to leave their machine before the next customer can get in.

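    import java.util.Random;
    import java.util.concurrent.Semaphore;

    class Foo4 {
        // 5 permits: one per machine in the Internet cafe.
        private final Semaphore semaphore = new Semaphore(5);
        private final Random rand = new Random();

        public void getComputer() {
            try {
                semaphore.acquire(); // blocks while all machines are taken
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return; // no permit was acquired, so there is nothing to release
            }
            try {
                System.out.println(Thread.currentThread().getName() + " Get a computer");
                Thread.sleep(rand.nextInt(5000));
                System.out.println(Thread.currentThread().getName() + " Down");
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                semaphore.release(); // always hand the machine back
            }
        }
    }

    public class SemaphoreDemo {
        public static void main(String[] args) {
            Foo4 foo = new Foo4();
            // 10 customers compete for the 5 machines.
            for (int i = 0; i < 10; i++) {
                new Thread(foo::getComputer).start();
            }
        }
    }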

Thread Pool

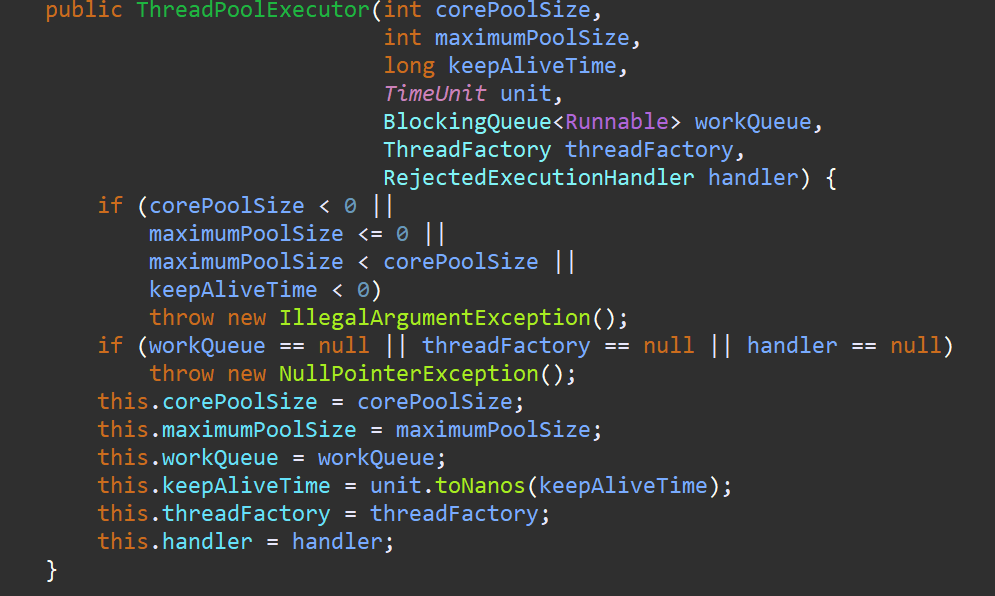

This is the official Javadoc comment on the ThreadPoolExecutor constructor:

    /**
     * Creates a new {@code ThreadPoolExecutor} with the given initial
     * parameters.
     *
     * @param corePoolSize the number of threads to keep in the pool, even
     *        if they are idle, unless {@code allowCoreThreadTimeOut} is set
     * @param maximumPoolSize the maximum number of threads to allow in the
     *        pool
     * @param keepAliveTime when the number of threads is greater than
     *        the core, this is the maximum time that excess idle threads
     *        will wait for new tasks before terminating.
     * @param unit the time unit for the {@code keepAliveTime} argument
     * @param workQueue the queue to use for holding tasks before they are
     *        executed.  This queue will hold only the {@code Runnable}
     *        tasks submitted by the {@code execute} method.
     * @param threadFactory the factory to use when the executor
     *        creates a new thread
     * @param handler the handler to use when execution is blocked
     *        because the thread bounds and queue capacities are reached
     * @throws IllegalArgumentException if one of the following holds:<br>
     *         {@code corePoolSize < 0}<br>
     *         {@code keepAliveTime < 0}<br>
     *         {@code maximumPoolSize <= 0}<br>
     *         {@code maximumPoolSize < corePoolSize}
     * @throws NullPointerException if {@code workQueue}
     *         or {@code threadFactory} or {@code handler} is null
     */

The first four parameters are the number of core threads, the maximum number of threads, the keep-alive time for idle threads, and its time unit. The last three parameters are the blocking queue, the thread factory, and the rejection policy.
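
The seven parameters can be seen together in a minimal sketch. The sizes (2, 4, 30 seconds, queue capacity 10), the `demo-pool-N` thread names, and the class `PoolDemo` are arbitrary choices for illustration, not recommended settings.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class PoolDemo {
    static ThreadPoolExecutor newPool() {
        AtomicInteger seq = new AtomicInteger();
        // Thread factory: here it only gives the pool's threads readable names.
        ThreadFactory factory = r -> new Thread(r, "demo-pool-" + seq.incrementAndGet());
        return new ThreadPoolExecutor(
                2,                                     // corePoolSize
                4,                                     // maximumPoolSize
                30L,                                   // keepAliveTime
                TimeUnit.SECONDS,                      // unit
                new ArrayBlockingQueue<>(10),          // workQueue
                factory,                               // threadFactory
                new ThreadPoolExecutor.AbortPolicy()); // handler (rejection policy)
    }

    // Runs one task on a fresh pool and returns the name of the thread that ran it.
    static String runOneTask() {
        ThreadPoolExecutor pool = newPool();
        Callable<String> task = () -> Thread.currentThread().getName();
        try {
            return pool.submit(task).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(runOneTask()); // prints demo-pool-1
    }
}
```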

Blocking Queue

A blocking queue is a queue that manages for us when a thread is blocked and when it is woken up.

For example, in the producer-consumer model, a producer adds data to a blocking queue and a consumer takes data from it. The queue itself blocks the producer when it is full and the consumer when it is empty, so we do not have to manage thread blocking and wake-ups ourselves.
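
The producer-consumer model described above might look like the following sketch, which uses `ArrayBlockingQueue` with a deliberately small capacity of 2 so that the producer has to block. `BlockingQueueDemo` and `produceAndConsume` are hypothetical names.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class BlockingQueueDemo {
    // A producer thread puts 1..items into the queue; the calling thread
    // consumes them and returns their sum.
    static int produceAndConsume(int items) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= items; i++) {
                    queue.put(i);        // blocks while the queue is full
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int sum = 0;
        try {
            for (int i = 0; i < items; i++) {
                sum += queue.take();     // blocks while the queue is empty
            }
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(produceAndConsume(10)); // prints 55
    }
}
```

Neither thread calls wait() or notify() directly; put() and take() do the blocking and waking internally.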

Thread Factory

Rejection Policy

AbortPolicy (default): Throws a RejectedExecutionException directly, which interrupts the normal flow of the submitting code.

CallerRunsPolicy: The rejected task is neither discarded nor answered with an exception. Instead it is run on the thread that submitted it, which slows down the flow of new tasks.

DiscardOldestPolicy: Discards the task that has been waiting longest in the queue, then tries to submit the current task again.

DiscardPolicy: Silently discards the task that cannot be handled. It does nothing else and throws no exception.
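
To see the policies in action, the sketch below saturates a deliberately tiny pool (one thread, one queue slot) and then submits one more task. The class `RejectionDemo`, the method `submitToFullPool`, and the returned strings `"rejected"` and `"not run"` are made up for the example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class RejectionDemo {
    // Fills a 1-thread pool with a 1-slot queue, submits one extra task,
    // and reports what happened to that extra task.
    static String submitToFullPool(RejectedExecutionHandler handler) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(1),
                Executors.defaultThreadFactory(),
                handler);
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await(); // keeps the worker busy until released
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        String[] ranOn = {"not run"};
        try {
            pool.execute(blocker); // occupies the only worker thread
            pool.execute(blocker); // fills the only queue slot
            pool.execute(() -> ranOn[0] = Thread.currentThread().getName()); // overflow
            return ranOn[0];
        } catch (RejectedExecutionException e) {
            return "rejected";
        } finally {
            release.countDown();   // let the blocked tasks finish
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(submitToFullPool(new ThreadPoolExecutor.AbortPolicy()));      // rejected
        System.out.println(submitToFullPool(new ThreadPoolExecutor.CallerRunsPolicy())); // main
        System.out.println(submitToFullPool(new ThreadPoolExecutor.DiscardPolicy()));    // not run
    }
}
```

With CallerRunsPolicy the extra task runs on the submitting thread, which is why the second line shows the name of the thread that called `execute`.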