1. The problem of Kafka's own logs growing too large

After Kafka has been running for a while, you may notice that disk usage on its host keeps creeping up, even though the topic data is still within the retention threshold you configured earlier.

In that case, the growth usually comes from Kafka's own application logs (such as server.log), not from the topic data.

The default ~/kafka_2.11-0.9.0.0/config/log4j.properties looks like this:

log4j.rootLogger=INFO, stdout
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=[%d] %p %m (%c)%n
log4j.appender.kafkaAppender=org.apache.log4j.DailyRollingFileAppender
log4j.appender.kafkaAppender.DatePattern='.'yyyy-MM-dd-HH
log4j.appender.kafkaAppender.File=${kafka.logs.dir}/server.log
log4j.appender.kafkaAppender.layout=org.apache.log4j.PatternLayout
log4j.appender.kafkaAppender.layout.ConversionPattern=[%d] %p %m (%c)%n
...
...
...

As the configuration shows, Kafka's own logs are rolled over every hour (DatePattern '.'yyyy-MM-dd-HH), but nothing ever deletes the old files. They pile up indefinitely, which can distort any estimate of how much disk the data itself is using. Usually we only want to keep the last n days of these logs. log4j's DailyRollingFileAppender has no setting for this, but there are two ways to achieve it without changing the source code:

1. Write a crontab job that deletes old log files automatically;

2. Modify log4j.properties so that old logs are discarded automatically based on file size.
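For the first approach, the job that cron runs could look like the following minimal Python sketch. The script, the function purge_rolled_logs, and its arguments are hypothetical. It assumes the hourly file-name suffix produced by the DatePattern above (for example server.log.2016-01-01-13) and deletes rolled files older than keep_days, leaving the active server.log untouched.

```python
import datetime as dt
import sys
from pathlib import Path

# Suffix written by DatePattern='.'yyyy-MM-dd-HH, e.g. server.log.2016-01-01-13
SUFFIX_FORMAT = "%Y-%m-%d-%H"


def purge_rolled_logs(log_dir, keep_days, base_name="server.log", now=None):
    """Delete rolled-over logs older than keep_days and return the removed paths.

    The active file (base_name itself) has no date suffix and is never touched.
    """
    now = now or dt.datetime.now()
    cutoff = now - dt.timedelta(days=keep_days)
    removed = []
    for path in sorted(Path(log_dir).glob(base_name + ".*")):
        suffix = path.name[len(base_name) + 1:]
        try:
            rolled_at = dt.datetime.strptime(suffix, SUFFIX_FORMAT)
        except ValueError:
            continue  # not a file produced by this DatePattern; leave it alone
        if rolled_at < cutoff:
            path.unlink()
            removed.append(path)
    return removed


# Hypothetical command line: purge_kafka_logs.py <log_dir> <keep_days>
if __name__ == "__main__" and len(sys.argv) == 3:
    purge_rolled_logs(sys.argv[1], int(sys.argv[2]))
```

A crontab entry such as `0 * * * * python3 /opt/scripts/purge_kafka_logs.py /path/to/kafka/logs 7` (paths are placeholders) would run it hourly. Other appenders writing to the same directory, if any, can be handled by passing a different base_name.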

log4j.rootLogger=INFO, stdout
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=[%d] %p %m (%c)%n
log4j.appender.kafkaAppender=org.apache.log4j.RollingFileAppender
log4j.appender.kafkaAppender.append=true
log4j.appender.kafkaAppender.maxBackupIndex=2
log4j.appender.kafkaAppender.maxFileSize=5MB
log4j.appender.kafkaAppender.File=${kafka.logs.dir}/server.log
log4j.appender.kafkaAppender.layout=org.apache.log4j.PatternLayout
log4j.appender.kafkaAppender.layout.ConversionPattern=[%d] %p %m (%c)%n
...
...
...

With the configuration above, which I use in production, server.log rolls over once it reaches 5MB and at most two backups are kept (server.log.1 and server.log.2); anything older is discarded.
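Because RollingFileAppender keeps only the active file plus at most maxBackupIndex backups, the disk these logs can occupy is bounded. The small Python calculation below (the function name is illustrative) makes that bound explicit for the settings above. It is approximate: log4j checks the size after each write, so a file can slightly exceed maxFileSize before it rolls.

```python
# log4j 1.x reads "5MB" as 5 * 1024 * 1024 bytes
MB = 1024 * 1024


def max_rolling_footprint_bytes(max_file_size_bytes, max_backup_index):
    """Approximate upper bound on disk used by one RollingFileAppender.

    Counts the active file plus max_backup_index backups
    (server.log.1 .. server.log.N), each capped near max_file_size_bytes.
    """
    return (max_backup_index + 1) * max_file_size_bytes


# maxFileSize=5MB, maxBackupIndex=2 -> server.log, server.log.1, server.log.2
print(max_rolling_footprint_bytes(5 * MB, 2) // MB)  # -> 15
```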

log.retention.bytes applies per partition

The official description, "The maximum size of the log before deleting it", does not say which log it means. In fact, the limit applies to each partition's log, not to the topic as a whole.
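Since the limit is per partition, the disk a topic can use under size-based retention grows with its partition count, and every replica keeps its own copy. The following Python sketch gives a rough estimate; the function name and example numbers are illustrative assumptions. Because Kafka deletes whole segments, each partition can temporarily hold somewhat more than this figure.

```python
GB = 1024 ** 3


def topic_retention_bytes(retention_bytes_per_partition, partitions, replication_factor=1):
    """Rough cluster-wide disk bound for one topic under log.retention.bytes.

    Returns None for a negative limit (Kafka's default of -1 means no size limit).
    Segment-level deletion means each partition may briefly exceed the limit.
    """
    if retention_bytes_per_partition < 0:
        return None
    return retention_bytes_per_partition * partitions * replication_factor


# 1 GB per partition, 12 partitions, replication factor 3
print(topic_retention_bytes(1 * GB, 12, 3) // GB)  # -> 36
```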

The log.segment.delete.delay.ms setting

The amount of time to wait before deleting a file from the filesystem.

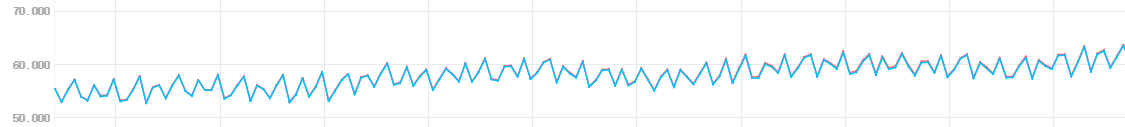

The default value is 60,000 ms (one minute). Once data exceeds the retention threshold, the affected segments stay on disk for this long before they are actually removed from the filesystem. So during performance tests with a very high write rate, monitoring the data directory will show it consistently above the threshold, simply because the expired data has not been deleted yet. In that situation, this delay can be set somewhat lower.
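The size of this lag can be estimated: at a steady write rate, roughly rate * delay bytes of already-expired data are still waiting to be removed. The Python sketch below is an approximation under that assumption (the function name is hypothetical). It ignores segment granularity and how often retention is checked (log.retention.check.interval.ms), both of which can make the real overshoot larger.

```python
MB = 1024 * 1024


def delayed_delete_backlog_bytes(write_rate_bytes_per_s, delete_delay_ms=60_000):
    """Rough amount of expired data still on disk because of the delete delay.

    Assumes a steady write rate, so data leaves retention at the same rate it
    arrives and each expired segment lingers for delete_delay_ms before removal.
    """
    return write_rate_bytes_per_s * delete_delay_ms // 1000


# 50 MB/s with the default 60,000 ms delay
print(delayed_delete_backlog_bytes(50 * MB) // MB)  # -> 3000
```

In this example, roughly 3,000 MB (about 2.9 GiB) above the threshold would be normal at the default delay, not a sign that retention is broken.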