1. Preparation before setup

1.1 Configuring passwordless SSH login

Prepare at least three servers. For example, mine are:

| Server name | IP |
|---|---|
| master | 81.68.172.91 |
| slave1 | 121.43.177.90 |
| slave2 | 114.132.222.63 |

- First, generate a key pair on the master server with the following command:

ssh-keygen

[root@master ~]$ ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/home/usera/.ssh/id_rsa):
Created directory '/home/usera/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/usera/.ssh/id_rsa.
Your public key has been saved in /home/usera/.ssh/id_rsa.pub.
The key fingerprint is:
39:f2:fc:70:ef:e9:bd:05:40:6e:64:b0:99:56:6e:01 usera@serverA
The key's randomart image is:
+--[ RSA 2048]----+
| Eo* |
| @ . |
| = * |
| o o . |
| . S . |
| + . . |
| + . .|
| + . o . |
| .o= o. |
+-----------------+

After running the command, press Enter at every prompt without typing anything. This accepts the default location and an empty passphrase. Two files are generated under ~/.ssh, i.e. /home/<login user name>/.ssh (or /root/.ssh for root):

- id_rsa: the private key

- id_rsa.pub: the public key (later copied to the other two servers and appended to their authorized_keys files)

- Copy the public key to each of the two slave servers with scp, which copies files between two networked hosts. For slave1 the command is scp ~/.ssh/id_rsa.pub username@121.43.177.90:~/ (repeat with 114.132.222.63 for slave2). After you press Enter, you are prompted for the login password of that user on the slave server.

- Log in to slave1 and slave2 and check whether each one already has an authorized_keys file (normally in the ~/.ssh directory). If not, create it and set its permissions to 600:

[usera@server1 ~]$ mkdir -p ~/.ssh && chmod 700 ~/.ssh

[usera@server1 ~]$ touch ~/.ssh/authorized_keys

[usera@server1 ~]$ chmod 600 ~/.ssh/authorized_keys

- On slave1 and slave2, append the id_rsa.pub public key uploaded from the master to the authorized_keys file:

[usera@server1 ~]$ cat id_rsa.pub >> ~/.ssh/authorized_keys

The master can now log in to both slaves with ssh and copy files to them with scp, without a password.
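OpenSSH ships ssh-copy-id, which performs the copy-and-append steps above in a single command run on the master (for example ssh-copy-id username@121.43.177.90). To do the append by hand on each slave, the function below is a minimal sketch of that step. It assumes bash and GNU coreutils, and the name install_pubkey is hypothetical. It only appends the key if it is not already present, and it applies the permissions sshd expects.

```shell
# Hypothetical helper: append a public key to authorized_keys only once,
# and apply the permissions sshd expects.
# Usage: install_pubkey <pubkey-file> [ssh-dir]   (ssh-dir defaults to ~/.ssh)
install_pubkey() {
  local pubkey_file="$1"
  local ssh_dir="${2:-$HOME/.ssh}"
  local auth_file="$ssh_dir/authorized_keys"
  local key

  key=$(cat "$pubkey_file") || return 1

  # With StrictModes (the sshd default), keys are ignored if these paths are
  # writable by group or others: use 700 for the directory, 600 for the file.
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
  touch "$auth_file" && chmod 600 "$auth_file" || return 1

  # -x: match the whole line, -F: treat the key as a fixed string
  if ! grep -qxF -- "$key" "$auth_file"; then
    # Ensure the current last line ends with a newline before appending
    if [ -s "$auth_file" ] && [ -n "$(tail -c 1 "$auth_file")" ]; then
      echo >> "$auth_file"
    fi
    printf '%s\n' "$key" >> "$auth_file"
  fi
}
```

On slave1, for example, run install_pubkey ~/id_rsa.pub after the scp step. Running it a second time does not duplicate the key.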

1.2 Installing the JDK

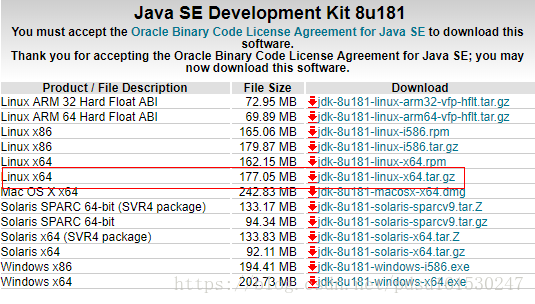

Download the Linux version of JDK 1.8 from:

http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Download the JDK archive, upload it to the server, and extract it:

[root@localhost /home/geek]# tar -zxvf jdk-8u181-linux-x64.tar.gz

Then rename the extracted directory (this archive extracts to jdk1.8.0_181) to jdk1.8:

[root@localhost /home/geek]# mv jdk1.8.0_181 jdk1.8

Then configure the JDK environment variables in /etc/profile:

[root@localhost /home/geek]# vim /etc/profile

Press i to enter insert mode and add the following lines at the end of the file:

export JAVA_HOME=/home/geek/jdk1.8 #jdk installation directory

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib:$CLASSPATH

export JAVA_PATH=${JAVA_HOME}/bin:${JRE_HOME}/bin

export PATH=$PATH:${JAVA_PATH}

Press Esc, then type :wq to save and exit.

Make the changes take effect in the current shell immediately:

[root@localhost /home/geek]# source /etc/profile

After installing the JDK on the master, copy the jdk1.8 directory to the same location on slave1 and slave2 with scp:

[root@localhost /home/geek]# scp -r jdk1.8 root@121.43.177.90:/home/geek

[root@localhost /home/geek]# scp -r jdk1.8 root@114.132.222.63:/home/geek

The -r option copies the whole jdk1.8 directory recursively. Afterwards, add the same environment variables to /etc/profile on each slave.
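On many distributions /etc/profile sources every *.sh file in /etc/profile.d for login shells. The same JDK variables can therefore live in a file of their own, which is easier to copy to the slaves along with the JDK. Below is a minimal sketch, assuming bash. The function write_jdk_profile and the file name jdk.sh are hypothetical.

```shell
# Hypothetical helper: write the JDK variables shown above into their own
# script (e.g. /etc/profile.d/jdk.sh) instead of editing /etc/profile.
# Usage: write_jdk_profile <java-home> <output-file>
write_jdk_profile() {
  local java_home="$1"
  local out="$2"

  # The heredoc is unquoted, so $java_home is expanded now; the escaped \$
  # references are written literally and expanded when the file is sourced.
  cat > "$out" <<EOF
export JAVA_HOME=$java_home
export JRE_HOME=\${JAVA_HOME}/jre
export CLASSPATH=.:\${JAVA_HOME}/lib:\${JRE_HOME}/lib:\$CLASSPATH
export JAVA_PATH=\${JAVA_HOME}/bin:\${JRE_HOME}/bin
export PATH=\$PATH:\${JAVA_PATH}
EOF
}
```

For example, run write_jdk_profile /home/geek/jdk1.8 /etc/profile.d/jdk.sh as root, then source /etc/profile and run java -version to confirm that the JDK is found.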

1.3 Downloading and configuring ZooKeeper

Download the ZooKeeper archive from the download page of the official ZooKeeper website. Note that two kinds of archive are offered there. The one with -bin in its name contains prebuilt binaries and can be used directly; this is the one we need. The one without -bin is the source release, which must be compiled before it can run.

After downloading, extract it on the master host:

[root@localhost /home/geek]# tar -zxvf apache-zookeeper-3.5.8-bin.tar.gz

Then rename the directory to zookeeper:

[root@localhost /home/geek]# mv apache-zookeeper-3.5.8-bin zookeeper

Then enter the conf directory and copy zoo_sample.cfg to zoo.cfg:

[root@localhost /home/geek/zookeeper/conf]# cp zoo_sample.cfg zoo.cfg

In zoo.cfg, set the data directory:

dataDir=/opt/zookeeper/data

Configure the environment variables: as root, open /etc/profile and add the following lines:

#set zookeeper environment

export ZK_HOME=/home/geek/zookeeper

export PATH=$PATH:$ZK_HOME/bin

Then make the environment variables take effect with:

source /etc/profile
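The dataDir edit can also be scripted, which helps when the same change has to be repeated on every node. Below is a minimal sketch. set_cfg_key is a hypothetical helper, and it assumes GNU sed.

```shell
# Hypothetical helper: set key=value in a properties-style file such as zoo.cfg.
# An existing "key=..." line is replaced; otherwise a new line is appended.
# Assumes GNU sed (-i without a suffix). The key is used as a regular
# expression, and the value must not contain "|" or "&", which are special
# in the sed replacement below.
# Usage: set_cfg_key <file> <key> <value>
set_cfg_key() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    # "|" is the sed delimiter because values such as paths contain "/"
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}
```

For example: mkdir -p /opt/zookeeper/data && set_cfg_key /home/geek/zookeeper/conf/zoo.cfg dataDir /opt/zookeeper/data. The directory is created up front because the myid file is written into it later.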

2. ZooKeeper cluster setup

- Enter the conf directory of ZooKeeper and edit zoo.cfg. For example, on master add the following lines:

server.1=master:2888:3888

server.2=slave1:2888:3888

server.3=slave2:2888:3888

Here master, slave1 and slave2 are host names standing for the three servers' IP addresses. Add the mappings from these names to the three IPs in the hosts file (/etc/hosts) on the master, then copy that file over the hosts files on slave1 and slave2 with scp.
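The hosts mapping can be added with a small function that skips names already present, so running it again creates no duplicates. Below is a minimal sketch, assuming GNU grep. add_cluster_hosts is hypothetical, and the IPs are the example ones from the table in section 1.1, so replace them with your own.

```shell
# Hypothetical helper: append the cluster's IP/name mappings to a hosts file,
# skipping any host name that already has an entry.
# Usage: add_cluster_hosts [hosts-file]   (defaults to /etc/hosts)
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry ip name
  for entry in "81.68.172.91 master" "121.43.177.90 slave1" "114.132.222.63 slave2"; do
    ip="${entry% *}"     # text before the space
    name="${entry#* }"   # text after the space
    # Match the name as a whole word, so "slave1" does not match "slave10"
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

Run add_cluster_hosts as root on the master, and check the result with ping -c 1 slave1 before copying /etc/hosts to the slaves.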

ZooKeeper uses three ports:

1. 2181: serves client connections

2. 2888: communication between machines in the cluster (followers connect to the leader on this port)

3. 3888: leader election

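Each cluster entry in the configuration file has the form server.id=host:port:port. The first port is for communication within the cluster (2888) and the second for leader election (3888).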

Create a myid file in the directory specified by dataDir:

On each host, write the number x from that host's server.x entry into the file. For example, write 1 on master:

[root@master /opt/zookeeper/data]# echo 1 > myid

[root@master /opt/zookeeper/data]# cat myid

1
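Instead of typing the number by hand on each host, you can read it from the server.x lines in zoo.cfg, which keeps myid and zoo.cfg consistent. Below is a minimal sketch. write_myid is hypothetical, and it assumes the host names in zoo.cfg are master, slave1 and slave2 as configured above.

```shell
# Hypothetical helper: find <host> in the "server.N=<host>:..." lines of
# zoo.cfg and write N to <data-dir>/myid.
# Usage: write_myid <zoo.cfg> <host-name> <data-dir>
write_myid() {
  local cfg="$1" host="$2" data_dir="$3" id
  # Print only the captured number N from the matching server.N line
  id=$(sed -n "s/^server\.\([0-9]\{1,\}\)=${host}:.*/\1/p" "$cfg")
  if [ -z "$id" ]; then
    echo "no server.N entry for $host in $cfg" >&2
    return 1
  fi
  mkdir -p "$data_dir" && echo "$id" > "$data_dir/myid"
}
```

On slave1, for example: write_myid /home/geek/zookeeper/conf/zoo.cfg slave1 /opt/zookeeper/data. The host name is passed explicitly because the machines' own hostnames (such as VM-8-13-ubuntu in the status output of section 2.1) need not match the names used in zoo.cfg.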

- Copy the zookeeper directory to the same location on the slave hosts with scp, then create the myid file on each slave as described above (2 on slave1, 3 on slave2).
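You can script the copy step and the creation of each slave's myid as a loop over the slaves. This sketch assumes passwordless SSH as root to slave1 and slave2, the /home/geek/zookeeper and /opt/zookeeper/data paths used above, and ids 2 and 3 for the two slaves. distribute_zookeeper and the DRY_RUN switch are hypothetical. With DRY_RUN=1 the script only prints the commands it would run.

```shell
# Run a command, or just print it when DRY_RUN is set (hypothetical switch).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical sketch: copy zookeeper to each slave and write its myid.
# Assumes passwordless root SSH and the ids from zoo.cfg (slave1=2, slave2=3).
distribute_zookeeper() {
  local entry host id
  for entry in "slave1 2" "slave2 3"; do
    host="${entry% *}"
    id="${entry#* }"
    run scp -r /home/geek/zookeeper "root@${host}:/home/geek/" || return 1
    run ssh "root@${host}" "mkdir -p /opt/zookeeper/data && echo ${id} > /opt/zookeeper/data/myid" || return 1
  done
}
```

Preview the commands with DRY_RUN=1 distribute_zookeeper, then run distribute_zookeeper without it.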

- Then start ZooKeeper on each of the master, slave1 and slave2 hosts:

2.1 Starting the ZooKeeper service

Start command:

zkServer.sh start

Stop command:

zkServer.sh stop

Restart command:

zkServer.sh restart

View cluster node status:

zkServer.sh status

- After startup, run the status command on each server. The cluster is working if one server reports Mode: leader and the others report Mode: follower:

root@VM-8-13-ubuntu:/home/geek/zookeeper/logs# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /home/geek/zookeeper/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: follower

root@linuxprobe:/home/geek/zookeeper/logs# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /home/geek/zookeeper/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: follower

root@iZbp10sz66ubwpdg3fy8zwZ:/home/geek# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /home/geek/zookeeper/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: leader

- leader: there is exactly one leader

- follower: all the other nodes are followers
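Checking the three nodes by eye works, but you can also script the same test (exactly one leader, all others followers). Below is a minimal sketch. zk_mode and check_ensemble are hypothetical helpers, and zk_mode relies on the Mode: line that zkServer.sh status prints.

```shell
# Hypothetical helper: reduce "zkServer.sh status" output (read on stdin)
# to the mode value, e.g. "leader" or "follower".
zk_mode() {
  sed -n 's/^Mode: //p'
}

# Hypothetical helper: succeed only if exactly <expected-nodes> modes were
# given, exactly one is "leader" and all the others are "follower".
# Usage: check_ensemble <expected-nodes> <mode>...
check_ensemble() {
  local expected="$1" leaders=0 mode
  shift
  if [ "$#" -ne "$expected" ]; then
    echo "expected $expected modes, got $#" >&2
    return 1
  fi
  for mode in "$@"; do
    case "$mode" in
      leader) leaders=$((leaders + 1)) ;;
      follower) ;;
      *) echo "unexpected mode: '$mode'" >&2; return 1 ;;
    esac
  done
  [ "$leaders" -eq 1 ]
}

# Example from the master, assuming passwordless SSH as root to every node.
# /etc/profile is sourced because a non-interactive ssh command does not read
# it, so zkServer.sh would otherwise not be on the PATH:
#   modes=$(for h in master slave1 slave2; do
#     ssh "root@$h" '. /etc/profile; zkServer.sh status' 2>&1 | zk_mode
#   done)
#   check_ensemble 3 $modes && echo "ensemble OK"
```

A node that is down prints no Mode line. Because check_ensemble also compares the number of modes against the expected node count, it reports a missing node as a failure.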

3. Startup issues

- Stop the firewall before starting ZooKeeper (in a production environment, open the required ports instead):

systemctl stop firewalld

If the servers run on a cloud platform, ports 2181, 2888 and 3888 must also be opened in the cloud platform's firewall (security group) for the master, slave1 and slave2 servers.
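For production, the guide above recommends opening the required ports instead of stopping the firewall. With firewalld (the service stopped by the systemctl command above), a minimal sketch follows. open_zk_ports and the DRY_RUN switch are hypothetical, and the sketch assumes firewalld's default zone. On Ubuntu hosts that use ufw, the equivalent is ufw allow 2181/tcp, repeated for each port.

```shell
# Run a command, or just print it when DRY_RUN is set (hypothetical switch).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical sketch: open the ZooKeeper ports in firewalld's default zone.
# Run as root on every node.
open_zk_ports() {
  local port
  # 2181: clients, 2888: cluster communication, 3888: leader election
  for port in 2181 2888 3888; do
    run firewall-cmd --permanent --add-port="${port}/tcp" || return 1
  done
  # --permanent rules only become active after a reload
  run firewall-cmd --reload
}
```

Preview the commands with DRY_RUN=1 open_zk_ports, then run open_zk_ports as root on each node.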

When the cluster is built on cloud servers, ZooKeeper may fail to start and report the error Cannot open channel to 3 at election address:

Solution:

On each node, edit $ZOOKEEPER_HOME/conf/zoo.cfg and set the node's own address to 0.0.0.0, keeping the IP addresses of the other two nodes. On cloud servers the public IP is usually not bound to any local network interface, so ZooKeeper cannot listen on it; 0.0.0.0 makes it listen on all local interfaces. For example, the master server runs zookeeper1, and its configuration is:

server.1=0.0.0.0:2888:3888

server.2=120.78.123.192:2888:3888

server.3=192.168.11.108:2888:3888

slave1 runs zookeeper2, and its configuration is:

server.1=47.106.132.191:2888:3888

server.2=0.0.0.0:2888:3888

server.3=192.168.11.108:2888:3888

slave2 runs zookeeper3, and its configuration is:

server.1=47.106.132.191:2888:3888

server.2=120.78.123.192:2888:3888

server.3=0.0.0.0:2888:3888
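The three nodes' server lines differ only in which entry uses 0.0.0.0, so you can generate all three variants from one list of IPs. Below is a minimal sketch. zk_server_lines is hypothetical and assumes the 2888/3888 ports used above. ZooKeeper also documents a quorumListenOnAllIPs=true setting for zoo.cfg, which makes a server listen for peer connections on all local addresses. It may be worth checking the administrator's guide for your version as an alternative.

```shell
# Hypothetical helper: print the server.N lines for the node whose id is
# <my-id>, replacing that node's own address with 0.0.0.0.
# Assumes the 2888/3888 ports used in this guide.
# Usage: zk_server_lines <my-id> <ip-of-server.1> <ip-of-server.2> <ip-of-server.3>
zk_server_lines() {
  local my_id="$1" id=1 ip
  shift
  for ip in "$@"; do
    if [ "$id" -eq "$my_id" ]; then
      ip=0.0.0.0   # this node: listen on all local interfaces
    fi
    echo "server.${id}=${ip}:2888:3888"
    id=$((id + 1))
  done
}
```

For slave1, zk_server_lines 2 47.106.132.191 120.78.123.192 192.168.11.108 prints the slave1 configuration shown above. Its output can replace the old server.x lines in that node's zoo.cfg.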

If startup fails, look for the cause in the ZooKeeper log files under the logs directory of the zookeeper folder:

root@VM-8-13-ubuntu:/home/geek/zookeeper/logs# cat zookeeper-root-server-VM-8-13-ubuntu.out
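The .out file can get long, so filtering it for warnings and errors first can help you spot messages like Cannot open channel to 3 at election address. Below is a minimal sketch. zk_log_problems is hypothetical, and it simply matches the words ERROR and WARN that appear as log levels.

```shell
# Hypothetical helper: show the last ERROR/WARN lines of a ZooKeeper log.
# Usage: zk_log_problems <log-file> [max-lines]   (max-lines defaults to 20)
zk_log_problems() {
  local log="$1" max="${2:-20}"
  grep -E '(ERROR|WARN)' "$log" | tail -n "$max"
}
```

For example: zk_log_problems zookeeper-root-server-VM-8-13-ubuntu.out 50. For a node that is still starting, tail -f on the same file follows new messages as they arrive.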