Incremental import

Incremental import append for incremental columns

# First import [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop import --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor --where "actor_id < 50" --username sakila -P --num-mappers 1 --target-dir /tmp/hive/sqoop/actor_all ... 18/10/15 14:32:14 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 Enter password: ... 18/10/15 14:32:34 INFO mapreduce.Job: Running job: job_1539583112983_0002 18/10/15 14:32:49 INFO mapreduce.Job: Job job_1539583112983_0002 running in uber mode : false 18/10/15 14:32:49 INFO mapreduce.Job: map 0% reduce 0% 18/10/15 14:33:06 INFO mapreduce.Job: map 100% reduce 0% 18/10/15 14:33:07 INFO mapreduce.Job: Job job_1539583112983_0002 completed successfully 18/10/15 14:33:08 INFO mapreduce.Job: Counters: 30 ... 18/10/15 14:33:08 INFO mapreduce.ImportJobBase: Transferred 1.8262 KB in 40.9516 seconds (45.6636 bytes/sec) 18/10/15 14:33:08 INFO mapreduce.ImportJobBase: Retrieved 49 records. [hadoop@node224 ~]$ hdfs dfs -cat /tmp/hive/sqoop/actor_all/part-m-00000 1,PENELOPE,GUINESS,2006-02-15 04:34:33.0 ... 48,FRANCES,DAY-LEWIS,2006-02-15 04:34:33.0 49,ANNE,CRONYN,2006-02-15 04:34:33.0 [hadoop@node224 ~]$ # apppend incremental import actor_id < 50 # Specified Incremental Mode --incremental (mode append|lastmodified) # Specify incremental proofreading fields --check-column (col) # Specify Incremental Start Value --last-value (value) # append mode increment [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop import --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor --username sakila -P --incremental append --check-column actor_id --last-value 49 --num-mappers 1 --target-dir /tmp/hive/sqoop/actor_all ... Enter password: ... 18/10/15 14:43:03 INFO mapreduce.Job: Running job: job_1539583112983_0003 18/10/15 14:43:19 INFO mapreduce.Job: Job job_1539583112983_0003 running in uber mode : false 18/10/15 14:43:19 INFO mapreduce.Job: map 0% reduce 0% 18/10/15 14:43:34 INFO mapreduce.Job: map 100% reduce 0% 18/10/15 14:43:35 INFO mapreduce.Job: Job job_1539583112983_0003 completed successfully 18/10/15 14:43:35 INFO mapreduce.Job: Counters: 30 ... 18/10/15 14:43:35 INFO mapreduce.ImportJobBase: Transferred 5.79 KB in 38.6992 seconds (153.2074 bytes/sec) 18/10/15 14:43:35 INFO mapreduce.ImportJobBase: Retrieved 151 records. 18/10/15 14:43:35 INFO util.AppendUtils: Appending to directory actor_all 18/10/15 14:43:35 INFO util.AppendUtils: Using found partition 1 18/10/15 14:43:35 INFO tool.ImportTool: Incremental import complete! To run another incremental import of all data following this import, supply the following arguments: 18/10/15 14:43:35 INFO tool.ImportTool: --incremental append 18/10/15 14:43:35 INFO tool.ImportTool: --check-column actor_id 18/10/15 14:43:35 INFO tool.ImportTool: --last-value 200 18/10/15 14:43:35 INFO tool.ImportTool: (Consider saving this with 'sqoop job --create') [hadoop@node224 ~]$ hdfs dfs -cat /tmp/hive/sqoop/actor_all/part-m-00001 50,NATALIE,HOPKINS,2006-02-15 04:34:33.0 ... 200,JULIA,FAWCETT,2006-02-15 04:34:33.0

Incremental import of time series lastmodified

# Modify the time in actor_new table differently UPDATE actor_new SET last_update = DATE_ADD(last_update,INTERVAL (FLOOR(RAND()*199+1)) DAY) # First import [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop import --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor_new --where "last_update < '2006-04-25 04:34:33'" --username sakila -P --num-mappers 1 --target-dir /tmp/hive/sqoop/actor_lastmodified --delete-target-dir ... 18/10/15 14:57:23 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 Enter password: ... 18/10/15 14:57:42 INFO mapreduce.Job: Running job: job_1539583112983_0004 18/10/15 14:58:01 INFO mapreduce.Job: Job job_1539583112983_0004 running in uber mode : false 18/10/15 14:58:01 INFO mapreduce.Job: map 0% reduce 0% 18/10/15 14:58:22 INFO mapreduce.Job: map 100% reduce 0% 18/10/15 14:58:23 INFO mapreduce.Job: Job job_1539583112983_0004 completed successfully ... 18/10/15 14:58:23 INFO mapreduce.ImportJobBase: Transferred 2.6592 KB in 32.9053 seconds (82.7527 bytes/sec) 18/10/15 14:58:23 INFO mapreduce.ImportJobBase: Retrieved 69 records. # When imported through lastmodified incremental mode, merge is merged through the specified column when the target directory exists and needs to be specified -- merge-key [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop import --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor_new --username sakila -P --incremental lastmodified --check-column last_update --last-value '2006-04-25 04:34:33' --num-mappers 1 --target-dir /tmp/hive/sqoop/actor_lastmodified ... 18/10/15 15:05:10 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 Enter password: ... 18/10/15 15:05:20 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/a00f6459986efc548e86cabd08b4554d/actor_new.jar 18/10/15 15:05:22 ERROR tool.ImportTool: Import failed: --merge-key or --append is required when using --incremental lastmodified and the output directory exists. [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop import --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor_new --username sakila -P --incremental lastmodified --check-column last_update --last-value '2006-04-25 04:34:33' --merge-key actor_id --num-mappers 1 --target-dir /tmp/hive/sqoop/actor_lastmodified ... 18/10/15 15:08:44 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 Enter password: ... 18/10/15 15:09:02 INFO mapreduce.Job: Running job: job_1539583112983_0006 18/10/15 15:09:11 INFO mapreduce.Job: Job job_1539583112983_0006 running in uber mode : false 18/10/15 15:09:11 INFO mapreduce.Job: map 0% reduce 0% 18/10/15 15:09:23 INFO mapreduce.Job: map 100% reduce 0% 18/10/15 15:09:24 INFO mapreduce.Job: Job job_1539583112983_0006 completed successfully ... 18/10/15 15:09:24 INFO mapreduce.ImportJobBase: Transferred 4.957 KB in 27.9354 seconds (181.705 bytes/sec) ... 18/10/15 15:09:27 INFO mapreduce.Job: Running job: job_1539583112983_0007 18/10/15 15:09:43 INFO mapreduce.Job: Job job_1539583112983_0007 running in uber mode : false 18/10/15 15:09:43 INFO mapreduce.Job: map 0% reduce 0% 18/10/15 15:09:57 INFO mapreduce.Job: map 50% reduce 0% 18/10/15 15:10:05 INFO mapreduce.Job: map 100% reduce 0% 18/10/15 15:10:15 INFO mapreduce.Job: map 100% reduce 100% ... 18/10/15 15:10:16 INFO tool.ImportTool: Incremental import complete! To run another incremental import of all data following this import, supply the following arguments: 18/10/15 15:10:16 INFO tool.ImportTool: --incremental lastmodified 18/10/15 15:10:16 INFO tool.ImportTool: --check-column last_update 18/10/15 15:10:16 INFO tool.ImportTool: --last-value 2018-10-15 15:02:19.0 18/10/15 15:10:16 INFO tool.ImportTool: (Consider saving this with 'sqoop job --create')

Sqoop job operation

Save the command line as a job to facilitate defining multiple uses at a time and simplify command line operations

# Define a job --create <job-id> # View job configuration parameters --show <job-id> # List all defined job s --list # Execute the specified job --exec <job-id> # Delete job --delete <job-id>

Operation example

# Define a single full load of specified data into hive [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop job --create impjob01_increment_actors -- import --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor --where "actor_id < 50" --username sakila -P --hive-import --hive-table db01.t_actors_all --num-mappers 1 ... Enter password: 18/10/15 16:49:07 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override 18/10/15 16:49:07 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc. # Query job parameter information [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop job --show impjob01_increment_actors ... Enter password: Job: impjob01_increment_actors Tool: import Options: ---------------------------- verbose = false hcatalog.drop.and.create.table = false db.connect.string = jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false codegen.output.delimiters.escape = 0 codegen.output.delimiters.enclose.required = false codegen.input.delimiters.field = 0 mainframe.input.dataset.type = p split.limit = null hbase.create.table = false db.require.password = true skip.dist.cache = false hdfs.append.dir = false db.where.clause = actor_id < 50 db.table = actor codegen.input.delimiters.escape = 0 accumulo.create.table = false import.fetch.size = null codegen.input.delimiters.enclose.required = false db.username = sakila reset.onemapper = false codegen.output.delimiters.record = 10 import.max.inline.lob.size = 16777216 sqoop.throwOnError = false hbase.bulk.load.enabled = false hcatalog.create.table = false db.clear.staging.table = false codegen.input.delimiters.record = 0 enable.compression = false hive.overwrite.table = false hive.import = true codegen.input.delimiters.enclose = 0 hive.table.name = db01.t_actors_all accumulo.batch.size = 10240000 hive.drop.delims = false customtool.options.jsonmap = {} codegen.output.delimiters.enclose = 0 hdfs.delete-target.dir = false codegen.output.dir = . codegen.auto.compile.dir = true relaxed.isolation = false mapreduce.num.mappers = 1 accumulo.max.latency = 5000 import.direct.split.size = 0 sqlconnection.metadata.transaction.isolation.level = 2 codegen.output.delimiters.field = 1 export.new.update = UpdateOnly incremental.mode = None hdfs.file.format = TextFile sqoop.oracle.escaping.disabled = true codegen.compile.dir = /tmp/sqoop-root/compile/4ef4d1352923d513acd7ca40fa3fbe3a direct.import = false temporary.dirRoot = _sqoop hive.fail.table.exists = false db.batch = false # List all job s [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop job --list ... Available jobs: impjob01_increment_actors # Delete job [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop job --delete impjob01_increment_actors ... # Execute job and supplement the parameters invoked at execution time [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop job --exec impjob01_increment_actors -- --delete-target-dir ... 18/10/15 16:51:19 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 Enter password: ... 18/10/15 16:51:45 INFO mapreduce.Job: Running job: job_1539583112983_0011 18/10/15 16:51:55 INFO mapreduce.Job: Job job_1539583112983_0011 running in uber mode : false 18/10/15 16:51:55 INFO mapreduce.Job: map 0% reduce 0% 18/10/15 16:52:08 INFO mapreduce.Job: map 100% reduce 0% 18/10/15 16:52:09 INFO mapreduce.Job: Job job_1539583112983_0011 completed successfully 18/10/15 16:52:09 INFO mapreduce.Job: Counters: 30 ... 18/10/15 16:52:09 INFO mapreduce.ImportJobBase: Transferred 1.8262 KB in 30.4684 seconds (61.3751 bytes/sec) 18/10/15 16:52:09 INFO mapreduce.ImportJobBase: Retrieved 49 records. ... 18/10/15 16:52:40 INFO hive.HiveImport: OK 18/10/15 16:52:40 INFO hive.HiveImport: Time taken: 2.172 seconds 18/10/15 16:52:41 INFO hive.HiveImport: Loading data to table db01.t_actors_all 18/10/15 16:52:42 INFO hive.HiveImport: OK 18/10/15 16:52:42 INFO hive.HiveImport: Time taken: 1.869 seconds 18/10/15 16:52:42 INFO hive.HiveImport: Hive import complete. 18/10/15 16:52:42 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

Incremental loading of data into hive through job schema definition

[root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop job --create impjob02_increment_actors -- import --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor --username sakila -P --where "actor_id < 100" --incremental append --check-column actor_id --last-value 49 --hive-import --hive-table db01.t_actors_all --num-mappers 1 ... Enter password: 18/10/15 17:01:17 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override 18/10/15 17:01:17 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc. [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop job --list ... 18/10/15 17:01:35 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 Available jobs: impjob01_increment_actors impjob02_increment_actors [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop job --exec impjob02_increment_actors ... Enter password: ... 18/10/15 17:02:29 INFO mapreduce.Job: map 0% reduce 0% 18/10/15 17:02:42 INFO mapreduce.Job: map 100% reduce 0% 18/10/15 17:02:43 INFO mapreduce.Job: Job job_1539583112983_0014 completed successfully ... 18/10/15 17:02:44 INFO mapreduce.ImportJobBase: Transferred 1.8857 KB in 37.7095 seconds (51.2072 bytes/sec) 18/10/15 17:02:44 INFO mapreduce.ImportJobBase: Retrieved 50 records. ... 18/10/15 17:03:18 INFO hive.HiveImport: OK 18/10/15 17:03:18 INFO hive.HiveImport: Time taken: 2.954 seconds 18/10/15 17:03:19 INFO hive.HiveImport: Loading data to table db01.t_actors_all 18/10/15 17:03:20 INFO hive.HiveImport: OK 18/10/15 17:03:20 INFO hive.HiveImport: Time taken: 1.992 seconds 18/10/15 17:03:21 INFO hive.HiveImport: Hive import complete. 18/10/15 17:03:21 INFO hive.HiveImport: Export directory is empty, removing it. 18/10/15 17:03:21 INFO tool.ImportTool: Saving incremental import state to the metastore 18/10/15 17:03:21 INFO tool.ImportTool: Updated data for job: impjob02_increment_actors

Note an error in exporting a hit table to MySQL (as follows), because the record separator of the field is not specified at the time of import, which results in hive being unable to parse the data at the time of export, so it is necessary to specify the field separator before importing hive from RDBMS. The following errors are often not directly found the cause of the problem, you need to view the specific log through yarn's web 8088 page for analysis.

# Directly specify the directory export error of the hit table because the data imported into the hit table cannot be parsed [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop export --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor_new --username sakila -P --export-dir /user/hive/warehouse/db01.db/t_actors_all ... Enter password: ... 18/10/15 17:12:39 INFO mapreduce.Job: map 0% reduce 0% 18/10/15 17:13:00 INFO mapreduce.Job: map 100% reduce 0% 18/10/15 17:13:01 INFO mapreduce.Job: Job job_1539583112983_0016 failed with state FAILED due to: Task failed task_1539583112983_0016_m_000001 Job failed as tasks failed. failedMaps:1 failedReduces:0 18/10/15 17:13:01 INFO mapreduce.Job: Counters: 8 Job Counters Failed map tasks=3 Launched map tasks=3 Data-local map tasks=3 Total time spent by all maps in occupied slots (ms)=52283 Total time spent by all reduces in occupied slots (ms)=0 Total time spent by all map tasks (ms)=52283 Total vcore-milliseconds taken by all map tasks=52283 Total megabyte-milliseconds taken by all map tasks=53537792 18/10/15 17:13:01 WARN mapreduce.Counters: Group FileSystemCounters is deprecated. Use org.apache.hadoop.mapreduce.FileSystemCounter instead 18/10/15 17:13:01 INFO mapreduce.ExportJobBase: Transferred 0 bytes in 42.3959 seconds (0 bytes/sec) 18/10/15 17:13:02 WARN mapreduce.Counters: Group org.apache.hadoop.mapred.Task$Counter is deprecated. Use org.apache.hadoop.mapreduce.TaskCounter instead 18/10/15 17:13:02 INFO mapreduce.ExportJobBase: Exported 0 records. 18/10/15 17:13:02 ERROR mapreduce.ExportJobBase: Export job failed! 18/10/15 17:13:02 ERROR tool.ExportTool: Error during export: Export job failed! at org.apache.sqoop.mapreduce.ExportJobBase.runExport(ExportJobBase.java:445) at org.apache.sqoop.manager.SqlManager.exportTable(SqlManager.java:931) at org.apache.sqoop.tool.ExportTool.exportTable(ExportTool.java:80) at org.apache.sqoop.tool.ExportTool.run(ExportTool.java:99) at org.apache.sqoop.Sqoop.run(Sqoop.java:147) at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70) at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243) at org.apache.sqoop.Sqoop.main(Sqoop.java:252) # Data format on hdfs [hadoop@node224 ~]$ hdfs dfs -cat /user/hive/warehouse/db01.db/t_actors_all/part-m-00000 1PENELOPEGUINESS2006-02-15 04:34:33.0 2NICKWAHLBERG2006-02-15 04:34:33.0 3EDCHASE2006-02-15 04:34:33.0 4JENNIFERDAVIS2006-02-15 04:34:33.0 5JOHNNYLOLLOBRIGIDA2006-02-15 04:34:33.0

Incremental export

Export export defaults to insert mode, which adds records to the target table. This mode is mainly used to export records to empty or new tables and receive export results. Convert to insert operation. If an insert fails, the task fails. # Specify fields to update matches --update-key # Specify update mode --update-mode <updateonly|allowinsert> # Convert the exported record into an update statement. If there is no corresponding record in the table, new data will not be inserted into the table. If multiple rows match, more rows will be updated. Unmatched update tasks will still occur, and multiple update keys can be specified updateonly # Perform update and insert operations allowinsert

Setting delimiters and data regularization when importing hive

/usr/local/sqoop-1.4.7/bin/sqoop import --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor --where "actor_id < 50" --username sakila -P --hive-import --hive-table db01.t_actors_all --fields-terminated-by ',' --lines-terminated-by '\n' --num-mappers 1 [hadoop@node224 ~]$ hdfs dfs -cat /user/hive/warehouse/db01.db/t_actors_all/part-m-00000 1,PENELOPE,GUINESS,2006-02-15 04:34:33.0 2,NICK,WAHLBERG,2006-02-15 04:34:33.0 3,ED,CHASE,2006-02-15 04:34:33.0

A Full Quantity Derivation

# Export again [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop export --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor_new --username sakila -P --export-dir /user/hive/warehouse/db01.db/t_actors_all --input-fields-terminated-by ',' ... 18/10/15 17:37:52 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 Enter password: ... 18/10/15 17:38:20 INFO mapreduce.Job: map 0% reduce 0% 18/10/15 17:38:49 INFO mapreduce.Job: map 100% reduce 0% 18/10/15 17:38:51 INFO mapreduce.Job: Job job_1539583112983_0018 completed successfully ... 18/10/15 17:38:51 INFO mapreduce.ExportJobBase: Transferred 5.458 KB in 45.0086 seconds (124.1764 bytes/sec) 18/10/15 17:38:51 INFO mapreduce.ExportJobBase: Exported 49 records. # Data in MySQL ipems_dvp@localhost : sakila 05:05:00> select count(1) from actor_new; +----------+ | count(1) | +----------+ | 49 | +----------+ 1 row in set (0.00 sec)

Incremental import to increase data in tables

# Incremental import [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop import --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor --username sakila -P --where "actor_id < 100" --incremental append --check-column actor_id --last-value 49 --hive-import --hive-table db01.t_actors_all --fields-terminated-by ',' --lines-terminated-by '\n' --num-mappers 1 ... 18/10/15 17:41:34 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 Enter password: ... 18/10/15 17:42:40 INFO hive.HiveImport: Time taken: 3.445 seconds 18/10/15 17:42:41 INFO hive.HiveImport: Loading data to table db01.t_actors_all 18/10/15 17:42:42 INFO hive.HiveImport: OK 18/10/15 17:42:42 INFO hive.HiveImport: Time taken: 2.031 seconds 18/10/15 17:42:43 INFO hive.HiveImport: Hive import complete. 18/10/15 17:42:43 INFO hive.HiveImport: Export directory is empty, removing it. 18/10/15 17:42:43 INFO tool.ImportTool: Incremental import complete! To run another incremental import of all data following this import, supply the following arguments: 18/10/15 17:42:43 INFO tool.ImportTool: --incremental append 18/10/15 17:42:43 INFO tool.ImportTool: --check-column actor_id 18/10/15 17:42:43 INFO tool.ImportTool: --last-value 99 18/10/15 17:42:43 INFO tool.ImportTool: (Consider saving this with 'sqoop job --create')

Data situation in hive table

0: jdbc:hive2://node225:10000/db01> select count(1) from t_actors_all; WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases. +-----+--+ | c0 | +-----+--+ | 98 | +-----+--+ 1 row selected (69.208 seconds)

The allowinsert mode export tested here does not merge the insertion operations according to the official documents, but only performs the insertion operations for unknown reasons. First, record and then analyze the investigation.

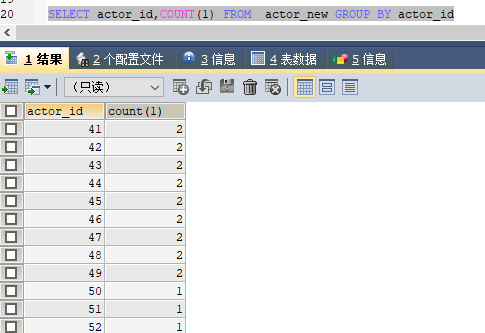

# Merge mode export /usr/local/sqoop-1.4.7/bin/sqoop export --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor_new --username sakila -P --export-dir /user/hive/warehouse/db01.db/t_actors_all --input-fields-terminated-by ',' --update-mode allowinsert --update-key actor_id [root@node222 ~]# /usr/local/sqoop-1.4.7/bin/sqoop export --connect jdbc:mysql://192.168.0.200:3306/sakila?useSSL=false --table actor_new --username sakila -P --export-dir /user/hive/warehouse/db01.db/t_actors_all --input-fields-terminated-by ',' --update-mode allowinsert --update-key actor_id ... 18/10/15 17:45:24 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 Enter password: ... 18/10/15 17:45:58 INFO mapreduce.Job: map 0% reduce 0% 18/10/15 17:46:17 INFO mapreduce.Job: map 100% reduce 0% 18/10/15 17:46:18 INFO mapreduce.Job: Job job_1539583112983_0020 completed successfully ... 18/10/15 17:46:18 INFO mapreduce.ExportJobBase: Transferred 6.6807 KB in 39.5958 seconds (172.7707 bytes/sec) 18/10/15 17:46:18 INFO mapreduce.ExportJobBase: Exported 99 records. # Query the exported data without merging ipems_dvp@localhost : sakila 05:32:27> select count(1) from actor_new; +----------+ | count(1) | +----------+ | 148 | +----------+ 1 row in set (0.00 sec)

Verify that no update operation was performed from the exported data results