Introduction to Canal

1. Application Scenarios

In the previous statistical analysis function, we have taken a service call to obtain statistics, which is highly coupled and relatively inefficient. At present, I take another way to achieve this by synchronizing the database tables in real time. For ex amp le, we want to count the number of registered and logged-in people every day. We just need to synchronize the membership tables into the statistical database to achieve local statistics, which is OK.Canal is a good database synchronization tool because of its higher efficiency and lower coupling.Canal is an open source project under Alibaba, developed purely in Java.Based on database incremental log resolution, provides incremental data subscription & consumption, currently mainly supporting MySQL.

2. Canal Environment Setup

The principle of canal is based on mysql binlog technology, so you must turn on the binlog writing function of mysql here

Open MySQL service: service mysql start (or systemctl start)Mysqld.service)

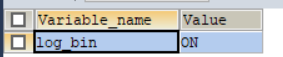

(1) Check if the binlog function is on

show variables like 'log_bin';

(2) If OFF indicates that the function is not turned on, turn on binlog function

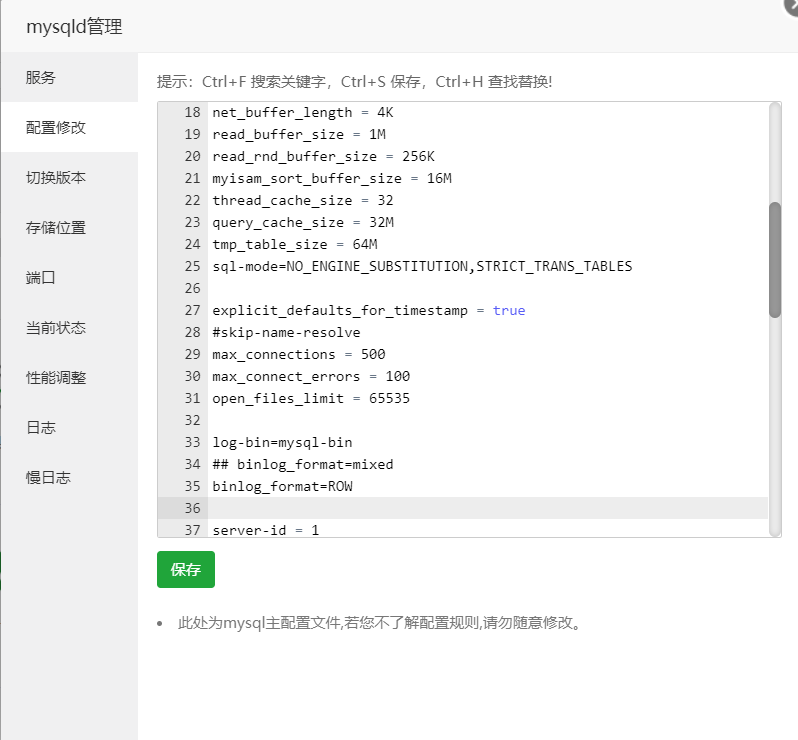

1 Modify the configuration file for MySQLMy.cnf

vi /etc/my.cnf

Additional content:

log-bin=mysql-bin #binlog file name binlog_format=ROW #Select row mode server_id=1 #mysql instance id, cannot duplicate slaveId of canal

2, restart mysql:

service mysql restart

3, log in to mysql client and view log_bin variable

show variables like 'log_bin';

Here I changed it directly at the end of the pagoda

3. Download and install Canal service

Download address:

https://github.com/alibaba/canal/releases

(1) After downloading, place in the directory and unzip the file

cd /usr/local/canal

tar zxvf canal.deployer-1.1.4.tar.gz

(2) Modify the configuration file

vi conf/example/instance.properties

#Need to change to your own database information canal.instance.master.address=192.168.44.132:3306 #You need to change your database user name and password canal.instance.dbUsername=canal canal.instance.dbPassword=canal #You need to change to synchronized database table rules, such as just synchronizing tables #canal.instance.filter.regex=.*\\..* canal.instance.filter.regex=guli_ucenter.ucenter_member

Note:

mysql data parses tables of interest, Perl regular expressions.

Multiple regularities are separated by commas (,), and the escape character requires a double slash (\)

Common examples:

- All tables:. * or. \...

- All tables under the canal schema: canal\...*

- Table under canal that starts with canal: canal\.canal. *

- A table under the canal schema:canal.test1

- Multiple rules used in combination: canal\...*,mysql.test1,mysql.test2(comma separated)

Note: This filter condition is only valid for row mode data (ps. mixed/statement cannot extract tableName for filtering because it does not parse sql)

(3) Enter the bin directory and restart

cd ./restartup.sh

2. canal module

configuration filePom.xml

<dependencies> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-web</artifactId> </dependency> <!--mysql--> <dependency> <groupId>mysql</groupId> <artifactId>mysql-connector-java</artifactId> </dependency> <dependency> <groupId>commons-dbutils</groupId> <artifactId>commons-dbutils</artifactId> </dependency> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-jdbc</artifactId> </dependency> <!-- canal --> <dependency> <groupId>com.alibaba.otter</groupId> <artifactId>canal.client</artifactId> </dependency> </dependencies>

configuration fileApplication.properties

# Service Port server.port=10001 # service name spring.application.name=canal-client # Environment settings: dev, test, prod spring.profiles.active=dev # mysql database connection spring.datasource.driver-class-name=com.mysql.cj.jdbc.Driver spring.datasource.url=jdbc:mysql://localhost:3306/test?serverTimezone=GMT%2B8 spring.datasource.username=root spring.datasource.password=root # mybatis log mybatis-plus.configuration.log-impl=org.apache.ibatis.logging.stdout.StdOutImpl

CanalClient

import com.alibaba.otter.canal.client.CanalConnector; import com.alibaba.otter.canal.client.CanalConnectors; import com.alibaba.otter.canal.protocol.CanalEntry.*; import com.alibaba.otter.canal.protocol.Message; import com.google.protobuf.InvalidProtocolBufferException; import org.apache.commons.dbutils.DbUtils; import org.apache.commons.dbutils.QueryRunner; import org.springframework.stereotype.Component; import javax.annotation.Resource; import javax.sql.DataSource; import java.net.InetSocketAddress; import java.sql.Connection; import java.sql.SQLException; import java.util.List; import java.util.Queue; import java.util.concurrent.ConcurrentLinkedQueue; @Component public class CanalClient { //sql queue private Queue<String> SQL_QUEUE = new ConcurrentLinkedQueue<>(); @Resource private DataSource dataSource; /** * canal Warehousing method */ public void run() { CanalConnector connector = CanalConnectors.newSingleConnector(new InetSocketAddress("External Network IP", 11111), "example", "", ""); int batchSize = 1000; try { connector.connect(); connector.subscribe(".*\\..*"); connector.rollback(); try { while (true) { //Try pulling data batchSize records from the master, how many and how many Message message = connector.getWithoutAck(batchSize); long batchId = message.getId(); int size = message.getEntries().size(); if (batchId == -1 || size == 0) { Thread.sleep(1000); } else { dataHandle(message.getEntries()); } connector.ack(batchId); //Simulate execution when the sql stacked in the queue is larger than a certain value if (SQL_QUEUE.size() >= 1) { executeQueueSql(); } } } catch (InterruptedException e) { e.printStackTrace(); } catch (InvalidProtocolBufferException e) { e.printStackTrace(); } } finally { connector.disconnect(); } } /** * Simulate execution of sql statements in queue */ public void executeQueueSql() { int size = SQL_QUEUE.size(); for (int i = 0; i < size; i++) { String sql = SQL_QUEUE.poll(); System.out.println("[sql]----> " + sql); this.execute(sql.toString()); } } /** * data processing * * @param entrys */ private void dataHandle(List<Entry> entrys) throws InvalidProtocolBufferException { for (Entry entry : entrys) { if (EntryType.ROWDATA == entry.getEntryType()) { RowChange rowChange = RowChange.parseFrom(entry.getStoreValue()); EventType eventType = rowChange.getEventType(); if (eventType == EventType.DELETE) { saveDeleteSql(entry); } else if (eventType == EventType.UPDATE) { saveUpdateSql(entry); } else if (eventType == EventType.INSERT) { saveInsertSql(entry); } } } } /** * Save Update Statement * * @param entry */ private void saveUpdateSql(Entry entry) { try { RowChange rowChange = RowChange.parseFrom(entry.getStoreValue()); List<RowData> rowDatasList = rowChange.getRowDatasList(); for (RowData rowData : rowDatasList) { List<Column> newColumnList = rowData.getAfterColumnsList(); StringBuffer sql = new StringBuffer("update " + entry.getHeader().getTableName() + " set "); for (int i = 0; i < newColumnList.size(); i++) { sql.append(" " + newColumnList.get(i).getName() + " = '" + newColumnList.get(i).getValue() + "'"); if (i != newColumnList.size() - 1) { sql.append(","); } } sql.append(" where "); List<Column> oldColumnList = rowData.getBeforeColumnsList(); for (Column column : oldColumnList) { if (column.getIsKey()) { //Only a single primary key is supported temporarily sql.append(column.getName() + "=" + column.getValue()); break; } } SQL_QUEUE.add(sql.toString()); } } catch (InvalidProtocolBufferException e) { e.printStackTrace(); } } /** * Save Delete Statement * * @param entry */ private void saveDeleteSql(Entry entry) { try { RowChange rowChange = RowChange.parseFrom(entry.getStoreValue()); List<RowData> rowDatasList = rowChange.getRowDatasList(); for (RowData rowData : rowDatasList) { List<Column> columnList = rowData.getBeforeColumnsList(); StringBuffer sql = new StringBuffer("delete from " + entry.getHeader().getTableName() + " where "); for (Column column : columnList) { if (column.getIsKey()) { //Only a single primary key is supported temporarily sql.append(column.getName() + "=" + column.getValue()); break; } } SQL_QUEUE.add(sql.toString()); } } catch (InvalidProtocolBufferException e) { e.printStackTrace(); } } /** * Save Insert Statement * * @param entry */ private void saveInsertSql(Entry entry) { try { RowChange rowChange = RowChange.parseFrom(entry.getStoreValue()); List<RowData> rowDatasList = rowChange.getRowDatasList(); for (RowData rowData : rowDatasList) { List<Column> columnList = rowData.getAfterColumnsList(); StringBuffer sql = new StringBuffer("insert into " + entry.getHeader().getTableName() + " ("); for (int i = 0; i < columnList.size(); i++) { sql.append(columnList.get(i).getName()); if (i != columnList.size() - 1) { sql.append(","); } } sql.append(") VALUES ("); for (int i = 0; i < columnList.size(); i++) { sql.append("'" + columnList.get(i).getValue() + "'"); if (i != columnList.size() - 1) { sql.append(","); } } sql.append(")"); SQL_QUEUE.add(sql.toString()); } } catch (InvalidProtocolBufferException e) { e.printStackTrace(); } } /** * Warehousing * @param sql */ public void execute(String sql) { Connection con = null; try { if(null == sql) return; con = dataSource.getConnection(); QueryRunner qr = new QueryRunner(); int row = qr.execute(con, sql); System.out.println("update: "+ row); } catch (SQLException e) { e.printStackTrace(); } finally { DbUtils.closeQuietly(con); } } }

Startup Class

@SpringBootApplication public class CanalApplication implements CommandLineRunner { @Resource private CanalClient canalClient; public static void main(String[] args) { SpringApplication.run(CanalApplication.class, args); } @Override public void run(String... args) throws Exception { //Project startup, performing canal client monitoring canalClient.run(); } }

test

Start the service and it will be found that the service has been listening

Attempt to add a record to the remote database at this time

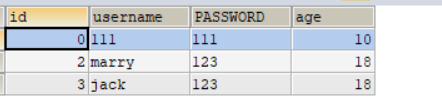

USE test; INSERT INTO member VALUES(0,'111','111',10); SELECT * FROM member;

Console Output Log:

[sql]----> insert into member (id,username,password,age) VALUES ('0','111','111','10') 2020-06-07 10:20:11.830 INFO 12836 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Starting... 2020-06-07 10:20:12.135 INFO 12836 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Start completed. update: 1

Looking at the local database, there's really one more piece of data