1, Introduction to PlumeLog

- A non-intrusive distributed logging system that collects logs through log4j, log4j2 and logback, and attaches a link (trace) ID so that related logs can be queried together

- Uses Elasticsearch as the query engine

- High throughput and efficient queries

- The whole pipeline uses no local disk space on the application and needs no maintenance; it is transparent to the project and does not affect how the project itself runs

- Existing projects need no code changes; just add the dependency. Dubbo and Spring Cloud are supported
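To make the link ID idea concrete, here is a minimal, hypothetical Java sketch (not plumelog's code): a `ThreadLocal` holds one trace ID per request, every log line records it, and filtering by that ID returns all the lines of one request. The class and method names, and the in-memory list standing in for Elasticsearch, are assumptions for illustration only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.UUID;
import java.util.stream.Collectors;

// Hypothetical sketch of trace-ID correlation; NOT plumelog's implementation.
public class TraceIdDemo {
    // One trace ID per thread, i.e. per request handled on that thread
    private static final ThreadLocal<String> TRACE_ID = new ThreadLocal<>();
    // In-memory stand-in for the central log store (Elasticsearch in plumelog); not thread-safe, demo only
    private static final List<String[]> STORE = new ArrayList<>();

    static void log(String message) {
        // Each entry stores {traceId, message}
        STORE.add(new String[] {TRACE_ID.get(), message});
    }

    public static String currentTraceId() {
        return TRACE_ID.get();
    }

    public static String handleRequest(String user) {
        String traceId = UUID.randomUUID().toString().replace("-", "");
        TRACE_ID.set(traceId); // set once when the request starts
        try {
            log("request from " + user);
            log("query finished for " + user);
            return traceId;
        } finally {
            TRACE_ID.remove(); // clean up so a pooled thread starts the next request without an ID
        }
    }

    public static List<String> findByTraceId(String traceId) {
        return STORE.stream()
                .filter(entry -> traceId.equals(entry[0]))
                .map(entry -> entry[1])
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        String alice = handleRequest("alice");
        handleRequest("bob");
        System.out.println(findByTraceId(alice)); // [request from alice, query finished for alice]
    }
}
```

Querying by one ID returns only that request's lines, even when requests interleave in the same store.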

2, Preparatory work

Server installation

- First, the message queue. PlumeLog works with Redis or Kafka; Redis is sufficient for typical projects, and it is what I use here. Redis official website: https://redis.io

- Next, install Elasticsearch. Past releases can be downloaded from the official website: https://www.elastic.co/cn/downloads/past-releases

- Finally, download the Plumelog server package from: https://gitee.com/frankchenlong/plumelog/releases


Service startup

- Start Redis and make sure it can be reached from your machine (the server's security group must open the Redis port, and Redis must be configured to accept connections from your IP)

- Start Elasticsearch. It listens on port 9200 by default; if http://127.0.0.1:9200 responds when opened directly, startup succeeded
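A quick pre-flight check can confirm both services before going further. The sketch below is a hypothetical helper, not part of plumelog: it opens a TCP connection to Redis on 6379 and sends a GET to Elasticsearch on 9200. A successful TCP connect only proves the port is open, not that Redis accepts your password; and if Elasticsearch has security enabled, a 401 status still means it is running but needs credentials.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.URL;

// Hypothetical pre-flight check; not part of plumelog.
public class PreflightCheck {
    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    public static boolean isPortOpen(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    /** Returns the HTTP status of a GET request, or -1 if the request cannot be made. */
    public static int httpStatus(String url, int timeoutMs) {
        try {
            HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setConnectTimeout(timeoutMs);
            conn.setReadTimeout(timeoutMs);
            conn.setRequestMethod("GET");
            int status = conn.getResponseCode();
            conn.disconnect();
            return status;
        } catch (IOException e) { // also covers malformed URLs
            return -1;
        }
    }

    public static void main(String[] args) {
        System.out.println("redis 6379 open: " + isPortOpen("127.0.0.1", 6379, 2000));
        System.out.println("elasticsearch status: " + httpStatus("http://127.0.0.1:9200", 2000));
    }
}
```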

3, Modify configuration

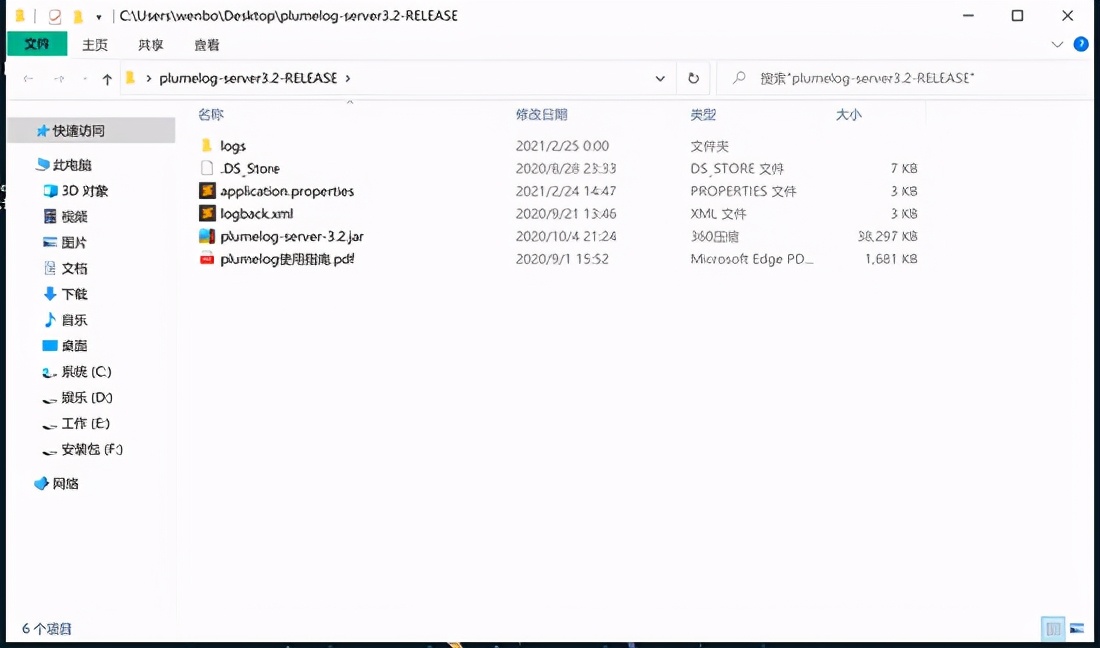

These files appear after the Plumelog package is extracted.

Edit application.properties; my configuration is pasted below. The main changes are the Redis and Elasticsearch settings.

spring.application.name=plumelog_server

server.port=8891

spring.thymeleaf.mode=LEGACYHTML5

spring.mvc.view.prefix=classpath:/templates/

spring.mvc.view.suffix=.html

spring.mvc.static-path-pattern=/plumelog/**

#Run mode. Supported values: redis, kafka, rest, restServer, ui

#redis: use Redis as the queue

#kafka: use Kafka as the queue

#rest: pull logs from a REST interface

#restServer: start as a REST interface server

#ui: start as a standalone UI

plumelog.model=redis

#If Kafka is used, enable the following configuration

#plumelog.kafka.kafkaHosts=172.16.247.143:9092,172.16.247.60:9092,172.16.247.64:9092

#plumelog.kafka.kafkaGroupName=logConsumer

#Redis configuration. In version 3.0 the Redis address must be configured, because alarms are monitored through it

plumelog.redis.redisHost=127.0.0.1:6379

#If Redis has a password, enable the following configuration

plumelog.redis.redisPassWord=123456

plumelog.redis.redisDb=0

#If rest is used, enable the following configuration

#plumelog.rest.restUrl=http://127.0.0.1:8891/getlog

#plumelog.rest.restUserName=plumelog

#plumelog.rest.restPassWord=123456

#Elasticsearch configuration

plumelog.es.esHosts=127.0.0.1:9200

#ES 7.x removed index types, so leave this unset on ES 7.x; on versions below 7.x it must be set, otherwise an error is reported

#plumelog.es.indexType=plumelog

#Number of shards per index. Recommended value: daily log size / JVM heap size of an ES node. A reasonable shard size keeps ES writes and queries efficient

plumelog.es.shards=5

plumelog.es.replicas=0

plumelog.es.refresh.interval=30s

#Index granularity: a new log index is created per day (day) or per hour (hour)

plumelog.es.indexType.model=day

#If ES has a password, enable the following configuration

#plumelog.es.userName=elastic

#plumelog.es.passWord=FLMOaqUGamMNkZ2mkJiY

#Maximum number of logs pulled at a time

plumelog.maxSendSize=5000

#Pull interval; has no effect with Kafka

plumelog.interval=1000

#Address of the plumelog UI; without it, the links in alarm messages cannot be clicked

plumelog.ui.url=http://127.0.0.1:8891

#Admin password, required when manually deleting logs

admin.password=123456

#Log retention in days; 0 or unset means logs are kept forever

admin.log.keepDays=30

#Login configuration

#login.username=admin

#login.password=admin
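`plumelog.maxSendSize` and `plumelog.interval` describe a batch pull loop: the server takes at most `maxSendSize` logs from the queue per pull and waits `interval` between pulls. The Java sketch below models that with an in-memory `BlockingQueue` instead of Redis; it illustrates the two settings under that assumption and is not plumelog's actual consumer. Treating `interval` as milliseconds is also an assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical model of a batch pull loop; an in-memory queue stands in for Redis.
public class BatchPullSketch {
    static final int MAX_SEND_SIZE = 5000; // mirrors plumelog.maxSendSize
    static final long INTERVAL = 1000;     // mirrors plumelog.interval (assumed milliseconds)

    /** Removes and returns at most maxSendSize entries, oldest first. */
    public static List<String> pullBatch(BlockingQueue<String> queue, int maxSendSize) {
        List<String> batch = new ArrayList<>();
        queue.drainTo(batch, maxSendSize);
        return batch;
    }

    public static void main(String[] args) throws InterruptedException {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        for (int i = 0; i < 12000; i++) {
            queue.add("log line " + i);
        }
        while (!queue.isEmpty()) {
            List<String> batch = pullBatch(queue, MAX_SEND_SIZE);
            // A real server would bulk-write the batch to Elasticsearch here
            System.out.println("pulled " + batch.size()); // 5000, then 5000, then 2000
            Thread.sleep(INTERVAL); // wait before the next pull
        }
    }
}
```

A larger `maxSendSize` means fewer, bigger bulk writes; a shorter interval lowers latency at the cost of more frequent pulls.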

Do not start the plumelog server yet; start it last, after the application.

Recommended reference configurations for better performance

Daily log volume under 50 GB, SSD disks

plumelog.es.shards=5

plumelog.es.replicas=0

plumelog.es.refresh.interval=30s

plumelog.es.indexType.model=day

Daily log volume over 50 GB, mechanical (HDD) disks

plumelog.es.shards=5

plumelog.es.replicas=0

plumelog.es.refresh.interval=30s

plumelog.es.indexType.model=hour

Daily log volume over 100 GB, mechanical (HDD) disks

plumelog.es.shards=10

plumelog.es.replicas=0

plumelog.es.refresh.interval=30s

plumelog.es.indexType.model=hour

Daily log volume over 1000 GB, SSD disks. This configuration can handle more than 10 TB per day

plumelog.es.shards=10

plumelog.es.replicas=1

plumelog.es.refresh.interval=30s

plumelog.es.indexType.model=hour
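Hour mode multiplies the number of indices, and the shard count grows with it. The hypothetical helper below applies the rule of thumb from the configuration comment (daily log size / ES JVM heap) and counts the shards kept over a retention period, assuming one log index per day or per hour and the 30 days from `admin.log.keepDays=30`; plumelog may keep more than one index family, which would scale the totals further. For reference, Elasticsearch 7.x caps open shards at `cluster.max_shards_per_node` (1000 by default) times the number of data nodes.

```java
// Hypothetical shard arithmetic for the profiles above; not plumelog code.
public class ShardMath {
    /** Rule of thumb from the config comment: daily log size / ES JVM heap, rounded up. */
    public static int suggestedShards(double dailyLogGb, double esHeapGb) {
        return (int) Math.ceil(dailyLogGb / esHeapGb);
    }

    /** Shards kept: primaries x (1 + replicas) x indices per day x retention days. */
    public static long totalShards(int shards, int replicas, int indicesPerDay, int keepDays) {
        return (long) shards * (1 + replicas) * indicesPerDay * keepDays;
    }

    public static void main(String[] args) {
        System.out.println(suggestedShards(40, 8));     // 5
        System.out.println(totalShards(5, 0, 1, 30));   // day mode: 150
        System.out.println(totalShards(10, 1, 24, 30)); // hour mode: 14400
    }
}
```

With 10 shards, 1 replica and hourly indices, 30 days of retention already means 14,400 shards, far above the default limit of a small cluster.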

As plumelog.es.shards grows, and especially in hour mode, the total number of shards rises quickly, so the maximum number of shards per node in the ES cluster needs to be raised:

PUT /_cluster/settings

{

"persistent": {

"cluster": {

"max_shards_per_node":100000

}

}

}

4, Create a Spring Boot project

Project configuration

pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.4.3</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<groupId>com.example</groupId>

<artifactId>demo-mybatis</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>demo-mybatis</name>

<description>Demo project for Spring Boot</description>

<properties>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>2.1.4</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<!--Distributed log collection plumelog-->

<dependency>

<groupId>com.plumelog</groupId>

<artifactId>plumelog-logback</artifactId>

<version>3.3</version>

</dependency>

<dependency>

<groupId>com.plumelog</groupId>

<artifactId>plumelog-trace</artifactId>

<version>3.3</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-aop</artifactId>

<!-- version managed by spring-boot-starter-parent (2.4.3) instead of pinning 2.1.11.RELEASE -->

</dependency>

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-core</artifactId>

<version>5.5.8</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<configuration>

<excludes>

<exclude>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

</exclude>

</excludes>

</configuration>

</plugin>

</plugins>

</build>

</project>

Two configuration files under resources:

application.properties

server.port=8888

spring.datasource.url=jdbc:mysql://127.0.0.1:3306/test?useUnicode=true&characterEncoding=utf-8&allowMultiQueries=true&useSSL=false

spring.datasource.driver-class-name=com.mysql.cj.jdbc.Driver

spring.datasource.username=root

spring.datasource.password=123456

spring.datasource.hikari.maximum-pool-size=10

#MyBatis configuration

mybatis.type-aliases-package=com.example.demomybatis.model

mybatis.configuration.map-underscore-to-camel-case=true

mybatis.configuration.default-fetch-size=100

mybatis.configuration.default-statement-timeout=30

#Jackson date-time format (lowercase yyyy is the calendar year; uppercase YYYY would be the week-based year)

spring.jackson.date-format=yyyy-MM-dd HH:mm:ss

spring.jackson.time-zone=GMT+8

spring.jackson.serialization.write-dates-as-timestamps=false

#SQL log printing

logging.level.com.example.demomybatis.dao=debug

#Output log file

logging.file.path=/log
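In Java date patterns, uppercase `YYYY` is the week-based year and lowercase `yyyy` is the calendar year, so a `spring.jackson.date-format` starting with `YYYY` can print the wrong year around New Year. The small demonstration below uses `SimpleDateFormat`, which to my understanding is what Spring Boot builds from a pattern-valued `spring.jackson.date-format`. It assumes US week rules (`Locale.US`); with other locales the affected dates may differ.

```java
import java.text.SimpleDateFormat;
import java.time.Instant;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

// Demonstrates calendar year (yyyy) vs week-based year (YYYY).
public class DatePatternDemo {
    public static String format(String pattern, Date date) {
        SimpleDateFormat fmt = new SimpleDateFormat(pattern, Locale.US); // US week rules
        fmt.setTimeZone(TimeZone.getTimeZone("GMT+8")); // same zone as spring.jackson.time-zone
        return fmt.format(date);
    }

    public static void main(String[] args) {
        // 2020-12-31 12:00 in GMT+8 (a Thursday in the week that contains 1 January 2021)
        Date lastDayOf2020 = Date.from(Instant.parse("2020-12-31T04:00:00Z"));
        System.out.println(format("yyyy-MM-dd HH:mm:ss", lastDayOf2020)); // 2020-12-31 12:00:00
        System.out.println(format("YYYY-MM-dd HH:mm:ss", lastDayOf2020)); // 2021-12-31 12:00:00
    }
}
```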

logback-spring.xml

<?xml version="1.0" encoding="UTF-8" ?>

<configuration>

<appender name="consoleApp" class="ch.qos.logback.core.ConsoleAppender">

<layout class="ch.qos.logback.classic.PatternLayout">

<pattern>

%date{yyyy-MM-dd HH:mm:ss.SSS} %-5level[%thread]%logger{56}.%method:%L -%msg%n

</pattern>

</layout>

</appender>

<appender name="fileInfoApp" class="ch.qos.logback.core.rolling.RollingFileAppender">

<filter class="ch.qos.logback.classic.filter.LevelFilter">

<level>ERROR</level>

<onMatch>DENY</onMatch>

<onMismatch>ACCEPT</onMismatch>

</filter>

<encoder>

<pattern>

%date{yyyy-MM-dd HH:mm:ss.SSS} %-5level[%thread]%logger{56}.%method:%L -%msg%n

</pattern>

</encoder>

<!-- Rolling strategy -->

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<!-- file path pattern -->

<fileNamePattern>log/info.%d.log</fileNamePattern>

</rollingPolicy>

</appender>

<appender name="fileErrorApp" class="ch.qos.logback.core.rolling.RollingFileAppender">

<filter class="ch.qos.logback.classic.filter.ThresholdFilter">

<level>ERROR</level>

</filter>

<encoder>

<pattern>

%date{yyyy-MM-dd HH:mm:ss.SSS} %-5level[%thread]%logger{56}.%method:%L -%msg%n

</pattern>

</encoder>

<!-- Rolling policy -->

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<!-- file path pattern -->

<fileNamePattern>log/error.%d.log</fileNamePattern>

<!-- Maximum number of archived files to keep; older archives are deleted. The unit follows the rollover period: with the daily pattern above, 1 keeps one day of archives; with monthly rollover it would keep one month -->

<maxHistory>1</maxHistory>

</rollingPolicy>

</appender>

<appender name="plumelog" class="com.plumelog.logback.appender.RedisAppender">

<appName>mybatisDemo</appName>

<redisHost>127.0.0.1</redisHost>

<redisAuth>123456</redisAuth>

<redisPort>6379</redisPort>

<runModel>2</runModel>

</appender>

<!-- Put root last, after all appenders are defined, to avoid loading-order problems -->

<root level="INFO">

<appender-ref ref="consoleApp"/>

<appender-ref ref="fileInfoApp"/>

<appender-ref ref="fileErrorApp"/>

<appender-ref ref="plumelog"/>

</root>

</configuration>

Note that a plumelog appender is added to the logback configuration and then referenced in the root element; this is what pushes the logs to the Redis queue.
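To see how the four appenders split the output, here is a hypothetical Java model of the filters as configured above (not logback code): `consoleApp` and `plumelog` have no filter, `fileInfoApp` uses a `LevelFilter` that denies ERROR and accepts everything else, and `fileErrorApp` uses a `ThresholdFilter` that accepts ERROR and above. It only models appender filters; events below the logger's level (INFO for root) never reach them.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the appender filters in logback-spring.xml.
public class AppenderRouting {
    public enum Level { TRACE, DEBUG, INFO, WARN, ERROR }

    public static List<String> appendersFor(Level level) {
        List<String> targets = new ArrayList<>();
        targets.add("consoleApp"); // no filter: accepts everything
        if (level != Level.ERROR) {
            targets.add("fileInfoApp"); // LevelFilter: ERROR -> DENY, anything else -> ACCEPT
        }
        if (level.compareTo(Level.ERROR) >= 0) {
            targets.add("fileErrorApp"); // ThresholdFilter: ERROR and above
        }
        targets.add("plumelog"); // no filter: everything goes to Redis
        return targets;
    }

    public static void main(String[] args) {
        System.out.println(appendersFor(Level.INFO));  // [consoleApp, fileInfoApp, plumelog]
        System.out.println(appendersFor(Level.ERROR)); // [consoleApp, fileErrorApp, plumelog]
    }
}
```

So errors land only in the error file locally, while every level that passes the logger reaches plumelog.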

TraceId setup and global link-tracking configuration

TraceId interceptor configuration

package com.example.demomybatis.common.interceptor;

import com.plumelog.core.TraceId;

import org.springframework.stereotype.Component;

import org.springframework.web.servlet.HandlerInterceptor;

import org.springframework.web.servlet.ModelAndView;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import java.util.UUID;

/**

* Created by wenbo on 2021/2/25.

*/

@Component

public class Interceptor implements HandlerInterceptor {

@Override

public boolean preHandle(HttpServletRequest request, HttpServletResponse response, Object handler) {

//Set the TraceID value. If it is not set here, the logs will have no link ID

TraceId.logTraceID.set(UUID.randomUUID().toString().replaceAll("-", ""));

return true;

}

@Override

public void postHandle(HttpServletRequest request, HttpServletResponse response, Object handler, ModelAndView modelAndView) {

}

@Override

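public void afterCompletion(HttpServletRequest request, HttpServletResponse response, Object handler, Exception ex) {

//Clear the TraceID so the pooled request thread does not carry it into unrelated work

TraceId.logTraceID.remove();

}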

}

package com.example.demomybatis.common.interceptor;

import org.springframework.context.annotation.Configuration;

import org.springframework.web.servlet.config.annotation.InterceptorRegistry;

import org.springframework.web.servlet.config.annotation.WebMvcConfigurer;

/**

* Created by wenbo on 2021/2/25.

*/

@Configuration

public class InterceptorConfig implements WebMvcConfigurer {

@Override

public void addInterceptors(InterceptorRegistry registry) {

// Customize interceptors, add intercepting paths and exclude intercepting paths

registry.addInterceptor(new Interceptor()).addPathPatterns("/**");

}

}

Global link-tracking aspect configuration

package com.example.demomybatis.common.config;

import com.plumelog.trace.aspect.AbstractAspect;

import org.aspectj.lang.JoinPoint;

import org.aspectj.lang.annotation.Around;

import org.aspectj.lang.annotation.Aspect;

import org.springframework.stereotype.Component;

/**

* Created by wenbo on 2021/2/25.

*/

@Aspect

@Component

public class AspectConfig extends AbstractAspect {

@Around("within(com.example..*)")

public Object around(JoinPoint joinPoint) throws Throwable {

return aroundExecute(joinPoint);

}

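}

Change the pointcut expression to match your own package path. Then add the following annotation to the Spring Boot startup class so that Spring scans the com.plumelog package in addition to your own:

@ComponentScan({"com.plumelog","com.example.demomybatis"})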

The complete demo project is available at: https://gitee.com/wen_bo/demo-plumelog

5, Start the project

Start the Spring Boot application first, then start plumelog with java -jar plumelog-server-3.2.jar (use the jar name from the release you downloaded)

Visit http://127.0.0.1:8891

The default username and password are both admin

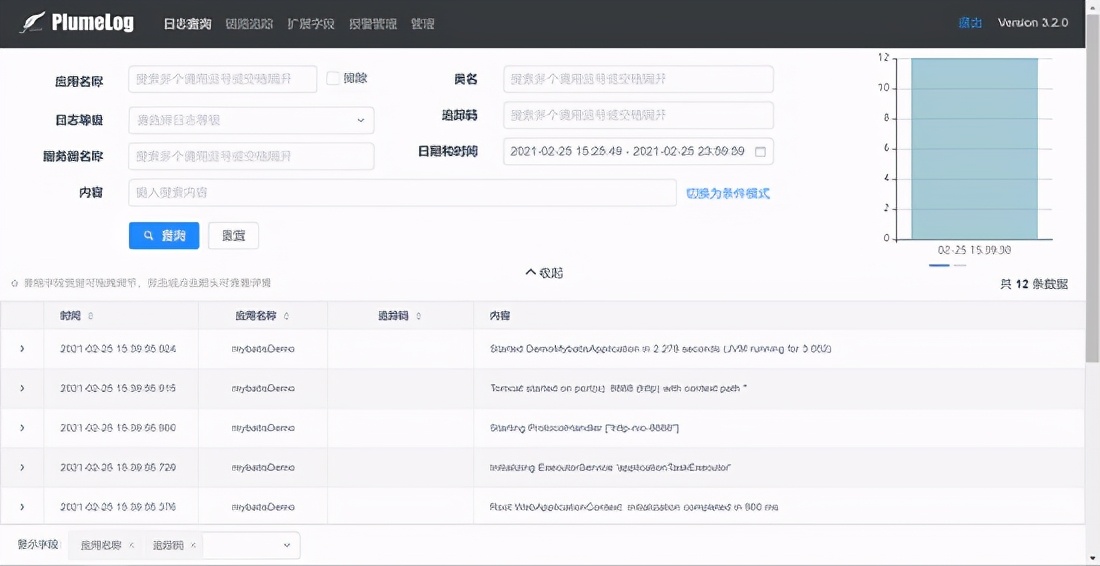

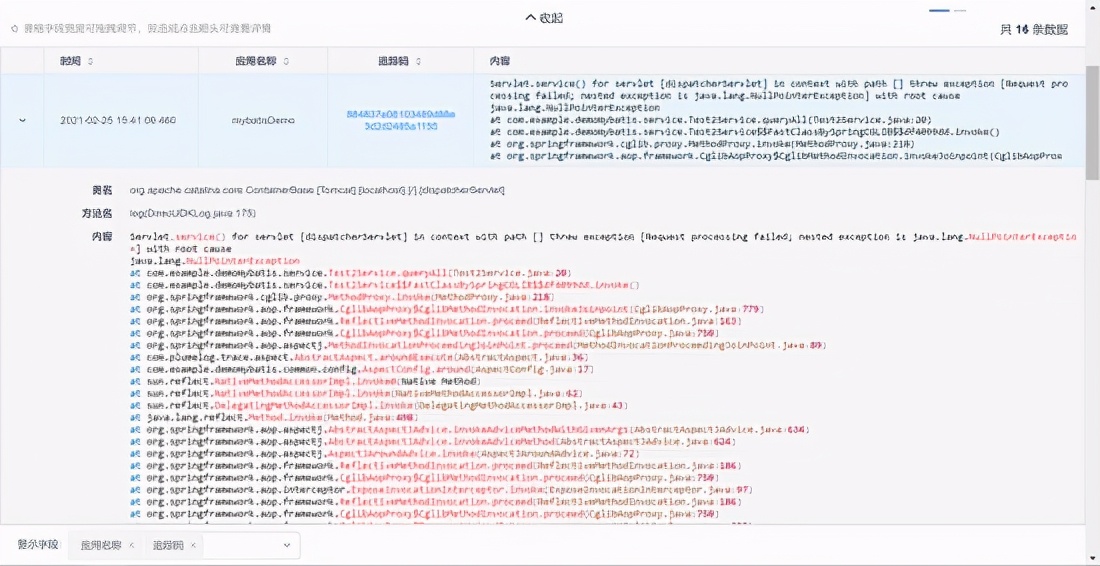

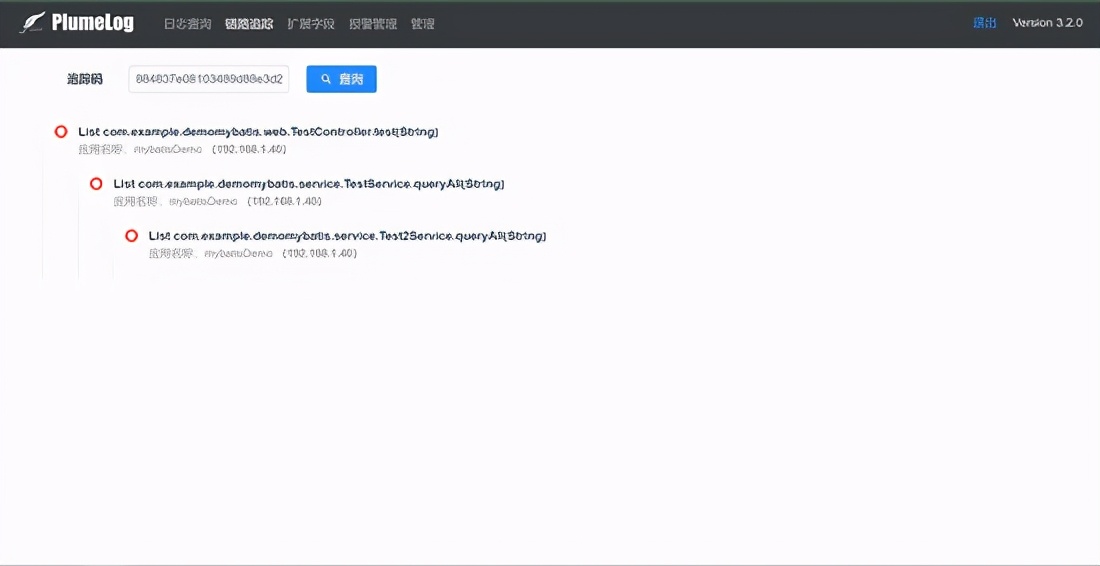

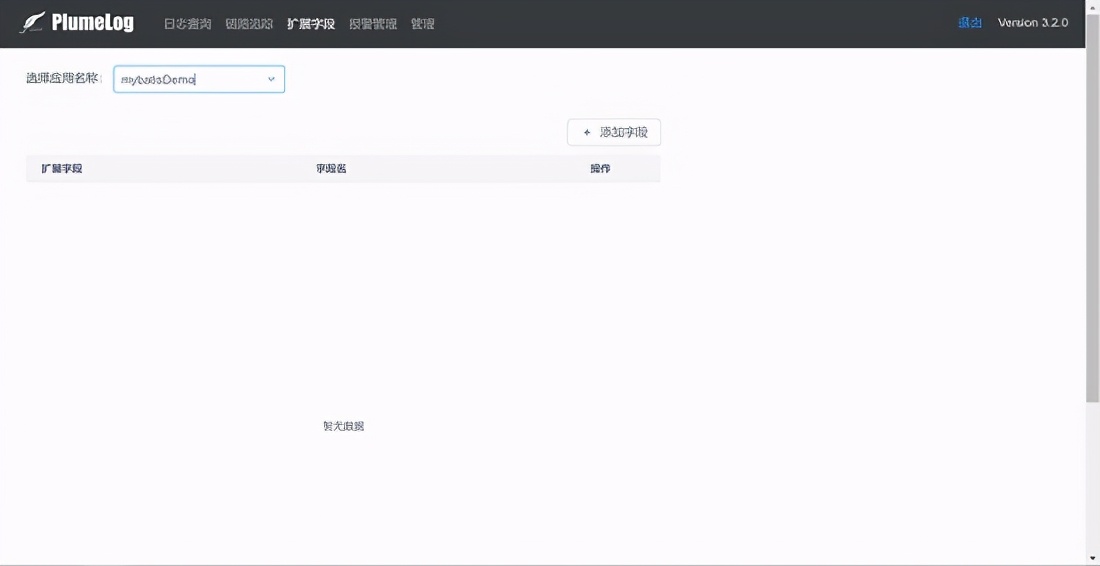

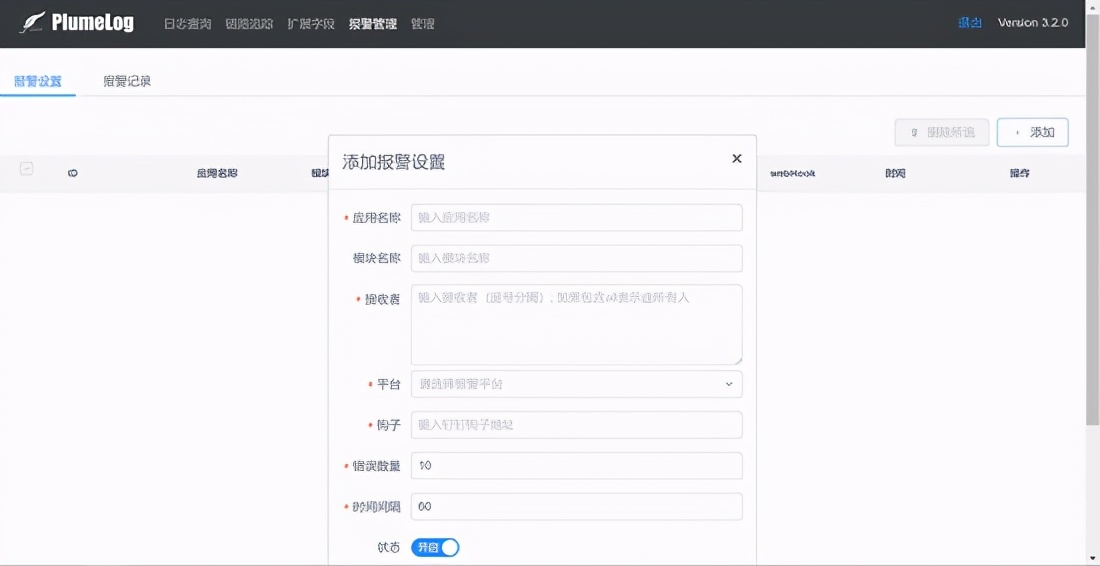

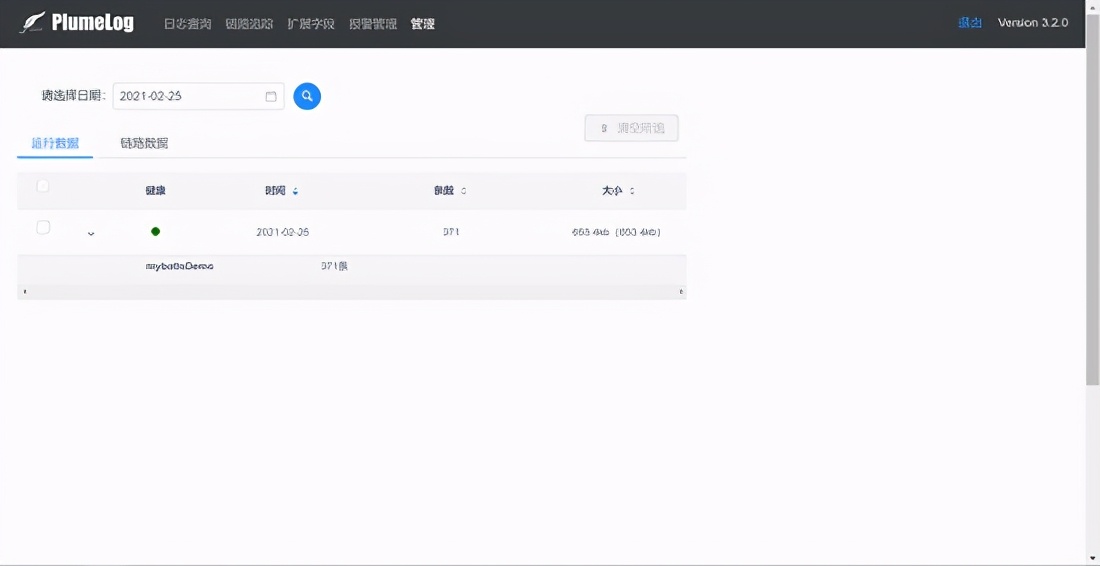

The screenshots above show the final result.