First, download Spark

The first step in using Spark is to download and unpack it. Start with a precompiled release: visit http://spark.apache.org/downloads.html and download the Spark installation package. The version used in this article is spark-2.4.3-bin-hadoop2.7.tgz.

Second, install Spark

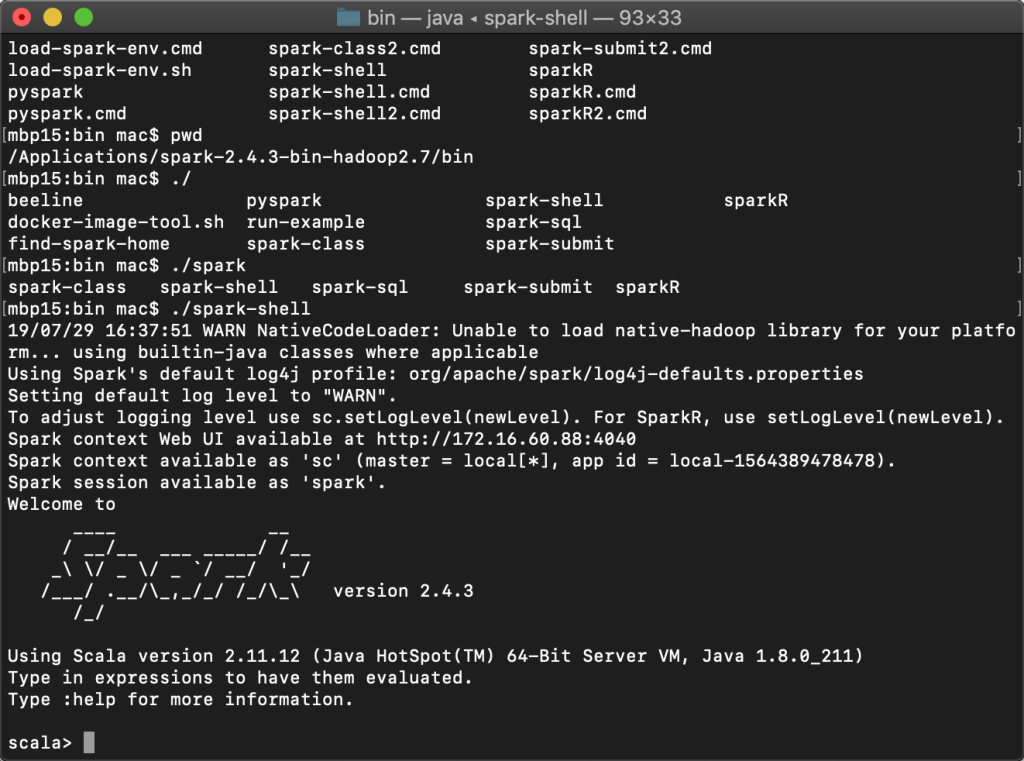

    cd ~
    tar -xf spark-2.4.3-bin-hadoop2.7.tgz
    cd spark-2.4.3-bin-hadoop2.7
    ls

In the tar command, the x flag tells tar to extract the archive, and the f flag specifies the file name of the archive. The ls command lists the contents of the Spark directory. Let's take a quick look at the names and purposes of some of the more important files and directories in it.

• README.md

Contains simple instructions for getting started with Spark.

• bin

Contains a series of executable files that can be used to interact with Spark in various ways, such as the Spark shell, which will be covered later in this chapter.

• core, streaming, python, ...

Contains the source code for the main components of the Spark project.

• examples

Contains some Spark programs that you can view and run, which are very helpful for learning Spark's API.
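The tar flags used in the installation step can be tried on a throwaway archive before touching the real download. The following is a minimal sketch: the demo-pkg directory and its files are placeholders invented for illustration and are not part of Spark. It also shows two further tar flags, t (list contents) and C (choose the target directory), which the installation step above does not use.

```shell
# Build a tiny throwaway archive so the flags can be tried without the real download.
# The directory and file names here are placeholders, not part of Spark.
workdir=$(mktemp -d)
mkdir -p "$workdir/demo-pkg/bin"
echo "hello" > "$workdir/demo-pkg/README.md"
tar -czf "$workdir/demo-pkg.tgz" -C "$workdir" demo-pkg

# -t lists the archive contents without extracting; -f names the archive file.
listing=$(tar -tf "$workdir/demo-pkg.tgz")
echo "$listing"

# -x extracts; -C chooses the directory to extract into.
mkdir -p "$workdir/out"
tar -xf "$workdir/demo-pkg.tgz" -C "$workdir/out"
extracted=$(cat "$workdir/out/demo-pkg/README.md")
echo "$extracted"

# ls shows the extracted top-level entries, as in the installation step.
ls "$workdir/out/demo-pkg"
```

Listing the archive with -t first is a cheap way to see which top-level directory an archive will create before extracting it.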

Third, Introduction to the Spark Shell

Spark provides an interactive shell for ad hoc data analysis. If you have used the shells provided by R, Python, or Scala, or an operating system shell (such as Bash or the Windows command prompt), the Spark shell will feel familiar. Unlike those tools, which can only work with data on the disk and in the memory of a single machine, the Spark shell can interact with distributed data held in the memory or on the disks of many machines, and Spark handles the distribution of the processing automatically.

bin/spark-shell

When the Spark shell starts, it has already created a SparkContext object for you, available as the variable sc. Creating your own SparkContext in the shell will not work. Use the --master option to specify which master the context connects to, the --jars option to add JARs to the classpath (as a comma-separated list), and the --packages option to add Maven dependencies to the shell session (as a comma-separated list of Maven coordinates). Any additional repositories where those dependencies might be found can be passed with the --repositories option. The following command runs spark-shell in local mode using four cores:

./bin/spark-shell --master local[4]
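As a rough illustration of how the options described above combine, the sketch below assembles a full spark-shell command and prints it without running it. The jar paths, the Maven coordinate, and the repository URL are all hypothetical placeholders; only the option names come from the text.

```shell
# Placeholder values: replace them with real jar paths and Maven coordinates.
MASTER="local[4]"
JARS="libs/first.jar,libs/second.jar"          # hypothetical local jars
PACKAGES="org.example:demo-lib_2.11:1.0.0"     # hypothetical groupId:artifactId:version
REPOSITORIES="https://repo.example.org/maven"  # hypothetical extra repository

# Quote local[4] so the shell does not treat the brackets as a glob pattern.
cmd="./bin/spark-shell --master \"$MASTER\" --jars $JARS --packages $PACKAGES --repositories $REPOSITORIES"

# Print the command for review; run it from the Spark directory once the values are real.
echo "$cmd"
```

Quoting the master value matters in shells such as zsh, which may otherwise try to expand the square brackets as a file pattern.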

Fourth, Quick Start Demo

This section gives a quick WordCount demo. First, create a Maven project and add Scala language support.

Scala version of WordCount

    package com.t9vg

    import org.apache.spark.{SparkConf, SparkContext}

    object WordCount {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local").setAppName("WordCount")
        val sc = new SparkContext(conf)
        val text = sc.textFile("quickStart/src/main/resources/1.txt")
        // Split each line on commas to get the individual words
        val words = text.flatMap(line => line.split(","))
        // Pair each word with an initial count of 1
        val pairs = words.map(word => (word, 1))
        // Sum the counts for each word
        val result = pairs.reduceByKey(_ + _)
        // Sort by word in descending order
        val sorted = result.sortByKey(false)
        sorted.foreach(x => println(x))
        // Release the context once the job has finished
        sc.stop()
      }
    }

Java version of WordCount

    package com.t9vg.rdd;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaPairRDD;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import scala.Tuple2;

    import java.util.Arrays;

    public class WordCount {
        public static void main(String[] args) {
            SparkConf conf = new SparkConf().setMaster("local").setAppName("WordCount");
            JavaSparkContext sc = new JavaSparkContext(conf);
            JavaRDD<String> textFile = sc.textFile("quickStart/src/main/resources/1.txt");
            JavaPairRDD<String, Integer> counts = textFile
                    // Split each line on commas to get the individual words
                    .flatMap(s -> Arrays.asList(s.split(",")).iterator())
                    // Pair each word with an initial count of 1
                    .mapToPair(word -> new Tuple2<>(word, 1))
                    // Sum the counts for each word
                    .reduceByKey((a, b) -> a + b);
            counts.foreach(x -> System.out.println(x.toString()));
            sc.close();
        }
    }
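To sanity-check the output of either version above, the same count can be reproduced on a small file with standard Unix tools. This is only a sketch: the sample content is made up (the contents of 1.txt are not shown in this article), and the pipeline assumes comma-separated words, matching the split(",") calls in the programs.

```shell
# Made-up sample input; the real job reads quickStart/src/main/resources/1.txt.
sample=$(mktemp)
printf 'spark,hadoop,spark\nscala,spark,hadoop\n' > "$sample"

# tr turns each comma into a newline (the flatMap/split step),
# sort groups identical words, and uniq -c counts each group (the reduceByKey step).
# awk only rearranges "count word" into "word,count" for readability.
counts=$(tr ',' '\n' < "$sample" | sort | uniq -c | awk '{print $2 "," $1}')
echo "$counts"
# prints:
# hadoop,2
# scala,1
# spark,3
```

The Spark programs print each pair as a tuple such as (spark,3), and the Scala version sorts the words in descending order, so compare the counts per word and not the line order.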

Project source address:

https://github.com/JDZW2018/learningSpark.git
