1 Spark Operators

1.1 Operators are divided into two categories

1.1.1 Transformation

A Transformation is lazily evaluated: it only records metadata about the operation, and the actual computation starts only when an Action triggers a job.
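
The lazy behavior can be observed directly. The following is a minimal sketch, assuming a local master (local[2]) so that tasks run in the same JVM as the driver. The names CallCounter and LazyDemo are hypothetical and exist only for this illustration; the counter trick would not work on a real cluster, where tasks run in separate executor JVMs.

```scala
import java.util.concurrent.atomic.AtomicInteger
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical helper: a JVM-wide counter. The driver can read it only
// because local mode runs the tasks inside the driver's own JVM.
object CallCounter {
  val calls = new AtomicInteger(0)
}

object LazyDemo {
  // Returns (calls before the action, calls after the action, collected result).
  def run(): (Int, Int, List[Int]) = {
    val conf = new SparkConf().setAppName("lazy-demo").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      CallCounter.calls.set(0)
      // Transformation: only the lineage is recorded; the function is not run yet.
      val doubled = sc.parallelize(List(1, 2, 3, 4)).map { x =>
        CallCounter.calls.incrementAndGet()
        x * 2
      }
      val before = CallCounter.calls.get()
      // Action: triggers the job, so the map function runs once per element.
      val result = doubled.collect().toList
      val after = CallCounter.calls.get()
      (before, after, result)
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = println(run())
}
```

In this sketch the counter stays at zero after map is called and only increases once collect runs.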

1.1.2 Action

An Action triggers the actual computation of the recorded Transformations and either returns a result to the driver or writes it to storage.

1.2 Two Ways to Create an RDD

- Create an RDD from a file system supported by Hadoop, such as HDFS. The RDD does not hold the actual data to be computed; it only records metadata.

- Create an RDD by parallelizing a Scala collection or array.
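
Both ways of creating an RDD can be sketched as follows. This is an illustration under stated assumptions: it uses a local master, and a local temporary file stands in for an HDFS path (on a cluster the argument would be an hdfs:// URI). The object name CreateRddDemo is hypothetical.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.Files
import org.apache.spark.{SparkConf, SparkContext}

object CreateRddDemo {
  // Returns (number of lines read from the file, number of collection elements).
  def run(): (Long, Long) = {
    val conf = new SparkConf().setAppName("create-rdd-demo").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      // Way 1: from a file. A local temp file replaces the HDFS path here.
      val path = Files.createTempFile("create-rdd-demo", ".txt")
      Files.write(path, "a b c\nd e f\n".getBytes(StandardCharsets.UTF_8))
      val fromFile = sc.textFile(path.toUri.toString) // one element per line

      // Way 2: by parallelizing a Scala collection into 2 partitions.
      val fromCollection = sc.parallelize(List(1, 2, 3, 4, 5), 2)

      // count is an Action; only here is the file actually read.
      (fromFile.count(), fromCollection.count())
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = println(run())
}
```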

2 Practice

2.1 Exercise 1

scala> val rdd1 = sc.parallelize(Array("a b c","d e f","h i j"))

rdd1: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[11] at parallelize at <console>:27

scala> rdd1.flatMap(_.split(" "))

res2: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[12] at flatMap at <console>:30

scala> res2.collect

res3: Array[String] = Array(a, b, c, d, e, f, h, i, j)

2.2 Exercise 2

scala> val rdd5 = sc.parallelize(List(List("a b c","a b b"),List("e f g","a f g"),List("h i j","a a b")))

rdd5: org.apache.spark.rdd.RDD[List[String]] = ParallelCollectionRDD[13] at parallelize at <console>:27

scala> rdd5.flatMap(_.flatMap(_.split(" "))).collect

res4: Array[String] = Array(a, b, c, a, b, b, e, f, g, a, f, g, h, i, j, a, a, b)
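
The two exercises above rely on flatMap flattening the result of split. A small sketch of the difference between map and flatMap on the same input, assuming a local master; the object name FlatMapDemo is hypothetical:

```scala
import org.apache.spark.{SparkConf, SparkContext}

object FlatMapDemo {
  // Returns (result of map, result of flatMap) for the same two input lines.
  def run(): (List[List[String]], List[String]) = {
    val conf = new SparkConf().setAppName("flatmap-demo").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val lines = sc.parallelize(List("a b c", "d e f"))
      // map keeps one output element per input line: an RDD[Array[String]].
      val mapped = lines.map(_.split(" ")).collect().map(_.toList).toList
      // flatMap flattens the per-line arrays into a single RDD[String].
      val flattened = lines.flatMap(_.split(" ")).collect().toList
      (mapped, flattened)
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = println(run())
}
```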

2.3 Union

scala> val rdd6 = sc.parallelize(List(5,6,4,7))

rdd6: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[15] at parallelize at <console>:27

scala> val rdd7 = sc.parallelize(List(1,2,3,4))

rdd7: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[16] at parallelize at <console>:27

scala> val rdd8 = rdd6.union(rdd7)

rdd8: org.apache.spark.rdd.RDD[Int] = UnionRDD[17] at union at <console>:31

scala> rdd8.collect

res5: Array[Int] = Array(5, 6, 4, 7, 1, 2, 3, 4)

2.4 Intersection

scala> val rdd9 = rdd6.intersection(rdd7)

rdd9: org.apache.spark.rdd.RDD[Int] = MapPartitionsRDD[23] at intersection at <console>:31

scala> rdd9.collect

res6: Array[Int] = Array(4)

2.5 join

scala> val rdd1 = sc.parallelize(List(("tom", 1), ("jerry", 2), ("kitty", 3)))

rdd1: org.apache.spark.rdd.RDD[(String, Int)] = ParallelCollectionRDD[24] at parallelize at <console>:27

scala> val rdd2 = sc.parallelize(List(("jerry", 9), ("tom", 8), ("shuke", 7)))

rdd2: org.apache.spark.rdd.RDD[(String, Int)] = ParallelCollectionRDD[25] at parallelize at <console>:27

scala> val rdd3 = rdd1.join(rdd2)

rdd3: org.apache.spark.rdd.RDD[(String, (Int, Int))] = MapPartitionsRDD[28] at join at <console>:31

scala> rdd3.collect

res7: Array[(String, (Int, Int))] = Array((tom,(1,8)), (jerry,(2,9)))

scala> val rdd1 = sc.parallelize(List(("tom", 1), ("jerry", 2), ("kitty", 3)))

rdd1: org.apache.spark.rdd.RDD[(String, Int)] = ParallelCollectionRDD[29] at parallelize at <console>:27

scala> val rdd2 = sc.parallelize(List(("jerry", 9), ("tom", 8), ("shuke", 7),("tom",2)))

rdd2: org.apache.spark.rdd.RDD[(String, Int)] = ParallelCollectionRDD[30] at parallelize at <console>:27

scala> val rdd3 = rdd1.leftOuterJoin(rdd2)

rdd3: org.apache.spark.rdd.RDD[(String, (Int, Option[Int]))] = MapPartitionsRDD[33] at leftOuterJoin at <console>:31

scala> rdd3.collect

res8: Array[(String, (Int, Option[Int]))] = Array((tom,(1,Some(8))), (tom,(1,Some(2))), (jerry,(2,Some(9))), (kitty,(3,None)))

scala> val rdd3 = rdd1.rightOuterJoin(rdd2)

rdd3: org.apache.spark.rdd.RDD[(String, (Option[Int], Int))] = MapPartitionsRDD[36] at rightOuterJoin at <console>:31

scala> rdd3.collect

res9: Array[(String, (Option[Int], Int))] = Array((tom,(Some(1),8)), (tom,(Some(1),2)), (jerry,(Some(2),9)), (shuke,(None,7)))

2.6 groupByKey

scala> val rdd3 = rdd1 union rdd2

rdd3: org.apache.spark.rdd.RDD[(String, Int)] = UnionRDD[37] at union at <console>:31

scala> rdd3.collect

res10: Array[(String, Int)] = Array((tom,1), (jerry,2), (kitty,3), (jerry,9), (tom,8), (shuke,7), (tom,2))

scala> rdd3.groupByKey

res11: org.apache.spark.rdd.RDD[(String, Iterable[Int])] = ShuffledRDD[38] at groupByKey at <console>:34

scala> val rdd4 = rdd3.groupByKey

rdd4: org.apache.spark.rdd.RDD[(String, Iterable[Int])] = ShuffledRDD[39] at groupByKey at <console>:33

scala> rdd4.collect

res12: Array[(String, Iterable[Int])] = Array((tom,CompactBuffer(1, 8, 2)), (jerry,CompactBuffer(2, 9)), (shuke,CompactBuffer(7)), (kitty,CompactBuffer(3)))

scala> rdd3.groupByKey.map(x=>(x._1,x._2.sum)).collect

res13: Array[(String, Int)] = Array((tom,11), (jerry,11), (shuke,7), (kitty,3))
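
The per-key sum above (groupByKey followed by map) can also be written with reduceByKey, which combines values inside each partition before the shuffle. A minimal sketch comparing the two, assuming a local master; the object name SumByKeyDemo is hypothetical, and the results are compared as maps because the order of keys after a shuffle is not guaranteed:

```scala
import org.apache.spark.{SparkConf, SparkContext}

object SumByKeyDemo {
  // Returns the per-key sums computed via groupByKey and via reduceByKey.
  def run(): (Map[String, Int], Map[String, Int]) = {
    val conf = new SparkConf().setAppName("sum-by-key-demo").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      // Same pairs as the union of rdd1 and rdd2 in the transcript above.
      val pairs = sc.parallelize(List(
        ("tom", 1), ("jerry", 2), ("kitty", 3),
        ("jerry", 9), ("tom", 8), ("shuke", 7), ("tom", 2)))

      // Variant 1: group all values per key, then sum each group.
      val viaGroup = pairs.groupByKey()
        .map { case (name, values) => (name, values.sum) }
        .collect().toMap

      // Variant 2: sum pairwise; partial sums are built before the shuffle.
      val viaReduce = pairs.reduceByKey(_ + _).collect().toMap

      (viaGroup, viaReduce)
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = println(run())
}
```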

2.7 cogroup

scala> val rdd1 = sc.parallelize(List(("tom", 1), ("tom", 2), ("jerry", 3), ("kitty", 2)))

rdd1: org.apache.spark.rdd.RDD[(String, Int)] = ParallelCollectionRDD[42] at parallelize at <console>:27

scala> val rdd2 = sc.parallelize(List(("jerry", 2), ("tom", 1), ("shuke", 2)))

rdd2: org.apache.spark.rdd.RDD[(String, Int)] = ParallelCollectionRDD[43] at parallelize at <console>:27

scala> val rdd3 = rdd1.cogroup(rdd2)

rdd3: org.apache.spark.rdd.RDD[(String, (Iterable[Int], Iterable[Int]))] = MapPartitionsRDD[45] at cogroup at <console>:31

scala> rdd3.collect

res14: Array[(String, (Iterable[Int], Iterable[Int]))] = Array((tom,(CompactBuffer(1, 2),CompactBuffer(1))), (jerry,(CompactBuffer(3),CompactBuffer(2))), (shuke,(CompactBuffer(),CompactBuffer(2))), (kitty,(CompactBuffer(2),CompactBuffer())))

scala> val rdd4 = rdd3.map(t=>(t._1, t._2._1.sum + t._2._2.sum))

rdd4: org.apache.spark.rdd.RDD[(String, Int)] = MapPartitionsRDD[46] at map at <console>:33

scala> rdd4.collect

res15: Array[(String, Int)] = Array((tom,4), (jerry,5), (shuke,2), (kitty,2))

2.8 cartesian (Cartesian product)

scala> val rdd1 = sc.parallelize(List("tom", "jerry"))

rdd1: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[47] at parallelize at <console>:27

scala> val rdd2 = sc.parallelize(List("tom", "kitty", "shuke"))

rdd2: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[48] at parallelize at <console>:27

scala> val rdd3 = rdd1.cartesian(rdd2)

rdd3: org.apache.spark.rdd.RDD[(String, String)] = CartesianRDD[49] at cartesian at <console>:31

scala> rdd3.collect

res16: Array[(String, String)] = Array((tom,tom), (tom,kitty), (tom,shuke), (jerry,tom), (jerry,kitty), (jerry,shuke))

2.9 Common Actions

scala> val rdd1 = sc.parallelize(List(1,2,3,4,5), 2)

rdd1: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[50] at parallelize at <console>:27

scala> rdd1.collect

res17: Array[Int] = Array(1, 2, 3, 4, 5)

scala> val rdd2 = rdd1.reduce(_+_)

rdd2: Int = 15

scala> rdd1.top(2)

res18: Array[Int] = Array(5, 4)

scala> rdd1.take(2)

res19: Array[Int] = Array(1, 2)

scala> rdd1.first

res20: Int = 1

scala> rdd1.takeOrdered(3)

res21: Array[Int] = Array(1, 2, 3)
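
The calls above differ in how they order elements: take returns the first elements in partition order, takeOrdered returns the smallest elements, and top returns the largest. A short sketch of that relationship, assuming a local master; the object name ActionsDemo is hypothetical:

```scala
import org.apache.spark.{SparkConf, SparkContext}

object ActionsDemo {
  // Returns (sum, top 2, takeOrdered 2 with reversed ordering, takeOrdered 3).
  def run(): (Int, List[Int], List[Int], List[Int]) = {
    val conf = new SparkConf().setAppName("actions-demo").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val nums = sc.parallelize(List(1, 2, 3, 4, 5), 2)
      // reduce folds all elements with an associative function.
      val sum = nums.reduce(_ + _)
      // top returns the largest elements in descending order.
      val largest = nums.top(2).toList
      // takeOrdered with a reversed ordering yields the same elements as top.
      val largestViaTakeOrdered = nums.takeOrdered(2)(Ordering[Int].reverse).toList
      // takeOrdered with the default ordering returns the smallest elements.
      val smallest = nums.takeOrdered(3).toList
      (sum, largest, largestViaTakeOrdered, smallest)
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = println(run())
}
```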

3 New Maven Project

3.1 Maven configuration

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>cn.tzb.com</groupId>
  <artifactId>hellospark</artifactId>
  <version>1.0-SNAPSHOT</version>
  <properties>
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
    <encoding>UTF-8</encoding>
    <scala.version>2.10.6</scala.version>
    <spark.version>1.6.3</spark.version>
    <hadoop.version>2.6.4</hadoop.version>
  </properties>
  <dependencies>
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.10</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
  </dependencies>
  <build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
      <plugin>
        <groupId>net.alchim31.maven</groupId>
        <artifactId>scala-maven-plugin</artifactId>
        <version>3.2.2</version>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
              <goal>testCompile</goal>
            </goals>
            <configuration>
              <args>
                <arg>-make:transitive</arg>
                <arg>-dependencyfile</arg>
                <arg>${project.build.directory}/.scala_dependencies</arg>
              </args>
            </configuration>
          </execution>
        </executions>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-shade-plugin</artifactId>
        <version>2.4.3</version>
        <executions>
          <execution>
            <phase>package</phase>
            <goals>
              <goal>shade</goal>
            </goals>
            <configuration>
              <filters>
                <filter>
                  <artifact>*:*</artifact>
                  <excludes>
                    <exclude>META-INF/*.SF</exclude>
                    <exclude>META-INF/*.DSA</exclude>
                    <exclude>META-INF/*.RSA</exclude>
                  </excludes>
                </filter>
              </filters>
              <transformers>
                <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                  <mainClass>demo.WordCount</mainClass>
                </transformer>
              </transformers>
            </configuration>
          </execution>
        </executions>
      </plugin>
    </plugins>
  </build>
</project>

3.2 WordCount.scala

package demo

import org.apache.spark.{SparkConf, SparkContext}

object WordCount {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("wc")
    // SparkContext is the entry point to the Spark cluster
    val sc = new SparkContext(conf)
    // args(0) is the input path, args(1) is the output path
    sc.textFile(args(0))
      .flatMap(_.split(" "))
      .map((_, 1))
      .reduceByKey(_ + _)
      .sortBy(_._2, false)
      .saveAsTextFile(args(1))
    sc.stop()
  }
}
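
Before packaging, the same pipeline can be checked locally. The sketch below is an illustration rather than part of the original project: it assumes a local master, reads from an in-memory collection instead of args(0), and collects the result instead of saving it. The object name WordCountLocalCheck is hypothetical.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object WordCountLocalCheck {
  // Runs the WordCount pipeline on in-memory lines and returns the
  // (word, count) pairs sorted by count in descending order.
  def run(lines: Seq[String]): List[(String, Int)] = {
    val conf = new SparkConf().setAppName("wc-local-check").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      sc.parallelize(lines)
        .flatMap(_.split(" "))   // split each line into words
        .map((_, 1))             // pair each word with a count of 1
        .reduceByKey(_ + _)      // sum the counts per word
        .sortBy(_._2, false)     // sort by count, descending
        .collect()
        .toList
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit =
    run(Seq("hello tom", "hello jerry", "hello tom", "hello")).foreach(println)
}
```

The sample input is chosen so that every word has a distinct count, which makes the sorted order unambiguous.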

3.3 Packaging

3.4 Running

Upload the jar package to the cluster

Start HDFS

start-dfs.sh

Start cluster

[hadoop@node1 ~]$ cd /home/hadoop/apps/spark-1.6.3-bin-hadoop2.6/

[hadoop@node1 spark-1.6.3-bin-hadoop2.6]$ sbin/start-all.sh

Submit the job

[hadoop@node1 spark-1.6.3-bin-hadoop2.6]$ bin/spark-submit --master spark://node1:7077 --class demo.WordCount --executor-memory 512m --total-executor-cores 2 /home/hadoop/hellospark-1.0-SNAPSHOT.jar hdfs://node1:9000/wc hdfs://node1:9000/wcout

Spark master web UI: http://node1:8080/

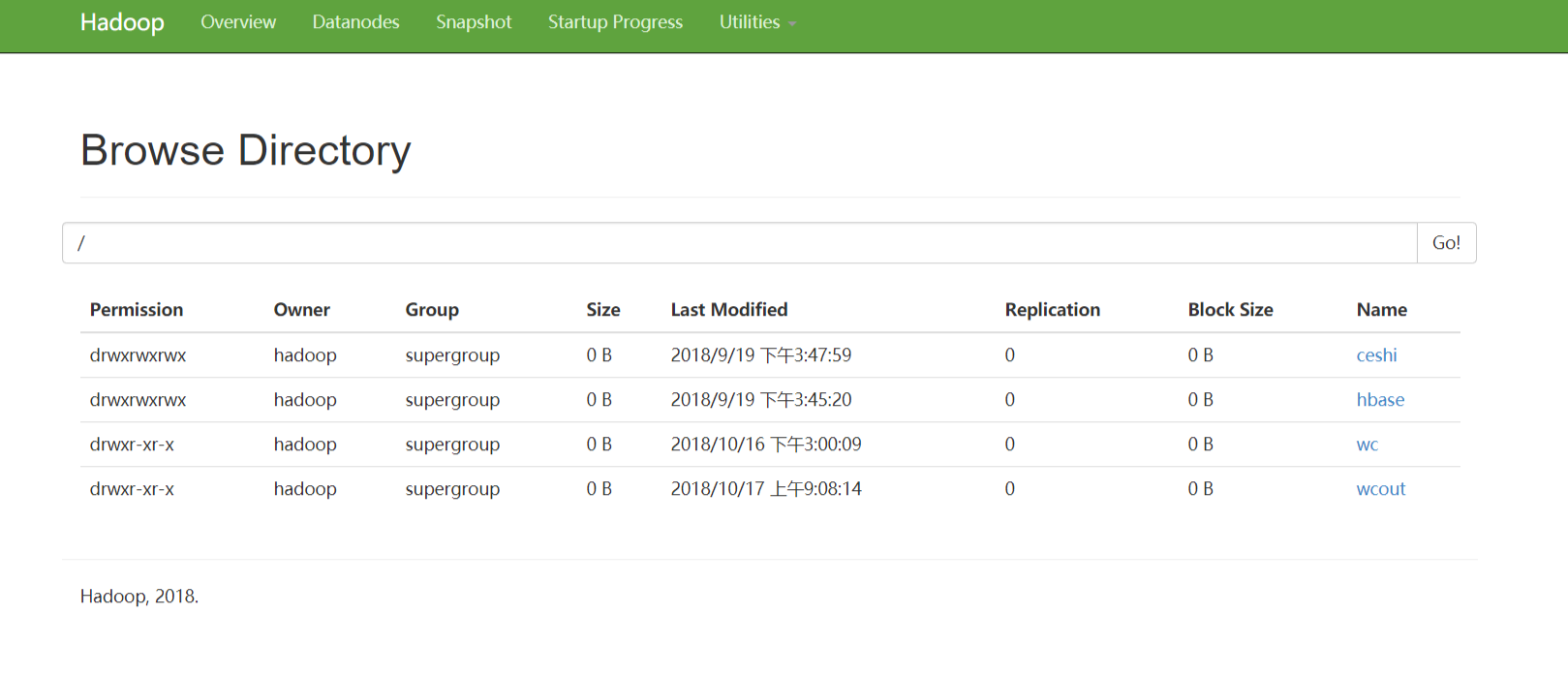

HDFS NameNode web UI: http://node1:50070

[hadoop@node1 ~]$ hdfs dfs -ls /wcout

Found 4 items

-rw-r--r-- 3 hadoop supergroup 0 2018-10-17 09:08 /wcout/_SUCCESS

-rw-r--r-- 3 hadoop supergroup 11 2018-10-17 09:08 /wcout/part-00000

-rw-r--r-- 3 hadoop supergroup 8 2018-10-17 09:08 /wcout/part-00001

-rw-r--r-- 3 hadoop supergroup 20 2018-10-17 09:08 /wcout/part-00002

[hadoop@node1 ~]$ hdfs dfs -cat /wcout/part-*

(hello,12)

(tom,6)

(henny,3)

(jerry,3)