The source of this article is: http://blog.csdn.net/bluishglc/article/details/50945032 Reproduction in any form is strictly prohibited, otherwise CSDN will be entrusted to the official protection of rights and interests!

Spark's official documentation repeatedly emphasizes operations that will work on RDD, whether they are a function or a snippet of code, they are "closures", which Spark distributes to various worker nodes for execution, which involves a neglected issue: the "serialization" of closures.

Obviously, closures are stateful, which mainly refers to the free variables involved and other variables that free variables depend on, so Spark needs to retrieve all the variables involved in closures (including those passing dependent variables) correctly before passing a simple function or a piece of code (that is, closures) to operations like RDD.map. These variables are serialized before they are passed to the worker node and deserialized for execution. If any of the variables involved do not support serialization or specify how to serialize yourself, you will encounter such errors:

org.apache.spark.SparkException: Task not serializable

- 1

In the following example, we continually receive json messages from kafka and parse strings into corresponding entities in spark-streaming:

object App { private val config = ConfigFactory.load("my-streaming.conf") case class Person (firstName: String,lastName: String) def main(args: Array[String]) { val zkQuorum = config.getString("kafka.zkQuorum") val myTopic = config.getString("kafka.myTopic") val myGroup = config.getString("kafka.myGroup") val conf = new SparkConf().setAppName("my-streaming") val ssc = new StreamingContext(conf, Seconds(1)) val lines = KafkaUtils.createStream(ssc, zkQuorum, myGroup, Map(myTopic -> 1)) //this val is a part of closure, and it's not serializable! implicit val formats = DefaultFormats def parser(json: String) = parse(json).extract[Person].firstName lines.map(_._2).map(parser).print .... ssc.start() ssc.awaitTerminationOrTimeout(10000) ssc.stop() }}- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

When this code is executed, the following error will be reported:

org.apache.spark.SparkException: Task not serializableCaused by: java.io.NotSerializableException: org.json4s.DefaultFormats$

- 1

- 2

The crux of the problem is that closures cannot be serialized. In this case, the scope of the closure is: function parser and an implicit parameter on which it depends: formats, and the problem lies in the implicit parameter, which is of type DefaultFormats. This class does not provide a description of serialization and deserialization itself, so Spark cannot serialize formats and thus cannot push task to remote execution.

The implicit parameter formats are prepared for extract, and the list of its parameters is as follows:

org.json4s.ExtractableJsonAstNode#extract[A](implicit formats: Formats, mf: scala.reflect.Manifest[A]): A = ...- 1

After finding the root cause of the problem, we can solve it. In fact, we don't need to serialize formats at all. For us, it's stateless. So we can bypass serialization by declaring it as a globally static variable. So the way to change this is simply to migrate the declaration of implicit val formats = DefaultFormats from within the method to the field location of App Object.

object App { private val config = ConfigFactory.load("my-streaming.conf") case class Person (firstName: String,lastName: String) //As Object field, global, static, no need to serialize implicit val formats = DefaultFormats def main(args: Array[String]) { val zkQuorum = config.getString("kafka.zkQuorum") val myTopic = config.getString("kafka.myTopic") val myGroup = config.getString("kafka.myGroup") val conf = new SparkConf().setAppName("my-streaming") val ssc = new StreamingContext(conf, Seconds(1)) val lines = KafkaUtils.createStream(ssc, zkQuorum, myGroup, Map(myTopic -> 1)) def parser(json: String) = parse(json).extract[Person].firstName lines..map(_._2).map(parser).print .... ssc.start() ssc.awaitTerminationOrTimeout(10000) ssc.stop() }}- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

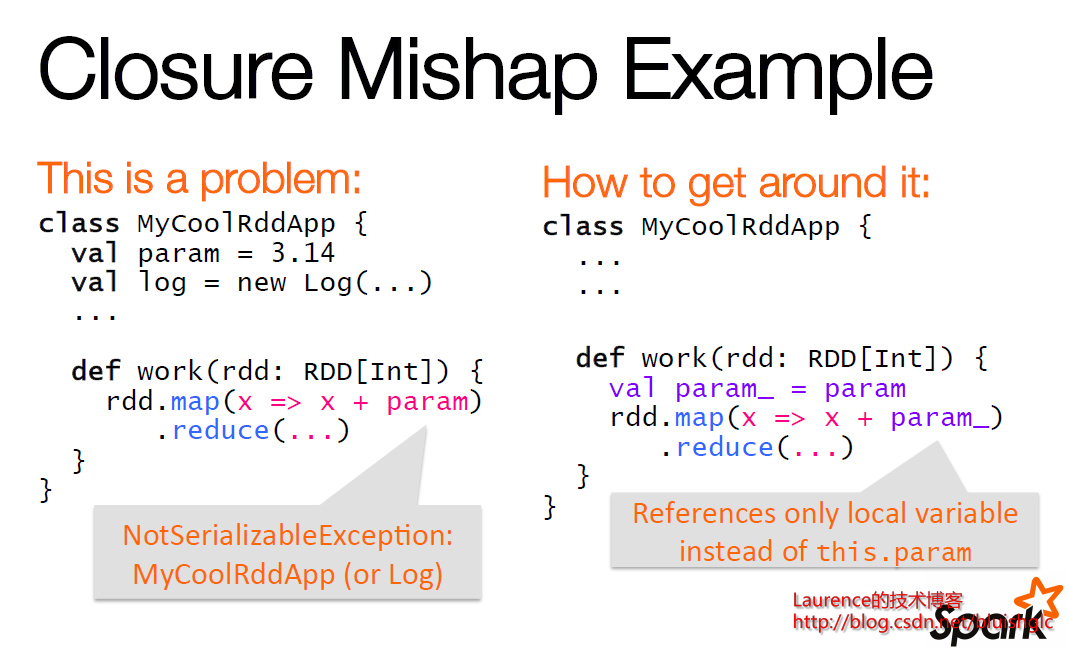

Here is another good example:

This example demonstrates a solution to a similar problem: "Copy a class member variable into a closure" or the whole object will need to be serialized!

Finally, let's summarize how to properly handle the serialization of Spark Task closures. First of all, you need to have a clear understanding of the boundaries of the closures Task involves, and try to control the scope of the closures and the free variables involved. A very cautious point is: try not to directly refer to the member variables and functions of a class in the closure, which will lead to the serialization of the entire class instance. Such examples are also mentioned in the Spark document, as follows:

class MyClass { def func1(s: String): String = { ... } def doStuff(rdd: RDD[String]): RDD[String] = { rdd.map(func1) }}- 1

- 2

- 3

- 4

Then, a good way to organize code is to encapsulate as many complex operations as possible into a single global function body, except for those very short functions: global static methods or function objects.

If you really need an instance of a class to participate in the calculation process, you need to do a good job of serialization.