Does 51CTO really have no table-of-contents feature? Good or bad?

========

Feel free to reach me on QQ with any questions ^-^

1176738641

========

Preparation

Create folders

# Create five folders in the home directory
mkdir ~/app        # installed applications
mkdir ~/software   # application installation packages
mkdir ~/data       # test data
mkdir ~/lib        # jar packages
mkdir ~/source     # source code

Download the required software (these are the versions used here)

- apache-maven-3.6.1-bin.tar.gz

- hadoop-2.6.0-cdh5.14.0.tar.gz

- jdk-8u131-linux-x64.tar.gz

- scala-2.11.8.tgz

Install JDK 8

Uninstall any existing JDK

rpm -qa | grep java
# If the installed version is lower than 1.7, uninstall the JDK packages listed above:
rpm -e package1 package2
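Not sure which version is installed? Here is a small sketch that pulls the major version out of `java -version` output, so you can decide whether the old JDK has to go. The helper name `java_major_version` is made up for this post. It assumes the usual `version "x.y.z"` line that Oracle and OpenJDK builds print.

```shell
# Hypothetical helper: read `java -version` output on stdin and print the
# major version (1.7.0_80 -> 7, 1.8.0_131 -> 8, 11.0.2 -> 11).
java_major_version() {
  # Grab the first quoted version string, e.g. 1.8.0_131
  ver=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case "$ver" in
    1.*) echo "$ver" | cut -d. -f2 ;;  # legacy 1.x numbering
    *)   echo "$ver" | cut -d. -f1 ;;  # Java 9+ numbering
  esac
}

# java -version writes to stderr, hence 2>&1:
#   major=$(java -version 2>&1 | java_major_version)
#   [ "$major" -lt 7 ] && echo "older than 1.7, remove it with rpm -e"
```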

Unpack the JDK into ~/app

tar -zxf jdk-8u131-linux-x64.tar.gz -C ~/app/
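JAVA_HOME (and later MAVEN_HOME and SCALA_HOME) has to point at the folder that comes out of the archive. If you're not sure what that folder is called, this sketch reads its name from the archive listing without unpacking anything. `tar_top_dir` is a hypothetical helper, and it assumes the archive has a single top-level folder, as the JDK tarball does.

```shell
# Hypothetical helper: print the top-level directory of a .tar.gz archive.
# Lists the archive contents and keeps the first path component of the first entry.
tar_top_dir() {
  tar -tzf "$1" | head -n 1 | cut -d/ -f1
}

# tar_top_dir jdk-8u131-linux-x64.tar.gz   # e.g. jdk1.8.0_131
```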

Verify that JDK 8 was installed successfully

~/app/jdk1.8.0_131/bin/java -version
java version "1.8.0_131"
Java(TM) SE Runtime Environment (build 1.8.0_131-b11)
Java HotSpot(TM) 64-Bit Server VM (build 25.131-b11, mixed mode)

The version information prints normally, which means the installation succeeded

Configure environment variables

Remember: >> appends, > overwrites! Make sure you don't mix them up!

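echo "####JAVA_HOME####" >> ~/.bash_profile
echo 'export JAVA_HOME=/home/max/app/jdk1.8.0_131' >> ~/.bash_profile
# Single quotes keep $JAVA_HOME and $PATH literal, so they are expanded when the profile is loaded, not now
echo 'export PATH=$JAVA_HOME/bin:$PATH' >> ~/.bash_profile
# Refresh environment variables
source ~/.bash_profile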
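If you run the echo lines twice, the exports get appended twice. Below is a minimal sketch of an "append only once" helper you could use instead of the bare `>>` lines. `append_once` is a made-up name, not a system command, and it relies only on grep and printf.

```shell
# Hypothetical helper: append LINE to FILE unless FILE already contains
# exactly that line, so re-running the setup doesn't pile up duplicates.
append_once() {
  file=$1
  line=$2
  touch "$file"
  # -q quiet, -x whole-line match, -F fixed string (no regex)
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Single quotes keep $JAVA_HOME and $PATH literal until the profile is sourced:
# append_once ~/.bash_profile 'export JAVA_HOME=/home/max/app/jdk1.8.0_131'
# append_once ~/.bash_profile 'export PATH=$JAVA_HOME/bin:$PATH'
```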

At this point, `java -version` works from any directory

Install Maven

Unpack into ~/app/

tar -zxvf apache-maven-3.6.1-bin.tar.gz -C ~/app

Verify that Maven was installed successfully

~/app/apache-maven-3.6.1/bin/mvn -v
Apache Maven 3.6.1 (d66c9c0b3152b2e69ee9bac180bb8fcc8e6af555; 2019-04-04T15:00:29-04:00)
Maven home: /home/max/app/apache-maven-3.6.1
Java version: 1.8.0_131, vendor: Oracle Corporation, runtime: /home/max/app/jdk1.8.0_131/jre
Default locale: en_US, platform encoding: UTF-8
OS name: "linux", version: "2.6.32-358.el6.x86_64", arch: "amd64", family: "unix"

The version information is displayed, which means the installation succeeded

Add environment variables

Remember: >> appends, > overwrites! Make sure you don't mix them up!

echo "####MAVEN_HOME####" >> ~/.bash_profile
echo 'export MAVEN_HOME=/home/max/app/apache-maven-3.6.1/' >> ~/.bash_profile
echo 'export PATH=$MAVEN_HOME/bin:$PATH' >> ~/.bash_profile
# Refresh environment variables
source ~/.bash_profile

At this point, `mvn -v` works from any directory
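To confirm in one go that everything installed so far is reachable through PATH, here is a small sketch built on the POSIX `command -v` builtin. `check_tools` is a hypothetical name.

```shell
# Hypothetical helper: report which commands are (not) reachable through PATH.
# Returns 0 if all are found, 1 if any is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "ok:      $tool -> $(command -v "$tool")"
    else
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# After `source ~/.bash_profile`:
# check_tools java mvn
```

Run it again after the Scala and Git steps below with `check_tools java mvn scala git`.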

Configure the local repository directory && the remote repository (mirror) address

# Create the local repository folder
mkdir ~/maven_repo
# Edit settings.xml
vim $MAVEN_HOME/conf/settings.xml

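Mind the tags! settings.xml already contains a <mirrors> element, so put the <mirror> inside it rather than adding a second one that conflicts with the existing tags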

<!-- localRepository

| The path to the local repository maven will use to store artifacts.

|

| Default: ${user.home}/.m2/repository

<localRepository>/path/to/local/repo</localRepository>

-->

<localRepository>/home/max/maven_repo</localRepository>

<mirrors>

<mirror>

<id>nexus-aliyun</id>

<mirrorOf>*,!cloudera</mirrorOf>

<name>Nexus aliyun</name>

<url>http://maven.aliyun.com/nexus/content/groups/public</url>

</mirror>

</mirrors>

Install Scala

Unpack into ~/app/

tar -zxf scala-2.11.8.tgz -C ~/app/

Verify that Scala was installed successfully

~/app/scala-2.11.8/bin/scala
Welcome to Scala 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_131).
Type in expressions for evaluation. Or try :help.

scala>

Installation successful (type :quit to leave the REPL)

Add environment variables

echo "####SCALA_HOME####" >> ~/.bash_profile
echo 'export SCALA_HOME=/home/max/app/scala-2.11.8' >> ~/.bash_profile
echo 'export PATH=$SCALA_HOME/bin:$PATH' >> ~/.bash_profile
# Refresh environment variables
source ~/.bash_profile

At this point, `scala` works from any directory

Install Git

The following assumes CentOS (yum) and a user with sudo rights

sudo yum install git   # installs automatically; answer y at the prompts
# Output:
Installed:
  git.x86_64 0:1.7.1-9.el6_9
Dependency Installed:
  perl-Error.noarch 1:0.17015-4.el6   perl-Git.noarch 0:1.7.1-9.el6_9
Dependency Updated:
  openssl.x86_64 0:1.0.1e-57.el6
Complete!
# Installation successful

The preparation is finally done!!!!

==========================Dog-tired dividing line==================

In fact, the long compilation process is only just beginning

Download & Compile Spark Source

Time to bring out the big gun! ===> See the official documentation (Building Spark)

Download & unpack the Spark 2.4.2 source

cd ~/source
wget https://archive.apache.org/dist/spark/spark-2.4.2/spark-2.4.2.tgz   # can be painfully slow at times
[max@hadoop000 source]$ ll
total 15788
-rw-rw-r--. 1 max max 16165557 Apr 28 12:27 spark-2.4.2.tgz
[max@hadoop000 source]$ tar -zxf spark-2.4.2.tgz
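The download is slow and can break off, so it's worth checking the tarball before unpacking it. This is a sketch that assumes a `spark-2.4.2.tgz.sha512` file is published next to the tarball in the same archive directory, so check the listing first. Some Apache projects write that digest in `gpg --print-md` style (`name: HEX HEX ...`, upper-case, possibly wrapped), so the hypothetical `verify_sha512` helper normalizes it before comparing it with `sha512sum`.

```shell
# Hypothetical helper: compare a file's SHA-512 with a published digest.
# Accepts "name: HEX HEX ..." (gpg --print-md style, any case, wrapped),
# "hex  name" (sha512sum style) or a bare hex string.
verify_sha512() {
  file=$1
  expected=$2
  case "$expected" in
    *:*) expected=${expected#*:} ;;                                   # drop "name:" prefix
    *)   expected=$(printf '%s\n' "$expected" | awk '{print $1; exit}') ;;  # keep first field
  esac
  # Remove all whitespace and lower-case the hex digits
  expected=$(printf '%s' "$expected" | tr -d '[:space:]' | tr 'A-F' 'a-f')
  actual=$(sha512sum < "$file" | cut -d' ' -f1)
  [ "$actual" = "$expected" ]
}

# verify_sha512 spark-2.4.2.tgz "$(cat spark-2.4.2.tgz.sha512)" && echo "checksum OK"
```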

About Maven

Instead of calling the mvn command directly, we use the make-distribution.sh script, but it needs a small modification first

#Under the spark-2.4.2 folder

vim ./dev/make-distribution.sh

#Comment out the lines below and hard-code the version numbers instead; this skips the slow mvn help:evaluate calls and shortens the build

#VERSION=$("$MVN" help:evaluate -Dexpression=project.version $@ 2>/dev/null\

# | grep -v "INFO"\

# | grep -v "WARNING"\

# | tail -n 1)

#SCALA_VERSION=$("$MVN" help:evaluate -Dexpression=scala.binary.version $@ 2>/dev/null\

# | grep -v "INFO"\

# | grep -v "WARNING"\

# | tail -n 1)

#SPARK_HADOOP_VERSION=$("$MVN" help:evaluate -Dexpression=hadoop.version $@ 2>/dev/null\

# | grep -v "INFO"\

# | grep -v "WARNING"\

# | tail -n 1)

#SPARK_HIVE=$("$MVN" help:evaluate -Dexpression=project.activeProfiles -pl sql/hive $@ 2>/dev/null\

# | grep -v "INFO"\

# | grep -v "WARNING"\

# | fgrep --count "<id>hive</id>";\

# # Reset exit status to 0, otherwise the script stops here if the last grep finds nothing\

# # because we use "set -o pipefail"

# echo -n)

##Add these variables instead, making sure the versions match your target production environment

VERSION=2.4.2

SCALA_VERSION=2.11

SPARK_HADOOP_VERSION=2.6.0-cdh5.14.0

SPARK_HIVE=1

Modify pom.xml

By default, Maven only resolves the Apache releases of the Hadoop dependencies. Our Hadoop version is hadoop-2.6.0-cdh5.14.0, so we need to add the Cloudera repository to the pom file

#Under the spark-2.4.2 folder
vim pom.xml

<repositories>

<!--<repositories>

This should be at top, it makes maven try the central repo first and then others

and hence faster dep resolution

<repository>

<id>central</id>

<name>Maven Repository</name>

<url>https://repo.maven.apache.org/maven2</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

-->

<repository>

<id>central</id>

<url>http://maven.aliyun.com/nexus/content/groups/public//</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>true</enabled>

<updatePolicy>always</updatePolicy>

<checksumPolicy>fail</checksumPolicy>

</snapshots>

</repository>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

Start compiling

./dev/make-distribution.sh \
  --name hadoop-2.6.0-cdh5.14.0 \
  --tgz \
  -Phadoop-2.6 \
  -Dhadoop.version=2.6.0-cdh5.14.0 \
  -Phive -Phive-thriftserver \
  -Pyarn \
  -Pkubernetes

The first build took me about 1 hour (with the Aliyun mirror)

A rebuild takes about 10 minutes

Note: if the build fails, you must learn to read the error log!
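One way to make the error log easy to read afterwards is to keep the whole build output in a file and, when the build fails, print the first `[ERROR]` lines Maven wrote. This is a sketch; `run_logged` is a hypothetical helper and the 20-line cut-off is arbitrary.

```shell
# Hypothetical wrapper: run a command with all output saved to LOG.
# On failure, print the first [ERROR] lines, which usually name the
# module or dependency that broke the build, and return the exit code.
run_logged() {
  log=$1
  shift
  "$@" > "$log" 2>&1
  status=$?
  if [ "$status" -ne 0 ]; then
    echo "Build failed (exit $status), first errors in $log:" >&2
    grep -F '[ERROR]' "$log" | head -n 20 >&2
  fi
  return "$status"
}

# Follow progress from a second terminal with: tail -f build.log
# run_logged build.log ./dev/make-distribution.sh --name hadoop-2.6.0-cdh5.14.0 --tgz -Phadoop-2.6 -Dhadoop.version=2.6.0-cdh5.14.0 -Phive -Phive-thriftserver -Pyarn -Pkubernetes
```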

##Compilation complete

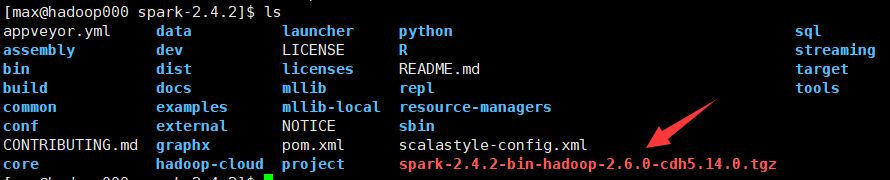

#Last part of the log after a successful build
+ mkdir /home/max/source/spark-2.4.2/dist/conf
+ cp /home/max/source/spark-2.4.2/conf/docker.properties.template /home/max/source/spark-2.4.2/conf/fairscheduler.xml.template /home/max/source/spark-2.4.2/conf/log4j.properties.template /home/max/source/spark-2.4.2/conf/metrics.properties.template /home/max/source/spark-2.4.2/conf/slaves.template /home/max/source/spark-2.4.2/conf/spark-defaults.conf.template /home/max/source/spark-2.4.2/conf/spark-env.sh.template /home/max/source/spark-2.4.2/dist/conf
+ cp /home/max/source/spark-2.4.2/README.md /home/max/source/spark-2.4.2/dist
+ cp -r /home/max/source/spark-2.4.2/bin /home/max/source/spark-2.4.2/dist
+ cp -r /home/max/source/spark-2.4.2/python /home/max/source/spark-2.4.2/dist
+ '[' false == true ']'
+ cp -r /home/max/source/spark-2.4.2/sbin /home/max/source/spark-2.4.2/dist
+ '[' -d /home/max/source/spark-2.4.2/R/lib/SparkR ']'
+ '[' true == true ']'
+ TARDIR_NAME=spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0
+ TARDIR=/home/max/source/spark-2.4.2/spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0
+ rm -rf /home/max/source/spark-2.4.2/spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0
+ cp -r /home/max/source/spark-2.4.2/dist /home/max/source/spark-2.4.2/spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0
+ tar czf spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0.tgz -C /home/max/source/spark-2.4.2 spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0
+ rm -rf /home/max/source/spark-2.4.2/spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0

As the log shows, the compiled package spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0.tgz ends up in the root of the Spark source directory
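The build log (`TARDIR_NAME=spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0`) suggests the tarball name is built from the Spark version and the `--name` argument. Here is a tiny sketch of that naming rule, which is handy if you build several variants. `dist_tgz_name` is a made-up helper, not part of make-distribution.sh.

```shell
# Hypothetical helper mirroring the naming seen in the log:
# spark-<VERSION>-bin-<value passed to --name>.tgz
dist_tgz_name() {
  printf 'spark-%s-bin-%s.tgz\n' "$1" "$2"
}

# ls -lh ~/source/spark-2.4.2/"$(dist_tgz_name 2.4.2 hadoop-2.6.0-cdh5.14.0)"
```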

Decompress

[max@hadoop000 spark-2.4.2]$ tar -zxf spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0.tgz -C ~/app/
[max@hadoop000 spark-2.4.2]$ cd ~/app/
[max@hadoop000 app]$ ll
total 16
drwxrwxr-x. 6 max max 4096 Apr 28 17:02 apache-maven-3.6.1
drwxr-xr-x. 8 max max 4096 Mar 15 2017 jdk1.8.0_131
drwxrwxr-x. 6 max max 4096 Mar 4 2016 scala-2.11.8
drwxrwxr-x. 11 max max 4096 Apr 28 21:20 spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0
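Here is a quick sanity check on the unpacked package, as a sketch. The list of expected entries (launch scripts, sbin, jars, conf, RELEASE) is my assumption about a Spark 2.x distribution layout, and `check_dist` is a hypothetical helper.

```shell
# Hypothetical check: confirm the unpacked distribution has the pieces
# a Spark 2.x package is assumed to contain. Returns 1 if any is missing.
check_dist() {
  dir=$1
  ok=0
  for path in bin/spark-shell bin/spark-submit sbin jars conf RELEASE; do
    if [ ! -e "$dir/$path" ]; then
      echo "missing: $dir/$path" >&2
      ok=1
    fi
  done
  return "$ok"
}

# check_dist ~/app/spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0 && echo "layout looks complete"
# then: ~/app/spark-2.4.2-bin-hadoop-2.6.0-cdh5.14.0/bin/spark-submit --version
```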

Done!