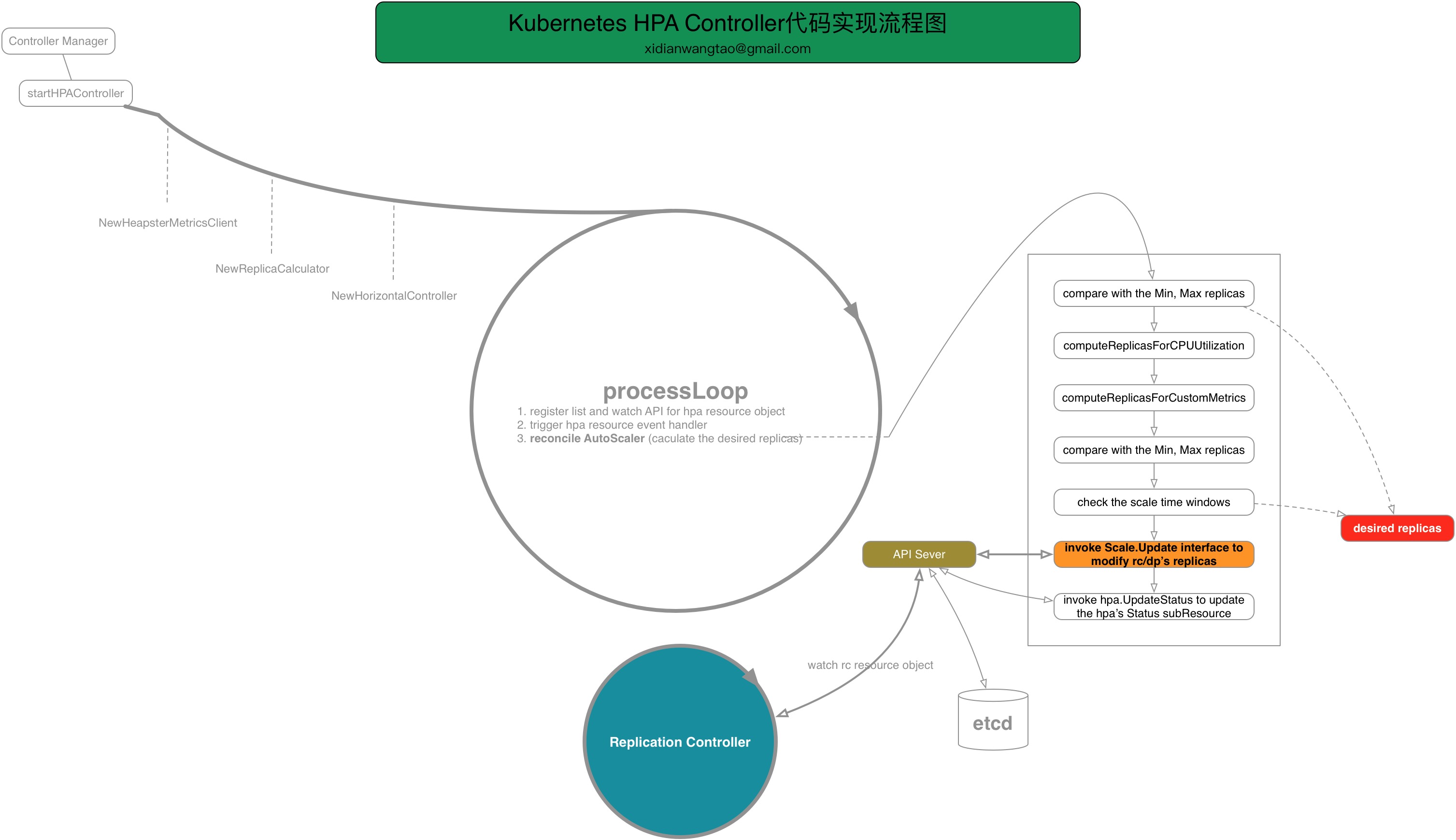

Author: xidianwangtao@gmail.com

Analysis of Source Directory Structure

The main code of the Horizontal Pod Autoscaler (hereinafter HPA) lives in the locations below. Only a few files are involved.

    cmd/kube-controller-manager/app/autoscaling.go    // HPA controller startup code

    /pkg/controller/podautoscaler
    .
    ├── BUILD
    ├── OWNERS
    ├── doc.go
    ├── horizontal.go    // Core podautoscaler code, including the code that creates and runs it
    ├── horizontal_test.go
    ├── metrics
    │   ├── BUILD
    │   ├── metrics_client.go
    │   ├── metrics_client_test.go
    │   ├── metrics_client_test.go.orig
    │   ├── metrics_client_test.go.rej
    │   └── utilization.go
    ├── replica_calculator.go    // Creates the ReplicaCalculator and computes replicas from CPU or custom metrics
    └── replica_calculator_test.go

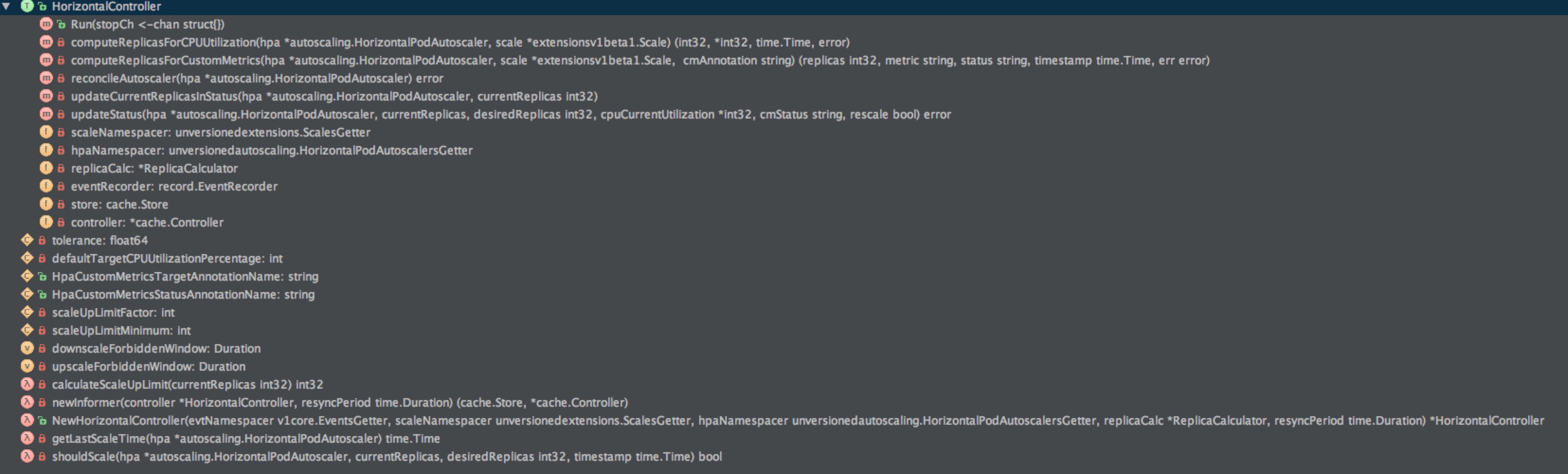

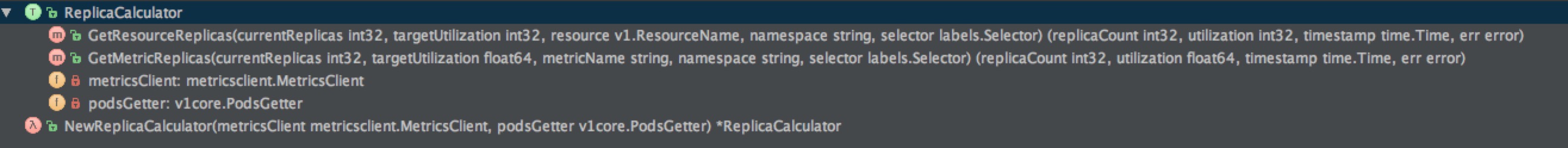

Among them, horizontal.go and replica_calculator.go are the core files, and their corresponding structures are as follows:

- horizontal.go

- replica_calculator.go

Source code analysis

HPA Controller, like other Controllers, is initialized and started when kube-controller-manager starts, as shown in the following code.

    cmd/kube-controller-manager/app/controllermanager.go:224

    func newControllerInitializers() map[string]InitFunc {
        controllers := map[string]InitFunc{}
        ...
        controllers["horizontalpodautoscaling"] = startHPAController
        ...
        return controllers
    }

When kube-controller-manager starts, it launches every registered controller. The HPA controller is launched through startHPAController.
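The registration pattern can be sketched in isolation. This is a minimal illustration, not the actual kube-controller-manager code: `ControllerContext` is reduced to a stop channel, and `startDemoController` and `startAll` are hypothetical names introduced only for this example.

```go
package main

import (
	"fmt"
	"sort"
)

// ControllerContext is a simplified stand-in for the real context type.
type ControllerContext struct {
	Stop <-chan struct{}
}

// InitFunc starts one controller and reports whether it was started.
type InitFunc func(ctx ControllerContext) (bool, error)

// startDemoController is a hypothetical initializer used for illustration.
func startDemoController(ctx ControllerContext) (bool, error) {
	return true, nil
}

// newControllerInitializers maps controller names to their start functions.
func newControllerInitializers() map[string]InitFunc {
	controllers := map[string]InitFunc{}
	controllers["horizontalpodautoscaling"] = startDemoController
	return controllers
}

// startAll (hypothetical) calls every initializer and returns the sorted
// names of the controllers that reported a successful start.
func startAll(ctx ControllerContext, inits map[string]InitFunc) ([]string, error) {
	started := []string{}
	for name, start := range inits {
		ok, err := start(ctx)
		if err != nil {
			return nil, fmt.Errorf("starting %s: %v", name, err)
		}
		if ok {
			started = append(started, name)
		}
	}
	sort.Strings(started)
	return started, nil
}

func main() {
	stop := make(chan struct{})
	defer close(stop)
	started, err := startAll(ControllerContext{Stop: stop}, newControllerInitializers())
	fmt.Println(started, err)
}
```

Keeping the initializers in a map keyed by name makes it easy to enable or skip individual controllers without touching the startup loop.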

    cmd/kube-controller-manager/app/autoscaling.go:29

    func startHPAController(ctx ControllerContext) (bool, error) {
        ...
        // The HPA controller requires Heapster to be deployed in the cluster;
        // Heapster provides the monitoring data used to compute replicas.
        metricsClient := metrics.NewHeapsterMetricsClient(
            hpaClient,
            metrics.DefaultHeapsterNamespace,
            metrics.DefaultHeapsterScheme,
            metrics.DefaultHeapsterService,
            metrics.DefaultHeapsterPort,
        )

        // Create the ReplicaCalculator, which is used later to compute desired replicas.
        replicaCalc := podautoscaler.NewReplicaCalculator(metricsClient, hpaClient.Core())

        // Create the HPA controller and execute its Run method in a goroutine.
        go podautoscaler.NewHorizontalController(
            hpaClient.Core(),
            hpaClient.Extensions(),
            hpaClient.Autoscaling(),
            replicaCalc,
            ctx.Options.HorizontalPodAutoscalerSyncPeriod.Duration,
        ).Run(ctx.Stop)

        return true, nil
    }

First, let's look at NewHorizontalController, which creates the HPA controller.

    pkg/controller/podautoscaler/horizontal.go:112

    func NewHorizontalController(evtNamespacer v1core.EventsGetter, scaleNamespacer unversionedextensions.ScalesGetter, hpaNamespacer unversionedautoscaling.HorizontalPodAutoscalersGetter, replicaCalc *ReplicaCalculator, resyncPeriod time.Duration) *HorizontalController {
        ...
        // Construct the HPA controller.
        controller := &HorizontalController{
            replicaCalc:     replicaCalc,
            eventRecorder:   recorder,
            scaleNamespacer: scaleNamespacer,
            hpaNamespacer:   hpaNamespacer,
        }

        // Create the informer: configure the ListWatch functions and the event
        // handlers that observe Add and Update events of HPA resources.
        // newInformer is the core entry point of the HPA code.
        store, frameworkController := newInformer(controller, resyncPeriod)
        controller.store = store
        controller.controller = frameworkController

        return controller
    }

It is necessary to look at the definition of HPA Controller struct:

    pkg/controller/podautoscaler/horizontal.go:59

    type HorizontalController struct {
        scaleNamespacer unversionedextensions.ScalesGetter
        hpaNamespacer   unversionedautoscaling.HorizontalPodAutoscalersGetter

        replicaCalc   *ReplicaCalculator
        eventRecorder record.EventRecorder

        // A store of HPA objects, populated by the controller.
        store cache.Store
        // Watches changes to all HPA objects.
        controller *cache.Controller
    }

- scaleNamespacer is a ScalesGetter; its Scales(namespace) method returns a ScaleInterface, which provides the Get and Update operations on the Scale subresource.

- hpaNamespacer is a HorizontalPodAutoscalersGetter; it gives access to the HorizontalPodAutoscalerInterface, which provides the Create, Update, UpdateStatus, Delete, Get, List, and Watch operations on HorizontalPodAutoscaler objects.

- replicaCalc calculates the desired replicas based on the monitoring data provided by Heapster.

      pkg/controller/podautoscaler/replica_calculator.go:31

      type ReplicaCalculator struct {
          metricsClient metricsclient.MetricsClient
          podsGetter    v1core.PodsGetter
      }

- store and controller: the controller watches HPA objects and writes their changes into the store cache.

Scale subresource is mentioned above. What is that? Well, we have to look at the definition of Scale.

    pkg/apis/extensions/v1beta1/types.go:56

    // represents a scaling request for a resource.
    type Scale struct {
        metav1.TypeMeta `json:",inline"`
        // Standard object metadata; More info: http://releases.k8s.io/HEAD/docs/devel/api-conventions.md#metadata.
        // +optional
        v1.ObjectMeta `json:"metadata,omitempty" protobuf:"bytes,1,opt,name=metadata"`

        // defines the behavior of the scale. More info: http://releases.k8s.io/HEAD/docs/devel/api-conventions.md#spec-and-status.
        // +optional
        Spec ScaleSpec `json:"spec,omitempty" protobuf:"bytes,2,opt,name=spec"`

        // current status of the scale. More info: http://releases.k8s.io/HEAD/docs/devel/api-conventions.md#spec-and-status. Read-only.
        // +optional
        Status ScaleStatus `json:"status,omitempty" protobuf:"bytes,3,opt,name=status"`
    }

    // describes the attributes of a scale subresource
    type ScaleSpec struct {
        // desired number of instances for the scaled object.
        Replicas int `json:"replicas,omitempty"`
    }

    // represents the current status of a scale subresource.
    type ScaleStatus struct {
        // actual number of observed instances of the scaled object.
        Replicas int `json:"replicas"`

        // label query over pods that should match the replicas count.
        Selector map[string]string `json:"selector,omitempty"`
    }

- Scale struct is the request data for a scale action.

- Spec defines desired replicas number.

- ScaleStatus defines the current replicas number.
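To make the split between Spec and Status concrete, here is a minimal sketch of how a caller reads the observed replica count from Status and writes a new target into Spec. The types are trimmed-down local copies (with int32 replicas for simplicity), and `fakeScales` is a hypothetical in-memory stand-in for the API server, not a real client.

```go
package main

import "fmt"

// Trimmed-down local copies of the Scale types, for illustration only.
type ScaleSpec struct{ Replicas int32 }
type ScaleStatus struct{ Replicas int32 }
type Scale struct {
	Name   string
	Spec   ScaleSpec
	Status ScaleStatus
}

// fakeScales is a hypothetical in-memory replacement for the API server.
type fakeScales struct{ byName map[string]*Scale }

// Get returns a copy of the stored Scale so callers cannot mutate the store.
func (f *fakeScales) Get(name string) (*Scale, error) {
	s, ok := f.byName[name]
	if !ok {
		return nil, fmt.Errorf("scale %q not found", name)
	}
	c := *s
	return &c, nil
}

// Update stores a copy of the given Scale; only Spec is meant to change.
func (f *fakeScales) Update(s *Scale) (*Scale, error) {
	if _, ok := f.byName[s.Name]; !ok {
		return nil, fmt.Errorf("scale %q not found", s.Name)
	}
	c := *s
	f.byName[s.Name] = &c
	return &c, nil
}

func main() {
	api := &fakeScales{byName: map[string]*Scale{
		"web": {Name: "web", Spec: ScaleSpec{Replicas: 3}, Status: ScaleStatus{Replicas: 3}},
	}}

	scale, err := api.Get("web")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("observed replicas:", scale.Status.Replicas)

	// A scaling request only changes the desired count in Spec.
	scale.Spec.Replicas = 5
	if _, err := api.Update(scale); err != nil {
		fmt.Println(err)
		return
	}
	updated, _ := api.Get("web")
	fmt.Println("desired replicas:", updated.Spec.Replicas)
}
```

Status stays untouched by the request; in a real cluster it catches up only after the owning controller has created or removed pods.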

Having looked at the structure of HorizontalController, let's turn to newInformer, which NewHorizontalController calls. As noted in the comments above, newInformer is the core entry point of the entire HPA code.

    pkg/controller/podautoscaler/horizontal.go:75

    func newInformer(controller *HorizontalController, resyncPeriod time.Duration) (cache.Store, *cache.Controller) {
        return cache.NewInformer(
            // Configure ListFunc and WatchFunc to periodically list and watch HPA resources.
            &cache.ListWatch{
                ListFunc: func(options v1.ListOptions) (runtime.Object, error) {
                    return controller.hpaNamespacer.HorizontalPodAutoscalers(v1.NamespaceAll).List(options)
                },
                WatchFunc: func(options v1.ListOptions) (watch.Interface, error) {
                    return controller.hpaNamespacer.HorizontalPodAutoscalers(v1.NamespaceAll).Watch(options)
                },
            },
            // The object type we expect to receive is HorizontalPodAutoscaler.
            &autoscaling.HorizontalPodAutoscaler{},
            // The period of the periodic re-list.
            resyncPeriod,
            // Configure the handlers (AddFunc, UpdateFunc) for HPA resource events.
            cache.ResourceEventHandlerFuncs{
                AddFunc: func(obj interface{}) {
                    hpa := obj.(*autoscaling.HorizontalPodAutoscaler)
                    hasCPUPolicy := hpa.Spec.TargetCPUUtilizationPercentage != nil
                    _, hasCustomMetricsPolicy := hpa.Annotations[HpaCustomMetricsTargetAnnotationName]
                    if !hasCPUPolicy && !hasCustomMetricsPolicy {
                        controller.eventRecorder.Event(hpa, v1.EventTypeNormal, "DefaultPolicy", "No scaling policy specified - will use default one. See documentation for details")
                    }
                    // Adjust the HPA data according to the monitoring data.
                    err := controller.reconcileAutoscaler(hpa)
                    if err != nil {
                        glog.Warningf("Failed to reconcile %s: %v", hpa.Name, err)
                    }
                },
                UpdateFunc: func(old, cur interface{}) {
                    hpa := cur.(*autoscaling.HorizontalPodAutoscaler)
                    // Adjust the HPA data according to the monitoring data.
                    err := controller.reconcileAutoscaler(hpa)
                    if err != nil {
                        glog.Warningf("Failed to reconcile %s: %v", hpa.Name, err)
                    }
                },
                // We are not interested in deletions.
            },
        )
    }

The code of newInformer is not long either. Simply put, it configures the ListWatch functions for HPA resources and the handler functions for their Add and Update events.

In both handlers, reconcileAutoscaler is ultimately called to bring the HPA data up to date.

In the code above, the ListFunc and WatchFunc of the HPA resource are wired to the List and Watch methods defined by HorizontalPodAutoscalerInterface.

So far we have not looked at the definition of the HorizontalPodAutoscaler struct itself, the type that HorizontalPodAutoscalerInterface operates on. Here it is:

    pkg/apis/autoscaling/v1/types.go:76

    // configuration of a horizontal pod autoscaler.
    type HorizontalPodAutoscaler struct {
        metav1.TypeMeta `json:",inline"`
        // Standard object metadata. More info: http://releases.k8s.io/HEAD/docs/devel/api-conventions.md#metadata
        // +optional
        v1.ObjectMeta `json:"metadata,omitempty" protobuf:"bytes,1,opt,name=metadata"`

        // behaviour of autoscaler. More info: http://releases.k8s.io/HEAD/docs/devel/api-conventions.md#spec-and-status.
        // +optional
        Spec HorizontalPodAutoscalerSpec `json:"spec,omitempty" protobuf:"bytes,2,opt,name=spec"`

        // current information about the autoscaler.
        // +optional
        Status HorizontalPodAutoscalerStatus `json:"status,omitempty" protobuf:"bytes,3,opt,name=status"`
    }

- Spec (HorizontalPodAutoscalerSpec) stores the description of the HPA: MinReplicas, MaxReplicas, and TargetCPUUtilizationPercentage, the target average CPU utilization across all pods covered by the HPA. Some related settings can be configured through kube-controller-manager flags.

      pkg/apis/autoscaling/v1/types.go:36

      // specification of a horizontal pod autoscaler.
      type HorizontalPodAutoscalerSpec struct {
          // reference to scaled resource; horizontal pod autoscaler will learn the current resource consumption
          // and will set the desired number of pods by using its Scale subresource.
          ScaleTargetRef CrossVersionObjectReference `json:"scaleTargetRef" protobuf:"bytes,1,opt,name=scaleTargetRef"`
          // lower limit for the number of pods that can be set by the autoscaler, default 1.
          // +optional
          MinReplicas *int32 `json:"minReplicas,omitempty" protobuf:"varint,2,opt,name=minReplicas"`
          // upper limit for the number of pods that can be set by the autoscaler; cannot be smaller than MinReplicas.
          MaxReplicas int32 `json:"maxReplicas" protobuf:"varint,3,opt,name=maxReplicas"`
          // target average CPU utilization (represented as a percentage of requested CPU) over all the pods;
          // if not specified the default autoscaling policy will be used.
          // +optional
          TargetCPUUtilizationPercentage *int32 `json:"targetCPUUtilizationPercentage,omitempty" protobuf:"varint,4,opt,name=targetCPUUtilizationPercentage"`
      }

- Status (HorizontalPodAutoscalerStatus) stores the current state of the HPA: ObservedGeneration, LastScaleTime (used to control the interval between two scaling operations), CurrentReplicas, DesiredReplicas, and CurrentCPUUtilizationPercentage, the current average CPU utilization across all pods covered by the HPA.

      pkg/apis/autoscaling/v1/types.go:52

      // current status of a horizontal pod autoscaler
      type HorizontalPodAutoscalerStatus struct {
          // most recent generation observed by this autoscaler.
          // +optional
          ObservedGeneration *int64 `json:"observedGeneration,omitempty" protobuf:"varint,1,opt,name=observedGeneration"`

          // last time the HorizontalPodAutoscaler scaled the number of pods;
          // used by the autoscaler to control how often the number of pods is changed.
          // +optional
          LastScaleTime *metav1.Time `json:"lastScaleTime,omitempty" protobuf:"bytes,2,opt,name=lastScaleTime"`

          // current number of replicas of pods managed by this autoscaler.
          CurrentReplicas int32 `json:"currentReplicas" protobuf:"varint,3,opt,name=currentReplicas"`

          // desired number of replicas of pods managed by this autoscaler.
          DesiredReplicas int32 `json:"desiredReplicas" protobuf:"varint,4,opt,name=desiredReplicas"`

          // current average CPU utilization over all pods, represented as a percentage of requested CPU,
          // e.g. 70 means that an average pod is using now 70% of its requested CPU.
          // +optional
          CurrentCPUUtilizationPercentage *int32 `json:"currentCPUUtilizationPercentage,omitempty" protobuf:"varint,5,opt,name=currentCPUUtilizationPercentage"`
      }

The code of newInformer shows that, whether the HPA resource event is an Add or an Update, reconcileAutoscaler is ultimately called to trigger the update of the HorizontalPodAutoscaler data.

    pkg/controller/podautoscaler/horizontal.go:272

    func (a *HorizontalController) reconcileAutoscaler(hpa *autoscaling.HorizontalPodAutoscaler) error {
        ...
        // Get the scale subresource of the target resource.
        scale, err := a.scaleNamespacer.Scales(hpa.Namespace).Get(hpa.Spec.ScaleTargetRef.Kind, hpa.Spec.ScaleTargetRef.Name)
        ...
        // Get the current number of replicas.
        currentReplicas := scale.Status.Replicas

        cpuDesiredReplicas := int32(0)
        cpuCurrentUtilization := new(int32)
        cpuTimestamp := time.Time{}

        cmDesiredReplicas := int32(0)
        cmMetric := ""
        cmStatus := ""
        cmTimestamp := time.Time{}

        desiredReplicas := int32(0)
        rescaleReason := ""
        timestamp := time.Now()

        rescale := true

        if scale.Spec.Replicas == 0 {
            // If the desired number of replicas is 0, no scaling is performed.
            // Autoscaling is disabled for this resource
            desiredReplicas = 0
            rescale = false
        } else if currentReplicas > hpa.Spec.MaxReplicas {
            // The number of replicas must not exceed the maximum configured in the HPA.
            rescaleReason = "Current number of replicas above Spec.MaxReplicas"
            desiredReplicas = hpa.Spec.MaxReplicas
        } else if hpa.Spec.MinReplicas != nil && currentReplicas < *hpa.Spec.MinReplicas {
            // The number of replicas must not be less than the configured minimum.
            rescaleReason = "Current number of replicas below Spec.MinReplicas"
            desiredReplicas = *hpa.Spec.MinReplicas
        } else if currentReplicas == 0 {
            // The minimum number of desired replicas is 1.
            rescaleReason = "Current number of replicas must be greater than 0"
            desiredReplicas = 1
        } else {
            // If the current number of replicas is between Min and Max, the desired number
            // is computed from CPU or custom metrics (if the corresponding annotation is set).
            // All basic scenarios covered, the state should be sane, lets use metrics.
            cmAnnotation, cmAnnotationFound := hpa.Annotations[HpaCustomMetricsTargetAnnotationName]

            if hpa.Spec.TargetCPUUtilizationPercentage != nil || !cmAnnotationFound {
                // Compute the desired number of replicas based on CPU utilization.
                cpuDesiredReplicas, cpuCurrentUtilization, cpuTimestamp, err = a.computeReplicasForCPUUtilization(hpa, scale)
                if err != nil {
                    // Update the current number of replicas in the HPA status.
                    a.updateCurrentReplicasInStatus(hpa, currentReplicas)
                    return fmt.Errorf("failed to compute desired number of replicas based on CPU utilization for %s: %v", reference, err)
                }
            }

            if cmAnnotationFound {
                // Compute the desired number of replicas based on custom metrics.
                cmDesiredReplicas, cmMetric, cmStatus, cmTimestamp, err = a.computeReplicasForCustomMetrics(hpa, scale, cmAnnotation)
                if err != nil {
                    // Update the current number of replicas in the HPA status.
                    a.updateCurrentReplicasInStatus(hpa, currentReplicas)
                    return fmt.Errorf("failed to compute desired number of replicas based on Custom Metrics for %s: %v", reference, err)
                }
            }

            // The larger of the CPU-based and custom-metrics-based results becomes the
            // final desired replicas, and it must stay within the range of min and max.
            rescaleMetric := ""
            if cpuDesiredReplicas > desiredReplicas {
                desiredReplicas = cpuDesiredReplicas
                timestamp = cpuTimestamp
                rescaleMetric = "CPU utilization"
            }
            if cmDesiredReplicas > desiredReplicas {
                desiredReplicas = cmDesiredReplicas
                timestamp = cmTimestamp
                rescaleMetric = cmMetric
            }
            if desiredReplicas > currentReplicas {
                rescaleReason = fmt.Sprintf("%s above target", rescaleMetric)
            }
            if desiredReplicas < currentReplicas {
                rescaleReason = "All metrics below target"
            }

            if hpa.Spec.MinReplicas != nil && desiredReplicas < *hpa.Spec.MinReplicas {
                desiredReplicas = *hpa.Spec.MinReplicas
            }

            // never scale down to 0, reserved for disabling autoscaling
            if desiredReplicas == 0 {
                desiredReplicas = 1
            }

            if desiredReplicas > hpa.Spec.MaxReplicas {
                desiredReplicas = hpa.Spec.MaxReplicas
            }

            // Do not upscale too much to prevent incorrect rapid increase of the number of master replicas caused by
            // bogus CPU usage report from heapster/kubelet (like in issue #32304).
            if desiredReplicas > calculateScaleUpLimit(currentReplicas) {
                desiredReplicas = calculateScaleUpLimit(currentReplicas)
            }

            // Decide whether a rescale is needed now, based on the comparison of currentReplicas
            // and desiredReplicas and on whether enough time has passed since the last scale.
            rescale = shouldScale(hpa, currentReplicas, desiredReplicas, timestamp)
        }

        if rescale {
            scale.Spec.Replicas = desiredReplicas
            // Call Update on ScaleInterface so that the API server updates the scale subresource
            // of the target resource. This ultimately changes the replicas of the rc or deployment,
            // and the rc or deployment controller then grows or shrinks the number of pods to match.
            _, err = a.scaleNamespacer.Scales(hpa.Namespace).Update(hpa.Spec.ScaleTargetRef.Kind, scale)
            if err != nil {
                a.eventRecorder.Eventf(hpa, v1.EventTypeWarning, "FailedRescale", "New size: %d; reason: %s; error: %v", desiredReplicas, rescaleReason, err.Error())
                return fmt.Errorf("failed to rescale %s: %v", reference, err)
            }
            a.eventRecorder.Eventf(hpa, v1.EventTypeNormal, "SuccessfulRescale", "New size: %d; reason: %s", desiredReplicas, rescaleReason)
            glog.Infof("Successfull rescale of %s, old size: %d, new size: %d, reason: %s",
                hpa.Name, currentReplicas, desiredReplicas, rescaleReason)
        } else {
            desiredReplicas = currentReplicas
        }

        // Update the status data of the HPA resource.
        return a.updateStatus(hpa, currentReplicas, desiredReplicas, cpuCurrentUtilization, cmStatus, rescale)
    }

The reconcileAutoscaler code above is very important, and I have put my explanations into the corresponding comments. computeReplicasForCPUUtilization and computeReplicasForCustomMetrics deserve a separate mention, because these two methods embody the HPA algorithm. The algorithm itself is implemented by pkg/controller/podautoscaler/replica_calculator.go:45#GetResourceReplicas and pkg/controller/podautoscaler/replica_calculator.go:153#GetMetricReplicas:

- pkg/controller/podautoscaler/replica_calculator.go:45#GetResourceReplicas is responsible for calculating desired replicas number based on the cpu utilization data provided by heapster.

- pkg/controller/podautoscaler/replica_calculator.go:153#GetMetricReplicas is responsible for calculating desired replicas number based on custom raw metric data provided by heapster.
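The source does not show the bodies of these two methods. As a rough orientation only, the proportional rule commonly documented for HPA (scale the replica count by the ratio of current to target utilization, and do nothing while that ratio stays within a tolerance) can be sketched as below. This is an assumption about the general shape of the calculation, not a transcription of GetResourceReplicas; the function name `desiredFromUtilization` and the `tolerance` parameter are hypothetical.

```go
package main

import (
	"fmt"
	"math"
)

// desiredFromUtilization is a hypothetical helper illustrating the
// proportional rule: desired = ceil(current * currentUtil / targetUtil).
// targetUtil must be greater than 0. No change is proposed while the
// usage ratio is within `tolerance` of 1.0.
func desiredFromUtilization(currentReplicas, currentUtil, targetUtil int32, tolerance float64) int32 {
	usageRatio := float64(currentUtil) / float64(targetUtil)
	if math.Abs(1.0-usageRatio) <= tolerance {
		return currentReplicas
	}
	return int32(math.Ceil(usageRatio * float64(currentReplicas)))
}

func main() {
	// 4 pods at 90% average utilization against a 60% target.
	fmt.Println(desiredFromUtilization(4, 90, 60, 0.1))
	// 4 pods at 62% against a 60% target: within tolerance, no change.
	fmt.Println(desiredFromUtilization(4, 62, 60, 0.1))
}
```

The tolerance band is what keeps small fluctuations around the target from causing constant rescaling.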

I will cover the source code of the HPA algorithm in a separate post; follow along if you are interested. Most readers do not need that level of detail unless they have to customize the HPA algorithm.

In short, after desired replicas are calculated from both CPU and custom metrics data, the larger of the two values is taken, but it can never exceed the configured MaxReplicas.
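The selection and clamping steps from reconcileAutoscaler can be isolated into a small function. This is a sketch under stated assumptions: `clampDesired` is a hypothetical name, and the `scaleUpLimit` parameter stands in for the result of calculateScaleUpLimit(currentReplicas), whose body is not shown in this article.

```go
package main

import "fmt"

// clampDesired (hypothetical) mirrors the order of checks in
// reconcileAutoscaler: take the larger metric result, enforce the optional
// minimum, never return 0, enforce the maximum, then the scale-up limit.
func clampDesired(cpuDesired, cmDesired int32, minReplicas *int32, maxReplicas, scaleUpLimit int32) int32 {
	desired := int32(0)
	if cpuDesired > desired {
		desired = cpuDesired
	}
	if cmDesired > desired {
		desired = cmDesired
	}
	if minReplicas != nil && desired < *minReplicas {
		desired = *minReplicas
	}
	// 0 is reserved for "autoscaling disabled", so never scale down to it.
	if desired == 0 {
		desired = 1
	}
	if desired > maxReplicas {
		desired = maxReplicas
	}
	if desired > scaleUpLimit {
		desired = scaleUpLimit
	}
	return desired
}

func main() {
	minR := int32(2)
	// CPU asks for 3, custom metrics ask for 7: the larger value wins.
	fmt.Println(clampDesired(3, 7, &minR, 10, 100))
	// CPU asks for 20, but MaxReplicas is 10.
	fmt.Println(clampDesired(20, 5, &minR, 10, 100))
}
```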

Computing desired replicas is not enough, though. We also need to look at shouldScale, which checks whether enough time has passed since the last scaling operation:

- A scale-down is allowed only if the last scaling operation happened more than 5 minutes ago.

- A scale-up is allowed only if the last scaling operation happened more than 3 minutes ago.

The code for shouldScale is as follows:

    pkg/controller/podautoscaler/horizontal.go:387

    ...
    var downscaleForbiddenWindow = 5 * time.Minute
    var upscaleForbiddenWindow = 3 * time.Minute
    ...

    func shouldScale(hpa *autoscaling.HorizontalPodAutoscaler, currentReplicas, desiredReplicas int32, timestamp time.Time) bool {
        if desiredReplicas == currentReplicas {
            return false
        }

        if hpa.Status.LastScaleTime == nil {
            return true
        }

        // Going down only if the usageRatio dropped significantly below the target
        // and there was no rescaling in the last downscaleForbiddenWindow.
        if desiredReplicas < currentReplicas && hpa.Status.LastScaleTime.Add(downscaleForbiddenWindow).Before(timestamp) {
            return true
        }

        // Going up only if the usage ratio increased significantly above the target
        // and there was no rescaling in the last upscaleForbiddenWindow.
        if desiredReplicas > currentReplicas && hpa.Status.LastScaleTime.Add(upscaleForbiddenWindow).Before(timestamp) {
            return true
        }
        return false
    }

Only when shouldScale returns true is the Scales.Update interface invoked, which talks to the API server to set the replicas of the RC behind the Scale. Taking the rc controller as an example (the deployment controller works the same way), the API server side of Scales.Update is implemented as follows:

    pkg/registry/core/rest/storage_core.go:91

    func (c LegacyRESTStorageProvider) NewLegacyRESTStorage(restOptionsGetter generic.RESTOptionsGetter) (LegacyRESTStorage, genericapiserver.APIGroupInfo, error) {
        ...
        if autoscalingGroupVersion := (schema.GroupVersion{Group: "autoscaling", Version: "v1"}); registered.IsEnabledVersion(autoscalingGroupVersion) {
            apiGroupInfo.SubresourceGroupVersionKind["replicationcontrollers/scale"] = autoscalingGroupVersion.WithKind("Scale")
        }
        ...
        restStorageMap := map[string]rest.Storage{
            ...
            "replicationControllers":        controllerStorage.Controller,
            "replicationControllers/status": controllerStorage.Status,
            ...
        }
        return restStorage, apiGroupInfo, nil
    }

    pkg/registry/core/controller/etcd/etcd.go:124

    func (r *ScaleREST) Update(ctx api.Context, name string, objInfo rest.UpdatedObjectInfo) (runtime.Object, bool, error) {
        rc, err := r.registry.GetController(ctx, name, &metav1.GetOptions{})
        if err != nil {
            return nil, false, errors.NewNotFound(autoscaling.Resource("replicationcontrollers/scale"), name)
        }

        oldScale := scaleFromRC(rc)
        obj, err := objInfo.UpdatedObject(ctx, oldScale)
        if err != nil {
            return nil, false, err
        }

        if obj == nil {
            return nil, false, errors.NewBadRequest("nil update passed to Scale")
        }
        scale, ok := obj.(*autoscaling.Scale)
        if !ok {
            return nil, false, errors.NewBadRequest(fmt.Sprintf("wrong object passed to Scale update: %v", obj))
        }

        if errs := validation.ValidateScale(scale); len(errs) > 0 {
            return nil, false, errors.NewInvalid(autoscaling.Kind("Scale"), scale.Name, errs)
        }

        // Set the rc's spec.replicas to the desired number of replicas from the Scale.
        rc.Spec.Replicas = scale.Spec.Replicas
        rc.ResourceVersion = scale.ResourceVersion

        // Persist the update to etcd.
        rc, err = r.registry.UpdateController(ctx, rc)
        if err != nil {
            return nil, false, err
        }
        return scaleFromRC(rc), false, nil
    }

Readers familiar with the Kubernetes rc controller know that once the replicas of an rc are modified, the rc controller's watch picks up the change and the controller creates or destroys as many pods as the difference requires, so that the number of replicas eventually reaches the value calculated by HPA. In other words, it is the rc controller that actually performs the scale-up or scale-down.
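The "amount of difference" the rc controller acts on is a plain subtraction. A minimal sketch, with the hypothetical helper `podsToChange` standing in for the real controller logic, which is not shown in this article:

```go
package main

import "fmt"

// podsToChange (hypothetical) returns how many pods must be created or
// removed so that the running count matches spec.replicas.
func podsToChange(specReplicas, runningPods int32) (create, remove int32) {
	diff := specReplicas - runningPods
	if diff > 0 {
		return diff, 0
	}
	return 0, -diff
}

func main() {
	// HPA raised spec.replicas from 3 to 5 while 3 pods are running.
	create, remove := podsToChange(5, 3)
	fmt.Println("create:", create, "remove:", remove)
}
```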

Finally, let's look at HorizontalController's Run method:

    pkg/controller/podautoscaler/horizontal.go:130

    func (a *HorizontalController) Run(stopCh <-chan struct{}) {
        defer utilruntime.HandleCrash()
        glog.Infof("Starting HPA Controller")
        go a.controller.Run(stopCh)
        <-stopCh
        glog.Infof("Shutting down HPA Controller")
    }

Put simply, Run starts the ListWatch of HPA resources, which writes every change into the corresponding store (cache), and then blocks until the stop channel is closed.

> The synchronization period of HPA resources is set by the kube-controller-manager flag horizontal-pod-autoscaler-sync-period, with a default value of 30s.

Summary (flowchart)