Correlation constant parsing

bucketCntBits = 3 // It represents bit bucketCnt = 1 << bucketCntBits // Represents a bucket(bmap) with a maximum storage of 8 key s loadFactorNum = 13 loadFactorDen = 2 // The load factor is calculated from these two factors (the load factor is related to when to trigger capacity expansion) maxKeySize = 128 maxElemSize = 128 // emptyRest = 0 : On behalf of the topHash Corresponding K/V available ,Or it represents the position and the position behind it bucket Also available emptyOne = 1 : Only on behalf of the topHash Corresponding K/V available evacuatedX = 2 : And rehash of,Represents that the original element may have been migrated to X position(In situ),Of course, it is also possible to migrate to Y position evacuatedY = 3 evacuatedEmpty = 4 : When this bucket After all the elements in are migrated,set up evacuatedEmpty minTopHash = 5 When topHash<=5 When,Status is stored,Otherwise, what is stored is hash value

- The subscript corresponding to each tophash is a kv

map structure

-

src/runtime/map.go

-

The internal object is hmap

-

type hmap struct { // Note: the format of the hmap is also encoded in cmd/compile/internal/gc/reflect.go.. count int // Number of elements in map flags uint8 // Identification status B uint8 // Used to set the maximum number of buckets to 2^B, that is, len(buckets)=2^B noverflow uint16 // Number of overflowing buckets hash0 uint32 // hash seed, involving hash functions buckets unsafe.Pointer // Pointer object of buckets oldbuckets unsafe.Pointer // buckets when capacity expansion is triggered nevacuate uintptr // Progressive is the progress of rehash, which is similar to redis extra *mapextra // }

-

-

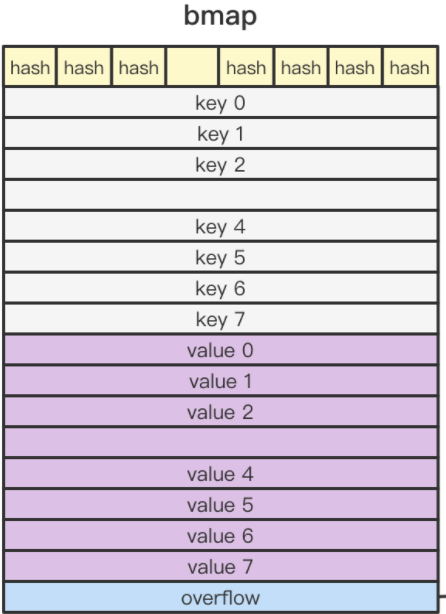

At the same time, similar to the hashMap of Java, it also has the concept of bucket. In golang, it is bmap

-

type bmap struct { tophash [bucketCnt]uint8 // It can be found that a bucket can only store 8 key s } The actual object generated after compilation is: type bmap struct { topbits [8]uint8 keys [8]keytype values [8]valuetype pad uintptr overflow uintptr // When K and V are non pointer objects, in order to avoid being scanned by gc, overflow will be moved to hmap, so that bmap still does not contain pointers }

-

-

The memory model of bmap is:

-

Key / key / key = > value / value / value, not key/value/key/value

-

-

[the external chain image transfer fails. The source station may have an anti-theft chain mechanism. It is recommended to save the image and upload it directly (img-G2p99OKJ-1631841576712)(/Users/joker/Nutstore Files / my nut cloud / review / imgs/golang_map_bmap.png)]

-

Initialization of MAP

-

m1 := make(map[int]int) // The corresponding internal function is makemap_small m2 := make(map[int]int, 10) // The corresponding function is makemap. Creating a map supports passing a parameter indicating the initial size

-

func makemap_small() *hmap { h := new(hmap) h.hash0 = fastrand() return h } It's just simple new One,Will not initialize bucket array -

Core functions:

-

func makemap(t *maptype, hint int, h *hmap) *hmap { mem, overflow := math.MulUintptr(uintptr(hint), t.bucket.size) if overflow || mem > maxAlloc { hint = 0 } if h == nil { h = new(hmap) } // Get a random factor h.hash0 = fastrand() // hint refers to the expected size value when creating a map. This is similar to the hashMap of Java, which will eventually make the initial capacity to the nth power of 2 B := uint8(0) for overLoadFactor(hint, B) { B++ } h.B = B // When B==0, it means that the initialization of buckets will be triggered only when it is written by put if h.B != 0 { var nextOverflow *bmap // Request to create bucket array h.buckets, nextOverflow = makeBucketArray(t, h.B, nil) if nextOverflow != nil { h.extra = new(mapextra) h.extra.nextOverflow = nextOverflow } } return h }

-

-

summary

- During map initialization, there are only two cases in general. One is

- makemap_small: in this way, only hmap s will be created and buckets will not be initialized

- makemap: automatically modify the capacity to the nth power of 2, and then initialize the buckets array

- During map initialization, there are only two cases in general. One is

MAP # put

-

Function: src/runtime/map.go#mapassign

-

Phase I: initialization phase

-

// ... omit some regular debug ging and verification // Determine whether to read and write concurrently if h.flags&hashWriting != 0 { throw("concurrent map writes") } // The corresponding hash function will be obtained at compile time hash := t.hasher(key, uintptr(h.hash0)) // Identification is in write state (used for concurrent read-write judgment) h.flags ^= hashWriting // If it's a makemap_small, buckets are not initialized at this time if h.buckets == nil { h.buckets = newobject(t.bucket) // newarray(t.bucket, 1) }

-

-

Stage 2: locate bucket

-

// Get the memory address of the bucket b := (*bmap)(add(h.buckets, bucket*uintptr(t.bucketsize))) // Get the top 8 bits of the hash as the key top := tophash(hash) var inserti *uint8 var insertk unsafe.Pointer var elem unsafe.Pointer bucketloop: for { // Traverse each cell for i := uintptr(0); i < bucketCnt; i++ { // If the current hash does not match the upper 8-bit hash if b.tophash[i] != top { // If the bucket is nil and the current element has no assignment if isEmpty(b.tophash[i]) && inserti == nil { inserti = &b.tophash[i] insertk = add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize)) elem = add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize)) } // If the current bucket is in the overflow state, which means that the capacity is insufficient, the entire write will be skipped directly if b.tophash[i] == emptyRest { break bucketloop } continue } // Start updating values k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize)) // Judge whether to store a pointer or an element. If it is an element, dereference it if t.indirectkey() { k = *((*unsafe.Pointer)(k)) } // Only the same key can be updated if !t.key.equal(key, k) { continue } // Through memory copy, update key,value, if t.needkeyupdate() { typedmemmove(t.key, k, key) } // Finally, move the pointer handle to point to the new value elem = add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize)) goto done } // If the above does not exit, it means that the elements in the current bucket are full, and we need to get the next one ovf := b.overflow(t) if ovf == nil { // It means that all the bucket s are full break } // Traverse to get the next bucket and continue the for loop b = ovf } // It indicates that the same key is not found or inserted, so the bucket s may be full // If capacity expansion needs to be triggered at present (that is, capacity expansion will be triggered when the current average number of elements in each bucket > = loadactor) if !h.growing() && (overLoadFactor(h.count+1, h.B) || tooManyOverflowBuckets(h.noverflow, h.B)) { // Start capacity expansion hashGrow(t, h) // Because the capacity expansion involves rehash, you need to go through it again goto again }

-

-

Stage 3: applying for a new bucket

-

When we get here,On behalf of,bucket Full,Need to apply for new bucket,Then everything starts reassigning if inserti == nil { // The current bucket and all the overflow buckets connected to it are full, allocate a new one. newb := h.newoverflow(t, b) inserti = &newb.tophash[0] insertk = add(unsafe.Pointer(newb), dataOffset) elem = add(insertk, bucketCnt*uintptr(t.keysize)) } // Store K and V. if they are not pointers, they also need to be dereferenced if t.indirectkey() { kmem := newobject(t.key) *(*unsafe.Pointer)(insertk) = kmem insertk = kmem } if t.indirectelem() { vmem := newobject(t.elem) *(*unsafe.Pointer)(elem) = vmem } // Memory copy key typedmemmove(t.key, insertk, key) *inserti = top h.count++ // Finally, eliminate the flag bit done: if h.flags&hashWriting == 0 { throw("concurrent map writes") } h.flags &^= hashWriting if t.indirectelem() { elem = *((*unsafe.Pointer)(elem)) } return elem

-

-

Map expansion rehash

-

golang's rehash is a progressive hashing process. First, apply for a new bucket array through hashGrow (or do not apply at all: the second trigger case). Then, each time you write or read data, you will judge whether the current map is in the rehash process. If so, rehash will be assisted

-

The most critical function is evaluate

-

There are two reasons for capacity expansion

-

One is the capacity expansion caused by reaching the loadFactor

-

The other is caused by too many overflow s

-

// Loading factor exceeds 6.5 func overLoadFactor(count int, B uint8) bool { return count > bucketCnt && uintptr(count) > loadFactorNum*(bucketShift(B)/loadFactorDen) } // Too many overflow buckets func tooManyOverflowBuckets(noverflow uint16, B uint8) bool { if B > 15 { B = 15 } return noverflow >= uint16(1)<<(B&15) }

-

-

If it is the former, the capacity of the bucket will be doubled directly (that is, the binary will be moved down and left by one bit)

-

-

func evacuate(t *maptype, h *hmap, oldbucket uintptr) { b := (*bmap)(add(h.oldbuckets, oldbucket*uintptr(t.bucketsize))) newbit := h.noldbuckets() if !evacuated(b) { // First, judge whether the current bucket has been rehash ed (through the internal flag, because the bmap will be installed and replaced with the bmap above) var xy [2]evacDst // Because the expansion may be twice as large, an array with a length of 2 is defined, and 0 is used to locate the previous elements x := &xy[0] x.b = (*bmap)(add(h.buckets, oldbucket*uintptr(t.bucketsize))) x.k = add(unsafe.Pointer(x.b), dataOffset) x.e = add(x.k, bucketCnt*uintptr(t.keysize)) if !h.sameSizeGrow() { // Double the expansion, so it may affect another element, so record the affected element y := &xy[1] y.b = (*bmap)(add(h.buckets, (oldbucket+newbit)*uintptr(t.bucketsize))) y.k = add(unsafe.Pointer(y.b), dataOffset) y.e = add(y.k, bucketCnt*uintptr(t.keysize)) } // Starting from the current bucket, traverse each bucket, because buckets are connected together for ; b != nil; b = b.overflow(t) { k := add(unsafe.Pointer(b), dataOffset) e := add(k, bucketCnt*uintptr(t.keysize)) // Traverse each element inside the bucket for i := 0; i < bucketCnt; i, k, e = i+1, add(k, uintptr(t.keysize)), add(e, uintptr(t.elemsize)) { top := b.tophash[i] // If it is an empty value (without assignment), it is directly marked as rehash if isEmpty(top) { b.tophash[i] = evacuatedEmpty continue } // Not null, but not the initial value, panic if top < minTopHash { throw("bad map state") } // If it is a pointer, dereference is triggered k2 := k if t.indirectkey() { k2 = *((*unsafe.Pointer)(k2)) } var useY uint8 if !h.sameSizeGrow() { // If it is a 2x expansion, recalculate the hash value hash := t.hasher(k2, uintptr(h.hash0)) if h.flags&iterator != 0 && !t.reflexivekey() && !t.key.equal(k2, k2) { // It indicates that there is a routine traversing the map. At the same time, the key does not match after recalculating the hash, which means that the value needs to be Move to a new bucket,therefore,In order to have a new hash, There will be one here &1 Operation of,The beauty of this operation is bring rehash Posterior bucket subscript,Or in the original position,Or in bucketIndex+2^B At two locations useY = top & 1,This is actually related to Java Very much ,however Java How did it happen? I forgot :-( top = tophash(hash) } else { if hash&newbit != 0 { useY = 1 } } } if evacuatedX+1 != evacuatedY || evacuatedX^1 != evacuatedY { throw("bad evacuatedN") } b.tophash[i] = evacuatedX + useY // evacuatedX + 1 == evacuatedY dst := &xy[useY] // evacuation destination // If the bucket of the current element happens to be the last bucket if dst.i == bucketCnt { dst.b = h.newoverflow(t, dst.b) dst.i = 0 dst.k = add(unsafe.Pointer(dst.b), dataOffset) dst.e = add(dst.k, bucketCnt*uintptr(t.keysize)) } dst.b.tophash[dst.i&(bucketCnt-1)] = top // mask dst.i as an optimization, to avoid a bounds check // Copy assignment / direct assignment if t.indirectkey() { *(*unsafe.Pointer)(dst.k) = k2 // copy pointer } else { typedmemmove(t.key, dst.k, k) // copy elem } if t.indirectelem() { *(*unsafe.Pointer)(dst.e) = *(*unsafe.Pointer)(e) } else { typedmemmove(t.elem, dst.e, e) } dst.i++ dst.k = add(dst.k, uintptr(t.keysize)) dst.e = add(dst.e, uintptr(t.elemsize)) } } // Finally, the hmap is dereferenced from oldBuckets so that it can be used by gc if h.flags&oldIterator == 0 && t.bucket.ptrdata != 0 { b := add(h.oldbuckets, oldbucket*uintptr(t.bucketsize)) ptr := add(b, dataOffset) n := uintptr(t.bucketsize) - dataOffset memclrHasPointers(ptr, n) } } if oldbucket == h.nevacuate { // Finally, judge whether all rehash is completed. If yes, eliminate some flag bits advanceEvacuationMark(h, t, newbit) } }

Deletion of Map

-

func mapdelete(t *maptype, h *hmap, key unsafe.Pointer) { // .... Omit the debug information if h == nil || h.count == 0 { if t.hashMightPanic() { t.hasher(key, 0) // see issue 23734 } return } // Concurrent read / write judgment if h.flags&hashWriting != 0 { throw("concurrent map writes") } // Get the hash corresponding to this key hash := t.hasher(key, uintptr(h.hash0)) // Add security sign h.flags ^= hashWriting // Obtain the corresponding bucket subscript through the upper 8 bits of the hash bucket := hash & bucketMask(h.B) // If capacity expansion is in progress at this time, auxiliary capacity expansion is required if h.growing() { growWork(t, h, bucket) } // Through offset: get the memory address of bmap(cell), which is the first place in the linked list b := (*bmap)(add(h.buckets, bucket*uintptr(t.bucketsize))) bOrig := b // Get the upper 8 bits of hash top := tophash(hash) search: for ; b != nil; b = b.overflow(t) { for i := uintptr(0); i < bucketCnt; i++ { if b.tophash[i] != top { // If the cell has been marked as empty, it means that there is no need to query and judge later, and it ends quickly if b.tophash[i] == emptyRest { break search } continue } k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize)) k2 := k // Dereference if t.indirectkey() { k2 = *((*unsafe.Pointer)(k2)) } if !t.key.equal(key, k2) { continue } if t.indirectkey() { *(*unsafe.Pointer)(k) = nil } else if t.key.ptrdata != 0 { memclrHasPointers(k, t.key.size) } // Get the corresponding value e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize)) // Clear value if t.indirectelem() { *(*unsafe.Pointer)(e) = nil } else if t.elem.ptrdata != 0 { memclrHasPointers(e, t.elem.size) } else { memclrNoHeapPointers(e, t.elem.size) } // Identifies that the cell is available b.tophash[i] = emptyOne if i == bucketCnt-1 { if b.overflow(t) != nil && b.overflow(t).tophash[0] != emptyRest { goto notLast } // Note the topHash[0] of the previous bucket has been set to emptyRest, which means that the entire bucket is available } else { if b.tophash[i+1] != emptyRest { goto notLast } // Note that the next topHash has been set to emptyRest, and the previous ones are available } // Set to emptyRest for { b.tophash[i] = emptyRest if i == 0 { if b == bOrig { break // beginning of initial bucket, we're done. } // Find previous bucket, continue at its last entry. c := b for b = bOrig; b.overflow(t) != c; b = b.overflow(t) { } i = bucketCnt - 1 } else { i-- } if b.tophash[i] != emptyOne { break } } notLast: h.count-- // Reset the hash seed to make it more difficult for attackers to // repeatedly trigger hash collisions. See issue 25237. if h.count == 0 { h.hash0 = fastrand() } break search } } // Remove protection bit if h.flags&hashWriting == 0 { throw("concurrent map writes") } h.flags &^= hashWriting }

Acquisition of Map

-

func mapaccessK(t *maptype, h *hmap, key unsafe.Pointer) (unsafe.Pointer, unsafe.Pointer) { if h == nil || h.count == 0 { return nil, nil } hash := t.hasher(key, uintptr(h.hash0)) m := bucketMask(h.B) // Obtain the corresponding bucket address through the lower 8 bits of the hash b := (*bmap)(unsafe.Pointer(uintptr(h.buckets) + (hash&m)*uintptr(t.bucketsize))) // Description: capacity expansion in progress if c := h.oldbuckets; c != nil { // If you don't wait for size expansion if !h.sameSizeGrow() { // Get the address of the previous bucket // There used to be half as many buckets; mask down one more power of two. m >>= 1 } oldb := (*bmap)(unsafe.Pointer(uintptr(c) + (hash&m)*uintptr(t.bucketsize))) if !evacuated(oldb) { // If the previous bucket has not been rehash, it indicates that the data is still in the original place. Use the previous bucket b = oldb } } top := tophash(hash) bucketloop: // Traverse the cell for matching, and then find the result for ; b != nil; b = b.overflow(t) { for i := uintptr(0); i < bucketCnt; i++ { if b.tophash[i] != top { if b.tophash[i] == emptyRest { break bucketloop } continue } k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize)) if t.indirectkey() { k = *((*unsafe.Pointer)(k)) } if t.key.equal(key, k) { e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize)) if t.indirectelem() { e = *((*unsafe.Pointer)(e)) } return k, e } } } return nil, nil }

summary

-

A lot of state variables are set in the golang map, such as emptyOne,emptyRest and so on, which are used to quickly fail

-

map is implemented by hmap+bmap in the bottom layer. The solution of hash conflict is similar to that of Java. It is also solved by zipper method. By default, a bmap can only store 8 K and V, and the memory model of K and V in bmap is key and then value. The reason is to reduce padding

-

The internal overflow of bmap points to the extra of hmap to avoid being scanned by gc

-

Similar to Java, there is also a key load factor. golang defaults to 6.5. The calculation method of this value is count / number of buckets, that is, the calculation result is the recommended number of cell s stored in each bucket

-

The capacity expansion of golang's map is similar to that of redis. Progressive rehash is adopted. Only two buckets are expanded at a time. At the same time, there are two kinds of capacity expansion opportunities for golang. One is to reach the load factor, and the other is too many overflow buckets (the maximum value of this value is 2 ^ 15). Reaching the load factor will double the capacity of the whole bucket, and the latter is equal size

-

golang rehash is similar to Java. It is either in place or twice the position of the current bucket. The specific implementation is through the original hash & 1

-

The basic process is the same

- golang map bucket positioning is to locate the bucket through the lower 6 bits of the hash. After obtaining the bucket, the subscript of topHash is obtained through the upper 8 bits of the hash

- If the bucket cannot find the corresponding value, locate it in the overflow bucket of the bucket

- Then, traverse the internal cell, and start the corresponding processing when tophash matches

- lookup

- If the current capacity expansion is in progress, and the oldBuckets is not empty, it will first judge whether it is the same size expansion or has been expanded. If it is double expansion, it will first obtain the previous bucket address to judge whether it has been rehash ed. If not, the original bucket will be used

- If there is a matching in the corresponding topHash, it will be returned directly

- add to

- Judge whether capacity expansion is in progress. If capacity expansion is in progress, it will be assisted first

- Then traverse the cell s in the bucket. If there are duplicates, update them. Otherwise, insert new data

- Finally, judge whether the two conditions for capacity expansion are met. If yes, start to prepare for capacity expansion, but not directly expand, but mark it as expandable

- delete

- Similarly, it will also judge whether capacity expansion is in progress. If yes, auxiliary capacity expansion

- Then traverse the cell s in the bucket, and set the matching to null. Finally, it will optimize and judge whether the previous bucket is also empty (to assist future operations)

problem

-

The reason for map disorder is

- When rehash, the hash will be recalculated and a random factor will be added

-

The role of overflow in bmap

- The function is to create a bucket when the bucket overflows (because the number of elements in the bucket is fixed at 8), and when the 9th key is also in the bucket, a bucket will be created, and then connected through the overflow pointer to form a linked list

- Why is the number of bucket s fixed

- Because the top hash of bmap is 8 bits higher, it is 8 bits (but there seems to be no basis)

- When will the number of overflow s increase

- When put, if the bucket element is full and the overflow buckets are full, a new bucket will be applied to point to overflow

-

What is tophash and what is its function

- tophash is the upper 8 bits of the hash

- The function is to:

- topHash is the top 8 bits of the hash. It is used for fast positioning, because each bucket has a hash. This topHash can be quickly matched with it. If it is not satisfied, it will be quickly next

-

Timing of capacity expansion

-

- When the number of cell s in each bucket > = LoadFactor

- When the number of overflow > 2 ^ 15 square meters (the maximum value is 2 ^ 15)

-

-

Why does bmap take the form of key/key/key/value/value instead of key/value

- It is also related to the operating system. The operating system cache is stored in the cache block in the form of cache line, and the size of each line is fixed. If the same data is cached in two cache lines, the hit rate is low and the efficiency is low. Therefore, there will be the former form of padding and map, so that padding only needs to be placed at the end of value instead of key/value/padding

-

The role of flags and B in hmap

- The function of flags is to judge whether it is in concurrent read-write state. When writing, it will be marked as write state. The same is true for reading