1. Semaphore

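There are two kinds of semaphores: counting semaphores and binary semaphores. A counting semaphore holds a count and can be obtained as many times as that count allows before it runs out. A binary semaphore has only two states, 0 and 1, so it can be held by only one task at a time: once obtained, it must be released before it can be obtained again.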

Semaphores can be used to protect a resource so that multiple tasks do not access it at the same time. Create a binary semaphore dedicated to the resource. Before accessing the resource, a task must first obtain the semaphore. If no other task is accessing the resource, the semaphore is obtained successfully and the task can go on to use the resource. If another task is currently accessing it, the attempt fails and the requesting task is suspended until that task finishes with the resource and releases the semaphore; only then can the waiting task obtain the semaphore and access the resource.
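The take/suspend/release cycle above can be modelled in a few lines. This is a single-threaded sketch under stated assumptions: `struct bsem`, `bsem_take`, `bsem_give` and the integer task ids are hypothetical names, and "suspending" a task is represented by recording it in a FIFO wait list rather than actually blocking it. Real kernels differ in wake-up order (uC/OS wakes the highest-priority waiter; RT-Thread lets you choose FIFO or priority order when creating the object).

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_WAITERS 8

/* Hypothetical binary semaphore: value is 0 or 1, plus a FIFO of
 * ids of tasks that are (conceptually) suspended on it. */
struct bsem {
    int value;                 /* 1 = resource free, 0 = taken */
    int waiters[MAX_WAITERS];  /* suspended task ids, oldest first */
    int head, count;
};

/* Returns true if `task` obtained the semaphore; false means the task
 * has been put on the wait list (a real RTOS would suspend it here). */
bool bsem_take(struct bsem *s, int task)
{
    if (s->value == 1) {
        s->value = 0;
        return true;
    }
    assert(s->count < MAX_WAITERS);
    s->waiters[(s->head + s->count) % MAX_WAITERS] = task;
    s->count++;
    return false;
}

/* Releases the semaphore. If a task is waiting, it is resumed and receives
 * the semaphore directly, so the value stays 0. Returns the resumed
 * task id, or -1 if nobody was waiting. */
int bsem_give(struct bsem *s)
{
    if (s->count > 0) {
        int woken = s->waiters[s->head];
        s->head = (s->head + 1) % MAX_WAITERS;
        s->count--;
        return woken;
    }
    s->value = 1;
    return -1;
}
```

Handing the semaphore straight to a waiter, instead of setting the value back to 1, prevents a third task from slipping in between the release and the waiter being scheduled.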

If a resource may be accessed by several tasks at the same time, use a counting semaphore. Each time a task obtains the semaphore, its count is decreased by one; once the count reaches 0, further requests cannot succeed until some task releases the semaphore. In other words, the initial value of the semaphore sets the maximum number of tasks that can access the resource simultaneously.
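A minimal sketch of how the initial value caps concurrency, assuming a non-blocking `csem_take` (a hypothetical name, as are the other helpers) that reports failure where a real RTOS would suspend the caller:

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical counting semaphore: count = how many more tasks may enter. */
struct csem {
    int count;
};

bool csem_take(struct csem *s)
{
    if (s->count == 0)
        return false;  /* a real RTOS would suspend the caller here */
    s->count--;
    return true;
}

void csem_give(struct csem *s)
{
    s->count++;
}

/* Lets `tasks` tasks try to enter a resource guarded by a semaphore
 * initialised to `limit`; nobody leaves. Returns how many got in. */
int count_admitted(int limit, int tasks)
{
    struct csem s = {limit};
    int admitted = 0;
    for (int i = 0; i < tasks; i++)
        if (csem_take(&s))
            admitted++;
    assert(s.count == limit - admitted);
    return admitted;
}
```

For example, `count_admitted(3, 5)` admits only three tasks: the initial value, not the number of requesters, bounds simultaneous access.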

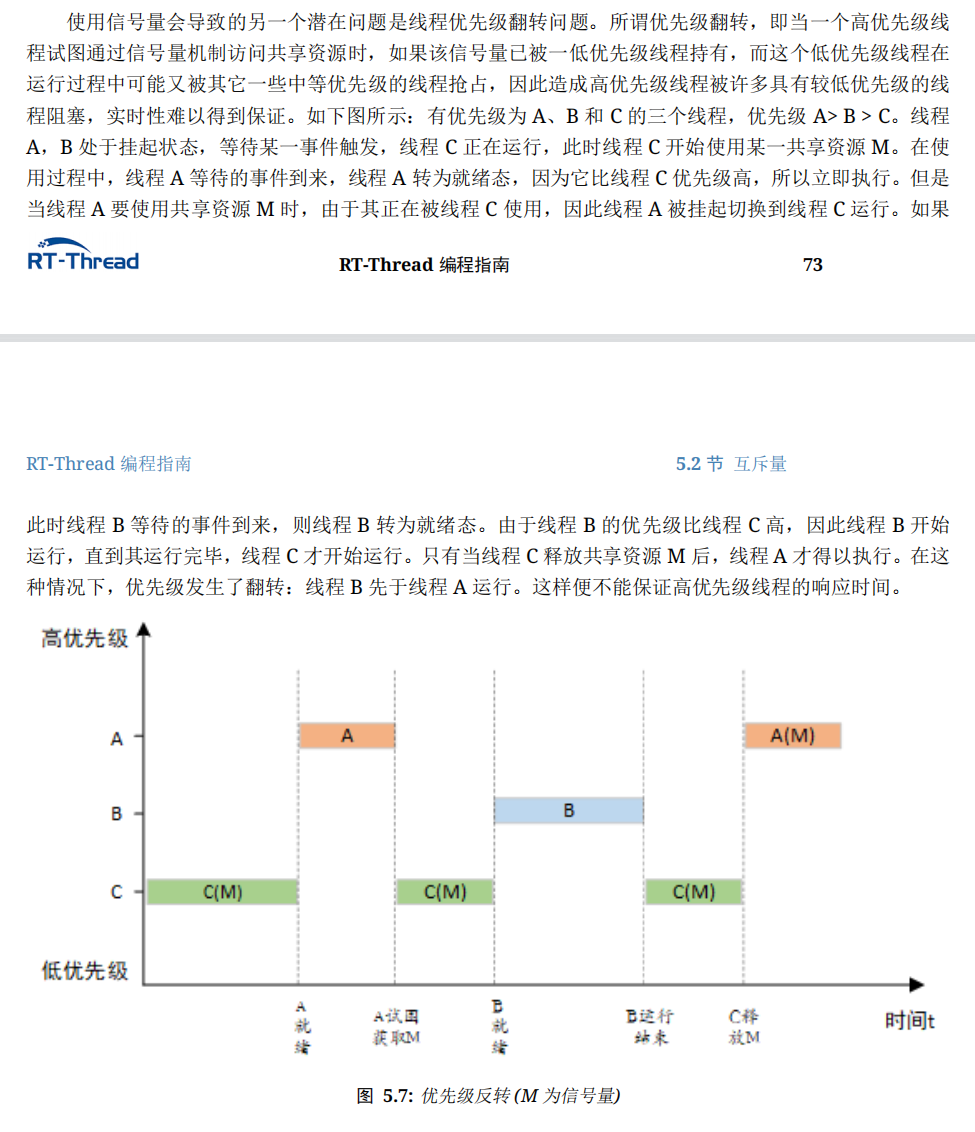

2. Priority inversion

Using semaphores can lead to priority inversion. A detailed description is shown in the figure below, taken from the RT-Thread Programming Guide. In short: a high-priority task requests a resource that is currently held by a low-priority task, so the high-priority task is suspended. If a medium-priority task then becomes ready, it preempts the low-priority task and starts running. The low-priority task cannot finish and release the resource, so the medium-priority task effectively runs before the high-priority task even though its priority is lower.

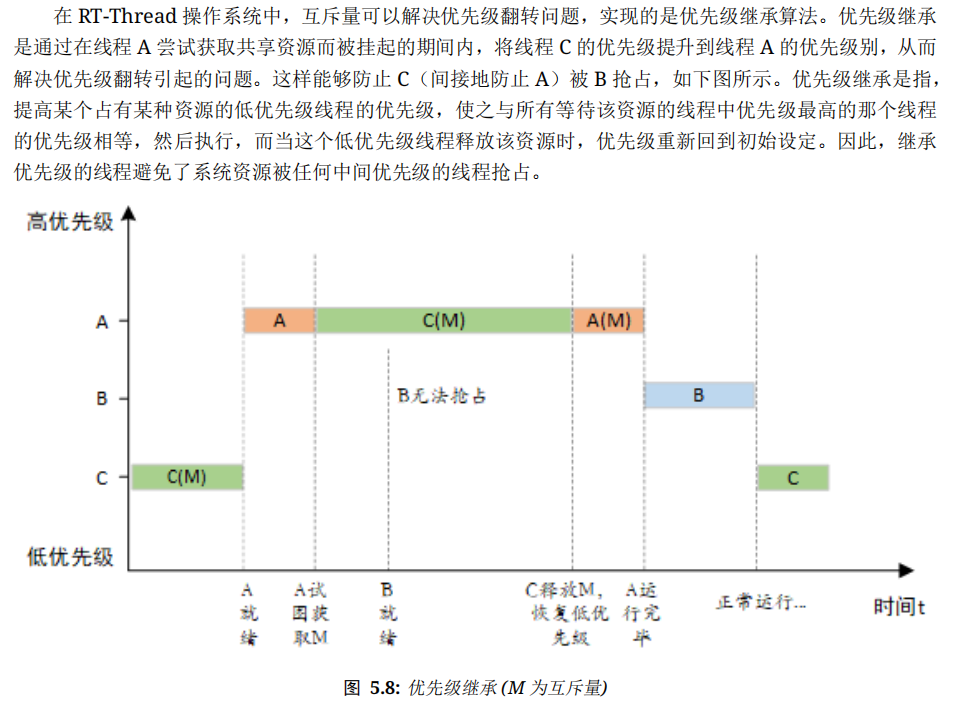
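The scenario can be reproduced with a toy tick-by-tick scheduler. The task set (priorities, arrival ticks, run lengths) is made up for illustration; smaller numbers mean higher priority, as in uC/OS and RT-Thread. A task that needs the semaphore while another task holds it is simply skipped, which stands in for being suspended. `simulate_inversion` and `struct sim_task` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>

/* Hypothetical task set: H = high, M = medium, L = low priority. */
enum { TASK_H, TASK_M, TASK_L, NUM_TASKS };

struct sim_task {
    const char *name;
    int prio;        /* smaller value = higher priority */
    int arrive;      /* tick at which the task becomes ready */
    int work;        /* ticks of CPU time still needed */
    bool needs_lock; /* the whole job runs while holding the semaphore */
    int finish;      /* tick at which the job completed, -1 until then */
};

/* Runs a preemptive fixed-priority schedule one tick at a time and
 * stores each task's completion tick in finish[]. */
void simulate_inversion(int finish[NUM_TASKS])
{
    struct sim_task t[NUM_TASKS] = {
        [TASK_H] = {"H", 1, 1, 2, true, -1},
        [TASK_M] = {"M", 2, 2, 3, false, -1},
        [TASK_L] = {"L", 3, 0, 4, true, -1},
    };
    int lock_owner = -1; /* index of the task holding the semaphore */
    int done = 0;

    for (int tick = 0; done < NUM_TASKS; tick++) {
        assert(tick < 1000); /* guard against an endless loop */
        int run = -1;
        for (int i = 0; i < NUM_TASKS; i++) {
            bool ready = tick >= t[i].arrive && t[i].work > 0;
            bool blocked = t[i].needs_lock && lock_owner != -1 && lock_owner != i;
            if (ready && !blocked && (run == -1 || t[i].prio < t[run].prio))
                run = i; /* highest-priority runnable task wins */
        }
        if (run == -1)
            continue; /* idle tick */
        if (t[run].needs_lock)
            lock_owner = run; /* take (or keep) the semaphore */
        printf("tick %d: %s runs\n", tick, t[run].name);
        if (--t[run].work == 0) {
            t[run].finish = tick + 1;
            done++;
            if (lock_owner == run)
                lock_owner = -1; /* release the semaphore */
        }
    }
    for (int i = 0; i < NUM_TASKS; i++)
        finish[i] = t[i].finish;
}
```

With these numbers, L takes the semaphore at tick 0, H blocks at tick 1, and M preempts L at tick 2. M completes at tick 5, L at tick 7, and H only at tick 9: the medium-priority task has effectively run ahead of the high-priority one.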

3. Mutual exclusion

A mutex can be understood as a special binary semaphore that avoids the priority inversion problem described above. It does so through priority inheritance: when a high-priority task requests a resource that is held by a low-priority task, the low-priority task is temporarily raised to the same priority as the high-priority task, so a medium-priority task that becomes ready can no longer preempt it. See the figure below for a detailed description. When a task requests a mutex, the system compares the priority of the task currently holding the mutex with that of the requesting task. If the requesting task has the higher priority, the holder is given that higher priority, and its original priority is restored when it releases the mutex.

In addition, mutexes support recursive acquisition: the task that holds a mutex can obtain it again without blocking. In that case the mutex must be released as many times as it was obtained before it is actually freed. A mutex also has an owner: only the task that obtained it may release it; other tasks cannot.

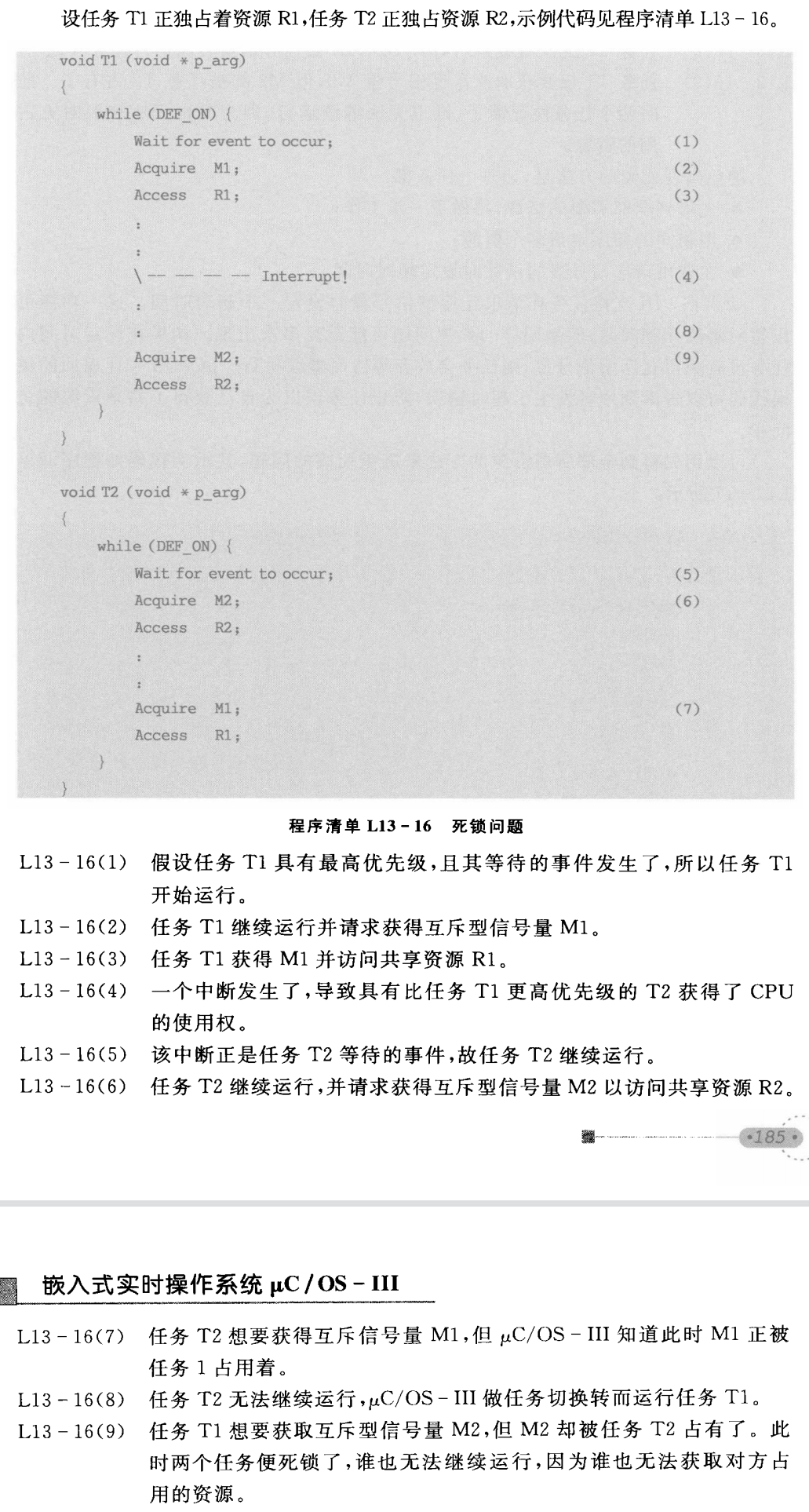
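The three mutex rules described above (priority inheritance, recursive acquisition, owner-only release) can be combined into one sketch. Everything here is hypothetical: `struct task`, `struct mutex`, `mutex_take` and `mutex_release` are illustrative names, blocking is reported as a `false` return, and restoring `base_prio` on the final release assumes the task holds only this one mutex (a real kernel must also account for other mutexes the task still holds).

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical task control block; smaller number = higher priority. */
struct task {
    int base_prio;    /* priority the task was created with */
    int current_prio; /* effective priority used by the scheduler */
};

/* Hypothetical mutex: remembers its owner and the nesting depth. */
struct mutex {
    struct task *owner; /* NULL when free */
    unsigned nest;      /* how many times the owner has taken it */
};

/* Returns true if `self` now holds the mutex; false means it would block. */
bool mutex_take(struct mutex *m, struct task *self)
{
    if (m->owner == NULL) { /* free: take it */
        m->owner = self;
        m->nest = 1;
        return true;
    }
    if (m->owner == self) { /* recursive acquisition by the owner */
        m->nest++;
        return true;
    }
    /* Held by another task: priority inheritance. Lend the requester's
     * higher priority to the owner so a medium-priority task cannot
     * preempt it while it holds the mutex. */
    if (self->current_prio < m->owner->current_prio)
        m->owner->current_prio = self->current_prio;
    return false;
}

/* Returns false if `self` is not the owner: only the owner may release. */
bool mutex_release(struct mutex *m, struct task *self)
{
    if (m->owner != self)
        return false;
    assert(m->nest > 0);
    if (--m->nest > 0) /* still held by an outer acquisition */
        return true;
    self->current_prio = self->base_prio; /* undo any inherited priority */
    m->owner = NULL; /* a real kernel would hand it to the top waiter */
    return true;
}
```

In use, a low-priority task that takes the mutex twice keeps it (and any inherited priority) until its second release; a release attempt by any other task is refused.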

4. Deadlock

Deadlock, also called a deadly embrace, is a situation in which two tasks each wait indefinitely for a resource held by the other. A detailed description is given in the book Embedded Real-Time Operating System uC/OS-III.

There are three ways to avoid deadlock:

- (1) Acquire multiple resources in the same order in every task;

- (2) When a task needs several resources, acquire all of them first, and only then proceed;

- (3) Specify a timeout when requesting a mutex or semaphore, so that a task never waits forever.

Method (1) is the most effective. If every task acquires resources in the same order, two tasks can never each hold one resource while waiting for the one the other holds. Method (2) applies when the set of resources a task needs is known in advance: the task obtains access to all of them before using any, which also avoids deadlock. Method (3) does not prevent the deadlock; it only breaks the wait. When the timeout expires the request fails, and the task has to handle that failure as an error, so it is not recommended as the primary strategy.
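To see why method (1) works, the sketch below interleaves two toy tasks one step at a time, each taking two resources in a given order, then using and releasing them. `run_two_tasks`, `struct lock_task` and the step model are hypothetical; waiting is modelled as failing to make progress, and a round in which neither task can move is reported as deadlock.

```c
#include <assert.h>
#include <stdbool.h>

#define NUM_RES 2

/* Hypothetical task that takes two resources, one per step, in a fixed
 * order, then uses them and releases both. */
struct lock_task {
    int order[NUM_RES]; /* resource ids (0 or 1), in acquisition order */
    int held;           /* how many resources it holds so far */
    bool done;
};

/* Interleaves two tasks step by step. Returns true if both finish,
 * false if neither can make progress (deadlock). */
bool run_two_tasks(const int order0[NUM_RES], const int order1[NUM_RES])
{
    assert(order0[0] != order0[1] && order1[0] != order1[1]);
    int owner[NUM_RES] = {-1, -1}; /* which task holds each resource */
    struct lock_task t[2] = {
        {{order0[0], order0[1]}, 0, false},
        {{order1[0], order1[1]}, 0, false},
    };

    for (;;) {
        bool progressed = false;
        for (int i = 0; i < 2; i++) {
            if (t[i].done)
                continue;
            if (t[i].held == NUM_RES) { /* has everything: use, then release */
                for (int r = 0; r < NUM_RES; r++)
                    owner[t[i].order[r]] = -1;
                t[i].done = true;
                progressed = true;
                continue;
            }
            int want = t[i].order[t[i].held];
            if (owner[want] == -1) { /* free: take it */
                owner[want] = i;
                t[i].held++;
                progressed = true;
            } /* otherwise keep waiting */
        }
        if (t[0].done && t[1].done)
            return true;
        if (!progressed)
            return false; /* each task waits on the other */
    }
}
```

With orders {0, 1} and {1, 0}, each task grabs its first resource and then waits for the other's, so the function returns false; giving both tasks the order {0, 1} lets them finish one after the other.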

5. Mailbox and message queue

5.1 uCOS

uCOS-II provides both mailboxes and message queues, and both are implemented as event objects. For a mailbox, a pointer in the event control block points directly to the message data, so only one data pointer can be held at a time. For a message queue, that pointer instead points to a queue structure in which each entry holds a data address, so several messages can be pending at once. A message queue with a size of 1 serves the same purpose as a mailbox.
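A rough model of the difference, using hypothetical `mbox_*` and `q_*` helpers rather than the real OSMbox*/OSQ* calls: the mailbox stores exactly one pointer, while the queue stores a ring of pointers. Pending on an empty object returns NULL here, where the real kernel would block the caller.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define QSIZE_MAX 8

/* Hypothetical mailbox: the control block holds a single message pointer. */
struct mbox {
    void *msg; /* NULL when empty */
};

bool mbox_post(struct mbox *mb, void *msg)
{
    assert(msg != NULL); /* NULL is reserved for "empty" */
    if (mb->msg != NULL)
        return false; /* already holds a message */
    mb->msg = msg;
    return true;
}

void *mbox_pend(struct mbox *mb)
{
    void *m = mb->msg;
    mb->msg = NULL;
    return m;
}

/* Hypothetical queue: a ring buffer of message pointers. */
struct queue {
    void *slots[QSIZE_MAX];
    size_t size, in, out, entries;
};

bool q_post(struct queue *q, void *msg)
{
    assert(q->size > 0 && q->size <= QSIZE_MAX);
    if (q->entries == q->size)
        return false; /* full */
    q->slots[q->in] = msg;
    q->in = (q->in + 1) % q->size;
    q->entries++;
    return true;
}

void *q_pend(struct queue *q)
{
    if (q->entries == 0)
        return NULL; /* empty */
    void *m = q->slots[q->out];
    q->out = (q->out + 1) % q->size;
    q->entries--;
    return m;
}
```

A `struct queue` initialised with `.size = 1` accepts one pointer and rejects the next, exactly like the mailbox.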

uCOS-II defines a static global array, OSQTbl[OS_MAX_QS], that holds every message queue control block available in the system. Each time a message queue is created, an unused entry is taken from this array.
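The fixed-table allocation can be sketched as a free list threaded through a static array. The names (`q_tbl`, `q_create`, `q_delete`, `MAX_QS`) are hypothetical stand-ins for OSQTbl, queue creation and OS_MAX_QS; only the allocation logic is modelled, not the queue contents.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_QS 4 /* stands in for OS_MAX_QS */

/* Hypothetical queue control block; only the free-list link is modelled. */
struct q_cb {
    struct q_cb *next_free;
    int in_use;
};

static struct q_cb q_tbl[MAX_QS]; /* fixed table, in the spirit of OSQTbl[] */
static struct q_cb *q_free_list;  /* unused entries of q_tbl */

void q_tbl_init(void)
{
    for (int i = 0; i < MAX_QS; i++) {
        q_tbl[i].in_use = 0;
        q_tbl[i].next_free = (i + 1 < MAX_QS) ? &q_tbl[i + 1] : NULL;
    }
    q_free_list = &q_tbl[0];
}

/* "Creating" a queue takes an entry from the table; NULL when exhausted. */
struct q_cb *q_create(void)
{
    struct q_cb *q = q_free_list;
    if (q == NULL)
        return NULL;
    q_free_list = q->next_free;
    q->in_use = 1;
    return q;
}

/* Deleting a queue puts its entry back on the free list. */
void q_delete(struct q_cb *q)
{
    assert(q->in_use);
    q->in_use = 0;
    q->next_free = q_free_list;
    q_free_list = q;
}
```

Because the table is sized at compile time, the number of queues that can exist at once is fixed, and creation can fail once every entry is in use.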

uCOS-III drops the mailbox and keeps only the message queue. The code defines a single message pool:

```c
OS_MSG OSCfg_MsgPool[OS_CFG_MSG_POOL_SIZE];
```

All message queues share this pool. When a message is sent, a node is taken from the pool and appended to the linked list of the target queue; when the message is received, the node is returned to the pool. The queue control block keeps pointers to the head and tail of its list and records the number of messages in it.

The message queue control block is defined as follows:

```c
struct os_msg_q {                /* OS_MSG_Q */
    OS_MSG     *InPtr;           /* Pointer to next OS_MSG to be inserted in the queue */
    OS_MSG     *OutPtr;          /* Pointer to next OS_MSG to be extracted from the queue */
    OS_MSG_QTY  NbrEntriesSize;  /* Maximum allowable number of entries in the queue */
    OS_MSG_QTY  NbrEntries;      /* Current number of entries in the queue */
    OS_MSG_QTY  NbrEntriesMax;   /* Peak number of entries in the queue */
};
```
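The shared-pool design can be sketched as follows. `struct msg` and `struct msg_q` are simplified, hypothetical stand-ins for OS_MSG and OS_MSG_Q (in_ptr/out_ptr play the roles of InPtr/OutPtr), and `MSG_POOL_SIZE` plays the role of OS_CFG_MSG_POOL_SIZE. The point to notice is that a post can fail because the shared pool is exhausted even when the target queue itself is empty.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MSG_POOL_SIZE 3 /* stands in for OS_CFG_MSG_POOL_SIZE */

struct msg {          /* simplified OS_MSG */
    struct msg *next;
    void *data;       /* only the pointer travels, not the data itself */
};

struct msg_q {        /* simplified OS_MSG_Q */
    struct msg *in_ptr;  /* tail: the next message is linked after this */
    struct msg *out_ptr; /* head: the next message to be extracted */
    unsigned size;       /* like NbrEntriesSize */
    unsigned entries;    /* like NbrEntries */
};

static struct msg msg_pool[MSG_POOL_SIZE]; /* shared by every queue */
static struct msg *msg_free;

void msg_pool_init(void)
{
    for (int i = 0; i < MSG_POOL_SIZE; i++)
        msg_pool[i].next = (i + 1 < MSG_POOL_SIZE) ? &msg_pool[i + 1] : NULL;
    msg_free = &msg_pool[0];
}

bool msg_q_post(struct msg_q *q, void *data)
{
    if (q->entries >= q->size || msg_free == NULL)
        return false;             /* queue full or shared pool exhausted */
    struct msg *m = msg_free;     /* take a node from the shared pool */
    msg_free = m->next;
    m->next = NULL;
    m->data = data;
    if (q->in_ptr == NULL)
        q->out_ptr = m;           /* queue was empty */
    else
        q->in_ptr->next = m;      /* append after the current tail */
    q->in_ptr = m;
    q->entries++;
    return true;
}

void *msg_q_pend(struct msg_q *q)
{
    struct msg *m = q->out_ptr;
    if (m == NULL)
        return NULL;              /* a real kernel would block here */
    assert(q->entries > 0);
    q->out_ptr = m->next;
    if (q->out_ptr == NULL)
        q->in_ptr = NULL;
    q->entries--;
    void *data = m->data;
    m->next = msg_free;           /* give the node back to the pool */
    msg_free = m;
    return data;
}
```

Sizing one global pool means memory is shared flexibly across queues, at the cost that one busy queue can starve the others.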

5.2 rt-thread

RT-Thread (RTT) also has both mailboxes and message queues, but its mailbox differs from the uCOS-II mailbox. An RTT mailbox is built on an array whose elements are word-sized values (rt_ubase_t, 4 bytes on a 32-bit MCU); each element can hold either a data address or a plain value. This array is the mailbox's message pool and is allocated with a fixed size when the mailbox is created. Every mail is therefore exactly one 4-byte value: sending a mail writes one element of the pool, and receiving a mail frees one element. The mailbox control block records the write position (in_offset) and read position (out_offset) within the pool, as well as the number of mails currently stored (entry).

The mailbox control block is defined as follows:

```c
struct rt_mailbox
{
    struct rt_ipc_object parent;         /**< inherit from ipc_object */

    rt_ubase_t          *msg_pool;       /**< start address of message buffer */

    rt_uint16_t          size;           /**< size of message pool */

    rt_uint16_t          entry;          /**< index of messages in msg_pool */
    rt_uint16_t          in_offset;      /**< input offset of the message buffer */
    rt_uint16_t          out_offset;     /**< output offset of the message buffer */

    rt_list_t            suspend_sender_thread; /**< sender thread suspended on this mailbox */
};
```
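The ring-buffer behaviour described above can be sketched as follows. This is a hypothetical model, not RT-Thread's own rt_mb_send()/rt_mb_recv(): `struct mailbox`, `mb_send` and `mb_recv` are illustrative names, `uintptr_t` stands in for rt_ubase_t, and a full or empty mailbox is reported by returning false instead of suspending the caller.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define MB_SIZE 4

/* Hypothetical simplification of rt_mailbox: a ring of word-sized slots. */
struct mailbox {
    uintptr_t msg_pool[MB_SIZE]; /* each slot holds a value or an address */
    uint16_t size;               /* number of slots in use for the ring */
    uint16_t entry;              /* mails currently stored */
    uint16_t in_offset;          /* slot the next send writes */
    uint16_t out_offset;         /* slot the next receive reads */
};

bool mb_send(struct mailbox *mb, uintptr_t value)
{
    assert(mb->size > 0 && mb->size <= MB_SIZE);
    if (mb->entry == mb->size)
        return false;                 /* full */
    mb->msg_pool[mb->in_offset] = value;
    if (++mb->in_offset >= mb->size)
        mb->in_offset = 0;            /* wrap around */
    mb->entry++;
    return true;
}

bool mb_recv(struct mailbox *mb, uintptr_t *value)
{
    if (mb->entry == 0)
        return false;                 /* empty */
    *value = mb->msg_pool[mb->out_offset];
    if (++mb->out_offset >= mb->size)
        mb->out_offset = 0;           /* wrap around */
    mb->entry--;
    return true;
}
```

To pass a larger object, the sender stores its address in the slot (for example `mb_send(&mb, (uintptr_t)&obj)`); the object itself must then stay valid until the receiver has finished with it.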

The RTT message queue is implemented similarly to the uCOS-III one, with one difference: instead of all message queues sharing a single message pool, each RTT message queue has its own message pool, allocated and managed separately. The content of each message, up to msg_size bytes, is copied into that pool.

The message queue control block of RTT is defined as follows:

```c
struct rt_messagequeue
{
    struct rt_ipc_object parent;         /**< inherit from ipc_object */

    void                *msg_pool;       /**< start address of message queue */

    rt_uint16_t          msg_size;       /**< message size of each message */
    rt_uint16_t          max_msgs;       /**< max number of messages */

    rt_uint16_t          entry;          /**< index of messages in the queue */

    void                *msg_queue_head; /**< list head */
    void                *msg_queue_tail; /**< list tail */
    void                *msg_queue_free; /**< pointer indicated the free node of queue */

    rt_list_t            suspend_sender_thread; /**< sender thread suspended on this message queue */
};
```
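A per-queue pool with copy-by-value semantics can be sketched like this. The layout is a hypothetical simplification of rt_messagequeue (fixed `MSG_SIZE` and `MAX_MSGS`, nodes embedded in the control block, no blocking), and `mq_init`, `mq_send` and `mq_recv` are illustrative names. It shows that each queue has its own free list and that the sender's buffer can be reused as soon as the send returns.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MSG_SIZE 8 /* like msg_size */
#define MAX_MSGS 2 /* like max_msgs */

struct mq_node {
    struct mq_node *next;
    unsigned char buf[MSG_SIZE]; /* message bytes are copied in here */
};

/* Hypothetical simplification of rt_messagequeue: each queue owns its pool. */
struct mq {
    struct mq_node pool[MAX_MSGS];
    struct mq_node *head, *tail;  /* like msg_queue_head / msg_queue_tail */
    struct mq_node *free_list;    /* like msg_queue_free */
    uint16_t entry;               /* messages currently queued */
};

void mq_init(struct mq *q)
{
    q->head = q->tail = NULL;
    q->free_list = NULL;
    for (int i = 0; i < MAX_MSGS; i++) { /* chain every node into the free list */
        q->pool[i].next = q->free_list;
        q->free_list = &q->pool[i];
    }
    q->entry = 0;
}

bool mq_send(struct mq *q, const void *data, size_t len)
{
    if (len > MSG_SIZE || q->free_list == NULL)
        return false;              /* too big, or this queue's pool is full */
    struct mq_node *n = q->free_list;
    q->free_list = n->next;
    memcpy(n->buf, data, len);     /* copy by value: the sender may reuse data */
    n->next = NULL;
    if (q->tail != NULL)
        q->tail->next = n;
    q->tail = n;
    if (q->head == NULL)
        q->head = n;
    q->entry++;
    return true;
}

bool mq_recv(struct mq *q, void *out, size_t len)
{
    struct mq_node *n = q->head;
    if (n == NULL)
        return false;              /* empty: a real kernel may block here */
    assert(q->entry > 0);
    q->head = n->next;
    if (q->head == NULL)
        q->tail = NULL;
    memcpy(out, n->buf, len < MSG_SIZE ? len : MSG_SIZE);
    n->next = q->free_list;        /* recycle the node into this queue's pool */
    q->free_list = n;
    q->entry--;
    return true;
}
```

Compared with the shared uCOS-III pool, a full queue here cannot starve another queue, but each queue reserves its worst-case memory up front.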