Summary

This document describes how to obtain and run Solr, index various data sources into multiple collections, and get familiar with the Solr admin and search interfaces.

Begin by unzipping the Solr release and changing your working directory to the subdirectory where Solr was installed. Note that the base directory name may vary with the version of Solr downloaded. For example, with a shell in UNIX, Cygwin, or macOS:

/:$ ls solr*

solr-6.2.0.zip

/:$ unzip -q solr-6.2.0.zip

/:$ cd solr-6.2.0

To start Solr, run bin/solr start -e cloud -noprompt on Unix or macOS, or bin\solr.cmd start -e cloud -noprompt on Windows.

/solr-6.4.2:$ bin/solr start -e cloud -noprompt

Welcome to the SolrCloud example!

Starting up 2 Solr nodes for your example SolrCloud cluster.

...

Started Solr server on port 8983 (pid=8404). Happy searching!

...

Started Solr server on port 7574 (pid=8549). Happy searching!

...

SolrCloud example running, please visit http://localhost:8983/solr

/solr-6.4.2:$ _

You can see that Solr is running by loading the Solr Admin UI in your web browser: http://localhost:8983/solr/. This is the main starting point for administering Solr.

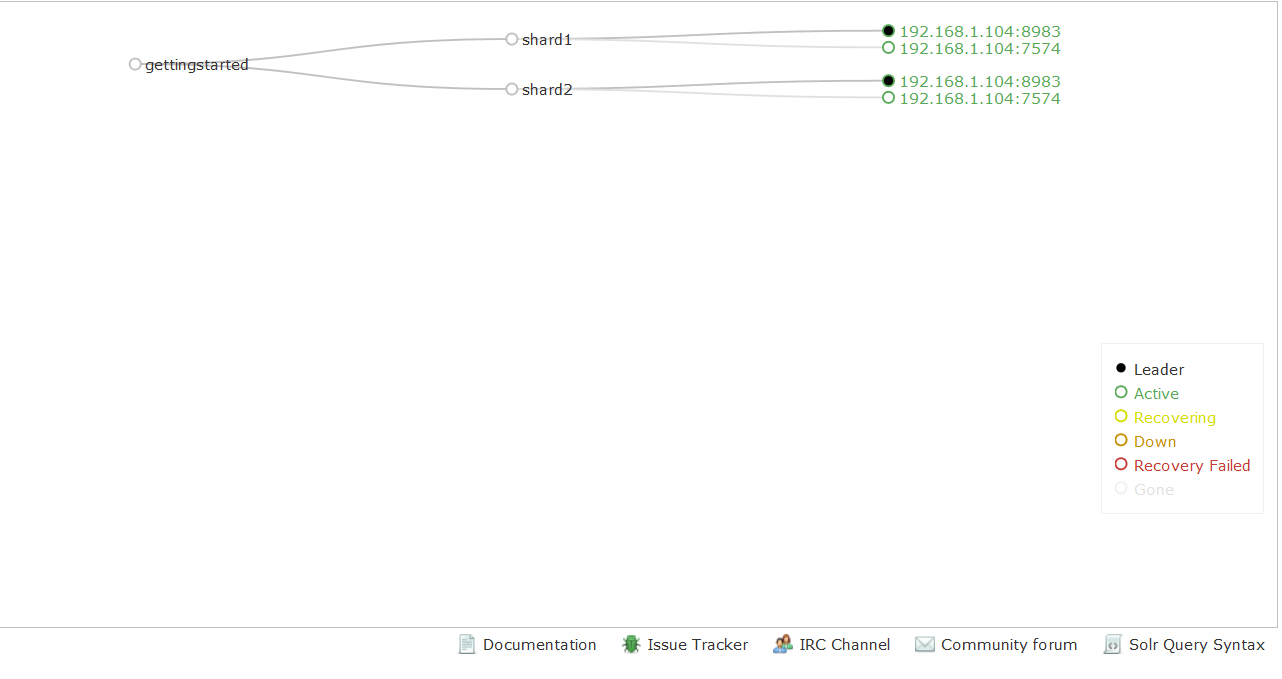

Solr is now running two "nodes", one on port 7574 and one on port 8983. One collection, gettingstarted, was created automatically: a two-shard collection in which each shard has two replicas. The Cloud tab in the Admin UI diagrams the collection nicely.

Index data

Your Solr server is up and running, but it doesn't contain any data. The Solr install includes the bin/post tool to make it easy to get various types of documents into Solr from the start. We'll use this tool for the indexing examples below.

You'll need a command shell to run these examples, rooted in the Solr install directory; the shell from which you launched Solr works just fine.

Note: Currently the bin/post tool does not have a comparable Windows script, but the underlying Java program it invokes is available. See the Post Tool, Windows section for details.

Index a directory of "rich" files

Let's first index local "rich" files, including HTML, PDF, Microsoft Office formats (such as MS Word), plain text, and many other formats. bin/post can crawl a directory of files, optionally recursively, sending the raw content of each file into Solr for extraction and indexing. A Solr install includes a docs/ subdirectory, which makes a convenient, built-in set of (mostly) HTML files.

bin/post -c gettingstarted docs/

The output looks like this:

/solr-6.4.2:$ bin/post -c gettingstarted docs/

java -classpath /solr-6.4.2/dist/solr-core-6.4.2.jar -Dauto=yes -Dc=gettingstarted -Ddata=files -Drecursive=yes org.apache.solr.util.SimplePostTool docs/

SimplePostTool version 5.0.0

Posting files to [base] url http://localhost:8983/solr/gettingstarted/update...

Entering auto mode. File endings considered are xml,json,jsonl,csv,pdf,doc,docx,ppt,pptx,xls,xlsx,odt,odp,ods,ott,otp,ots,rtf,htm,html,txt,log

Entering recursive mode, max depth=999, delay=0s

Indexing directory docs (3 files, depth=0)

POSTing file index.html (text/html) to [base]/extract

POSTing file quickstart.html (text/html) to [base]/extract

POSTing file SYSTEM_REQUIREMENTS.html (text/html) to [base]/extract

Indexing directory docs/changes (1 files, depth=1)

POSTing file Changes.html (text/html) to [base]/extract

...

4329 files indexed.

COMMITting Solr index changes to http://localhost:8983/solr/gettingstarted/update...

Time spent: 0:01:16.252

The command line breaks down as follows:

- -c gettingstarted: the name of the collection to index into
- docs/: a relative path to the docs/ directory of the Solr install

You have now indexed thousands of documents into the gettingstarted collection in Solr and committed these changes. You can search for "solr" by loading the Admin UI Query tab, entering "solr" in the q param (replacing *:*, which matches all documents), and clicking Execute Query. See the Search section below for more information.

To index your own data, re-run the directory indexing command pointed at your own documents directory. For example, on a Mac, instead of docs/ try ~/Documents/ or ~/Desktop/! You may want to start again from a clean, empty system rather than have your content mixed in with the Solr docs/ directory; see the cleanup section at the end of this guide for how to get back to a clean starting point.
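To make the crawl step concrete, here is a rough Python sketch of the file selection that bin/post's auto and recursive modes appear to perform, based only on the file endings and depth messages in the output above. It is not SimplePostTool's actual code: the function name files_to_post, the depth convention (the starting directory is depth 0), and the demo file names are assumptions for illustration.

```python
import os
import tempfile

# File endings copied from the "Entering auto mode" line of the bin/post output above.
AUTO_MODE_ENDINGS = {
    "xml", "json", "jsonl", "csv", "pdf", "doc", "docx", "ppt", "pptx",
    "xls", "xlsx", "odt", "odp", "ods", "ott", "otp", "ots", "rtf",
    "htm", "html", "txt", "log",
}

def files_to_post(root, max_depth=999):
    """Return sorted paths (relative to root) whose ending is in AUTO_MODE_ENDINGS.

    The root directory is depth 0; directories deeper than max_depth are skipped.
    """
    selected = []
    for dirpath, dirnames, filenames in os.walk(root):
        rel = os.path.relpath(dirpath, root)
        depth = 0 if rel == os.curdir else rel.count(os.sep) + 1
        if depth >= max_depth:
            dirnames[:] = []  # prune the walk: do not descend any further
        for name in filenames:
            ending = os.path.splitext(name)[1].lower().lstrip(".")
            if ending in AUTO_MODE_ENDINGS:
                selected.append(os.path.relpath(os.path.join(dirpath, name), root))
    return sorted(selected)

# Demo on a throwaway directory tree (the file names are made up).
with tempfile.TemporaryDirectory() as tmp:
    os.makedirs(os.path.join(tmp, "changes", "old"))
    for rel_path in ["index.html", "logo.png",
                     os.path.join("changes", "Changes.html"),
                     os.path.join("changes", "old", "notes.txt")]:
        with open(os.path.join(tmp, rel_path), "w") as f:
            f.write("placeholder")
    found_all = files_to_post(tmp)
    found_shallow = files_to_post(tmp, max_depth=0)

print(found_all)      # every matching file, at any depth
print(found_shallow)  # only matching files directly inside the root
```

Pruning dirnames in place is the standard way to stop os.walk from descending, which is how the sketch honors a maximum depth.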

Index Solr XML

Solr supports indexing structured content in a variety of incoming formats. Historically, the predominant format for getting structured content into Solr has been Solr XML. Many Solr indexers have been coded to process domain content into Solr XML output, generally HTTP POSTed directly to Solr's /update endpoint.
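As a rough illustration of what such an indexer emits, the Python sketch below (standard library only) serializes plain dicts into a Solr XML <add> command and prepares, without sending, a POST to the gettingstarted collection's /update endpoint. The helper names and the example document are hypothetical. Keep in mind that bin/post also commits after posting (the "COMMITting Solr index changes" line in its output); a hand-rolled client would need to commit too, for example by adding commit=true to the update URL.

```python
import urllib.request
import xml.etree.ElementTree as ET

def solr_add_xml(docs):
    """Serialize dicts (field name -> value or list of values) as a Solr XML <add> command."""
    add = ET.Element("add")
    for doc in docs:
        doc_el = ET.SubElement(add, "doc")
        for name, value in doc.items():
            # A multi-valued field is written as repeated <field> elements with the same name.
            for v in value if isinstance(value, list) else [value]:
                ET.SubElement(doc_el, "field", name=name).text = str(v)
    return ET.tostring(add, encoding="unicode")  # ElementTree escapes &, < and > for us

def build_update_request(body, url="http://localhost:8983/solr/gettingstarted/update"):
    """Prepare (but do not send) a POST of a Solr XML body to an update endpoint."""
    return urllib.request.Request(
        url, data=body.encode("utf-8"),
        headers={"Content-Type": "application/xml"}, method="POST")

body = solr_add_xml([{"id": "example-1", "name": "Foundation", "cat": ["book", "fiction"]}])
req = build_update_request(body)
print(body)
print(req.get_method(), req.full_url)
# With Solr running, urllib.request.urlopen(req) would actually send it.
```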

The Solr install includes a handful of Solr XML files with example data (mostly mocked tech product data). Note: this tech product data has a more domain-specific configuration, including its own schema and browse UI. The bin/solr script has built-in support for it: running bin/solr start -e techproducts not only starts Solr but also indexes this data (be sure to run bin/solr stop -all before trying it out). However, the example below assumes Solr was started with bin/solr start -e cloud to stay consistent with all the examples on this page, so the collection used is "gettingstarted", not "techproducts".

Using bin/post, index the example Solr XML files in example/exampledocs/:

bin/post -c gettingstarted example/exampledocs/*.xml

Here's what you'll see:

/solr-6.4.2:$ bin/post -c gettingstarted example/exampledocs/*.xml

java -classpath /solr-6.4.2/dist/solr-core-6.4.2.jar -Dauto=yes -Dc=gettingstarted -Ddata=files org.apache.solr.util.SimplePostTool example/exampledocs/gb18030-example.xml ...

SimplePostTool version 5.0.0

Posting files to [base] url http://localhost:8983/solr/gettingstarted/update...

Entering auto mode. File endings considered are xml,json,jsonl,csv,pdf,doc,docx,ppt,pptx,xls,xlsx,odt,odp,ods,ott,otp,ots,rtf,htm,html,txt,log

POSTing file gb18030-example.xml (application/xml) to [base]

POSTing file hd.xml (application/xml) to [base]

POSTing file ipod_other.xml (application/xml) to [base]

POSTing file ipod_video.xml (application/xml) to [base]

POSTing file manufacturers.xml (application/xml) to [base]

POSTing file mem.xml (application/xml) to [base]

POSTing file money.xml (application/xml) to [base]

POSTing file monitor.xml (application/xml) to [base]

POSTing file monitor2.xml (application/xml) to [base]

POSTing file mp500.xml (application/xml) to [base]

POSTing file sd500.xml (application/xml) to [base]

POSTing file solr.xml (application/xml) to [base]

POSTing file utf8-example.xml (application/xml) to [base]

POSTing file vidcard.xml (application/xml) to [base]

14 files indexed.

COMMITting Solr index changes to http://localhost:8983/solr/gettingstarted/update...

Time spent: 0:00:02.077

...and now you can search for all sorts of things using the default Solr query syntax (a superset of the Lucene query syntax).

Note: You can browse the documents indexed so far at http://localhost:8983/solr/gettingstarted/browse. The /browse UI lets you see how Solr's technical capabilities can work in a familiar, though somewhat rough and prototypical, interactive HTML view. (The /browse view assumes by default that the gettingstarted schema and data are a catch-all mix of structured XML, JSON, and CSV example data and unstructured rich documents. Your own data may not look ideal at first, but the /browse templates are customizable.)

Index JSON

Solr supports indexing JSON, either arbitrarily structured JSON or "Solr JSON" (which is similar to Solr XML).

Solr includes a small sample Solr JSON file to illustrate this capability. Use bin/post again to index the sample JSON file:

bin/post -c gettingstarted example/exampledocs/books.json

You will see the following:

/solr-6.4.2:$ bin/post -c gettingstarted example/exampledocs/books.json

java -classpath /solr-6.4.2/dist/solr-core-6.4.2.jar -Dauto=yes -Dc=gettingstarted -Ddata=files org.apache.solr.util.SimplePostTool example/exampledocs/books.json

SimplePostTool version 5.0.0

Posting files to [base] url http://localhost:8983/solr/gettingstarted/update...

Entering auto mode. File endings considered are xml,json,jsonl,csv,pdf,doc,docx,ppt,pptx,xls,xlsx,odt,odp,ods,ott,otp,ots,rtf,htm,html,txt,log

POSTing file books.json (application/json) to [base]/json/docs

1 files indexed.

COMMITting Solr index changes to http://localhost:8983/solr/gettingstarted/update...

Time spent: 0:00:00.493

For more information on indexing Solr JSON, see the Solr-Style JSON section of the Solr Reference Guide.
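For adding documents, the simplest Solr-style JSON body is a JSON array with one object per document, where a multi-valued field is a JSON array. The Python sketch below builds such a body; the field names and values are made up, the function name is hypothetical, and insisting that every document carry an id is this sketch's own choice (it matches the uniqueKey used in this guide), not a rule quoted from Solr. See the Solr-Style JSON section mentioned above for the exact formats and endpoints Solr accepts.

```python
import json

def solr_json_docs(docs, unique_key="id"):
    """Serialize documents as a Solr-style JSON array, refusing any without a unique key."""
    missing = [i for i, doc in enumerate(docs) if unique_key not in doc]
    if missing:
        raise ValueError(f"documents at positions {missing} have no {unique_key!r} field")
    return json.dumps(docs, ensure_ascii=False)

docs = [
    {"id": "example-1", "name": "Foundation", "cat": ["book"], "price": 7.99},
    {"id": "example-2", "name": "Foundation and Empire", "cat": ["book"], "price": 7.99},
]
body = solr_json_docs(docs)
print(body)

try:
    solr_json_docs([{"name": "no id here"}])
except ValueError as err:
    print("rejected:", err)
```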

To flatten (and/or split) and index arbitrarily structured JSON, a topic beyond this quick start guide, see Transforming and Indexing Custom JSON data.

Index CSV (comma/column delimited values)

A great conduit of data into Solr is CSV, especially when the documents are homogeneous, all having the same set of fields. CSV can be conveniently exported from a spreadsheet such as Excel, or from a database such as MySQL. When getting started with Solr, it is often easiest to get your structured data into CSV format and index that into Solr, rather than attempt a more sophisticated single-step operation.
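A CSV file for Solr is typically just a header row naming the fields, followed by one row per document. The sketch below writes one with Python's csv module, which also takes care of quoting values that contain commas. The field names (id, name, price) and the rows are illustrative assumptions, and rows_to_csv is a hypothetical helper.

```python
import csv
import io

def rows_to_csv(fieldnames, rows):
    """Write dict rows as CSV text with a header row of field names."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        writer.writerow(row)  # values containing commas are quoted automatically
    return buf.getvalue()

csv_body = rows_to_csv(["id", "name", "price"], [
    {"id": "example-1", "name": "Foundation", "price": 7.99},
    {"id": "example-2", "name": "Foundation, Vol. 2", "price": 7.99},
])
print(csv_body)
```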

Using bin/post, index the included example CSV file:

bin/post -c gettingstarted example/exampledocs/books.csv

You will see:

/solr-6.4.2:$ bin/post -c gettingstarted example/exampledocs/books.csv

java -classpath /solr-6.4.2/dist/solr-core-6.4.2.jar -Dauto=yes -Dc=gettingstarted -Ddata=files org.apache.solr.util.SimplePostTool example/exampledocs/books.csv

SimplePostTool version 5.0.0

Posting files to [base] url http://localhost:8983/solr/gettingstarted/update...

Entering auto mode. File endings considered are xml,json,jsonl,csv,pdf,doc,docx,ppt,pptx,xls,xlsx,odt,odp,ods,ott,otp,ots,rtf,htm,html,txt,log

POSTing file books.csv (text/csv) to [base]

1 files indexed.

COMMITting Solr index changes to http://localhost:8983/solr/gettingstarted/update...

Time spent: 0:00:00.109

For more information, see the "CSV Formatted Index Updates" section of the Solr Reference Guide.

Other indexing techniques

- Import records from a database using the Data Import Handler (DIH).
- Use SolrJ from JVM-based languages, or other Solr clients, to programmatically create documents to send to Solr.
- Use the Admin UI Documents tab to paste in a document to be indexed, or select Document Builder from the Document Type dropdown to build a document one field at a time. Click the Submit Document button below the form to index your document.

Update data

You may notice that even if you index the content in this guide more than once, the results you find are not duplicated. This is because the example schema.xml specifies a "uniqueKey" field called "id". Whenever you POST commands to Solr to add a document with the same uniqueKey value as an existing document, it automatically replaces the existing one for you. You can see that this has happened by looking at the values of numDocs and maxDoc in the core-specific Overview section of the Solr Admin UI.

numDocs represents the number of searchable documents in the index (and will be larger than the number of XML, JSON, or CSV files, since some files contain more than one document). The maxDoc value may be larger, because the maxDoc count includes logically deleted documents that have not yet been physically removed from the index. You can re-post the sample files over and over again, and numDocs will never increase, because the new documents constantly replace the old ones.
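The relationship between numDocs and maxDoc can be mimicked with a toy model: replacing a document by its uniqueKey leaves the live count unchanged but leaves one more deleted-but-not-yet-removed document behind. The class below is purely illustrative; it is not how Lucene actually stores or merges documents, and all of its names are hypothetical.

```python
class ToyIndex:
    """Toy model of uniqueKey overwrites; NOT how Lucene actually stores documents."""

    def __init__(self, unique_key="id"):
        self.unique_key = unique_key
        self.live = {}             # uniqueKey value -> current (searchable) document
        self.pending_deletes = 0   # replaced/deleted docs not yet physically removed

    def add(self, doc):
        key = doc[self.unique_key]
        if key in self.live:
            self.pending_deletes += 1  # the old version is only marked as deleted
        self.live[key] = doc

    def delete(self, key):
        if self.live.pop(key, None) is not None:
            self.pending_deletes += 1

    @property
    def num_docs(self):
        return len(self.live)

    @property
    def max_doc(self):
        return len(self.live) + self.pending_deletes

index = ToyIndex()
sample = [{"id": "a"}, {"id": "b"}, {"id": "c"}]
for _ in range(3):  # re-post the same "files" three times
    for doc in sample:
        index.add(doc)
print(index.num_docs, index.max_doc)  # 3 9
```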

Go ahead and edit any of the existing example data files, change some of the data, and re-run the bin/post (SimplePostTool) command. You'll see your changes reflected in subsequent searches.

Delete data

You can delete data by POSTing a delete command to the update URL and specifying either the value of the document's unique key field or a query that matches multiple documents (be careful with that one!). Since these commands are small, we specify them right on the command line rather than reference a JSON or XML file.
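Both kinds of delete command are tiny XML snippets. A minimal Python sketch that builds them with the standard library might look like this; the function names are hypothetical, the example query manu_id_s:corsair is just an illustration borrowed from the faceting examples later in this guide, and the sketch assumes a single <delete> may carry several <id> elements. The resulting string can be passed to bin/post -d, as in the command below.

```python
import xml.etree.ElementTree as ET

def delete_by_ids(*ids):
    """Build a Solr XML delete command for one or more uniqueKey values."""
    root = ET.Element("delete")
    for doc_id in ids:
        ET.SubElement(root, "id").text = doc_id
    return ET.tostring(root, encoding="unicode")

def delete_by_query(query):
    """Build a Solr XML delete-by-query command (it may match many documents!)."""
    root = ET.Element("delete")
    ET.SubElement(root, "query").text = query
    return ET.tostring(root, encoding="unicode")

print(delete_by_ids("SP2514N"))              # <delete><id>SP2514N</id></delete>
print(delete_by_query("manu_id_s:corsair"))  # <delete><query>manu_id_s:corsair</query></delete>
```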

Execute the following command to delete a specific document:

bin/post -c gettingstarted -d "<delete><id>SP2514N</id></delete>"

Search

Solr can be queried via REST clients, cURL, wget, Chrome POSTMAN, and so on, as well as via native clients available for many programming languages.
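For a native-client flavor without any Solr library, the Python sketch below uses only urllib.parse to build the same kind of /select URLs that appear in the curl examples in this section. SOLR_BASE and select_url are assumptions for illustration. Note that urlencode percent-encodes characters such as * and : (so *:* becomes %2A%3A%2A), which Solr decodes back to the same query.

```python
from urllib.parse import urlencode

SOLR_BASE = "http://localhost:8983/solr"  # the base URL used throughout this guide

def select_url(collection, q, **params):
    """Build a /select URL; urlencode() percent-encodes reserved characters for us."""
    query = {"q": q, "wt": "json", "indent": "true", **params}
    return f"{SOLR_BASE}/{collection}/select?{urlencode(query)}"

url = select_url("gettingstarted", "foundation", fl="id", rows=5)
print(url)
# With Solr running, the JSON could be fetched with, e.g.:
#   import json, urllib.request
#   data = json.load(urllib.request.urlopen(url))
```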

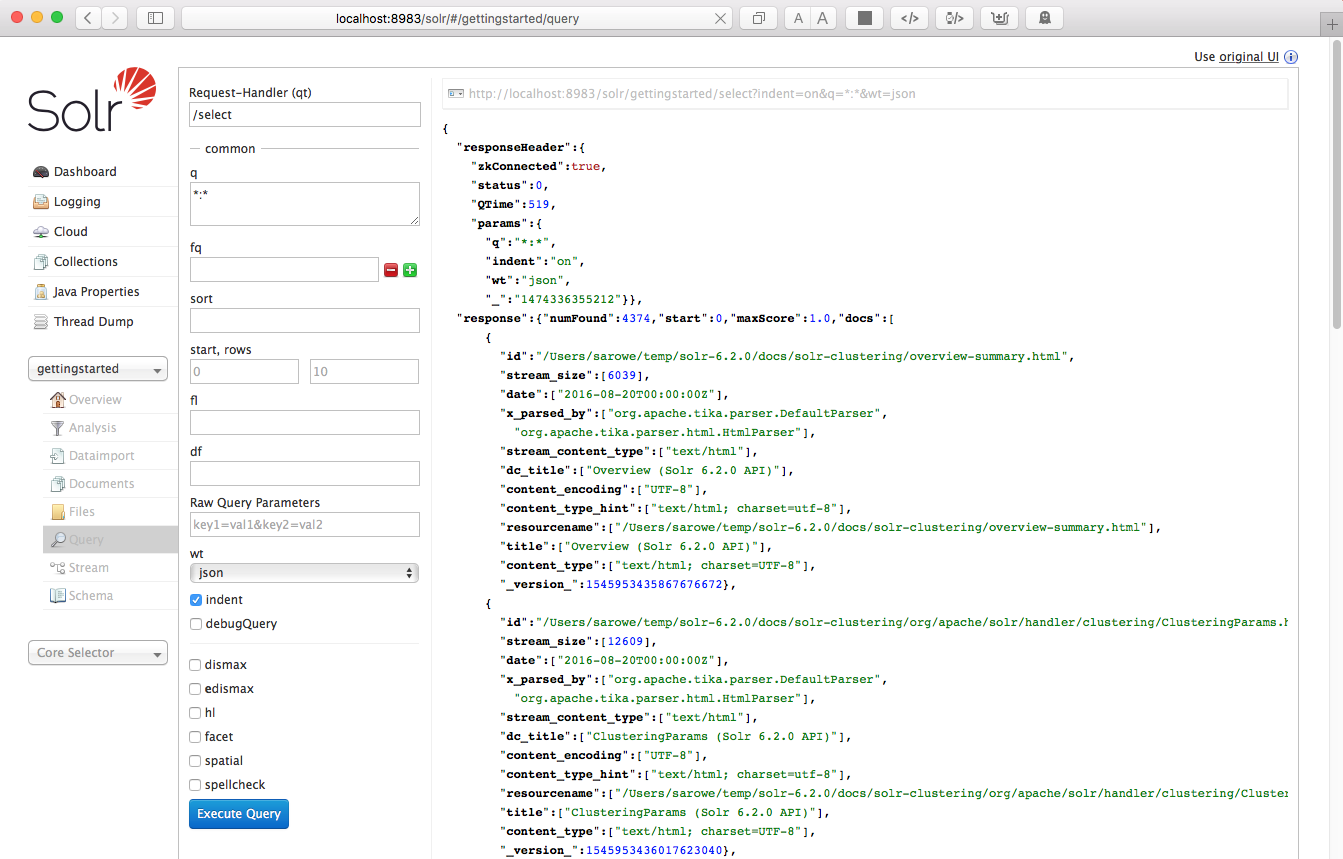

The Solr Admin UI includes a query builder interface; see the gettingstarted Query tab at http://localhost:8983/solr/#/gettingstarted/query.

If you click the Execute Query button without changing anything in the form, you'll get 10 documents in JSON format (*:* in the q param matches all documents).

The URL sent by the Admin UI to Solr is shown in light grey near the top right of the screenshot above; if you click on it, your browser will show you the raw response. To use cURL, give the same URL in quotes on the curl command line:

curl "http://localhost:8983/solr/gettingstarted/select?indent=on&q=*:*&wt=json"

Basics

Search for a single word

To search for a term, give it as the q param value in the core-specific Solr Admin UI Query section, replacing *:* with the term you want to find. To search for "foundation":

curl "http://localhost:8983/solr/gettingstarted/select?wt=json&indent=true&q=foundation"

You will get:

/solr-6.4.2$ curl "http://localhost:8983/solr/gettingstarted/select?wt=json&indent=true&q=foundation"

{

"responseHeader":{

"zkConnected":true,

"status":0,

"QTime":527,

"params":{

"q":"foundation",

"indent":"true",

"wt":"json"}},

"response":{"numFound":4156,"start":0,"maxScore":0.10203234,"docs":[

{

"id":"0553293354",

"cat":["book"],

"name":["Foundation"],

...

The response indicates that there are 4,156 hits ("numFound":4156), of which the first 10 were returned, since by default start=0 and rows=10. You can specify these params to page through results, where start is the (zero-based) position of the first result to return, and rows is the page size.
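Paging is simple arithmetic on start and rows. A small sketch, with hypothetical helper names, using the numFound value from the response above:

```python
def page_params(page, rows=10):
    """start/rows params for a zero-based page number."""
    if page < 0 or rows < 1:
        raise ValueError("page must be >= 0 and rows >= 1")
    return {"start": page * rows, "rows": rows}

def page_count(num_found, rows=10):
    """How many pages of `rows` results are needed to show num_found hits."""
    return (num_found + rows - 1) // rows  # ceiling division without floats

print(page_count(4156))  # 416
print(page_params(415))  # {'start': 4150, 'rows': 10}
```

With 4,156 hits and 10 rows per page, the last page (page 415) starts at 4150 and holds the final 6 results.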

To restrict the fields returned in the response, use the fl param, which takes a comma-separated list of field names. For example, to return only the id field:

curl "http://localhost:8983/solr/gettingstarted/select?wt=json&indent=true&q=foundation&fl=id"

q=foundation matches nearly all of the documents we've indexed, since most of the files under docs/ contain "The Apache Software Foundation". To restrict a search to a particular field, use the syntax "q=field:value". For example, to search for Foundation only in the name field:

curl "http://localhost:8983/solr/gettingstarted/select?wt=json&indent=true&q=name:Foundation"

The above request returns only one document ("numFound":1) in the response:

...

"response":{"numFound":1,"start":0,"maxScore":2.5902672,"docs":[

{

"id":"0553293354",

"cat":["book"],

"name":["Foundation"],

...

Phrase search

To search for a multi-term phrase, enclose it in double quotes: q="multiple terms here". For example, to search for "CAS latency" (note that the space between terms must be converted to "+" in a URL; the Admin UI handles URL encoding for you automatically):

curl "http://localhost:8983/solr/gettingstarted/select?wt=json&indent=true&q=\"CAS+latency\""

Response:

{

"responseHeader":{

"zkConnected":true,

"status":0,

"QTime":391,

"params":{

"q":"\"CAS latency\"",

"indent":"true",

"wt":"json"}},

"response":{"numFound":3,"start":0,"maxScore":22.027056,"docs":[

{

"id":"TWINX2048-3200PRO",

"name":["CORSAIR XMS 2GB (2 x 1GB) 184-Pin DDR SDRAM Unbuffered DDR 400 (PC 3200) Dual Channel Kit System Memory - Retail"],

"manu":["Corsair Microsystems Inc."],

"manu_id_s":"corsair",

"cat":["electronics", "memory"],

"features":["CAS latency 2, 2-3-3-6 timing, 2.75v, unbuffered, heat-spreader"],

...

Combining searches

By default, when you search for multiple terms and/or phrases in a single query, Solr only requires that one of them be present for a document to match. Documents containing more of the terms are ranked higher in the results list.

You can require that a term or phrase be present by prefixing it with "+"; conversely, to disallow the presence of a term or phrase, prefix it with "-".

To find documents that contain both terms "one" and "three", enter +one +three in the q param in the Admin UI Query tab. Because the "+" character has a reserved purpose in URLs (encoding the space character), you must URL encode it for curl as "%2B":

curl "http://localhost:8983/solr/gettingstarted/select?wt=json&indent=true&q=%2Bone+%2Bthree"

To search for documents that contain the term "two" but don't contain the term "one", enter +two -one in the q param in the Admin UI. Again, URL encode "+" as "%2B":

curl "http://localhost:8983/solr/gettingstarted/select?wt=json&indent=true&q=%2Btwo+-one"

In depth

For more Solr search options, see the Search section of the Solr Reference Guide.
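The +/- syntax and the URL encoding described above can be combined in a small helper. This is a hypothetical sketch, not a Solr client API: it does not escape Lucene special characters inside terms, and it treats any input containing a space as a phrase. quote_plus from Python's standard library reproduces the encoded q values used in the curl commands above.

```python
from urllib.parse import quote_plus

def term_or_phrase(text):
    """Wrap multi-word input in double quotes so Solr treats it as a phrase."""
    return f'"{text}"' if " " in text else text

def combine(required=(), prohibited=(), optional=()):
    """Build a query string using the +required / -prohibited prefix syntax."""
    parts = [f"+{term_or_phrase(t)}" for t in required]
    parts += [f"-{term_or_phrase(t)}" for t in prohibited]
    parts += [term_or_phrase(t) for t in optional]
    return " ".join(parts)

q1 = combine(required=["one", "three"])
q2 = combine(required=["two"], prohibited=["one"])
q3 = combine(optional=["CAS latency"])
for q in (q1, q2, q3):
    print(q, "->", quote_plus(q))  # e.g. +one +three -> %2Bone+%2Bthree
```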

Faceting

One of Solr's most popular features is faceting. Faceting allows the search results to be arranged into subsets (or buckets, or categories), providing counts for each subset. There are several types of faceting: field values, numeric and date ranges, pivots (decision trees), and arbitrary query faceting.

Field facet

In addition to providing search results, Solr queries can return the number of documents containing each unique value in the entire result set.
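In Solr's JSON response, field facet counts come back as a flat array alternating values and counts (see the manu_id_s response later in this section). A small Python sketch, with a hypothetical helper name and a shortened copy of that response, turns the flat list into (value, count) pairs. Solr's json.nl parameter can change how such lists are serialized, and facet.mincount=1 would drop zero counts on the server side; this sketch simply assumes the default flat layout.

```python
def facet_pairs(flat):
    """Turn Solr's flat [value, count, value, count, ...] facet list into (value, count) pairs."""
    if len(flat) % 2:
        raise ValueError("expected an even number of entries")
    return list(zip(flat[0::2], flat[1::2]))

# A shortened excerpt of the manu_id_s facet counts shown later in this section.
response = {"facet_counts": {"facet_fields": {"manu_id_s": [
    "corsair", 3, "belkin", 2, "canon", 2, "apple", 1, "samsung", 0]}}}

counts = facet_pairs(response["facet_counts"]["facet_fields"]["manu_id_s"])
nonzero = {value: n for value, n in counts if n > 0}
print(counts[:2])  # [('corsair', 3), ('belkin', 2)]
```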

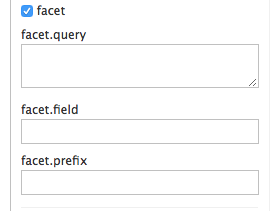

From the core-specific Admin UI Query tab, if you check the "facet" checkbox, you'll see a few facet-related options appear.

To see facet counts from all documents (q=*:*), turn on faceting (facet=true) and specify the field to facet on via the facet.field param. If you only want facets, and no document contents, specify rows=0. The curl command below returns facet counts for the manu_id_s field:

curl 'http://localhost:8983/solr/gettingstarted/select?wt=json&indent=true&q=*:*&rows=0'\
'&facet=true&facet.field=manu_id_s'

You will see:

{

"responseHeader":{

"zkConnected":true,

"status":0,

"QTime":201,

"params":{

"q":"*:*",

"facet.field":"manu_id_s",

"indent":"true",

"rows":"0",

"wt":"json",

"facet":"true"}},

"response":{"numFound":4374,"start":0,"maxScore":1.0,"docs":[]

},

"facet_counts":{

"facet_queries":{},

"facet_fields":{

"manu_id_s":[

"corsair",3,

"belkin",2,

"canon",2,

"apple",1,

"asus",1,

"ati",1,

"boa",1,

"dell",1,

"eu",1,

"maxtor",1,

"nor",1,

"uk",1,

"viewsonic",1,

"samsung",0]},

"facet_ranges":{},

"facet_intervals":{},

"facet_heatmaps":{}}}

Range facet

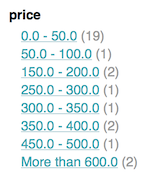

For numerics or dates, it's often desirable to partition the facet counts into ranges rather than discrete values. A prime example of numeric range faceting, using the example product data, is price. The screenshot above shows how this looks in the /browse UI.
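Conceptually, each range facet bucket counts the values that fall in [lower, lower + gap). The Python sketch below models that with the start=0, end=600, gap=50 settings used in the command that follows, assuming Solr's default facet.range.include=lower and counting anything at or above end as "after". It only handles an end that lines up with the gap and ignores values below start; the function name is hypothetical and the prices are invented, not taken from the sample data.

```python
def range_facet(values, start, end, gap):
    """Count values into [lower, lower + gap) buckets from start to end, plus an 'after' count.

    Assumes lower-inclusive buckets; values below start are ignored.
    """
    if gap <= 0 or (end - start) % gap:
        raise ValueError("this sketch needs gap > 0 and (end - start) to be a multiple of gap")
    counts = [0] * int((end - start) // gap)
    after = 0
    for v in values:
        if v >= end:
            after += 1  # at or above end: reported under "after"
        elif v >= start:
            counts[int((v - start) // gap)] += 1
    buckets = [(start + i * gap, c) for i, c in enumerate(counts)]
    return buckets, after

prices = [7.99, 19.95, 74.99, 179.99, 185.0, 399.0, 649.99, 2199.0]  # invented values
buckets, after = range_facet(prices, start=0, end=600, gap=50)
print(buckets[:4], after)  # [(0, 2), (50, 1), (100, 0), (150, 2)] 2
```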

Data from these price range facets can be displayed in JSON format using this command:

curl 'http://localhost:8983/solr/gettingstarted/select?q=*:*&wt=json&indent=on&rows=0'\
'&facet=true'\
'&facet.range=price'\
'&f.price.facet.range.start=0'\
'&f.price.facet.range.end=600'\
'&f.price.facet.range.gap=50'\
'&facet.range.other=after'

You will get:

{

"responseHeader":{

"zkConnected":true,

"status":0,

"QTime":248,

"params":{

"facet.range":"price",

"q":"*:*",

"f.price.facet.range.start":"0",

"facet.range.other":"after",

"indent":"on",

"f.price.facet.range.gap":"50",

"rows":"0",

"wt":"json",

"facet":"true",

"f.price.facet.range.end":"600"}},

"response":{"numFound":4374,"start":0,"maxScore":1.0,"docs":[]

},

"facet_counts":{

"facet_queries":{},

"facet_fields":{},

"facet_ranges":{

"price":{

"counts":[

"0.0",19,

"50.0",1,

"100.0",0,

"150.0",2,

"200.0",0,

"250.0",1,

"300.0",1,

"350.0",2,

"400.0",0,

"450.0",1,

"500.0",0,

"550.0",0],

"gap":50.0,

"after":2,

"start":0.0,

"end":600.0}},

"facet_intervals":{},

"facet_heatmaps":{}}}

Pivot facet

Another faceting type is pivot faceting, also known as "decision trees", which allows two or more fields to be nested for all the various possible combinations. Using the example tech product data, pivot facets can be used to see how many of the products in the "book" category (the cat field) are in stock or not in stock. Here's how to get at the raw data for this scenario:

curl 'http://localhost:8983/solr/gettingstarted/select?q=*:*&rows=0&wt=json&indent=on'\
'&facet=on&facet.pivot=cat,inStock'

This results in the following response (trimmed to just the book category output), which says that of the 14 items in the "book" category, 12 are in stock and 2 are not:

...

"facet_pivot":{

"cat,inStock":[{

"field":"cat",

"value":"book",

"count":14,

"pivot":[{

"field":"inStock",

"value":true,

"count":12},

{

"field":"inStock",

"value":false,

"count":2}]},

...

More faceting options

For the full story on Solr faceting, see the Faceting section of the Solr Reference Guide.
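The facet_pivot section shown earlier is a tree: each entry carries field, value, and count, plus an optional nested pivot list. A short recursive Python sketch (hypothetical helper name) can flatten it into paths with counts, using the book branch of that response as input.

```python
def flatten_pivots(entries, path=()):
    """Yield (path, count) for every node of a facet_pivot tree, depth first."""
    for entry in entries:
        here = path + ((entry["field"], entry["value"]),)
        yield here, entry["count"]
        yield from flatten_pivots(entry.get("pivot", []), here)

# The book branch of the cat,inStock pivot response shown earlier (JSON true/false -> True/False).
book_branch = [{"field": "cat", "value": "book", "count": 14, "pivot": [
    {"field": "inStock", "value": True, "count": 12},
    {"field": "inStock", "value": False, "count": 2}]}]

paths = list(flatten_pivots(book_branch))
for path, count in paths:
    print(" > ".join(f"{field}={value}" for field, value in path), count)
```

Each child count under a pivot adds up to its parent's count here (12 + 2 = 14), which is a handy sanity check when reading pivot output.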

Spatial

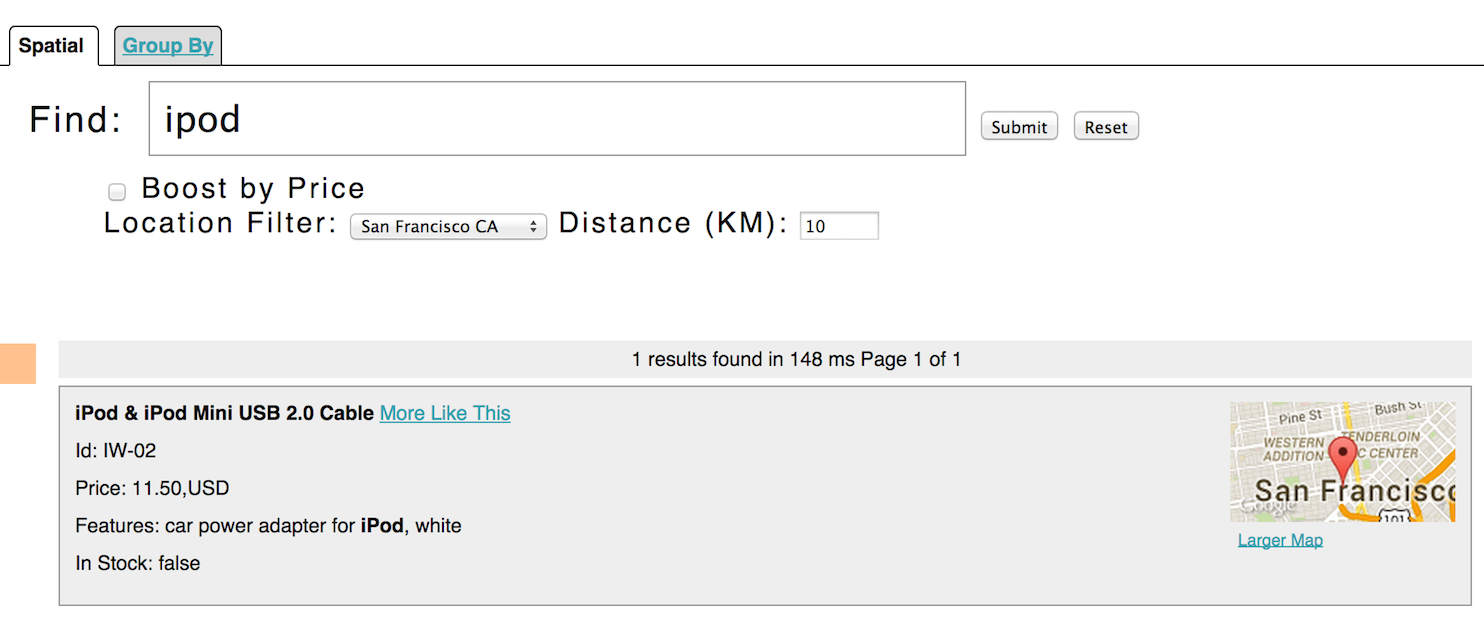

Solr has sophisticated geospatial support, including searching within a specified distance of a given location (or within a bounding box), sorting by distance, or even boosting results by distance. Some of the example tech product documents in example/exampledocs/*.xml have locations associated with them to illustrate the spatial capabilities. To run the tech products example, see the techproducts example section. Spatial queries can be combined with any other types of queries, as in this example of querying for "ipod" within 10 kilometers of San Francisco (see the screenshot above).

The URL for this example is:

http://localhost:8983/solr/techproducts/browse?q=ipod&pt=37.7752%2C-122.4232&d=10&sfield=store&fq=%7B%21bbox%7D&queryOpts=spatial&queryOpts=spatial

The /browse UI shows a map for each item and allows easy selection of the location to search near.

For more information on Solr's spatial capabilities, see the Spatial Search section of the Solr Reference Guide.
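The d and pt parameters in the example URL describe a circle of radius d kilometers around pt. The Python sketch below checks that condition with the haversine great-circle formula; the mean Earth radius of 6371 km and the helper names are assumptions, and Solr's own distance calculations may differ in detail. Also note that the example URL filters with {!bbox}, which matches the bounding box around that circle and may therefore include a few points slightly farther than d; {!geofilt} is the circular variant.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius: an assumption of this sketch

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def within(pt, d_km, candidate):
    """True if candidate (lat, lon) lies within d_km of pt (lat, lon)."""
    return haversine_km(*pt, *candidate) <= d_km

pt = (37.7752, -122.4232)                    # pt from the example URL
near = within(pt, 10, (37.8252, -122.4232))  # 0.05 degrees north, about 5.6 km
far = within(pt, 10, (37.8752, -122.4232))   # 0.1 degrees north, about 11.1 km
print(near, far)  # True False
```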

Wrap up

If you run the complete command set in this Quick Start Guide, you have done the following:

- Launched Solr in SolrCloud mode, with two nodes and one collection (gettingstarted) made up of two shards, each with two replicas
- Indexed a directory of rich text files
- Indexed Solr XML files
- Indexed a Solr JSON file
- Indexed CSV content
- Opened the admin console and used its query interface to get JSON-formatted results
- Opened the /browse interface to explore Solr's features in a friendlier, more familiar interface

Nice work! The script (see below) that runs all of these steps took about two minutes. (Your run time may vary, depending on your computer's power and the resources available.)

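Here is a Unix script for convenient copying and pasting to run the key commands of this quick start guide (the open command is macOS-specific; on most Linux desktops, xdg-open is the usual equivalent):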

date

bin/solr start -e cloud -noprompt

open http://localhost:8983/solr

bin/post -c gettingstarted docs/

open http://localhost:8983/solr/gettingstarted/browse

bin/post -c gettingstarted example/exampledocs/*.xml

bin/post -c gettingstarted example/exampledocs/books.json

bin/post -c gettingstarted example/exampledocs/books.csv

bin/post -c gettingstarted -d "<delete><id>SP2514N</id></delete>"

bin/solr healthcheck -c gettingstarted

date

Clean up

As you work through this guide, you may want to stop Solr and reset the environment back to its starting point. The following command line stops Solr and removes the directories for each of the two nodes that the start script created:

bin/solr stop -all ; rm -Rf example/cloud/