In the single control plane topology, multiple Kubernetes clusters share one Istio control plane that runs in one of the clusters. Pilot in that control plane manages the services in both the local and the remote clusters and configures the Envoy sidecar proxies in all clusters.

Cluster aware service routing

Istio 1.1 introduces cluster-aware service routing. In a single control plane topology, Istio's split-horizon EDS (Endpoint Discovery Service) feature routes service requests to other clusters through their ingress gateways. Based on where a request originates, Istio returns different endpoints for it.

In this configuration, requests from sidecar proxies in one cluster to services in the same cluster are still forwarded to the local service IPs. If the target workload runs in another cluster, the sidecar connects to it through the gateway IP of that remote cluster.
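To make the routing rule concrete, here is a toy model in POSIX shell of the decision split-horizon EDS makes for each caller. It is an illustration, not how Pilot is implemented: the network name network1, the gateway addresses, the `ip:port@network` endpoint notation, and the function names are all hypothetical.

```shell
# Toy model of split-horizon EDS (not Pilot's implementation): decide which
# address a sidecar is given for an endpoint, depending on the caller's network.
# network1, the gateway addresses and the "ip:port@network" notation are
# hypothetical illustration values.

# Hypothetical public gateway address of each network (15443 = mTLS port).
gateway_for_network() {
  case "$1" in
    network1) echo "192.0.2.10:15443" ;;
    network2) echo "198.51.100.20:15443" ;;
    *) return 1 ;;
  esac
}

# Usage: resolve_endpoint <caller-network> <ip:port@endpoint-network>
resolve_endpoint() {
  caller_net=$1
  ep_addr=${2%@*}   # text before '@': the workload address
  ep_net=${2#*@}    # text after '@': the network the workload lives in
  if [ "$ep_net" = "$caller_net" ]; then
    echo "$ep_addr"                 # same network: connect to the workload directly
  else
    gateway_for_network "$ep_net"   # other network: go through that network's gateway
  fi
}
```

For example, `resolve_endpoint network1 10.8.0.7:5000@network2` prints `198.51.100.20:15443`: a caller in network1 never sees the remote pod IP, only the gateway of the network that hosts it.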

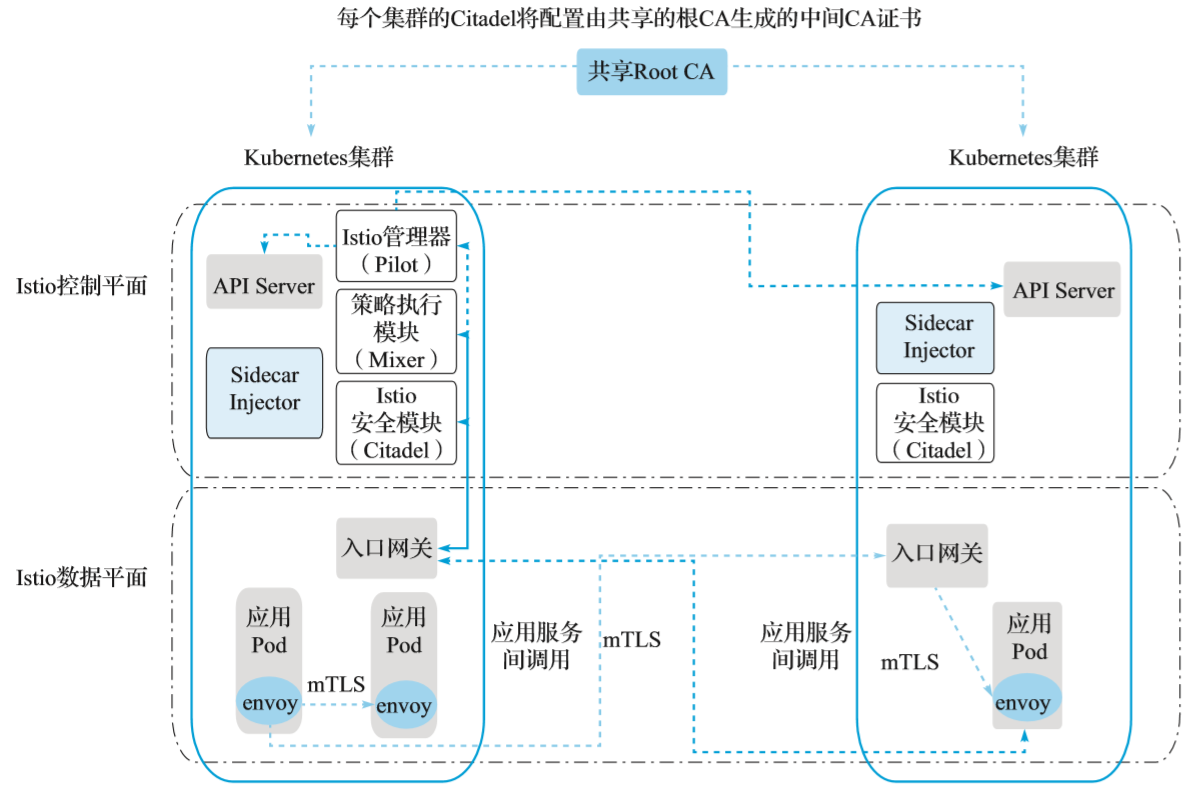

As shown in the figure, cluster1 runs the full set of Istio control plane components, while cluster2 runs only Istio Citadel, the Sidecar Injector, and the ingress gateway. No VPN connection is needed, and workloads in different clusters do not need direct network access to one another.

The Citadel in each cluster is given an intermediate CA certificate generated from a shared root CA, and this shared root CA is what enables mutual TLS communication across clusters. For illustration, both clusters here use the sample root CA certificate that ships with the Istio installation in the samples/certs directory. In a real deployment you may use a different CA certificate for each cluster, with all of them signed by the common root CA.

In each Kubernetes cluster (cluster1 and cluster2 in this example), run the following commands to create a Kubernetes secret that holds the generated CA certificates:

kubectl create namespace istio-system
kubectl create secret generic cacerts -n istio-system \
    --from-file=samples/certs/ca-cert.pem \
    --from-file=samples/certs/ca-key.pem \
    --from-file=samples/certs/root-cert.pem \
    --from-file=samples/certs/cert-chain.pem
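If both clusters are reachable from one workstation through kubeconfig contexts, the same two commands can be repeated per context. This is a sketch: the function name and the `RUN=echo` dry-run switch are hypothetical, and the context names are whatever your kubeconfig defines.

```shell
# Sketch: create istio-system and the cacerts secret in every kube context
# passed as an argument. RUN=echo (hypothetical switch) only prints commands.
# A failure in one command (e.g. namespace already exists) does not stop the loop.
create_cacerts() {
  for ctx in "$@"; do
    ${RUN:-} kubectl --context="$ctx" create namespace istio-system
    ${RUN:-} kubectl --context="$ctx" create secret generic cacerts -n istio-system \
      --from-file=samples/certs/ca-cert.pem \
      --from-file=samples/certs/ca-key.pem \
      --from-file=samples/certs/root-cert.pem \
      --from-file=samples/certs/cert-chain.pem
  done
}
```

For example, `RUN=echo create_cacerts "$CTX_LOCAL" "$CTX_REMOTE"` previews the commands, where `CTX_LOCAL` is a hypothetical context name for cluster1 and `CTX_REMOTE` is the cluster2 context variable used later in this section.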

Istio control plane components

In cluster1, where the full set of Istio control plane components is deployed, follow these steps:

1. Install Istio's CRDs and wait a few seconds for them to be committed to the Kubernetes API server:

for i in install/kubernetes/helm/istio-init/files/crd*yaml; do kubectl apply -f $i; done
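Instead of sleeping for a fixed time, you can ask the API server when the CRDs are ready. The sketch below applies each file and then runs `kubectl wait --for=condition=Established` on the same file; the function name, the 60-second timeout, and the `RUN=echo` dry-run switch are assumptions, not part of the Istio instructions.

```shell
# Sketch: apply each Istio CRD file, then wait until the API server reports the
# CRDs in it as Established. RUN=echo (hypothetical switch) prints the kubectl
# commands instead of running them.
apply_istio_crds() {
  crd_dir=${1:-install/kubernetes/helm/istio-init/files}
  for f in "$crd_dir"/crd*yaml; do
    # If the glob matched nothing, $f is the literal pattern.
    [ -e "$f" ] || { echo "no CRD files found in $crd_dir" >&2; return 1; }
    ${RUN:-} kubectl apply -f "$f" || return 1
    ${RUN:-} kubectl wait --for=condition=Established --timeout=60s -f "$f" || return 1
  done
}
```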

2. Deploy the Istio control plane in cluster1.

If the Helm dependencies are missing or out of date, update them with helm dep update. Because Istio CNI is not used here, you can temporarily remove it from the dependencies in requirements.yaml before running the update. Then render the control plane manifest:

helm template --name=istio --namespace=istio-system \
    --set global.mtls.enabled=true \
    --set security.selfSigned=false \
    --set global.controlPlaneSecurityEnabled=true \
    --set global.meshExpansion.enabled=true \
    --set "global.meshNetworks.network2.endpoints[0].fromRegistry=n2-k8s-config" \
    --set "global.meshNetworks.network2.gateways[0].address=0.0.0.0" \
    --set "global.meshNetworks.network2.gateways[0].port=15443" \
    install/kubernetes/helm/istio > ./istio-auth.yaml

Note that the gateway address is set to 0.0.0.0. This is a temporary placeholder that will be replaced with the public IP of cluster2's gateway once cluster2 has been deployed.
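For orientation, the three `global.meshNetworks` flags above describe a mesh-networks document roughly like the one this function prints. It is a sketch based on our understanding of the Istio 1.1 `meshNetworks` format (a `networks` map whose entries list `endpoints` and `gateways`); the function name is hypothetical, and the authoritative content is whatever Helm renders into the `istio` ConfigMap.

```shell
# Sketch: print the meshNetworks YAML the --set flags describe.
# $1 is the network2 gateway address (defaults to the 0.0.0.0 placeholder).
render_mesh_networks() {
  gw_addr=${1:-0.0.0.0}
  cat <<EOF
networks:
  network2:
    endpoints:
    - fromRegistry: n2-k8s-config
    gateways:
    - address: ${gw_addr}
      port: 15443
EOF
}
```

`fromRegistry: n2-k8s-config` ties network2's endpoints to the cluster registry created from the n2-k8s-config kubeconfig later in this section, and the `gateways` entry is what gets updated once cluster2's gateway IP is known.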

Deploy Istio to cluster1 as follows:

kubectl apply -f ./istio-auth.yaml

Make sure the steps above complete successfully in the Kubernetes cluster.
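One way to confirm the deployment finished is to wait for every Deployment in `istio-system` to complete its rollout. The helper below is a sketch; its name, the optional context argument, and the 300-second timeout are assumptions.

```shell
# Sketch: wait until every Deployment in istio-system has rolled out.
# $1 is an optional kube context; the 300s timeout is an arbitrary choice.
wait_for_istio() {
  ctx_flag=""
  if [ -n "${1:-}" ]; then ctx_flag="--context=$1"; fi
  # "-o name" prints entries such as deployment.apps/istio-pilot
  deployments=$(kubectl $ctx_flag -n istio-system get deployments -o name) || return 1
  for d in $deployments; do
    kubectl $ctx_flag -n istio-system rollout status "$d" --timeout=300s || return 1
  done
}
```

Run `wait_for_istio` against the current context here, or pass a context name to reuse it after installing the remote components in cluster2.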

3. Create a gateway for accessing remote services:

kubectl create -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: cluster-aware-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 15443
      name: tls
      protocol: TLS
    tls:
      mode: AUTO_PASSTHROUGH
    hosts:
    - "*"
EOF

The gateway above uses the dedicated port 15443 to pass incoming traffic through to the target service named in the request's SNI header. Mutual TLS is always used end to end, from the source service to the target service.

Although the gateway definition is applied only to cluster1, it also applies to cluster2, because both clusters communicate with the same Pilot.
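To show what "the target service named in the SNI header" looks like, the function below splits an SNI value into port, subset, and host. It assumes the DNS-SRV-style name that, to our understanding, Istio sidecars send for `AUTO_PASSTHROUGH` gateways, `outbound_.<port>_.<subset>_.<host>`, where an empty subset means no subset. The function is a hypothetical illustration, not part of Istio.

```shell
# Sketch: split an SNI of the assumed form outbound_.<port>_.<subset>_.<host>.
parse_istio_sni() {
  sni=$1
  case "$sni" in
    outbound_.*_.*_.*) ;;
    *) echo "unexpected SNI: $sni" >&2; return 1 ;;
  esac
  rest=${sni#outbound_.}
  port=${rest%%_.*}     # everything before the first "_."
  rest=${rest#*_.}      # drop "<port>_."
  subset=${rest%%_.*}   # may be empty when no subset is used
  host=${rest#*_.}      # the remaining FQDN
  echo "port=$port subset=$subset host=$host"
}
```

For example, `parse_istio_sni outbound_.5000_._.helloworld.app1.svc.cluster.local` prints `port=5000 subset= host=helloworld.app1.svc.cluster.local`, which is all the gateway needs to forward the still-encrypted connection.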

Istio remote component

Deploy the Istio remote components in the other cluster, cluster2:

1. Obtain the gateway address of cluster1 (run this command against cluster1):

export LOCAL_GW_ADDR=$(kubectl get svc --selector=app=istio-ingressgateway \
    -n istio-system -o jsonpath="{.items[0].status.loadBalancer.ingress[0].ip}")
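On a freshly created cluster the cloud load balancer may not have published an IP yet, in which case the command above silently returns an empty string. A small polling loop avoids that; this is a sketch, and the function names, retry count, and `POLL_SECONDS` variable are assumptions.

```shell
# Print the ingress gateway IP for kube context $1 (empty if not assigned yet).
get_gw_addr() {
  kubectl --context="$1" get svc -n istio-system \
    --selector=app=istio-ingressgateway \
    -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}'
}

# Retry get_gw_addr up to $2 times (default 30), POLL_SECONDS (default 5) apart.
wait_for_gw_addr() {
  tries=${2:-30}
  while [ "$tries" -gt 0 ]; do
    addr=$(get_gw_addr "$1" 2>/dev/null)
    if [ -n "$addr" ]; then
      echo "$addr"
      return 0
    fi
    tries=$((tries - 1))
    sleep "${POLL_SECONDS:-5}"
  done
  echo "istio-ingressgateway in context $1 has no load balancer IP yet" >&2
  return 1
}
```

Usage: `export LOCAL_GW_ADDR=$(wait_for_gw_addr "$CTX_LOCAL")`, where `CTX_LOCAL` is a hypothetical context name for cluster1.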

Create an Istio remote deployment YAML file using Helm by executing the following command:

helm template --name istio-remote --namespace=istio-system \
    --values install/kubernetes/helm/istio/values-istio-remote.yaml \
    --set global.mtls.enabled=true \
    --set gateways.enabled=true \
    --set security.selfSigned=false \
    --set global.controlPlaneSecurityEnabled=true \
    --set global.createRemoteSvcEndpoints=true \
    --set global.remotePilotCreateSvcEndpoint=true \
    --set global.remotePilotAddress=${LOCAL_GW_ADDR} \
    --set global.remotePolicyAddress=${LOCAL_GW_ADDR} \
    --set global.remoteTelemetryAddress=${LOCAL_GW_ADDR} \
    --set gateways.istio-ingressgateway.env.ISTIO_META_NETWORK="network2" \
    --set global.network="network2" \
    install/kubernetes/helm/istio > istio-remote-auth.yaml

2. Deploy the Istio remote component to cluster2 as follows:

kubectl apply -f ./istio-remote-auth.yaml

Make sure the steps above complete successfully in the Kubernetes cluster.

3. Update the istio ConfigMap in cluster1 with the gateway address of cluster2. First obtain cluster2's gateway address:

export REMOTE_GW_ADDR=$(kubectl get --context=$CTX_REMOTE svc --selector=app=istio-ingressgateway \
    -n istio-system -o jsonpath="{.items[0].status.loadBalancer.ingress[0].ip}")

Then edit the istio ConfigMap in the istio-system namespace of cluster1 (for example with kubectl edit configmap istio -n istio-system) and change the gateway address of network2 from 0.0.0.0 to cluster2's gateway address, ${REMOTE_GW_ADDR}. After you save the change, Pilot automatically picks up the updated network configuration.
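The manual edit can also be scripted. The sketch below pipes the ConfigMap through `sed`, touching only text of the form `address: 0.0.0.0`, and writes it back with `kubectl replace`. The function names and the `CTX_LOCAL` context name are hypothetical; preview the `sed` output first in case the placeholder appears anywhere else in your configuration.

```shell
# Replace the 0.0.0.0 gateway placeholder in YAML read from stdin with $1.
replace_gw_placeholder() {
  sed "s/address: 0\.0\.0\.0/address: $1/"
}

# Sketch: patch the istio ConfigMap in context $1 with gateway address $2.
update_mesh_networks() {
  kubectl --context="$1" -n istio-system get configmap istio -o yaml \
    | replace_gw_placeholder "$2" \
    | kubectl --context="$1" -n istio-system replace -f -
}
```

Usage: `update_mesh_networks "$CTX_LOCAL" "$REMOTE_GW_ADDR"`.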

4. Create a kubeconfig for cluster2. Run the following commands against cluster2 to build a kubeconfig for the istio-multi service account and save it as the file n2-k8s-config:

CLUSTER_NAME="cluster2"
SERVER=$(kubectl config view --minify=true -o "jsonpath={.clusters[].cluster.server}")
SECRET_NAME=$(kubectl get sa istio-multi -n istio-system -o jsonpath='{.secrets[].name}')
CA_DATA=$(kubectl get secret ${SECRET_NAME} -n istio-system -o "jsonpath={.data['ca\.crt']}")
TOKEN=$(kubectl get secret ${SECRET_NAME} -n istio-system -o "jsonpath={.data['token']}" | base64 --decode)
cat <<EOF > n2-k8s-config
apiVersion: v1
kind: Config
clusters:
  - cluster:
      certificate-authority-data: ${CA_DATA}
      server: ${SERVER}
    name: ${CLUSTER_NAME}
contexts:
  - context:
      cluster: ${CLUSTER_NAME}
      user: ${CLUSTER_NAME}
    name: ${CLUSTER_NAME}
current-context: ${CLUSTER_NAME}
users:
  - name: ${CLUSTER_NAME}
    user:
      token: ${TOKEN}
EOF
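If any of those variables comes back empty, the heredoc still writes n2-k8s-config, but the file is silently broken. A common cause is an empty SECRET_NAME: starting with Kubernetes 1.24, token Secrets are no longer created automatically for service accounts, so `{.secrets[].name}` may be blank. A fail-fast guard is sketched below; the helper name is hypothetical.

```shell
# Sketch: return non-zero (and name the culprits) if any listed variable is empty.
require_vars() {
  missing=0
  for v in "$@"; do
    eval "val=\${$v:-}"   # indirect lookup of the variable named in $v
    if [ -z "$val" ]; then
      echo "error: $v is empty" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

In a script, place `require_vars CLUSTER_NAME SERVER SECRET_NAME CA_DATA TOKEN || exit 1` just before the `cat <<EOF > n2-k8s-config` line.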

5. Add cluster2 to the Istio control plane.

Run the following commands in cluster1 to store the kubeconfig of cluster2 generated above as a secret in cluster1. After these commands run, Istio Pilot in cluster1 starts watching the services and instances of cluster2, just as it watches those in cluster1:

kubectl create secret generic n2-k8s-secret --from-file n2-k8s-config -n istio-system
kubectl label secret n2-k8s-secret istio/multiCluster=true -n istio-system

Deploy sample application

To demonstrate cross-cluster access, deploy the sleep application and version v1 of the helloworld service in the first Kubernetes cluster, cluster1, deploy version v2 of the helloworld service in the second cluster, cluster2, and then verify that the sleep application can call the helloworld service in both the local and the remote cluster.

1. Deploy sleep and version v1 of the helloworld service to the first cluster, cluster1:

kubectl create namespace app1
kubectl label namespace app1 istio-injection=enabled
kubectl apply -n app1 -f samples/sleep/sleep.yaml
kubectl apply -n app1 -f samples/helloworld/service.yaml
kubectl apply -n app1 -f samples/helloworld/helloworld.yaml -l version=v1
export SLEEP_POD=$(kubectl get -n app1 pod -l app=sleep -o jsonpath={.items..metadata.name})

2. Deploy version v2 of the helloworld service to the second cluster, cluster2:

kubectl create namespace app1
kubectl label namespace app1 istio-injection=enabled
kubectl apply -n app1 -f samples/helloworld/service.yaml
kubectl apply -n app1 -f samples/helloworld/helloworld.yaml -l version=v2

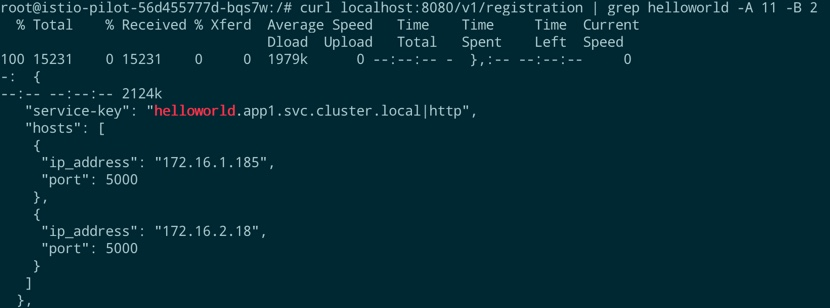

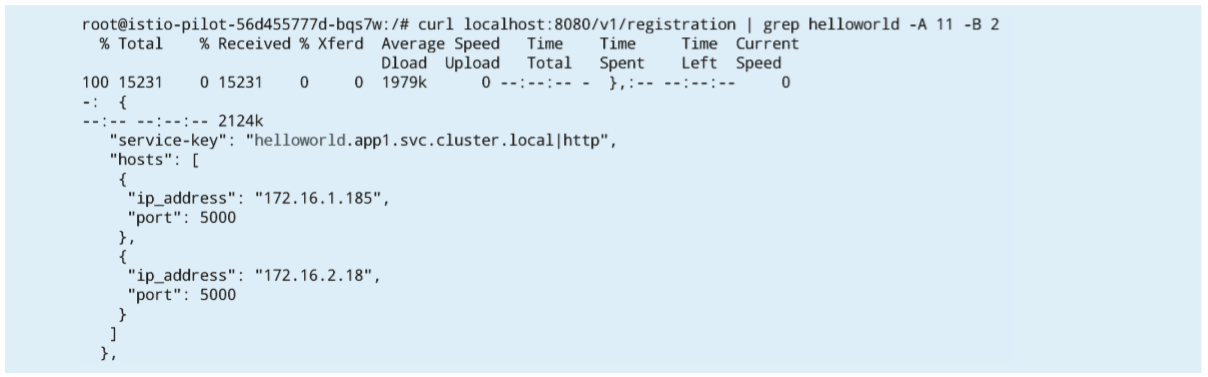
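Steps 1 and 2 differ only in the target cluster, the helloworld version, and whether sleep is included, so they can share one helper. This is a sketch: the `kc` and `deploy_helloworld` names and the `RUN=echo` dry-run switch are hypothetical, and the context names come from your kubeconfig.

```shell
# Hypothetical helper: run kubectl against context $1 (RUN=echo prints instead).
kc() {
  _ctx=$1; shift
  ${RUN:-} kubectl --context="$_ctx" "$@"
}

# Deploy helloworld version $2 into namespace app1 of context $1;
# pass "yes" as $3 to also deploy the sleep client.
deploy_helloworld() {
  ctx=$1; version=$2; with_sleep=${3:-no}
  kc "$ctx" create namespace app1
  kc "$ctx" label namespace app1 istio-injection=enabled
  if [ "$with_sleep" = yes ]; then
    kc "$ctx" apply -n app1 -f samples/sleep/sleep.yaml
  fi
  kc "$ctx" apply -n app1 -f samples/helloworld/service.yaml
  kc "$ctx" apply -n app1 -f samples/helloworld/helloworld.yaml -l version="$version"
}
```

Usage: `deploy_helloworld "$CTX_LOCAL" v1 yes` for cluster1 and `deploy_helloworld "$CTX_REMOTE" v2` for cluster2, with `CTX_LOCAL` a hypothetical context name.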

3. Log in to the Istio Pilot container in the istio-system namespace and run curl localhost:8080/v1/registration | grep helloworld -A 11 -B 2. Output similar to the following means that versions v1 and v2 of the helloworld service have both been registered with the Istio control plane:

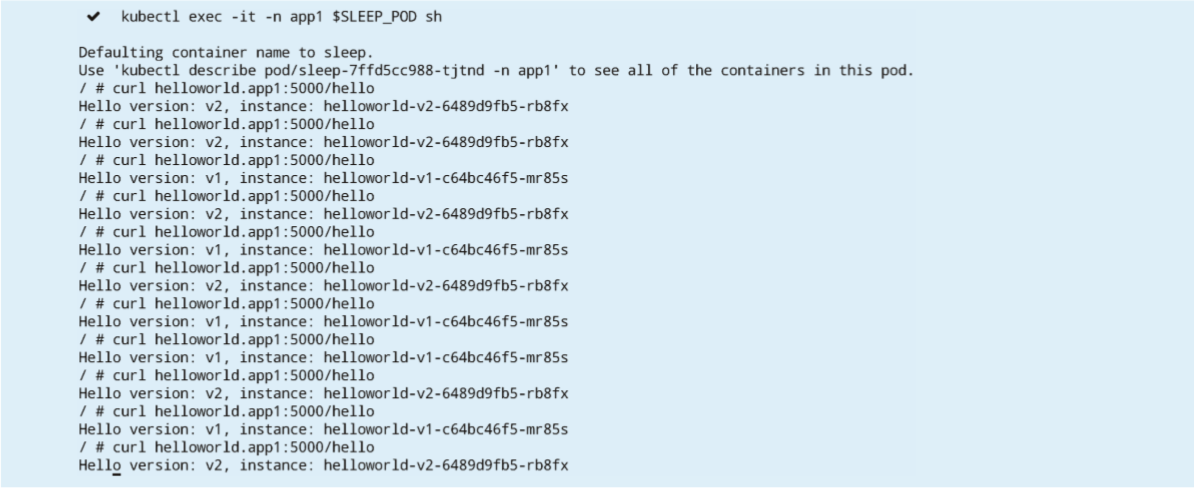

4. Verify that the sleep service in cluster1 can call the helloworld service in both the local and the remote cluster. Run the following command in cluster1:

kubectl exec -it -n app1 $SLEEP_POD -c sleep -- sh

Inside the container, run curl helloworld.app1:5000/hello.
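A single curl shows only one version, so it helps to call the service repeatedly and count the answers. The sketch below assumes the helloworld sample replies with a line containing `version: v1` or `version: v2`, and that the sleep container is named `sleep`; the function names and the default of 20 calls are hypothetical.

```shell
# One call from the sleep pod to helloworld (SLEEP_POD is set in step 1).
call_helloworld() {
  kubectl exec -n app1 "$SLEEP_POD" -c sleep -- curl -s helloworld.app1:5000/hello
}

# Call helloworld $1 times (default 20) and count responses per version.
tally_versions() {
  n=${1:-20}
  i=0
  while [ "$i" -lt "$n" ]; do
    call_helloworld
    i=$((i + 1))
  done | sed -n 's/.*version: \(v[0-9]*\).*/\1/p' | sort | uniq -c
}
```

With cross-cluster routing working, `tally_versions` should report counts for both v1 and v2. To see which endpoint each request went to, inspect the sidecar log with `kubectl logs -n app1 "$SLEEP_POD" -c istio-proxy`.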

If everything is set up correctly, repeated calls return responses from both versions of the helloworld service. You can also verify which endpoint IP address was accessed by checking the logs of the istio-proxy container in the sleep pod. The returned results are as follows: