Author: Song Tongtong

1. Introduction

nltk is a natural language processing module of python, which implements naive Bayes classification algorithm. This time, Mo will teach you how to classify tweets according to positive and negative emotions through Python and nltk modules.

Within the project, there is a code tutorial that can be run, naive ﹣ code.ipynb, and a deployment file, Deploy.ipynb, which is easy to deploy. You can combine the previously released Mo platform deployment introduction Learn how to deploy your own applications. You can also open the project address below to test the deployed application. For example, simply enter "My house is great." and "My house is not great." to determine whether they are respectively positive or negative.

The content of this article only needs to be familiar with Python. Please learn with little Mo. Project address: https://momodel.cn/explore/5eacf3097f8b5371a8480403?type=app

2. Preparations

2.1 import Kit

First, import the toolkit nltk we used.

import nltk # If there is no such package, you can operate according to the following code # pip install nltk # import nltk # nltk.download() # Download the dependent resources. Generally, Download nltk. Download ('public ')

2.2 data preparation

The training model needs a lot of labeled data to have a better effect. Here we use a small amount of data to help us understand the whole process and principle. If we need better experimental results, we can increase the number of training data. Because this model is a binary model, we need two kinds of data, marked as' positive 'and' negative '. The preliminary training model needs test data to test the effect.

# Data marked as positive pos_tweets = [('I love this car', 'positive'), ('This view is amazing', 'positive'), ('I feel great this morning', 'positive'), ('I am so excited about the concert', 'positive'), ('He is my best friend', 'positive')] # Data marked negative neg_tweets = [('I do not like this car', 'negative'), ('This view is horrible', 'negative'), ('I feel tired this morning', 'negative'), ('I am not looking forward to the concert', 'negative'), ('He is my enemy', 'negative')] #Test data, standby test_tweets = [('I feel happy this morning', 'positive'), ('Larry is my friend', 'positive'), ('I do not like that man', 'negative'), ('My house is not great', 'negative'), ('Your song is annoying', 'negative')]

2.3 feature extraction

We need to extract effective features from training data to train the model. The feature here is the tag, which is the valid word in the corresponding twitter. So, how to extract these effective words? First, segment words and turn all words into lowercase, take words longer than 2, and the resulting list represents a tweet; then, integrate all the words contained in the training data tweet.

# Data integration and segmentation, delete words less than 2 in length tweets = [] for (words, sentiment) in pos_tweets + neg_tweets: words_filtered = [e.lower() for e in words.split() if len(e) >= 3] tweets.append((words_filtered, sentiment)) print(tweets) # Extract all the words in the training data, and the word feature list is represented by the words extracted from the twitter content def get_words_in_tweets(tweets): all_words = [] for (words, sentiment) in tweets: all_words.extend(words) return all_words words_in_tweets = get_words_in_tweets(tweets) print(words_in_tweets)

In order to train the classifier, we need a unified feature, that is, whether to include the words in our thesaurus. The following feature extractor can extract the features of the input tweet word list.

# To extract features from a tweet, the dictionary obtained indicates which words the tweet contains def extract_features(document): document_words = set(document) features = { } for word in word_features: features['contains({})'.format(word)] = (word in document_words) return features print(extract_features(['love', 'this', 'car']))

2.4 making training sets and training classifiers

The training set is made by using the apply features method of nltk's classify module.

# Using the method of apply features to make training set training_set = nltk.classify.apply_features(extract_features, tweets) print(training_set)

Training naive Bayesian classifier.

# Training naive Bayesian classifier classifier = nltk.NaiveBayesClassifier.train(training_set)

At this point, our classifier preliminary training is completed, and it can be used.

3. Test work

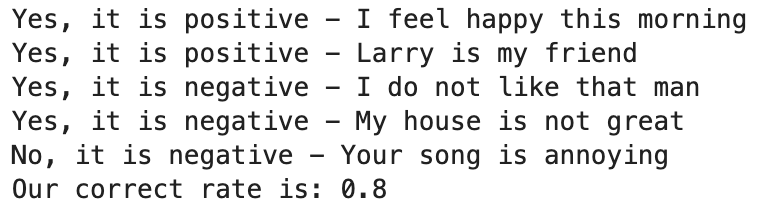

What is the effect of our classifier? Let's check it with the test set we prepared in advance. The accuracy of 0.8 can be obtained.

count = 0 for (tweet, sentiment) in test_tweets: if classifier.classify(extract_features(tweet.split())) == sentiment: print('Yes, it is '+sentiment+' - '+tweet) count = count + 1 else: print('No, it is '+sentiment+' - '+tweet) rate = count/len(test_tweets) print('Our correct rate is:', rate)

The reason why the sentence 'Your song is annoying' is classified incorrectly is that there is no information about the word 'Annoying' in our lexicon. This also illustrates the importance of datasets.

4. Analysis and summary

- The label of the classifier is the prior probability of the label. In our case, the probability of being labeled positive and negative is both 0.5.

- The feature probdist of the classifier is the feature / value probability dictionary. It is used with the label probdist to create a classifier. The feature / value probability dictionary associates the expected likelihood estimation with features and tags. We can see that when the input contains the word 'best', the probability that the input value is marked negative is 0.833.

- We can show the most informative features in the classifier by the show most informative features () method. We can see that if "not" is not included in the input, the possibility of being marked as positive is 1.6 times higher than that of negative; if "best" is not included, the possibility of being marked as negative is 1.2 times higher than that of positive.

print(classifier._label_probdist.prob('positive')) print(classifier._label_probdist.prob('negative')) print(classifier._feature_probdist) print(classifier._feature_probdist[('negative', 'contains(best)')].prob(True)) print(classifier.show_most_informative_features())

For more details, please enter the project: https://momodel.cn/explore/5eacf3097f8b5371a8480403?type=app Fork to your workbench for practical operation and learning.

5. References

- Learning materials: http://www.nltk.org/book/

- Reference blog: http://www.laurentluce.com/posts/twitter-sentiment-analysis-using-python-and-nltk/

About us

Mo (website: https://momodel.cn) It is an artificial intelligence online modeling platform supporting Python, which can help you develop, train and deploy models quickly.

The near future Mo We are also continuing to introduce machine learning related introductory courses and papers sharing activities. Welcome to our official account to get the latest information.