1 Course background

This post is a set of study notes for the second lesson, "PyTorch model inference and a general multi-task paradigm", of an AI course for beginners.

2 Assignment

Task description

Required questions:

(1) Load the resnet18 model structure from torchvision and load the pretrained weights 'resnet18-5c106cde.pth' (in the weights folder of the material package).

(2) Export the resnet18 model with the weights loaded in (1) to an ONNX file.

(3) Using torch.rand([1,3,224,224]).type(torch.float32) as input, find the computation cost and parameter count of resnet18.

(4) Using torch.rand([1,3,448,448]).type(torch.float32) as input, find the computation cost and parameter count of resnet18.

Thinking questions:

(1) Compare the results of required questions (3) and (4). What pattern do you see?

(2) Try visualizing the resnet18 ONNX file with Netron.

(3) Since model is a subclass of torch.nn.Module, its layers can be inspected not only with model.state_dict() but also with model.named_parameters() and model.parameters(). What are the differences between them?

(4) Weights are loaded with model.load_state_dict(dictionary, strict=True). When does the strict parameter need to be set to False?

Implementation and results

import torch

from thop import profile

from torchvision.models import resnet18

# Use the GPU if available, otherwise fall back to the CPU

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Build the model structure

model = resnet18(num_classes=1000).to(device)

# Load the pretrained weights onto the selected device

pretrained_state_dict = torch.load('./weights/resnet18-5c106cde.pth', map_location=device)

model.load_state_dict(pretrained_state_dict, strict=True)

# Switch to inference mode before exporting

model.eval()

# Export the model to ONNX, tracing it with a dummy input

inputs = torch.ones([1, 3, 224, 224]).type(torch.float32).to(device)

torch.onnx.export(model, inputs, './weights/resnet18.onnx', verbose=False)

# Profile the model at two input resolutions

inputs1 = torch.rand([1, 3, 224, 224]).type(torch.float32).to(device)

inputs2 = torch.rand([1, 3, 448, 448]).type(torch.float32).to(device)

# Note: thop counts multiply-accumulate operations; this post reports them as FLOPs

flops1, params1 = profile(model=model, inputs=(inputs1,))

flops2, params2 = profile(model=model, inputs=(inputs2,))

print('Model([1, 3, 224, 224]): {:.2f} GFLOPs and {:.2f}M parameters'.format(flops1/1e9, params1/1e6))

print('Model([1, 3, 448, 448]): {:.2f} GFLOPs and {:.2f}M parameters'.format(flops2/1e9, params2/1e6))

The generated resnet18.onnx file is about 45 MB.

With torch.rand([1,3,224,224]).type(torch.float32) as input, ResNet18 requires 1.82 GFLOPs and has 11.69M parameters.

With torch.rand([1,3,448,448]).type(torch.float32) as input, ResNet18 requires 7.27 GFLOPs and has 11.69M parameters.

Thinking questions

1 Analysis of computation cost and parameter count

The parameter count of a model depends only on the network structure, not on the input image. For the inputs torch.rand([1,3,224,224]).type(torch.float32) and torch.rand([1,3,448,448]).type(torch.float32), resnet18 therefore has the same parameter count, 11.69M. The computation cost, however, does depend on the input size: doubling the height and width quadruples the number of positions in every convolutional feature map, so the 448×448 input costs about 4 times as much as the 224×224 input (7.27 GFLOPs versus 1.82 GFLOPs).

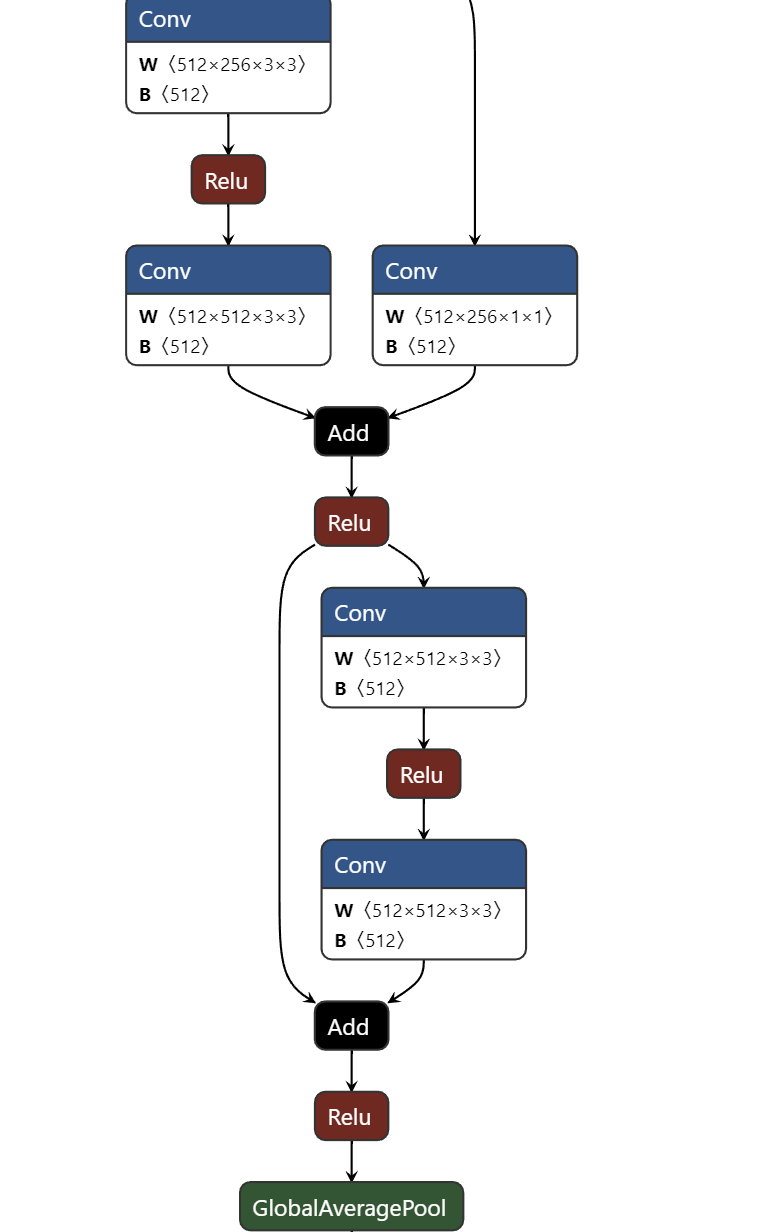
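The scaling pattern can be reproduced by hand for a single layer. The sketch below is plain Python and assumes the first convolution of torchvision's resnet18, Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False); the helper names conv_output_size and conv_cost are made up for this illustration.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_cost(height, width, in_ch=3, out_ch=64, kernel=7, stride=2, padding=3):
    """Return (weight count, multiply-accumulate count) for one bias-free conv layer."""
    out_h = conv_output_size(height, kernel, stride, padding)
    out_w = conv_output_size(width, kernel, stride, padding)
    # The weight count does not involve the input size at all.
    params = out_ch * in_ch * kernel * kernel
    # Every output position applies all the weights once.
    macs = params * out_h * out_w
    return params, macs


params_224, macs_224 = conv_cost(224, 224)
params_448, macs_448 = conv_cost(448, 448)

print(params_224, params_448)   # same weight count for both inputs
print(macs_448 / macs_224)      # ratio of computation cost
```

For this layer the weight count is 9408 for both inputs, while the multiply-accumulate count grows by exactly a factor of 4, matching the trend measured for the whole network.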

2 Visualizing the resnet18 ONNX file with Netron

3 Comparison of model.state_dict(), model.named_parameters() and model.parameters()

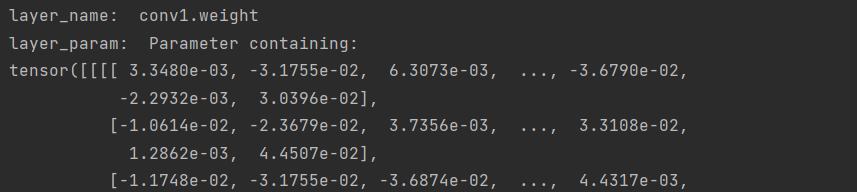

model.named_parameters() returns an iterator of tuples. Each tuple holds two items: the layer name and the layer parameter.

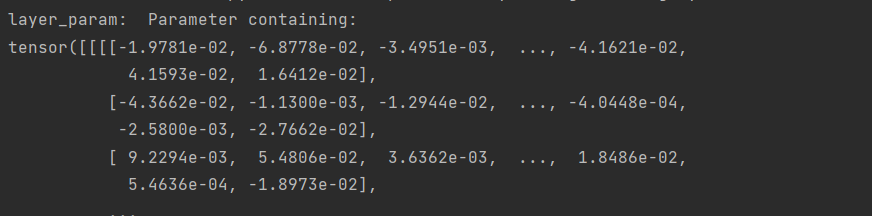

model.parameters() returns an iterator that yields only the layer parameters, without their names.

Test code for model.named_parameters()

import torch

from torchvision.models import resnet18

# Use the GPU if available, otherwise fall back to the CPU

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Build the model structure

model = resnet18(num_classes=1000).to(device)

# Each item is a (name, parameter) tuple

for layer_name, layer_param in model.named_parameters():

    print('layer_name: ', layer_name)

    print('layer_param: ', layer_param)

Test code for model.parameters()

import torch

from torchvision.models import resnet18

# Use the GPU if available, otherwise fall back to the CPU

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Build the model structure

model = resnet18(num_classes=1000).to(device)

# Each item is a parameter tensor without its name

for layer_param in model.parameters():

    print('layer_param: ', layer_param)

Test code for model.state_dict()

import torch

from torchvision.models import resnet18

# Use the GPU if available, otherwise fall back to the CPU

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Build the model structure

model = resnet18(num_classes=1000).to(device)

# state_dict() is a dictionary, so iterate over its key-value pairs

for layer_name, layer_param in model.state_dict().items():

    print('layer_name: ', layer_name)

    print('layer_param: ', layer_param)

Differences between model.named_parameters() and model.state_dict()

(1) Different return types

model.named_parameters(): returns an iterator of (layer_name, layer_param) tuples, so it is traversed with for layer_name, layer_param in model.named_parameters()

model.state_dict(): returns an ordered dictionary that stores layer_name and layer_param as key-value pairs, so it is traversed with for layer_name, layer_param in model.state_dict().items()

(2) Different sets of stored entries

model.named_parameters(): contains only the parameters that can be learned and updated

model.state_dict(): contains all parameters of all layers in the model, plus persistent buffers such as the running statistics of batch normalization layers

(3) Different requires_grad attribute of the returned values

model.named_parameters(): requires_grad is True by default

model.state_dict(): requires_grad is False, because the tensors are returned detached by default
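The three differences can be observed on a much smaller network than resnet18. The following is a minimal sketch using a toy two-layer model (a convolution followed by batch normalization) that is not part of the original assignment; all variable names are illustrative.

```python
import types
from collections import OrderedDict

from torch import nn

# Toy model: one bias-free convolution followed by batch normalization.
model = nn.Sequential(nn.Conv2d(3, 8, kernel_size=3, bias=False), nn.BatchNorm2d(8))

# (1) Return types: generators versus an ordered dictionary.
is_generator = isinstance(model.named_parameters(), types.GeneratorType)
is_ordered_dict = isinstance(model.state_dict(), OrderedDict)

# (2) Stored entries: state_dict() also holds the batch-norm buffers.
param_names = [name for name, _ in model.named_parameters()]
state_names = list(model.state_dict().keys())
buffer_only = [name for name in state_names if name not in param_names]

# (3) requires_grad: True for parameters, False for state_dict() tensors.
params_require_grad = all(p.requires_grad for p in model.parameters())
state_requires_grad = any(t.requires_grad for t in model.state_dict().values())

print(param_names)
print(buffer_only)
print(params_require_grad, state_requires_grad)
```

The names that appear only in the state dict are the running mean, running variance, and batch counter of the batch normalization layer, which are updated during the forward pass rather than by the optimizer.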

4 What is the role of strict in model.load_state_dict(dictionary, strict=True)?

The dictionary is usually the state dict read from a weight file; strict controls how strictly its keys must match the model.

If strict=True, the keys in the weight file must match the keys of the model's state dict exactly; any missing or unexpected key raises an error.

If strict=False, only the entries whose keys match the model are loaded, and missing or unexpected keys are ignored. This is the setting to use when the weight file and the model only partly correspond, for example after layers have been replaced or added.
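The behaviour of the two settings can be illustrated with a toy model. The sketch below is not from the original assignment: make_model is a hypothetical helper, and the "weight file" is simulated by a dictionary that lacks the last layer and contains one extra key.

```python
import torch
from torch import nn


def make_model():
    """Hypothetical toy network: a small backbone (index 0) and a head (index 2)."""
    return nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))


source = make_model()

# Simulate a weight file that lacks the head and carries one unrelated entry.
partial = {k: v for k, v in source.state_dict().items() if not k.startswith('2.')}
partial['extra.weight'] = torch.zeros(1)

target = make_model()

# strict=True rejects the mismatch with a RuntimeError.
try:
    target.load_state_dict(partial, strict=True)
    strict_failed = False
except RuntimeError:
    strict_failed = True

# strict=False loads the matching keys and reports the rest.
result = target.load_state_dict(partial, strict=False)
missing = sorted(result.missing_keys)
unexpected = list(result.unexpected_keys)

print(strict_failed)
print(missing, unexpected)
```

The value returned by load_state_dict lists the missing and unexpected keys, so it is worth inspecting after a non-strict load to confirm that only the intended layers were skipped. Note that strict=False relaxes key matching only; a tensor whose shape differs from the model's still raises an error.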

Learning experience

In this lesson we loaded a model and its weight file and measured the model's parameter count and computation cost, which lays a foundation for later model training and testing.

References

https://blog.csdn.net/weixin_41712499/article/details/110198423