Preface

This article shows how to use Scrapy to scrape app details pages from Anzhi Market (for example, the page for Peace Elite), collecting the app name, version number, icon, category, release time, size, download count, author, introduction, update notes, software screenshots, highlights, etc. Image resources (the icon and the market screenshots) are downloaded locally, and all data is stored in the database.

Questions to consider:

- Database design for storage

- Image resource links are redirected

- The downloaded app icon needs a .png suffix

- ...

You should be familiar with the Scrapy framework first.

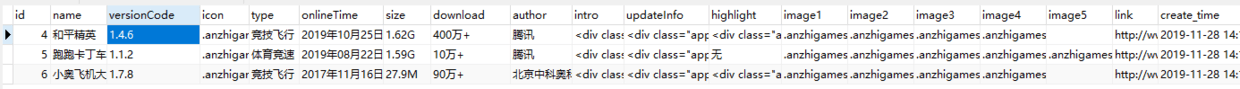

Database Design

The database is MySQL, named app_anzhigame, with a table named games. Anzhi Market shows at most 4-5 screenshots per app, the introduction and other text fields are under 1500 characters, and image paths are stored as relative addresses.

```sql
# Create the database
CREATE DATABASE app_anzhigame CHARSET=utf8mb4 COLLATE=utf8mb4_general_ci;
USE app_anzhigame;
DROP TABLE IF EXISTS games;
# Create the table
CREATE TABLE games(
    id INTEGER(11) UNSIGNED AUTO_INCREMENT,
    name VARCHAR(20) COLLATE utf8mb4_general_ci NOT NULL COMMENT 'Game name',
    versionCode VARCHAR(10) COLLATE utf8mb4_general_ci NOT NULL DEFAULT 'v1.0' COMMENT 'Version number',
    icon VARCHAR(100) COLLATE utf8mb4_general_ci NOT NULL DEFAULT '' COMMENT 'Game icon',
    type VARCHAR(20) COLLATE utf8mb4_general_ci NOT NULL DEFAULT '' COMMENT 'Category',
    onlineTime VARCHAR(20) COLLATE utf8mb4_general_ci COMMENT 'Release time',
    size VARCHAR(10) COLLATE utf8mb4_general_ci NOT NULL DEFAULT '0B' COMMENT 'Size',
    download VARCHAR(10) COLLATE utf8mb4_general_ci NOT NULL DEFAULT '0' COMMENT 'Downloads',
    author VARCHAR(20) COLLATE utf8mb4_general_ci COMMENT 'Author',
    intro VARCHAR(1500) COLLATE utf8mb4_general_ci COMMENT 'Introduction',
    updateInfo VARCHAR(1500) COLLATE utf8mb4_general_ci COMMENT 'Update notes',
    highlight VARCHAR(1500) COLLATE utf8mb4_general_ci COMMENT 'Highlights',
    image1 VARCHAR(100) COLLATE utf8mb4_general_ci COMMENT 'Market screenshot 1',
    image2 VARCHAR(100) COLLATE utf8mb4_general_ci COMMENT 'Market screenshot 2',
    image3 VARCHAR(100) COLLATE utf8mb4_general_ci COMMENT 'Market screenshot 3',
    image4 VARCHAR(100) COLLATE utf8mb4_general_ci COMMENT 'Market screenshot 4',
    image5 VARCHAR(100) COLLATE utf8mb4_general_ci COMMENT 'Market screenshot 5',
    link VARCHAR(200) COLLATE utf8mb4_general_ci COMMENT 'Crawled link',
    create_time TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'Creation time',
    update_time TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT 'Update time',
    PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_general_ci COMMENT 'Anzhi Market crawled game list';
```
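Because the table caps the text fields at VARCHAR(1500) and only has five screenshot columns (image1 to image5), scraped values may need trimming before they are inserted. The sketch below is a hypothetical pre-processing step; fit_text and image_columns are illustrative names, not part of the project. MySQL counts VARCHAR length in characters, so a Python character slice matches the column limit.

```python
# Hypothetical helpers that fit scraped values to the games table design:
# text columns are VARCHAR(1500) and there are exactly 5 screenshot columns.
MAX_TEXT = 1500
MAX_IMAGES = 5


def fit_text(value, limit=MAX_TEXT):
    """Return value truncated to the column limit ('' for None)."""
    if value is None:
        return ""
    return value[:limit]


def image_columns(paths, count=MAX_IMAGES):
    """Map screenshot paths onto exactly `count` columns, padding with ''."""
    paths = list(paths or [])[:count]
    return paths + [""] * (count - len(paths))
```

Truncating silently loses text, so logging when a value is cut may be preferable in practice.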

Create item

Create the project with scrapy startproject anzhispider, then modify items.py:

```python
import scrapy


class AnzhispiderItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    # Link address
    link = scrapy.Field()
    # App name
    name = scrapy.Field()
    # Version number
    versionCode = scrapy.Field()
    # Game icon URL
    icon = scrapy.Field()
    # Icon storage path
    iconPath = scrapy.Field()
    # Category
    type = scrapy.Field()
    # Release time
    onlineTime = scrapy.Field()
    # Size
    size = scrapy.Field()
    # Downloads
    download = scrapy.Field()
    # Author
    author = scrapy.Field()
    # Introduction
    intro = scrapy.Field()
    # Update notes
    updateInfo = scrapy.Field()
    # Highlights
    highlight = scrapy.Field()
    # Market screenshot URLs (list)
    images = scrapy.Field()
    # Market screenshot storage paths (list)
    imagePaths = scrapy.Field()
```

Create Spider

Create AnzhiSpider.py in the spiders directory and define the class AnzhiSpider, which inherits from scrapy.Spider.

```python
from scrapy import Spider

from anzhispider.items import AnzhispiderItem


class AnzhiSpider(Spider):
    name = "AnzhiSpider"
    # Domains allowed to be crawled
    allowed_domains = ["www.anzhi.com"]
    start_urls = ["http://www.anzhi.com/pkg/3d81_com.tencent.tmgp.pubgmhd.html"]
    # start_urls = ["http://www.anzhi.com/pkg/3d81_com.tencent.tmgp.pubgmhd.html",
    #               "http://www.anzhi.com/pkg/84bf_com.sxiaoao.feijidazhan.html",
    #               "http://www.anzhi.com/pkg/4f41_com.tencent.tmgp.WePop.html"]

    # Detail labels mapped to item fields. The label and section-title strings in this
    # spider are translated; they must match the text shown on the page exactly.
    DETAIL_FIELDS = {
        "Classification:": "type",
        "Time:": "onlineTime",
        "Size:": "size",
        "Download:": "download",
        "Author:": "author",
    }

    def parse(self, response):
        item = AnzhispiderItem()
        root = response.xpath('.//div[@class="content_left"]')
        # Link
        item['link'] = response.url
        # Icon
        item['icon'] = root.xpath('.//div[@class="app_detail"]/div[@class="detail_icon"]/img/@src').extract()[0]
        # App name
        item['name'] = root.xpath(
            './/div[@class="app_detail"]/div[@class="detail_description"]/div[@class="detail_line"]/h3/text()').extract()[0]
        # Version number, shown in parentheses on the page
        item['versionCode'] = root.xpath(
            './/div[@class="app_detail"]/div[@class="detail_description"]/div[@class="detail_line"]'
            '/span[@class="app_detail_version"]/text()').extract()[0]
        if item['versionCode'] and item['versionCode'].startswith("(") and item['versionCode'].endswith(")"):
            item['versionCode'] = item['versionCode'][1:-1]
        # Category, release time, size, downloads, author: collect all detail texts first
        details = root.xpath(
            './/div[@class="app_detail"]/div[@class="detail_description"]/ul[@id="detail_line_ul"]/li/text()').extract()
        details_right = root.xpath(
            './/div[@class="app_detail"]/div[@class="detail_description"]/ul[@id="detail_line_ul"]/li/span/text()').extract()
        details.extend(details_right)
        for detailItem in details:
            for prefix, field in self.DETAIL_FIELDS.items():
                if detailItem.startswith(prefix):
                    # Cut off the whole label rather than a fixed number of characters
                    item[field] = detailItem[len(prefix):]
                    break
        # Introduction, update notes and highlights share the same block layout
        item['intro'] = self.section_html(root, "introduction")
        item['updateInfo'] = self.section_html(root, "update description")
        item['highlight'] = self.section_html(root, "exciting content")
        # Market screenshot addresses
        item['images'] = root.xpath(
            './/div[@class="app_detail_list"][contains(./div[@class="app_detail_title"], "software screenshot")]'
            '//ul/li/img/@src').extract()
        yield item

    @staticmethod
    def section_html(root, title):
        """Return the HTML of the detail block whose title contains `title`, minus tabs and line breaks."""
        html = root.xpath(
            './/div[@class="app_detail_list"][contains(./div[@class="app_detail_title"], "%s")]'
            '/div[@class="app_detail_infor"]' % title).extract()
        if html:
            return html[0].replace('\t', '').replace('\n', '').replace('\r', '')
        return ""
```
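The text handling inside parse() does not depend on Scrapy, so it can be pulled into plain functions and tested without a crawl. This is a hypothetical sketch: parse_details, strip_parens and clean_html_text are illustrative names, and the English labels are the translated ones used in the spider; against the live page, the prefixes must be the page's own label text.

```python
# Hypothetical standalone version of the text handling in AnzhiSpider.parse().
DETAIL_FIELDS = {
    "Classification:": "type",
    "Time:": "onlineTime",
    "Size:": "size",
    "Download:": "download",
    "Author:": "author",
}


def parse_details(details, fields=DETAIL_FIELDS):
    """Map detail <li> texts such as 'Size:1.8GB' to item fields."""
    result = {}
    for text in details:
        text = text.strip()
        for prefix, field in fields.items():
            if text.startswith(prefix):
                # Slice by the label's own length, so any label language works
                result[field] = text[len(prefix):].strip()
                break
    return result


def strip_parens(version):
    """Turn '(1.0.0)' into '1.0.0'; other strings are returned unchanged."""
    if version and version.startswith("(") and version.endswith(")"):
        return version[1:-1]
    return version


def clean_html_text(fragment):
    """Drop tabs and line breaks, as parse() does for intro/updateInfo/highlight."""
    return fragment.replace("\t", "").replace("\n", "").replace("\r", "")
```

Texts that match no label (for example unrelated list entries) are simply ignored.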

Download the icon and market screenshots

Create ImageResPipeline, inheriting from FilesPipeline (from scrapy.pipelines.files import FilesPipeline). ImagesPipeline is not used here; according to the official ImagesPipeline documentation, its main features are:

- Convert all downloaded images to a common format (JPG) and mode (RGB)

- Avoid re-downloading images that were downloaded recently

- Generate thumbnails

- Check the width/height of images to make sure they meet minimum size constraints

The key point is that ImagesPipeline saves every image as JPG, but I need the icon as a PNG with a transparent background. Converting the type does not help: ImagesPipeline drops the transparency, so the transparent rounded corners of the icon get filled in.
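The corners get filled because JPG/RGB has no alpha channel: every semi-transparent pixel has to be blended onto some opaque colour. The pure-Python sketch below (no Pillow; composite_over and flatten are hypothetical names) shows standard alpha compositing over white, which, as far as I know, is the background ImagesPipeline uses when it flattens RGBA PNGs.

```python
# Minimal sketch of flattening RGBA pixels to RGB, as any JPG conversion must.
def composite_over(pixel, background=(255, 255, 255)):
    """Alpha-blend one RGBA pixel (0-255 channels) over an opaque RGB background."""
    r, g, b, a = pixel
    alpha = a / 255
    return tuple(
        round(c * alpha + bg * (1 - alpha))
        for c, bg in zip((r, g, b), background)
    )


def flatten(pixels, background=(255, 255, 255)):
    """Convert a list of RGBA pixels to RGB by compositing each one."""
    return [composite_over(p, background) for p in pixels]
```

A fully transparent corner pixel comes out as the background colour, which is exactly the "filled" corner seen on the icon.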

```python
import datetime
import os

import scrapy
from scrapy.exceptions import DropItem
from scrapy.pipelines.files import FilesPipeline

# FILES_STORE is defined in settings.py (see "Configure settings.py")
from anzhispider.settings import FILES_STORE


class ImageResPipeline(FilesPipeline):
    def get_media_requests(self, item, info):
        '''
        Send a download request for each file URL.
        :param item:
        :param info:
        :return:
        '''
        # The index distinguishes the icon (0) from the market screenshots (1..n)
        yield scrapy.Request(url='http://www.anzhi.com' + item['icon'], meta={'item': item, 'index': 0})
        # Market screenshot downloads
        for i in range(0, len(item['images'])):
            yield scrapy.Request(url='http://www.anzhi.com' + item['images'][i], meta={'item': item, 'index': (i + 1)})

    def file_path(self, request, response=None, info=None, *, item=None):
        '''
        Custom file save path.
        By default files are saved under FILES_STORE/full/. To store them directly under
        FILES_STORE or under a date path, the storage path has to be customized.
        The default file name is a hash of the URL, with an extension only if one can be
        guessed from the URL. Depending on the index, the icon gets a .png suffix and the
        market screenshots get a .jpg suffix.
        :param request:
        :param response:
        :param info:
        :return:
        '''
        item = request.meta['item']
        index = request.meta['index']
        today = str(datetime.date.today())
        # Store under FILES_STORE as YYYY/MM/dd/<app name>/, e.g. 2019/11/28/Peace Elite/
        outDir = today[0:4] + "/" + today[5:7] + "/" + today[8:] + "/" + item['name'] + "/"
        if index > 0:
            # index > 0 is a screenshot named <index>.jpg. Note: the number must be converted to a
            # string, otherwise the download fails without reporting the reason!
            file_name = outDir + str(index) + ".jpg"
        else:
            # index == 0 is the icon, which must be saved as PNG
            file_name = outDir + "icon.png"
        # Delete the output file if it already exists
        if os.path.exists(FILES_STORE + file_name):
            os.remove(FILES_STORE + file_name)
        return file_name

    def item_completed(self, results, item, info):
        '''
        Process the download results.
        results has the format:
        [(True,
          {'checksum': '2b00042f7481c7b056c4b410d28f33cf',
           'path': 'full/7d97e98f8af710c7e7fe703abc8f639e0ee507c4.jpg',
           'url': 'http://www.example.com/images/product1.jpg'}),
         (True,
          {'checksum': 'b9628c4ab9b595f72f280b90c4fd093d',
           'path': 'full/1ca5879492b8fd606df1964ea3c1e2f4520f076f.jpg',
           'url': 'http://www.example.com/images/product2.jpg'}),
         (False,
          Failure(...))]
        '''
        file_paths = [x['path'] for ok, x in results if ok]
        if not file_paths:
            raise DropItem("Item contains no files")
        for file_path in file_paths:
            if file_path.endswith("png"):
                # The icon's path goes into iconPath
                item['iconPath'] = FILES_STORE + file_path
            else:
                # Market screenshot path; create the imagePaths list if it does not exist yet
                if 'imagePaths' not in item:
                    item['imagePaths'] = []
                item['imagePaths'].append(FILES_STORE + file_path)
        return item
```
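The path naming and result splitting in ImageResPipeline are plain string logic, so they can be checked without downloading anything. A hypothetical sketch (build_file_path and split_results are illustrative names) that follows the same YYYY/MM/DD/<app name>/ layout and the (success, info) result tuples shown in the item_completed docstring:

```python
import datetime
import posixpath


def build_file_path(app_name, index, day=None):
    """Return 'YYYY/MM/DD/<app>/icon.png' for index 0, else 'YYYY/MM/DD/<app>/<index>.jpg'."""
    day = day or datetime.date.today()
    out_dir = posixpath.join(day.strftime("%Y"), day.strftime("%m"), day.strftime("%d"), app_name)
    file_name = "icon.png" if index == 0 else str(index) + ".jpg"
    return posixpath.join(out_dir, file_name)


def split_results(results, store):
    """Split (success, info) results into (icon_path, image_paths), prefixed with store."""
    icon_path, image_paths = None, []
    for ok, info in results:
        if not ok:
            continue  # failed downloads are skipped, as in item_completed
        full = store + info["path"]
        if info["path"].endswith(".png"):
            icon_path = full
        else:
            image_paths.append(full)
    return icon_path, image_paths
```

Passing the date in explicitly keeps the function deterministic, which is what makes it easy to test.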

Database Storage

PyMySQL==0.9.2 is used to connect to MySQL. A small helper class is created in a separate module: insert, update and delete statements call update(self, sql), and query statements call query(self, sql).

```python
import logging

import pymysql

# thread_logger is not defined in the original snippet; a module-level logger is assumed here
thread_logger = logging.getLogger(__name__)


class MySQLHelper:
    def __init__(self):
        pass

    def query(self, sql):
        # Open the database connection
        db = self.conn()
        # Get an operation cursor with cursor()
        cur = db.cursor()
        # 1. Query operation, e.g. sql = "select * from user"
        try:
            cur.execute(sql)  # Execute the SQL statement
            return cur.fetchall()  # Fetch all records returned by the query
        except Exception as e:
            thread_logger.debug('[mysql]: {} \n\tError SQL: {}'.format(e, sql))
            raise
        finally:
            self.close(db)  # Close the connection

    def update(self, sql):
        # 2. Insert, update and delete operations
        db = self.conn()
        cur = db.cursor()
        try:
            cur.execute(sql)
            db.commit()  # Commit the transaction
            return True
        except Exception as e:
            thread_logger.debug('[mysql]: {} \n\tError SQL: {}'.format(e, sql))
            db.rollback()  # Roll back on error
            return False
        finally:
            self.close(db)

    # Open a connection
    def conn(self):
        return pymysql.connect(host="192.168.20.202", user="***", password="****", db="app_anzhigame",
                               port=3306, use_unicode=True, charset="utf8mb4")

    # Close the connection
    def close(self, db):
        db.close()
```
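MySQLHelper receives finished SQL strings, so any quoting has to happen before the call. A sketch of the same commit-or-rollback pattern with values passed separately from the SQL, using the standard-library sqlite3 module as a stand-in so it runs without a MySQL server. SQLiteHelper is a hypothetical name; sqlite3 uses ? placeholders, while PyMySQL's cursor.execute(sql, args) uses %s.

```python
import sqlite3


class SQLiteHelper:
    """Hypothetical stand-in for MySQLHelper built on the stdlib sqlite3 driver.

    Like MySQLHelper.update, a failed statement is rolled back and reported as
    False; unlike it, values travel separately from the SQL text (? placeholders),
    so a quote inside a value cannot break the statement.
    """

    def __init__(self, path=":memory:"):
        # One connection is kept open because an in-memory database is
        # discarded as soon as its connection closes.
        self.db = sqlite3.connect(path)

    def update(self, sql, args=()):
        cur = self.db.cursor()
        try:
            cur.execute(sql, args)
            self.db.commit()
            return True
        except sqlite3.Error:
            self.db.rollback()
            return False
        finally:
            cur.close()

    def query(self, sql, args=()):
        cur = self.db.cursor()
        try:
            cur.execute(sql, args)
            return cur.fetchall()
        finally:
            cur.close()

    def close(self):
        self.db.close()
```

For example, inserting the text "It's a game" through update() works unchanged, because the driver handles the apostrophe.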

Modify AnzhispiderPipeline to insert the data; fields that are missing fall back to default values:

```python
from pymysql.converters import escape_string

# MySQLHelper, parseProperty and parseImageList are this project's own helpers, described in this article


class AnzhispiderPipeline(object):
    """
    Database storage
    """

    def __init__(self):
        # Database helper
        self.mysqlHelper = MySQLHelper()

    def process_item(self, item, spider):
        values = (
            item['link'], item['name'],
            parseProperty(item, "versionCode", "v1.0"),
            parseProperty(item, "iconPath", ""),
            parseProperty(item, "type", ""),
            parseProperty(item, "onlineTime", ""),
            parseProperty(item, "size", "0B"),
            parseProperty(item, "download", "0"),
            parseProperty(item, "author", "Unknown"),
            parseProperty(item, "intro", "nothing"),
            parseProperty(item, "updateInfo", "nothing"),
            parseProperty(item, "highlight", "nothing"),
            parseImageList(item, 0), parseImageList(item, 1), parseImageList(item, 2),
            parseImageList(item, 3), parseImageList(item, 4))
        # Escape quotes and backslashes so text such as the intro HTML cannot break the statement
        values = tuple(escape_string(str(v)) for v in values)
        # SQL for storing the item in the database
        sql = "INSERT INTO games(link,name,versionCode,icon,type,onlineTime,size,download,author,intro,updateInfo,highlight,image1,image2,image3,image4,image5) " \
              "VALUES ('%s','%s','%s','%s','%s','%s','%s','%s','%s','%s','%s','%s','%s','%s','%s','%s','%s')" % values
        # Insert the data
        self.mysqlHelper.update(sql)
        return item
```

parseProperty(item, property, defaultValue) is a custom helper that returns a default value when the property is missing or empty, and parseImageList(item, index) returns the market screenshot address at the given index (or an empty string if there is none).

```python
def parseProperty(item, property, defaultValue):
    """
    Return the default value if the item's property is missing or empty
    :param item: object
    :param property: property name
    :param defaultValue: default value
    """
    if property in item and item[property]:
        return item[property]
    else:
        return defaultValue


def parseImageList(item, index):
    """
    Return the market screenshot address at the given index
    :param item:
    :param index:
    :return:
    """
    if "imagePaths" in item and item["imagePaths"]:
        # There are screenshots: check the list size
        if len(item["imagePaths"]) >= index + 1:
            return item["imagePaths"][index]
        else:
            return ""
    else:
        return ""
```
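With PyMySQL, the usual way to avoid quoting problems altogether is to let the driver fill %s placeholders via cursor.execute(sql, params). The sketch below is a hypothetical build_insert that assembles the same 17 columns and defaults as AnzhispiderPipeline (reproducing parseProperty/parseImageList inline) and returns (sql, params); it works on any mapping, including a Scrapy item.

```python
COLUMNS = ["link", "name", "versionCode", "icon", "type", "onlineTime", "size",
           "download", "author", "intro", "updateInfo", "highlight",
           "image1", "image2", "image3", "image4", "image5"]

# Same fallbacks as AnzhispiderPipeline; fields not listed default to ''
DEFAULTS = {"versionCode": "v1.0", "size": "0B", "download": "0", "author": "Unknown",
            "intro": "nothing", "updateInfo": "nothing", "highlight": "nothing"}


def build_insert(item):
    """Return (sql, params) for cursor.execute(sql, params) with %s placeholders."""
    images = list(item.get("imagePaths") or [])[:5]
    images += [""] * (5 - len(images))
    row = {"link": item["link"], "name": item["name"],
           # the icon column stores the local icon path, as in the pipeline
           "icon": item.get("iconPath") or ""}
    for field in ("versionCode", "type", "onlineTime", "size", "download",
                  "author", "intro", "updateInfo", "highlight"):
        row[field] = item.get(field) or DEFAULTS.get(field, "")
    for i, path in enumerate(images, start=1):
        row["image%d" % i] = path
    sql = "INSERT INTO games(%s) VALUES (%s)" % (
        ",".join(COLUMNS), ",".join(["%s"] * len(COLUMNS)))
    return sql, tuple(row[c] for c in COLUMNS)
```

Using it from the pipeline would require MySQLHelper.update to accept and forward an extra params argument; that change is an assumption, not part of the original helper.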

Configure settings.py

Note: add FILES_STORE as the storage path for downloaded files, and set MEDIA_ALLOW_REDIRECTS to allow media redirects. Anzhi's image links are redirected, so without this setting the downloads fail.

```python
# File download directory
FILES_STORE = ".\\anzhigames\\"
# Allow media redirects (needed here because Anzhi image links redirect)
MEDIA_ALLOW_REDIRECTS = True
```

Configure the pipelines so that ImageResPipeline has a smaller value than AnzhispiderPipeline. Values are customarily in the 0 to 1000 range, and pipelines with smaller values run first.

```python
# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'anzhispider.pipelines.AnzhispiderPipeline': 300,
    'anzhispider.pipelines.ImageResPipeline': 11,
}
```
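Scrapy passes each item through the enabled pipelines in ascending order of these values, so ImageResPipeline (11) fills iconPath and imagePaths before AnzhispiderPipeline (300) writes the row. A tiny sketch of just that ordering rule (pipeline_order is a hypothetical helper, not Scrapy's own code):

```python
ITEM_PIPELINES = {
    'anzhispider.pipelines.AnzhispiderPipeline': 300,
    'anzhispider.pipelines.ImageResPipeline': 11,
}


def pipeline_order(pipelines):
    """Return pipeline paths in the order items pass through them (ascending value)."""
    return sorted(pipelines, key=pipelines.get)
```

If the two values were swapped, the database pipeline would run first and store empty icon and screenshot paths.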

That's it. Run scrapy crawl AnzhiSpider; when the crawl finishes, the pictures are generated under the project's .\anzhigames\ directory and the data is stored in the database.
