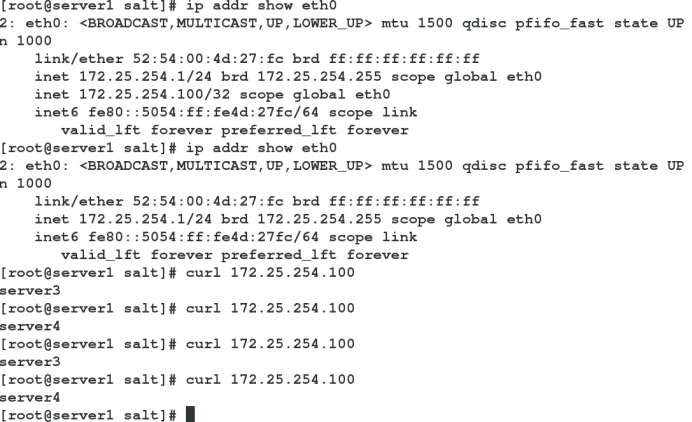

Deploying keepalived with SaltStack for high availability of haproxy

Illustration:

Environment:

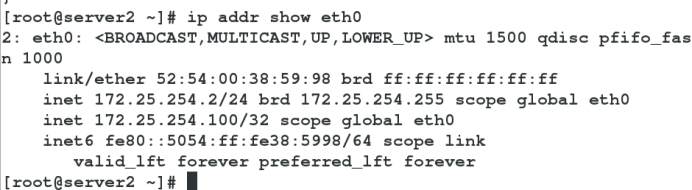

Server1 (salt-master, keepalived-backup, haproxy): 172.25.254.1

Server2 (salt-minion, keepalived-master, haproxy): 172.25.254.2

Server3 (salt-minion, RS, httpd): 172.25.254.3

Server4 (salt-minion, RS, httpd): 172.25.254.4

VIP: 172.25.254.100

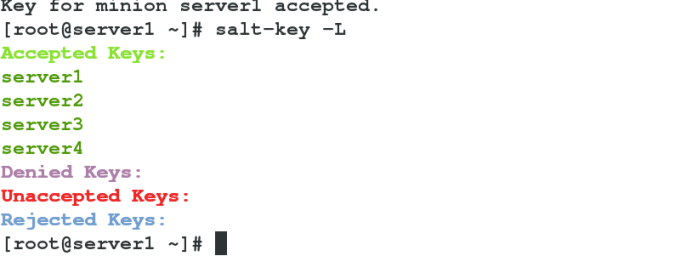

Configure Salt first.

haproxy load-balances the nginx backends using the deployment files from the previous article; see "Installation, Deployment and Practice of Saltstack".

Now only the keepalived deployment needs to be configured.

cd /srv/salt

mkdir keepalived/files -p

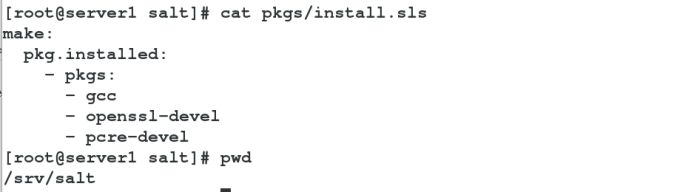

vim pkgs/install.sls

    make:
      pkg.installed:
        - pkgs:
          - gcc
          - openssl-devel
          - pcre-devel

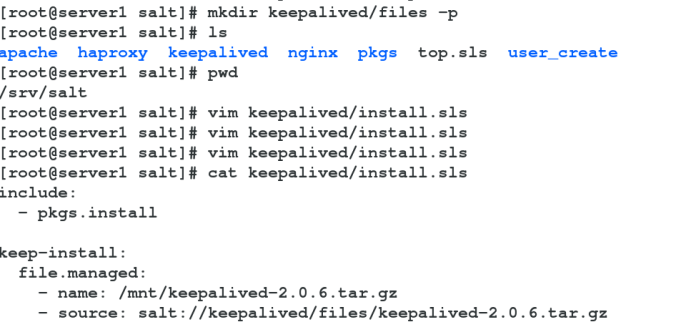

vim keepalived/install.sls

    include:
      - pkgs.install

    keep-install:
      file.managed:
        - name: /mnt/keepalived-2.0.6.tar.gz
        - source: salt://keepalived/files/keepalived-2.0.6.tar.gz
      cmd.run:
        - name: cd /mnt && tar zxf keepalived-2.0.6.tar.gz && cd keepalived-2.0.6 && ./configure --with-init=SYSV --prefix=/usr/local/keepalived &> /dev/null && make &> /dev/null && make install &> /dev/null && cd .. && rm -fr keepalived-2.0.6
        - creates: /usr/local/keepalived

    /etc/keepalived:
      file.directory:
        - mode: 755

    /etc/sysconfig/keepalived:
      file.symlink:
        - target: /usr/local/keepalived/etc/sysconfig/keepalived

    /sbin/keepalived:
      file.symlink:
        - target: /usr/local/keepalived/sbin/keepalived

First, test that the compilation and the symlinks (soft links) work without errors.

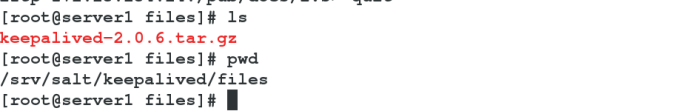

The keepalived source package must be placed in the /srv/salt/keepalived/files directory.

No errors are reported.

Next, write the state file that starts keepalived.

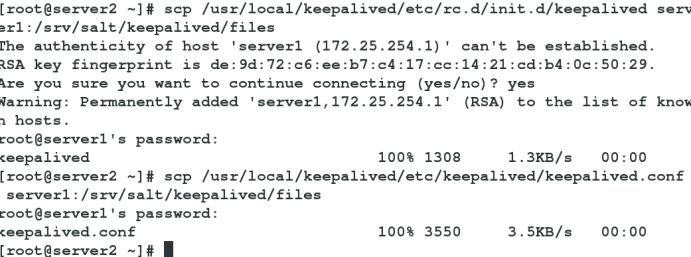

Copy the keepalived init script and the keepalived configuration file from server2 to the /srv/salt/keepalived/files directory on server1:

scp /usr/local/keepalived/etc/keepalived/keepalived.conf server1:/srv/salt/keepalived/files

scp /usr/local/keepalived/etc/rc.d/init.d/keepalived server1:/srv/salt/keepalived/files

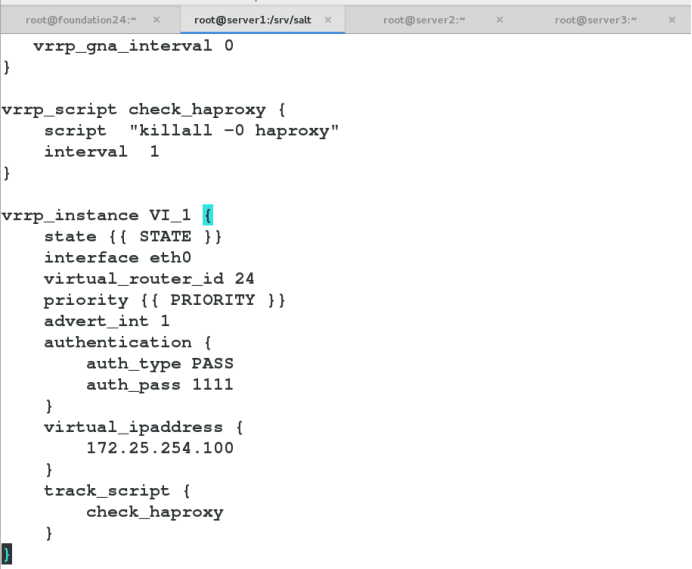

vim keepalived/files/keepalived.conf # edit the configuration file

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
        vrrp_skip_check_adv_addr
        #vrrp_strict
        vrrp_garp_interval 0
        vrrp_gna_interval 0
    }

    vrrp_script check_haproxy {
        script "killall -0 haproxy"
        interval 1
    }

    vrrp_instance VI_1 {
        state {{ STATE }}
        interface eth0
        virtual_router_id 24
        priority {{ PRIORITY }}
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.25.254.100
        }
        track_script {
            check_haproxy
        }
    }

vim keepalived/service.sls

    include:
      - keepalived.install

    /etc/keepalived/keepalived.conf:
      file.managed:
        - source: salt://keepalived/files/keepalived.conf
        - template: jinja
        - context:
            STATE: {{ pillar['state'] }}
            PRIORITY: {{ pillar['priority'] }}

    keep-service:
      file.managed:
        - name: /etc/init.d/keepalived
        - source: salt://keepalived/files/keepalived
        - mode: 755
      service.running:
        - name: keepalived
        - reload: True
        - watch:
          - file: /etc/keepalived/keepalived.conf

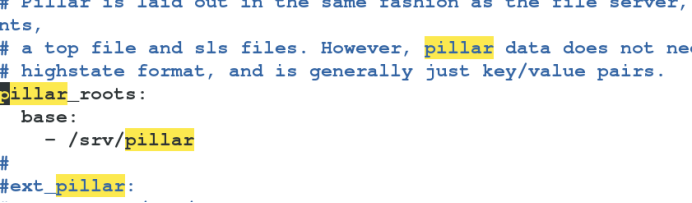

vim /etc/salt/master

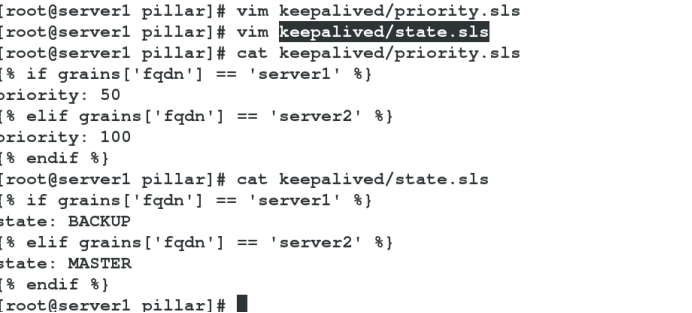
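The change made to /etc/salt/master is visible only in the screenshot. Since the next step creates /srv/pillar, it presumably enables the pillar root. A minimal sketch of that setting, as an assumption rather than a copy of the author's file:

```yaml
# /etc/salt/master (excerpt, assumed content)
# Tell the master where pillar SLS files for the base environment live.
pillar_roots:
  base:
    - /srv/pillar
```

The master has to be restarted after this file is changed so that the new pillar root is picked up.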

/etc/init.d/salt-master restart

mkdir /srv/pillar

cd /srv/pillar

mkdir keepalived

vim keepalived/state.sls

vim keepalived/priority.sls

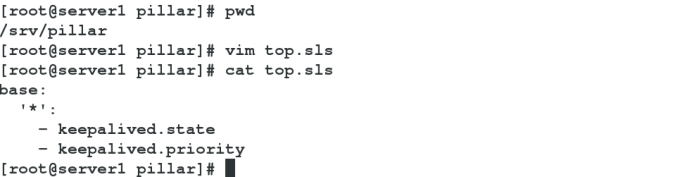
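The contents of the two pillar files appear only in the screenshot. A possible sketch follows; it assumes the minion IDs are server1 and server2, and the priority values 100 and 50 are purely illustrative. The keys state and priority are the ones that keepalived/service.sls reads through pillar['state'] and pillar['priority'].

```yaml
# /srv/pillar/keepalived/state.sls (hypothetical content)
# server2 is the keepalived master in this setup; every other minion is a backup.
{% if grains['id'] == 'server2' %}
state: MASTER
{% else %}
state: BACKUP
{% endif %}

# /srv/pillar/keepalived/priority.sls (hypothetical content)
# The master needs the higher VRRP priority; the numbers are assumptions.
{% if grains['id'] == 'server2' %}
priority: 100
{% else %}
priority: 50
{% endif %}
```

Keeping the per-host values in pillar lets a single keepalived.conf template serve both the master and the backup node.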

vim top.sls

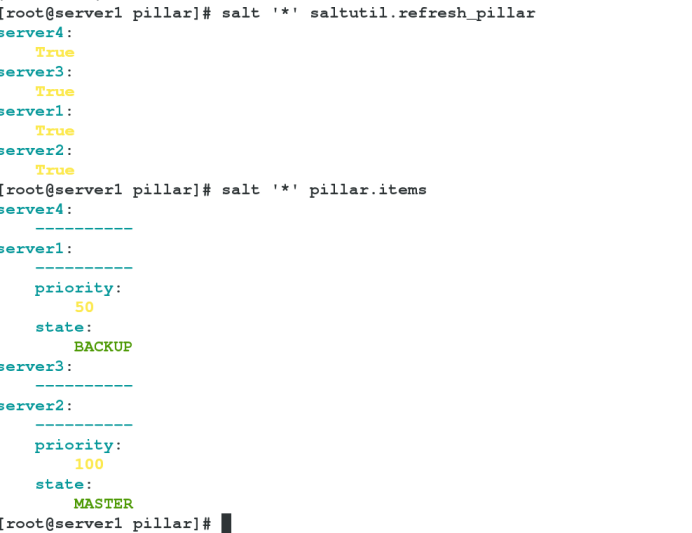
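The pillar top.sls is likewise shown only as a screenshot. One plausible layout, assuming keepalived/state.sls and keepalived/priority.sls are assigned to every minion (the targeting pattern is an assumption):

```yaml
# /srv/pillar/top.sls (assumed content)
base:
  '*':
    - keepalived.state      # provides the pillar key "state"
    - keepalived.priority   # provides the pillar key "priority"
```

Only server1 and server2 actually use these values, so a narrower target would work as well.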

salt '*' saltutil.refresh_pillar # refresh pillar data

salt '*' pillar.items # view pillar data

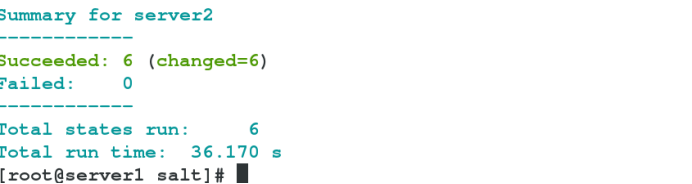

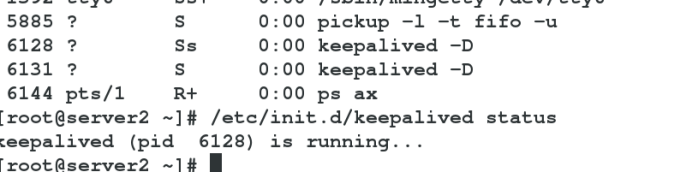

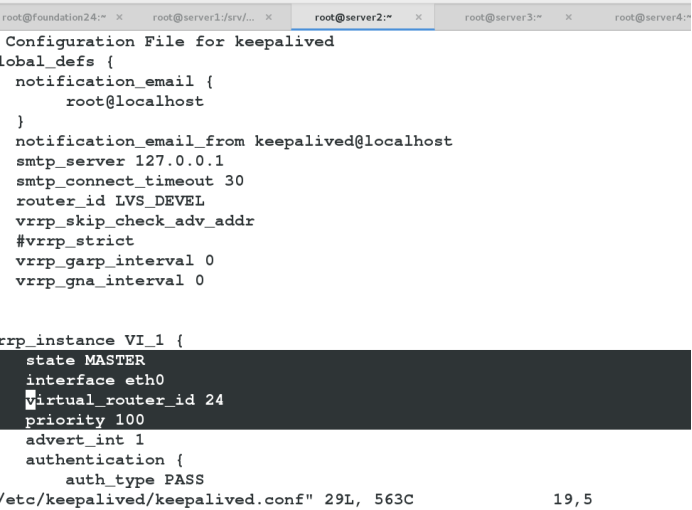

Test that keepalived starts on server2, then check the parameters in the generated configuration file

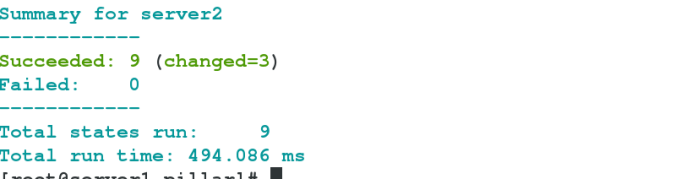

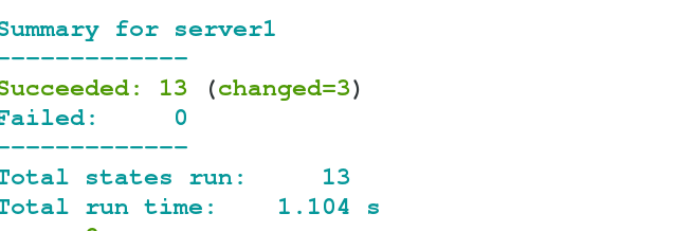

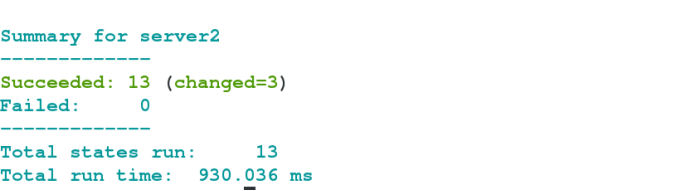

salt server2 state.sls keepalived.service

Modify the keepalived configuration file on server1

vim /srv/salt/keepalived/files/keepalived.conf

    # Define a check script; the name check_haproxy is arbitrary
    vrrp_script check_haproxy {
        script "killall -0 haproxy"   # check command (signal 0 only tests that a haproxy process exists); write your own as needed
        interval 1                    # run the check every 1 second
    }

    vrrp_instance VI_1 {
        track_script {
            check_haproxy
        }
    }

vim /srv/salt/top.sls

    base:
      'server1':
        - haproxy.install
        - keepalived.service
      'server2':
        - haproxy.install
        - keepalived.service
      'server3':
        - nginx.install3
      'server4':
        - nginx.install4

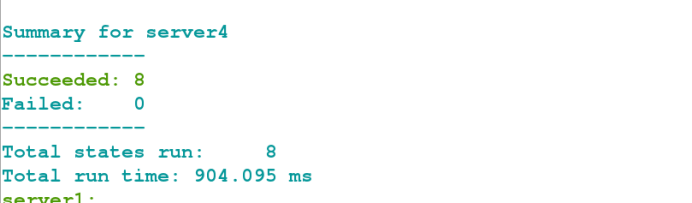

salt '*' state.highstate # no errors reported

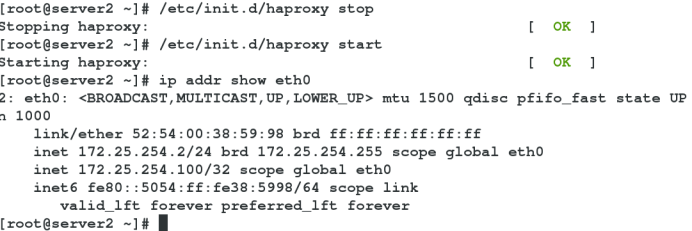

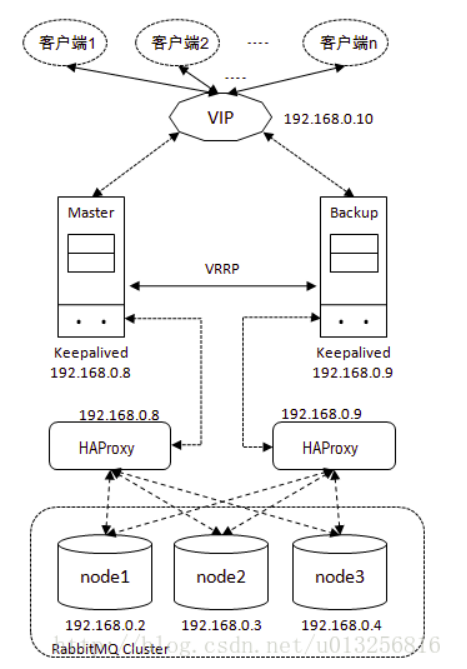

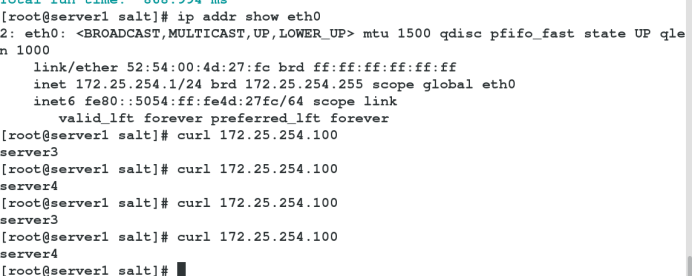

Test:

At this point the VIP is on server2.

On server1:

curl 172.25.254.100 # requests are load-balanced across the backends

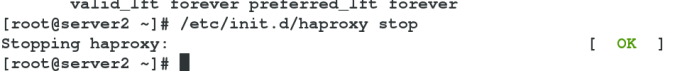

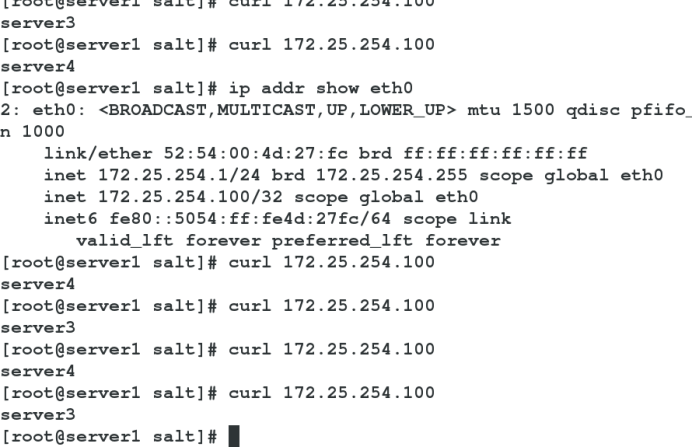

Stop haproxy on server2: the VIP floats to server1, and load balancing still works.

Start haproxy on server2 again: the VIP floats back to server2. This gives haproxy both load balancing and high availability.