This article is long; consider bookmarking it before you read on.

In the previous article, we introduced the NameServer startup process and several core points involved in NameServer startup:

- Load the NameServer configuration class and the NettyServer configuration class, and create the core component NamesrvController

- Initialize various data in the Controller, including Netty network processing class, worker thread pool, routing manager and two scheduled tasks. One is used to scan inactive brokers every 10s and remove them from the NameServer, and the other is used to print the KV configuration every 10min

- The routing manager maintains metadata information such as Broker, cluster and topic queue

- There is also a network request processor, which specially handles all kinds of network requests received by the NameServer

- And a hook function that gracefully closes the thread pool

This section will explain the startup process on the Broker side.

Before sorting out the source code, first think about what the Broker will do when it is started. Combined with the NameServer startup process, you can guess:

- Load the configuration of the Broker side;

- Initialize some basic components and thread pools on the Broker side

- After the Broker is started, it needs to register itself with the NameServer, so it will send a network request to the NameServer;

- The Broker will send heartbeat to the NameServer every 30s, and pass its own information to the NameServer and maintain it in the routing manager;

That's all I can think of for the time being. Let's have a look.

If you want to quickly understand the startup process, you can directly look at the summary at the end of the article.

The startup class of Broker is org.apache.rocketmq.broker.BrokerStartup

public static void main(String[] args) {

start(createBrokerController(args));

}

public static BrokerController start(BrokerController controller) {

//K1 Controller start

controller.start();

.....

return controller;

}

Like NameServer, there are two steps. The first step is to create the BrokerController, and the second step is to call the startup method of the Controller

1, Create broker controller

This part mainly completes the loading of various configurations on the Broker side and the creation of basic components and thread pools.

1.1 initialize core configuration class

final BrokerConfig brokerConfig = new BrokerConfig();

final NettyServerConfig nettyServerConfig = new NettyServerConfig();

final NettyClientConfig nettyClientConfig = new NettyClientConfig();

//TLS encryption related

nettyClientConfig.setUseTLS(Boolean.parseBoolean(System.getProperty(TLS_ENABLE,

String.valueOf(TlsSystemConfig.tlsMode == TlsMode.ENFORCING))));

//Listening port 10911 of Netty server

nettyServerConfig.setListenPort(10911);

//K2 is obviously some configuration information used by the Broker to store messages.

final MessageStoreConfig messageStoreConfig = new MessageStoreConfig();

//In the case of SLAVE, a parameter is set.

if (BrokerRole.SLAVE == messageStoreConfig.getBrokerRole()) {

int ratio = messageStoreConfig.getAccessMessageInMemoryMaxRatio() - 10;

// This parameter is the maximum ratio of messages in memory. It is used for master-slave synchronization. For details, please go to the official website

messageStoreConfig.setAccessMessageInMemoryMaxRatio(ratio);

}

You can see that the Broker side has four core configuration classes:

- BrokerConfig: saves the basic information of the Broker, such as IP, name and cluster name, as well as the default number of threads in the various thread pools on the Broker side. You can check it in the source code yourself.

- NettyServerConfig: saves the network configuration of the Netty server, such as the number of worker threads and the listening port. It is similar to the corresponding Netty configuration class of the NameServer.

- NettyClientConfig: network configuration of the Netty client, such as the number of client worker threads and the connection timeout.

- MessageStoreConfig:

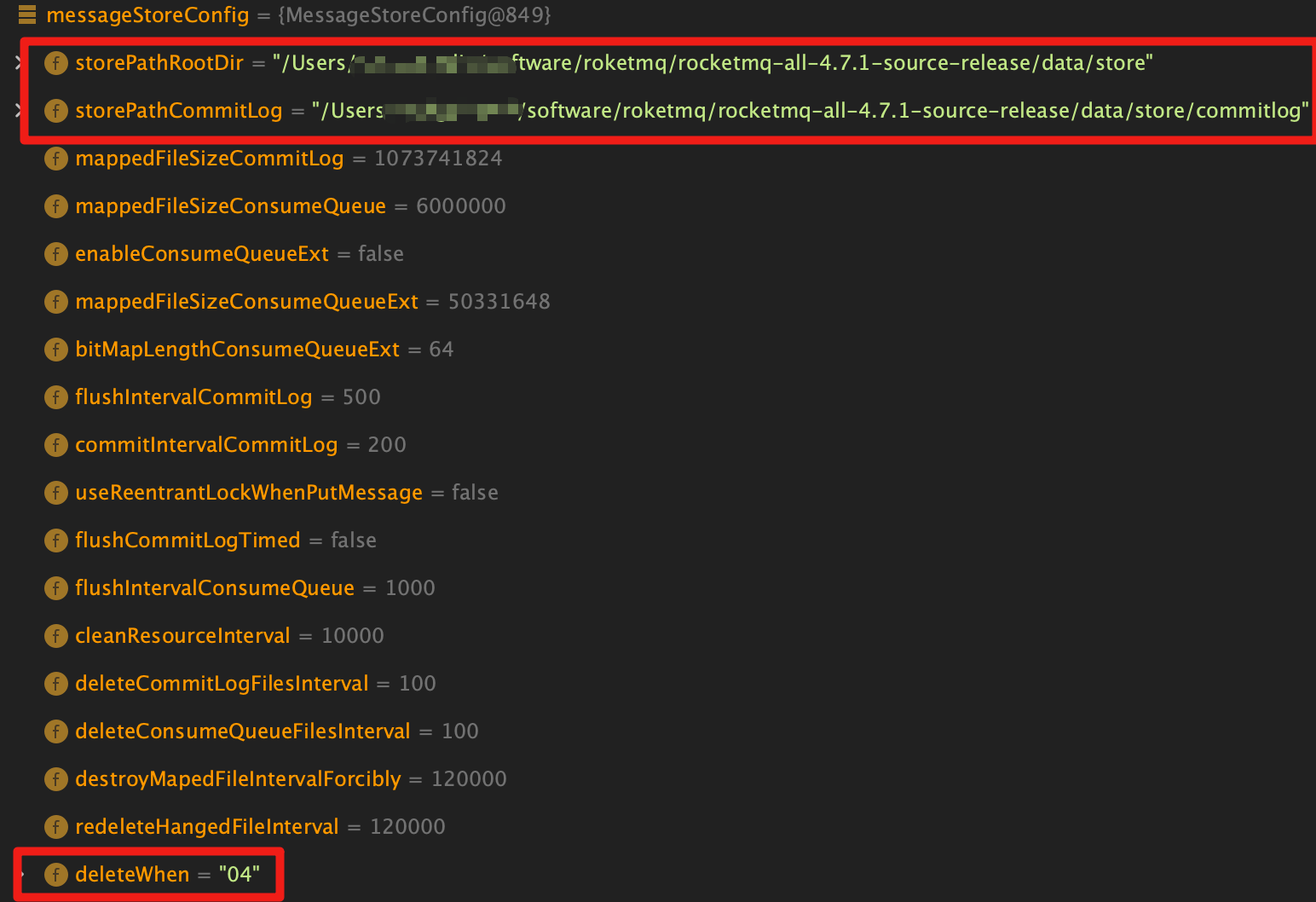

The configuration class the Broker uses for storing messages. It defines the default storage path (user.home/store), the commitLog path and other storage-related defaults. The delay levels for delayed messages are also defined here:

private String messageDelayLevel = "1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h";

1.2. Load configuration file information to configuration class

The next step is to parse the command line parameters. For example, when starting the Broker, we specify the configuration file with -c conf/broker.conf:

if (commandLine.hasOption('c')) {

String file = commandLine.getOptionValue('c');

if (file != null) {

configFile = file;

InputStream in = new BufferedInputStream(new FileInputStream(file));

properties = new Properties();

properties.load(in);

properties2SystemEnv(properties);

MixAll.properties2Object(properties, brokerConfig);

MixAll.properties2Object(properties, nettyServerConfig);

MixAll.properties2Object(properties, nettyClientConfig);

MixAll.properties2Object(properties, messageStoreConfig);

BrokerPathConfigHelper.setBrokerConfigPath(file);

in.close();

}

}

Here, the content of the configuration file will be parsed and the corresponding configuration will be loaded into the corresponding configuration classes. You can simply see the loaded configuration classes:

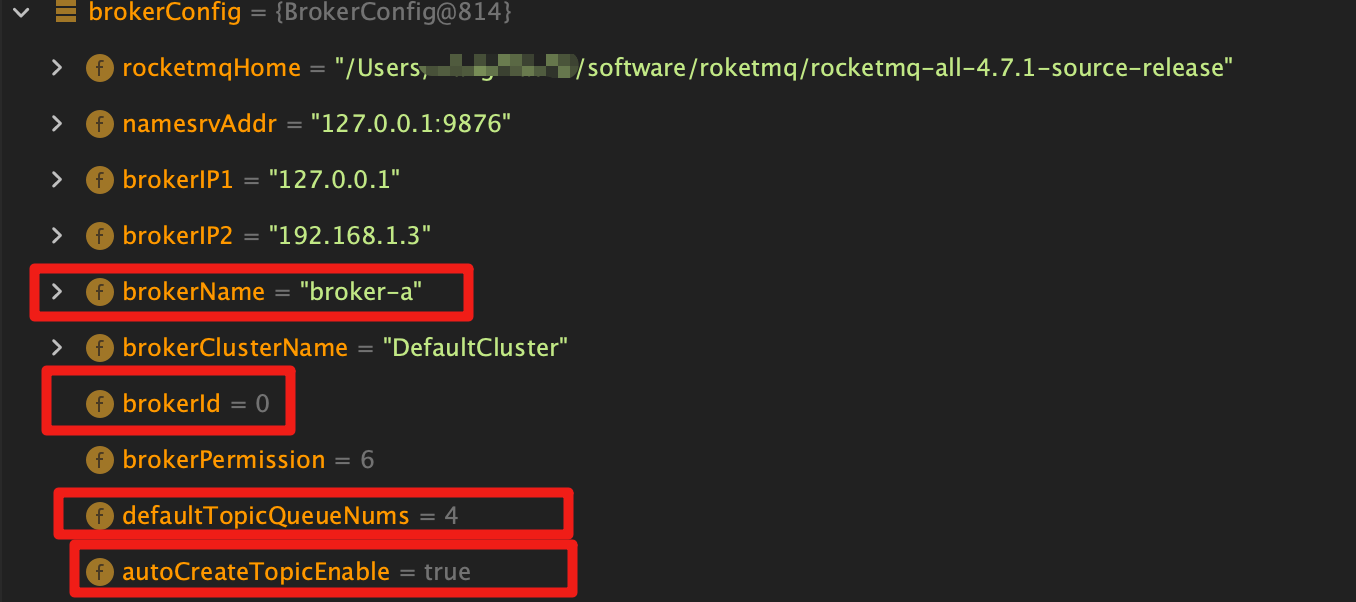

BrokerConfig:

For example: the NameServer address, the IP address, the broker name (broker-a), the cluster name, the broker ID, and the number of queues (4 here) used for a topic when automatic topic creation is enabled. Many other fields do not fit in the screenshot.

Storage related:

We changed the default storage path to the root directory of the current source tree.
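MixAll.properties2Object is called four times above, but its body is not shown. The general idea of copying properties onto a configuration object can be sketched as follows. This is a minimal illustration that assumes keys are matched to setter names by reflection; `PropertiesBinderSketch` and `DemoConfig` are hypothetical names, and the real method may support different types or behave differently.

```java
import java.lang.reflect.Method;
import java.util.Properties;

// Hypothetical sketch; not the RocketMQ MixAll implementation.
final class PropertiesBinderSketch {

    // Hypothetical config class used only for this example.
    static final class DemoConfig {
        private String brokerName = "default";
        private int listenPort = 10911;

        public void setBrokerName(String brokerName) { this.brokerName = brokerName; }
        public void setListenPort(int listenPort) { this.listenPort = listenPort; }
        public String getBrokerName() { return brokerName; }
        public int getListenPort() { return listenPort; }
    }

    /** For every public one-argument setXxx method, applies property "xxx" if it is present. */
    static void bind(Properties props, Object target) {
        for (Method m : target.getClass().getMethods()) {
            String name = m.getName();
            if (!name.startsWith("set") || name.length() < 4 || m.getParameterCount() != 1) {
                continue;
            }
            // setListenPort -> listenPort
            String key = Character.toLowerCase(name.charAt(3)) + name.substring(4);
            String raw = props.getProperty(key);
            if (raw == null) {
                continue; // no such property: keep the default value
            }
            Object value = convert(raw, m.getParameterTypes()[0]);
            if (value == null) {
                continue; // parameter type not supported by this sketch
            }
            try {
                m.invoke(target, value);
            } catch (ReflectiveOperationException e) {
                throw new IllegalStateException("Cannot set " + key, e);
            }
        }
    }

    private static Object convert(String raw, Class<?> type) {
        if (type == String.class) return raw;
        if (type == int.class || type == Integer.class) return Integer.parseInt(raw);
        if (type == long.class || type == Long.class) return Long.parseLong(raw);
        if (type == boolean.class || type == Boolean.class) return Boolean.parseBoolean(raw);
        return null;
    }
}
```

With this approach a single properties file can feed several configuration objects: each object only picks up the keys for which it has a setter, which is why the same `properties` instance can be passed to all four configuration classes.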

1.3 setting brokerId

It can be seen here that the broker ID of the current broker will be set according to the broker's role. The broker ID of MASTER = 0, and the broker ID of SLAVE must be greater than 0:

switch (messageStoreConfig.getBrokerRole()) {

case ASYNC_MASTER:

case SYNC_MASTER:

brokerConfig.setBrokerId(MixAll.MASTER_ID);

break;

case SLAVE:

if (brokerConfig.getBrokerId() <= 0) {

System.out.printf("Slave's brokerId must be > 0");

System.exit(-3);

}

break;

default:

break;

}

if (messageStoreConfig.isEnableDLegerCommitLog()) {

brokerConfig.setBrokerId(-1);

}

In addition, if DLedger is enabled, the brokerId is set to -1.

The log-related configuration that follows is skipped here.

1.4 creating a BrokerController

Here, first save the above four core configurations to the Broker controller, and then initialize some basic components on the Broker side:

public BrokerController(

final BrokerConfig brokerConfig,

final NettyServerConfig nettyServerConfig,

final NettyClientConfig nettyClientConfig,

final MessageStoreConfig messageStoreConfig

) {

//Save the four core configurations.

this.brokerConfig = brokerConfig;

this.nettyServerConfig = nettyServerConfig;

this.nettyClientConfig = nettyClientConfig;

this.messageStoreConfig = messageStoreConfig;

//Components corresponding to various functions of Broker.

//Manage consumer consumption offset

this.consumerOffsetManager = new ConsumerOffsetManager(this);

//Topic configuration manager

this.topicConfigManager = new TopicConfigManager(this);

//Process the request of the Consumer to pull the message

this.pullMessageProcessor = new PullMessageProcessor(this);

//Push mode related components

this.pullRequestHoldService = new PullRequestHoldService(this);

//The message arrives at the listener (when the Broker receives the message in push mode, it will actively push the message to the consumer),

// pullRequestHoldService is used here. You can also know that the push mode of RocketMQ is also implemented by the pull mode

this.messageArrivingListener = new NotifyMessageArrivingListener(this.pullRequestHoldService);

this.consumerIdsChangeListener = new DefaultConsumerIdsChangeListener(this);

//Consumer Manager

this.consumerManager = new ConsumerManager(this.consumerIdsChangeListener);

// Consumption filtering related managers (it can be verified here that RocketMQ message filtering is implemented on the Broker side)

this.consumerFilterManager = new ConsumerFilterManager(this);

//Producer Manager

this.producerManager = new ProducerManager();

this.clientHousekeepingService = new ClientHousekeepingService(this);

// Provides a way to check the status of a transaction

this.broker2Client = new Broker2Client(this);

this.subscriptionGroupManager = new SubscriptionGroupManager(this);

//The external API can be regarded as a Netty client

this.brokerOuterAPI = new BrokerOuterAPI(nettyClientConfig);

// Server used to filter messages

this.filterServerManager = new FilterServerManager(this);

this.slaveSynchronize = new SlaveSynchronize(this);

// Various thread pool queues, see the name

// Message queue

this.sendThreadPoolQueue = new LinkedBlockingQueue<Runnable>(this.brokerConfig.getSendThreadPoolQueueCapacity());

this.pullThreadPoolQueue = new LinkedBlockingQueue<Runnable>(this.brokerConfig.getPullThreadPoolQueueCapacity());

this.replyThreadPoolQueue = new LinkedBlockingQueue<Runnable>(this.brokerConfig.getReplyThreadPoolQueueCapacity());

this.queryThreadPoolQueue = new LinkedBlockingQueue<Runnable>(this.brokerConfig.getQueryThreadPoolQueueCapacity());

this.clientManagerThreadPoolQueue = new LinkedBlockingQueue<Runnable>(this.brokerConfig.getClientManagerThreadPoolQueueCapacity());

this.consumerManagerThreadPoolQueue = new LinkedBlockingQueue<Runnable>(this.brokerConfig.getConsumerManagerThreadPoolQueueCapacity());

//Heartbeat thread pool queue

this.heartbeatThreadPoolQueue = new LinkedBlockingQueue<Runnable>(this.brokerConfig.getHeartbeatThreadPoolQueueCapacity());

this.endTransactionThreadPoolQueue = new LinkedBlockingQueue<Runnable>(this.brokerConfig.getEndTransactionPoolQueueCapacity());

this.brokerStatsManager = new BrokerStatsManager(this.brokerConfig.getBrokerClusterName());

this.setStoreHost(new InetSocketAddress(this.getBrokerConfig().getBrokerIP1(), this.getNettyServerConfig().getListenPort()));

// Fast failure mechanism

this.brokerFastFailure = new BrokerFastFailure(this);

//Save all configs

this.configuration = new Configuration(

log,

BrokerPathConfigHelper.getBrokerConfigPath(),

this.brokerConfig, this.nettyServerConfig, this.nettyClientConfig, this.messageStoreConfig

);

}

It looks like a lot, but on closer inspection it falls into three parts: the core components on the Broker side, the various thread pool queues on the Broker side, and finally the Configuration class, which holds the four configuration objects. For now it is enough to get an impression of which components exist; their specific functions are covered later.

2, Broker controller initialization

To be clear, we focus only on the main line of Broker initialization, that is, what is done, and for now not on how each step is implemented. Otherwise the discussion becomes hard to follow. We only dig into a few important points, such as Broker registration and heartbeat sending.

2.1 Broker initialization process

Entry: BrokerController#initialize()

2.1.1 load disk configuration information such as topic and consumer

boolean result = this.topicConfigManager.load();

result = result && this.consumerOffsetManager.load();

result = result && this.subscriptionGroupManager.load();

result = result && this.consumerFilterManager.load();

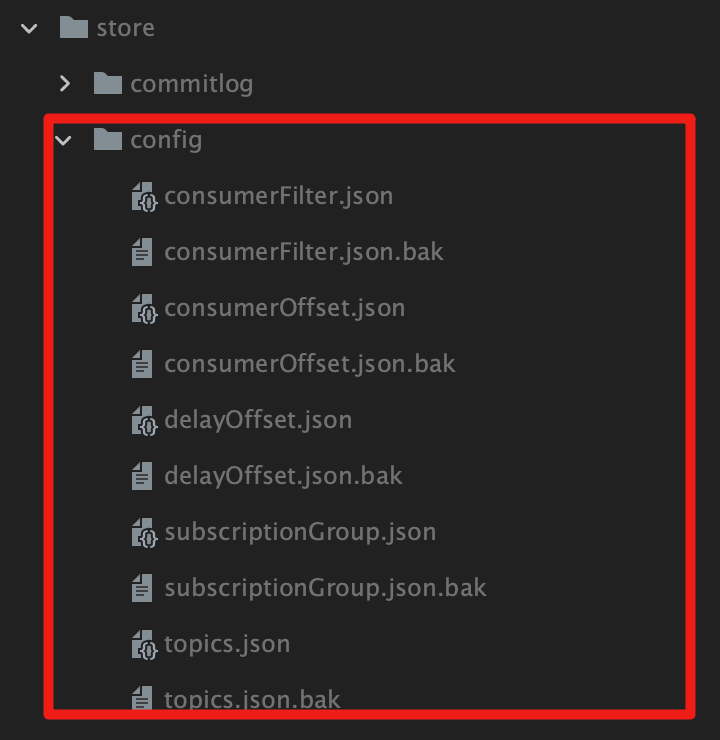

These methods load the JSON configuration files in the store/config directory into their respective ConfigManager instances

2.1.2 message storage component DefaultMessageStore

Initialize the message store component

1. After the configuration is loaded successfully, the message storage manager will be initialized

//The message storage component manages the messages on disk.

this.messageStore =

new DefaultMessageStore(this.messageStoreConfig, this.brokerStatsManager, this.messageArrivingListener,

this.brokerConfig);

//If Dledger is enabled

if (messageStoreConfig.isEnableDLegerCommitLog()) {

//Initialize a bunch of components related to Dledger

.....

}

//Statistical component of broker

this.brokerStats = new BrokerStats((DefaultMessageStore) this.messageStore);

//load plugin

...........

DefaultMessageStore initializes various service components related to message store:

// Message store configuration
private final MessageStoreConfig messageStoreConfig;
// CommitLog
private final CommitLog commitLog;
// Consume queue cache
private final ConcurrentMap<String/* topic */, ConcurrentMap<Integer/* queueId */, ConsumeQueue>> consumeQueueTable;
// ConsumeQueue flush thread (implements the Runnable interface)
private final FlushConsumeQueueService flushConsumeQueueService;
// Service that cleans up commitlog files
private final CleanCommitLogService cleanCommitLogService;
// Service that cleans up ConsumeQueue files
private final CleanConsumeQueueService cleanConsumeQueueService;
// Index service
private final IndexService indexService;
// Service that allocates MappedFiles
private final AllocateMappedFileService allocateMappedFileService;
// Dispatches commitlog messages and builds the ConsumeQueue and IndexFile files from the commitlog file
private final ReputMessageService reputMessageService;
// HA service
private final HAService haService;
private final ScheduleMessageService scheduleMessageService;
private final StoreStatsService storeStatsService;
private final TransientStorePool transientStorePool;
private final RunningFlags runningFlags = new RunningFlags();
private final SystemClock systemClock = new SystemClock();
// Used to create several scheduled tasks
private final ScheduledExecutorService scheduledExecutorService =
    Executors.newSingleThreadScheduledExecutor(new ThreadFactoryImpl("StoreScheduledThread"));
// Broker statistics manager
private final BrokerStatsManager brokerStatsManager;
// The message-arriving listener created earlier, injected here
private final MessageArrivingListener messageArrivingListener;
// Broker configuration class
private final BrokerConfig brokerConfig;
// Checkpoint
private StoreCheckpoint storeCheckpoint;
// Dispatchers for commitlog entries
private final LinkedList<CommitLogDispatcher> dispatcherList;
// Scheduled thread pool for disk checks
private final ScheduledExecutorService diskCheckScheduledExecutorService =
    Executors.newSingleThreadScheduledExecutor(new ThreadFactoryImpl("DiskCheckScheduledThread"));

Load local message information

result = result && this.messageStore.load();

// org.apache.rocketmq.store.DefaultMessageStore#load
public boolean load() {
    boolean result = true;
    try {
        // 1. Use the abort temp file to determine whether the last shutdown was normal
        boolean lastExitOK = !this.isTempFileExist();
        // 2. Load the data of the delayed message service
        if (null != scheduleMessageService) {
            result = result && this.scheduleMessageService.load();
        }
        // Load the commit log
        result = result && this.commitLog.load();
        // Load the consume queues
        result = result && this.loadConsumeQueue();
        if (result) {
            // Initialize the checkpoint
            this.storeCheckpoint =
                new StoreCheckpoint(StorePathConfigHelper.getStoreCheckpoint(this.messageStoreConfig.getStorePathRootDir()));
            this.indexService.load(lastExitOK);
            // 3. File recovery
            this.recover(lastExitOK);
        }
    } ...
    return result;
}

As can be seen from the above source code, the following things are mainly done:

- Determine whether the last shutdown was normal, based on whether the abort file exists under the store directory (if it does not exist, the shutdown was normal)

- Initialize the data related to the delayed message service

- Load the local commitlog and consumequeue files: the information in each local commitlog file is loaded into a MappedFile, and the local ConsumeQueue file data is loaded into the ConsumeQueue. For each message in a queue of a given topic, the ConsumeQueue records the message's physical offset in the CommitLog, its size, and a hash code value.

- Load the local checkpoint file

- Load the index information: the index files under the local index directory are loaded into IndexFile objects. IndexFile provides a way to query messages by key or by time interval.

- File recovery: handled according to the commitlog. We do not look at the specific recovery method for now.
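The abort-file check in the first step is a common marker-file technique. The sketch below illustrates the idea only; `AbortMarkerSketch` and its method names are hypothetical and are not the DefaultMessageStore code.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical sketch of the marker-file idea; names are not from RocketMQ.
final class AbortMarkerSketch {
    private final Path abortFile;

    AbortMarkerSketch(Path storeRoot) {
        this.abortFile = storeRoot.resolve("abort");
    }

    /** True if no marker is left over, i.e. the previous run shut down cleanly. */
    boolean lastExitOk() {
        return !Files.exists(abortFile);
    }

    /** Called on startup: leaves a marker that only a clean shutdown removes. */
    void markStarted() {
        try {
            Files.createDirectories(abortFile.getParent());
            if (!Files.exists(abortFile)) {
                Files.createFile(abortFile);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Called on graceful shutdown: removes the marker. */
    void markCleanShutdown() {
        try {
            Files.deleteIfExists(abortFile);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

If the process is killed, `markCleanShutdown` never runs, so the next start finds the marker and knows it has to take the abnormal-exit recovery path.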

Here, let's take a look at the related delay messages:

// org.apache.rocketmq.store.schedule.ScheduleMessageService#load
public boolean load() {
    boolean result = super.load();
    result = result && this.parseDelayLevel();
    return result;
}

Two things:

- Load the local delayOffset.json file

The loaded data is saved in the offsetTable of ScheduleMessageService, which holds the message offset for each delay level (covered in detail when we discuss delayed messages)

private final ConcurrentMap<Integer /* level */, Long/* offset */> offsetTable = new ConcurrentHashMap<Integer, Long>(32);

- Parse the delay levels

Convert each default delay level to milliseconds and save the result in the delayLevelTable:

private final ConcurrentMap<Integer /* level */, Long/* delay timeMillis */> delayLevelTable = new ConcurrentHashMap<Integer, Long>(32);
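Converting the delay level string into a level-to-milliseconds table can be sketched as below. The class `DelayLevelSketch` is hypothetical and only illustrates the technique; the real parseDelayLevel method is not shown in this article and may differ, for example in the units it accepts.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper, not the RocketMQ class: parses "1s 5s 1m" style level strings.
final class DelayLevelSketch {

    /** Maps each 1-based level to its delay in milliseconds. */
    static Map<Integer, Long> parse(String levelString) {
        Map<Character, Long> unitMillis = new HashMap<>();
        unitMillis.put('s', 1000L);
        unitMillis.put('m', 60_000L);
        unitMillis.put('h', 3_600_000L);
        unitMillis.put('d', 86_400_000L);

        Map<Integer, Long> table = new TreeMap<>();
        String[] parts = levelString.trim().split("\\s+");
        for (int i = 0; i < parts.length; i++) {
            String part = parts[i];
            char unit = part.charAt(part.length() - 1);
            Long factor = unitMillis.get(unit);
            if (factor == null) {
                throw new IllegalArgumentException("Unknown time unit in: " + part);
            }
            long amount = Long.parseLong(part.substring(0, part.length() - 1));
            table.put(i + 1, amount * factor);
        }
        return table;
    }

    public static void main(String[] args) {
        String defaults = "1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h";
        Map<Integer, Long> table = parse(defaults);
        System.out.println(table.size() + " levels, level 5 = " + table.get(5) + " ms");
    }
}
```

For the default string, level 1 maps to 1000 ms and level 5 (1m) maps to 60000 ms.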

2.1.3 creating Netty network component NettyRemotingServer

Initialize the server-side Netty communication components, just as on the NameServer side.

2.1.4 initialize various thread pools on the Broker side

It's all initialization work. The source code is not listed here. The thread pool is the business thread pool corresponding to several thread queues listed in 1.4. There are probably the following thread pools:

- Sending messages

- Handling consumer pull requests

- Replying to messages

- Querying messages

- Handling command-line (admin) requests

- Client management

- Heartbeats

- Ending transaction messages

- Consumer management
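Since the source for this step is not listed, here is a minimal sketch of how one of the bounded queues from 1.4 can be paired with its business thread pool. It uses only the JDK's ThreadPoolExecutor; the class `BoundedPoolSketch`, the keep-alive value and the thread-name prefix are assumptions for illustration, not the Broker's actual code.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch: one bounded queue feeding one fixed-size business thread pool.
final class BoundedPoolSketch {

    /** Builds a fixed-size pool that takes its work from the given bounded queue. */
    static ThreadPoolExecutor newPool(int threads, BlockingQueue<Runnable> queue, String namePrefix) {
        AtomicInteger index = new AtomicInteger();
        return new ThreadPoolExecutor(
            threads, threads,
            60_000, TimeUnit.MILLISECONDS,
            queue,
            r -> {
                // Named threads make it easy to tell pools apart in a thread dump
                Thread t = new Thread(r, namePrefix + index.incrementAndGet());
                t.setDaemon(true);
                return t;
            });
    }

    public static void main(String[] args) {
        BlockingQueue<Runnable> sendQueue = new LinkedBlockingQueue<>(10_000);
        ThreadPoolExecutor sendPool = newPool(1, sendQueue, "SendSketchThread_");
        sendPool.execute(() -> System.out.println("handled on " + Thread.currentThread().getName()));
        sendPool.shutdown();
    }
}
```

Keeping a reference to the queue outside the executor is what makes it possible to inspect the backlog later, for example `sendQueue.size()` when printing a watermark.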

2.1.5 register various business processors

Take sending messages as an example:

SendMessageProcessor sendProcessor = new SendMessageProcessor(this);
sendProcessor.registerSendMessageHook(sendMessageHookList);
sendProcessor.registerConsumeMessageHook(consumeMessageHookList);
this.remotingServer.registerProcessor(RequestCode.SEND_MESSAGE, sendProcessor, this.sendMessageExecutor);
this.remotingServer.registerProcessor(RequestCode.SEND_MESSAGE_V2, sendProcessor, this.sendMessageExecutor);
this.remotingServer.registerProcessor(RequestCode.SEND_BATCH_MESSAGE, sendProcessor, this.sendMessageExecutor);
this.remotingServer.registerProcessor(RequestCode.CONSUMER_SEND_MSG_BACK, sendProcessor, this.sendMessageExecutor);

- SendMessageProcessor is a business processor; here it is the processor for sending messages

- The processor and the processing thread pool for each request code are registered in the processorTable of NettyRemotingAbstract, the parent class of NettyRemotingServer. The structure is as follows:

protected final HashMap<Integer/* request code */, Pair<NettyRequestProcessor, ExecutorService>> processorTable = new HashMap<Integer, Pair<NettyRequestProcessor, ExecutorService>>(64);

In this way, when a request arrives, the corresponding processor and thread pool can be looked up by RequestCode and used to handle it. The NameServer side works the same way: it performs different business processing according to the RequestCode of the received request.

The interface of the processor is the NettyRequestProcessor designed by RocketMQ itself

public interface NettyRequestProcessor {
    RemotingCommand processRequest(ChannelHandlerContext ctx, RemotingCommand request) throws Exception;
}
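The lookup-by-request-code idea behind processorTable can be sketched without Netty. In the sketch below, `ProcessorTableSketch`, its `Entry` type and the use of a plain `Function<String, String>` in place of NettyRequestProcessor are all simplifications made for illustration; request codes 1 and 2 are arbitrary demo values, not RocketMQ constants.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Function;

// Hypothetical sketch of a request-code -> (processor, executor) dispatch table.
final class ProcessorTableSketch {

    /** Pairs a handler with the thread pool that should run it. */
    private static final class Entry {
        final Function<String, String> processor;
        final ExecutorService executor;

        Entry(Function<String, String> processor, ExecutorService executor) {
            this.processor = processor;
            this.executor = executor;
        }
    }

    private final Map<Integer, Entry> table = new HashMap<>(64);

    void register(int requestCode, Function<String, String> processor, ExecutorService executor) {
        table.put(requestCode, new Entry(processor, executor));
    }

    /** Looks up the entry for the code and runs the processor on its own executor. */
    CompletableFuture<String> dispatch(int requestCode, String body) {
        Entry entry = table.get(requestCode);
        if (entry == null) {
            throw new IllegalArgumentException("No processor for request code " + requestCode);
        }
        return CompletableFuture.supplyAsync(() -> entry.processor.apply(body), entry.executor);
    }

    public static void main(String[] args) {
        ExecutorService sendExecutor = Executors.newSingleThreadExecutor();
        ProcessorTableSketch server = new ProcessorTableSketch();
        server.register(1, body -> "stored:" + body, sendExecutor); // demo code 1: "send"
        server.register(2, body -> "pulled:" + body, sendExecutor); // demo code 2: "pull"
        System.out.println(server.dispatch(1, "hello").join());
        sendExecutor.shutdown();
    }
}
```

Registering several codes against the same processor and executor, as the Broker does for the send-related codes, is just several `register` calls with the same arguments.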

2.1.6 start scheduled task

The calls to this.scheduledExecutorService.scheduleAtFixedRate are not listed here because they are ordinary code for starting scheduled tasks. The scheduled tasks started here mainly include the following:

- Periodic broker statistics

- Periodically persisting consumerOffset data to disk

- Periodically persisting consumerFilter data to disk

- Broker protection

- Printing the watermark

public void printWaterMark() {
    LOG_WATER_MARK.info("[WATERMARK] Send Queue Size: {} SlowTimeMills: {}", this.sendThreadPoolQueue.size(), headSlowTimeMills4SendThreadPoolQueue());
    LOG_WATER_MARK.info("[WATERMARK] Pull Queue Size: {} SlowTimeMills: {}", this.pullThreadPoolQueue.size(), headSlowTimeMills4PullThreadPoolQueue());
    LOG_WATER_MARK.info("[WATERMARK] Query Queue Size: {} SlowTimeMills: {}", this.queryThreadPoolQueue.size(), headSlowTimeMills4QueryThreadPoolQueue());
    LOG_WATER_MARK.info("[WATERMARK] Transaction Queue Size: {} SlowTimeMills: {}", this.endTransactionThreadPoolQueue.size(), headSlowTimeMills4EndTransactionThreadPoolQueue());
}

- Printing the commitLog dispatch progress

The ReputMessageService in the message store builds the logical consume queue (ConsumeQueue) and the index file (IndexFile) from the commitlog file
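To make the ConsumeQueue more concrete: as described in 2.1.2, each of its entries records a message's physical offset in the CommitLog, its size and a hash code. The sketch below encodes such an entry as a fixed-size record. It assumes the 20-byte layout used by RocketMQ 4.x (8-byte offset, 4-byte size, 8-byte tag hash code); the class `ConsumeQueueEntrySketch` and its methods are hypothetical.

```java
import java.nio.ByteBuffer;

// Hypothetical sketch of a fixed-size ConsumeQueue entry; layout assumed: 8 + 4 + 8 bytes.
final class ConsumeQueueEntrySketch {
    static final int UNIT_SIZE = 8 + 4 + 8;

    /** Encodes one entry: CommitLog offset, message size, tag hash code. */
    static byte[] encode(long commitLogOffset, int messageSize, long tagsHashCode) {
        ByteBuffer buffer = ByteBuffer.allocate(UNIT_SIZE);
        buffer.putLong(commitLogOffset);
        buffer.putInt(messageSize);
        buffer.putLong(tagsHashCode);
        return buffer.array();
    }

    /** Because entries have a fixed size, entry N starts at byte N * UNIT_SIZE. */
    static long positionOf(long logicalIndex) {
        return logicalIndex * UNIT_SIZE;
    }

    static long commitLogOffsetOf(byte[] entry) {
        return ByteBuffer.wrap(entry).getLong(0);
    }

    static int messageSizeOf(byte[] entry) {
        return ByteBuffer.wrap(entry).getInt(8);
    }

    static long tagsHashCodeOf(byte[] entry) {
        return ByteBuffer.wrap(entry).getLong(12);
    }
}
```

The fixed record size is the design point: a consumer's logical queue offset translates into a file position by multiplication, and the entry then points back into the CommitLog where the message body lives.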

2.1.7 initialize transactions, ACLs, and RpcHooks

initialTransaction();
initialAcl(); // Permission related
initialRpcHooks(); // ACL-related RPC hooks

We mainly look at the initialization work related to transactions:

private void initialTransaction() {
    this.transactionalMessageService = ServiceProvider.loadClass(ServiceProvider.TRANSACTION_SERVICE_ID, TransactionalMessageService.class);
    if (null == this.transactionalMessageService) {
        this.transactionalMessageService = new TransactionalMessageServiceImpl(new TransactionalMessageBridge(this, this.getMessageStore()));
    }
    this.transactionalMessageCheckListener = ServiceProvider.loadClass(ServiceProvider.TRANSACTION_LISTENER_ID, AbstractTransactionalMessageCheckListener.class);
    if (null == this.transactionalMessageCheckListener) {
        this.transactionalMessageCheckListener = new DefaultTransactionalMessageCheckListener();
    }
    this.transactionalMessageCheckListener.setBrokerController(this);
    this.transactionalMessageCheckService = new TransactionalMessageCheckService(this);
}

- Transaction message service class TransactionalMessageService

- Transaction message check service TransactionalMessageCheckService

- Transaction message check listening class AbstractTransactionalMessageCheckListener

You can see that the corresponding implementation classes are loaded based on SPI mechanism. If they are not configured, the corresponding default implementation classes are used.

Therefore, we can extend it ourselves by putting the path (fully qualified name) of our implementation class into the corresponding interface file:

public static final String TRANSACTION_SERVICE_ID = "META-INF/service/org.apache.rocketmq.broker.transaction.TransactionalMessageService";
public static final String TRANSACTION_LISTENER_ID = "META-INF/service/org.apache.rocketmq.broker.transaction.AbstractTransactionalMessageCheckListener";
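The "load a configured implementation, otherwise fall back to the default" pattern can be illustrated with the JDK's own java.util.ServiceLoader. Note that this is a stand-in: RocketMQ uses its own ServiceProvider class with the META-INF/service paths above, whereas ServiceLoader reads META-INF/services. `SpiFallbackSketch`, `CheckService` and `DefaultCheckService` are hypothetical names.

```java
import java.util.Iterator;
import java.util.ServiceLoader;
import java.util.function.Supplier;

// Hypothetical sketch using java.util.ServiceLoader, not RocketMQ's ServiceProvider.
final class SpiFallbackSketch {

    /** Hypothetical extension point standing in for an interface such as TransactionalMessageService. */
    public interface CheckService {
        String name();
    }

    /** Default implementation used when no provider is registered. */
    static final class DefaultCheckService implements CheckService {
        @Override
        public String name() {
            return "default";
        }
    }

    /** Returns the first registered provider, or the fallback if none is found. */
    static <T> T loadOrDefault(Class<T> type, Supplier<T> fallback) {
        Iterator<T> providers = ServiceLoader.load(type).iterator();
        return providers.hasNext() ? providers.next() : fallback.get();
    }

    public static void main(String[] args) {
        CheckService service = loadOrDefault(CheckService.class, DefaultCheckService::new);
        System.out.println("using " + service.name());
    }
}
```

With no provider file on the classpath, the fallback is used, which mirrors the `if (null == ...)` branches in initialTransaction.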

3, BrokerController startup

org.apache.rocketmq.broker.BrokerController#start

This part starts some core components initialized in the second part and the Broker's heartbeat registration task.

(1) Start core components

// 1. Start the message store component
if (this.messageStore != null) {
    this.messageStore.start();
}
// Start the two Netty servers
if (this.remotingServer != null) {
    this.remotingServer.start();
}
if (this.fastRemotingServer != null) {
    this.fastRemotingServer.start();
}
// File-related services [omitted]
if (this.fileWatchService != null) {
    this.fileWatchService.start();
}
// 2. The Broker's Netty client; Broker registration requests are sent through it
if (this.brokerOuterAPI != null) {
    this.brokerOuterAPI.start();
}
// Start the pull-related service
if (this.pullRequestHoldService != null) {
    this.pullRequestHoldService.start();
}
if (this.clientHousekeepingService != null) {
    this.clientHousekeepingService.start();
}
// Start the filter server manager
if (this.filterServerManager != null) {
    this.filterServerManager.start();
}

Here, we only look at what the message storage component does when it is started. We won't look at others first.

3.1 start message storage component

This starts several core services in the message storage component, such as the delayed message service, the commitlog and the flush services, along with some scheduled tasks related to message storage, such as periodically deleting expired commitlog and consumeQueue files.

3.1.1 start commitLog distribution

// K2 When the Broker starts, a thread is started to update the ConsumeQueue index files.
this.reputMessageService.setReputFromOffset(maxPhysicalPosInLogicQueue);
this.reputMessageService.start();

start mainly calls Thread.start. So when the Broker starts, it starts a thread that handles commitlog dispatch (after a message is received, the ConsumeQueue and IndexFile are built from the commitlog file). The actual processing is in the run() method of ReputMessageService, which calls the doReput method:

public void run() {
    while (!this.isStopped()) {
        ...
        this.doReput();
        ...
    }
}

Messages are dispatched here once they have been received. We will come back to this when we look at message sending.

3.1.2 start HA high availability and delay message service

if (!messageStoreConfig.isEnableDLegerCommitLog()) {
    this.haService.start();
    this.handleScheduleMessageService(messageStoreConfig.getBrokerRole());
}

this.haService.start() starts the HA (high availability) service. We will look at it later when we discuss master-slave replication.

public void handleScheduleMessageService(final BrokerRole brokerRole) {
    if (this.scheduleMessageService != null) {
        if (brokerRole == BrokerRole.SLAVE) {
            this.scheduleMessageService.shutdown();
        } else {
            this.scheduleMessageService.start();
        }
    }
}

Here is how the delayed message service is actually started:

public void start() {
    // CAS guard so that the service is started only once
    if (started.compareAndSet(false, true)) {
        this.timer = new Timer("ScheduleMessageTimerThread", true);
        for (Map.Entry<Integer, Long> entry : this.delayLevelTable.entrySet()) {
            Integer level = entry.getKey();
            Long timeDelay = entry.getValue();
            Long offset = this.offsetTable.get(level);
            if (null == offset) {
                offset = 0L;
            }
            if (timeDelay != null) {
                // 1. Schedule the task that processes the delayed messages of this level
                this.timer.schedule(new DeliverDelayedMessageTimerTask(level, offset), FIRST_DELAY_TIME);
            }
        }
        // 2. Persist the delay offsets to disk every 10 seconds
        this.timer.scheduleAtFixedRate(new TimerTask() {
            @Override
            public void run() {
                try {
                    if (started.get()) ScheduleMessageService.this.persist();
                } catch (Throwable e) {
                    log.error("scheduleAtFixedRate flush exception", e);
                }
            }
        }, 10000, this.defaultMessageStore.getMessageStoreConfig().getFlushDelayOffsetInterval());
    }
}

Two things:

- Traverse the delay level table. For each delay level, start a scheduled task that processes the messages in that level's delay queue, starting from the offset in the offsetTable (we will look at this more closely when we cover sending delayed messages)

- Start another scheduled task that persists the offsetTable to disk every 10s, that is, to the store/config/delayOffset.json file that was loaded when the message storage component was initialized

3.1.3 flushing files to disk

this.flushConsumeQueueService.start();
this.commitLog.start();

Where the consumeQueue is flushed: org.apache.rocketmq.store.DefaultMessageStore.FlushConsumeQueueService#doFlush

Where the commitLog is flushed: org.apache.rocketmq.store.CommitLog.CommitRealTimeService#run

Here the commitlog and consumeQueue data in memory is flushed to disk. The details will be covered in a later article.

3.1.4 create abort file

this.storeStatsService.start(); // Start the thread that collects store statistics
this.createTempFile(); // Create the abort file

3.1.5 delete expired commitLog and consumeQueue tasks

this.addScheduleTask();

private void addScheduleTask() {
    // Periodically delete expired files
    this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
        @Override
        public void run() {
            DefaultMessageStore.this.cleanFilesPeriodically();
        }
    }, 1000 * 60, this.messageStoreConfig.getCleanResourceInterval(), TimeUnit.MILLISECONDS);
    .....
    // There are also some data checking tasks below, which are skipped here
}

By default, the cleanup of expired files runs once every 10s.

private void cleanFilesPeriodically() {
    // Delete expired commitlog files
    this.cleanCommitLogService.run();
    // Delete expired consumeQueue files
    this.cleanConsumeQueueService.run();
}

3.2 register Broker ★

// Register once first; the third parameter indicates whether to force registration
this.registerBrokerAll(true, false, true);
// Then start a scheduled task that sends a heartbeat every 30s
this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
    @Override
    public void run() {
        try {
            // For each heartbeat, the Broker's configuration decides whether to force registration
            BrokerController.this.registerBrokerAll(true, false, brokerConfig.isForceRegister());
        } catch (Throwable e) {
            log.error("registerBrokerAll Exception", e);
        }
    }
}, 1000 * 10, Math.max(10000, Math.min(brokerConfig.getRegisterNameServerPeriod(), 60000)), TimeUnit.MILLISECONDS);

By default, a heartbeat is sent every 30s.
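The period expression in the scheduling call, Math.max(10000, Math.min(period, 60000)), clamps the configured value to the range from 10s to 60s. A small sketch makes the effect visible; the class and method names are hypothetical.

```java
// Hypothetical helper that isolates the clamping expression from the scheduling call.
final class HeartbeatPeriodSketch {

    /** Clamps the configured period to the range [10000, 60000] milliseconds. */
    static long effectivePeriodMillis(long configuredMillis) {
        return Math.max(10_000L, Math.min(configuredMillis, 60_000L));
    }

    public static void main(String[] args) {
        long[] samples = {1_000L, 30_000L, 120_000L};
        for (long configured : samples) {
            System.out.println(configured + " -> " + effectivePeriodMillis(configured));
        }
    }
}
```

So a configured period of 1s is raised to 10s, 30s is used as is, and 120s is lowered to 60s. Whatever the configuration says, the Broker never sends heartbeats more often than every 10s or less often than every 60s.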

public synchronized void registerBrokerAll(final boolean checkOrderConfig, boolean oneway, boolean forceRegister) {
    // Topic configuration
    TopicConfigSerializeWrapper topicConfigWrapper = this.getTopicConfigManager().buildTopicConfigSerializeWrapper();
    ...
    // If registration is not forced, needRegister decides each time whether to register
    if (forceRegister || needRegister(this.brokerConfig.getBrokerClusterName(),
        this.getBrokerAddr(),
        this.brokerConfig.getBrokerName(),
        this.brokerConfig.getBrokerId(),
        this.brokerConfig.getRegisterBrokerTimeoutMills())) {
        doRegisterBrokerAll(checkOrderConfig, oneway, topicConfigWrapper);
    }
}

We leave the logic of needRegister for last. First, the actual registration:

private void doRegisterBrokerAll(boolean checkOrderConfig, boolean oneway,
    TopicConfigSerializeWrapper topicConfigWrapper) {
    // The actual registration
    List<RegisterBrokerResult> registerBrokerResultList = this.brokerOuterAPI.registerBrokerAll(... see the following code for the parameters);
    // If there is at least one registration result, process it
    if (registerBrokerResultList.size() > 0) {
        RegisterBrokerResult registerBrokerResult = registerBrokerResultList.get(0);
        if (registerBrokerResult != null) {
            // HA server address of the master
            if (this.updateMasterHAServerAddrPeriodically && registerBrokerResult.getHaServerAddr() != null) {
                this.messageStore.updateHaMasterAddress(registerBrokerResult.getHaServerAddr());
            }
            // Master address, used by the slave for synchronization
            this.slaveSynchronize.setMasterAddr(registerBrokerResult.getMasterAddr());
            if (checkOrderConfig) {
                this.getTopicConfigManager().updateOrderTopicConfig(registerBrokerResult.getKvTable());
            }
        }
    }
}

Registration goes through BrokerOuterAPI, which returns a list of RegisterBrokerResult objects (a list, because several NameServers may be running). The returned results are then processed to update the address information.

Let's focus on the registration process:

Construct request parameters

public List<RegisterBrokerResult> registerBrokerAll(
        // Broker related parameters
        final String clusterName,
        final String brokerAddr,
        final String brokerName,
        final long brokerId,
        final String haServerAddr,
        final TopicConfigSerializeWrapper topicConfigWrapper,
        final List<String> filterServerList,
        final boolean oneway,
        final int timeoutMills,
        final boolean compressed) {
    // Stores the registration result returned by each NameServer
    final List<RegisterBrokerResult> registerBrokerResultList = Lists.newArrayList();
    // Get the address list of the NameServers
    List<String> nameServerAddressList = this.remotingClient.getNameServerAddressList();
    if (nameServerAddressList != null && nameServerAddressList.size() > 0) {
        // Request header for Broker registration
        final RegisterBrokerRequestHeader requestHeader = new RegisterBrokerRequestHeader();
        requestHeader.setBrokerAddr(brokerAddr);     // broker address
        requestHeader.setBrokerId(brokerId);         // broker id
        requestHeader.setBrokerName(brokerName);     // broker name
        requestHeader.setClusterName(clusterName);   // cluster name
        requestHeader.setHaServerAddr(haServerAddr); // HA server address (used for master-slave sync)
        requestHeader.setCompressed(compressed);

        // Request body for Broker registration
        RegisterBrokerBody requestBody = new RegisterBrokerBody();
        requestBody.setTopicConfigSerializeWrapper(topicConfigWrapper);
        requestBody.setFilterServerList(filterServerList);
        final byte[] body = requestBody.encode(compressed); // encode
        // CRC32 (cyclic redundancy check) checksum of the body
        // [a good moment to review your computer organization notes]
        final int bodyCrc32 = UtilAll.crc32(body);
        requestHeader.setBodyCrc32(bodyCrc32);
        for (final String namesrvAddr : nameServerAddressList) {
            brokerOuterExecutor.execute(new Runnable() {
                @Override
                public void run() {
                    try {
                        // Register
                        RegisterBrokerResult result = registerBroker(namesrvAddr, oneway, timeoutMills, requestHeader, body);
                        if (result != null) {
                            // Save the registration result
                            registerBrokerResultList.add(result);
                        }
                    }
                    ...
                }
            });
        }
        ...
    }
    return registerBrokerResultList;
}
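The CRC32 step in the code above can be illustrated with the JDK alone. This is a minimal sketch, assuming a plain java.util.zip.CRC32 over the encoded body; BodyChecksumSketch and its methods are hypothetical names for illustration and are not RocketMQ's UtilAll.

```java
import java.nio.charset.StandardCharsets;
import java.util.zip.CRC32;

// Illustrative only: BodyChecksumSketch is a hypothetical name, not a RocketMQ class.
final class BodyChecksumSketch {

    // Sender side: compute a CRC32 checksum over the encoded request body.
    static long checksum(byte[] body) {
        CRC32 crc = new CRC32();
        crc.update(body, 0, body.length);
        return crc.getValue();
    }

    // Receiver side: recompute the checksum and compare it with the value from the header.
    static boolean verify(byte[] body, long checksumFromHeader) {
        return checksum(body) == checksumFromHeader;
    }

    public static void main(String[] args) {
        byte[] body = "{\"topicConfigTable\":{}}".getBytes(StandardCharsets.UTF_8);
        long crc = checksum(body);
        System.out.println("intact body ok: " + verify(body, crc));
        body[0] ^= 0x01; // simulate a bit flipped in transit
        System.out.println("corrupted body ok: " + verify(body, crc));
    }
}
```

The sender puts the checksum in the request header and the receiver recomputes it over the bytes it actually received, so a corrupted body is rejected before it is decoded.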

In registerBrokerAll, the request header and body are built once, then the NameServer list is traversed and a registration task is submitted to the thread pool for each NameServer.

This brings us to the place where the network request is actually sent:

private RegisterBrokerResult registerBroker(
        final String namesrvAddr,
        final boolean oneway,
        final int timeoutMills,
        final RegisterBrokerRequestHeader requestHeader,
        final byte[] body
) throws RemotingCommandException, MQBrokerException, RemotingConnectException,
        RemotingSendRequestException, RemotingTimeoutException, InterruptedException {
    // 1. Build the remoting request, passing in the request type REGISTER_BROKER
    RemotingCommand request = RemotingCommand.createRequestCommand(RequestCode.REGISTER_BROKER, requestHeader);
    request.setBody(body);
    ......
    // 2. The Netty client sends the network request
    RemotingCommand response = this.remotingClient.invokeSync(namesrvAddr, request, timeoutMills);
    // Wrap the result of the network request
    assert response != null;
    switch (response.getCode()) {
        case ResponseCode.SUCCESS: {
            RegisterBrokerResponseHeader responseHeader =
                (RegisterBrokerResponseHeader) response.decodeCommandCustomHeader(RegisterBrokerResponseHeader.class);
            // 3. Wrap the returned result
            RegisterBrokerResult result = new RegisterBrokerResult();
            result.setMasterAddr(responseHeader.getMasterAddr());
            result.setHaServerAddr(responseHeader.getHaServerAddr());
            if (response.getBody() != null) {
                result.setKvTable(KVTable.decode(response.getBody(), KVTable.class));
            }
            return result;
        }
        default:
            break;
    }
    // Any other response code is reported as an exception
    throw new MQBrokerException(response.getCode(), response.getRemark());
}

Note:

- The request command is constructed with the request code RequestCode.REGISTER_BROKER. The NameServer side dispatches and processes the request according to this code

Now look at the code the Netty client uses to send the request:

Send Netty network request

//org.apache.rocketmq.remoting.netty.NettyRemotingClient#invokeSync
public RemotingCommand invokeSync(String addr, final RemotingCommand request, long timeoutMillis)
        throws InterruptedException, RemotingConnectException, RemotingSendRequestException, RemotingTimeoutException {
    long beginStartTime = System.currentTimeMillis();
    // 1. Create a connection to the NameServer and get back the Channel
    final Channel channel = this.getAndCreateChannel(addr);
    if (channel != null && channel.isActive()) {
        // The channel is active
        try {
            .....
            // 2. Send the network request
            RemotingCommand response = this.invokeSyncImpl(channel, request, timeoutMillis - costTime);
            ....
            return response;
        } .....
    } else {
        .....
    }
}

- Establish a connection with NameServer and return the Channel object

This is Netty's standard connection setup, so we won't go deep into it here; you can study it on your own. Note, however, that the connection to each NameServer is cached:

private Channel getAndCreateChannel(final String addr) throws RemotingConnectException, InterruptedException {
    if (null == addr) {
        return getAndCreateNameserverChannel();
    }
    // Look up the cached connection object
    ChannelWrapper cw = this.channelTables.get(addr);
    if (cw != null && cw.isOK()) {
        return cw.getChannel();
    }
    return this.createChannel(addr);
}

- Send a network request through the channel [this is the standard Netty request-sending flow; feel free to skip it]

public RemotingCommand invokeSyncImpl(final Channel channel, final RemotingCommand request,
        final long timeoutMillis)
        throws InterruptedException, RemotingSendRequestException, RemotingTimeoutException {
    final int opaque = request.getOpaque();
    try {
        // 1. Create a ResponseFuture (similar in spirit to a Java Future); the result is set on it in the callback
        final ResponseFuture responseFuture = new ResponseFuture(channel, opaque, timeoutMillis, null, null);
        // Save the request id of the current request together with its response holder
        this.responseTable.put(opaque, responseFuture);
        final SocketAddress addr = channel.remoteAddress();
        // 2. Send the request
        channel.writeAndFlush(request).addListener(new ChannelFutureListener() {
            @Override
            public void operationComplete(ChannelFuture f) throws Exception {
                // Listens for the send result; this callback fires on both success and failure
                if (f.isSuccess()) {
                    responseFuture.setSendRequestOK(true);
                    return;
                } else {
                    responseFuture.setSendRequestOK(false);
                }
                // Sending failed: remove the request record
                responseTable.remove(opaque);
                responseFuture.setCause(f.cause());
                responseFuture.putResponse(null);
                log.warn("send a request command to channel <" + addr + "> failed.");
            }
        });
        // Block while waiting for the NameServer's registration result
        RemotingCommand responseCommand = responseFuture.waitResponse(timeoutMillis);
        if (null == responseCommand) {
            // Handle the failure
            if (responseFuture.isSendRequestOK()) {
                throw new RemotingTimeoutException(RemotingHelper.parseSocketAddressAddr(addr), timeoutMillis,
                    responseFuture.getCause());
            } else {
                throw new RemotingSendRequestException(RemotingHelper.parseSocketAddressAddr(addr), responseFuture.getCause());
            }
        }
        return responseCommand;
    } finally {
        this.responseTable.remove(opaque);
    }
}
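The pattern in invokeSyncImpl (register a pending entry keyed by the request id, send, then block until a response with the same id arrives or the timeout expires) can be sketched with JDK classes only. This is a simplified illustration under assumptions: PendingRequestsSketch is a hypothetical class, a CompletableFuture stands in for ResponseFuture, and a Runnable stands in for the Netty write.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Illustrative only: PendingRequestsSketch is a hypothetical stand-in, not RocketMQ code.
final class PendingRequestsSketch {

    // request id (opaque) -> future that will eventually hold the response
    private final Map<Integer, CompletableFuture<String>> responseTable = new ConcurrentHashMap<>();

    // Called by the sending thread: register the future, "send", then block for the reply.
    String invokeSync(int opaque, Runnable send, long timeoutMillis)
            throws InterruptedException, TimeoutException {
        CompletableFuture<String> future = new CompletableFuture<>();
        responseTable.put(opaque, future);
        try {
            send.run();
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (ExecutionException e) {
            throw new IllegalStateException("request failed", e.getCause());
        } finally {
            // Always clean up, whether the call succeeded, failed or timed out.
            responseTable.remove(opaque);
        }
    }

    // Called by the network thread when a response carrying the same opaque arrives.
    void onResponse(int opaque, String response) {
        CompletableFuture<String> future = responseTable.get(opaque);
        if (future != null) {
            future.complete(response);
        }
    }

    int pendingCount() {
        return responseTable.size();
    }

    public static void main(String[] args) throws Exception {
        PendingRequestsSketch client = new PendingRequestsSketch();
        // The "send" action here is answered from another thread, like a network reply.
        String reply = client.invokeSync(7,
                () -> new Thread(() -> client.onResponse(7, "SUCCESS")).start(), 1000);
        System.out.println(reply);
    }
}
```

Removing the entry in the finally block mirrors the original code: a late response for a request that already timed out finds no pending entry and is simply dropped.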

To trace the request sent by the Broker on the NameServer side, set a breakpoint at case RequestCode.REGISTER_BROKER: in the org.apache.rocketmq.namesrv.processor.DefaultRequestProcessor#processRequest method of NameServer:

NameServer handles registration Broker requests

case RequestCode.REGISTER_BROKER:
    // Get the version of the current Broker
    Version brokerVersion = MQVersion.value2Version(request.getVersion());
    if (brokerVersion.ordinal() >= MQVersion.Version.V3_0_11.ordinal()) {
        // Versions 3.0.11 and later take this branch
        return this.registerBrokerWithFilterServer(ctx, request);
    } else {
        return this.registerBroker(ctx, request);
    }

There are three steps in registerBrokerWithFilterServer:

- Parse request parameters (including crc32 verification)

- Call the routing manager RouteInfoManager to register;

- Encapsulate the returned result: status code, master address, HA server address, etc.

We mainly look at the registration process of the routing manager:

public RegisterBrokerResult registerBroker(
        final String clusterName,
        final String brokerAddr,
        final String brokerName,
        final long brokerId,
        final String haServerAddr,
        final TopicConfigSerializeWrapper topicConfigWrapper,
        final List<String> filterServerList,
        final Channel channel) {
    // Registration result object
    RegisterBrokerResult result = new RegisterBrokerResult();
    try {
        try {
            // Acquire the write lock (exclusive lock)
            this.lock.writeLock().lockInterruptibly();
            // 1. Get the set of broker names under the cluster
            Set<String> brokerNames = this.clusterAddrTable.get(clusterName);
            if (null == brokerNames) {
                // 1.1 Empty on the first registration, so create and save it
                brokerNames = new HashSet<String>();
                this.clusterAddrTable.put(clusterName, brokerNames);
            }
            // 1.2 Add the current brokerName; it is a Set, so duplicates are not a concern
            brokerNames.add(brokerName);
            boolean registerFirst = false;
            // 2. Get the BrokerData by brokerName
            BrokerData brokerData = this.brokerAddrTable.get(brokerName);
            if (null == brokerData) {
                // 2.1 First registration: initialize and save the current broker information
                registerFirst = true;
                brokerData = new BrokerData(clusterName, brokerName,
                    new HashMap<Long/* brokerId */, String/* broker address */>());
                this.brokerAddrTable.put(brokerName, brokerData);
            }
            Map<Long, String> brokerAddrsMap = brokerData.getBrokerAddrs();
            // Switch slave to master: first remove <1, IP:PORT> in namesrv, then add <0, IP:PORT>
            // The same IP:PORT must only have one record in brokerAddrTable
            // As the comments say, this part handles a slave being switched to master:
            // the old slave entry is removed and replaced with the new information
            Iterator<Entry<Long, String>> it = brokerAddrsMap.entrySet().iterator();
            while (it.hasNext()) {
                Entry<Long, String> item = it.next();
                // 2.2 If the brokerId cached for this address differs from the current brokerId, remove the old entry
                if (null != brokerAddr && brokerAddr.equals(item.getValue()) && brokerId != item.getKey()) {
                    it.remove();
                }
            }
            // 2.3 Save the new brokerId -> broker address mapping; put returns the old address
            String oldAddr = brokerData.getBrokerAddrs().put(brokerId, brokerAddr);
            registerFirst = registerFirst || (null == oldAddr);
            if (null != topicConfigWrapper && MixAll.MASTER_ID == brokerId) {
                if (this.isBrokerTopicConfigChanged(brokerAddr, topicConfigWrapper.getDataVersion())
                    || registerFirst) {
                    // First registration, or the broker's topic configuration has changed
                    ConcurrentMap<String, TopicConfig> tcTable = topicConfigWrapper.getTopicConfigTable();
                    if (tcTable != null) {
                        for (Map.Entry<String, TopicConfig> entry : tcTable.entrySet()) {
                            // 3. For each topic config, create or update the queue data
                            //    in topicQueueTable [topic -> List<QueueData>]
                            this.createAndUpdateQueueData(brokerName, entry.getValue());
                        }
                    }
                }
            }
            // 4. On every registration/heartbeat (every 30s), update the broker's liveness info
            //    in brokerLiveTable, saving the timestamp of the heartbeat just received
            BrokerLiveInfo prevBrokerLiveInfo = this.brokerLiveTable.put(brokerAddr,
                new BrokerLiveInfo(
                    System.currentTimeMillis(),
                    topicConfigWrapper.getDataVersion(),
                    channel,
                    haServerAddr));
            if (null == prevBrokerLiveInfo) {
                log.info("new broker registered, {} HAServer: {}", brokerAddr, haServerAddr);
            }
            if (filterServerList != null) {
                if (filterServerList.isEmpty()) {
                    this.filterServerTable.remove(brokerAddr);
                } else {
                    // Update the filter server information
                    this.filterServerTable.put(brokerAddr, filterServerList);
                }
            }
            if (MixAll.MASTER_ID != brokerId) {
                // This is a heartbeat from a slave
                String masterAddr = brokerData.getBrokerAddrs().get(MixAll.MASTER_ID);
                if (masterAddr != null) {
                    BrokerLiveInfo brokerLiveInfo = this.brokerLiveTable.get(masterAddr);
                    if (brokerLiveInfo != null) {
                        // Return the master's HA server address and the master address to the slave
                        result.setHaServerAddr(brokerLiveInfo.getHaServerAddr());
                        result.setMasterAddr(masterAddr);
                    }
                }
            }
        } finally {
            // Release the write lock
            this.lock.writeLock().unlock();
        }
    } catch (Exception e) {
        log.error("registerBroker Exception", e);
    }
    return result;
}

To summarize:

The broker metadata maintained in the routing manager was described in the article on the NameServer startup process, so you can go back and review it. Briefly, it mainly consists of the following routing structures, which are the ones updated during registration and heartbeat:

// Queue metadata under each topic
private final HashMap<String/* topic */, List<QueueData>> topicQueueTable;
// Broker address information
private final HashMap<String/* brokerName */, BrokerData> brokerAddrTable;
// Broker names under each cluster
private final HashMap<String/* clusterName */, Set<String/* brokerName */>> clusterAddrTable;
// Broker address and its liveness information (including the timestamp of the last heartbeat)
private final HashMap<String/* brokerAddr */, BrokerLiveInfo> brokerLiveTable;
// Filter server information
private final HashMap<String/* brokerAddr */, List<String>/* Filter Server */> filterServerTable;

- The write lock ensures that only one thread can modify the routing tables at a time; reads use the shared read lock, so multiple threads can read at the same time as long as no write is in progress

- The clusterAddrTable cluster routing information is initialized during the first registration; on later registrations and heartbeats the broker name is simply added to the existing set

- Initialize the broker address routing table brokerAddrTable during the first registration;

- If a SLAVE is switched to MASTER, remove its old entry and update the BrokerData information under brokerAddrTable;

- If this is the first registration or the topic configuration has changed, update the topicQueueTable data, mainly the queue metadata under each topic;

- Update the broker liveness table brokerLiveTable: a new BrokerLiveInfo object is created that holds the latest DataVersion sent by the broker and the latest heartbeat timestamp. The NameServer's scheduled scan uses this timestamp to remove brokers that have not sent a heartbeat for 120s;

- If it is a SLAVE registration request, look up the BrokerLiveInfo of its MASTER node and return the master address and the master's HA server address to the SLAVE in the result;

- Release write lock
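The locking and table updates summarized above can be sketched in a much reduced form. This is an illustration under assumptions, not RouteInfoManager itself: RouteTableSketch is a hypothetical class, topic and filter server tables are left out, and the liveness table stores only a timestamp.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Iterator;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Illustrative only: a hypothetical, trimmed-down routing table.
final class RouteTableSketch {
    private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
    // clusterName -> broker names
    private final Map<String, Set<String>> clusterAddrTable = new HashMap<>();
    // brokerName -> (brokerId -> address); id 0 is the master
    private final Map<String, Map<Long, String>> brokerAddrTable = new HashMap<>();
    // brokerAddr -> timestamp of the last heartbeat
    private final Map<String, Long> brokerLiveTable = new HashMap<>();

    // Returns true when this (brokerName, brokerId) pair is registered for the first time.
    boolean register(String cluster, String brokerName, long brokerId, String addr, long nowMillis) {
        lock.writeLock().lock();
        try {
            clusterAddrTable.computeIfAbsent(cluster, k -> new HashSet<>()).add(brokerName);
            Map<Long, String> addrs = brokerAddrTable.computeIfAbsent(brokerName, k -> new HashMap<>());
            // Slave promoted to master: drop the stale <oldId, addr> entry for the same address.
            Iterator<Map.Entry<Long, String>> it = addrs.entrySet().iterator();
            while (it.hasNext()) {
                Map.Entry<Long, String> e = it.next();
                if (addr.equals(e.getValue()) && brokerId != e.getKey()) {
                    it.remove();
                }
            }
            boolean first = addrs.put(brokerId, addr) == null;
            brokerLiveTable.put(addr, nowMillis);
            return first;
        } finally {
            lock.writeLock().unlock();
        }
    }

    // Readers take the shared lock and get a copy, so they never see a half-updated map.
    Map<Long, String> addressesOf(String brokerName) {
        lock.readLock().lock();
        try {
            Map<Long, String> addrs = brokerAddrTable.get(brokerName);
            return addrs == null ? new HashMap<Long, String>() : new HashMap<Long, String>(addrs);
        } finally {
            lock.readLock().unlock();
        }
    }

    public static void main(String[] args) {
        RouteTableSketch table = new RouteTableSketch();
        table.register("DefaultCluster", "broker-a", 1L, "10.0.0.2:10911", System.currentTimeMillis());
        // The same address now registers as master (id 0): the <1, addr> entry is replaced.
        table.register("DefaultCluster", "broker-a", 0L, "10.0.0.2:10911", System.currentTimeMillis());
        System.out.println(table.addressesOf("broker-a"));
    }
}
```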

Conditions for registering the Broker

The above is the whole process of Broker registration. Finally, let's go back to the entry point. If forced registration is not configured, the Broker first checks whether registration is needed at all. The conditions are determined as follows:

public List<Boolean> needRegister(
        final String clusterName,
        final String brokerAddr,
        final String brokerName,
        final long brokerId,
        final TopicConfigSerializeWrapper topicConfigWrapper,
        final int timeoutMills) {
    final List<Boolean> changedList = new CopyOnWriteArrayList<>();
    List<String> nameServerAddressList = this.remotingClient.getNameServerAddressList();
    if (nameServerAddressList != null && nameServerAddressList.size() > 0) {
        for (final String namesrvAddr : nameServerAddressList) {
            brokerOuterExecutor.execute(new Runnable() {
                @Override
                public void run() {
                    try {
                        // Build the query parameters
                        QueryDataVersionRequestHeader requestHeader = new QueryDataVersionRequestHeader();
                        requestHeader.setBrokerAddr(brokerAddr);
                        requestHeader.setBrokerId(brokerId);
                        requestHeader.setBrokerName(brokerName);
                        requestHeader.setClusterName(clusterName);
                        RemotingCommand request = RemotingCommand.createRequestCommand(RequestCode.QUERY_DATA_VERSION, requestHeader);
                        request.setBody(topicConfigWrapper.getDataVersion().encode());
                        // Send the query to the NameServer
                        RemotingCommand response = remotingClient.invokeSync(namesrvAddr, request, timeoutMills);
                        DataVersion nameServerDataVersion = null;
                        Boolean changed = false;
                        switch (response.getCode()) {
                            case ResponseCode.SUCCESS: {
                                // After receiving the response, read the query result
                                QueryDataVersionResponseHeader queryDataVersionResponseHeader =
                                    (QueryDataVersionResponseHeader) response.decodeCommandCustomHeader(QueryDataVersionResponseHeader.class);
                                changed = queryDataVersionResponseHeader.getChanged();
                                byte[] body = response.getBody();
                                if (body != null) {
                                    nameServerDataVersion = DataVersion.decode(body, DataVersion.class);
                                    if (!topicConfigWrapper.getDataVersion().equals(nameServerDataVersion)) {
                                        // The local version differs from the NameServer side, so it has changed
                                        changed = true;
                                    }
                                }
                                if (changed == null || changed) {
                                    changedList.add(Boolean.TRUE);
                                }
                            }
                            default:
                                break;
                        }
                    } catch (Exception e) {
                        // If the query fails, treat it as changed by default
                        changedList.add(Boolean.TRUE);
                    }
                }
            });
        }
        ...
    }
    return changedList;
}

In fact, it is quite simple: the Broker sends a RequestCode.QUERY_DATA_VERSION request to each NameServer to query the DataVersion recorded on the NameServer side. If it differs from the Broker's current version, the data has changed. As long as changedList contains one true, that is, the data version on any NameServer is inconsistent, the upper-level needRegister returns true and the Broker registers.
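The decision described above reduces to "register if any NameServer disagrees with the local version". A minimal sketch, assuming a simplified version stamp; NeedRegisterSketch and Version are hypothetical types for illustration, not RocketMQ's DataVersion.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Objects;

// Illustrative only: NeedRegisterSketch and Version are hypothetical, simplified types.
final class NeedRegisterSketch {

    // Simplified version stamp: a timestamp plus a change counter.
    static final class Version {
        final long timestamp;
        final long counter;

        Version(long timestamp, long counter) {
            this.timestamp = timestamp;
            this.counter = counter;
        }

        @Override
        public boolean equals(Object o) {
            if (!(o instanceof Version)) {
                return false;
            }
            Version other = (Version) o;
            return timestamp == other.timestamp && counter == other.counter;
        }

        @Override
        public int hashCode() {
            return Objects.hash(timestamp, counter);
        }
    }

    // A null entry means "no record on that NameServer" or "the query failed";
    // both cases are treated as changed, so the Broker registers again.
    static boolean needRegister(Version local, List<Version> versionsOnNameServers) {
        for (Version remote : versionsOnNameServers) {
            if (remote == null || !local.equals(remote)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        Version local = new Version(1000L, 3L);
        List<Version> inSync = Arrays.asList(new Version(1000L, 3L), new Version(1000L, 3L));
        List<Version> oneStale = Arrays.asList(new Version(1000L, 3L), new Version(1000L, 2L));
        System.out.println(needRegister(local, inSync));
        System.out.println(needRegister(local, oneStale));
    }
}
```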

Next, let's take a brief look at the process of NameServer querying DataVersion:

//org.apache.rocketmq.namesrv.processor.DefaultRequestProcessor#queryBrokerTopicConfig
public RemotingCommand queryBrokerTopicConfig(ChannelHandlerContext ctx,
        RemotingCommand request) throws RemotingCommandException {
    final RemotingCommand response = RemotingCommand.createResponseCommand(QueryDataVersionResponseHeader.class);
    .... request parameter parsing ...
    DataVersion dataVersion = DataVersion.decode(request.getBody(), DataVersion.class);
    // 1. The old DataVersion (saved in BrokerLiveInfo) is looked up in brokerLiveTable by broker address.
    //    If it is empty (first query) or differs from the current version, a change is considered to have occurred
    Boolean changed = this.namesrvController.getRouteInfoManager().isBrokerTopicConfigChanged(requestHeader.getBrokerAddr(), dataVersion);
    if (!changed) {
        // No change: refresh the timestamp in the broker liveness table
        this.namesrvController.getRouteInfoManager().updateBrokerInfoUpdateTimestamp(requestHeader.getBrokerAddr());
    }
    // 2. Query the old DataVersion and return it
    DataVersion nameSeverDataVersion = this.namesrvController.getRouteInfoManager().queryBrokerTopicConfig(requestHeader.getBrokerAddr());
    response.setCode(ResponseCode.SUCCESS);
    response.setRemark(null);
    if (nameSeverDataVersion != null) {
        response.setBody(nameSeverDataVersion.encode());
    }
    responseHeader.setChanged(changed);
    return response;
}

- The DataVersion is saved in the BrokerLiveInfo object of the broker liveness table brokerLiveTable

- The old DataVersion is read from the BrokerLiveInfo for the requested broker address. If it differs from the version the Broker sent, the data is considered changed;

- If there is no change, the heartbeat timestamp of the corresponding BrokerLiveInfo is refreshed, so the query itself serves as a lightweight heartbeat [because when nothing has changed, the Broker will not send a separate registration / heartbeat request]

- The old DataVersion is then returned to the Broker in the response

- As for when DataVersion is updated: when the metadata changes, the Broker updates its own DataVersion, and the NameServer's copy is updated at the next heartbeat
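The NameServer-side behavior in the list above, together with the 120s expiry scan mentioned earlier, can be sketched as follows. This is a simplified illustration under assumptions: LiveTableSketch is a hypothetical class, a single long stands in for DataVersion, and the sketch is not thread-safe (the real tables are guarded by a lock).

```java
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;

// Illustrative only: a hypothetical, single-threaded liveness table.
final class LiveTableSketch {
    static final long EXPIRE_MILLIS = 120_000L; // 120s, as described in the text

    static final class LiveInfo {
        long lastUpdateMillis;
        final long dataVersion; // assumption: one number stands in for DataVersion

        LiveInfo(long lastUpdateMillis, long dataVersion) {
            this.lastUpdateMillis = lastUpdateMillis;
            this.dataVersion = dataVersion;
        }
    }

    private final Map<String, LiveInfo> brokerLiveTable = new HashMap<>();

    // Full registration / heartbeat: replace the entry with a fresh one.
    void register(String addr, long dataVersion, long nowMillis) {
        brokerLiveTable.put(addr, new LiveInfo(nowMillis, dataVersion));
    }

    // Version query: changed if the broker is unknown or the versions differ.
    // When nothing changed, the query doubles as a heartbeat and refreshes the timestamp.
    boolean queryChanged(String addr, long brokerVersion, long nowMillis) {
        LiveInfo info = brokerLiveTable.get(addr);
        boolean changed = info == null || info.dataVersion != brokerVersion;
        if (!changed) {
            info.lastUpdateMillis = nowMillis;
        }
        return changed;
    }

    // Scheduled scan: remove brokers whose last heartbeat is older than the expiry time.
    int scanExpired(long nowMillis) {
        int removed = 0;
        Iterator<Map.Entry<String, LiveInfo>> it = brokerLiveTable.entrySet().iterator();
        while (it.hasNext()) {
            if (nowMillis - it.next().getValue().lastUpdateMillis > EXPIRE_MILLIS) {
                it.remove();
                removed++;
            }
        }
        return removed;
    }

    public static void main(String[] args) {
        LiveTableSketch table = new LiveTableSketch();
        table.register("10.0.0.2:10911", 5L, 0L);
        System.out.println("changed: " + table.queryChanged("10.0.0.2:10911", 5L, 100_000L));
        System.out.println("removed at 130s: " + table.scanExpired(130_000L));
        System.out.println("removed at 230s: " + table.scanExpired(230_000L));
    }
}
```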

Summary

OK, here we have sorted out the Broker startup process. Let's briefly summarize:

The first step - initialization

- First, load the configuration information of the Broker side itself and the configuration information related to NettyServer (from broker.conf), and create the Broker controller

- When the Broker controller is created, it will initialize various basic components on the Broker side, such as topic configuration manager, related service components in push and pull mode, delayed message service component, producer and consumer manager, and various thread pool queues that will be used;

- Then, the json configuration information under store/config in the disk will be loaded, such as topic, consumer and consumerFilter, and saved to the corresponding manager object;

- Then create the important message storage component DefaultMessageStore

- This component initializes various services and components related to message storage, such as IndexFile, commitlog and consumeQueue. Refer to 2.1.2 for details

- Similarly, the commitlog file in the store directory and the file data in the consumequeue directory will be loaded and saved, and the index file in the index directory will be saved to the IndexFile;

- File recovery and delay message service initialization (load the relationship between delay level and corresponding delay time)

- Create Netty server and various worker thread pools

- Many thread pool tasks are created at the same time

- Initialize the implementation class of transaction related services (SPI mechanism)

The second big step - start work

- Start the core components of the Broker, the most important of which is the message storage component. Starting a component mostly means creating threads that handle its tasks asynchronously

- Start commitlog dispatching. After a producer sends a message, the commitlog is dispatched, which in practice builds the logical consumption queue consumequeue and the index file IndexFile from the commitlog

- Start HA service

- Start the delay message processing service to process messages with different delay levels, and start the task that periodically persists the delay message offsets to the delayOffset.json file under store/config/

- Create a task that periodically flushes to disk (flushes the commitlog and consumequeue data to disk)

- Create the abort file [used to mark whether the Broker was shut down normally]

- Create the scheduled tasks that delete expired commitlog and consumequeue files

- Register the Broker and send Broker heartbeats. By default, a heartbeat is sent every 30s, carrying the basic information of the current Broker
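The periodic heartbeat can be sketched with a JDK scheduler. This is a minimal illustration under assumptions: HeartbeatSketch and start are hypothetical names, and the Runnable stands in for the real registration call.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Illustrative only: HeartbeatSketch is a hypothetical helper, not Broker code.
final class HeartbeatSketch {

    // Runs the heartbeat task after the initial delay and then at a fixed rate.
    static ScheduledExecutorService start(Runnable heartbeat, long initialDelayMillis, long periodMillis) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleAtFixedRate(() -> {
            try {
                heartbeat.run();
            } catch (Throwable t) {
                // Swallow errors so that one failed heartbeat does not cancel the schedule.
                System.err.println("heartbeat failed: " + t);
            }
        }, initialDelayMillis, periodMillis, TimeUnit.MILLISECONDS);
        return scheduler;
    }

    public static void main(String[] args) throws InterruptedException {
        CountDownLatch threeBeats = new CountDownLatch(3);
        // 30_000 ms would match the 30s default in the text; 20 ms keeps the demo short.
        ScheduledExecutorService scheduler = start(threeBeats::countDown, 0, 20);
        boolean done = threeBeats.await(5, TimeUnit.SECONDS);
        scheduler.shutdownNow();
        System.out.println("sent three heartbeats: " + done);
    }
}
```

The try/catch inside the scheduled task matters: scheduleAtFixedRate suppresses all later runs once a run throws, so an unguarded network error would silently stop the heartbeats.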

Many of the processing details involved here (such as message sending, delayed message processing, flushing messages to disk, master-slave synchronization, etc.) will be analyzed in the following articles

The next section will sort out the process of sending messages by Producer.