Entry point

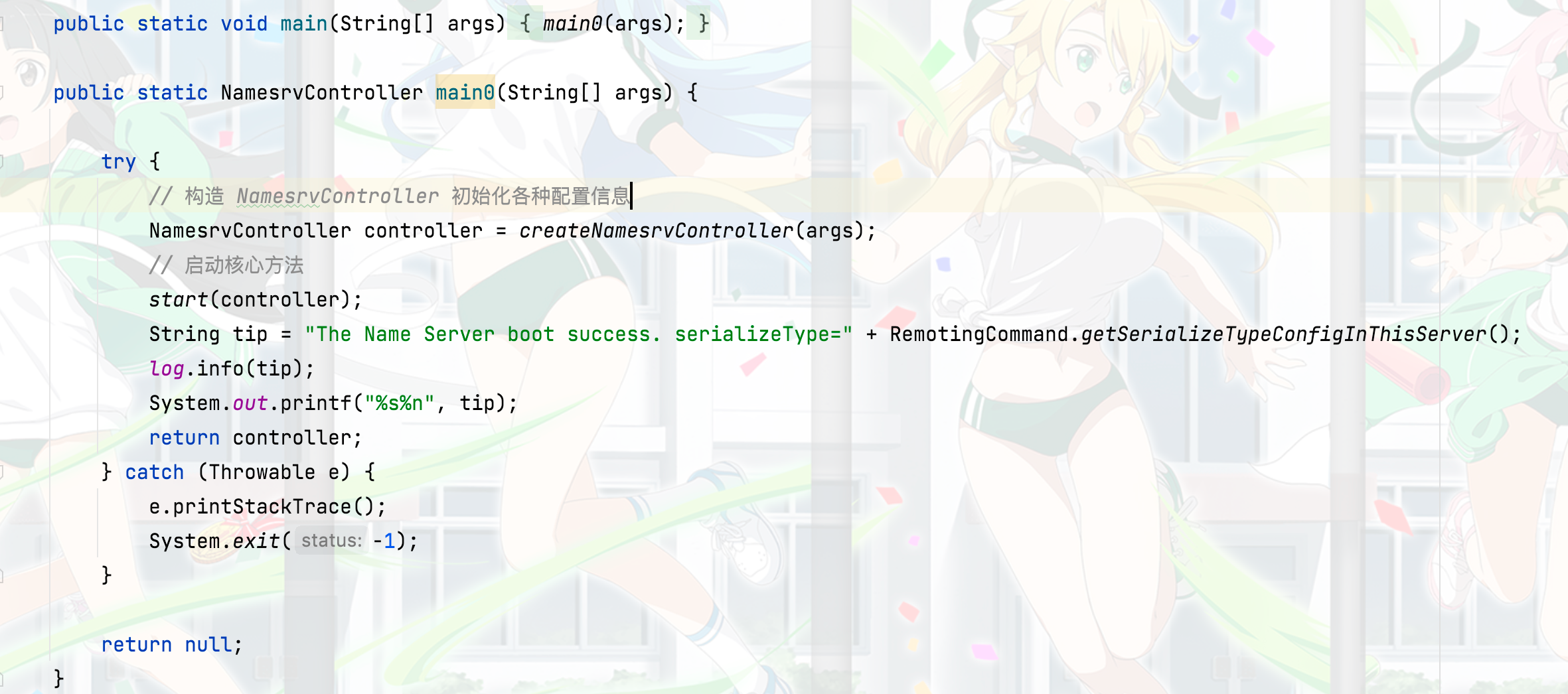

The NameServer's startup entry point is the main method of the NamesrvStartup class.

The methods here are well encapsulated, and each one does a single thing. The two core calls are:

NamesrvController controller = createNamesrvController(args);

start(controller);

How NamesrvController is created

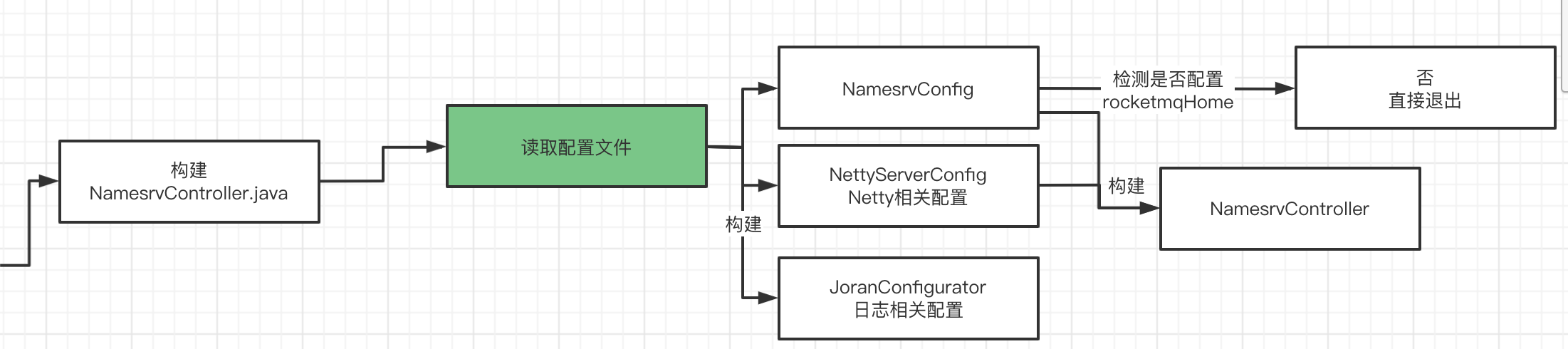

Let's see what the createNamesrvController() method does:

public static NamesrvController createNamesrvController(String[] args) throws IOException, JoranException {

System.setProperty(RemotingCommand.REMOTING_VERSION_KEY, Integer.toString(MQVersion.CURRENT_VERSION));

//PackageConflictDetect.detectFastjson();

Options options = ServerUtil.buildCommandlineOptions(new Options());

commandLine = ServerUtil.parseCmdLine("mqnamesrv", args, buildCommandlineOptions(options), new PosixParser());

if (null == commandLine) {

System.exit(-1);

return null;

}

final NamesrvConfig namesrvConfig = new NamesrvConfig();

final NettyServerConfig nettyServerConfig = new NettyServerConfig();

nettyServerConfig.setListenPort(9876);

// If namesrv was started with -c, load the given configuration file

if (commandLine.hasOption('c')) {

String file = commandLine.getOptionValue('c');

if (file != null) {

// read file

InputStream in = new BufferedInputStream(new FileInputStream(file));

properties = new Properties();

properties.load(in);

MixAll.properties2Object(properties, namesrvConfig);

MixAll.properties2Object(properties, nettyServerConfig);

namesrvConfig.setConfigStorePath(file);

System.out.printf("load config properties file OK, %s%n", file);

in.close();

}

}

// If namesrv was started with -p, print all configuration items and exit

if (commandLine.hasOption('p')) {

InternalLogger console = InternalLoggerFactory.getLogger(LoggerName.NAMESRV_CONSOLE_NAME);

MixAll.printObjectProperties(console, namesrvConfig);

MixAll.printObjectProperties(console, nettyServerConfig);

System.exit(0);

}

// Copy the command-line options into namesrvConfig (they override values from the file)

MixAll.properties2Object(ServerUtil.commandLine2Properties(commandLine), namesrvConfig);

// Exit if the RocketMQ home directory (ROCKETMQ_HOME) is not set

if (null == namesrvConfig.getRocketmqHome()) {

System.out.printf("Please set the %s variable in your environment to match the location of the RocketMQ installation%n", MixAll.ROCKETMQ_HOME_ENV);

System.exit(-2);

}

// Configure logback from $ROCKETMQ_HOME/conf/logback_namesrv.xml

LoggerContext lc = (LoggerContext) LoggerFactory.getILoggerFactory();

JoranConfigurator configurator = new JoranConfigurator();

configurator.setContext(lc);

lc.reset();

configurator.doConfigure(namesrvConfig.getRocketmqHome() + "/conf/logback_namesrv.xml");

// Print all configuration information of NameServer

log = InternalLoggerFactory.getLogger(LoggerName.NAMESRV_LOGGER_NAME);

MixAll.printObjectProperties(log, namesrvConfig);

MixAll.printObjectProperties(log, nettyServerConfig);

final NamesrvController controller = new NamesrvController(namesrvConfig, nettyServerConfig);

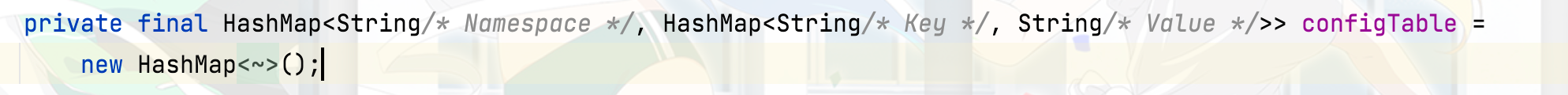

// remember all configs to prevent discard

controller.getConfiguration().registerConfig(properties);

return controller;

}

The source above is fairly long, but all it does is parse parameters and build configuration objects.

Since we have not yet seen the code that uses these configuration classes, we will not analyze them in detail for now. It is enough to know that the method parses the parameters from the startup script or the configuration file and builds the corresponding configuration objects (NamesrvConfig and NettyServerConfig). In summary:

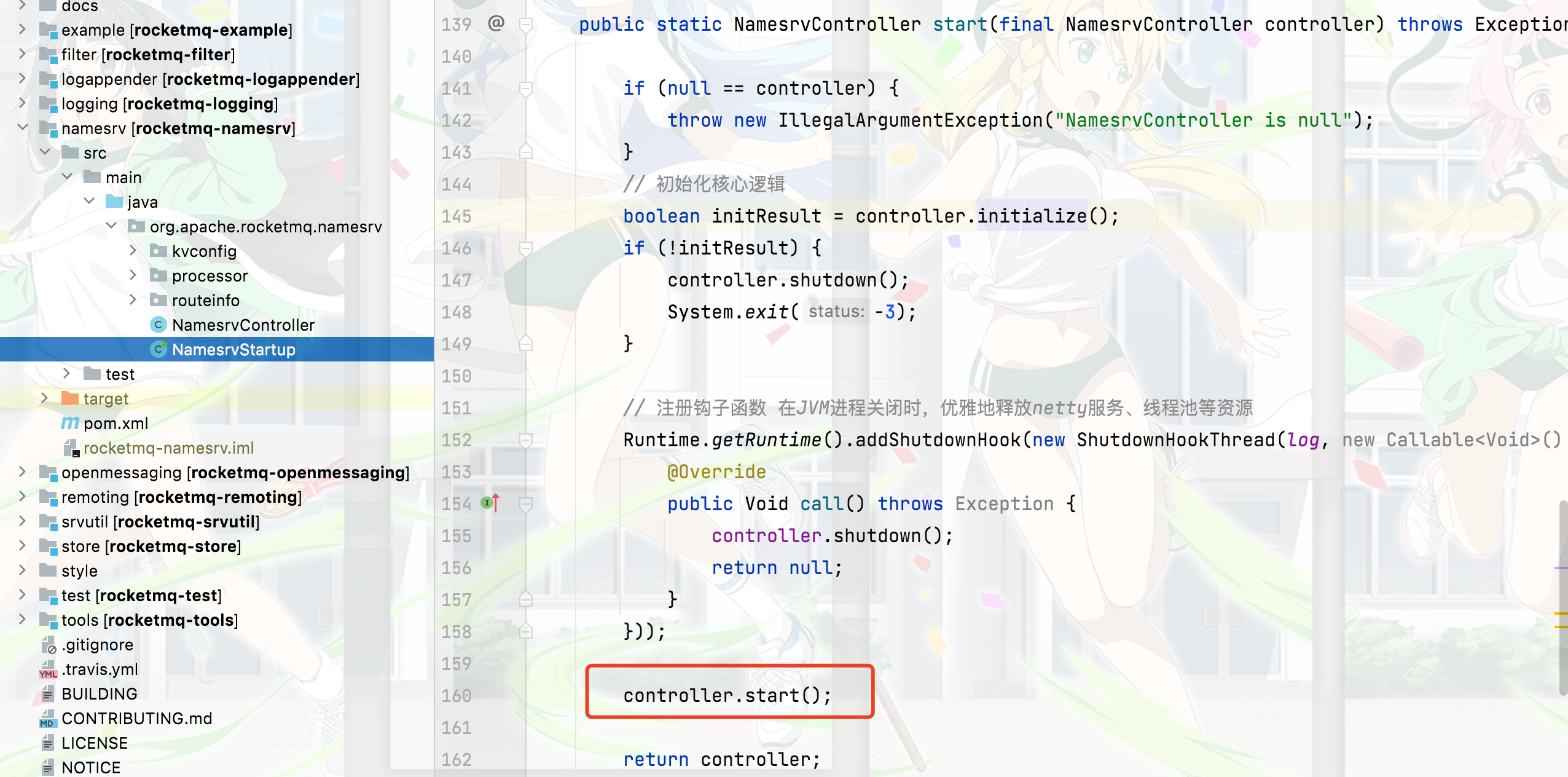
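MixAll.properties2Object is what copies a Properties object into these configuration classes. As far as we can tell it works by reflection: for each setter on the target it derives a property key from the setter name, and if the key is present it converts the string to the setter's parameter type. The sketch below is a simplified, hypothetical re-implementation (PropsBinder and DemoConfig are invented names, not RocketMQ classes). It also mirrors the layering in createNamesrvController: a hard-coded default first, then the `-c` file, then command-line options, with later sources overriding earlier ones.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.util.Properties;

public class PropsBinder {

    // Hypothetical config class standing in for NamesrvConfig / NettyServerConfig.
    public static class DemoConfig {
        private int listenPort = 8888;
        private String rocketmqHome;
        private boolean orderMessageEnable;

        public int getListenPort() { return listenPort; }
        public void setListenPort(int listenPort) { this.listenPort = listenPort; }
        public String getRocketmqHome() { return rocketmqHome; }
        public void setRocketmqHome(String rocketmqHome) { this.rocketmqHome = rocketmqHome; }
        public boolean isOrderMessageEnable() { return orderMessageEnable; }
        public void setOrderMessageEnable(boolean enable) { this.orderMessageEnable = enable; }
    }

    // For every one-argument setter "setXxx", look up property "xxx" and, if present,
    // convert the string to the parameter type and invoke the setter.
    public static void bind(Properties props, Object target) {
        for (Method m : target.getClass().getMethods()) {
            String name = m.getName();
            if (!name.startsWith("set") || name.length() <= 3 || m.getParameterCount() != 1) {
                continue;
            }
            String key = Character.toLowerCase(name.charAt(3)) + name.substring(4); // setListenPort -> listenPort
            String raw = props.getProperty(key);
            if (raw == null) {
                continue; // not configured: keep the current value
            }
            Class<?> type = m.getParameterTypes()[0];
            Object value;
            if (type == int.class || type == Integer.class) {
                value = Integer.parseInt(raw.trim());
            } else if (type == long.class || type == Long.class) {
                value = Long.parseLong(raw.trim());
            } else if (type == boolean.class || type == Boolean.class) {
                value = Boolean.parseBoolean(raw.trim());
            } else if (type == String.class) {
                value = raw;
            } else {
                continue; // other types are ignored in this sketch
            }
            try {
                m.invoke(target, value);
            } catch (IllegalAccessException | InvocationTargetException e) {
                throw new IllegalStateException("cannot set " + key, e);
            }
        }
    }

    public static void main(String[] args) {
        DemoConfig cfg = new DemoConfig();
        cfg.setListenPort(9876);                 // 1. hard-coded default, as in createNamesrvController

        Properties fromFile = new Properties();  // 2. as if loaded from the -c file
        fromFile.setProperty("listenPort", "9877");
        fromFile.setProperty("rocketmqHome", "/opt/rocketmq");
        bind(fromFile, cfg);

        Properties fromCli = new Properties();   // 3. as if converted from command-line options
        fromCli.setProperty("orderMessageEnable", "true");
        bind(fromCli, cfg);

        // prints 9877 /opt/rocketmq true
        System.out.println(cfg.getListenPort() + " " + cfg.getRocketmqHome() + " " + cfg.isOrderMessageEnable());
    }
}
```

Reflection keeps the binder generic: adding a field plus a setter to a config class is enough to make it configurable, with no parsing code to update.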

Start NameServer

The core entry method for starting NameServer is:

start(controller);

Let's look at its implementation:

public static NamesrvController start(final NamesrvController controller) throws Exception {

if (null == controller) {

throw new IllegalArgumentException("NamesrvController is null");

}

// Initialize core logic

boolean initResult = controller.initialize();

if (!initResult) {

controller.shutdown();

System.exit(-3);

}

// Register a shutdown hook that gracefully releases the Netty server, thread pools and other resources when the JVM exits

Runtime.getRuntime().addShutdownHook(new ShutdownHookThread(log, new Callable<Void>() {

@Override

public Void call() throws Exception {

controller.shutdown();

return null;

}

}));

controller.start();

return controller;

}
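The hook above wraps a Callable in RocketMQ's ShutdownHookThread, which adapts it to the Thread that Runtime.addShutdownHook expects. As we understand it, ShutdownHookThread also makes sure the callback runs only once. The class below is a simplified, hypothetical stand-in (OnceShutdownHook is our name, not RocketMQ's) that shows the idea.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical shutdown-hook wrapper: runs the cleanup callback at most once and reports its duration.
public class OnceShutdownHook extends Thread {
    private final AtomicBoolean done = new AtomicBoolean(false);
    private final Callable<?> callback;

    public OnceShutdownHook(Callable<?> callback) {
        super("ShutdownHook");
        this.callback = callback;
    }

    @Override
    public void run() {
        // compareAndSet lets only the first caller through, even if run() is invoked again.
        if (!done.compareAndSet(false, true)) {
            return;
        }
        long begin = System.currentTimeMillis();
        try {
            callback.call();
        } catch (Exception e) {
            System.err.println("shutdown callback failed: " + e);
        }
        System.out.println("shutdown hook finished in " + (System.currentTimeMillis() - begin) + " ms");
    }

    public boolean hasRun() {
        return done.get();
    }

    public static void main(String[] args) {
        // The JVM starts registered hooks on normal exit or on SIGINT/SIGTERM.
        Runtime.getRuntime().addShutdownHook(new OnceShutdownHook(() -> {
            System.out.println("releasing Netty server and thread pools...");
            return null;
        }));
        System.out.println("server running; returning from main triggers the hook");
    }
}
```

Shutdown hooks are best-effort: they do not run when the process is killed with `kill -9` (SIGKILL).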

NamesrvStartup.start() itself contains little core logic. Apart from calling controller.start() at the end, it only registers a shutdown hook that releases the thread pools and Netty server resources when the JVM exits. The core initialization is encapsulated in:

boolean initResult = controller.initialize();

Let's focus on the implementation of NamesrvController.initialize():

public boolean initialize() {

this.kvConfigManager.load();

// Build the Netty server (it is only constructed here, not started)

this.remotingServer = new NettyRemotingServer(this.nettyServerConfig, this.brokerHousekeepingService);

// Thread pool (serverWorkerThreads threads) that executes the request processor

this.remotingExecutor = Executors.newFixedThreadPool(nettyServerConfig.getServerWorkerThreads(), new ThreadFactoryImpl("RemotingExecutorThread_"));

// Register the request processor with the Netty server; it runs on remotingExecutor

this.registerProcessor();

// Every 10 s (after a 5 s initial delay), scan for and remove inactive brokers

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

NamesrvController.this.routeInfoManager.scanNotActiveBroker();

}

}, 5, 10, TimeUnit.SECONDS);

// Print the KV configuration every 10 minutes (after a 1 minute initial delay)

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

NamesrvController.this.kvConfigManager.printAllPeriodically();

}

}, 1, 10, TimeUnit.MINUTES);

if (TlsSystemConfig.tlsMode != TlsMode.DISABLED) {

// Register a listener to reload SslContext

try {

fileWatchService = new FileWatchService(

new String[] {

TlsSystemConfig.tlsServerCertPath,

TlsSystemConfig.tlsServerKeyPath,

TlsSystemConfig.tlsServerTrustCertPath

},

new FileWatchService.Listener() {

boolean certChanged, keyChanged = false;

@Override

public void onChanged(String path) {

if (path.equals(TlsSystemConfig.tlsServerTrustCertPath)) {

log.info("The trust certificate changed, reload the ssl context");

reloadServerSslContext();

}

if (path.equals(TlsSystemConfig.tlsServerCertPath)) {

certChanged = true;

}

if (path.equals(TlsSystemConfig.tlsServerKeyPath)) {

keyChanged = true;

}

if (certChanged && keyChanged) {

log.info("The certificate and private key changed, reload the ssl context");

certChanged = keyChanged = false;

reloadServerSslContext();

}

}

private void reloadServerSslContext() {

((NettyRemotingServer) remotingServer).loadSslContext();

}

});

} catch (Exception e) {

log.warn("FileWatchService created error, can't load the certificate dynamically");

}

}

return true;

}
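The FileWatchService listener at the end of initialize() encodes a small rule: a change to the trust certificate reloads the SSL context immediately, while the server certificate and private key must both change before a reload happens, which avoids loading a new certificate with a mismatched key in the middle of a rotation. The hypothetical class below (CertReloadPolicy is our name) isolates that rule so it can be exercised without Netty; the `reload` Runnable stands in for `loadSslContext()`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, Netty-free model of the reload rule in the FileWatchService listener.
public class CertReloadPolicy {
    private final String certPath;
    private final String keyPath;
    private final String trustCertPath;
    private final Runnable reload; // stand-in for ((NettyRemotingServer) remotingServer).loadSslContext()
    private boolean certChanged = false;
    private boolean keyChanged = false;

    public CertReloadPolicy(String certPath, String keyPath, String trustCertPath, Runnable reload) {
        this.certPath = certPath;
        this.keyPath = keyPath;
        this.trustCertPath = trustCertPath;
        this.reload = reload;
    }

    // Invoked by the file watcher with the path of a file whose content changed.
    public void onChanged(String path) {
        if (path.equals(trustCertPath)) {
            reload.run(); // new trusted CA certificate: reload immediately
        }
        if (path.equals(certPath)) {
            certChanged = true;
        }
        if (path.equals(keyPath)) {
            keyChanged = true;
        }
        // Reload only after both the certificate and the private key have changed,
        // then reset the flags for the next rotation.
        if (certChanged && keyChanged) {
            certChanged = false;
            keyChanged = false;
            reload.run();
        }
    }

    public static void main(String[] args) {
        List<String> reloads = new ArrayList<>();
        CertReloadPolicy policy = new CertReloadPolicy("server.pem", "server.key", "ca.pem",
                () -> reloads.add("reload"));
        policy.onChanged("server.pem"); // certificate alone: no reload yet
        policy.onChanged("server.key"); // key as well: reload
        policy.onChanged("ca.pem");     // trust certificate: reload
        System.out.println(reloads.size()); // prints 2
    }
}
```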

Scheduled task thread pool

The easiest part of the code above to understand is the scheduled tasks it starts, such as the one that periodically prints the NameServer's KV configuration (grouped by namespace).

Another scheduled task scans for inactive brokers and removes those that have gone offline:
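Both tasks share the controller's scheduledExecutorService and use scheduleAtFixedRate(task, initialDelay, period, unit): the broker scan first runs after 5 s and then every 10 s, the KV print after 1 minute and then every 10 minutes. A minimal sketch of the same pattern, assuming a single-threaded scheduler; the task bodies, the class name PeriodicTasksDemo and the shortened millisecond periods are placeholders for illustration.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class PeriodicTasksDemo {

    // Wraps a task so one failure does not stop the schedule: once a run of a
    // scheduleAtFixedRate task throws, all of its later runs are suppressed.
    public static Runnable safely(Runnable body) {
        return () -> {
            try {
                body.run();
            } catch (Throwable t) {
                System.err.println("scheduled task failed: " + t);
            }
        };
    }

    // Schedules two periodic tasks on one scheduler thread and returns
    // {scanRuns, printRuns} once the scan task has run three times (or the wait times out).
    public static int[] runDemo(long maxWaitMillis) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "DemoScheduledThread");
            t.setDaemon(true); // do not keep the JVM alive
            return t;
        });
        AtomicInteger scans = new AtomicInteger();
        AtomicInteger prints = new AtomicInteger();
        CountDownLatch threeScans = new CountDownLatch(3);

        // stands in for scanNotActiveBroker(): initial delay 50 ms, then every 100 ms
        scheduler.scheduleAtFixedRate(safely(() -> {
            scans.incrementAndGet();
            threeScans.countDown();
        }), 50, 100, TimeUnit.MILLISECONDS);

        // stands in for printAllPeriodically(): initial delay 10 ms, then every 1000 ms
        scheduler.scheduleAtFixedRate(safely(prints::incrementAndGet), 10, 1000, TimeUnit.MILLISECONDS);

        try {
            threeScans.await(maxWaitMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        scheduler.shutdownNow();
        return new int[] {scans.get(), prints.get()};
    }

    public static void main(String[] args) {
        int[] runs = runDemo(5000);
        System.out.println("scan runs: " + runs[0] + ", print runs: " + runs[1]);
    }
}
```

The tasks quoted in initialize() call straight through without a try/catch; wrapping them as in `safely` is a common safeguard because an uncaught exception would silently end that periodic task.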

NamesrvController.this.routeInfoManager.scanNotActiveBroker();

public void scanNotActiveBroker() {

Iterator<Entry<String, BrokerLiveInfo>> it = this.brokerLiveTable.entrySet().iterator();

while (it.hasNext()) {

Entry<String, BrokerLiveInfo> next = it.next();

long last = next.getValue().getLastUpdateTimestamp();

// More than BROKER_CHANNEL_EXPIRED_TIME (120 s) has passed since the last heartbeat

if ((last + BROKER_CHANNEL_EXPIRED_TIME) < System.currentTimeMillis()) {

RemotingUtil.closeChannel(next.getValue().getChannel());

it.remove();

log.warn("The broker channel expired, {} {}ms", next.getKey(), BROKER_CHANNEL_EXPIRED_TIME);

// Clean up the routing information of the offline broker

this.onChannelDestroy(next.getKey(), next.getValue().getChannel());

}

}

}
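scanNotActiveBroker() is a heartbeat-timeout check: each broker heartbeat refreshes lastUpdateTimestamp, and any entry not refreshed within BROKER_CHANNEL_EXPIRED_TIME (1000 * 60 * 2 ms) is closed and removed. Below is a stripped-down, hypothetical version without Netty channels (BrokerLivenessTable is our name). The current time is passed in so the expiry logic can be exercised deterministically, and a ConcurrentHashMap is assumed so that heartbeats and scans could come from different threads.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical liveness table modelled on RouteInfoManager.brokerLiveTable (channels omitted).
public class BrokerLivenessTable {
    static final long EXPIRED_MILLIS = 1000 * 60 * 2; // 120 s, same value as BROKER_CHANNEL_EXPIRED_TIME

    // broker address -> timestamp of the last heartbeat (ms)
    private final Map<String, Long> lastHeartbeat = new ConcurrentHashMap<>();

    // Called whenever a broker registers or sends a heartbeat.
    public void onHeartbeat(String brokerAddr, long nowMillis) {
        lastHeartbeat.put(brokerAddr, nowMillis);
    }

    // Removes brokers whose last heartbeat is older than EXPIRED_MILLIS and returns them,
    // so the caller can close channels and clean up routes (onChannelDestroy in RocketMQ).
    public List<String> scanNotActive(long nowMillis) {
        List<String> expired = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = lastHeartbeat.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> e = it.next();
            if (e.getValue() + EXPIRED_MILLIS < nowMillis) {
                expired.add(e.getKey());
                it.remove(); // removing through the iterator is safe while iterating
            }
        }
        return expired;
    }

    public boolean isAlive(String brokerAddr) {
        return lastHeartbeat.containsKey(brokerAddr);
    }

    public static void main(String[] args) {
        BrokerLivenessTable table = new BrokerLivenessTable();
        table.onHeartbeat("10.0.0.1:10911", 0);
        table.onHeartbeat("10.0.0.2:10911", 60_000);
        // At t = 150 s the first broker has been silent for 150 s (> 120 s), the second for 90 s.
        System.out.println(table.scanNotActive(150_000));     // prints [10.0.0.1:10911]
        System.out.println(table.isAlive("10.0.0.2:10911"));  // prints true
    }
}
```

With a 10 s scan interval and a 120 s expiry, a crashed broker is dropped from routing roughly 120 to 130 s after its last heartbeat.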

Constructing the Netty thread pools

Next, let's focus on the Netty-related code:

this.remotingServer = new NettyRemotingServer(this.nettyServerConfig, this.brokerHousekeepingService);

public NettyRemotingServer(final NettyServerConfig nettyServerConfig,

final ChannelEventListener channelEventListener) {

super(nettyServerConfig.getServerOnewaySemaphoreValue(), nettyServerConfig.getServerAsyncSemaphoreValue());

// Netty's helper class for bootstrapping the server

this.serverBootstrap = new ServerBootstrap();

this.nettyServerConfig = nettyServerConfig;

this.channelEventListener = channelEventListener;

int publicThreadNums = nettyServerConfig.getServerCallbackExecutorThreads();

if (publicThreadNums <= 0) {

publicThreadNums = 4;

}

this.publicExecutor = Executors.newFixedThreadPool(publicThreadNums, new ThreadFactory() {

private AtomicInteger threadIndex = new AtomicInteger(0);

@Override

public Thread newThread(Runnable r) {

return new Thread(r, "NettyServerPublicExecutor_" + this.threadIndex.incrementAndGet());

}

});

if (useEpoll()) {

this.eventLoopGroupBoss = new EpollEventLoopGroup(1, new ThreadFactory() {

private AtomicInteger threadIndex = new AtomicInteger(0);

@Override

public Thread newThread(Runnable r) {

return new Thread(r, String.format("NettyEPOLLBoss_%d", this.threadIndex.incrementAndGet()));

}

});

this.eventLoopGroupSelector = new EpollEventLoopGroup(nettyServerConfig.getServerSelectorThreads(), new ThreadFactory() {

private AtomicInteger threadIndex = new AtomicInteger(0);

private int threadTotal = nettyServerConfig.getServerSelectorThreads();

@Override

public Thread newThread(Runnable r) {

return new Thread(r, String.format("NettyServerEPOLLSelector_%d_%d", threadTotal, this.threadIndex.incrementAndGet()));

}

});

} else {

// Java NIO transport: a single boss thread accepts connections; the selector threads handle socket I/O

this.eventLoopGroupBoss = new NioEventLoopGroup(1, new ThreadFactory() {

private AtomicInteger threadIndex = new AtomicInteger(0);

@Override

public Thread newThread(Runnable r) {

return new Thread(r, String.format("NettyNIOBoss_%d", this.threadIndex.incrementAndGet()));

}

});

this.eventLoopGroupSelector = new NioEventLoopGroup(nettyServerConfig.getServerSelectorThreads(), new ThreadFactory() {

private AtomicInteger threadIndex = new AtomicInteger(0);

private int threadTotal = nettyServerConfig.getServerSelectorThreads();

@Override

public Thread newThread(Runnable r) {

return new Thread(r, String.format("NettyServerNIOSelector_%d_%d", threadTotal, this.threadIndex.incrementAndGet()));

}

});

}

loadSslContext();

}

This constructor mainly creates Netty's thread pools. It also decides whether to use the standard Java NIO transport (NioEventLoopGroup) or Netty's native Linux epoll transport (EpollEventLoopGroup).

Note that only thread pools are created here; the Netty server itself is not started yet.
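Every pool created in this constructor repeats one idiom: an anonymous ThreadFactory with an AtomicInteger counter, so that threads appear in thread dumps with readable names such as NettyServerNIOSelector_3_1. A reusable equivalent might look like this (NamedThreadFactory is a hypothetical name; the controller's own pools use RocketMQ's ThreadFactoryImpl, as seen in initialize()):

```java
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical reusable version of the anonymous ThreadFactory classes in NettyRemotingServer.
public class NamedThreadFactory implements ThreadFactory {
    private final String prefix;
    private final AtomicInteger threadIndex = new AtomicInteger(0);

    public NamedThreadFactory(String prefix) {
        this.prefix = prefix;
    }

    @Override
    public Thread newThread(Runnable r) {
        // incrementAndGet() is atomic, so threads created concurrently never share a name
        return new Thread(r, prefix + threadIndex.incrementAndGet());
    }

    public static void main(String[] args) {
        int selectorThreads = 3;
        ThreadFactory selector = new NamedThreadFactory(
                String.format("NettyServerNIOSelector_%d_", selectorThreads));
        System.out.println(selector.newThread(() -> { }).getName()); // prints NettyServerNIOSelector_3_1
        System.out.println(selector.newThread(() -> { }).getName()); // prints NettyServerNIOSelector_3_2
    }
}
```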

Start the Netty server

After all this preparation, the Netty server is finally started here. NamesrvController.start() delegates to NettyRemotingServer.start():

public void start() throws Exception {

this.remotingServer.start();

if (this.fileWatchService != null) {

this.fileWatchService.start();

}

}

@Override

public void start() {

this.defaultEventExecutorGroup = new DefaultEventExecutorGroup(

nettyServerConfig.getServerWorkerThreads(),

new ThreadFactory() {

private AtomicInteger threadIndex = new AtomicInteger(0);

@Override

public Thread newThread(Runnable r) {

return new Thread(r, "NettyServerCodecThread_" + this.threadIndex.incrementAndGet());

}

});

prepareSharableHandlers();

ServerBootstrap childHandler =

this.serverBootstrap.group(this.eventLoopGroupBoss, this.eventLoopGroupSelector)

.channel(useEpoll() ? EpollServerSocketChannel.class : NioServerSocketChannel.class)

.option(ChannelOption.SO_BACKLOG, 1024)// Maximum length of the queue of connections that have completed the TCP three-way handshake but have not yet been accepted

.option(ChannelOption.SO_REUSEADDR, true)// Allow rebinding the port while old connections are still in TIME_WAIT

.option(ChannelOption.SO_KEEPALIVE, false)// TCP keep-alive is disabled; idle connections are handled by the IdleStateHandler in the pipeline instead

.childOption(ChannelOption.TCP_NODELAY, true)// Disable Nagle's algorithm so small packets are sent immediately (lower latency). Setting it to false lets the OS batch small writes to reduce the number of packets, at the cost of latency; the socket-level default is false

.childOption(ChannelOption.SO_SNDBUF, nettyServerConfig.getServerSocketSndBufSize())// Size of the kernel socket send buffer

.childOption(ChannelOption.SO_RCVBUF, nettyServerConfig.getServerSocketRcvBufSize())// Size of the kernel socket receive buffer

.localAddress(new InetSocketAddress(this.nettyServerConfig.getListenPort()))

.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

public void initChannel(SocketChannel ch) throws Exception {

ch.pipeline()

.addLast(defaultEventExecutorGroup, HANDSHAKE_HANDLER_NAME, handshakeHandler)

.addLast(defaultEventExecutorGroup,

encoder,

new NettyDecoder(),

new IdleStateHandler(0, 0, nettyServerConfig.getServerChannelMaxIdleTimeSeconds()),

connectionManageHandler,

serverHandler

);

}

});

if (nettyServerConfig.isServerPooledByteBufAllocatorEnable()) {

childHandler.childOption(ChannelOption.ALLOCATOR, PooledByteBufAllocator.DEFAULT);

}

try {

ChannelFuture sync = this.serverBootstrap.bind().sync();

InetSocketAddress addr = (InetSocketAddress) sync.channel().localAddress();

this.port = addr.getPort();

} catch (InterruptedException e1) {

throw new RuntimeException("this.serverBootstrap.bind().sync() InterruptedException", e1);

}

if (this.channelEventListener != null) {

this.nettyEventExecutor.start();

}

// Every second (after a 3 s initial delay), scan responseTable and expire requests that have timed out

this.timer.scheduleAtFixedRate(new TimerTask() {

@Override

public void run() {

try {

NettyRemotingServer.this.scanResponseTable();

} catch (Throwable e) {

log.error("scanResponseTable exception", e);

}

}

}, 1000 * 3, 1000);

}
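The timer at the end of NettyRemotingServer.start() calls scanResponseTable() once per second after a 3 s delay. Each outgoing request is kept in a response table under its request ID (the "opaque" field) together with its start time and timeout; the scan removes entries whose deadline has passed and completes them as timed out, so callers are not left waiting forever. As far as we can tell RocketMQ also adds about 1 s of slack before expiring a request. The sketch below is a simplified, hypothetical model with the clock passed in (ResponseTableDemo and PendingRequest are our names), not RocketMQ's ResponseFuture code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Consumer;

// Hypothetical model of a response table keyed by request id ("opaque"), with timeout scanning.
public class ResponseTableDemo {

    public static final class PendingRequest {
        final int opaque;
        final long beginMillis;
        final long timeoutMillis;
        final Consumer<String> callback; // receives the response body, or "timeout"

        public PendingRequest(int opaque, long beginMillis, long timeoutMillis, Consumer<String> callback) {
            this.opaque = opaque;
            this.beginMillis = beginMillis;
            this.timeoutMillis = timeoutMillis;
            this.callback = callback;
        }
    }

    private final Map<Integer, PendingRequest> responseTable = new ConcurrentHashMap<>();
    private final long graceMillis; // extra slack before a request counts as timed out

    public ResponseTableDemo(long graceMillis) {
        this.graceMillis = graceMillis;
    }

    // Remember an outgoing request until its response (or timeout) arrives.
    public void send(PendingRequest req) {
        responseTable.put(req.opaque, req);
    }

    // A response with this id arrived. remove() is atomic, so only one of
    // onResponse and scanResponseTable can ever complete a given request.
    public void onResponse(int opaque, String body) {
        PendingRequest req = responseTable.remove(opaque);
        if (req != null) {
            req.callback.accept(body);
        }
    }

    // Periodic scan: expire requests whose deadline plus grace has passed; returns how many.
    public int scanResponseTable(long nowMillis) {
        List<PendingRequest> expired = new ArrayList<>();
        for (Map.Entry<Integer, PendingRequest> e : responseTable.entrySet()) {
            PendingRequest req = e.getValue();
            if (req.beginMillis + req.timeoutMillis + graceMillis <= nowMillis
                    && responseTable.remove(e.getKey(), req)) {
                expired.add(req);
            }
        }
        // Fire callbacks after the scan so slow callbacks do not hold up the iteration.
        for (PendingRequest req : expired) {
            req.callback.accept("timeout");
        }
        return expired.size();
    }

    public static void main(String[] args) {
        List<String> results = new ArrayList<>();
        ResponseTableDemo table = new ResponseTableDemo(1000);
        table.send(new PendingRequest(1, 0, 3000, r -> results.add("1:" + r)));
        table.send(new PendingRequest(2, 0, 3000, r -> results.add("2:" + r)));
        table.onResponse(2, "ok");      // request 2 answered in time
        table.scanResponseTable(3500);  // 0 + 3000 + 1000 > 3500: nothing expires yet
        table.scanResponseTable(4000);  // request 1 expires now
        System.out.println(results);    // prints [2:ok, 1:timeout]
    }
}
```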

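The code above is all Netty setup: it installs the codec handlers (encoder and NettyDecoder), a handshake handler that detects TLS connections, an IdleStateHandler for idle-connection detection, and the connection-management and request-dispatch handlers; applies the socket options; and starts a timer that expires timed-out requests.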
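On the decoding side, TCP delivers a byte stream with no message boundaries, so the server needs a framing rule. NettyDecoder, which to our understanding builds on Netty's LengthFieldBasedFrameDecoder, splits the stream using a 4-byte length prefix. The plain-Java sketch below illustrates length-prefixed framing with ByteBuffer; it is a simplified, hypothetical format (LengthFraming and the MAX_FRAME limit are our own), not RocketMQ's exact wire layout.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical length-prefixed framing: [4-byte big-endian length][payload].
public class LengthFraming {
    static final int MAX_FRAME = 16 * 1024 * 1024; // illustrative limit against corrupt lengths

    private ByteBuffer pending = ByteBuffer.allocate(0); // received bytes not yet framed

    public static byte[] encode(String payload) {
        byte[] body = payload.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(4 + body.length).putInt(body.length).put(body).array();
    }

    // Feed one chunk as read from the socket; returns all frames completed by it.
    public List<String> feed(byte[] chunk) {
        ByteBuffer merged = ByteBuffer.allocate(pending.remaining() + chunk.length);
        merged.put(pending).put(chunk).flip();
        List<String> frames = new ArrayList<>();
        while (merged.remaining() >= 4) {
            int length = merged.getInt(merged.position()); // peek at the length field
            if (length < 0 || length > MAX_FRAME) {
                throw new IllegalStateException("bad frame length " + length);
            }
            if (merged.remaining() < 4 + length) {
                break; // incomplete frame: wait for more bytes
            }
            merged.getInt(); // consume the length field
            byte[] body = new byte[length];
            merged.get(body);
            frames.add(new String(body, StandardCharsets.UTF_8));
        }
        pending = merged.slice(); // carry the partial frame over to the next call
        return frames;
    }

    public static void main(String[] args) {
        byte[] a = encode("hello");
        byte[] b = encode("world");
        byte[] stream = new byte[a.length + b.length];
        System.arraycopy(a, 0, stream, 0, a.length);
        System.arraycopy(b, 0, stream, a.length, b.length);

        LengthFraming decoder = new LengthFraming();
        // TCP may split the stream anywhere; here the cut falls inside the first frame.
        System.out.println(decoder.feed(Arrays.copyOfRange(stream, 0, 7)));             // prints []
        System.out.println(decoder.feed(Arrays.copyOfRange(stream, 7, stream.length))); // prints [hello, world]
    }
}
```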

Summary

At this point the NameServer has started: it listens on port 9876 (the default set in createNamesrvController) and waits for brokers to register. We did not dig into the Netty-specific code above, since it is standard Netty setup rather than RocketMQ logic. We now have a general picture of how the NameServer starts. Later, we will look at how a broker registers with the NameServer and how the two communicate.