In the previous sections we covered how RocketMQ is used at the application level and walked through parts of its source code. Message storage, however, was only touched on briefly, without digging into its internal mechanisms. RocketMQ stores messages in files, and intuition says file storage should be slow. Yet RocketMQ produces and consumes messages with millisecond latency. Is file storage really as slow as we assume? This section analyses the memory-mapping mechanism RocketMQ uses to store messages.

<!--more-->

1. Overview

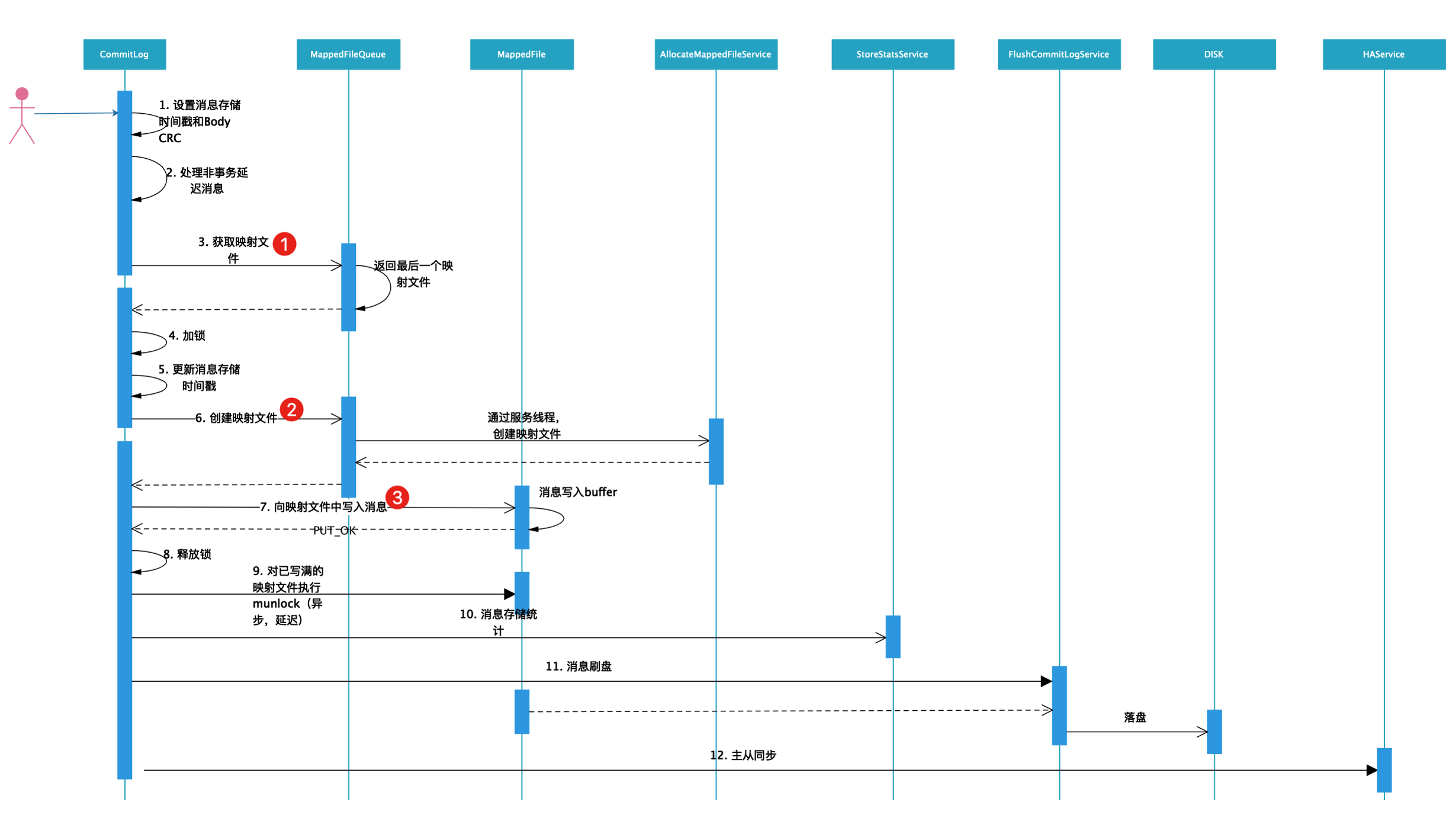

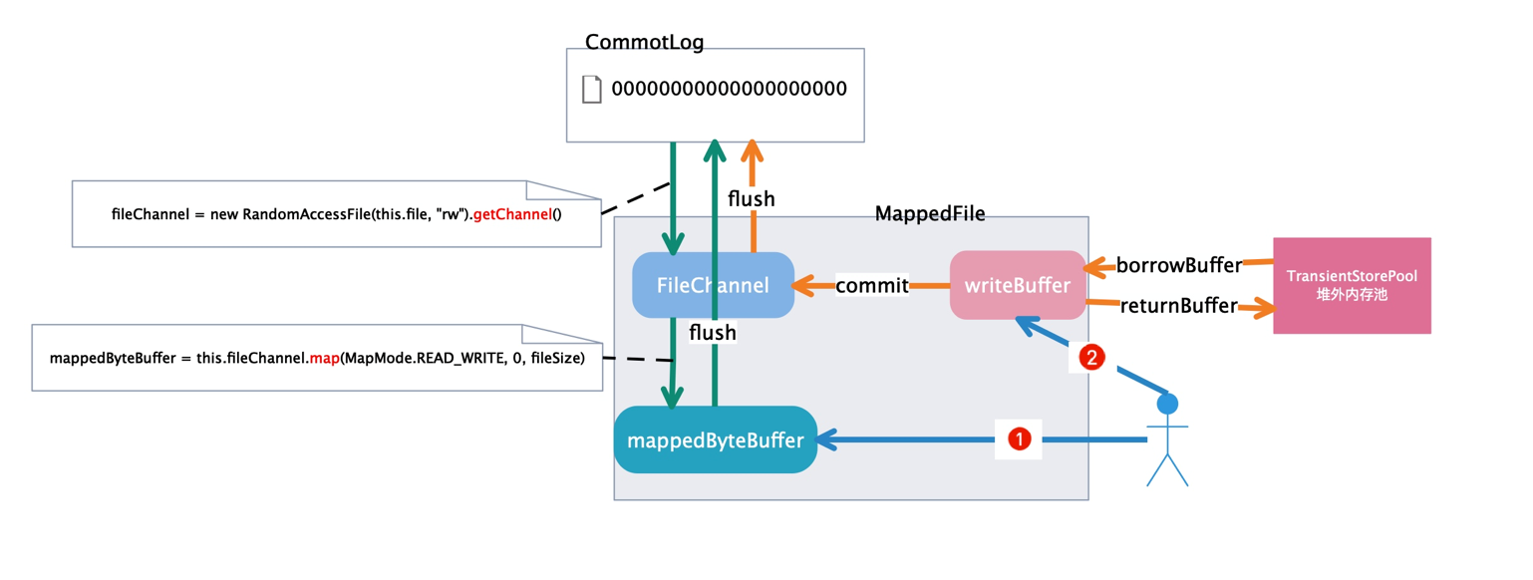

The figures above show the message-sending flow.

The various business flows of message sending were covered in the earlier article on RocketMQ transaction messages. In this chapter we analyze only the CommitLog.putMessage() method, whose flow is shown in the figure above.

```java
public PutMessageResult putMessage(final MessageExtBrokerInner msg) {
    // Set the time at which the message is stored
    msg.setStoreTimestamp(System.currentTimeMillis());
    // Set the CRC checksum of the message body
    msg.setBodyCRC(UtilAll.crc32(msg.getBody()));
    AppendMessageResult result = null;

    StoreStatsService storeStatsService = this.defaultMessageStore.getStoreStatsService();

    String topic = msg.getTopic();
    int queueId = msg.getQueueId();

    final int tranType = MessageSysFlag.getTransactionValue(msg.getSysFlag());
    if (tranType == MessageSysFlag.TRANSACTION_NOT_TYPE
        || tranType == MessageSysFlag.TRANSACTION_COMMIT_TYPE) {
        // Delayed delivery: is the delay level greater than 0?
        if (msg.getDelayTimeLevel() > 0) {
            // Clamp the delay level to the maximum supported level
            if (msg.getDelayTimeLevel() > this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel()) {
                msg.setDelayTimeLevel(this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel());
            }

            // Redirect the message to topic SCHEDULE_TOPIC_XXXX
            topic = ScheduleMessageService.SCHEDULE_TOPIC;
            // Use the queue ID that corresponds to the delay level
            queueId = ScheduleMessageService.delayLevel2QueueId(msg.getDelayTimeLevel());

            // Keep the original topic and queue ID in the message properties
            MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_TOPIC, msg.getTopic());
            MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_QUEUE_ID, String.valueOf(msg.getQueueId()));
            msg.setPropertiesString(MessageDecoder.messageProperties2String(msg.getProperties()));

            msg.setTopic(topic);
            msg.setQueueId(queueId);
        }
    }

    long eclipseTimeInLock = 0;
    MappedFile unlockMappedFile = null;
    // Get the last mapped file; mappedFileQueue can be seen as the set of files in the CommitLog directory
    MappedFile mappedFile = this.mappedFileQueue.getLastMappedFile();

    // Acquire putMessageLock so that messages are appended to the CommitLog serially
    putMessageLock.lock(); // spin or ReentrantLock, depending on store config
    try {
        long beginLockTimestamp = this.defaultMessageStore.getSystemClock().now();
        this.beginTimeInLock = beginLockTimestamp;

        // Set the storage time of the message
        msg.setStoreTimestamp(beginLockTimestamp);

        // mappedFile == null: no CommitLog file exists yet, so the first message creates one.
        // mappedFile.isFull(): the current file is full and a new CommitLog file is needed.
        if (null == mappedFile || mappedFile.isFull()) {
            // The argument 0 is the start offset
            mappedFile = this.mappedFileQueue.getLastMappedFile(0); // Mark: NewFile may be cause noise
        }
        // Still null: creating the CommitLog file failed, e.g. insufficient disk space or permissions
        if (null == mappedFile) {
            log.error("create mapped file1 error, topic: " + msg.getTopic() + " clientAddr: " + msg.getBornHostString());
            beginTimeInLock = 0;
            return new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, null);
        }

        // Append the message to the mapped file
        result = mappedFile.appendMessage(msg, this.appendMessageCallback);
        switch (result.getStatus()) {
            case PUT_OK:
                break;
            case END_OF_FILE:
                unlockMappedFile = mappedFile;
                // Create a new file and write the message again
                mappedFile = this.mappedFileQueue.getLastMappedFile(0);
                if (null == mappedFile) {
                    // XXX: warn and notify me
                    log.error("create mapped file2 error, topic: " + msg.getTopic() + " clientAddr: " + msg.getBornHostString());
                    beginTimeInLock = 0;
                    return new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, result);
                }
                result = mappedFile.appendMessage(msg, this.appendMessageCallback);
                break;
            case MESSAGE_SIZE_EXCEEDED:
            case PROPERTIES_SIZE_EXCEEDED:
                beginTimeInLock = 0;
                return new PutMessageResult(PutMessageStatus.MESSAGE_ILLEGAL, result);
            case UNKNOWN_ERROR:
                beginTimeInLock = 0;
                return new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result);
            default:
                beginTimeInLock = 0;
                return new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result);
        }

        eclipseTimeInLock = this.defaultMessageStore.getSystemClock().now() - beginLockTimestamp;
        beginTimeInLock = 0;
    } finally {
        // Release the lock
        putMessageLock.unlock();
    }

    if (eclipseTimeInLock > 500) {
        log.warn("[NOTIFYME]putMessage in lock cost time(ms)={}, bodyLength={} AppendMessageResult={}", eclipseTimeInLock, msg.getBody().length, result);
    }

    if (null != unlockMappedFile && this.defaultMessageStore.getMessageStoreConfig().isWarmMapedFileEnable()) {
        this.defaultMessageStore.unlockMappedFile(unlockMappedFile);
    }

    PutMessageResult putMessageResult = new PutMessageResult(PutMessageStatus.PUT_OK, result);

    // Statistics
    storeStatsService.getSinglePutMessageTopicTimesTotal(msg.getTopic()).incrementAndGet();
    storeStatsService.getSinglePutMessageTopicSizeTotal(topic).addAndGet(result.getWroteBytes());

    // Flush to disk
    handleDiskFlush(result, putMessageResult, msg);
    // Master-slave replication
    handleHA(result, putMessageResult, msg);

    return putMessageResult;
}
```
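The locking and roll-over logic of putMessage() can be illustrated with a small, self-contained model. The sketch below is hypothetical: byte arrays stand in for MappedFile objects, a ReentrantLock stands in for putMessageLock, and the class name MiniCommitLog and its 64-byte file size are made up for illustration. It shows the END_OF_FILE path: when a message plus the 8-byte end-of-file blank does not fit, the tail of the current file is given up and the message is written at the start of a new file, whose starting offset is the previous one plus the file size.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical model of CommitLog.putMessage(): byte arrays stand in for MappedFile objects.
class MiniCommitLog {
    static final int FILE_SIZE = 64;                // tiny file size, for illustration only
    static final int END_FILE_MIN_BLANK_LENGTH = 8; // space always kept free at the end of a file

    static final class Segment {
        final long fileFromOffset;  // starting offset, like MappedFile.fileFromOffset
        final byte[] data = new byte[FILE_SIZE];
        int wrotePosition;          // write pointer inside this file
        Segment(long fileFromOffset) { this.fileFromOffset = fileFromOffset; }
    }

    private final List<Segment> segments = new ArrayList<>();
    private final ReentrantLock putMessageLock = new ReentrantLock();

    /** Appends msg and returns its physical offset across all files. */
    long putMessage(byte[] msg) {
        if (msg.length + END_FILE_MIN_BLANK_LENGTH > FILE_SIZE) {
            throw new IllegalArgumentException("message too large"); // cf. MESSAGE_SIZE_EXCEEDED
        }
        putMessageLock.lock(); // appends are serialized, as with putMessageLock
        try {
            Segment seg = segments.isEmpty() ? null : segments.get(segments.size() - 1);
            if (seg == null || seg.wrotePosition == FILE_SIZE) {
                seg = createNext(seg); // no file yet, or the last file is full
            }
            if (msg.length + END_FILE_MIN_BLANK_LENGTH > FILE_SIZE - seg.wrotePosition) {
                // END_OF_FILE: give up the tail of this file (the real code writes a blank
                // record with BLANK_MAGIC_CODE there) and retry in a new file
                seg.wrotePosition = FILE_SIZE;
                seg = createNext(seg);
            }
            long physicalOffset = seg.fileFromOffset + seg.wrotePosition;
            System.arraycopy(msg, 0, seg.data, seg.wrotePosition, msg.length);
            seg.wrotePosition += msg.length;
            return physicalOffset;
        } finally {
            putMessageLock.unlock();
        }
    }

    private Segment createNext(Segment last) {
        long from = (last == null) ? 0 : last.fileFromOffset + FILE_SIZE;
        Segment s = new Segment(from);
        segments.add(s);
        return s;
    }

    int fileCount() { return segments.size(); }
}
```

Because every append happens under one lock, physical offsets are handed out strictly in order; this is also why the real code keeps the critical section short and logs a warning when the lock is held for more than 500 ms.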

This chapter focuses on the following three points; the disk-flush mechanism is covered in a later chapter.

- Get MappedFile

- Create MappedFile

- Write messages in mapping files

2. Getting MappedFile

2.1. The relationship between MappedFile and Commitlog

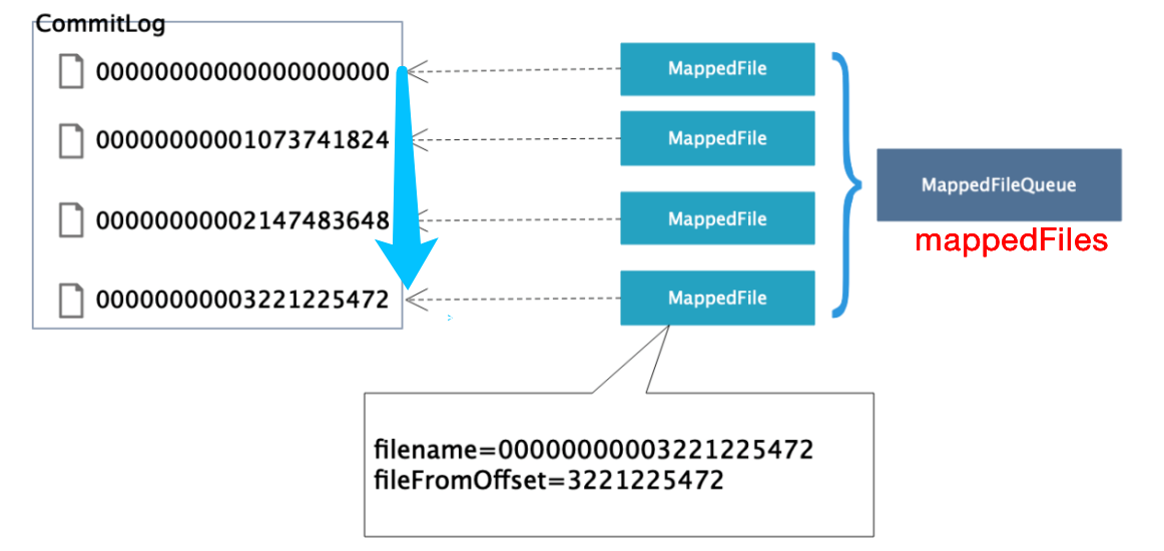

Messages are stored in CommitLog files. How do these files relate to the memory-mapped MappedFile objects?

The diagram shows the relationship clearly: each MappedFile object corresponds to one CommitLog file. Let's look at the source code to see where this correspondence is established.

When the Broker starts, it creates a BrokerController and initializes it. During initialization, DefaultMessageStore.load() loads the CommitLog files and the consume queue files:

```java
public boolean load() {
    // ... code omitted ...
    // Load the CommitLog files
    result = result && this.commitLog.load();
    // Load the consume queue files
    result = result && this.loadConsumeQueue();
    // ... code omitted ...
}
```

commitLog.load() in turn calls mappedFileQueue.load():

```java
public boolean load() {
    // Message storage path
    File dir = new File(this.storePath);
    File[] files = dir.listFiles();
    if (files != null) {
        // Ascending order
        Arrays.sort(files);
        for (File file : files) {
            if (file.length() != this.mappedFileSize) {
                log.warn(file + "\t" + file.length() + " length not matched message store config value, ignore it");
                return true;
            }

            try {
                MappedFile mappedFile = new MappedFile(file.getPath(), mappedFileSize);
                // Write pointer of the file
                mappedFile.setWrotePosition(this.mappedFileSize);
                // Flush pointer: data before it has been persisted to disk
                mappedFile.setFlushedPosition(this.mappedFileSize);
                // Commit pointer of the file
                mappedFile.setCommittedPosition(this.mappedFileSize);
                // Add to the MappedFile collection
                this.mappedFiles.add(mappedFile);
                log.info("load " + file.getPath() + " OK");
            } catch (IOException e) {
                log.error("load file " + file + " error", e);
                return false;
            }
        }
    }

    return true;
}
```

This method is where each CommitLog file is bound to its own MappedFile object.

It sorts the CommitLog files in the storage directory in ascending order, loops over them, creates a MappedFile object for each one, sets its write, flush and commit pointers, and adds it to the mappedFiles collection. The constructor new MappedFile() calls MappedFile.init():
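The ascending sort in load() works because CommitLog file names encode the file's starting offset. The sketch below assumes names are the offset left-padded with zeros to 20 digits, which, as far as we know, is how UtilAll.offset2FileName formats them; the class and helper names are hypothetical. With fixed-width zero padding, lexicographic order of the names equals numeric order of the offsets, and Long.parseLong(name) recovers fileFromOffset just as MappedFile.init() does.

```java
import java.util.Arrays;

// Hypothetical helpers illustrating the CommitLog file-naming convention
// (assumption: 20-digit zero-padded starting offset).
class CommitLogNames {
    static String offsetToFileName(long offset) {
        return String.format("%020d", offset); // e.g. 1 GiB -> "00000000001073741824"
    }

    static long fileNameToOffset(String name) {
        return Long.parseLong(name); // what MappedFile.init() stores in fileFromOffset
    }

    static String[] sortedNames(long... offsets) {
        String[] names = new String[offsets.length];
        for (int i = 0; i < offsets.length; i++) {
            names[i] = offsetToFileName(offsets[i]);
        }
        Arrays.sort(names); // zero padding makes string order equal numeric order
        return names;
    }
}
```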

```java
private void init(final String fileName, final int fileSize) throws IOException {
    this.fileName = fileName;
    this.fileSize = fileSize;
    this.file = new File(fileName);
    // The file name is the file's starting offset
    this.fileFromOffset = Long.parseLong(this.file.getName());
    boolean ok = false;

    ensureDirOK(this.file.getParent());

    try {
        // Create a read-write NIO file channel
        this.fileChannel = new RandomAccessFile(this.file, "rw").getChannel();
        // Map the file into memory
        this.mappedByteBuffer = this.fileChannel.map(MapMode.READ_WRITE, 0, fileSize);
        TOTAL_MAPPED_VIRTUAL_MEMORY.addAndGet(fileSize);
        TOTAL_MAPPED_FILES.incrementAndGet();
        ok = true;
    } catch (FileNotFoundException e) {
        log.error("create file channel " + this.fileName + " Failed. ", e);
        throw e;
    } catch (IOException e) {
        log.error("map file " + this.fileName + " Failed. ", e);
        throw e;
    } finally {
        if (!ok && this.fileChannel != null) {
            this.fileChannel.close();
        }
    }
}
```

The key step is fileChannel.map(MapMode.READ_WRITE, 0, fileSize), which maps the entire file into memory.
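The core of MappedFile.init() is plain Java NIO. A minimal sketch of the same idea, assuming a scratch file in the temp directory (MmapDemo is a hypothetical name): map a file READ_WRITE, write through the MappedByteBuffer as if it were ordinary memory, and call force() to flush the dirty pages.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;

// Hypothetical demo of memory-mapped file I/O with FileChannel.map().
class MmapDemo {
    /** Maps fileSize bytes of the file, writes payload at offset 0, then reads it back. */
    static byte[] writeAndReadBack(Path file, int fileSize, byte[] payload) {
        try (RandomAccessFile raf = new RandomAccessFile(file.toFile(), "rw")) {
            FileChannel channel = raf.getChannel();
            // READ_WRITE mapping past the current end of file extends the file to fileSize
            MappedByteBuffer buf = channel.map(FileChannel.MapMode.READ_WRITE, 0, fileSize);
            buf.put(payload); // a plain memory write that lands in the page cache
            buf.force();      // ask the OS to write dirty pages to the storage device
            byte[] out = new byte[payload.length];
            buf.position(0);
            buf.get(out);
            return out;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Because a READ_WRITE mapping larger than the file extends it, a newly created CommitLog file already has its full configured size as soon as it is mapped.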

So far we have seen how each CommitLog file maps to a MappedFile, how the MappedFile objects are added to mappedFileQueue, and how a MappedFile is initialized.

2.2. Get the last mappedFile in mappedFileQueue

As shown above, CommitLog files and MappedFile objects correspond one to one. To store a message, we need the last CommitLog file that is not yet full, that is, the last MappedFile object.

```java
public MappedFile getLastMappedFile() {
    MappedFile mappedFileLast = null;

    while (!this.mappedFiles.isEmpty()) {
        try {
            mappedFileLast = this.mappedFiles.get(this.mappedFiles.size() - 1);
            break;
        } catch (IndexOutOfBoundsException e) {
            //continue;
        } catch (Exception e) {
            log.error("getLastMappedFile has exception.", e);
            break;
        }
    }

    return mappedFileLast;
}
```

This method simply returns the last MappedFile object in the mappedFiles collection, which was populated during initialization as described in 2.1. The retry on IndexOutOfBoundsException covers the case where another thread removes a file from the list between the size() and get() calls.

3. Create MappedFile

When no MappedFile exists yet, or the last one is full, a new one must be created by calling this.mappedFileQueue.getLastMappedFile(0), which delegates to getLastMappedFile(startOffset, needCreate) with needCreate set to true:

```java
public MappedFile getLastMappedFile(final long startOffset, boolean needCreate) {
    // Start offset of the mapped file to be created
    long createOffset = -1;
    // Get the last mapped file; a new one is created if it is null or full
    MappedFile mappedFileLast = getLastMappedFile();

    // No mapped file yet: create one
    if (mappedFileLast == null) {
        // Compute the start offset of the new file:
        // if startOffset < mappedFileSize, the start offset is 0;
        // otherwise it is startOffset rounded down to a multiple of mappedFileSize
        createOffset = startOffset - (startOffset % this.mappedFileSize);
    }

    // The last mapped file is full: create a new one
    if (mappedFileLast != null && mappedFileLast.isFull()) {
        // New start offset = start offset of the last file + mapped file size (CommitLog file size)
        createOffset = mappedFileLast.getFileFromOffset() + this.mappedFileSize;
    }

    // Create the new mapped file
    if (createOffset != -1 && needCreate) {
        // Build the CommitLog file names
        String nextFilePath = this.storePath + File.separator + UtilAll.offset2FileName(createOffset);
        String nextNextFilePath = this.storePath + File.separator
            + UtilAll.offset2FileName(createOffset + this.mappedFileSize);
        MappedFile mappedFile = null;

        // Prefer AllocateMappedFileService, which pre-allocates files for better performance;
        // if the service is not available, create the mapped file directly with new
        if (this.allocateMappedFileService != null) {
            mappedFile = this.allocateMappedFileService.putRequestAndReturnMappedFile(nextFilePath,
                nextNextFilePath, this.mappedFileSize);
        } else {
            try {
                mappedFile = new MappedFile(nextFilePath, this.mappedFileSize);
            } catch (IOException e) {
                log.error("create mappedFile exception", e);
            }
        }

        if (mappedFile != null) {
            if (this.mappedFiles.isEmpty()) {
                mappedFile.setFirstCreateInQueue(true);
            }
            this.mappedFiles.add(mappedFile);
        }

        return mappedFile;
    }

    return mappedFileLast;
}
```
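The start-offset arithmetic above can be isolated into a pure function. This is a hypothetical sketch, not RocketMQ code, and it uses a negative lastFromOffset to mean "no mapped file yet". With a 1 GiB file size (1073741824 bytes): an empty queue with startOffset 0 gives 0, an empty queue with startOffset 1500000000 rounds down to 1073741824, and a full last file starting at 1073741824 gives 2147483648 for the next file.

```java
// Hypothetical restatement of the createOffset computation in getLastMappedFile(startOffset, needCreate).
class CreateOffsetCalc {
    /**
     * @param lastFromOffset starting offset of the last mapped file, or a negative value if none exists
     * @return the starting offset of the file to create, or -1 if no file needs to be created
     */
    static long createOffset(long startOffset, long lastFromOffset, boolean lastIsFull, int mappedFileSize) {
        if (lastFromOffset < 0) {
            // No file yet: round startOffset down to a multiple of the file size
            return startOffset - (startOffset % mappedFileSize);
        }
        if (lastIsFull) {
            // The next file starts right after the last one
            return lastFromOffset + mappedFileSize;
        }
        return -1; // the last file still has room
    }
}
```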

allocateMappedFileService.putRequestAndReturnMappedFile() creates the MappedFile through the AllocateMappedFileService service class.

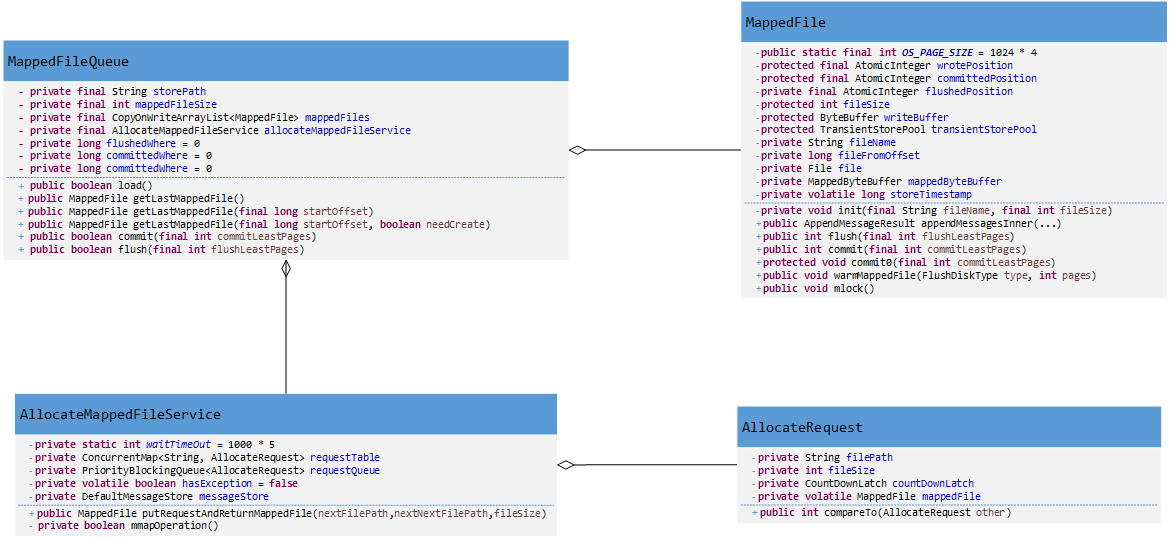

AllocateMappedFileService is the core class for creating MappedFile. Let's analyze this class.

| Field | Type | Description |
|---|---|---|
| waitTimeOut | int | Timeout when waiting for a mapped file to be created; default 5 seconds |
| requestTable | `ConcurrentMap<String, AllocateRequest>` | All allocation requests currently pending; the key is filePath and the value is the request. Once a request has been processed successfully (its mapped file has been retrieved), it is removed from requestTable. |
| requestQueue | `PriorityBlockingQueue<AllocateRequest>` | Allocation request queue (note: a priority queue). Requests are taken from it and the mapped file is created according to each request. |
| hasException | boolean | Whether an exception has occurred |
| messageStore | DefaultMessageStore | The owning message store, used to read the store config and the TransientStorePool |

Its core method, putRequestAndReturnMappedFile():

```java
public MappedFile putRequestAndReturnMappedFile(String nextFilePath, String nextNextFilePath, int fileSize) {
    // Two requests can be submitted by default
    int canSubmitRequests = 2;
    // TransientStorePool is used only when transientStorePoolEnable is true, the flush mode is
    // ASYNC_FLUSH, and the broker is not a SLAVE
    if (this.messageStore.getMessageStoreConfig().isTransientStorePoolEnable()) {
        // With the fast-fail strategy, the number of requests that may be submitted is the number of
        // buffers remaining in TransientStorePool minus the requests already waiting in requestQueue
        if (this.messageStore.getMessageStoreConfig().isFastFailIfNoBufferInStorePool()
            && BrokerRole.SLAVE != this.messageStore.getMessageStoreConfig().getBrokerRole()) { //if broker is slave, don't fast fail even no buffer in pool
            canSubmitRequests = this.messageStore.getTransientStorePool().remainBufferNumbs() - this.requestQueue.size();
        }
    }

    AllocateRequest nextReq = new AllocateRequest(nextFilePath, fileSize);
    // putIfAbsent returns non-null if requestTable already holds a request for this path (it is already queued)
    boolean nextPutOK = this.requestTable.putIfAbsent(nextFilePath, nextReq) == null;

    // The path was not queued yet
    if (nextPutOK) {
        // Fail fast if no buffers remain
        if (canSubmitRequests <= 0) {
            log.warn("[NOTIFYME]TransientStorePool is not enough, so create mapped file error, " +
                "RequestQueueSize : {}, StorePoolSize: {}", this.requestQueue.size(), this.messageStore.getTransientStorePool().remainBufferNumbs());
            this.requestTable.remove(nextFilePath);
            return null;
        }
        // Insert the request into the priority queue
        boolean offerOK = this.requestQueue.offer(nextReq);
        if (!offerOK) {
            log.warn("never expected here, add a request to preallocate queue failed");
        }
        // One fewer request can be submitted
        canSubmitRequests--;
    }

    // Request for the second (pre-allocated) mapped file
    AllocateRequest nextNextReq = new AllocateRequest(nextNextFilePath, fileSize);
    boolean nextNextPutOK = this.requestTable.putIfAbsent(nextNextFilePath, nextNextReq) == null;
    if (nextNextPutOK) {
        // Check the remaining buffers again
        if (canSubmitRequests <= 0) {
            log.warn("[NOTIFYME]TransientStorePool is not enough, so skip preallocate mapped file, " +
                "RequestQueueSize : {}, StorePoolSize: {}", this.requestQueue.size(), this.messageStore.getTransientStorePool().remainBufferNumbs());
            this.requestTable.remove(nextNextFilePath);
        } else {
            // Insert the request into the priority queue
            boolean offerOK = this.requestQueue.offer(nextNextReq);
            if (!offerOK) {
                log.warn("never expected here, add a request to preallocate queue failed");
            }
        }
    }

    if (hasException) {
        log.warn(this.getServiceName() + " service has exception. so return null");
        return null;
    }

    AllocateRequest result = this.requestTable.get(nextFilePath);
    try {
        if (result != null) {
            // Wait for the allocator thread to create the file
            boolean waitOK = result.getCountDownLatch().await(waitTimeOut, TimeUnit.MILLISECONDS);
            if (!waitOK) {
                log.warn("create mmap timeout " + result.getFilePath() + " " + result.getFileSize());
                return null;
            } else {
                this.requestTable.remove(nextFilePath);
                return result.getMappedFile();
            }
        } else {
            log.error("find preallocate mmap failed, this never happen");
        }
    } catch (InterruptedException e) {
        log.warn(this.getServiceName() + " service has exception. ", e);
    }

    return null;
}
```
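The handshake between putRequestAndReturnMappedFile() and the allocator thread boils down to a request table, a queue and a CountDownLatch. The sketch below is a simplified, hypothetical model: a String stands in for the created MappedFile, a FIFO LinkedBlockingQueue replaces the priority queue, and the TransientStorePool buffer accounting is left out. The caller submits two requests but waits only for the first; the second is created in the background, so the next call usually finds its file already mapped.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical model of AllocateMappedFileService's request/latch handshake.
class AllocateHandshake {
    static final class Request {
        final String path;
        final CountDownLatch latch = new CountDownLatch(1);
        volatile String result; // stands in for the created MappedFile
        Request(String path) { this.path = path; }
    }

    private final ConcurrentMap<String, Request> table = new ConcurrentHashMap<>();
    private final BlockingQueue<Request> queue = new LinkedBlockingQueue<>();

    /** Submits requests for next and nextNext, then waits only for next. */
    String putRequestAndWait(String next, String nextNext, long timeoutMs) {
        for (String path : new String[] {next, nextNext}) {
            Request r = new Request(path);
            if (table.putIfAbsent(path, r) == null) {
                queue.offer(r); // enqueue only paths that were not already requested
            }
        }
        Request req = table.get(next);
        if (req == null) return null;
        try {
            if (!req.latch.await(timeoutMs, TimeUnit.MILLISECONDS)) return null; // timed out
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
        table.remove(next);
        return req.result;
    }

    /** One iteration of the allocator loop (compare mmapOperation()). */
    void serveOne() throws InterruptedException {
        Request r = queue.take();
        if (table.get(r.path) != r) return; // expired or replaced request
        r.result = "mapped:" + r.path;      // the real code creates (and may warm up) a MappedFile
        r.latch.countDown();
    }

    Thread startAllocator() {
        Thread t = new Thread(() -> {
            try {
                while (true) {
                    serveOne();
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // shutdown
            }
        }, "allocate-sketch");
        t.setDaemon(true);
        t.start();
        return t;
    }
}
```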

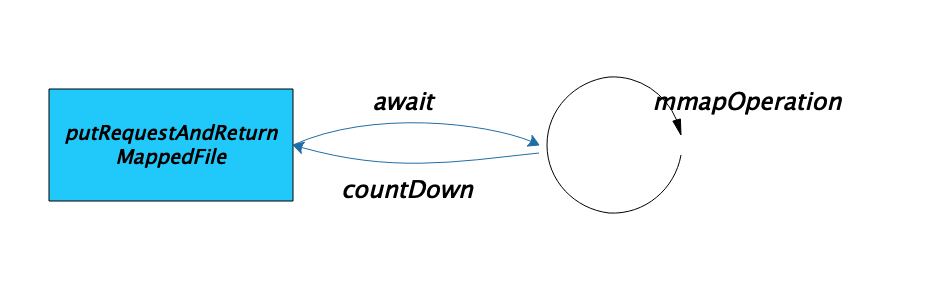

The creation requests are inserted into both requestQueue and requestTable. requestQueue is a priority queue of AllocateRequest objects, and AllocateRequest implements compareTo() to define their priority. Each call submits two requests (the file needed now and the next one, which is pre-created), but the caller waits only for the result of the first; the other request stays in the queue and is created in the background.
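A priority order of this kind can be sketched with PriorityBlockingQueue. The ordering below is an assumption modelled on RocketMQ's AllocateRequest.compareTo(): larger files come first, and for equal sizes the request whose file name encodes the smaller starting offset comes first. AllocReq and takeOrder are hypothetical names.

```java
import java.util.concurrent.PriorityBlockingQueue;

// Hypothetical allocation request ordered by file size (descending), then by starting offset (ascending).
class AllocReq implements Comparable<AllocReq> {
    final String fileName; // the file name is the starting offset
    final int fileSize;

    AllocReq(String fileName, int fileSize) {
        this.fileName = fileName;
        this.fileSize = fileSize;
    }

    @Override
    public int compareTo(AllocReq other) {
        if (fileSize != other.fileSize) {
            return Integer.compare(other.fileSize, fileSize); // bigger files first
        }
        return Long.compare(Long.parseLong(fileName), Long.parseLong(other.fileName)); // lower offset first
    }

    /** Returns the file names in the order a PriorityBlockingQueue hands them out. */
    static String takeOrder(AllocReq... reqs) {
        PriorityBlockingQueue<AllocReq> q = new PriorityBlockingQueue<>();
        for (AllocReq r : reqs) {
            q.offer(r);
        }
        StringBuilder sb = new StringBuilder();
        while (!q.isEmpty()) {
            sb.append(q.poll().fileName).append(' ');
        }
        return sb.toString().trim();
    }
}
```

Ordering by offset means that when requests pile up, the file with the lowest offset, which is the one a writer needs next, is created first.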

AllocateMappedFileService is a service thread; its run() method repeatedly calls the core method mmapOperation():

```java
private boolean mmapOperation() {
    boolean isSuccess = false;
    AllocateRequest req = null;
    try {
        // Take the head of the queue, waiting if necessary until a request is available
        req = this.requestQueue.take();
        AllocateRequest expectedRequest = this.requestTable.get(req.getFilePath());
        if (null == expectedRequest) {
            log.warn("this mmap request expired, maybe cause timeout " + req.getFilePath() + " "
                + req.getFileSize());
            return true;
        }
        if (expectedRequest != req) {
            log.warn("never expected here, maybe cause timeout " + req.getFilePath() + " "
                + req.getFileSize() + ", req:" + req + ", expectedRequest:" + expectedRequest);
            return true;
        }

        if (req.getMappedFile() == null) {
            long beginTime = System.currentTimeMillis();

            MappedFile mappedFile;
            // Is TransientStorePool enabled?
            if (messageStore.getMessageStoreConfig().isTransientStorePoolEnable()) {
                try {
                    mappedFile = ServiceLoader.load(MappedFile.class).iterator().next();
                    mappedFile.init(req.getFilePath(), req.getFileSize(), messageStore.getTransientStorePool());
                } catch (RuntimeException e) {
                    // Fall back to the default implementation
                    log.warn("Use default implementation.");
                    mappedFile = new MappedFile(req.getFilePath(), req.getFileSize(), messageStore.getTransientStorePool());
                }
            } else {
                mappedFile = new MappedFile(req.getFilePath(), req.getFileSize());
            }

            long eclipseTime = UtilAll.computeEclipseTimeMilliseconds(beginTime);
            if (eclipseTime > 10) {
                int queueSize = this.requestQueue.size();
                log.warn("create mappedFile spent time(ms) " + eclipseTime + " queue size " + queueSize
                    + " " + req.getFilePath() + " " + req.getFileSize());
            }

            // pre write mappedFile
            if (mappedFile.getFileSize() >= this.messageStore.getMessageStoreConfig().getMapedFileSizeCommitLog()
                && this.messageStore.getMessageStoreConfig().isWarmMapedFileEnable()) {
                // Warm up the MappedFile
                mappedFile.warmMappedFile(this.messageStore.getMessageStoreConfig().getFlushDiskType(),
                    this.messageStore.getMessageStoreConfig().getFlushLeastPagesWhenWarmMapedFile());
            }

            req.setMappedFile(mappedFile);
            this.hasException = false;
            isSuccess = true;
        }
    } catch (InterruptedException e) {
        log.warn(this.getServiceName() + " interrupted, possibly by shutdown.");
        this.hasException = true;
        return false;
    } catch (IOException e) {
        log.warn(this.getServiceName() + " service has exception. ", e);
        this.hasException = true;
        if (null != req) {
            requestQueue.offer(req);
            try {
                Thread.sleep(1);
            } catch (InterruptedException ignored) {
            }
        }
    } finally {
        if (req != null && isSuccess)
            req.getCountDownLatch().countDown();
    }
    return true;
}
```

For a detailed analysis of mmap, see the article "Principle analysis of Linux memory mapping mmap" (Article 1).

There are two ways to create a MappedFile object:

Method 1: mappedFile = new MappedFile(req.getFilePath(), req.getFileSize())

```java
public MappedFile(final String fileName, final int fileSize) throws IOException {
    init(fileName, fileSize);
}
// init(fileName, fileSize) is the same method shown in section 2.1: it opens a read-write
// FileChannel and maps the whole file with fileChannel.map(MapMode.READ_WRITE, 0, fileSize).
```

Method 2: mappedFile = ServiceLoader.load(MappedFile.class).iterator().next(), followed by mappedFile.init(req.getFilePath(), req.getFileSize(), messageStore.getTransientStorePool())

```java
// Used when transientStorePoolEnable is true
public void init(final String fileName, final int fileSize,
    final TransientStorePool transientStorePool) throws IOException {
    init(fileName, fileSize);
    // Borrow an off-heap buffer from the pool to serve as this MappedFile's writeBuffer
    this.writeBuffer = transientStorePool.borrowBuffer();
    this.transientStorePool = transientStorePool;
}
```

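It also calls init(fileName, fileSize), so the file is memory-mapped in both cases; this variant additionally borrows a writeBuffer from TransientStorePool.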

How does data handling differ between TransientStorePool and a plain MappedFile? From its code, TransientStorePool allocates off-heap memory up front with ByteBuffer.allocateDirect(), and message data is written into this pre-allocated memory. With asynchronous flushing, a background thread later writes the contents of that memory to the file.
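A pool of pre-allocated direct buffers like this can be sketched as follows. This is a simplified stand-in for TransientStorePool, not its actual code: it assumes an ArrayDeque guarded by synchronized methods and leaves out the other details of the real pool. remainBufferNumbs() mirrors the name of the method used by the fast-fail check in putRequestAndReturnMappedFile().

```java
import java.nio.ByteBuffer;
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical simplified pool of pre-allocated off-heap buffers.
class SimpleStorePool {
    private final Deque<ByteBuffer> available = new ArrayDeque<>();

    SimpleStorePool(int poolSize, int bufferSize) {
        // Allocate all direct buffers once, up front
        for (int i = 0; i < poolSize; i++) {
            available.offer(ByteBuffer.allocateDirect(bufferSize));
        }
    }

    /** Lends a buffer to a MappedFile; returns null when the pool is exhausted. */
    synchronized ByteBuffer borrowBuffer() {
        return available.pollFirst();
    }

    /** Takes a buffer back and resets its position and limit for reuse. */
    synchronized void returnBuffer(ByteBuffer buffer) {
        buffer.clear();
        available.offerFirst(buffer);
    }

    synchronized int remainBufferNumbs() {
        return available.size();
    }
}
```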

So what is the difference from using MappedByteBuffer directly? Writing to a MappedByteBuffer actually writes data into the page cache of the file, whereas writing to the TransientStorePool buffer is a pure memory operation. Message writes are therefore faster, CommitLog.putMessageLock is held for less time, and message throughput increases. The drawback of the TransientStorePool scheme is that more messages can be lost if the broker process crashes abnormally, because data that is still only in the off-heap buffer has not yet reached the page cache.
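The write path with a separate write buffer can be sketched in two steps: appends copy bytes into an off-heap buffer, and a later commit copies the uncommitted range into the file through a FileChannel. The class name, method names and the synchronous commit call are assumptions for illustration; in RocketMQ the commit is done by a background service thread. Until commit runs, data written this way exists only in process memory, which is the extra loss window mentioned above.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical sketch: write into a direct buffer, then commit [committed, wrote) to a FileChannel.
class WriteBufferCommit {
    private final ByteBuffer writeBuffer;
    private int wrotePosition;     // end of data appended to the buffer
    private int committedPosition; // end of data already copied to the file

    WriteBufferCommit(int size) {
        writeBuffer = ByteBuffer.allocateDirect(size);
    }

    void append(byte[] msg) {
        ByteBuffer view = writeBuffer.slice(); // shares memory, has its own position
        view.position(wrotePosition);
        view.put(msg);                         // pure memory write
        wrotePosition += msg.length;
    }

    /** Copies uncommitted bytes into the channel; returns the number of bytes written. */
    int commit(FileChannel channel) throws IOException {
        if (wrotePosition == committedPosition) return 0;
        ByteBuffer view = writeBuffer.slice();
        view.position(committedPosition);
        view.limit(wrotePosition);
        channel.position(committedPosition);
        int written = 0;
        while (view.hasRemaining()) {
            written += channel.write(view);
        }
        committedPosition = wrotePosition;
        return written;
    }

    int commitToFile(Path path) {
        try (FileChannel ch = FileChannel.open(path, StandardOpenOption.CREATE, StandardOpenOption.WRITE)) {
            return commit(ch);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```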

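After the MappedFile object is created, it is warmed up when warmMapedFileEnable is set: warmMappedFile() writes (byte) 0 once per OS page, so that every page of the mapping is loaded into memory in advance, and finally calls mlock().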

```java
public void warmMappedFile(FlushDiskType type, int pages) {
    long beginTime = System.currentTimeMillis();
    // Create a new buffer that shares this buffer's content
    ByteBuffer byteBuffer = this.mappedByteBuffer.slice();
    // Position of the last flush
    int flush = 0;
    long time = System.currentTimeMillis();
    for (int i = 0, j = 0; i < this.fileSize; i += MappedFile.OS_PAGE_SIZE, j++) {
        byteBuffer.put(i, (byte) 0);
        // With synchronous flushing, force to disk every `pages` pages.
        // With pages = 4096 and 4 KiB pages, that is one flush every 16 MiB: 1 GiB / 16 MiB = 64 flushes.
        if (type == FlushDiskType.SYNC_FLUSH) {
            if ((i / OS_PAGE_SIZE) - (flush / OS_PAGE_SIZE) >= pages) {
                flush = i;
                mappedByteBuffer.force();
            }
        }

        // Every 1000 pages, yield with Thread.sleep(0) so this long loop does not hold up GC or other threads
        if (j % 1000 == 0) {
            log.info("j={}, costTime={}", j, System.currentTimeMillis() - time);
            time = System.currentTimeMillis();
            try {
                Thread.sleep(0);
            } catch (InterruptedException e) {
                log.error("Interrupted", e);
            }
        }
    }

    // force flush when prepare load finished
    if (type == FlushDiskType.SYNC_FLUSH) {
        log.info("mapped file warm-up done, force to disk, mappedFile={}, costTime={}",
            this.getFileName(), System.currentTimeMillis() - beginTime);
        // Force any changes to the buffer's content to be written to the storage device
        mappedByteBuffer.force();
    }
    log.info("mapped file warm-up done. mappedFile={}, costTime={}", this.getFileName(),
        System.currentTimeMillis() - beginTime);

    this.mlock();
}
```
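The warm-up loop touches one byte per OS page so that every page of the mapping is faulted in before messages arrive. The sketch below reproduces only the loop and the SYNC_FLUSH force schedule, assuming 4 KiB pages; WarmUpSketch is a hypothetical name, and it leaves out mlock() and the periodic Thread.sleep(0). For a 64-page file with pages = 16, force() runs at pages 16, 32 and 48 inside the loop, plus once at the end. With 1 GiB files and the 4096-page interval from the source comment, the same schedule gives 63 forces in the loop plus the final one, that is, one per 16 MiB.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;

// Hypothetical reproduction of the warm-up loop and its SYNC_FLUSH force schedule.
class WarmUpSketch {
    static final int OS_PAGE_SIZE = 4096; // assumed 4 KiB pages

    /** Touches one byte per page; returns how many force() calls were made inside the loop. */
    static int warm(Path file, int fileSize, boolean syncFlush, int pages) {
        try (RandomAccessFile raf = new RandomAccessFile(file.toFile(), "rw")) {
            MappedByteBuffer buf = raf.getChannel().map(FileChannel.MapMode.READ_WRITE, 0, fileSize);
            int flush = 0;
            int forces = 0;
            for (int i = 0; i < fileSize; i += OS_PAGE_SIZE) {
                buf.put(i, (byte) 0); // absolute put: faults the page in without moving position
                if (syncFlush && (i / OS_PAGE_SIZE) - (flush / OS_PAGE_SIZE) >= pages) {
                    flush = i;
                    buf.force();
                    forces++;
                }
            }
            if (syncFlush) {
                buf.force(); // final flush after the loop, as in warmMappedFile()
            }
            return forces;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```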

4. Writing messages in mapping files

The core of MappedFile.appendMessage() is MappedFile.appendMessagesInner():

```java
public AppendMessageResult appendMessagesInner(final MessageExt messageExt, final AppendMessageCallback cb) {
    assert messageExt != null;
    assert cb != null;

    // Current write pointer
    int currentPos = this.wrotePosition.get();

    if (currentPos < this.fileSize) {
        // Slice a view that shares memory with the MappedFile (writeBuffer when TransientStorePool is used)
        ByteBuffer byteBuffer = writeBuffer != null ? writeBuffer.slice() : this.mappedByteBuffer.slice();
        // Move to the write position
        byteBuffer.position(currentPos);
        AppendMessageResult result = null;
        if (messageExt instanceof MessageExtBrokerInner) {
            result = cb.doAppend(this.getFileFromOffset(), byteBuffer, this.fileSize - currentPos, (MessageExtBrokerInner) messageExt);
        } else if (messageExt instanceof MessageExtBatch) {
            result = cb.doAppend(this.getFileFromOffset(), byteBuffer, this.fileSize - currentPos, (MessageExtBatch) messageExt);
        } else {
            return new AppendMessageResult(AppendMessageStatus.UNKNOWN_ERROR);
        }
        this.wrotePosition.addAndGet(result.getWroteBytes());
        this.storeTimestamp = result.getStoreTimestamp();
        return result;
    }
    // The write pointer has reached the end of the file: log an error and return UNKNOWN_ERROR
    log.error("MappedFile.appendMessage return null, wrotePosition: {} fileSize: {}", currentPos, this.fileSize);
    return new AppendMessageResult(AppendMessageStatus.UNKNOWN_ERROR);
}
```
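Why slice() before writing? A slice shares the underlying memory but has its own position and limit, so moving the slice to the write position never disturbs the shared buffer's position. The hypothetical sketch below uses a 16-byte heap buffer in place of mappedByteBuffer or writeBuffer, and an AtomicInteger for wrotePosition as appendMessagesInner() does.

```java
import java.nio.ByteBuffer;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of slice-based appends with a separate write pointer.
class SliceAppend {
    final ByteBuffer shared = ByteBuffer.allocate(16); // stands in for mappedByteBuffer / writeBuffer
    final AtomicInteger wrotePosition = new AtomicInteger();

    /** Returns the position the bytes were written at, or -1 if they do not fit. */
    int append(byte[] data) {
        int currentPos = wrotePosition.get();
        if (currentPos + data.length > shared.capacity()) {
            return -1;
        }
        ByteBuffer view = shared.slice(); // same memory, independent position and limit
        view.position(currentPos);
        view.put(data);
        wrotePosition.addAndGet(data.length);
        return currentPos;
    }
}
```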

CommitLog.doAppend() (implemented in CommitLog's inner class DefaultAppendMessageCallback):

```java
public AppendMessageResult doAppend(final long fileFromOffset, final ByteBuffer byteBuffer, final int maxBlank,
    final MessageExtBrokerInner msgInner) {
    // STORETIMESTAMP + STOREHOSTADDRESS + OFFSET <br>

    // PHY OFFSET: physical write position
    long wroteOffset = fileFromOffset + byteBuffer.position();

    this.resetByteBuffer(hostHolder, 8);
    // Globally unique 16-byte message ID: 4-byte IP + 4-byte port + 8-byte physical offset
    String msgId = MessageDecoder.createMessageId(this.msgIdMemory, msgInner.getStoreHostBytes(hostHolder), wroteOffset);

    // Record ConsumeQueue information
    keyBuilder.setLength(0);
    keyBuilder.append(msgInner.getTopic());
    keyBuilder.append('-');
    keyBuilder.append(msgInner.getQueueId());
    String key = keyBuilder.toString();
    // CommitLog keeps the next consume-queue offset for each topic-queue pair
    Long queueOffset = CommitLog.this.topicQueueTable.get(key);
    // First message for this topic-queue: start at 0
    if (null == queueOffset) {
        queueOffset = 0L;
        CommitLog.this.topicQueueTable.put(key, queueOffset);
    }

    // Transaction messages that require special handling
    final int tranType = MessageSysFlag.getTransactionValue(msgInner.getSysFlag());
    switch (tranType) {
        // Prepared and Rollback message is not consumed, will not enter the
        // consumer queue
        case MessageSysFlag.TRANSACTION_PREPARED_TYPE:
        case MessageSysFlag.TRANSACTION_ROLLBACK_TYPE:
            queueOffset = 0L;
            break;
        case MessageSysFlag.TRANSACTION_NOT_TYPE:
        case MessageSysFlag.TRANSACTION_COMMIT_TYPE:
        default:
            break;
    }

    /**
     * Serialize message
     */
    final byte[] propertiesData =
        msgInner.getPropertiesString() == null ? null : msgInner.getPropertiesString().getBytes(MessageDecoder.CHARSET_UTF8);

    final int propertiesLength = propertiesData == null ? 0 : propertiesData.length;

    if (propertiesLength > Short.MAX_VALUE) {
        log.warn("putMessage message properties length too long. length={}", propertiesData.length);
        return new AppendMessageResult(AppendMessageStatus.PROPERTIES_SIZE_EXCEEDED);
    }

    final byte[] topicData = msgInner.getTopic().getBytes(MessageDecoder.CHARSET_UTF8);
    final int topicLength = topicData.length;

    final int bodyLength = msgInner.getBody() == null ? 0 : msgInner.getBody().length;

    // Total length = fixed-size header fields + body length + topic length + properties length
    final int msgLen = calMsgLength(bodyLength, topicLength, propertiesLength);

    // Exceeds the maximum message size
    if (msgLen > this.maxMessageSize) {
        CommitLog.log.warn("message size exceeded, msg total size: " + msgLen + ", msg body size: " + bodyLength
            + ", maxMessageSize: " + this.maxMessageSize);
        return new AppendMessageResult(AppendMessageStatus.MESSAGE_SIZE_EXCEEDED);
    }

    // Is there enough free space? Every CommitLog file keeps at least 8 bytes free at its end:
    // the first 4 bytes record the remaining space of the file and the last 4 bytes hold the
    // magic code CommitLog.BLANK_MAGIC_CODE
    if ((msgLen + END_FILE_MIN_BLANK_LENGTH) > maxBlank) {
        this.resetByteBuffer(this.msgStoreItemMemory, maxBlank);
        // 1 TOTALSIZE
        this.msgStoreItemMemory.putInt(maxBlank);
        // 2 MAGICCODE
        this.msgStoreItemMemory.putInt(CommitLog.BLANK_MAGIC_CODE);
        // 3 The remaining space may be any value
        // Here the length of the specially set maxBlank
        final long beginTimeMills = CommitLog.this.defaultMessageStore.now();
        byteBuffer.put(this.msgStoreItemMemory.array(), 0, maxBlank);
        return new AppendMessageResult(AppendMessageStatus.END_OF_FILE, wroteOffset, maxBlank, msgId, msgInner.getStoreTimestamp(),
            queueOffset, CommitLog.this.defaultMessageStore.now() - beginTimeMills);
    }

    // Initialization of storage space
    this.resetByteBuffer(msgStoreItemMemory, msgLen);
    // 1 TOTALSIZE: total length of the entry, 4 bytes
    this.msgStoreItemMemory.putInt(msgLen);
    // 2 MAGICCODE: magic code, 4 bytes
    this.msgStoreItemMemory.putInt(CommitLog.MESSAGE_MAGIC_CODE);
    // 3 BODYCRC: CRC of the message body, 4 bytes
    this.msgStoreItemMemory.putInt(msgInner.getBodyCRC());
    // 4 QUEUEID: consume queue ID, 4 bytes
    this.msgStoreItemMemory.putInt(msgInner.getQueueId());
    // 5 FLAG: message flag, 4 bytes
    this.msgStoreItemMemory.putInt(msgInner.getFlag());
    // 6 QUEUEOFFSET: offset in the consume queue, 8 bytes
    this.msgStoreItemMemory.putLong(queueOffset);
    // 7 PHYSICALOFFSET: offset in the CommitLog, 8 bytes
    this.msgStoreItemMemory.putLong(fileFromOffset + byteBuffer.position());
    // 8 SYSFLAG: system flag, 4 bytes
    this.msgStoreItemMemory.putInt(msgInner.getSysFlag());
    // 9 BORNTIMESTAMP: time the producer called the send API, 8 bytes
    this.msgStoreItemMemory.putLong(msgInner.getBornTimestamp());
    // 10 BORNHOST: producer IP and port, 8 bytes
    this.resetByteBuffer(hostHolder, 8);
    this.msgStoreItemMemory.put(msgInner.getBornHostBytes(hostHolder));
    // 11 STORETIMESTAMP: storage timestamp, 8 bytes
    this.msgStoreItemMemory.putLong(msgInner.getStoreTimestamp());
    // 12 STOREHOSTADDRESS: broker IP and port, 8 bytes
    this.resetByteBuffer(hostHolder, 8);
    this.msgStoreItemMemory.put(msgInner.getStoreHostBytes(hostHolder));
    //this.msgBatchMemory.put(msgInner.getStoreHostBytes());
    // 13 RECONSUMETIMES: retry count, 4 bytes
    this.msgStoreItemMemory.putInt(msgInner.getReconsumeTimes());
    // 14 Prepared Transaction Offset: physical offset of the prepared transaction message, 8 bytes
    this.msgStoreItemMemory.putLong(msgInner.getPreparedTransactionOffset());
    // 15 BODY: 4-byte body length followed by the body
    this.msgStoreItemMemory.putInt(bodyLength);
    if (bodyLength > 0)
        this.msgStoreItemMemory.put(msgInner.getBody());
    // 16 TOPIC: 1-byte topic length followed by the topic
    this.msgStoreItemMemory.put((byte) topicLength);
    this.msgStoreItemMemory.put(topicData);
    // 17 PROPERTIES: 2-byte properties length followed by the properties
    this.msgStoreItemMemory.putShort((short) propertiesLength);
    if (propertiesLength > 0)
        this.msgStoreItemMemory.put(propertiesData);

    final long beginTimeMills = CommitLog.this.defaultMessageStore.now();
    // Write the serialized message into the (mapped or write) buffer
    byteBuffer.put(this.msgStoreItemMemory.array(), 0, msgLen);

    AppendMessageResult result = new AppendMessageResult(AppendMessageStatus.PUT_OK, wroteOffset, msgLen, msgId,
        msgInner.getStoreTimestamp(), queueOffset, CommitLog.this.defaultMessageStore.now() - beginTimeMills);

    switch (tranType) {
        case MessageSysFlag.TRANSACTION_PREPARED_TYPE:
        case MessageSysFlag.TRANSACTION_ROLLBACK_TYPE:
            break;
        case MessageSysFlag.TRANSACTION_NOT_TYPE:
        case MessageSysFlag.TRANSACTION_COMMIT_TYPE:
            // The next update ConsumeQueue information
            CommitLog.this.topicQueueTable.put(key, ++queueOffset);
            break;
        default:
            break;
    }
    return result;
}
```
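The field list in doAppend() fixes the size of a stored message. Adding up the fixed-width fields as listed there (with 8-byte IPv4 host fields) gives a 91-byte header, so the total is 91 + body + topic + properties bytes. The sketch below is a reconstruction of calMsgLength() from those field sizes rather than a copy of it; for a 100-byte body on topic "TopicTest" (9 bytes) with no properties it gives 200 bytes. needsNewFile() restates the END_OF_FILE condition, msgLen + 8 > maxBlank.

```java
// Reconstruction of the stored-message length from the field sizes listed in doAppend().
class MsgLength {
    static final int END_FILE_MIN_BLANK_LENGTH = 4 + 4; // TOTALSIZE + MAGICCODE of the blank record

    static int calMsgLength(int bodyLength, int topicLength, int propertiesLength) {
        return 4                    // 1 TOTALSIZE
            + 4                     // 2 MAGICCODE
            + 4                     // 3 BODYCRC
            + 4                     // 4 QUEUEID
            + 4                     // 5 FLAG
            + 8                     // 6 QUEUEOFFSET
            + 8                     // 7 PHYSICALOFFSET
            + 4                     // 8 SYSFLAG
            + 8                     // 9 BORNTIMESTAMP
            + 8                     // 10 BORNHOST (4-byte IPv4 + 4-byte port)
            + 8                     // 11 STORETIMESTAMP
            + 8                     // 12 STOREHOSTADDRESS
            + 4                     // 13 RECONSUMETIMES
            + 8                     // 14 Prepared Transaction Offset
            + 4 + bodyLength        // 15 body length prefix + body
            + 1 + topicLength       // 16 topic length byte + topic
            + 2 + propertiesLength; // 17 properties length short + properties
    }

    /** True when doAppend() would return END_OF_FILE instead of writing the message. */
    static boolean needsNewFile(int msgLen, int maxBlank) {
        return msgLen + END_FILE_MIN_BLANK_LENGTH > maxBlank;
    }
}
```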

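doAppend() serializes the message into the buffer and returns an AppendMessageResult carrying the write status, the physical offset (wroteOffset), the number of bytes written, the message ID and the queue offset; appendMessagesInner() then advances the write pointer wrotePosition by the number of bytes written.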
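The message ID created at the start of doAppend() is 16 bytes: a 4-byte IP, a 4-byte port and the 8-byte physical offset. The hypothetical helper below builds that layout with a big-endian ByteBuffer and hex-encodes it (uppercase in this sketch); it is not MessageDecoder.createMessageId() itself. Because the physical offset is embedded in the ID, a broker can locate a message in its CommitLog directly from the ID.

```java
import java.nio.ByteBuffer;

// Hypothetical helper illustrating the 16-byte message ID layout.
class MsgIdSketch {
    static String createMessageId(byte[] ipv4, int port, long physicalOffset) {
        ByteBuffer buf = ByteBuffer.allocate(16); // big-endian by default
        buf.put(ipv4, 0, 4).putInt(port).putLong(physicalOffset);
        StringBuilder sb = new StringBuilder(32);
        for (byte b : buf.array()) {
            sb.append(String.format("%02X", b & 0xFF)); // two hex characters per byte
        }
        return sb.toString();
    }
}
```

For example, 127.0.0.1 on port 10911 (0x2A9F) at physical offset 0 yields "7F00000100002A9F0000000000000000".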