Restrictions on Flink DataStream Java Lambda expressions

Java 8 introduced several language features aimed at faster, clearer coding. The most important of them, the Lambda expression, opens the door to functional programming: a function can be implemented and passed around directly, without declaring an additional (anonymous) class. Flink supports Lambda expressions for all operators of the Java API, but when a Lambda expression involves Java generics, the type information must be declared explicitly.

The following two examples show how to use Lambda expressions and describe the restrictions on their use.

1. MapFunction and generics

The following example uses a Lambda expression to implement a simple map() function that emits a tuple of each input value and its square:

    env.fromElements(1, 2, 3)
        .map(i -> Tuple2.of(i, i * i))
        .print();

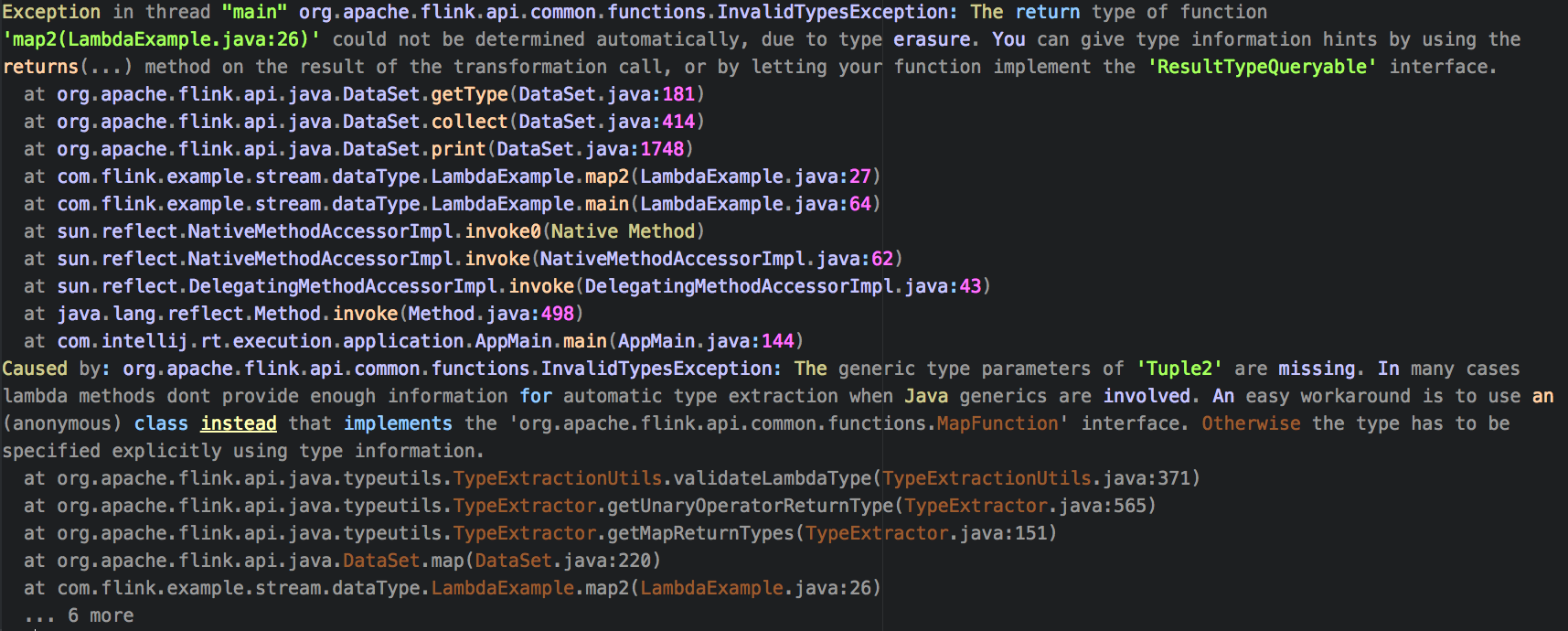

Because the map function is implemented as a Lambda expression, the following exception is thrown:

The error message shows that the exception is caused by type erasure: the Lambda uses generics, and the return type Tuple2<Integer, Integer> of map() is erased, leaving the signature Tuple2 map(Integer value). In many cases, Lambda methods cannot provide enough information for automatic type extraction when Java generics are involved. The error message also suggests solutions:

- Use an (anonymous) class that implements the 'org.apache.flink.api.common.functions.MapFunction' interface instead of a Lambda expression.

- Otherwise, specify the type explicitly using type information.
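To make the erasure concrete, here is a small sketch in plain Java, independent of Flink. The `Pair` class is a hypothetical stand-in for Flink's Tuple2, and `ErasureDemo` and `sameRuntimeClass` are names invented for this illustration. It shows that two differently parameterized instances share one runtime class, so the type arguments cannot be read back from the value itself.

```java
public class ErasureDemo {

    // Hypothetical stand-in for Flink's Tuple2; not part of any library.
    public static final class Pair<A, B> {
        public final A first;
        public final B second;

        public Pair(A first, B second) {
            this.first = first;
            this.second = second;
        }
    }

    // True when both objects have exactly the same runtime class.
    public static boolean sameRuntimeClass(Object a, Object b) {
        return a.getClass() == b.getClass();
    }

    public static void main(String[] args) {
        Pair<Integer, Integer> ints = new Pair<>(2, 4);
        Pair<String, String> strings = new Pair<>("a", "b");

        // Both instances share one runtime class: the type arguments are erased.
        System.out.println(sameRuntimeClass(ints, strings)); // prints "true"

        // Reflection only sees the declared type variable, not Integer or String.
        System.out.println(ints.getClass().getTypeParameters()[0].getName()); // prints "A"
    }
}
```

This is why a framework that needs the element types has to find them somewhere other than the returned object, for example in the class that implements the function.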

Let's look at each solution in detail.

1.1 Anonymous inner class

Use an anonymous inner class that implements the 'org.apache.flink.api.common.functions.MapFunction' interface instead of a Lambda expression:

    env.fromElements(1, 2, 3)
        .map(new MapFunction<Integer, Tuple2<Integer, Integer>>() {
            @Override
            public Tuple2<Integer, Integer> map(Integer i) throws Exception {
                return new Tuple2<Integer, Integer>(i, i * i);
            }
        })
        .print();

Why do anonymous inner classes work? An anonymous inner class is compiled into its own class file, and that class file records the generic interface it implements (MapFunction<Integer, Tuple2<Integer, Integer>>), so the type arguments can be read back through reflection. A Lambda expression is different: it is syntactic sugar, and the compiler emits no separate class for it. Instead, it emits an invokedynamic instruction, and the implementing class is only generated at runtime, when the expression is evaluated for the first time. That generated class does not carry the generic signature, so a Lambda expression loses more type information than an anonymous inner class.
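The difference can be observed with plain reflection. The sketch below does not use Flink; it uses `java.util.function.Function` in place of MapFunction, and `LambdaTypeInfoDemo`, `hasGenericInterfaceInfo`, `anonymous` and `lambda` are names made up for this illustration. It checks whether the runtime class of a function object records a parameterized interface.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;
import java.util.function.Function;

public class LambdaTypeInfoDemo {

    // True if the object's class records at least one parameterized interface,
    // for example Function<Integer, String> rather than the raw Function.
    public static boolean hasGenericInterfaceInfo(Object fn) {
        for (Type t : fn.getClass().getGenericInterfaces()) {
            if (t instanceof ParameterizedType) {
                return true;
            }
        }
        return false;
    }

    // Anonymous inner class: compiled to its own class file with a generic signature.
    public static Function<Integer, String> anonymous() {
        return new Function<Integer, String>() {
            @Override
            public String apply(Integer i) {
                return "n=" + i;
            }
        };
    }

    // Lambda: the implementing class is generated at runtime without that signature.
    public static Function<Integer, String> lambda() {
        return i -> "n=" + i;
    }

    public static void main(String[] args) {
        System.out.println(hasGenericInterfaceInfo(anonymous())); // prints "true"
        System.out.println(hasGenericInterfaceInfo(lambda()));    // prints "false"
    }
}
```

Both objects behave identically when called; only the metadata available to a type extractor differs.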

1.2 User-defined class

Use a custom class that implements the 'org.apache.flink.api.common.functions.MapFunction' interface instead of a Lambda expression:

    public static class MyMapFunction implements MapFunction<Integer, Tuple2<Integer, Integer>> {
        @Override
        public Tuple2<Integer, Integer> map(Integer i) {
            return Tuple2.of(i, i * i);
        }
    }

    env.fromElements(1, 2, 3)
        .map(new MyMapFunction())
        .print();

1.3 Using returns()

Declare the type information explicitly with the returns() method:

    env.fromElements(1, 2, 3)
        .map(i -> Tuple2.of(i, i * i))
        .returns(Types.TUPLE(Types.INT, Types.INT))
        .print();

2. FlatMap and generics

In the following example, we use a Lambda expression in the flatMap() function to split each string into multiple records:

    env.fromElements("1,2,3", "4,5")
        .flatMap((String input, Collector<String> out) -> {
            String[] params = input.split(",");
            for (String value : params) {
                out.collect(value);
            }
        })
        .print();

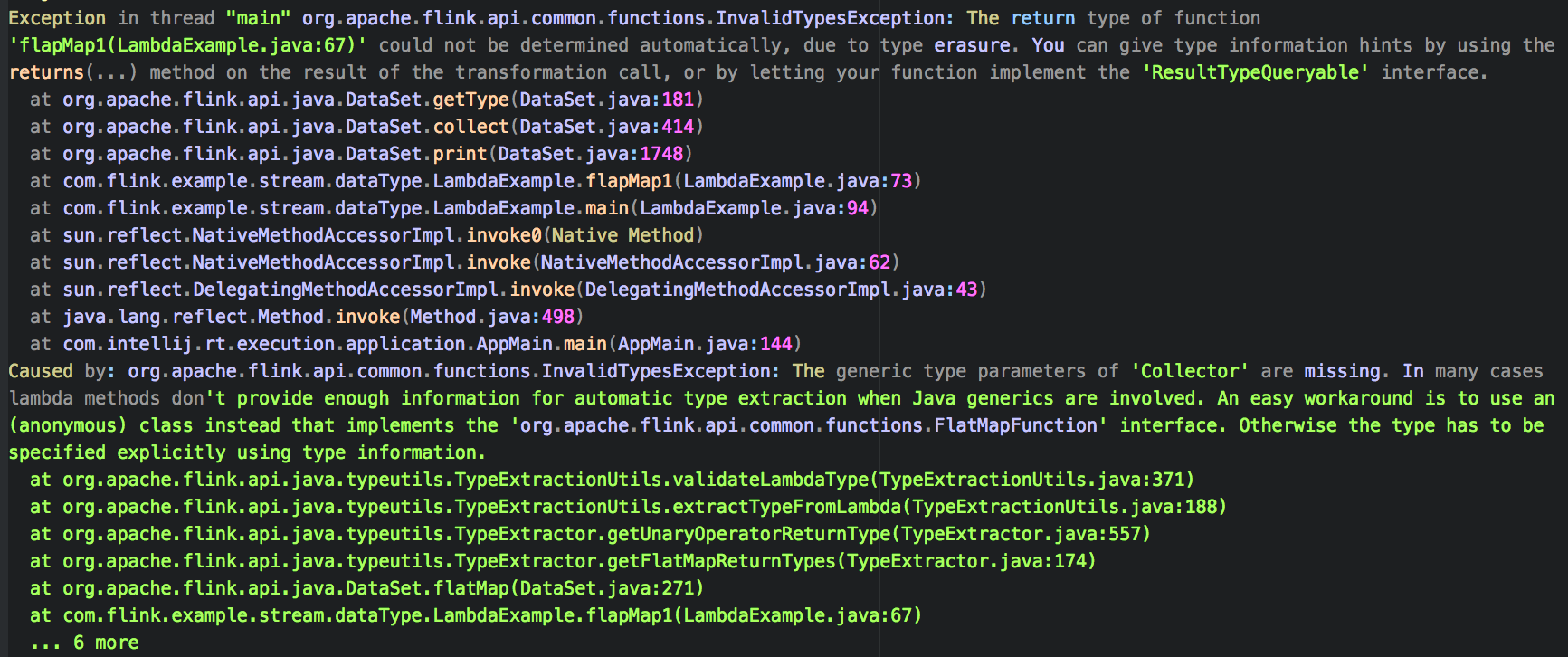

The signature of flatMap() is void flatMap(IN value, Collector<OUT> out), which contains the generic type Collector<OUT>. The type argument is erased during compilation, leaving void flatMap(IN value, Collector out), so Flink cannot automatically infer the output type. Using a Lambda expression in flatMap() therefore throws an exception similar to the following:

The error message is essentially the same as the one for MapFunction: using generics in a Lambda leads to type erasure. The solutions are similar:

- Use an (anonymous) class that implements the 'org.apache.flink.api.common.functions.FlatMapFunction' interface instead of a Lambda expression.

- Otherwise, specify the type explicitly using type information.

Let's look at each solution in detail.

2.1 Anonymous inner class

Use an anonymous inner class that implements the 'org.apache.flink.api.common.functions.FlatMapFunction' interface instead of a Lambda expression:

    env.fromElements("1,2,3", "4,5")
        .flatMap(new FlatMapFunction<String, String>() {
            @Override
            public void flatMap(String input, Collector<String> out) throws Exception {
                String[] params = input.split(",");
                for (String value : params) {
                    out.collect(value);
                }
            }
        })
        .print();

2.2 User-defined class

Use a custom class that implements the 'org.apache.flink.api.common.functions.FlatMapFunction' interface instead of a Lambda expression:

    public static class MyFlatMapFunction implements FlatMapFunction<String, String> {
        @Override
        public void flatMap(String input, Collector<String> out) throws Exception {
            String[] params = input.split(",");
            for (String value : params) {
                out.collect(value);
            }
        }
    }

    env.fromElements("1,2,3", "4,5")
        .flatMap(new MyFlatMapFunction())
        .print();
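Why a named class gives a type extractor enough to work with can be sketched without Flink. In the example below, `SimpleFlatMap` is a hypothetical simplification of FlatMapFunction in which a `List` plays the role of the Collector, and `outputTypeOf` is an invented helper, not Flink's actual extraction logic. It reads the output type argument from the class metadata when it is present.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;
import java.util.ArrayList;
import java.util.List;

public class FlatMapTypeDemo {

    // Hypothetical simplification of FlatMapFunction; a List stands in for Collector.
    public interface SimpleFlatMap<I, O> {
        void flatMap(I value, List<O> out);
    }

    // Named class: its class file records SimpleFlatMap<String, String>.
    public static class SplitByComma implements SimpleFlatMap<String, String> {
        @Override
        public void flatMap(String input, List<String> out) {
            for (String value : input.split(",")) {
                out.add(value);
            }
        }
    }

    // Returns the output type argument if the class records it, otherwise null.
    public static Type outputTypeOf(SimpleFlatMap<?, ?> fn) {
        for (Type t : fn.getClass().getGenericInterfaces()) {
            if (t instanceof ParameterizedType) {
                ParameterizedType p = (ParameterizedType) t;
                if (p.getRawType() == SimpleFlatMap.class) {
                    return p.getActualTypeArguments()[1];
                }
            }
        }
        return null;
    }

    public static void main(String[] args) {
        SimpleFlatMap<String, String> named = new SplitByComma();
        SimpleFlatMap<String, String> lambda = (input, out) -> {
            for (String value : input.split(",")) {
                out.add(value);
            }
        };

        List<String> result = new ArrayList<>();
        named.flatMap("1,2,3", result);
        System.out.println(result);               // prints "[1, 2, 3]"
        System.out.println(outputTypeOf(named));  // prints "class java.lang.String"
        System.out.println(outputTypeOf(lambda)); // prints "null"
    }
}
```

For the Lambda the helper finds nothing, which is the situation in which the type has to be supplied explicitly.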

2.3 Using returns()

Declare the type information explicitly with the returns() method:

    env.fromElements("1,2,3", "4,5")
        .flatMap((String input, Collector<String> out) -> {
            String[] params = input.split(",");
            for (String value : params) {
                out.collect(value);
            }
        })
        .returns(String.class)
        .print();
