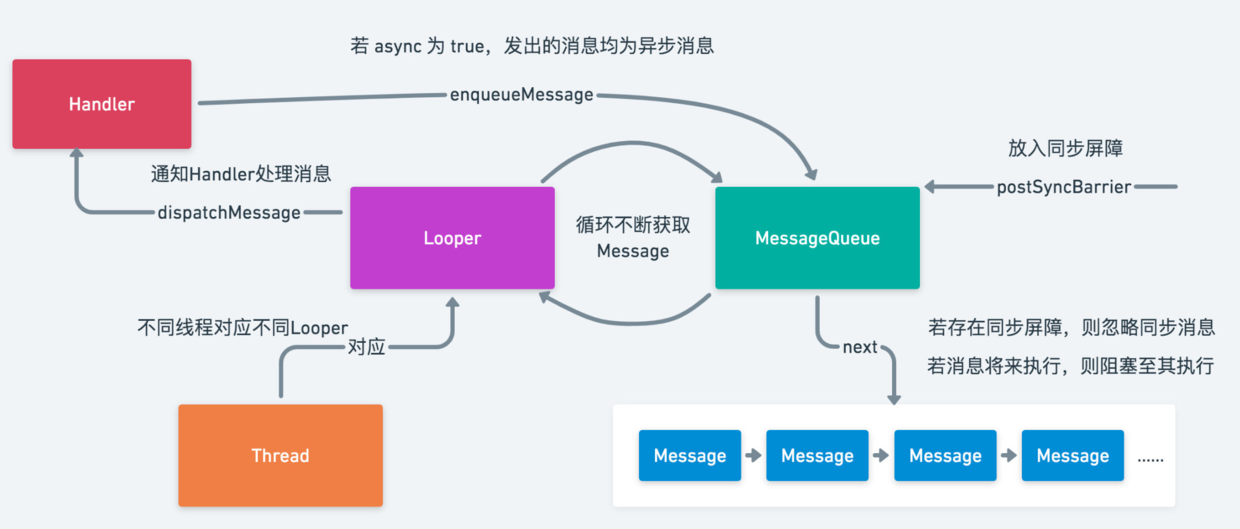

As you should all know, Android's messaging mechanism is based on Handler. I remember reading several blogs a year ago and thinking I understood Handler, Looper and MessageQueue. However, as I gradually worked through the source code, I found there is more to Handler than that, such as the synchronization barrier and the blocking wake-up mechanism in Handler's native layer, which I had not understood before. So I wrote this article to rebuild my understanding of Handler from scratch.

If you think the article is too long, you can find me and take the complete PDF to do your own research

Reference resources:

(More complete project downloads.Not finished yet.Source code.Graphic knowledge is then uploaded to github.)

Clickable About me Contact me for a complete PDF

(VX: mm14525201314)

Handler uses a producer-consumer model: the Handler produces the tasks that need to be performed, and the Looper is the consumer that continuously takes these messages out of the MessageQueue and consumes them. Let's take a look at its implementation.

send message

post & sendMessage

As we all know, there are two main ways to get a Handler to execute work on the thread it belongs to: post and sendMessage, and both have corresponding delayed variants. Both post and sendMessage end up calling the sendMessageDelayed method. For example, here is the implementation of post:

```java
public final boolean post(Runnable r) {
    return sendMessageDelayed(getPostMessage(r), 0);
}
```

You can see that it calls sendMessageDelayed, but first wraps the Runnable in a Message object using the getPostMessage method:

```java
private static Message getPostMessage(Runnable r) {
    Message m = Message.obtain();
    m.callback = r;
    return m;
}
```

The wrapped Message has its callback set to the Runnable.

The sendMessage and post variants end up in sendMessageAtTime, most of them by way of sendMessageDelayed, which converts the relative delay into an absolute uptime:

```java
public final boolean sendMessageDelayed(Message msg, long delayMillis) {
    if (delayMillis < 0) {
        delayMillis = 0; // negative delays are treated as "now"
    }
    return sendMessageAtTime(msg, SystemClock.uptimeMillis() + delayMillis);
}
```

sendMessageAtTime first reads the MessageQueue from mQueue, then calls enqueueMessage to hand the Message over to that MessageQueue:

```java
public boolean sendMessageAtTime(Message msg, long uptimeMillis) {
    MessageQueue queue = mQueue;
    if (queue == null) {
        RuntimeException e = new RuntimeException(
                this + " sendMessageAtTime() called with no mQueue");
        Log.w("Looper", e.getMessage(), e);
        return false;
    }
    return enqueueMessage(queue, msg, uptimeMillis);
}
```

Handler's enqueueMessage then calls MessageQueue.enqueueMessage, which actually puts the Message into the MessageQueue. Before that, it sets the Message's target to the current Handler, and, importantly, if mAsynchronous is true it marks the Message as asynchronous with setAsynchronous(true).

```java
private boolean enqueueMessage(MessageQueue queue, Message msg, long uptimeMillis) {
    msg.target = this;
    if (mAsynchronous) {
        msg.setAsynchronous(true);
    }
    return queue.enqueueMessage(msg, uptimeMillis);
}
```

So when is mAsynchronous assigned? Looking further, we find that it is assigned in Handler's constructors: if you pass async = true when creating a Handler, every Message sent by that Handler is marked as asynchronous, i.e. it is an "asynchronous Message". The constructors that take this flag are:

- public Handler(Callback callback, boolean async)

- public Handler(Looper looper, Callback callback, boolean async)

We will discuss what asynchronous and synchronous messages are later.

Handler has a number of constructors, but the others all end up calling one of these two, with async set to false by default.

Let's look at these two constructors:

```java
public Handler(Callback callback, boolean async) {
    if (FIND_POTENTIAL_LEAKS) {
        final Class<? extends Handler> klass = getClass();
        if ((klass.isAnonymousClass() || klass.isMemberClass() || klass.isLocalClass()) &&
                (klass.getModifiers() & Modifier.STATIC) == 0) {
            Log.w(TAG, "The following Handler class should be static or leaks might occur: " +
                    klass.getCanonicalName());
        }
    }

    mLooper = Looper.myLooper();
    if (mLooper == null) {
        throw new RuntimeException(
                "Can't create handler inside thread that has not called Looper.prepare()");
    }
    mQueue = mLooper.mQueue;
    mCallback = callback;
    mAsynchronous = async;
}
```

This constructor mainly assigns mLooper, mQueue, mCallback and mAsynchronous. mLooper is obtained through the Looper.myLooper method; the other constructor is implemented the same way, except that the Looper is passed in from outside. We can also see that mQueue, the MessageQueue, is a member variable of the Looper object.
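To pull the send path together, here is a minimal plain-Java model of what post, sendMessageDelayed, sendMessageAtTime and Handler.enqueueMessage do to a message. It is a sketch, not Android code: `Msg` and `SendPathSketch` are hypothetical names, `now` is passed in instead of reading SystemClock.uptimeMillis() so it runs on a plain JVM, and `when` is stamped here although the real code sets it inside MessageQueue.enqueueMessage.

```java
// Hypothetical stand-in for android.os.Message, reduced to the fields the send path touches.
final class Msg {
    Runnable callback;    // set by post(): the Runnable to run
    Object target;        // set by enqueueMessage(): the sending handler
    boolean asynchronous; // set when the handler was created with async = true
    long when;            // absolute uptime at which the message becomes due
}

// Hypothetical model of a Handler's send path; not the real android.os.Handler.
final class SendPathSketch {
    private final boolean asyncHandler; // mirrors Handler.mAsynchronous

    SendPathSketch(boolean async) {
        this.asyncHandler = async;
    }

    // Like post(r): wrap the Runnable in a message and send it with zero delay.
    Msg post(Runnable r, long now) {
        Msg m = new Msg();
        m.callback = r;
        return sendDelayed(m, 0, now);
    }

    // Like sendMessageDelayed(): clamp negative delays, convert to an absolute time.
    Msg sendDelayed(Msg m, long delayMillis, long now) {
        if (delayMillis < 0) {
            delayMillis = 0;
        }
        return sendAtTime(m, now + delayMillis);
    }

    // Like sendMessageAtTime() + Handler.enqueueMessage(): stamp target, async flag and time.
    Msg sendAtTime(Msg m, long uptimeMillis) {
        m.target = this;
        if (asyncHandler) {
            m.asynchronous = true;
        }
        m.when = uptimeMillis; // the real code stores this in MessageQueue.enqueueMessage
        return m;              // a real Handler would now call queue.enqueueMessage(m, uptimeMillis)
    }
}
```

For example, with async = true a message sent with a 200 ms delay at uptime 1000 comes out with when = 1200 and the asynchronous flag set, while a negative delay is treated as 0.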

Message Entry

enqueueMessage

Next, let's look at the enqueueMessage method of MessageQueue after Handler sends a message:

```java
boolean enqueueMessage(Message msg, long when) {
    if (msg.target == null) {
        throw new IllegalArgumentException("Message must have a target.");
    }
    if (msg.isInUse()) {
        throw new IllegalStateException(msg + " This message is already in use.");
    }

    synchronized (this) {
        if (mQuitting) {
            IllegalStateException e = new IllegalStateException(
                    msg.target + " sending message to a Handler on a dead thread");
            Log.w(TAG, e.getMessage(), e);
            msg.recycle();
            return false;
        }

        msg.markInUse();
        msg.when = when;
        Message p = mMessages;
        boolean needWake;
        if (p == null || when == 0 || when < p.when) {
            // New head, wake up the event queue if blocked.
            msg.next = p;
            mMessages = msg;
            needWake = mBlocked;
        } else {
            // Inserted within the middle of the queue. Usually we don't have to wake
            // up the event queue unless there is a barrier at the head of the queue
            // and the message is the earliest asynchronous message in the queue.
            needWake = mBlocked && p.target == null && msg.isAsynchronous();
            Message prev;
            for (;;) {
                prev = p;
                p = p.next;
                if (p == null || when < p.when) {
                    break;
                }
                if (needWake && p.isAsynchronous()) {
                    needWake = false;
                }
            }
            msg.next = p; // invariant: p == prev.next
            prev.next = msg;
        }

        // We can assume mPtr != 0 because mQuitting is false.
        if (needWake) {
            nativeWake(mPtr);
        }
    }
    return true;
}
```

MessageQueue maintains a singly linked list of messages, and every insertion keeps that list sorted by `when` (messages with equal `when` keep their insertion order, because the scan only stops at a strictly later message). Since the list is already in chronological order, the loop only has to take messages from the front one by one to consume them in time order.

Finally, if needWake is true, nativeWake is called to wake up the blocked thread. The code behind this wake-up mechanism is discussed later; we will set it aside for now.
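The insertion rule can be modelled in a few lines of plain Java. This is a simplified sketch under stated assumptions: `Node` and `QueueSketch` are hypothetical, `blocked` stands in for mBlocked, and enqueue returns whether nativeWake would be called instead of calling it. Unlike the real enqueueMessage it does not reject a null target, so a barrier can be placed at the head for testing.

```java
// Hypothetical, simplified message node; a null target marks a sync barrier as in MessageQueue.
final class Node {
    final String name;
    final long when;
    final Object target;
    final boolean async;
    Node next;

    Node(String name, long when, Object target, boolean async) {
        this.name = name;
        this.when = when;
        this.target = target;
        this.async = async;
    }
}

// Hypothetical model of MessageQueue.enqueueMessage's list insertion and wake decision.
final class QueueSketch {
    Node head;
    boolean blocked; // mirrors mBlocked: is next() currently waiting?

    // Returns true when the real code would call nativeWake().
    boolean enqueue(Node msg) {
        Node p = head;
        boolean needWake;
        if (p == null || msg.when == 0 || msg.when < p.when) {
            // New head: the waiting thread may now have an earlier deadline.
            msg.next = p;
            head = msg;
            needWake = blocked;
        } else {
            // Only wake if a barrier heads the queue and msg is the earliest async message.
            needWake = blocked && p.target == null && msg.async;
            Node prev;
            for (;;) {
                prev = p;
                p = p.next;
                if (p == null || msg.when < p.when) {
                    break; // equal times keep FIFO order
                }
                if (needWake && p.async) {
                    needWake = false; // an earlier async message will wake the loop anyway
                }
            }
            msg.next = p;
            prev.next = msg;
        }
        return needWake;
    }

    // Names of the queued messages from head to tail.
    String order() {
        StringBuilder sb = new StringBuilder();
        for (Node n = head; n != null; n = n.next) {
            sb.append(n.name);
        }
        return sb.toString();
    }
}
```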

Message Cycle

So how does the Looper.myLooper method obtain the Looper object?

```java
public static @Nullable Looper myLooper() {
    return sThreadLocal.get();
}
```

The Looper is obtained with sThreadLocal.get(), which means it is a thread-local variable: each thread has its own Looper.

So when was this Looper object created and put into this ThreadLocal?

We can see by tracing that it is actually put into ThreadLocal by the Looper.prepare method:

```java
private static void prepare(boolean quitAllowed) {
    if (sThreadLocal.get() != null) {
        throw new RuntimeException("Only one Looper may be created per thread");
    }
    sThreadLocal.set(new Looper(quitAllowed));
}
```

That raises a question: we never call Looper.prepare in our own application code, so why can we send messages to the main thread through a Handler created on the main thread?

In fact, the main thread's Looper has already been prepared when the main thread starts. We can see this in the main method of the ActivityThread class:

```java
public static void main(String[] args) {
    // ...
    Looper.prepareMainLooper();
    // ...
    Looper.loop();
    // ...
}
```

This main method is effectively the entry point of our app process. It first calls Looper.prepareMainLooper to create the main thread's Looper and store it in the ThreadLocal, after which messages can be sent to the main thread. Why is it designed this way? Because events such as View drawing are all scheduled through the main thread's Handler.
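The per-thread behaviour of prepare and myLooper comes straight from ThreadLocal. Below is a hypothetical miniature (`MiniLooper` is not an Android class) that keeps only that part: one instance per thread, a second prepare on the same thread fails, and another thread sees null until it prepares its own.

```java
// Hypothetical miniature of Looper's per-thread storage; not android.os.Looper.
final class MiniLooper {
    private static final ThreadLocal<MiniLooper> sThreadLocal = new ThreadLocal<>();

    final Thread thread = Thread.currentThread(); // the thread that called prepare()

    private MiniLooper() {}

    // Like Looper.prepare(): at most one looper per thread.
    static void prepare() {
        if (sThreadLocal.get() != null) {
            throw new RuntimeException("Only one Looper may be created per thread");
        }
        sThreadLocal.set(new MiniLooper());
    }

    // Like Looper.myLooper(): null on threads that never called prepare().
    static MiniLooper myLooper() {
        return sThreadLocal.get();
    }
}
```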

Next, let's look at the Looper.loop method:

```java
public static void loop() {
    final Looper me = myLooper();
    if (me == null) {
        throw new RuntimeException("No Looper; Looper.prepare() wasn't called on this thread.");
    }
    final MessageQueue queue = me.mQueue;

    // Make sure the identity of this thread is that of the local process,
    // and keep track of what that identity token actually is.
    Binder.clearCallingIdentity();
    final long ident = Binder.clearCallingIdentity();

    for (;;) {
        Message msg = queue.next(); // might block
        if (msg == null) {
            // No message indicates that the message queue is quitting.
            return;
        }
        // ...
        final long start = (slowDispatchThresholdMs == 0) ? 0 : SystemClock.uptimeMillis();
        final long end;
        try {
            msg.target.dispatchMessage(msg);
            end = (slowDispatchThresholdMs == 0) ? 0 : SystemClock.uptimeMillis();
        } finally {
            // ...
        }
        // ...
        msg.recycleUnchecked();
    }
}
```

This is an infinite loop. It gets the current thread's Looper and its MessageQueue, then repeatedly takes the next Message with MessageQueue.next, calls dispatchMessage on the Message's target to consume it, and finally recycles the Message. The target is the Handler that originally sent the Message, so every message is handled by the Handler that sent it. The loop only ends when next returns null, which happens when the queue is quitting.
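Stripped of tracing and logging, loop() is a take-and-dispatch cycle. A minimal sketch, assuming a java.util.concurrent.BlockingQueue in place of MessageQueue (take() blocks the way next() may) and a QUIT sentinel in place of next() returning null; `LoopSketch`, its `Handler` interface and its `Message` class are hypothetical.

```java
import java.util.concurrent.BlockingQueue;

// Hypothetical sketch of the shape of Looper.loop(); not the Android implementation.
final class LoopSketch {
    // Stand-in for Handler: whoever sent the message also handles it.
    interface Handler {
        void dispatchMessage(Message msg);
    }

    // Stand-in for Message: its target handler plus a payload.
    static final class Message {
        final Handler target;
        final String what;

        Message(Handler target, String what) {
            this.target = target;
            this.what = what;
        }
    }

    // Sentinel playing the role of next() returning null when the queue quits.
    static final Message QUIT = new Message(null, "quit");

    static void loop(BlockingQueue<Message> queue) throws InterruptedException {
        for (;;) {
            Message msg = queue.take();    // might block, like queue.next()
            if (msg == QUIT) {
                return;                    // the real loop() returns when next() yields null
            }
            msg.target.dispatchMessage(msg); // hand the message back to its sender
        }
    }
}
```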

Message traversal

Now let's look at message traversal. The loop keeps calling MessageQueue.next to get the next message and consume it; here is next in detail:

```java
Message next() {
    final long ptr = mPtr;
    if (ptr == 0) {
        return null;
    }

    int pendingIdleHandlerCount = -1;
    int nextPollTimeoutMillis = 0;
    for (;;) {
        if (nextPollTimeoutMillis != 0) {
            Binder.flushPendingCommands();
        }

        // 1
        nativePollOnce(ptr, nextPollTimeoutMillis);

        synchronized (this) {
            final long now = SystemClock.uptimeMillis();
            Message prevMsg = null;
            Message msg = mMessages;
            // 2
            if (msg != null && msg.target == null) {
                do {
                    prevMsg = msg;
                    msg = msg.next;
                } while (msg != null && !msg.isAsynchronous());
            }
            if (msg != null) {
                // 3
                if (now < msg.when) {
                    nextPollTimeoutMillis = (int) Math.min(msg.when - now, Integer.MAX_VALUE);
                } else {
                    // Got a message.
                    mBlocked = false;
                    if (prevMsg != null) {
                        prevMsg.next = msg.next;
                    } else {
                        mMessages = msg.next;
                    }
                    msg.next = null;
                    if (DEBUG) Log.v(TAG, "Returning message: " + msg);
                    msg.markInUse();
                    return msg;
                }
            } else {
                // No more messages.
                nextPollTimeoutMillis = -1;
            }

            if (mQuitting) {
                dispose();
                return null;
            }

            // 4
            if (pendingIdleHandlerCount < 0
                    && (mMessages == null || now < mMessages.when)) {
                pendingIdleHandlerCount = mIdleHandlers.size();
            }
            if (pendingIdleHandlerCount <= 0) {
                // No idle handlers to run. Loop and wait some more.
                mBlocked = true;
                continue;
            }

            if (mPendingIdleHandlers == null) {
                mPendingIdleHandlers = new IdleHandler[Math.max(pendingIdleHandlerCount, 4)];
            }
            mPendingIdleHandlers = mIdleHandlers.toArray(mPendingIdleHandlers);
        }

        for (int i = 0; i < pendingIdleHandlerCount; i++) {
            final IdleHandler idler = mPendingIdleHandlers[i];
            mPendingIdleHandlers[i] = null; // release the reference to the handler

            boolean keep = false;
            try {
                keep = idler.queueIdle();
            } catch (Throwable t) {
                Log.wtf(TAG, "IdleHandler threw exception", t);
            }

            if (!keep) {
                synchronized (this) {
                    mIdleHandlers.remove(idler);
                }
            }
        }

        pendingIdleHandlerCount = 0;
        nextPollTimeoutMillis = 0;
    }
}
```

The code above is long, so let's analyze it step by step.

First, next loops continuously. At comment 1 it calls nativePollOnce, which belongs to Handler's blocking wake-up mechanism; we will discuss it later.

Then, at comment 2, there is some special handling: if the current Message has a null target, it keeps walking down the list until it finds an asynchronous Message. Some readers may find a null target strange, since enqueueMessage explicitly rejects it. Such messages belong to Handler's synchronization barrier mechanism, which we will also discuss later.

At comment 3 it checks whether the message is due. If it is, the message is unlinked from the list and returned; otherwise nextPollTimeoutMillis is set to the remaining time (capped at Integer.MAX_VALUE to avoid int overflow).

At comment 4, and only on the first pass through the loop (pendingIdleHandlerCount < 0), if the MessageQueue is empty or its head message is not due yet, it runs the registered IdleHandlers; an IdleHandler whose queueIdle returns false is removed. Afterwards pendingIdleHandlerCount is set to 0 so that the IdleHandlers do not run again during this call to next, and nextPollTimeoutMillis is reset to 0 because an IdleHandler may have posted a new message in the meantime.

If the message found this time is not due yet, the next iteration calls nativePollOnce again, this time with a non-zero nextPollTimeoutMillis; this is part of the blocking wake-up mechanism covered later.
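The decision made in one pass of next() (comments 2 and 3) can be modelled without any native code. In this hypothetical sketch, `poll(now)` either unlinks and returns the due message, or returns null and records the timeout the real loop would pass to nativePollOnce: -1 when nothing is runnable, otherwise the remaining time capped at Integer.MAX_VALUE. IdleHandlers and quitting are left out.

```java
// Hypothetical simplified node: a null target marks a sync barrier.
final class QMsg {
    final String name;
    final long when;
    final Object target;
    final boolean async;
    QMsg next;

    QMsg(String name, long when, Object target, boolean async) {
        this.name = name;
        this.when = when;
        this.target = target;
        this.async = async;
    }
}

// Hypothetical model of one pass of MessageQueue.next(); it only decides, it never blocks.
final class NextSketch {
    QMsg head;
    int nextPollTimeoutMillis; // what the real loop would pass to nativePollOnce next

    // Returns the due message (unlinked from the list), or null with nextPollTimeoutMillis set.
    QMsg poll(long now) {
        QMsg prev = null;
        QMsg msg = head;
        if (msg != null && msg.target == null) {
            // Barrier at the head: skip ahead to the first asynchronous message.
            do {
                prev = msg;
                msg = msg.next;
            } while (msg != null && !msg.async);
        }
        if (msg == null) {
            nextPollTimeoutMillis = -1; // nothing runnable: block until woken
            return null;
        }
        if (now < msg.when) {
            // Not due yet: sleep for the remaining time, capped to avoid int overflow.
            nextPollTimeoutMillis = (int) Math.min(msg.when - now, Integer.MAX_VALUE);
            return null;
        }
        if (prev != null) {
            prev.next = msg.next;
        } else {
            head = msg.next;
        }
        msg.next = null;
        nextPollTimeoutMillis = 0; // model only: the real next() restarts from 0 on each call
        return msg;
    }
}
```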

Processing of messages

Message processing is achieved through dispatchMessage of Handler:

```java
public void dispatchMessage(Message msg) {
    if (msg.callback != null) {
        handleCallback(msg);
    } else {
        if (mCallback != null) {
            if (mCallback.handleMessage(msg)) {
                return;
            }
        }
        handleMessage(msg);
    }
}
```

It first runs the Message's own callback (the Runnable passed to post). If there is none, it calls handleMessage on the Handler's Callback (mCallback), and only if there is no Callback or it returns false does it call the Handler's own handleMessage method.

So, depending on our needs, we can supply any one of these three: a Runnable via post, a Callback passed to the constructor, or an override of handleMessage.
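The three-level fallback is easy to check with a plain-Java model. `DispatchSketch` is hypothetical: a String stands in for Message and a nullable Runnable stands in for Message.callback.

```java
// Hypothetical model of Handler.dispatchMessage's three-level fallback; not the Android class.
class DispatchSketch {
    interface Callback {
        boolean handleMessage(String msg); // true = handled, stop here
    }

    private final Callback mCallback;
    final StringBuilder log = new StringBuilder();

    DispatchSketch(Callback callback) {
        this.mCallback = callback;
    }

    // msgCallback plays the role of Message.callback (the Runnable passed to post()).
    void dispatchMessage(String msg, Runnable msgCallback) {
        if (msgCallback != null) {
            msgCallback.run();      // 1. a posted Runnable wins outright
            return;
        }
        if (mCallback != null && mCallback.handleMessage(msg)) {
            return;                 // 2. the Handler.Callback consumed it
        }
        handleMessage(msg);         // 3. fall back to the (overridable) handleMessage
    }

    void handleMessage(String msg) {
        log.append("handleMessage:").append(msg);
    }
}
```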

Synchronization barrier mechanism

Handler has a mechanism called the synchronization barrier that lets asynchronous messages run before synchronous ones. Let's see how it works.

Join Synchronization Barrier

The Handler mechanism also has a special kind of message that has a null target and is never consumed; it only sits in the MessageQueue as a marker. This special message is the sync barrier, and MessageQueue's postSyncBarrier method inserts it into the queue:

```java
private int postSyncBarrier(long when) {
    // Enqueue a new sync barrier token.
    // We don't need to wake the queue because the purpose of a barrier is to stall it.
    synchronized (this) {
        final int token = mNextBarrierToken++;
        final Message msg = Message.obtain();
        msg.markInUse();
        msg.when = when;
        msg.arg1 = token;

        Message prev = null;
        Message p = mMessages;
        if (when != 0) {
            while (p != null && p.when <= when) {
                prev = p;
                p = p.next;
            }
        }
        if (prev != null) { // invariant: p == prev.next
            msg.next = p;
            prev.next = msg;
        } else {
            msg.next = p;
            mMessages = msg;
        }
        return token;
    }
}
```

As you can see, nothing special happens here except that a message with a null target is inserted into the queue in time order, and a token identifying the barrier is returned. As we saw in enqueueMessage, a normal enqueue operation can never put a message with a null target into the queue, so a synchronization barrier can only be inserted this way.
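A plain-Java model of the insertion shows two details of the code above: a barrier is placed after messages whose `when` is equal or earlier, and `when == 0` puts it at the very front. `BarrierPostSketch` and its node class `M` are hypothetical; `arg1` carries the token as in the real code.

```java
// Hypothetical model of MessageQueue.postSyncBarrier(long when); not the Android class.
final class BarrierPostSketch {
    static final class M {
        final String name;
        final long when;
        final Object target; // null marks a barrier
        final int arg1;      // barrier token, as in the real code
        M next;

        M(String name, long when, Object target, int arg1) {
            this.name = name;
            this.when = when;
            this.target = target;
            this.arg1 = arg1;
        }
    }

    M head;
    private int nextBarrierToken; // like mNextBarrierToken, starts at 0

    int postSyncBarrier(long when) {
        final int token = nextBarrierToken++;
        M barrier = new M("#" + token, when, null, token);
        M prev = null;
        M p = head;
        if (when != 0) {
            while (p != null && p.when <= when) { // go past everything due at or before `when`
                prev = p;
                p = p.next;
            }
        }
        barrier.next = p;
        if (prev != null) {
            prev.next = barrier;
        } else {
            head = barrier;
        }
        // No wake-up: a barrier can only stall the queue, never make work due sooner.
        return token;
    }

    String order() {
        StringBuilder sb = new StringBuilder();
        for (M n = head; n != null; n = n.next) {
            sb.append(n.name);
        }
        return sb.toString();
    }
}
```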

Remove Synchronization Barrier

We can remove a synchronization barrier with the removeSyncBarrier method, passing the token that was returned when it was posted:

```java
public void removeSyncBarrier(int token) {
    // Remove a sync barrier token from the queue.
    // If the queue is no longer stalled by a barrier then wake it.
    synchronized (this) {
        Message prev = null;
        Message p = mMessages;
        // Find the message with a null target and the same token
        while (p != null && (p.target != null || p.arg1 != token)) {
            prev = p;
            p = p.next;
        }
        if (p == null) {
            throw new IllegalStateException("The specified message queue synchronization "
                    + " barrier token has not been posted or has already been removed.");
        }
        final boolean needWake;
        if (prev != null) {
            prev.next = p.next;
            needWake = false;
        } else {
            mMessages = p.next;
            needWake = mMessages == null || mMessages.target != null;
        }
        p.recycleUnchecked();

        // If the loop is quitting then it is already awake.
        // We can assume mPtr != 0 when mQuitting is false.
        if (needWake && !mQuitting) {
            nativeWake(mPtr);
        }
    }
}
```

This removes the synchronization barrier identified by the token from the MessageQueue, which typically happens once the asynchronous messages it was protecting have been executed.

Finally, if the removed barrier was at the head of the queue and the new head is not another barrier, nativeWake is called to wake up the thread.
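Removal can be modelled the same way. In this hypothetical sketch (`BarrierRemoveSketch` is not an Android class) the method returns whether nativeWake would be called rather than calling it, and the mQuitting check is omitted.

```java
// Hypothetical model of MessageQueue.removeSyncBarrier(int token); not the Android class.
final class BarrierRemoveSketch {
    static final class M {
        final String name;
        final Object target; // null marks a barrier
        final int arg1;      // barrier token
        M next;

        M(String name, Object target, int arg1) {
            this.name = name;
            this.target = target;
            this.arg1 = arg1;
        }
    }

    M head;

    // Returns true when the real code would call nativeWake().
    boolean removeSyncBarrier(int token) {
        M prev = null;
        M p = head;
        // Find the barrier (null target) carrying this token.
        while (p != null && (p.target != null || p.arg1 != token)) {
            prev = p;
            p = p.next;
        }
        if (p == null) {
            throw new IllegalStateException("barrier token has not been posted or was already removed");
        }
        if (prev != null) {
            prev.next = p.next;
            return false; // not at the head, so it was not stalling the loop yet
        }
        head = p.next;
        // Wake unless another barrier now heads the queue.
        return head == null || head.target != null;
    }
}
```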

Role of synchronous barriers

Looking back at MessageQueue::next, we know that when a synchronization barrier is at the head of the MessageQueue, next skips the synchronous messages behind it until it finds an asynchronous message. This lets asynchronous messages run first while a barrier is in the queue, so that certain messages take priority. For example, the messages that drive View drawing (such as TraversalRunnable) are asynchronous, and a synchronization barrier is posted before they are queued. As a result, UI drawing messages run ahead of other messages and are not delayed by a backlog in the MessageQueue. When drawing is complete, the barrier is removed.
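The effect on ordering can be seen with a small model in which every message is already due. `BarrierOrderSketch` is hypothetical and uses an ArrayList in place of the linked list; its next() follows the same rule as MessageQueue.next: behind a barrier at the head, only asynchronous messages are eligible.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of which messages a barrier lets through; all messages are assumed due.
final class BarrierOrderSketch {
    static final class M {
        final String name;
        final boolean barrier;
        final boolean async;

        M(String name, boolean barrier, boolean async) {
            this.name = name;
            this.barrier = barrier;
            this.async = async;
        }
    }

    final List<M> queue = new ArrayList<>(); // kept in time order

    // Like the selection step of next(): behind a head barrier, only async messages qualify.
    M next() {
        boolean headIsBarrier = !queue.isEmpty() && queue.get(0).barrier;
        for (int i = 0; i < queue.size(); i++) {
            M m = queue.get(i);
            if (m.barrier) {
                continue; // barriers are markers, never returned
            }
            if (!headIsBarrier || m.async) {
                return queue.remove(i);
            }
        }
        return null; // the real next() would block here
    }

    // Takes messages until next() would block, returning their names in order.
    List<String> drain() {
        List<String> out = new ArrayList<>();
        for (M m = next(); m != null; m = next()) {
            out.add(m.name);
        }
        return out;
    }
}
```

With a barrier at the head, only the asynchronous "frame" message gets through; the synchronous messages run only after the barrier is removed.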

Blocking wake-up mechanism

As the previous sections hint, Handler has a blocking wake-up mechanism. Looping continuously without pause (busy-waiting) wastes a lot of CPU: often the messages in the MessageQueue are not due yet, and if the Looper kept spinning during that time it would simply waste resources. Handler therefore blocks the Looper's loop when there is nothing to execute yet, until the next task is due or until it is explicitly woken in certain special cases, which avoids that waste.
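Before looking at the native code, here is the same idea expressed with Java's own monitor methods. This is an analogy only (Handler does not use Object.wait): the thread sleeps until a deadline passes or another thread signals it, and uses no CPU while waiting. `WakeableWait` is a hypothetical name; its timeout convention (-1 waits forever, 0 does not block) mirrors epoll_wait's.

```java
// Hypothetical analogy for "block until a deadline or an explicit wake-up",
// built on Object.wait/notifyAll instead of epoll.
final class WakeableWait {
    private boolean wakePending; // like unread data sitting in the wake fd

    // Blocks up to timeoutMillis (-1 = forever). Returns true if woken, false on timeout.
    synchronized boolean await(long timeoutMillis) throws InterruptedException {
        final long deadline = System.currentTimeMillis() + timeoutMillis;
        while (!wakePending) {
            if (timeoutMillis < 0) {
                wait();                 // no deadline: sleep until wake()
            } else {
                long remaining = deadline - System.currentTimeMillis();
                if (remaining <= 0) {
                    return false;       // deadline reached without a wake-up
                }
                wait(remaining);        // sleeps without burning CPU; loop guards spurious wake-ups
            }
        }
        wakePending = false;            // consume the wake-up, like draining the fd
        return true;
    }

    synchronized void wake() {
        wakePending = true;
        notifyAll();
    }
}
```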

epoll

This blocking wake-up mechanism is based on Linux's I/O multiplexing mechanism epoll, which monitors multiple file descriptors simultaneously and notifies the program to read/write when a file descriptor is ready.

epoll has three main system calls: epoll_create, epoll_ctl and epoll_wait.

epoll_create

```c
int epoll_create(int size);
```

It creates an epoll instance and returns a file descriptor (the epoll handle) that refers to it. size was originally a hint for the number of descriptors to be monitored; since Linux 2.6.8 it is ignored, but it must still be greater than 0.

epoll_ctl

```c
int epoll_ctl(int epfd, int op, int fd, struct epoll_event *event);
```

It registers, modifies or removes interest in events by performing the operation op on fd. The parameters are:

- epfd: the epoll handle (that is, the return value of epoll_create)

- op: the operation to perform on fd:

  - EPOLL_CTL_ADD: register fd with epfd

  - EPOLL_CTL_DEL: remove fd from epfd

  - EPOLL_CTL_MOD: change the events monitored for an already registered fd

- fd: the file descriptor to monitor

- event: the events to monitor for fd

epoll_event is a structure whose events field is a bit mask of the event types of interest, and whose data field holds user data that is returned together with the event.

events can take the following values:

- EPOLLIN: the file descriptor is readable (including when the peer closes a socket normally);

- EPOLLOUT: the file descriptor is writable;

- EPOLLPRI: the file descriptor has urgent data to read (for example, out-of-band data);

- EPOLLERR: an error occurred on the file descriptor;

- EPOLLHUP: a hang-up occurred on the file descriptor;

- EPOLLET: use edge-triggered mode for the file descriptor; the default is level-triggered.

- EPOLLONESHOT: report only one event. After it fires, the file descriptor is disabled; to keep monitoring it, you must re-arm it with epoll_ctl and EPOLL_CTL_MOD.

epoll_wait

```c
int epoll_wait(int epfd, struct epoll_event *events, int maxevents, int timeout);
```

It waits for events to be reported. The parameters are:

- epfd: the epoll handle

- events: an array that the kernel fills with the ready events

- maxevents: the capacity of events, i.e. the maximum number of events returned; it must be greater than 0

- timeout: the timeout in milliseconds; -1 blocks indefinitely and 0 returns immediately

When called, it blocks waiting for I/O events on epfd. If one of the registered events occurs on a file descriptor monitored by epfd, epoll_wait wakes up and returns the number of ready events. If the timeout elapses first, it also wakes up and returns 0, so it never blocks longer than requested.
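Java exposes the same readiness-notification model through java.nio.channels.Selector, and on Linux the JDK's default Selector is typically implemented with epoll. The sketch below is an analogy under that assumption, not Handler's native code: `Selector.open()` plays epoll_create, registering a non-blocking pipe source for OP_READ plays epoll_ctl(EPOLL_CTL_ADD, ..., EPOLLIN), and `select` plays epoll_wait. One difference matters: `Selector.select(0)` blocks indefinitely, so the sketch maps epoll's timeout 0 to `selectNow()` and -1 to `select()`. `EpollAnalogy` is a hypothetical name, and the pipe stands in for an eventfd.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;

// Hypothetical Java NIO analogy for epoll_create / epoll_ctl / epoll_wait; not Looper code.
final class EpollAnalogy implements AutoCloseable {
    private final Selector selector; // ~ the epoll instance from epoll_create()
    private final Pipe pipe;         // ~ a wake fd (a pipe instead of an eventfd)

    EpollAnalogy() throws IOException {
        selector = Selector.open();
        pipe = Pipe.open();
        pipe.source().configureBlocking(false);                  // selectable channels must be non-blocking
        pipe.source().register(selector, SelectionKey.OP_READ);  // ~ epoll_ctl(ADD, fd, EPOLLIN)
    }

    // ~ epoll_wait with epoll's timeout convention; returns the number of ready channels, 0 on timeout.
    int await(long timeoutMillis) throws IOException {
        int ready;
        if (timeoutMillis < 0) {
            ready = selector.select();                // block until something is ready
        } else if (timeoutMillis == 0) {
            ready = selector.selectNow();             // poll without blocking
        } else {
            ready = selector.select(timeoutMillis);   // block up to the timeout
        }
        selector.selectedKeys().clear();              // so the next call counts readiness afresh
        return ready;
    }

    // Makes the source end readable, the role write() plays on the wake fd.
    void signal() throws IOException {
        pipe.sink().write(ByteBuffer.wrap(new byte[] {1}));
    }

    // Reads pending bytes so later waits block again.
    void drain() throws IOException {
        ByteBuffer buf = ByteBuffer.allocate(16);
        while (pipe.source().read(buf) > 0) {
            buf.clear();
        }
    }

    @Override
    public void close() throws IOException {
        selector.close();
        pipe.sink().close();
        pipe.source().close();
    }
}
```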

Handler's blocking wake-up mechanism is based on the blocking characteristics of epoll above. Let's see its implementation.

native initialization

When a MessageQueue is created in Java, its constructor calls nativeInit. This creates a NativeMessageQueue in the native layer and returns its address, which the Java side stores in MessageQueue.mPtr and uses in later native calls to find the NativeMessageQueue (a pattern similar to the one MMKV uses). Creating the NativeMessageQueue in turn creates (or reuses) the thread's native-layer Looper. Here is the native Looper's constructor:

```cpp
Looper::Looper(bool allowNonCallbacks) :
        mAllowNonCallbacks(allowNonCallbacks), mSendingMessage(false),
        mPolling(false), mEpollFd(-1), mEpollRebuildRequired(false),
        mNextRequestSeq(0), mResponseIndex(0), mNextMessageUptime(LLONG_MAX) {
    mWakeEventFd = eventfd(0, EFD_NONBLOCK); // eventfd used to deliver wake-up events
    AutoMutex _l(mLock);
    rebuildEpollLocked();
}
```

It calls the rebuildEpollLocked method to initialize epoll, so let's see how that works:

```cpp
void Looper::rebuildEpollLocked() {
    // Close the old epoll instance, if any.
    if (mEpollFd >= 0) {
        close(mEpollFd);
    }
    mEpollFd = epoll_create(EPOLL_SIZE_HINT);

    // Watch the wake eventfd for readability.
    struct epoll_event eventItem;
    memset(&eventItem, 0, sizeof(epoll_event));
    eventItem.events = EPOLLIN;
    eventItem.data.fd = mWakeEventFd;
    int result = epoll_ctl(mEpollFd, EPOLL_CTL_ADD, mWakeEventFd, &eventItem);

    // Re-register every fd requested by native callers.
    for (size_t i = 0; i < mRequests.size(); i++) {
        const Request& request = mRequests.valueAt(i);
        struct epoll_event eventItem;
        request.initEventItem(&eventItem);
        int epollResult = epoll_ctl(mEpollFd, EPOLL_CTL_ADD, request.fd, &eventItem);
    }
}
```

Here the old epoll descriptor, if any, is closed first, and epoll_create is called to create a new one. Then mWakeEventFd is registered with it for EPOLLIN, and the fd of every entry in mRequests is registered with the events that request asked for.

This means that when one of these file descriptors becomes ready, epoll_wait is woken up, so we can guess that Handler's blocking is implemented with epoll_wait.

We can also see that the Native layer has its own MessageQueue and Looper; that is, the native layer has a message mechanism of its own, which we will touch on later.

native Blocking Implementation

Now let's look at the blocking itself, which happens in the MessageQueue::next method we saw earlier. When the message to be returned is not due yet, next computes how many milliseconds remain until it is and passes that as the timeout to nativePollOnce, which blocks the thread until then.

nativePollOnce is a native method, and it eventually calls pollOnce on the native-layer Looper class:

```cpp
int Looper::pollOnce(int timeoutMillis, int* outFd, int* outEvents, void** outData) {
    int result = 0;
    for (;;) {
        while (mResponseIndex < mResponses.size()) {
            const Response& response = mResponses.itemAt(mResponseIndex++);
            int ident = response.request.ident;
            if (ident >= 0) {
                int fd = response.request.fd;
                int events = response.events;
                void* data = response.request.data;
                if (outFd != NULL) *outFd = fd;
                if (outEvents != NULL) *outEvents = events;
                if (outData != NULL) *outData = data;
                return ident;
            }
        }

        if (result != 0) {
            if (outFd != NULL) *outFd = 0;
            if (outEvents != NULL) *outEvents = 0;
            if (outData != NULL) *outData = NULL;
            return result;
        }

        result = pollInner(timeoutMillis);
    }
}
```

The first part mainly handles the Native layer's own message mechanism, which we can ignore for now. When there is nothing to return yet, it calls the pollInner method:

```cpp
int Looper::pollInner(int timeoutMillis) {
    // ...
    int result = POLL_WAKE;
    mResponses.clear();
    mResponseIndex = 0;
    mPolling = true;

    struct epoll_event eventItems[EPOLL_MAX_EVENTS];
    // 1
    int eventCount = epoll_wait(mEpollFd, eventItems, EPOLL_MAX_EVENTS, timeoutMillis);
    // ...
    return result;
}
```

At comment 1, epoll_wait is called with the timeout that was passed to nativePollOnce, i.e. the time remaining until the next message is due. This is the core of the blocking behavior: the thread stays blocked until that timeout expires or until one of the fds registered with epoll becomes ready. From this we can guess that nativeWake works by writing to the registered mWakeEventFd.

What follows in pollInner is mainly the Native layer's own message handling, which matters little for this article because its logic is essentially the same as in the Java layer.

native wake-up

The nativeWake method finally calls the wake method of Looper under Native through the wake method of NativeMessageQueue:

```cpp
void Looper::wake() {
    uint64_t inc = 1;
    // An eventfd is written with exactly 8 bytes; retry if interrupted by a signal.
    ssize_t nWrite = TEMP_FAILURE_RETRY(write(mWakeEventFd, &inc, sizeof(uint64_t)));
    if (nWrite != sizeof(uint64_t)) {
        if (errno != EAGAIN) {
            ALOGW("Could not write wake signal, errno=%d", errno);
        }
    }
}
```

It simply calls write to add 1 to the counter behind mWakeEventFd. That makes the fd readable, which wakes up the epoll_wait inside pollOnce that is monitoring it, and MessageQueue.next then continues in Java.
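The effect of Looper::wake can be reproduced across two threads with the Selector analogy (an assumption: a Pipe replaces the eventfd, and Selector stands in for epoll). One thread blocks with a long timeout, another writes a byte, and the first thread returns long before its timeout. `CrossThreadWake` is a hypothetical name.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;

// Hypothetical sketch of the nativeWake() idea using Java NIO; not Android code.
final class CrossThreadWake {
    // Blocks in select() for up to pollTimeoutMillis while another thread writes to the
    // registered pipe after wakeAfterMillis. Returns how long the poller actually blocked.
    static long blockThenWake(long pollTimeoutMillis, long wakeAfterMillis) throws Exception {
        Pipe pipe = Pipe.open();
        try (Selector selector = Selector.open();
             Pipe.SourceChannel source = pipe.source();
             Pipe.SinkChannel sink = pipe.sink()) {
            source.configureBlocking(false);
            source.register(selector, SelectionKey.OP_READ); // ~ registering mWakeEventFd

            Thread waker = new Thread(() -> {
                try {
                    Thread.sleep(wakeAfterMillis);
                    sink.write(ByteBuffer.wrap(new byte[] {1})); // ~ the write() in Looper::wake()
                } catch (InterruptedException | IOException e) {
                    throw new RuntimeException(e);
                }
            });
            long start = System.nanoTime();
            waker.start();
            selector.select(pollTimeoutMillis); // ~ epoll_wait in pollInner
            long blockedMillis = (System.nanoTime() - start) / 1_000_000;
            waker.join();
            return blockedMillis;
        }
    }
}
```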

So let's go back and see: under what circumstances does the Java layer call nativeWake?

MessageQueue calls nativeWake in the following main cases:

- When MessageQueue.quit is called to exit

- When a message is enqueued while the thread is blocked, and either it becomes the new head of the list (so it will run first), or a synchronization barrier is at the head and the new message is the earliest asynchronous message in the queue

- When a synchronization barrier is removed from the head of the queue and the new head is not another barrier (or the queue is now empty)

In short, the thread is woken whenever continuing to block may no longer be correct (for example, when an earlier task has been added, continuing to block would delay its execution).

summary

Android's messaging mechanism is made up of Handler, Looper and MessageQueue, with parts in both the Java and Native layers:

- Handler: sends and handles messages. Its async flag can be set in the constructor and defaults to false. If async is true, the Messages sent by the Handler are asynchronous and are processed first when a synchronization barrier is present.

- Looper: traverses the MessageQueue. Each thread has at most one Looper, which runs an infinite loop that takes messages from the MessageQueue and hands them to their target for processing.

- MessageQueue: stores messages in an internal linked list sorted by time. When the next Message is not due yet, next blocks in nativePollOnce instead of spinning, to avoid wasting resources. If there is a synchronization barrier, synchronous messages are skipped so that asynchronous messages are taken first, which gives asynchronous messages priority.

Related Issues

Here are also some common Handler-related questions that can be answered in conjunction with the previous ones.

Question 1

Looper is created in the main thread and its loop method is also executed in the main thread. Why doesn't such a dead loop block the main thread?

ActivityThread has an inner class H, which is a Handler with a handleMessage method. Many events in our process (such as the various lifecycle events of Activity and Service) are handled in the case branches of that method. In other words, most of what we think of as "the main thread's work" consists of messages processed by this Looper's loop method, and that processing is what calls back into our code, for example the Activity lifecycle methods.

```java
class H extends Handler {
    public void handleMessage(Message msg) {
        if (DEBUG_MESSAGES) Slog.v(TAG, ">>> handling: " + codeToString(msg.what));
        switch (msg.what) {
            case XXX:
                // ...
        }
        Object obj = msg.obj;
        if (obj instanceof SomeArgs) {
            ((SomeArgs) obj).recycle();
        }
        if (DEBUG_MESSAGES) Slog.v(TAG, "<<< done: " + codeToString(msg.what));
    }
}
```

So the loop does not "block" the main thread in any harmful sense: running this loop is the main thread's job, and every event, including our own processing such as drawing Views, is handled as a message inside it. When there is nothing to do, the thread simply sleeps in epoll_wait and uses no CPU.

What the question really asks is why such an infinite loop does not freeze the UI. There are two reasons:

- Drawing the interface is itself an event handled inside this loop

- Interface drawing is posted as asynchronous messages behind a synchronization barrier, so the main thread handles it ahead of queued synchronous messages. UI drawing therefore has the highest priority and is not held up just because there are many events in the Handler's queue.

Question 2

What is the Handler memory leak? How does it happen?

First, memory leaks in Handler are often caused by code like this:

```java
public class XXXActivity extends BaseActivity {
    // ...
    private Handler mHandler = new Handler() {
        @Override
        public void handleMessage(Message msg) {
            // Some Processing
        }
    };
    // ...
}
```

So why does this cause a memory leak?

Anonymous inner classes hold a reference to their enclosing instance, so the Handler here holds a reference to XXXActivity. Recall also that sendMessage sets the message's target to the Handler, so the message holds a reference to mHandler. Suppose we send a message delayed by two minutes through mHandler and close the screen before the two minutes are up. The Activity should now be eligible for garbage collection, but the Message is still in the MessageQueue, which belongs to the main thread's Looper and lives as long as the process. The MessageQueue references the Message, the Message references our Handler, and the Handler references its enclosing XXXActivity. XXXActivity is therefore still reachable and cannot be collected by the GC, which is a memory leak.

It is therefore better to declare the Handler as a static nested class, so that it does not hold a reference to the outer class. If you still need information from XXXActivity, keep only a WeakReference to the Activity: you can reach it while it is alive without preventing it from being collected.
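Here is the pattern in plain Java so it can run without Android. `LeakSafeSketch`, `FakeActivity`, `SafeHandler.handle` and `simulateCollected` are hypothetical stand-ins; on Android, SafeHandler would be a static nested subclass of android.os.Handler that overrides handleMessage. Because garbage collection cannot be forced deterministically, WeakReference.clear() is used to simulate the Activity being collected.

```java
import java.lang.ref.WeakReference;

// Plain-Java sketch of the "static handler + WeakReference" pattern; not Android code.
final class LeakSafeSketch {
    // Hypothetical stand-in for XXXActivity.
    static final class FakeActivity {
        final String title = "XXXActivity";
    }

    // static: unlike an anonymous inner class, it has no hidden reference to an enclosing instance.
    static final class SafeHandler {
        private final WeakReference<FakeActivity> activityRef;

        SafeHandler(FakeActivity activity) {
            this.activityRef = new WeakReference<>(activity);
        }

        // Returns a description of the work done, or null if the Activity is already gone.
        String handle(String what) {
            FakeActivity activity = activityRef.get(); // null once the Activity has been collected
            if (activity == null) {
                return null; // drop the message instead of keeping the Activity alive
            }
            return activity.title + " handled " + what;
        }

        // Test hook simulating garbage collection of the Activity.
        void simulateCollected() {
            activityRef.clear();
        }
    }
}
```

A common complement is to call removeCallbacksAndMessages(null) on the Handler in onDestroy, which removes all pending callbacks and messages so the queue no longer references the Handler at all.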
